Abstract

Mediated social touch (MST) is a popular way to communicate emotion and connect people in mobile communication. This article applies MST gestures with vibrotactile stimuli in two online communication modes, asynchronous (texting) and synchronous (video calling), to enhance social presence in mobile communication. We first designed an application that included the visual design of MST gestures, the vibrotactile stimuli design for MST gestures, and the interface design for texting and video calling. Then, we conducted a user study to explore whether MST gestures with vibrotactile stimuli could increase social presence in texting and video calling compared to MST gestures without vibrotactile stimuli. We also explored whether the communication mode significantly affected social presence when applying MST signals. The quantitative data analysis shows that adding vibrotactile stimuli to MST gestures helps to increase social presence in the aspects of co-presence, perceived behavior interdependence, perceived affective understanding, and perceived emotional interdependence. Adding vibrotactile stimuli to MST gestures causes no significant differences in attentional allocation and perceived message understanding. There is no significant difference between texting and video calling when applying MST signals in mobile communication. The qualitative data analysis shows that participants find MST gestures with vibrotactile stimuli interesting and are willing to use them in mobile communication, but the application design should be iterated based on their feedback.

1. Introduction

Mediated social touch (MST) describes one person touching another person over a distance with tactile or kinesthetic feedback (Haans & Ijsselsteijn, Citation2006). Some researchers have demonstrated that the mobile device is the most wanted non-wearable device for communicating MST signals (Rognon et al., Citation2022). Recent haptic technology in mobile devices, namely embedded vibration actuators, makes it possible to communicate MST signals through mobile devices (Gordon & Zhai, Citation2019; Rantala et al., Citation2013). For example, the Taptic Engine has been embedded in iPhones since the iPhone 7 and can simulate various vibrotactile effects for different applications (Liu et al., Citation2018). Some researchers have developed prototypes and applications for mobile devices to communicate MST signals, such as patting, slapping, kissing, tickling, poking, and stroking (Furukawa et al., Citation2012; Hemmert et al., Citation2011; Park et al., Citation2013, Citation2016; Teyssier et al., Citation2018; Zhang & Cheok, Citation2021).

MST signals are important in mobile communication since they can elicit feelings of social presence (van Erp & Toet, Citation2015). Social presence describes the degree to which a user is perceived as real (Gunawardena & Zittle, Citation1997; Hadi & Valenzuela, Citation2020; Oh et al., Citation2018) and with access to intelligence, intentions, and sensory impressions (Biocca, Citation2006). Haptic feedback is a popular and useful way to increase social presence and convey more affective information in mediated social interaction (Huisman, Citation2017; Oh et al., Citation2018; van Erp & Toet, Citation2015) during phone calls, video conferencing, and text messaging (van Erp & Toet, Citation2015). For example, some researchers have applied haptic feedback for mediated social interaction in a collaborative environment (Basdogan et al., Citation2000; Chellali et al., Citation2011; Giannopoulos et al., Citation2008; Sallnäs, Citation2010; Yarosh et al., Citation2017) to increase social presence.

However, few MST studies have focused on social presence with haptic feedback on mobile devices. We apply haptic technology (Wei et al., Citation2022d), using a mobile device embedded with a linear resonance actuator (LRA), to explore the field of MST signals, online communication, and social presence.

The objective of this article is to increase social presence in mobile communication by applying MST gestures with vibrotactile stimuli. In this study, we present the MST gesture design with vibrotactile stimuli for mobile communication. The detailed design factors in this study are the visuals of MST gestures, the vibrotactile stimuli of MST gestures, and the interface of mobile communication applications. In the user study, we explore whether MST gestures with vibrotactile stimuli yield higher social presence than MST gestures without vibrotactile stimuli. We also explore whether there is a significant difference in social presence between synchronous (video calling) and asynchronous (texting) communication when applying MST signals.

2. Related work

2.1. MST gestures with haptic stimuli on mobile devices

Many researchers have developed prototypes for communicating MST gestures with haptic stimuli on mobile devices. Zhang and Cheok (Citation2021) designed a haptic device, Kissenger, to display kissing during online communication on mobile devices so that users feel a deeper emotional connection. The haptic device could capture real-time sensor data and transmit it to the mobile application and to other users over the communication network. Users can use the haptic device for touch communication during video or audio calls. Besides communication between a dyad, this haptic device can also be used in social applications, such as Skype, Facebook, and WhatsApp, for communication among multiple people. Park et al. (Citation2016) designed CheekTouch to transmit MST signals, such as patting, slapping, tickling, and kissing, during a phone call. The haptic prototype was attached to the mobile phone. Users can use different finger gestures on the phone screen to trigger different vibrotactile stimuli on the other party’s cheek. Park et al. (Citation2013) designed POKE to transmit MST signals during a phone call. This prototype was an inflatable surface that could be attached to the back of the mobile device for inputting MST gestures and to the front of the mobile device for receiving MST signals. Hoggan et al. (Citation2012) proposed Pressage and ForcePhone. Users can squeeze the side of the phone with different pressures to trigger different vibrations on the recipient’s phone during a phone call. Teyssier et al. (Citation2018) developed MobiLimb for mobile devices, which could provide MST signals, such as stroking and patting, with haptic stimuli. Furukawa et al. (Citation2012) designed KUSUGURI, a tactile interface with which a user could share a body part with another user; dyads could use this interface for bidirectional tickling. Bales et al. (Citation2011) designed CoupleVIBE, which could be used for person-to-person touch. It sends touch cues between partners’ mobile phones by vibrations to share location information. Pradana et al. (Citation2014) designed a ring-shaped device to transmit MST signals when using mobile phones for telecommunications. Ranasinghe et al. (Citation2020) designed a haptic device, EnPower, with which users can customize MST signals on mobile phones and transmit them to a glove for visually impaired people to perceive. Zhang et al. (Citation2021) designed SansTouch for remote handshaking with a mobile phone.

Based on the above, we found some space for further investigation:

Communication modes. Many researchers focused on traditional phone calls, with the phone on the ear. This study will consider other communication modes, such as video calling and texting. Zhang and Cheok (Citation2021) considered video calling, but there was an additional prototype for the mobile device. This study will use a mobile device embedded with an LRA to display vibrotactile stimuli directly without extra devices.

Touch modality. In the above studies, visual information and haptic information are mostly separated. Wilson and Brewster (Citation2017) have demonstrated that combining multiple modalities could increase the available range of emotional states. This study will apply multimodal MST signals that combine visual and haptic information. We will explore whether multimodal signals receive better feedback than single-modality signals.

2.2. Social presence with haptic stimuli

Social presence with haptic stimuli has been explored in collaborative environments (Basdogan et al., Citation2000; Chellali et al., Citation2011; Giannopoulos et al., Citation2008; Sallnäs, Citation2010; Yarosh et al., Citation2017), remote communication (Nakanishi et al., Citation2014), affect communication (Ahmed et al., Citation2020), interaction with a virtual agent (Ahmed et al., Citation2020; Lee et al., Citation2017), and behavior influence (Hadi & Valenzuela, Citation2020). Researchers used haptic devices, such as wearables (Yarosh et al., Citation2017), robot hands (Chellali et al., Citation2011; Nakanishi et al., Citation2014), designed haptic prototypes for hands (Basdogan et al., Citation2000; Giannopoulos et al., Citation2008; Lee et al., Citation2017) and feet (Lee et al., Citation2017), smartwatches (Hadi & Valenzuela, Citation2020), and mobile devices (Hadi & Valenzuela, Citation2020).

Oh et al. (Citation2018) reviewed studies on haptic social presence and found that haptic stimuli influenced social presence significantly. A positive relationship existed between haptic stimuli and perceptions of social presence (Oh et al., Citation2018).

Based on the above, we observe the following:

Haptic devices. In social presence, most haptic devices are wearables, robots, and other prototypes developed to display haptic stimuli. Not many studies applied mobile devices in the social presence field. Hadi and Valenzuela (Citation2020) used the mobile device and conducted studies to show that text messages with haptic alerts help improve consumer performance on related tasks. The increased sense of social presence helped to drive this effect. But the context of their work differs from ours. They applied haptic alert messages to influence behavior, while we planned to apply vibrotactile stimuli for MST gestures in mobile communication to increase social presence.

Contexts. Most studies focused on collaborative tasks [e.g., collaboratively designing and drawing a new logo or poster (Yarosh et al., Citation2017), and passing an object between two people (Sallnäs, Citation2010)]. This study will consider a daily casual chatting context without specific collaboration tasks.

2.3. Asynchronous and synchronous MST signals transmission and emotional expressions in mobile application

Synchronous communication includes face-to-face and telephone conversations, such as phone calls and video calls (Robinson & Stubberud, Citation2012). Asynchronous communication is usually conducted through email, online discussion boards, or direct messages, such as texting in WhatsApp, WeChat, or iMessage (Bailey et al., Citation2021).

Many researchers have developed prototypes and applications for synchronous MST signals in mobile communication. For phone calls, researchers have designed different prototypes, such as Kissenger (Zhang & Cheok, Citation2021), CheekTouch (Park et al., Citation2016), POKE (Park et al., Citation2013), ForcePhone and Pressage (Hoggan et al., Citation2012), CoupleVIBE (Bales et al., Citation2011), and Bendi (Park et al., Citation2015). For video calling, prototypes can communicate MST signals such as remote handshaking (Nakanishi et al., Citation2014) and kissing (Zhang & Cheok, Citation2021). Other examples of MST signals for synchronous communication are tickling with KUSUGURI (Furukawa et al., Citation2012), grasping with a mobile-sized prototype (Hemmert et al., Citation2011), and handshaking with SansTouch (Zhang et al., Citation2021).

Researchers have also developed prototypes and applications for asynchronous communication, adding vibrotactile stimuli to specific text or using multimodal emojis during texting. Pradana et al. (Citation2014) designed a ring-shaped wearable system, Ring U, which could promote emotional communication between people during text messaging using vibrotactile expressions. MobiLimb (Teyssier et al., Citation2018) allows users to send a tactile emoji during texting. The other user can feel the tactile emoji on the back of the hand while holding the phone or on the wrist with MobiLimb (Teyssier et al., Citation2018). Israr et al. (Citation2015) designed Feel Messenger, a social and instant messaging application that provided emojis and expressions with vibrotactile stimuli. Wilson and Brewster (Citation2017) designed Multi-Moji for mobile communication applications, which combined vibrotactile, thermal, and visual stimuli to expand the affective range of feedback. Wei et al. (Citation2020) proposed a method to design vibrotactile stimuli for emojis and stickers in online chatting applications.

Based on the above, we find that:

For synchronous communication, most MST signals on mobile devices have a single modality during a phone call, mainly the haptic modality. This article will consider multimodal MST signals in visual and haptic modalities. Besides phone calls, we will consider the communication modes of texting and video calling. Zhang and Cheok (Citation2021) considered kissing on mobile devices during video calls, but the MST types were limited. Users needed to touch the prototype with their lips and cheeks to feel kissing (Zhang & Cheok, Citation2021), which might not be convenient for some people. This study will consider designing for a phone held in the hands.

For asynchronous communication, most studies applied multimodal emojis to expand the affective range of feedback. Most emojis express different looks and emotions. Specific multimodal MST signals on mobile devices have received little attention. This study will consider multimodal MST signals in online communication.

3. Application design

3.1. Design of mediated social touch

3.1.1. Selection of MST gestures

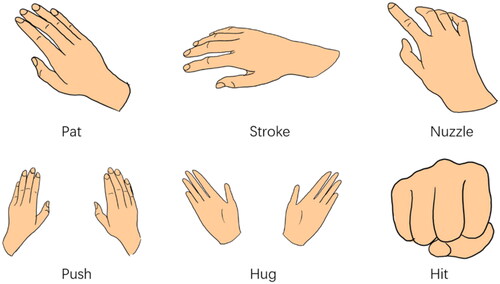

We explored the physical properties and designed vibrotactile stimuli for 23 MST gestures in our previous studies (Wei et al., Citation2022a, Citation2022b). We chose only six MST gestures (“Hit,” “Pat,” “Stroke,” “Nuzzle,” “Push,” and “Hug”) in this study for the following reasons:

This research is to explore if adding vibrotactile stimuli could increase the social presence and if there is a significant difference between synchronous (video calling) and asynchronous communication (texting) in social presence when applying MST signals. The key point is not the categories of MST gestures. It is not necessary to apply all MST signals.

Due to the technical limitation at this stage, we cannot apply all MST signals on the interface because the interface is too small to display all MST signals without overlapping.

As touch communicates emotion (Hertenstein et al., Citation2006, Citation2009), we wanted to select MST gestures that convey different emotional expressions (Hertenstein et al., Citation2009) and are frequently used in mobile communication (Wei et al., Citation2022a). We selected “Hit,” “Hug,” “Nuzzle,” “Pat,” “Push,” and “Stroke” in this study for the following reasons:

From the perspective of emotional expression. We found that “Hit,” “Hug,” “Nuzzle,” “Pat,” “Push,” and “Stroke” could cover rich emotional expressions, such as “Anger,” “Happiness,” “Sadness,” “Disgust,” “Love,” “Gratitude,” and “Sympathy,” between male-male, male-female, female-female, and female-male dyads (Hertenstein et al., Citation2009). We do not consider “Fear” in this study, as the dialogue in our user study will not cause fear.

From the perspective of usage frequency. Wei et al. (Citation2022a) show that users use “Hug,” “Pat,” and “Stroke” frequently and sometimes use “Hit,” “Nuzzle,” and “Push.” It is therefore reasonable to apply these MST gestures in this study.

Table 1 shows the possible expressions these selected MST gestures could express (Hertenstein et al., Citation2009) and the usage frequency in mobile communication (Wei et al., Citation2022a).

Table 1. Selection of MST gestures.

3.1.2. Visual design

We need visual hints for MST gesture input. We applied the concrete figure of hands as the sticker for MST gesture input for the following reasons:

Visual compensation. It is difficult for vibrotactile stimuli alone, as a single modality, to express an MST gesture (Ernst & Banks, Citation2002; Wei et al., Citation2022b). To help the receiver understand exactly which MST signal the sender has sent in online communication, we use a concrete figure of hands to display the MST gesture. To make senders aware of which MST signals they are sending, the sender’s and receiver’s phones display the same concrete figure of hands.

Demands in the user study. The MST gestures correspond to the real social touch (RST) gestures in this study. We want the visual information of hands in MST gestures and RST gestures to be similar, so that stylized, cute hand figures do not affect the research results. We therefore designed the visuals of MST gestures to resemble real hands.

The principles to design visual stimuli of MST gestures are as follows:

User-defined gestures. We designed visual stickers based on user-defined gestures for MST. The reason is that user-defined gestures are extracted from users’ natural gestures, which can reflect the users’ typical behavior (Wobbrock et al., Citation2009). We applied the elicitation study (Wobbrock et al., Citation2009), obtained user-defined gestures for MST, and calculated each user-defined gesture’s agreement rate in our previous study (Wei et al., Citation2022a).

Emoji. For MST gestures that are not easy to understand from the figure of the user-defined gesture alone (Wei et al., Citation2022a), we designed the sticker based on emoji. The reason is that emoji are widely used and have been validated as effective in being understood and accepted by users.

Table 2 shows the visual design concepts. We designed the visual stickers based on user-defined gestures for “Hit,” “Pat,” “Stroke,” “Nuzzle,” and “Push.” The visual stickers of these MST gestures are similar to the user-defined gestures.

Table 2. Visual design inspiration.

For “Hug,” Wei et al. (Citation2022a) show that “Hug” and “Squeeze” have the same user-defined gesture in different contexts. With the visual modality as the only channel, it is not easy to differentiate the user-defined gestures of these two MST gestures. Wei et al. (Citation2022a) also found that the user-defined gesture for “Hug” was in the metaphor group. So, we referred to emoji to choose a unique sticker for “Hug.” Figure 1 shows the visual stickers we designed for the input MST gestures in this study.

3.1.3. Vibrotactile stimuli

In our previous study, we provided a design process (Wei et al., Citation2022b) to design vibrotactile stimuli for MST gestures based on social touch properties, such as pressure and duration (Wei et al., Citation2022a). We applied the recommended vibrotactile stimuli for these MST gestures, except for “Stroke.” The results of that study showed that the vibrotactile stimuli for “Stroke” were too strong (Wei et al., Citation2022b). In this study, we changed the frequency of “Stroke” to give it a gentler intensity.

Table 3 shows the parameters and recorded accelerations of vibrotactile stimuli for the MST gestures (Wei et al., Citation2022b).

Table 3. Details of vibrotactile stimuli for the MST gestures selected from Wei et al. (Citation2022b).

3.2. Interface design

We developed texting and video calling interfaces with haptic input and display using Android Studio (API: 25).

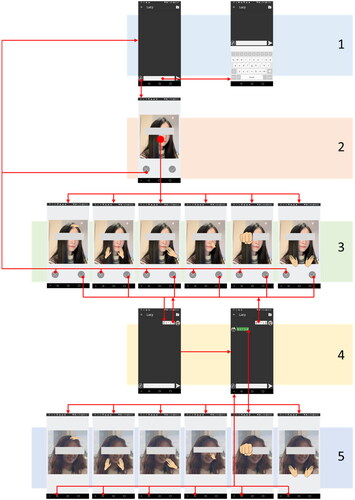

3.2.1. Interface for texting and sticker animation

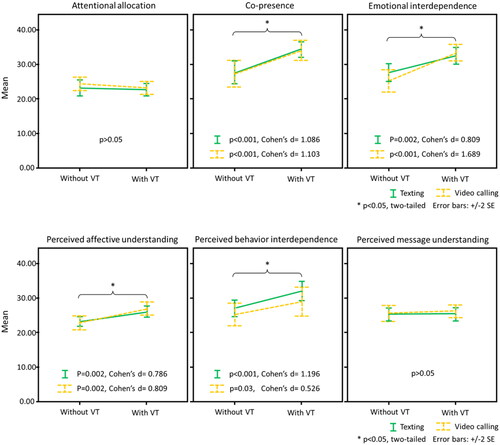

Figure 2 shows the interface for texting. The visual stickers of MST gestures are invisible at first. A visual sticker is displayed when the user touches its specific area and becomes invisible again when the user’s finger leaves that area. The positions of the visual stickers are fixed. We set the positions considering two aspects: (1) plausible positions in real life and (2) even distribution throughout the interface. For example, “Stroke” on the head, “Nuzzle” on the nose, “Hit” in the face, “Pat” on the shoulder, and “Push” on the shoulder are all possible gestures in real life. “Hug” around the shoulder is also possible in real life and helps distribute the stickers evenly in the interface. The positions and display of the MST gestures are as follows:

Figure 2. Interface for texting. (a) interface for texting words, (b) interface for the participants’ inputting MST gestures (the avatar is the experimenter), (c) positions of each MST gesture, (d) interface for the participants’ receiving MST gestures (the avatar is the participant), and (e) positions of each MST gesture. We developed this texting interface based on an open-source application (https://github.com/Baloneo/LANC).

Stroke. We set the position of “Stroke” on the upper head. The visual sticker displays when the user touches the upper head of the photo. The visual sticker of “Stroke” moves laterally along with the lateral movement of the user’s finger on the touchscreen.

Nuzzle. We set the “Nuzzle” position on the center of the nose. The visual sticker displays when the user touches the nose. The “Nuzzle” sticker moves back and forth along with the lateral movement of the user’s finger on the touchscreen.

Hit. We set the position of “Hit” on the left side of the head. The visual sticker displays when the user touches the left side of the head of the photo. There is no lateral movement.

Pat. We set the position of “Pat” on the right side of the shoulder. The visual sticker displays when the user touches the right shoulder. There is no lateral movement.

Push. We set the position of “Push” in the middle of two shoulders and at a lower position than “Pat.” The visual sticker displays when the user touches a specific area. There is no lateral movement.

Hug. We set the position of “Hug” around the middle of two shoulders and at a lower position than “Push.” The visual sticker displays when the user touches a specific area. There is no lateral movement.

The positions of the MST gestures on the experimenter’s photo are the same as those on the participant’s photo.
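The touch-area behaviour described above can be sketched as a simple hit test. This is a minimal illustration in Python, not the application's Android code: the `Region` class, the function names, and all rectangle coordinates are hypothetical, chosen only to follow the relative placement given in the text (for example, “Push” below “Pat,” and “Hug” below “Push”).

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Region:
    """A rectangular touch area on the photo, in normalized 0..1 coordinates."""
    gesture: str
    left: float
    top: float
    right: float
    bottom: float
    follows_finger: bool  # True if the sticker tracks lateral finger movement

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


# Hypothetical rectangles (origin at the top-left corner of the photo).
REGIONS = [
    Region("Stroke", 0.30, 0.05, 0.70, 0.20, True),   # upper head
    Region("Hit",    0.15, 0.22, 0.35, 0.45, False),  # left side of the head
    Region("Nuzzle", 0.42, 0.30, 0.58, 0.42, True),   # center of the nose
    Region("Pat",    0.68, 0.55, 0.92, 0.68, False),  # right shoulder
    Region("Push",   0.35, 0.70, 0.65, 0.80, False),  # between shoulders, below Pat
    Region("Hug",    0.25, 0.82, 0.75, 0.95, False),  # between shoulders, below Push
]


def gesture_at(x: float, y: float) -> Optional[Region]:
    """Return the region under the finger, or None (sticker stays invisible)."""
    for region in REGIONS:
        if region.contains(x, y):
            return region
    return None


def sticker_position(region: Region, x: float) -> Tuple[float, float]:
    """Place the sticker: follow the finger laterally, or stay at the center."""
    center_x = (region.left + region.right) / 2
    center_y = (region.top + region.bottom) / 2
    if region.follows_finger:
        clamped_x = min(max(x, region.left), region.right)
        return (clamped_x, center_y)
    return (center_x, center_y)
```

In this sketch, a touch outside every region returns `None`, which corresponds to the sticker becoming invisible again when the finger leaves the area.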

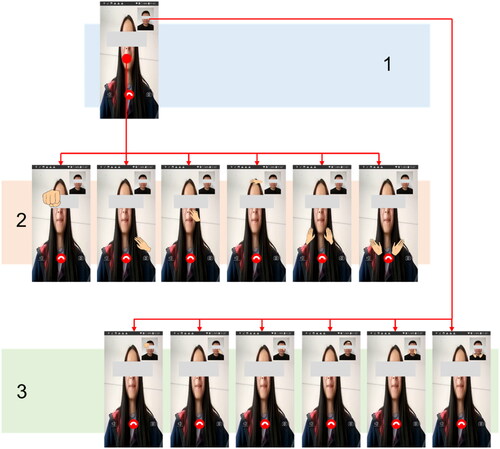

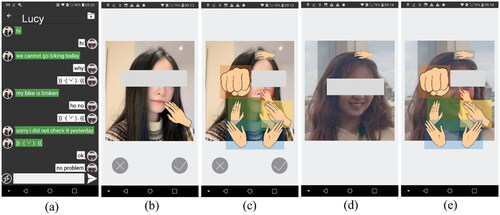

3.2.2. Interface for video calls

Figure 3 shows the interface for video calls. The relative positions of stickers in the video calling interface are similar to those in the texting interface: “Stroke” on the upper head, “Hit” on the left side of the head, “Nuzzle” on the center of the nose, “Pat” on the right shoulder, “Push” on the shoulder, and “Hug” on the lower part of the shoulder.

Figure 3. Interface for video calls. (a) original interface for video calls, (b) interface for the participants’ inputting MST gestures (the full-screen image is the experimenter), (c) positions of each MST gesture on the experimenter’s image, (d) interface for the participant’s receiving MST gestures (the image box in the upper right corner shows the participant), and (e) positions of each MST gesture on the participant’s image. We developed this video calling interface based on an open-source application (https://github.com/xmtggh/VideoCalling).

For the participant’s image box in the upper right corner of the interface, the relative positions of MST gestures are similar to those on the experimenter’s image (the full-screen image). The stickers are smaller than those on the experimenter’s image.
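One way to keep relative positions the same across the full-screen image and the smaller image box is to store each gesture area in normalized coordinates and scale it to the target view. The sketch below assumes this approach; the function name and the pixel sizes of both views are hypothetical and are not taken from the application.

```python
def to_pixels(norm_rect, view):
    """Map a normalized (left, top, right, bottom) rectangle into a view.

    `view` is (x, y, width, height) in screen pixels. The same normalized
    rectangle yields a smaller area when the view itself is smaller.
    """
    left, top, right, bottom = norm_rect
    vx, vy, vw, vh = view
    return (vx + left * vw, vy + top * vh, vx + right * vw, vy + bottom * vh)


# Hypothetical views: a full-screen image and an image box in the upper right.
FULL_SCREEN = (0, 0, 1000, 2000)
IMAGE_BOX = (1000, 100, 400, 800)

area = (0.25, 0.5, 0.75, 1.0)  # illustrative normalized gesture area
full = to_pixels(area, FULL_SCREEN)
small = to_pixels(area, IMAGE_BOX)
```

Here `full` spans 500 pixels horizontally while `small` spans 200, so the sticker area shrinks with the view but keeps its relative position.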

3.3. Interface structure

We developed an application for texting and video calling. We created only the interfaces and functionalities necessary for the user study and did not consider those irrelevant to this study, such as login or logout.

3.3.1. Texting

Table 4 describes the structure of the texting interface. There are five layers:

Table 4. Description of the interface structure of texting.

Layer 1. This layer shows the texting interface. The basic layout is similar to that in existing applications (e.g., WhatsApp, WeChat, Teams on mobile devices). The primary functions on this page are texting, sending the message, and jumping to Layer 2, the MST gesture input interface. We provided a haptic button in the lower left corner of this page. On its right are the text input box and the send button.

Layer 2 and Layer 3. These two layers show the MST gesture input interface. Pressing the haptic button in Layer 1 leads to Layer 2. The central part with the image of the other person is the area for MST gesture input. We provided buttons for confirming and cancelling the MST gesture input. When the MST gesture input is cancelled, the interface jumps back to Layer 1. When the user starts to input MST gestures, Layer 3 shows the sticker of the MST gesture. When the MST gesture input is confirmed, the interface jumps to Layer 4 and automatically sends the textual MST icon (}}◝(ˊᵕˋ)◟{{).

Layer 4. Pressing the textual MST icon from the participant’s side (the right side in the interface) leads to Layer 3, which shows what the participant has sent. Pressing the textual MST icon from the other side (the left side in the interface) leads to Layer 5.

Layer 5. This layer is the MST signals display interface, which shows what MST signals the other person sends.
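The navigation between the five layers can be summarized as a small transition table. The following Python sketch is illustrative only: the event names are hypothetical, and two details are assumptions rather than statements from the text, namely that cancelling is possible from both Layer 2 and Layer 3 and that confirming happens from Layer 3. The text does not describe how the user leaves Layer 5, so no transition is modelled for it.

```python
# (current layer, event) -> next layer; event names are hypothetical labels.
TRANSITIONS = {
    (1, "press_haptic_button"): 2,   # open the MST gesture input interface
    (2, "start_gesture_input"): 3,   # the sticker of the MST gesture appears
    (2, "cancel"): 1,
    (3, "cancel"): 1,                # assumption: cancel also works here
    (3, "confirm"): 4,               # the textual MST icon is sent
    (4, "press_own_icon"): 3,        # review what the participant has sent
    (4, "press_other_icon"): 5,      # display what the other person sent
}


def next_layer(layer: int, event: str) -> int:
    """Return the layer reached by `event`; unknown events leave it unchanged."""
    return TRANSITIONS.get((layer, event), layer)


def run(events, start: int = 1) -> int:
    """Apply a sequence of events starting from the texting interface."""
    layer = start
    for event in events:
        layer = next_layer(layer, event)
    return layer
```

For example, `run(["press_haptic_button", "start_gesture_input", "confirm"])` ends in Layer 4, matching the send path described above.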

3.3.2. Video calling

Table 5 describes the structure of the interface for video calls. There are three layers:

Table 5. Description of the interface structure of video calling.

Layer 1. This layer shows the layout of the video calling interface. The basic layout of this interface is similar to that in existing applications (e.g., WeChat or Teams on mobile devices). The primary function on this page is transmitting the synchronous image of the dyads.

Layer 2. This layer describes the MST gesture input interface. The display area of the other person is also the MST gesture input area. Participants could input MST gestures directly on the interface during the video call, as if the participant were touching the other person face to face through the touchscreen.

Layer 3. This layer describes the MST signal display interface. The window in the upper right corner displays the synchronous image of the participant and the MST signals that the other person sends, as if the other person were touching the participant face to face through the touchscreen.

4. User study

4.1. Research questions

We design and apply MST signals in online communication. There are two research questions, as follows:

Can applying MST gestures with vibrotactile stimuli increase social presence in texting and video calling compared to MST gestures without vibrotactile stimuli?

Is there a significant difference between synchronous (video calling) and asynchronous communication (texting) in social presence when applying MST signals?

4.2. Experiment design

We tested two communication modes (texting and video calling) in two conditions (MST gestures without vibrotactile stimuli and MST gestures with vibrotactile stimuli). We applied a 2 × 2 mixed factorial experimental design. The condition is the within-subjects factor, and the communication mode is the between-subjects factor (Table 6).

Table 6. Descriptions of variables.

The independent variables are the condition and the communication mode. The dependent variable is social presence. Visual feedback is included in both conditions.
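The structure of this mixed design can be sketched as an assignment plan in which each participant is placed in one communication mode and experiences both conditions. The Python sketch below is illustrative only: the function name, the random seed, and the equal split of participants between modes are assumptions, and the order in which a participant experiences the two conditions is not modelled.

```python
import random

MODES = ("texting", "video calling")                       # between-subjects
CONDITIONS = ("without vibrotactile stimuli",
              "with vibrotactile stimuli")                 # within-subjects


def assign(participant_ids, seed=0):
    """Return {participant: [(mode, condition), ...]} for a 2 x 2 mixed design.

    Assumption: participants are split evenly between the two modes.
    """
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)   # random allocation to a mode
    half = len(ids) // 2
    plan = {}
    for index, pid in enumerate(ids):
        mode = MODES[0] if index < half else MODES[1]
        # Every participant is tested in both conditions within one mode.
        plan[pid] = [(mode, condition) for condition in CONDITIONS]
    return plan


plan = assign(range(1, 41))
```

Each entry of `plan` holds two sessions that share the same mode and differ only in the condition, which is what makes the condition a within-subjects factor and the mode a between-subjects factor.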

4.3. Participants

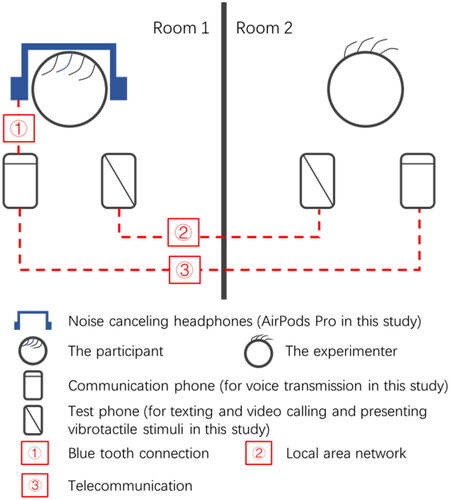

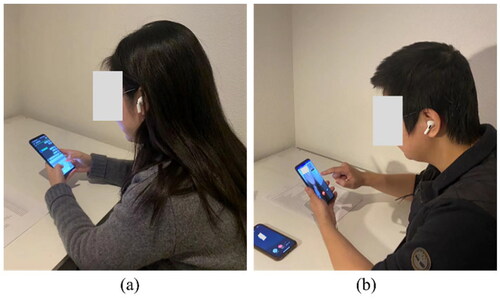

We recruited 40 participants (aged 22–37, mean = 27.13, SD = 3.65, 24 females and 16 males) from the local university to participate in the user study. Participants were asked to wear noise-cancelling headphones to avoid the sounds from the vibrotactile stimuli. All participants had experience using smartphones and online communication applications for texting and video calling.

Figure 6. Test environment. (a) Texting and (b) video calling (the second phone on the desk is used for voice transmission).

Participants were asked to hold the test phone with two hands when texting. When inputting MST gestures, participants were asked to hold the test phone with their left hand and input MST gestures with the right index finger. Although both the thumb and the index finger are mainly used for interaction with a smartphone’s touchscreen (Le et al., Citation2019), we chose the index finger for inputting MST gestures because it was used more frequently than the thumb in the user-defined gestures for the MST gestures chosen in this study.

4.4. Experiment setup

4.4.1. Apparatus

We used a customized version of the LG V30 smartphone as our test phone, the same as that in our previous study (Wei et al., Citation2022a, Citation2022b, Citation2022d). It contains a Linear Resonance Actuator (LRA) (MPlus 1040). The LRA could convert the drive signals to vibrotactile stimuli.

We have two smartphones (both LG V30). One runs the software that triggers MST gestures with vibrotactile stimuli (visual and haptic feedback). The other runs the software that triggers MST gestures without vibrotactile stimuli (visual feedback only). During the user study, participants used the phone that provides vibrotactile stimuli when testing MST gestures with vibrotactile stimuli and the other phone when testing MST gestures without them.

4.4.2. Experiment environment

Participants sat in front of a desk, wearing noise-cancelling headphones (AirPods Pro), which also supported phone calls. The experimenter stayed in another room to interact with the participants through texting or video calling. The experiment used a role-play scenario. Participants were asked to imagine they were talking with a friend online. The role-play setting could help participants stay focused on the topic and conversation (Seland, Citation2006; Simsarian, Citation2003). The details of the environments are as follows:

Texting. Participants and the experimenter used the test phones to chat over the local area network (LAN).

Video calling. Due to technical limitations, the video call application we developed could not transmit voice well. We provided an iPhone to participants. The headphones were connected to the iPhone. The experimenter used another phone to call the iPhone in the participants’ room. Participants used the test phone for synchronous video transmission and the iPhone with headphones for synchronous voice transmission.

4.4.3. Scenario

We tried to create a situation in which participants might feel disappointed and then relieved, so that they might use MST signals to express their emotions. We provided one scenario with two activities in the user study: A1 (going biking) and A2 (visiting a garden). In the scenario, the experimenter and the participant have arranged one activity for the weekend. However, for some reason, the arranged activity could not take place. The experimenter contacted the participant by texting or video calling to discuss this and suggested a new activity.

Some participants may prefer A1, while other participants may prefer A2. To reduce the effect of activity preference and prevent the activity order from affecting the experimental results, we counterbalanced the order of activities across participants.
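Counterbalancing of this kind can be sketched as alternating the two possible activity orders across participants. The Python sketch below assumes simple alternation by participant number, which is one common scheme; the text does not state which scheme was actually used, and the function name is hypothetical.

```python
from itertools import cycle

# The two possible orders: (arranged activity, suggested new activity).
ORDERS = (("A1", "A2"), ("A2", "A1"))


def counterbalance(participant_ids):
    """Alternate the activity order so each order is used equally often."""
    return {pid: order for pid, order in zip(participant_ids, cycle(ORDERS))}


orders = counterbalance(range(1, 41))
a1_first = sum(1 for order in orders.values() if order[0] == "A1")
```

With an even number of participants, half of them start with A1 as the arranged activity and half with A2, so `a1_first` equals half the sample size.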

When A1 (going biking) is the arranged activity and A2 (visiting a garden) is the suggested new activity, the details of A1 and A2 presented to participants are as follows:

A1: “You (the participant) bought a new bicycle last week, and you want to find a partner to go biking very much. You invited your friend Lucy (the experimenter) to go biking on weekends. Lucy has not used her bike for a long time. However, she forgot to check if her bike was ready to use. On Saturday morning, you were waiting for Lucy to set off. She suddenly found that her bike was broken. She needed to tell you that you could not go biking today (by texting or video calling).”

A2: “Your friend Lucy (the experimenter) suddenly found that a seasoned garden just opened on this Saturday. This garden was only open for the public twice a year, two weeks in spring, two weeks in autumn. You missed the open time last autumn. You wanted to visit this garden very much. Now is a great chance to go there.”

When A2 (visiting a garden) is the arranged activity and A1 (going biking) is the suggested new activity, the details of A2 and A1 presented to participants are as follows:

A2: “Your friend Lucy (the experimenter) invited you (the participant) to go to a seasoned garden this weekend. This garden was only open for the public twice a year, two weeks in spring, and two weeks in autumn. You missed the open time last autumn. Now is a great chance to go there. You and Lucy planned to meet at the bus station on Saturday morning. Before leaving the house, Lucy checked the details of the garden. Suddenly she found that she got the opening hour wrong. The garden would open next week in this spring, not this week. So, you could not go to the garden today.”

A1: “Lucy (the experimenter) found the weather was great today. She wanted to invite you (the participant) to go biking to a forest.”

We give an example of a texting dialogue (taken from one participant in the user study) to show how the experimenter communicated with the participant:

Experimenter (initiates the conversation): ‘Sorry, we cannot go to the park today. I remembered a wrong opening hour. It will open next weekend, not this weekend.’

Participant: ‘That is so bad’, ‘}}◝(ˊᵕˋ)◟{{’.

Experimenter (proposes another activity): ‘The weather is great, maybe we could go biking to the forest?’

Participant: ‘Great! Even better!’, ‘}}◝(ˊᵕˋ)◟{{’

Experimenter (suggests a meeting time and location): ‘Then we could meet at your house in 20 minutes. See you.’

Participant: ‘See you.’, ‘}}◝(ˊᵕˋ)◟{{’

4.4.4. Measures

We collected quantitative data and qualitative data in the user study.

For quantitative data, we used the Networked Minds Measure of Social Presence Inventory (NMSPI) (Harms & Biocca, Citation2004). This questionnaire has been validated as able to distinguish levels of social presence in mediated interactions (Harms & Biocca, Citation2004), which makes it suitable for measuring MST signals in this study. It has six dimensions, each with six items rated on a 7-point Likert scale ranging from one (strongly disagree) to seven (strongly agree). The detailed Likert scale items are given in Harms and Biocca (Citation2004). An example of the questionnaire is in Table 7. Based on our application, we also describe what the dimensions and questions mean in this study. The whole questionnaire was used to rate the MST signals function in this study.

Table 7. Example questionnaire of the NMSPI (Harms & Biocca, Citation2004).
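The questionnaire structure described above (six dimensions, six items each, 7-point scale) can be sketched in code. This is a minimal illustration, not the authors' scoring procedure: averaging the six items per dimension is an assumption, and the `reverse_items` parameter is a hypothetical hook for negatively worded items, whose actual keying is given in Harms and Biocca (Citation2004).

```python
from statistics import mean

# The six dimension names as used in this article.
DIMENSIONS = [
    "co-presence",
    "attentional allocation",
    "perceived message understanding",
    "perceived affective understanding",
    "perceived emotional interdependence",
    "perceived behavior interdependence",
]
ITEMS_PER_DIMENSION = 6
SCALE_MIN, SCALE_MAX = 1, 7  # 1 = strongly disagree, 7 = strongly agree


def score_dimension(ratings, reverse_items=()):
    """Return the mean rating of one dimension (assumed scoring rule).

    ratings: six integers on the 7-point scale.
    reverse_items: zero-based indices of items to reverse-score
    (hypothetical; not specified in this article).
    """
    if len(ratings) != ITEMS_PER_DIMENSION:
        raise ValueError("expected six ratings per dimension")
    adjusted = []
    for index, rating in enumerate(ratings):
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating {rating} is outside the 7-point scale")
        if index in reverse_items:
            rating = SCALE_MIN + SCALE_MAX - rating  # 1 <-> 7, 2 <-> 6, ...
        adjusted.append(rating)
    return mean(adjusted)


def score_questionnaire(responses, reverse_key=None):
    """Score all six dimensions; responses maps dimension name -> six ratings."""
    reverse_key = reverse_key or {}
    return {
        name: score_dimension(responses[name], reverse_key.get(name, ()))
        for name in DIMENSIONS
    }
```

Each participant would yield one such set of six scores per condition, which is the form the within-subjects comparison needs.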

For qualitative data, we interviewed participants. For privacy reasons, we recorded only the participants’ voices, and only the transcripts were kept and used for analysis. Each interview consisted of two fixed questions and an open discussion:

Can you feel that you are touching the other person, or the other person is touching you (MST gestures with vibrotactile stimuli)?

If this function is available in a real online communication application, will you use it (MST gestures with vibrotactile stimuli)?

Talk about anything that came to your mind based on your experience of the test.

4.5. Procedure

We first briefly introduced the test to the participants and provided consent forms and questionnaires.

We introduced the test interface to the participants. As the visual stickers of MST gestures were not visible at first, a training session was needed for participants to get familiar with the test interface and the position of MST gestures.

We had two communication modes: texting and video calling. Twenty participants (P1–P20 in Table 8) tested texting, while the other 20 participants (P21–P40 in Table 8) tested video calling (between-subjects). We had two conditions: MST gestures with vibrotactile stimuli and MST gestures without vibrotactile stimuli (within-subjects).

Table 8. Test communication modes, conditions, and activities for participants.

The order of activities provided to each participant was counterbalanced. Table 8 shows the activities and their order for each participant.
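As an illustration of counterbalancing, the sketch below alternates the two activity orders across the participants of one group so that each order is used equally often. The alternation rule and the function name `assign_activity_orders` are assumptions for illustration; the actual assignment used in the study is the one listed in Table 8.

```python
from itertools import cycle

# The two possible orders of the arranged and the suggested activity.
ACTIVITY_ORDERS = [("A1", "A2"), ("A2", "A1")]


def assign_activity_orders(participant_ids):
    """Alternate the two activity orders across participants (assumed rule)."""
    return dict(zip(participant_ids, cycle(ACTIVITY_ORDERS)))


# Example: the 20 participants of the texting group.
texting_group = [f"P{i}" for i in range(1, 21)]
texting_orders = assign_activity_orders(texting_group)
```

With an even group size, simple alternation gives each order to exactly half of the group.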

After the introduction and the training session, the detailed procedure for each participant was as follows:

Participants first tried one communication mode with one condition, having online communication with the experimenter. For example, a participant first tried using MST gestures without vibrotactile stimuli when texting.

Participants completed the 7-point Likert scale questionnaire for subjective ratings (e.g., subjective ratings for MST gestures without vibrotactile stimuli when texting).

Participants tried the same communication mode with the other condition, having online communication with the experimenter. For example, the participant tried using MST gestures with vibrotactile stimuli when texting.

Participants completed another copy of the 7-point Likert scale questionnaire for subjective ratings (e.g., subjective ratings for MST gestures with vibrotactile stimuli when texting).

The experimenter interviewed the participants.

5. Results

5.1. Quantitative results

5.1.1. Between-subjects analysis

We used SPSS 23.0 to analyze the data. We tested normality with the Shapiro–Wilk test because Mishra et al. (Citation2019) showed that this method is appropriate for small sample sizes (<50 samples). All data sets in each dimension conformed to a normal distribution (p > 0.01 in behavior interdependence, p > 0.05 in the remaining five dimensions). There were no interaction effects between the communication mode and the condition in any dimension (p > 0.05).

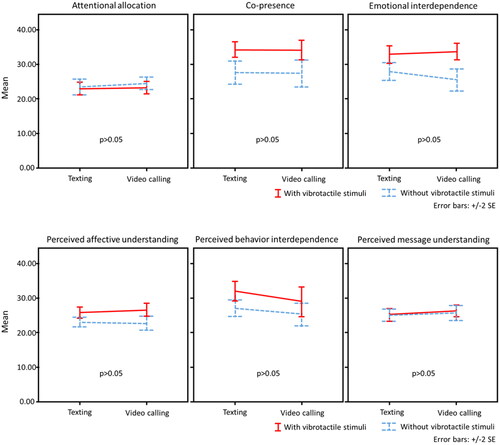

We conducted a one-way MANOVA to test whether the communication mode matters in each dimension of social presence. The results showed no significant differences between the communication modes in any dimension of social presence (p > 0.05). This indicated there was no significant difference between texting and video calling when applying the MST signals in online communication. We also found no significant gender effects during the evaluation (p > 0.05).

5.1.2. Within-subjects analysis

We conducted a paired-samples t-test to explore whether adding vibrotactile stimuli to MST gestures matters when the difference values followed a normal distribution (Liang et al., Citation2019); otherwise, we conducted the Wilcoxon signed-rank test (Meek et al., Citation2007). All data sets conformed to a normal distribution (p > 0.05) except for perceived message understanding in video calling (p = 0.001).

The results showed that MST gestures with vibrotactile stimuli provided significantly higher co-presence, perceived behavior interdependence, perceived affective understanding, and perceived emotional interdependence than MST gestures without vibrotactile stimuli (p < 0.05). No significant differences could be found in perceived message understanding and attentional allocation of social presence (p > 0.05).
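To make the two within-subjects tests concrete, the sketch below computes the paired t statistic and the Wilcoxon signed-rank sums from paired dimension scores. It is a minimal illustration using only the Python standard library, not a reproduction of the SPSS analysis: it returns test statistics only (p-values would come from a statistics package), and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(with_vibration, without_vibration):
    """Return (t, degrees of freedom) for two paired samples."""
    differences = [a - b for a, b in zip(with_vibration, without_vibration)]
    n = len(differences)
    # t = mean difference divided by its standard error.
    t = mean(differences) / (stdev(differences) / sqrt(n))
    return t, n - 1


def wilcoxon_signed_rank(with_vibration, without_vibration):
    """Return (W_plus, W_minus): rank sums of positive and negative differences.

    Zero differences are dropped; tied absolute differences share the
    average of the ranks they occupy.
    """
    differences = [a - b for a, b in zip(with_vibration, without_vibration) if a != b]
    ordered = sorted(differences, key=abs)
    ranks = [0.0] * len(ordered)
    start = 0
    while start < len(ordered):
        end = start
        while end + 1 < len(ordered) and abs(ordered[end + 1]) == abs(ordered[start]):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for position in range(start, end + 1):
            ranks[position] = average_rank
        start = end + 1
    w_plus = sum(rank for rank, diff in zip(ranks, ordered) if diff > 0)
    w_minus = sum(rank for rank, diff in zip(ranks, ordered) if diff < 0)
    return w_plus, w_minus


# Invented example scores for six participants (not data from the study).
example_with = [5.0, 6.0, 5.5, 6.5, 4.5, 6.0]
example_without = [4.5, 5.0, 5.5, 5.0, 5.0, 5.5]
t_value, dof = paired_t_statistic(example_with, example_without)
w_plus, w_minus = wilcoxon_signed_rank(example_with, example_without)
```

The t statistic is appropriate when the paired differences are approximately normal; the rank sums are the fallback used when they are not, as for perceived message understanding in video calling.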

5.2. Qualitative results

5.2.1. Thematic analysis

We conducted a thematic analysis (Braun & Clarke, Citation2012) of the qualitative data. We recorded the participants’ voices during the interviews and transcribed the recordings. After familiarizing ourselves with the transcripts of participants’ ideas (phase 1 in Braun & Clarke, Citation2012), we generated initial codes (descriptive sentences in Tables 9 and 10) based on participants’ interviews (phase 2 in Braun & Clarke, Citation2012). We then derived themes inductively (phase 3 to phase 5 in Braun & Clarke, Citation2012). We provide iterative recommendations based on the quantitative analysis (phase 6 in Braun & Clarke, Citation2012) in the later Discussion section.

Table 9. Qualitative analysis of texting.

Table 10. Qualitative analysis of video calling.

5.2.2. Texting

For the first question, about whether participants could touch or be touched by other people via MST signals when texting, participants mainly provided three types of answers (Table 9). Eight participants said they could touch or be touched via this function. Six participants mentioned they could touch others via MST signals, but it was difficult to be touched via this function. P6 and P9 said that they felt they could touch others via MST signals, but when receiving MST signals they just felt vibrations on their hands or felt that the mobile phone was vibrating. This suggests that gestures with vibrotactile stimuli and visual information might make the touch more real. Six participants could not touch or be touched via this function. Two of them said this function provided a way to express emotion rather than send touch. One participant could feel the vibration when interacting with the touchscreen, but that vibration could not be regarded as social touch. The remaining participants said they were just interacting with the touchscreen or with an electronic pet, or just inputting some gestures on a photo, which did not make them feel that they touched or were touched by others via MST signals.

For the second question, about whether participants would use this function in online communication, three types of answers were provided (Table 9). Nine participants mentioned that they would use this function in online communication. Eight participants said that they would use this function under certain conditions. Participants mentioned that they would be more attracted by a more straightforward and more rational interactive interface and by more types of interesting MST signals. They also wanted to customize virtual and cartoon avatars. One participant mentioned he/she would use this function with familiar people; for unfamiliar people, texting with words was enough. Two participants said they were used to using only words rather than MST signals, emojis, or stickers during texting.

For answers to open questions, we found six themes: Touch, Interactive interface, Avatar, Multimodal MST signals, Customized demands, and Other factors. The Touch theme has four dimensions: Type, Position, Visual sticker, and Vibrotactile stimuli. Table 9 shows the themes and descriptions.

5.2.3. Video calling

For the first question, about whether participants could touch or be touched by other people via MST signals during video calls, participants mainly provided four types of answers (Table 10). Nine participants said they could touch or be touched by this function. Two participants mentioned they could touch other people via MST signals, but it was difficult to be touched via MST signals. P9 said she could “Hug” and “Stroke,” but the “Hit” was too gentle. Eight participants could not touch or be touched via this function. Four of them said they could feel vibrations, which could not be regarded as social touch. Two of them mentioned they could feel strong emotional expressions rather than social touch. Two participants mentioned the right hand was performing gestures, but the left hand felt vibrations, which made it difficult to touch or be touched via this function.

For the second question, about whether participants would use this function in online communication, two types of answers were provided (Table 10). Eighteen participants would use this function in online communication. Two participants would use this function under certain conditions. One of them mentioned he/she would use this function with familiar people. The other said he would use it if the application were better designed.

For answers to open questions, we found six themes: Touch, Interface layout, Emotional expression, Multimodal MST signals, Customized demands, and Other factors. The Touch theme has four dimensions: Type, Position, Visual sticker, and Vibrotactile stimuli. Table 10 shows the themes and descriptions.

6. Discussions

6.1. MST gestures with vibrotactile stimuli and social presence

The research results showed that MST gestures with vibrotactile stimuli increased the social presence in general. This result conforms to previous studies about social presence, which indicates that haptic stimuli help to increase social presence in remote communication in different contexts (Ahmed et al., Citation2020; Basdogan et al., Citation2000; Chellali et al., Citation2011; Giannopoulos et al., Citation2008; Hadi & Valenzuela, Citation2020; Lee et al., Citation2017; Nakanishi et al., Citation2014; Oh et al., Citation2018; Sallnäs, Citation2010; Yarosh et al., Citation2017).

In this study, we further analyzed different dimensions of social presence. Adding vibrotactile stimuli to MST gestures helped increase social presence in co-presence, perceived behavior interdependence, perceived affective understanding, and perceived emotional interdependence. Adding vibrotactile stimuli to MST gestures caused no significant differences in the attentional allocation and perceived message understanding dimensions. We discuss each dimension as follows:

Attentional allocation means the amount of attention the user allocates to and receives from an interactant (Harms & Biocca, Citation2004). There were no significant differences in attentional allocation, no matter if there were vibrotactile stimuli or not, in both video calling and texting. Possible reasons are as follows:

For video calling, participants’ attention was mostly on inputting MST gestures, which might lead to no significant differences in attentional allocation. Many participants mentioned that they paid more attention to their own manipulations and did not pay much attention to MST signals sent from the other person. The upper-right box showing their own image may have been too small to be noticed, whether or not there were vibrations.

For video calling, the hard-to-differentiate vibrotactile stimuli might cause no significant differences in attentional allocation. Many participants mentioned the vibrotactile stimuli for each MST gesture were difficult to differentiate. Sometimes, both members of the dyad were sending MST signals simultaneously. They just felt the mobile device was vibrating, but they did not know who had triggered the vibrations. The vibrotactile stimuli could not work well in this dimension. Users’ confusion might result in similar attentional allocation whether or not there were vibrations.

For texting, the “going to the next page” interaction may have caused no large differences in attentional allocation. The haptic icon led participants to the next page for inputting and perceiving the MST signals. Many participants mentioned that it was inconvenient to press a button and go to the next page for further interaction. Participants wanted to input or receive MST signals directly on the texting page. If participants went to another page, the process of going to the next page might have drawn their attention rather than the vibrotactile stimuli.

For texting, the “poking a photo” interaction may have affected participants’ attentional allocation. Many participants mentioned that “poking a photo” was strange, which might have caught too much attention. This might have led to no significant differences in this dimension during texting.

For perceived message understanding, MST signals are also presented with stickers in this study. No matter if there were vibrotactile stimuli or not, participants could easily know what the other person wanted to express.

For co-presence, many participants mentioned that MST gestures with vibrotactile stimuli were interesting. Although many participants said they could not differentiate the vibrotactile stimuli during video calling, since the stimuli triggered by the participant and by the experimenter sometimes played simultaneously, the vibrotactile stimuli could still make them feel something.

For perceived affective understanding and perceived emotional interdependence, the results showed that MST gestures with vibrotactile stimuli provided significantly higher social presence than MST gestures without vibrotactile stimuli in these two dimensions. This result conforms to other related studies, which have demonstrated that vibrotactile stimuli help express emotions better (Akshita et al., Citation2015; Pradana et al., Citation2014; Takahashi et al., Citation2017; Wilson & Brewster, Citation2017).

For perceived behavior interdependence, many participants mentioned that they would like to use MST signals with vibrotactile stimuli, which could be a new way for them to express emotion.

Based on the above, we found that vibrotactile stimuli mainly help increase social presence in the aspects of co-presence, perceived behavior interdependence, perceived affective understanding, and perceived emotional interdependence. They did not significantly affect message understanding or attentional allocation in mobile communication.

6.2. Communication mode and social presence

Existing studies mainly focused on one communication mode [e.g., phone calls (Hoggan et al., Citation2012; Park et al., Citation2013, Citation2015, Citation2016), texting (Pradana et al., Citation2014), or video calling (Nakanishi et al., Citation2014)]. This study applied two communication modes together and compared whether MST caused differences in these two communication modes.

The result showed no significant differences between texting and video calling when applying MST signals in online communication. Although there might be limitations in the current design, interview results showed that most participants found the interaction was interesting. Participants were willing to use it.

For future design, we need to have a deeper insight into users’ needs of MST signals in different communication modes. We need to apply the MST signals to different modes based on the users’ possible different needs in different modes.

6.3. Implications for future design

We discuss implications for future design based on participants’ interview results, from the perspective of vibrotactile stimuli, visual design, interface design, and interface structure.

6.3.1. Vibrotactile stimuli for MST gestures

About the vibrotactile stimuli for MST gestures, we provided some implications for future design as follows:

More compound waveform composition types (Wei et al., Citation2022b, Citation2022d) are needed. Many participants felt the vibrotactile stimuli were similar and could only feel the difference in length (long and short). These comments illustrate that participants wanted more varied and interesting vibrotactile stimuli than a simple short pulse or a long, unchanging vibration.

Applying multi-modal stimuli to improve the perception of MST signals is necessary. People may not perceive one MST signal in the same way in different contexts or communication modes. This conforms to a previous study (Salminen et al., Citation2009), which showed that vibrotactile stimuli could be more pleasant and less arousing on a bus than in the laboratory. We suggest improving the perception of MST signals in other ways, such as using multi-modal stimuli (haptic, visual, audio, thermal, etc.), because Wilson and Brewster (Citation2017) have demonstrated that multi-modal stimuli increase the available range of perception. For example, some researchers developed Multi-moji (Wilson & Brewster, Citation2017), which combined vibrotactile, visual, and thermal stimuli, and VibEmoji (An et al., Citation2022), which provided vibrotactile stimuli, animation effects, and emotion stickers together in mobile communication.

Making the vibrotactile stimuli and visual stickers more matched in a specific context and communication mode could be considered. We added visual information and context in this study. Ernst and Banks (Citation2002) mentioned that vision and touch both provided information for estimating the MST signals. Visual information is helpful when judging size, shape, or position (Ernst & Banks, Citation2002). The dominant channel to feel the MST signals may be different between the only vibrotactile stimuli condition (Wei et al., Citation2022b) and the vibrotactile stimuli along with visual and context information in this study. The vibrotactile stimuli were designed based on metaphorical cues, while the visual stickers were designed based on gesture movements. It may have caused confusion. The possible solutions are iterating the vibrotactile stimuli or the visual stickers to make them more matched in a specific context and communication mode. For example, in this study, the vibrotactile stimulus for “Hug” was designed to express a feeling of force exerted on other people (Wei et al., Citation2022b). But the visual sticker of “Hug” comes from emoji, which may not express the feeling of force. The vibrotactile stimuli and visual sticker may not match. We may add varying colors or a progress bar to express a metaphorical force change rather than a simple touch sticker.

We consider designing and iterating vibrotactile stimuli in a specific context with a specific communication mode. Some participants said they focused more on sending MST signals. The sense of touch was stronger when sending MST signals. When sending MST signals, participants performed gestures and actively felt the vibrotactile stimuli. When receiving MST signals, participants passively felt the vibrotactile stimuli without gestures. However, we tested vibrotactile stimuli from these two situations—sending with gestures and receiving without gestures (Wei et al., Citation2022c). There were no significant differences between these two conditions on the likelihood to be understood as a specific MST gesture. These contradictory situations show that the context plays an important role in MST communication. In our earlier study (Wei et al., Citation2022c), there was no context (no video calling or texting). Participants were asked to press the graphic button on the touchscreen, triggering vibrotactile stimuli. Participants could focus more on the vibrotactile stimuli. In this study, more contexts and visual information required attention and participants could not focus as much on the vibrotactile stimuli. So, iterating and testing vibrotactile stimuli in a specific context with a specific communication mode may inspire the design.

6.3.2. Visual design of MST gestures

For the visual design of MST gestures, we suggest the following:

Richer visual design is needed. In the user study, we applied simple hand gesture stickers in the application. However, many participants said that the visual design of MST gesture could be more interesting. For example, 3D effects could be considered.

Customization is needed. Some participants suggested cuter stickers. “Cute” is a concept that can be culture related, which suggests that cultural background should be taken into consideration. Customized MST types and visual stickers could contribute to this point. For example, Memoji and Digital Touch in iMessage let users customize their stickers and touch gestures.

6.3.3. Interface design

About the interface design, the layout iteration, preview function, and possibility for customization are design opportunities, as follows:

The layout of the video calling interface needs iteration. The box in the upper-right corner showing the participants’ image and received MST signals was too small to notice. Future interface design could consider enlarging this box, for example by making the two split screens equal in size.

A preview of MST gestures before sending is needed. Many participants said they could not remember the MST gestures’ positions on the interface because all MST gestures were invisible at first. We could present participants with a preview window for MST gestures.

Participants also wanted to customize the MST gestures’ types and positions. We only set six typical MST gestures and provided fixed positions for each MST gesture in this study. Some participants said the present MST signals could not express some of their feelings, and they hoped the positions could be flexible. We designed 23 MST gestures and provided at least one CWC type of vibrotactile stimuli for each MST gesture in Wei et al. (Citation2022b). We could consider adding a list of MST gestures and a list of vibrotactile stimuli and letting participants choose their preferred and frequently used MST gestures with vibrotactile stimuli.

6.3.4. Interface structure

Interface structure needs iteration as follows:

In texting, we need to integrate the MST gestures inputting and perceiving function in the texting interface rather than creating a separate interface. Many participants mentioned that “going to the next page” was not convenient. However, the existing texting interface already has a clear layout for users to text. We need to consider how to integrate the MST signals functions in this layout.

There should be a time difference between the vibrotactile stimuli triggered by the two people in the communication during video calling. Participants said it was difficult to differentiate whether the vibrotactile stimuli were from themselves or the experimenter. Consider providing a time difference between the vibrotactile stimuli triggered by the two people in the communication so that users can perceive them more clearly.
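The time-difference recommendation above could be prototyped as a small scheduler that delays a vibration whenever it would overlap with the previous one. This is a sketch under stated assumptions, not part of the tested application: the `Vibration` record, the `stagger` function, and the 300 ms default gap are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Vibration:
    source: str       # "local" (the user) or "remote" (the other person)
    start_ms: int     # requested start time in milliseconds
    duration_ms: int  # length of the vibrotactile stimulus


def stagger(vibrations, gap_ms=300):
    """Delay vibrations so each one starts at least gap_ms after the previous one ends.

    gap_ms is a hypothetical value; a suitable gap would need user testing.
    """
    scheduled = []
    next_free_ms = 0
    for vib in sorted(vibrations, key=lambda v: v.start_ms):
        start = max(vib.start_ms, next_free_ms)
        scheduled.append(Vibration(vib.source, start, vib.duration_ms))
        next_free_ms = start + vib.duration_ms + gap_ms
    return scheduled


# Example: both people trigger a gesture at almost the same time.
requests = [Vibration("remote", 200, 400), Vibration("local", 0, 500)]
played = stagger(requests)
```

In this example the remote vibration is pushed back until the local one has finished and the gap has elapsed, so the two stimuli no longer blend into a single vibration.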

6.4. Limitations

Some participants mentioned that they could feel the vibrotactile stimuli on their left hand (holding the mobile device). Their right hand performed gestures but could not feel the vibrotactile stimuli. For vibrotactile stimuli, it is difficult to solve this problem only on a smartphone. Maybe wearables could solve this problem, but use of the wearables means additional load for the users. Another possible way to solve this problem is to consider audio together with visual and vibrotactile stimuli. The richer modalities may help make people not focus on one modality, making the MST signals more realistic. Our test device has an LRA, which could provide vibrotactile and audio stimuli together. Based on our system, we have already investigated how to design vibrotactile and audio stimuli together (Wei et al., Citation2022d). We can consider combining the audio stimuli in future designs.

Our user study was based on six selected MST gestures, which are likely to be frequently used and cover rich emotional expressions. The results hold only for these six MST gestures. We still have 17 more MST gestures. In future research, we will consider providing more choices and letting users experience more MST gestures.

7. Conclusions and future work

This study applied MST gestures with vibrotactile stimuli in mobile communication (texting and video calling). We conducted a user study and found no significant difference between texting and video calling when applying MST signals in online communication. Adding vibrotactile stimuli to MST gestures helps to increase social presence in the aspects of co-presence, perceived behavior interdependence, perceived affective understanding, and perceived emotional interdependence. Adding vibrotactile stimuli to MST gestures causes no significant differences in attentional allocation and perceived message understanding. Participants thought MST gestures with vibrotactile stimuli were attractive and were willing to use them, but the application design should be improved based on users’ needs.

For future work, we need to improve the design of vibrotactile stimuli for some MST gestures, the visual designs of the MST gestures, and the interface design for different communication modes. We can also consider combining vibrotactile, visual, and audio stimuli to create a richer MST signal effect. We will also consider providing more MST signals and letting users experience more MST signals in mobile communication.

Ethical approval

This study was conducted according to the guidelines of the local Ethical Review Board from the Department of Industrial Design (protocol code: ERB2021ID15).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Qianhui Wei

Qianhui Wei is a PhD candidate in the Department of Industrial Design, Eindhoven University of Technology, with research topics including social haptics, multimodal user interface, and human–computer interaction.

Jun Hu

Jun Hu is an associate professor of design research on social computing with the Department of Industrial Design, Eindhoven University of Technology.

Min Li

Min Li is a part-time associate professor with the Eindhoven University of Technology. He is a Member of the IEEE Design and Implementation of Signal Processing Systems Technical Committee (IEEE-DISPS TC).

References

- Ahmed, I., Harjunen, V. J., Jacucci, G., Ravaja, N., Ruotsalo, T., & Spape, M. (2020). Touching virtual humans: Haptic responses reveal the emotional impact of affective agents. IEEE Transactions on Affective Computing, 1–12. https://doi.org/10.1109/TAFFC.2020.3038137

- Akshita, Sampath, H., Indurkhya, B., Lee, E., & Bae, Y. (2015). Towards multimodal affective feedback: Interaction between visual and haptic modalities. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 2043–2052). https://doi.org/10.1145/2702123.2702288

- An, P., Zhou, Z., Liu, Q., Yin, Y., Du, L., Huang, D. Y., & Zhao, J. (2022). VibEmoji: Exploring user-authoring multi-modal emoticons in social communication. In CHI Conference on Human Factors in Computing Systems (pp. 1–17). https://doi.org/10.1145/3491102.3501940

- Bailey, D., Almusharraf, N., & Hatcher, R. (2021). Finding satisfaction: Intrinsic motivation for synchronous and asynchronous communication in the online language learning context. Education and Information Technologies, 26(3), 2563–2583. https://doi.org/10.1007/s10639-020-10369-z

- Bales, E., Li, K. A., & Griswold, W. (2011). CoupleVIBE: Mobile implicit communication to improve awareness for (long-distance) couples. In Proceedings of the ACM 2011 Conference on Computer Supported Cooperative Work (pp. 65–74). https://doi.org/10.1145/1958824.1958835

- Basdogan, C., Ho, C. H., Srinivasan, M. A., & Slater, M. (2000). An experimental study on the role of touch in shared virtual environments. ACM Transactions on Computer–Human Interaction, 7(4), 443–460. https://doi.org/10.1145/365058.365082

- Biocca, F. (1997). The Cyborg’s dilemma: Progressive embodiment in virtual environments. Journal of Computer-Mediated Communication, 3(2), 1–29. https://doi.org/10.1111/j.1083-6101.1997.tb00070.x

- Braun, V., & Clarke, V. (2012). Thematic analysis. In H. Cooper, P. M. Camic, D. L. Long, A. T. Panter, D. Rindskopf, & K. J. Sher (Eds.), APA handbook of research methods in psychology, Vol 2: Research designs: Quantitative, qualitative, neuropsychological, and biological (pp. 57–71). American Psychological Association. https://doi.org/10.1037/13620-004

- Chellali, A., Dumas, C., & Milleville-Pennel, I. (2011). Influences of haptic communication on a shared manual task. Interacting with Computers, 23(4), 317–328. https://doi.org/10.1016/j.intcom.2011.05.002

- Ernst, M. O., & Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature, 415(6870), 429–433. https://doi.org/10.1038/415429a

- Furukawa, M., Kajimoto, H., & Tachi, S. (2012). KUSUGURI: A shared tactile interface for bidirectional tickling. In Proceedings of the 3rd Augmented Human International Conference (pp. 1–8). https://doi.org/10.1145/2160125.2160134

- Giannopoulos, E., Eslava, V., Oyarzabal, M., Hierro, T., González, L., Ferre, M., & Slater, M. (2008). The effect of haptic feedback on basic social interaction within shared virtual environments. In International Conference on Human Haptic Sensing and Touch Enabled Computer Applications, LNCS (Vol. 5024, pp. 301–307). https://doi.org/10.1007/978-3-540-69057-3_36

- Gordon, M. L., & Zhai, S. (2019). Touchscreen haptic augmentation effects on tapping, drag and drop, and path following. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–12). https://doi.org/10.1145/3290605.3300603

- Gunawardena, C. N., & Zittle, F. J. (1997). Social presence as a predictor of satisfaction within a computer–mediated conferencing environment. American Journal of Distance Education, 11(3), 8–26. https://doi.org/10.1080/08923649709526970

- Haans, A., & Ijsselsteijn, W. (2006). Mediated social touch: A review of current research and future directions. Virtual Reality, 9(2–3), 149–159. https://doi.org/10.1007/s10055-005-0014-2

- Hadi, R., & Valenzuela, A. (2020). Good vibrations: Consumer responses to technology-mediated haptic feedback. Journal of Consumer Research, 47(2), 256–271. https://doi.org/10.1093/jcr/ucz039

- Harms, C., & Biocca, F. (2004). Internal consistency and reliability of the networked minds measure of social presence. In Seventh Annual International Workshop: Presence, 2004 (pp. 246–251).

- Hemmert, F., Gollner, U., Löwe, M., Wohlauf, A., & Joost, G. (2011). Intimate mobiles: Grasping, kissing and whispering as a means of telecommunication in mobile phones. In Proceedings of the 13th International Conference on Human Computer Interaction with Mobile Devices and Services (pp. 21–24). https://doi.org/10.1145/2037373.2037377

- Hertenstein, M. J., Holmes, R., McCullough, M., & Keltner, D. (2009). The communication of emotion via touch. Emotion, 9(4), 566–573. https://doi.org/10.1037/a0016108

- Hertenstein, M. J., Keltner, D., App, B., Bulleit, B. A., & Jaskolka, A. R. (2006). Touch communicates distinct emotions. Emotion, 6(3), 528–533. https://doi.org/10.1037/1528-3542.6.3.528

- Hoggan, E., Stewart, C., Haverinen, L., Jacucci, G., & Lantz, V. (2012). Pressages: Augmenting phone calls with non-verbal messages. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology (pp. 555–562). https://doi.org/10.1145/2380116.2380185

- Huisman, G. (2017). Social touch technology: A survey of haptic technology for social touch. IEEE Transactions on Haptics, 10(3), 391–408. https://doi.org/10.1109/TOH.2017.2650221

- Israr, A., Zhao, S., & Schneider, O. (2015). Exploring embedded haptics for social networking and interactions. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (pp. 1899–1904). https://doi.org/10.1145/2702613.2732814

- Le, H. V., Mayer, S., & Henze, N. (2019). Investigating the feasibility of finger identification on capacitive touchscreens using deep learning. In Proceedings of the 24th International Conference on Intelligent User Interfaces (pp. 637–649). https://doi.org/10.1145/3301275.3302295

- Lee, M., Bruder, G., & Welch, G. F. (2017). Exploring the effect of vibrotactile feedback through the floor on social presence in an immersive virtual environment. In IEEE Virtual Reality (pp. 105–111). https://doi.org/10.1109/VR.2017.7892237

- Liang, G., Fu, W., & Wang, K. (2019). Analysis of t-test misuses and SPSS operations in medical research papers. Burns & Trauma, 7(31), 3–7. https://doi.org/10.1186/s41038-019-0170-3

- Liu, S., Cheng, H., Chang, C., & Lin, P. (2018). A study of perception using mobile device for multi-haptic feedback. In International Conference on Human Interface and the Management of Information (pp. 218–226). https://doi.org/10.1007/978-3-319-92043-6_19

- Meek, G. E., Ozgur, C., & Dunning, K. (2007). Comparison of the t vs. Wilcoxon Signed-Rank Test for Likert scale data and small samples. Journal of Modern Applied Statistical Methods, 6(1), 91–106. https://doi.org/10.22237/jmasm/1177992540

- Mishra, P., Pandey, C. M., Singh, U., Gupta, A., Sahu, C., & Keshri, A. (2019). Descriptive statistics and normality tests for statistical data. Annals of Cardiac Anaesthesia, 22(1), 67–72. https://doi.org/10.4103/aca.ACA_157_18

- Nakanishi, H., Tanaka, K., & Wada, Y. (2014). Remote handshaking: Touch enhances video-mediated social telepresence. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 2143–2152). ACM. https://doi.org/10.1145/2556288.2557169

- Oh, C. S., Bailenson, J. N., & Welch, G. F. (2018). A systematic review of social presence: Definition, antecedents, and implications. Frontiers in Robotics and AI, 5(114), 114–135. https://doi.org/10.3389/frobt.2018.00114

- Park, Y. W., Bae, S. H., & Nam, T. J. (2016). Design for sharing emotional touches during phone calls. Archives of Design Research, 29(2), 95–106. https://doi.org/10.15187/adr.2016.05.29.2.95

- Park, Y. W., Baek, K. M., & Nam, T. J. (2013). The roles of touch during phone conversations: Long-distance couples’ use of POKE in their homes. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1679–1688). https://doi.org/10.1145/2470654.2466222

- Park, Y. W., Park, J., & Nam, T. J. (2015). The trial of Bendi in a coffeehouse: Use of a shape-changing device for a tactile-visual phone conversation. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 2181–2190). https://doi.org/10.1145/2702123.2702326

- Pradana, G. A., Cheok, A. D., Inami, M., Tewell, J., & Choi, Y. (2014). Emotional priming of mobile text messages with ring-shaped wearable device using color lighting and tactile expressions. In Proceedings of the 5th Augmented Human International Conference (pp. 1–8). https://doi.org/10.1145/2582051.2582065

- Ranasinghe, N., Jain, P., Tolley, D., Chew, B., Bansal, A., Karwita, S., Ching-Chiuan, Y., & Yi-Luen Do, E. (2020). EnPower: Haptic interfaces for deafblind individuals to interact, communicate, and entertain. In Proceedings of the Future Technologies Conference (pp. 740–756). https://doi.org/10.1007/978-3-030-63089-8_49

- Rantala, J., Salminen, K., Raisamo, R., & Surakka, V. (2013). Touch gestures in communicating emotional intention via vibrotactile stimulation. International Journal of Human–Computer Studies, 71(6), 679–690. https://doi.org/10.1016/j.ijhcs.2013.02.004

- Robinson, S., & Stubberud, H. A. (2012). Communication preferences among university students. Academy of Educational Leadership Journal, 16(2), 105–113. https://www.proquest.com/scholarly-journals/communication-preferences-among-university/docview/1037692095/se-2

- Rognon, C., Bunge, T., Gao, M., Conor, C., Stephens-Fripp, B., Brown, C., & Israr, A. (2022). An online survey on the perception of mediated social touch interaction and device design. IEEE Transactions on Haptics, 15(2), 372–381. http://arxiv.org/abs/2104.00086

- Sallnäs, E. L. (2010). Haptic feedback increases perceived social presence. In International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (pp. 178–185). https://doi.org/10.1007/978-3-642-14075-4_26

- Salminen, K., Rantala, J., Laitinen, P., Surakka, V., Lylykangas, J., & Raisamo, R. (2009). Emotional responses to haptic stimuli in laboratory versus travelling by bus contexts. In 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops (pp. 1–7). https://doi.org/10.1109/ACII.2009.5349597

- Seland, G. (2006). System designer assessments of role play as a design method: A qualitative study. In Proceedings of the 4th Nordic Conference on Human–Computer Interaction: Changing Roles (pp. 222–231). https://doi.org/10.1145/1182475.1182499

- Simsarian, K. T. (2003). Take it to the next stage: The roles of role playing in the design process. In CHI’03 Extended Abstracts on Human Factors in Computing Systems (pp. 1012–1013). https://doi.org/10.1145/765891.766123

- Smiling Face with Open Hands. (2015). Emojipedia. https://emojipedia.org/hugging-face/

- Takahashi, H., Ban, M., Osawa, H., Nakanishi, J., Sumioka, H., & Ishiguro, H. (2017). Huggable communication medium maintains level of trust during conversation game. Frontiers in Psychology, 8, 1–8. https://doi.org/10.3389/fpsyg.2017.01862

- Teyssier, M., Bailly, G., Pelachaud, C., & Lecolinet, E. (2018). MobiLimb: Augmenting mobile devices with a robotic limb. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology (pp. 53–63). https://doi.org/10.1145/3242587.3242626

- van Erp, J. B. F., & Toet, A. (2015). Social touch in human–computer interaction. Frontiers in Digital Humanities, 2, 1–14. https://doi.org/10.3389/fdigh.2015.00002

- Wei, Q., Hu, J., & Li, M. (2022a). User-defined gestures for mediated social touch on touchscreens. Personal and Ubiquitous Computing, 1–16. https://doi.org/10.1007/s00779-021-01663-9

- Wei, Q., Hu, J., & Li, M. (2022b). Designing mediated social touch signals.

- Wei, Q., Hu, J., & Li, M. (2022c). Active and passive mediated social touch with vibrotactile stimuli in mobile communication. Information, 13(2), 63. https://doi.org/10.3390/info13020063

- Wei, Q., Li, M., Hu, J., & Feijs, L. (2020). Creating mediated touch gestures with vibrotactile stimuli for smart phones. In Proceedings of the Fourteenth International Conference on Tangible, Embedded, and Embodied Interaction (pp. 519–526). https://doi.org/10.1145/3374920.3374981

- Wei, Q., Li, M., Hu, J., & Feijs, L. M. (2022d). Perceived depth and roughness of virtual buttons with touchscreens. IEEE Transactions on Haptics, 15(2), 315–327. https://doi.org/10.1109/toh.2021.3126609

- Wilson, G., & Brewster, S. A. (2017). Multi-Moji: Combining thermal, vibrotactile & visual stimuli to expand the affective range of feedback. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 1743–1755). https://doi.org/10.1145/3025453.3025614

- Wobbrock, J. O., Morris, M. R., & Wilson, A. D. (2009). User-defined gestures for surface computing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1083–1092). https://doi.org/10.1145/1518701.1518866

- Yarosh, S., Mejia, K., Unver, B., Wang, X., Yao, Y., Campbell, A., & Holschuh, B. (2017). SqueezeBands: Mediated social touch using shape memory alloy actuation. In Proceedings of the ACM on Human–Computer Interaction (pp. 1–18). https://doi.org/10.1145/3134751

- Zhang, Y., & Cheok, A. D. (2021). Turing-test evaluation of a mobile haptic virtual reality kissing machine. Global Journal of Computer Science and Technology, 21(E1), 1–16. https://computerresearch.org/index.php/computer/article/view/2038

- Zhang, Z., Alvina, J., & Heron, R. (2021). Touch without touching: Overcoming social distancing in semi-intimate relationships with SansTouch. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1–13). https://doi.org/10.1145/3411764.3445612