?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

This work proposes a new method for computing acceptance regions of exact multinomial tests. From this an algorithm is derived, which finds exact p-values for tests of simple multinomial hypotheses. Using concepts from discrete convex analysis, the method is proven to be exact for various popular test statistics, including Pearson’s Chi-square and the log-likelihood ratio. The proposed algorithm improves greatly on the naive approach using full enumeration of the sample space. However, its use is limited to multinomial distributions with a small number of categories, as the runtime grows exponentially in the number of possible outcomes. The method is applied in a simulation study, and uses of multinomial tests in forecast evaluation are outlined. Additionally, properties of a test statistic using probability ordering, referred to as the “exact multinomial test” by some authors, are investigated and discussed. The algorithm is implemented in the accompanying R package ExactMultinom. Supplementary materials for this article are available online.

1 Introduction

Multinomial goodness-of-fit tests feature prominently in the statistical literature and a wide range of applications. Tests relying on asymptotics have been available for a long time and have been rigorously studied all through the 20th century. The use of various test statistics has been investigated with Pearson’s Chi-square and the log-likelihood ratio statistic being vital examples. These statistics are members of the general family of power divergence statistics (Cressie and Read Citation1984). With the widespread availability of computing power, Monte Carlo simulations and exact methods have also gained popularity.

Tate and Hyer (Citation1973) and Kotze and Gokhale (Citation1980) used the “exact multinomial test,” which orders samples by probability, to assess the accuracy of asymptotic tests of a simple null hypothesis against an unspecified alternative. In the words of Cressie and Read (Citation1989), this “has provided much confusion and contention in the literature.” In accordance with Gibbons and Pratt (Citation1975) and Radlow and Alf (Citation1975), they conclude that the asymptotic fit of a test should be assessed using the appropriate exact test based on the test statistic in question. Nevertheless, the exact multinomial test is intuitively appealing, and, as Kotze and Gokhale (Citation1980) put it, “[i]n the absence of […] a specific alternative, it is reasonable to assume that outcomes with smaller probabilities under the null hypothesis offer a stronger evidence for its rejection and should belong to the critical region.” In Section 2, an asymptotic Chi-square approximation to the exact multinomial test is derived, and an exemplary comparison of popular test statistics in terms of power is provided.

Regardless of the test statistic used, computing an exact p-value by fully enumerating the sample space is computationally challenging, as the test statistic and the probability mass function have to be evaluated at every possible sample of which there are for samples of size n with m categories. An improvement on this method has been proposed by Bejerano, Friedman, and Tishby (Citation2004) for the family of power divergence statistics. Other approaches aimed at exact Pearson’s Chi-square and log-likelihood ratio tests exist (see, e.g., Baglivo, Olivier, and Pagano Citation1992; Hirji Citation1997; Rahmann Citation2003; Keich and Nagarajan Citation2006). In this work, a new approach to exact multinomial tests is investigated.

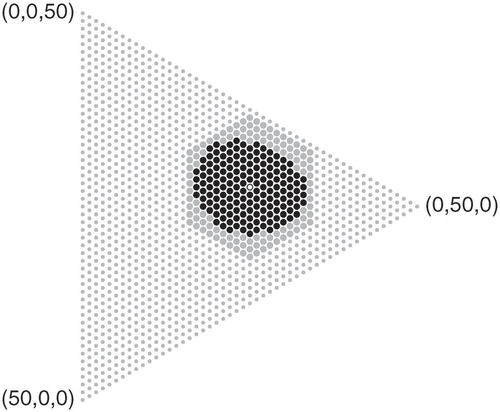

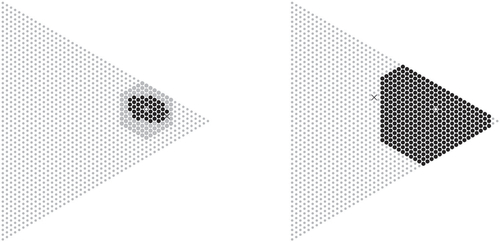

The key observation underlying the proposed algorithm is that acceptance regions at arbitrary levels contain relatively few points, which are located in a neighborhood of the expected value under the null hypothesis as illustrated in , and an acceptance region can be found by iteratively evaluating points within a ball of increasing radius around the expected value (w.r.t. the Manhattan distance). The algorithm uses this to compute an exact p-value from the probability mass of the largest acceptance region that does not contain the observation. If p-values below an arbitrary threshold are not computed exactly, the runtime of the algorithm is guaranteed to be asymptotically faster than the approach using full enumeration as the diameter of any acceptance region essentially grows at a rate proportional to the square root of the sample size. This is detailed and proven to work for various popular test statistics in Section 3.

Fig. 1 An acceptance region (black dots) at level for the null

and samples of size n = 50 with m = 3 categories. Only points within the ball (big dots) around the expectation (hollow dot) have to be considered to find this region.

Furthermore, the algorithm is illustrated to work well in applications detailed in Section 4. In particular, the algorithm’s runtime is compared to the full enumeration method in a simulation study, and the resulting p-values are used to assess the fit of asymptotic Chi-square approximations and investigate differences between several test statistics. As an application in forecast evaluation, the use of multinomial tests for uncertainty quantification within the so-called calibration simplex (Wilks Citation2013) is outlined and justified.

The R programming language (R Core Team Citation2020) has been used for all computations throughout this work. An implementation of the proposed method is provided within the R package ExactMultinom (Resin Citation2020).

2 A Brief Review on Testing a Simple Multinomial Hypothesis

Consider a multinomial experiment summarizing

iid trials with

possible outcomes. Let

denote the unit

-simplex or probability simplex and

the sample space, which is a regular discrete

-simplex. The distribution of X is characterized by a parameter

encoding the occurrence probabilities of the outcomes on any trial, or

for short. The multinomial distribution

is fully described by the probability mass function (pmf)

Suppose that the true parameter p is unknown. Consider the simple null hypothesis for some

. The agreement of a realization

of X with the null hypothesis is typically quantified by means of a test statistic

. Given such a test statistic T and presuming from now on that w.l.o.g. high values of

indicate “extreme” observations under the null distribution

, the p-value of x is defined as the probability

(1)

(1) of observing an observation that is at least as extreme under the null hypothesis.

The family of power divergence statistics introduced by Cressie and Read (Citation1984) offers a variety of test statistics for multinomial goodness-of-fit tests. It is defined as(2)

(2) and as the pointwise limit in (2) for

. Notably, this includes Pearson’s Chi-square statistic

as well as the log-likelihood ratio (or G-test) statistic

Under a null hypothesis with for all

, every power divergence statistic is asymptotically Chi-square distributed with m – 1 degrees of freedom.

A natural test statistic arises if an “extreme” observation is simply understood to mean an unlikely one, that is, if the pmf itself is used as test statistic. In what follows, a strictly decreasing transformation of the pmf is used instead, which ensures that large values of the test statistic indicate extreme observations. Furthermore, this strictly decreasing transformation is chosen such that the resulting test statistic is asymptotically Chi-square distributed. To this end, let Γ denote the Gamma function andthe continuous extension of the pmf

to the convex hull of the discrete simplex

. The probability mass test statistic is defined as

Obviously, the choice of strictly decreasing transformation does not affect the (exact) p-value given by (1) for . The following theorem gives rise to an asymptotic approximation of p-values derived from the probability mass test statistic, which has not been studied previously. In the simulation study of Section 4, the fit of this approximation is assessed empirically using exact p-values computed with the new method for samples of size n = 100 with m = 5 categories.

Theorem 1.

If follows a multinomial distribution with

and

such that

for

, then

converges in distribution to a Chi-square distribution

with m – 1 degrees of freedom as

.

Proof.

By Lemma 8 (in Appendix A, supplementary materials), the difference between the log-likelihood ratio and the probability mass statistic is

Clearly, the bounded terms converge to zero in probability, and the terms converge to zero in probability by the continuous mapping theorem. Hence, the probability mass statistic has the same asymptotic distribution as the log-likelihood ratio statistic. □

In what follows, the focus is on the Chi-square, log-likelihood ratio and probability mass statistics.

2.1 Acceptance Regions

As outlined in the introduction, acceptance regions are of major importance to the idea pursued in this work. Given a test statistic T, the acceptance region at level is defined using p-values given by (1) as

Equivalently, the acceptance region can be written as the sublevel set of at the

-quantile

of

under the null hypothesis

, that is,

(3)

(3)

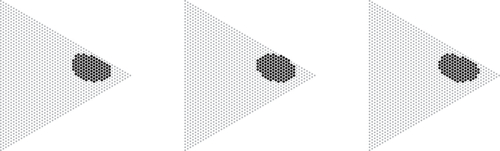

As illustrated in , the probability mass test statistic typically yields acceptance regions that contain relatively few points, because the regions contain the samples with the largest null probabilities. However, as samples with equal null probabilities are either all included or all excluded, smaller acceptance regions might be feasible at some levels α. If tests are randomized to ensure equal level and size of the test, this property can be refined to yield an optimality property of the probability mass test’s critical function.

Fig. 2 Acceptance regions (black) of probability mass (left), Chi-square (center) and log-likelihood ratio (right) statistics at level for n = 50 and

. The regions contain 108, 111, and 111 points, respectively (left to right). The tests are of size 0.0495, 0.0492, and 0.0481, respectively.

In Section 3, it is shown that acceptance regions of the Chi-square, log-likelihood ratio and probability mass test statistic all grow at a rate , as their diameter grows at a rate

if

is fixed, see Proposition 7.

2.2 Power and Bias

The power function of a test T of the null hypothesis at level α is

which is the probability of rejecting the null hypothesis at level α if the true parameter is p. The size of a test is its power at

. A test T is said to be unbiased (for the null

at level α) if its power is minimized at

.

In the case of the uniform null hypothesis, that is, , Cohen and Sackrowitz (Citation1975, Theorem 2.1) proved that the power function increases away from

for test statistics of the form

if h is a convex function. They concluded that tests based on the Chi-square and the log-likelihood ratio test statistic are unbiased for the uniform null hypothesis. As a corollary to their theorem, it shall be noted that this also applies to the probability mass test statistic.

Corollary 2

(to Cohen and Sackrowitz Citation1975, Theorem 2.1). The probability mass test is unbiased for the uniform null hypothesis .

Proof.

Since the probability mass statistic can be written asthis is an immediate consequence of the fact that the Gamma function is logarithmically convex on the positive real numbers, which is part of a characterization given by the Bohr-Mollerup theorem (Beals and Wong Citation2010, Theorem 2.4.2). □

Many authors (e.g., West and Kempthorne Citation1972; Cressie and Read Citation1984; Wakimoto, Odaka, and Kang Citation1987; Pérez and Pardo Citation2003) have conducted small sample studies to investigate the power of Chi-square, log-likelihood ratio and other tests. When conducting such studies, , and α need to be chosen, all of which influence the resulting power function. Furthermore, it is frequently infeasible to assess the power function across all alternatives, and so alternatives of interest need to be picked. Therefore, most of these studies focused on the case of the uniform null hypothesis. In this case, the Chi-square test has greater power for alternatives that assign a large proportion of the probability mass to relatively few categories, whereas the log-likelihood ratio test has greater power for alternatives that assign considerable probability mass to many categories (see also Koehler and Larntz Citation1980).

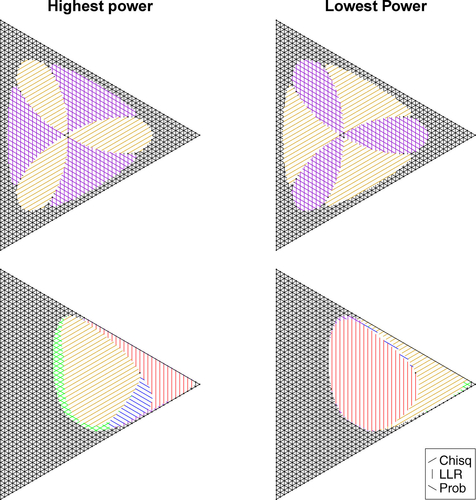

In the ternary case, that is, if m = 3, comparisons on the full probability simplex are visually accessible. illustrates, which of the three test statistics yields the highest and lowest power across the full ternary probability simplex. As the actual test size, which is frequently smaller than the level α, depends on the test statistic, the resulting power functions are difficult to compare directly. To account for this, the tests are randomized to ensure that their respective size matches the level. For a test T and level α, let denote the actual size of the test. The critical function

defines a randomized test,Footnote1 which rejects the null hypothesis with probability

if x is observed. The power function of the randomized version of a test T at level α is

Fig. 3 Ternary plots indicating which randomized tests of size yields the highest (left) and lowest (right) power for the uniform null hypothesis

(top) and

(bottom) for n = 50. Overlapping lines indicate nearly equal powers (

).

With this, the probability mass test minimizes the acceptance region in the sense that it minimizes the sumacross all randomized tests

with

.

suggests that the probability mass test and the log-likelihood ratio test for the uniform null hypothesis at level are the same for n = 50. This is a coincidence, and for other choices of α (e.g.,

, for which coincidentally the probability mass statistic yields the same acceptance region as the Chi-square statistic) the acceptance regions differ, and so do the power functions.

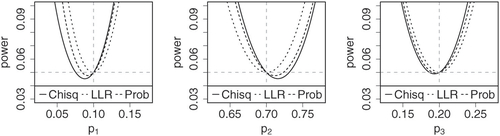

quantitatively compares power along alternatives of the formfor

and

. This yields parameterizations of the lines through π and a corner of the probability simplex. The figures illustrate that in the case

and

, the log-likelihood ratio test, arguably, does not show any visible bias, whereas the Chi-square test shows the most bias. The power function of the probability mass test lies in between the other power functions across most of the probability simplex, and so the probability mass test might serve as a good compromise in terms of power.

3 Exact p-Values via Acceptance Regions

Throughout this section, T is a test statistic, and and

are fixed. To ease notation, the subscripts in the pmf of the null distribution are omitted, that is,

and the test statistic T is considered as a function on the sample space only, that is,

. Let

be a rescaled version of the Manhattan distance and

the discrete ball with radius

and center

. Furthermore,

denotes the ith vector of the standard basis of

, where δij

is the Kronecker delta.

3.1 Finding Acceptance Regions Using Discrete Convex Analysis

As alluded to in the introduction, an acceptance region for

can be found without enumerating all points of the sample space

, but only considering points in some ball around the expected value for many test statistics. Specifically, if T is weakly quasi M-convex, that is, if for all distinct

there exist indices

such that

and

the following theorem, which is proven at the end of this section, holds.

Theorem 3.

Let T be weakly quasi M-convex, and suppose and

are such that

. Let

be the smallest level such that the sublevel set

satisfies

. If

, then A is the acceptance region

.

Hence, an acceptance region can be found by iteratively enumerating a ball of increasing radius with arbitrary center until a sublevel set with enough probability mass is found and this sublevel set remains unchanged upon further increasing the ball, as illustrated in the introduction for an acceptance region of the probability mass statistic, see .

The following proposition ensures that this approach can be applied to the Chi-square, log-likelihood ratio and probability mass test statistics.

Proposition 4.

The probability mass test statistic

is weakly quasi M-convex.

The power divergence test statistic

is weakly quasi M-convex if

.

Proof.

Throughout the proof, let such that

, and define the index sets

Let

and w.l.o.g.

. Then

Both double products contain an equal number of multiplicands (since ) and are nonempty (since

). As the entire product is at least 1, there exist indices

and

and natural numbers

and

such that the second inequality holds in

Therefore, the inequality

holds.

See Appendix B, supplementary materials.

The rest of this section is devoted to the proof of Theorem 3, which uses the existence of certain sequences in the sublevel sets of weakly quasi M-convex functions given by the first part of the following lemma. It can be shown that the existence of such sequences characterizes a “weakly quasi M-convex set.” For further details on weak quasi M-convexity and discrete convex analysis in general, see Murota (Citation2003).

Lemma 5.

Let T be a weakly quasi M-convex function and be the sublevel set of T at

.

If

and

, then there exists a sequence

with

, xd = y and

for all

.

Suppose

and

are such that

is not empty. If

, then A = L is the sublevel set of T at t.

Proof.

Proof by induction on d: Let

and

. If d = 0, then

satisfies the condition. If d > 0, there exist i, j such that

and

(or

, in which case interchanging x and y and i and j yields the former formula for

) by weak quasi M-convexity of T. Then

and

By induction hypothesis, there exists a sequence

, such that

is the sought-after sequence.

Assume there exists some

and fix

. By part a), the sublevel set L contains a sequence

with

and

for

. By the reverse triangle inequality

, and, since

, there is an xj such that

, which yields

, a contradiction (as

). Therefore,

, and hence A = L.

With this, the theorem is readily proven as follows.

Proof of Theorem 3.

Let be minimal such that

has probability mass

and

. Recall that the acceptance region

is the sublevel set (3) at

, and note that

holds, as

. By Lemma 5(b), A is the sublevel set at t, and hence

. Since t is minimal, it follows that

and

. □

3.2 Computing a p-Value

As described in the previous section, an acceptance region can be determined by taking an arbitrary point and increasing the radius of a ball around this center point until the acceptance region is found using the criterion provided by Theorem 3. Obviously, the center of the ball should lie within the acceptance region, ideally at its center, to minimize the necessary iterations and number of points for which to evaluate the pmf and the test statistic. The expected value of the multinomial distribution, which is the center of mass of all probability weighted points in the discrete simplex, is known, and it is close to the center of mass of the acceptance region, as the region contains most of the mass. Therefore, a point close to the expected value is a suitable center for the ball.

The p-value of an observation x can be found by computing the total probability of the largest acceptance region not containing the observation, as formalized by Algorithm 1 and the following theorem.

Theorem 6.

Let T be weakly quasi M-convex and . Suppose

are such that

. If

satisfies

, then

.

Proof.

By Lemma 5(b), the set A is the sublevel set at , and hence

. □

The condition in Theorem 6 ensures that the sublevel set A is not empty, as otherwise the empty set may falsely be identified as the largest acceptance region not containing x. The case where no point y with

is known requires special care. In this case, Algorithm 1 enumerates an acceptance region containing the observation itself to avoid premature termination.

To avoid enumerating unreasonably large balls, Algorithm 1 only determines exact p-values above a threshold θ and otherwise indicates that the p-value is smaller than the threshold θ by returning a value of 0. shows the points evaluated by Algorithm 1 for an observation with p-value greater, respectively, smaller than some threshold θ.

Fig. 5 Points (big dots) in for which the probability mass and test statistic are evaluated given the marked observations

(left) and

(right) under the null hypothesis

and

. The p-values are 0.3049 (left) and less than

(right). The black region on the left is the largest acceptance region not containing the observation x.

Algorithm 1:

Compute exact p-value above some threshold.

Input: Observation , hypothesis

, threshold

Output: Exact p-value or 0 if the p-value is less than θcompute

minimizing

If then set y = xinitialize r = 0,

repeat

add f(z) to SumProb for points

with

increment

set

until (

and

) or SumProb

If then return

else return 0

3.3 Implementation

Enumeration of the full sample space can be implemented using a simple recursion, as in the R packages EMT (Menzel Citation2013) and XNomial (Engels Citation2015). Whereas EMT is written purely in R, the function xmulti of the XNomial package uses an efficient C++ subroutine for the recursion. To enumerate the samples at a given radius r in the repeat-loop of Algorithm 1, a similar, more complicated recursive scheme is implemented in the R package ExactMultinom using a C++ subroutine to allow for fast recursions.

As an alternative, Bejerano, Friedman, and Tishby (Citation2004) proposed a branch and bound approach to compute exact multinomial p-values, as implemented by Bejerano (Citation2006). However, the branch and bound approach does not consider the probability mass statistic, and its implementation is limited to the log-likelihood ratio test. In contrast, the implementation of Algorithm 1 simultaneously computes p-values for the Chi-square, log-likelihood ratio and probability mass test statistics, as does xmulti. Further discussion of the branch and bound approach and other methods is deferred to Appendix D, supplementary materials, as none of these methods have been tailored to the probability mass test and other approaches do not produce “strictly exact” p-values (Keich and Nagarajan Citation2006).

The current implementation of Algorithm 1 accurately finds p-values of order roughly as small as . Smaller p-values often lead to negative output because of limited computational precision in the addition of many floating point numbers. To ensure accurate results, I recommend to choose θ no less than

with the current implementation.

During early runs of the simulation study described in Section 4, it was noticed that the runtime of Algorithm 1 tends to increase drastically if the null distribution contains a very small probability for some

. In this case, the acceptance region is very flat, containing mostly points within a lower dimensional face of the discrete simplex, as hits in category i are improbable under the null. Hence, the asymptotic advantage of Algorithm 1 discussed in the next section requires large sample size n to take effect under sparse null hypotheses. As a heuristic, which turned out to be an effective remedy, the implementation does not enumerate entire balls if

, but only considers points

with small zi

, by skipping all points z for which

.

3.4 Runtime Complexity

The discrete simplex contains

points, and so the full enumeration takes

operations to compute a p-value. In comparison, the acceptance regions at a fixed level

only contain

points, and this continues to hold for the smallest ball centered at the expected value containing the acceptance region, as proven by Proposition 7. Therefore, Algorithm 1 only takes

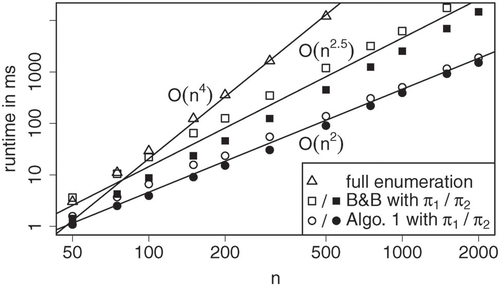

operations to determine a p-value above the threshold θ. shows runtime as a function of n for m = 5. Whereas the runtime of the full enumeration method depends only on the parameters m and n, the runtime of the implementation of Algorithm 1 described in Section 3.3 depends on both the parameter π and the observation x. As with the branch and bound approach, the uniform null hypothesis results in a longer runtime than sparse null hypotheses, but the difference is less pronounced. Furthermore, the runtime of Algorithm 1 increases if the p-value of x is small, which is further investigated in the simulation study of Section 4.1. As the runtime increases exponentially in m, Algorithm 1 is only feasible if the number of categories m is small.

Fig. 6 Mean runtime across 10 samples with p-values of about 0.001 under null hypotheses and

, respectively, using full enumeration, the branch and bound (B&B) approach and Algorithm 1.

Proposition 7.

Let and

. Then there exists

such that

for sufficiently large n.

Proof.

Consider the canonical extension of T to

and let

denote a ball in

with boundary

. Let

and

. If

, then every

can be written as

for some

.

Let be the

-quantile of

for

. As Tn

converges to

in distribution, the sequence

of quantiles converges to the

-quantile

(see, Van der Vaart Citation1998, Lemma 21.2). Consequently, the maximum

exists, and the set

contains the acceptance region

for every n.

As is convex (by Lemma 9 in Appendix C, supplementary materials) and thus has convex sublevel sets, it suffices to show that n0 can be chosen such that

converges to a value greater t to ensure that

for sufficiently large n.

In case , observe that

does not depend on n, and so the canonical extension

of the Chi-square statistic at radius

is bounded from below by

. This bound becomes arbitrarily large as n0 is increased.

In case or

, if n0 is fixed,

converges uniformly to

for

(by Lemma 10 in Appendix C, supplementary materials). Hence,

converges to

. □

4 Application

In this section, the use of the new method is illustrated in a simulation study. On the one hand, this serves to show the improvements in runtime in comparison to some other methods. On the other hand, this sheds some light on the fit of the asymptotic approximation to the probability mass test provided by Theorem 1 for a moderate sample size (n = 100). As a practical application in forecast evaluation, the usage of exact multinomial tests to increase the information conveyed by the calibration simplex (Wilks Citation2013), a graphical tool used to assess ternary probability forecasts, is outlined.

4.1 Simulation Study

For the simulation study, pairs of null hypothesis parameters and samples were generated as iid realizations of the random quantity (P, X) with

being uniformly distributed on the unit simplex and

. For each pair, p-values were computed using various test statistics and algorithms. Thereby, no specific null hypothesis had to be chosen and instead a wide variety was considered. By drawing samples from the null hypotheses, p-values follow a uniform distribution on

. Various aspects of the tests and algorithms in question can be examined using the resulting rich dataset and subsets thereof.

The following results were obtained using such pairs with samples of size n = 100 drawn from multinomial distributions with m = 5 categories. Exact p-values were computed using the implementation of Algorithm 1 provided by the accompanying R package. To illustrate the speedup achieved by the new method in this study, the full enumeration method provided by the xmulti function of the XNomial package (Engels Citation2015) and the branch and bound approach (Bejerano, Friedman, and Tishby Citation2004) were applied to the first 104 pairs. Essentially, the computational cost of the full enumeration is constant, independent of the null hypothesis at hand and the resulting p-value, whereas the cost of Algorithm 1 increases as the p-value decreases and also varies with the null hypothesis similar to the cost of the branch and bound approach.

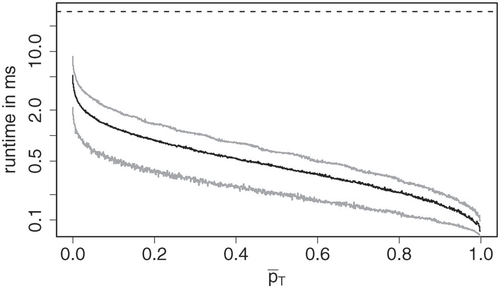

The implementation of Algorithm 1 took an average of 0.59 ms to compute a p-value, improving on the branch and bound approach (1.78 ms), even though the latter only computes p-values for the log-likelihood ratio test, and full enumeration (29.76 ms). Perhaps surprisingly, Monte Carlo estimation (using xmonte from XNomial, which simulates 10,000 samples by default) took almost twice as long (53.49 ms) as the full enumeration. illustrates the connection between runtime and size of the resulting p-values for the new method. As there are other factors influencing the runtime and the implementation computes p-values for multiple statistics simultaneously, samples were ordered by their mean p-value and put in groups of 1000 samples with similar mean p-value (in particular, the groups contain samples with p-values in between the empirical

- and

-quantile for

). The figure shows mean runtime in each group as well as the 5%- and 95%-quantile.

Fig. 7 Runtime against mean p-value in groups of 1000 samples with similar mean p-value. The black line shows mean runtime per group, whereas the gray lines are the 5% and 95%-quantile. The dashed line shows the mean runtime using full enumeration.

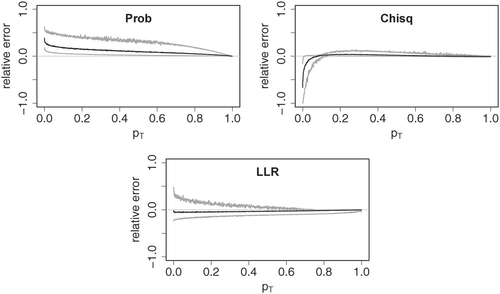

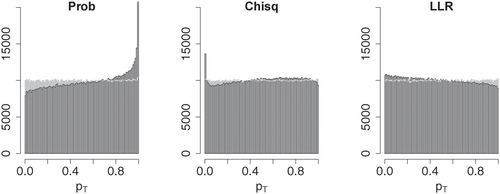

To illustrate the fit of the classical Chi-square approximation, the probability of a Chi-square distribution with m – 1 degrees of freedom exceeding the values of the test statistics for each pair were computed. shows relative errors of the asymptotic approximations to the p-values for the three test statistics. Given a test statistic T and asymptotic approximation to the exact p-value

, the relative error is the deviation from the exact value in parts of said value,

. The asymptotic approximation to the Chi-square statistic is quite accurate in most cases, but tends to underestimate small p-values (< 0.1). The asymptotic approximation to the log-likelihood ratio statistic tends to slightly underestimate p-values on average. While the exact p-values are valid in that

for all

, underestimation may result in invalid p-values. Asymptotic approximations of Pearson’s Chi-square and the log-likelihood ratio have been studied well, and the classical Chi-square approximations can be improved by using moment corrections (see Cressie and Read Citation1989, and references therein). Furthermore, the errors typically increase if some category has small expectation under the null hypothesis. The approximation to the probability mass p-values provided by Theorem 1 produces somewhat larger errors especially for large p-values, and it clearly overestimates the p-values. This is emphasized by the fact that within the simulation data only a vanishingly small number of p-values was slightly underestimated, all of which were well over 0.9. illustrates how estimation errors influence the distribution of the resulting p-values. Whereas the exact p-values appear to follow a uniform distribution, the asymptotic p-values clearly deviate from uniformity. For the probability mass statistic, the asymptotic test yields a conservative test, whereas the asymptotic log-likelihood ratio test (and also the asymptotic Chi-square test at small significance levels) is slightly anti-conservative.

Fig. 8 Relative errors of asymptotic approximations to p-values for probability mass (Prob), Chi-square (Chisq) and log-likelihood ratio (LLR) test statistic. The plots were obtained using the same grouping scheme as in .

Fig. 9 Histograms of asymptotic approximations to p-values for probability mass (Prob), Chi-square (Chisq), and log-likelihood ratio (LLR) test statistic in black. The gray histograms show respective exact p-values. The rightmost bar within the left histogram is not fully shown and extends further up to over 30000 counts.

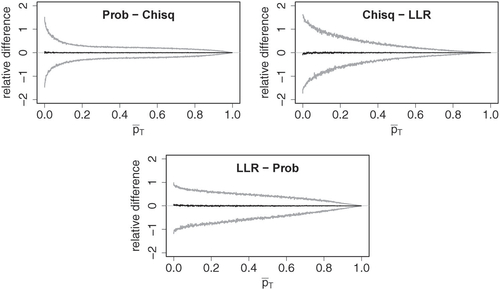

shows relative differences between exact p-values obtained with the three test statistics. Given test statistics T and , the relative difference between p-values

and

is

, where

. It can be seen that the choice of test statistic can make quite a difference. A closer look at the simulation data revealed that these differences tend to be smaller if expectations for all categories are large under the null. To provide some numerical insights, lists exact and asymptotic p-values.

Fig. 10 Relative differences between exact p-values of probability mass (Prob), Chi-square (Chisq), and log-likelihood ratio (LLR) test statistic against mean of compared p-values. The plots were obtained using the same grouping scheme as in .

Table 1 Exact p-values pT

and asymptotic p-values of five randomly selected pairs

with

.

4.2 The Calibration Simplex

Turning to an application in forecast verification, consider a random variable X and a probabilistic forecast F for X. For an introduction to probabilistic forecasting in general, see Gneiting and Katzfuss (Citation2014). A probabilistic forecast is said to be calibrated if the conditional distribution of the quantity of interest given a forecast coincides with the forecast distribution, that is,(4)

(4) holds almost surely. Suppose now that X maps to one of three distinct outcomes only. Then, a probabilistic forecast is fully described by the probabilities it assigns to each outcome. In this case, the calibration simplex (Wilks Citation2013) can be used to graphically identify discrepancies between predicted probabilities and conditional outcome frequencies. Given iid realizations

consisting of forecast probabilities (vectors within the unit 2-simplex) and observed outcomes encoded 1, 2, and 3, forecast-outcome pairs with similar forecast probabilities are grouped according to a tessellation of the probability simplex. Thereafter, calibration is assessed by comparing average forecast and actual outcome frequencies within each group.

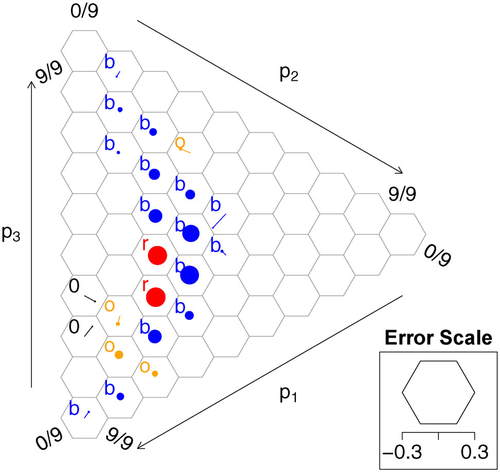

As illustrated in , the calibration simplex is a graphical tool used to conduct this comparison visually. The groups are determined by overlaying the probability simplex with a hexagonal grid. The circular dots correspond to nonempty groups of forecasts given by a hexagon. The dots’ areas are proportional to the number of forecasts per group. A dot is shifted away from the center of the respective hexagon by a scaled version of the difference in average forecast probabilities and outcome frequencies. This provides valuable insight into the forecast’s distribution and the conditional distribution of the quantity of interest. However, it is not apparent how big the differences may be merely by chance.

Fig. 11 Calibration Simplex with color-coded p-values from the log-likelihood ratio statistic evaluating a total of 21,240 club soccer predictions by FiveThirtyEight (https://projects.fivethirtyeight.com/soccer-predictions/) for matches from September 2016 until April 2019. Outcomes are encoded as “home win”,

“draw” and

“away win”. Only groups containing at least 10 forecasts are shown. Blue (b) indicates a p-value

> 0.1, orange (o)

, red (r)

and black (0)

.

If the forecast is calibrated, then, by (4), the outcome frequencies within a group of size n with mean forecast

follow a generalized multinomial distribution (the multinomial analog of the Poisson binomial distribution), that is, a convolution of multinomial distributions

with parameters

. If these parameters only deviate little from their mean

, then, presumably, the generalized multinomial distribution should not deviate much from a multinomial distribution with parameter

. Under this presumption, multinomial tests can be applied to quantify the discrepancy within each group through a p-value. As the number of outcomes m = 3 is small, exact p-values are efficiently computed by Algorithm 1 even for large sample sizes n.

In , p-values obtained from the log-likelihood ratio statistic are conveyed through a coloring scheme. Note that a p-value is exactly zero only if an outcome is forecast to have zero probability and said outcome still realizes. was generated using the R package CalSim (Resin Citation2021).

The calibration simplex can be seen as a generalization of the popular reliability diagram. In light of this analogy, the use of multinomial tests to assess the statistical significance of differences in predicted probabilities and observed outcome frequencies serves the same purpose as consistency bars in reliability diagrams introduced by Bröcker and Smith (Citation2007). Consistency bars are constructed using Monte Carlo simulation. To justify the above presumption, the multinomial p-values used to construct were compared to p-values computed from 10,000 Monte Carlo samples obtained from the generalized multinomial distributions. To this end, the standard deviation of the Monte Carlo p-values was estimated using the estimated p-value in place of the true generalized multinomial p-value. Most of the multinomial p-values were quite close to the Monte Carlo estimates with an absolute difference less than two standard deviations, whereas two of them deviated on the order of 6 to 8 standard deviations from the Monte Carlo estimates, which nonetheless resulted in a relatively small absolute error. In particular, using the Monte Carlo estimated p-values did not change . As computation of the Monte Carlo estimates from the generalized multinomial distributions is computationally expensive, the multinomial p-values serve as a fast and adequate alternative. Further improving uncertainty quantification within the calibration simplex is a subject for future work.

5 Concluding Remarks

A new method for computing exact p-values was investigated. It has been illustrated that the new method works well when the number m of categories is small. This results in a concrete speedup in practical applications as illustrated through a simulation study. As a further application not discussed in this work, the new method appears to be well suited to determine level set confidence regions discussed in Chafai and Concordet (Citation2009) and Malloy, Tripathy, and Nowak (Citation2021). When m is too large for exact methods to be feasible, other methods may be used to approximate exact p-values as hinted at in Appendix D, supplementary materials. Such an approach may be added to the ExactMultinom package in a future version.

Regarding the choice of test statistic, the “exact multinomial test” was treated as a test statistic and the asymptotic distribution of the resulting probability mass statistic was derived. Like most prominent test statistics, the probability mass statistic yields unbiased tests for the uniform null hypothesis. It was shown that a randomized test based on the probability mass statistic can be characterized in that it minimizes the respective (weighted) acceptance region.

Although asymptotic approximations work well in many use cases, there are cases, where these approximations are not adequate, for example, when dealing with small sample sizes or small expectations. On the other hand, there is nothing to be said against the use of exact tests whenever feasible, and it is recommended in the applied literature (McDonald Citation2009, p. 83) for samples of moderate size up to 1000. As the available implementations of exact multinomial tests in R use full enumeration, the new implementation increases the scope of exact multinomial tests for practitioners.

Supplemental Material

Download Zip (193.4 KB)Acknowledgments

The author would like to thank Tilmann Gneiting, Alexander I. Jordan, and Sebastian Lerch for helpful comments, discussions and continued encouragement as well as two anonymous reviewers for their constructive comments.

Supplementary Materials

Appendices:Mathematical details complementing the proofs of Theorem 1, Proposition 4, and Proposition 7, and a short discussion of other methods. (pdf)

Package ExactMultinom: R package containing the implementation described in Section 3. (GNU zipped tar file)

Additional code: R code used for the simulation study in Section 4. (.R file)

Disclosure Statement

The author reports there are no competing interests to declare.

Additional information

Funding

Notes

1 Randomized tests like this traditionally arise in the theory of uniformly most powerful tests, see for example, Lehmann and Romano (Citation2005, chap. 3).

References

- Baglivo, J., Olivier, D., and Pagano, M. (1992), “Methods for Exact Goodness-of-Fit Tests,” Journal of the American Statistical Association, 87, 464–469. DOI: 10.1080/01621459.1992.10475227.

- Beals, R., and Wong, R. (2010), Special Functions: A Graduate Text (Vol. 126), Cambridge: Cambridge University Press.

- Bejerano, G. (2006), “Branch and Bound Computation of Exact p-values,” Bioinformatics, 22, 2158–2159. DOI: 10.1093/bioinformatics/btl357.

- Bejerano, G., Friedman, N., and Tishby, N. (2004), “Efficient Exact p-value Computation for Small Sample, Sparse, and Surprising Categorical Data,” Journal of Computational Biology, 11, 867–886. DOI: 10.1089/cmb.2004.11.867.

- Bröcker, J., and Smith, L. A. (2007), “Increasing the Reliability of Reliability Diagrams,” Weather and Forecasting, 22, 651–661. DOI: 10.1175/WAF993.1.

- Chafai, D., and Concordet, D. (2009), “Confidence Regions for the Multinomial Parameter with Small Sample Size,” Journal of the American Statistical Association, 104, 1071–1079. DOI: 10.1198/jasa.2009.tm08152.

- Cohen, A., and Sackrowitz, H. B. (1975), “Unbiasedness of the Chi-square, Likelihood Ratio, and other Goodness of Fit Tests for the Equal Cell Case,” The Annals of Statistics, 3, 959–964. DOI: 10.1214/aos/1176343197.

- Cressie, N., and Read, T. R. C. (1984), “Multinomial Goodness-of-Fit Tests,” Journal of the Royal Statistical Society, Series B, 46, 440–464. DOI: 10.1111/j.2517-6161.1984.tb01318.x.

- Cressie, N., and Read, T. R. C. (1989), “Pearson’s X2 and the Loglikelihood Ratio Statistic G2: A Comparative Review,” International Statistical Review, 57, 19–43. DOI: 10.2307/1403582.

- Engels, B. (2015), XNomial: Exact Goodness-of-Fit Test for Multinomial Data with Fixed Probabilities. R package version 1.0.4. Available at https://CRAN.R-project.org/package=XNomial.

- Gibbons, J. D., and Pratt, J. W. (1975), “P-values: Interpretation and Methodology,” The American Statistician, 29, 20–25.

- Gneiting, T., and Katzfuss, M. (2014), “Probabilistic Forecasting,” Annual Review of Statistics and its Application, 1, 125–151. DOI: 10.1146/annurev-statistics-062713-085831.

- Hirji, K. F. (1997), “A Comparison of Algorithms for Exact Goodness-of-Fit Tests for Multinomial Data,” Communications in Statistics - Simulation and Computation, 26, 1197–1227. DOI: 10.1080/03610919708813435.

- Keich, U., and Nagarajan, N. (2006), “A Fast and Numerically Robust Method for Exact Multinomial Goodness-of-Fit Test,” Journal of Computational and Graphical Statistics, 15, 779–802. DOI: 10.1198/106186006X159377.

- Koehler, K. J., and Larntz, K. (1980), “An Empirical Investigation of Goodness-of-Fit Statistics for Sparse Multinomials,” Journal of the American Statistical Association, 75, 336–344. DOI: 10.1080/01621459.1980.10477473.

- Kotze, T. J. V. W., and Gokhale, D. V. (1980), “A Comparison of the Pearson-X2 and Log-Likelihood-Ratio Statistics for Small Samples by Means of Probability Ordering,” Journal of Statistical Computation and Simulation, 12, 1–13. DOI: 10.1080/00949658008810422.

- Lehmann, E. L., and Romano, J. P. (2005), Testing Statistical Hypotheses. Springer Texts in Statistics (3rd ed.), New York: Springer.

- Malloy, M. L., Tripathy, A., and Nowak, R. D. (2021), “Optimal Confidence Sets for the Multinomial Parameter,” in 2021 IEEE International Symposium on Information Theory (ISIT), pp. 2173–2178. DOI: 10.1109/ISIT45174.2021.9517964.

- McDonald, J. H. (2009), Handbook of Biological Statistics (2nd ed.), Baltimore: Sparky House Publishing.

- Menzel, U. (2013), EMT: Exact Multinomial Test: Goodness-of-Fit Test for Discrete Multivariate Data. R package version 1.1. Available at https://CRAN.R-project.org/package=EMT.

- Murota, K. (2003), Discrete Convex Analysis. SIAM Monographs on Discrete Mathematics and Applications, Philadelphia: Society for Industrial and Applied Mathematics (SIAM).

- Pérez, T., and Pardo, J. A. (2003), “On Choosing a Goodness-of-Fit Test for Discrete Multivariate Data,” Kybernetes, 32, 1405–1424. DOI: 10.1108/03684920310493323.

- R Core Team (2020), R: A Language and Environment for Statistical Computing, Vienna, Austria: R Foundation for Statistical Computing. Available at https://www.R-project.org/.

- Radlow, R., and Alf, E. F. J. (1975), “An Alternate Multinomial Assessment of the Accuracy of the χ2 Test of Goodness of Fit,” Journal of the American Statistical Association, 70, 811–813.

- Rahmann, S. (2003), “Dynamic Programming Algorithms for Two Statistical Problems in Computational Biology,” in Algorithms in Bioinformatics. WABI 2003., Lecture Notes in Computer Science (Vol. 2812), pp. 151–164, Berlin, Heidelberg: Springer.

- Resin, J. (2020), ExactMultinom: Multinomial Goodness-of-Fit Tests. R package version 0.1.2. Available at https://CRAN.R-project.org/package=ExactMultinom.

- Resin, J. (2021), CalSim: The Calibration Simplex. R package version 0.5.2. Available at https://CRAN.R-project.org/package=CalSim.

- Tate, M. W., and Hyer, L. A. (1973), “Inaccuracy of the X2 Test of Goodness of Fit when Expected Frequencies are Small,” Journal of the American Statistical Association, 68, 836–841.

- Van der Vaart, A. W. (1998), Asymptotic Statistics, Volume 3 of Cambridge Series in Statistical and Probabilistic Mathematics, Cambridge: Cambridge University Press.

- Wakimoto, K., Odaka, Y., and Kang, L. (1987), “Testing the Goodness of Fit of the Multinomial Distribution based on Graphical Representation,” Computational Statistics & Data Analysis, 5, 137–147.

- West, E. N., and Kempthorne, O. (1972), “A Comparison of the Chi2 and Likelihood Ratio Tests for Composite Alternatives,” Journal of Statistical Computation and Simulation, 1, 1–33. DOI: 10.1080/00949657208810001.

- Wilks, D. S. (2013), “The Calibration Simplex: A Generalization of the Reliability Diagram for Three-Category Probability Forecasts,” Weather and Forecasting, 28, 1210–1218. DOI: 10.1175/WAF-D-13-00027.1.