ABSTRACT

Graduate medical education (GME) and Clinical Competency Committees (CCCs) have been evolving to monitor trainee progression using competency-based medical education (CBME) principles and outcomes, though evidence suggests that CCCs fall short of this goal. Evaluation data are often incomplete, insufficient, poorly aligned with performance, conflicting, or of unknown quality. CCCs also struggle to organize, analyze, visualize, and integrate data elements across sources, collection methods, contexts, and time periods, which makes advancement decisions difficult. Learning analytics (LA) has significant potential to improve CCC decision making, yet its use is not yet commonplace. LA is the interpretation of multiple data sources gathered on trainees to assess academic progress, predict future performance, and identify potential issues to be addressed with feedback and individualized learning plans. What distinguishes LA from other educational approaches is systematic data collection combined with advanced digital interpretation and visualization to inform educational systems. These data are necessary to: 1) fully understand educational contexts and guide improvements; 2) advance stakeholders' proficiency in making ethical and accurate summative decisions; and 3) clearly communicate methods, findings, and actionable recommendations to a range of educational stakeholders. The Accreditation Council for Graduate Medical Education (ACGME) released the third edition of its CCC Guidebook for Programs in 2020, and the 2021 Milestones 2.0 supplement of the Journal of Graduate Medical Education (JGME Supplement) presented important papers describing evaluation and implementation features of effective CCCs. Principles of LA underpin national GME outcomes data and training across specialties; however, little guidance currently exists on how GME programs can use LA to improve the CCC process.
Here we outline recommendations for implementing learning analytics to support decision making on trainee progress in two areas: 1) Data Quality and Decision Making, and 2) Educator Development.

Introduction

Graduate medical education (GME) and Clinical Competency Committees (CCCs) are evolving to monitor trainee progression using competency-based medical education (CBME) principles and outcomes [Citation1–4]. CBME promotes effective, individualized development of residents [Citation3,Citation5]; however, even though CBME has been advancing for more than 20 years [Citation9,Citation10], evidence suggests that CCCs fall short of this goal [Citation6–8]. Evaluation data are often incomplete, insufficient, poorly aligned with performance, conflicting, or of unknown quality [Citation11]. CCCs struggle to organize, analyze, visualize, and integrate data elements across sources, collection methods, contexts, and time periods, which makes advancement decisions difficult [Citation11,Citation12]. These issues are even more urgent in CBME, where more data are required for every learner to individualize development [Citation13–16]. Learning analytics (LA) has significant potential to improve CCC decision making, yet its use is not yet commonplace [Citation15,Citation16].

Learning analytics is the interpretation of multiple data sources gathered on trainees to assess academic progress, predict future performance, and identify potential issues to be addressed with feedback and individualized learning plans (ILPs) [Citation15,Citation17,Citation18]. What distinguishes LA from other educational approaches is systematic data collection combined with advanced digital interpretation and visualization to inform educational systems [Citation19,Citation20]. Such data are necessary to: 1) fully understand educational contexts and guide improvements; 2) advance stakeholders' proficiency in making ethical and accurate summative decisions; and 3) clearly communicate methods, findings, and actionable recommendations to a range of educational stakeholders [Citation15,Citation16,Citation21].

The Accreditation Council for Graduate Medical Education (ACGME) released the third edition of its CCC Guidebook for Programs in 2020 [Citation1], and the 2021 Milestones 2.0 supplement of the Journal of Graduate Medical Education (JGME Supplement) presented important papers describing evaluation and implementation features of effective CCCs [Citation22]. Principles of LA underpin national GME outcomes data [Citation23] and training across specialties [Citation21,Citation24]; however, our careful review of these documents [Citation1,Citation22] indicates that little guidance currently exists on how GME programs can use LA to improve the CCC process.

In this paper, we outline recommendations for implementing learning analytics to support decision making on trainee progress in two areas: (1) Data Quality and Decision Making and (2) Educator Development.

Data quality and decision making

We outline our long- and short-term recommendations for data quality and decision making in Table 1. In the near term, data quality standards embedded in information systems would help GME programs ensure that data quality checks occur before CCC meetings. Active engagement of residents in the evaluation process [Citation25] and monitoring of assessor quality [Citation26] are also needed. Aspirational goals include implementing systematized data processing to reduce human error [Citation16] and automating ACGME cloud-based data visualization processes to reduce redundant workload and improve efficiency.
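Pre-meeting data quality checks could take many forms. As a minimal sketch, assuming that evaluation records can be exported from a residency management system into a simple structure, a program might flag incomplete data, out-of-range ratings, missing narrative comments, and raters whose scores barely vary before the CCC convenes. The `Evaluation` fields, the `pre_meeting_checks` function, the assumed 1-5 rating scale, and all thresholds are hypothetical illustrations, not an ACGME standard or any vendor's API.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import pstdev


@dataclass
class Evaluation:
    """One assessment record; field names are hypothetical, not a vendor schema."""
    resident_id: str
    rater_id: str
    subcompetency: str   # e.g., "PC1"
    rating: float        # assumed Milestone-style scale of 1-5
    comment: str = ""


def pre_meeting_checks(evals, min_evals=5, min_rater_n=5, min_rater_sd=0.25):
    """Return (flag, subject, detail) tuples to review before a CCC meeting.

    All thresholds are illustrative defaults chosen for this sketch.
    """
    flags = []

    # 1) Completeness: residents with too few evaluations to judge progress.
    per_resident = defaultdict(int)
    for e in evals:
        per_resident[e.resident_id] += 1
    for rid, n in sorted(per_resident.items()):
        if n < min_evals:
            flags.append(("insufficient_data", rid, n))

    # 2) Validity: ratings outside the assumed 1-5 range.
    for e in evals:
        if not 1 <= e.rating <= 5:
            flags.append(("out_of_range", e.resident_id, e.rating))

    # 3) Narrative gaps: ratings submitted without any comment.
    for e in evals:
        if not e.comment.strip():
            flags.append(("missing_comment", e.resident_id, e.rater_id))

    # 4) Assessor quality: raters whose scores barely vary ("straight-lining").
    per_rater = defaultdict(list)
    for e in evals:
        per_rater[e.rater_id].append(e.rating)
    for rater, ratings in sorted(per_rater.items()):
        if len(ratings) >= min_rater_n and pstdev(ratings) < min_rater_sd:
            flags.append(("low_rater_variability", rater, round(pstdev(ratings), 2)))

    return flags
```

The sketch uses only the Python standard library so that it stays dependency-free; a real implementation would read from the program's own data store and apply locally agreed thresholds.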

Table 1. Effective Use of Learning Analytics by CCCs: Data Quality and Use in Progression Decision Making.

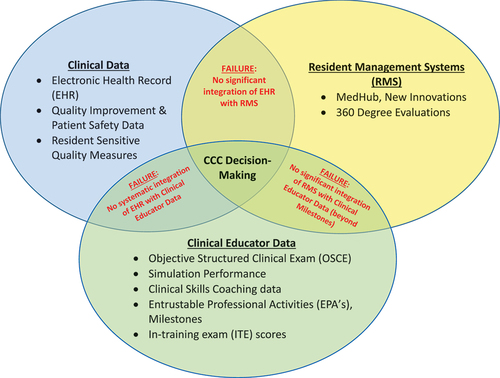

Accreditation guides should point to strategies that can aid data analysis and decision making [Citation1,Citation32]. Aspirational goals include collaborating with GME data management systems (e.g., New Innovations, MedHub) to develop step-by-step protocols for collating and synthesizing all available data to optimize data visualizations and dashboards. Some synthesized data are already available; for example, programs can currently access the ACGME predictive probability values (PPVs), which indicate the probability of individual trainees reaching the Milestone target of Level 4 by graduation [Citation21,Citation24]. The accompanying figure illustrates the GME LA landscape and highlights existing failures of data integration for CCCs [Citation33]. Promising studies linking educational quality to patient data and outcomes underscore the importance of data integration and analytics [Citation19,Citation34–37]. Integrating patient electronic health record (EHR) data would complement trainees' multisource evaluation data and enhance the CCC decision-making process [Citation34,Citation37]; such data have also been shown to enrich resident feedback and learning [Citation38]. Lastly, creating formal networks of data teams, educational scientists, and CCC members to share complementary LA expertise and collaborate on scholarship would further develop this important work [Citation19,Citation23,Citation27].
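To make the idea of a step-by-step collation protocol more concrete, the following is one hypothetical way to merge Milestone-style ratings from several sources (for example, faculty, peer, and self-assessments) into a single summary per resident and subcompetency that a dashboard could display. The input format, the `collate` function, and the `target_level` parameter are assumptions made for illustration. The `gap_to_target` field is a simple descriptive difference; it is not the ACGME PPV, which the ACGME derives from national data.

```python
from collections import defaultdict
from statistics import median


def collate(sources, target_level=4.0):
    """Merge ratings from several sources into one summary per
    (resident, subcompetency) pair, ready to feed a dashboard.

    `sources` maps a source name (e.g., "faculty", "peer", "self") to a list of
    (resident_id, subcompetency, period, rating) tuples. `period` is any
    sortable label such as "2023-1". This is a hypothetical export format.
    """
    grouped = defaultdict(list)
    for source, rows in sources.items():
        for resident, subcomp, period, rating in rows:
            grouped[(resident, subcomp)].append((period, source, rating))

    summary = {}
    for key, records in sorted(grouped.items()):
        records.sort()  # chronological by period, then by source name
        ratings = [r for _, _, r in records]
        latest_period = records[-1][0]
        latest = [r for p, _, r in records if p == latest_period]
        summary[key] = {
            "n": len(ratings),
            "sources": sorted({s for _, s, _ in records}),
            "median_all": median(ratings),
            "median_latest": median(latest),
            # Descriptive distance from the graduation target; NOT a predictive value.
            "gap_to_target": round(target_level - median(latest), 2),
        }
    return summary
```

Medians are used here rather than means because they are less sensitive to a single outlying rating; a program might reasonably choose different summaries, weight sources differently, or keep self-assessments separate.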

Educator Development and Learning Analytics

Educators and trainees need to learn how to manage large streams of quantitative [Citation39] and qualitative [Citation40] data as they flow into customized resident portfolios to inform CCC decisions and individualized learning plans (ILPs) [Citation28,Citation41]. CCC members need sufficient analytics literacy to become ‘Diagnostic Assessors’ who address knowledge gaps [Citation27]. These data also create opportunities to inform both faculty and program development [Citation12,Citation42]. Table 2 outlines recommendations for educator and program development to apply analytics to CCC processes. Rich LA can enhance trainee development and foster a growth mindset that promotes self-reflection, self-directed learning, and co-production of ILPs [Citation1,Citation7,Citation17,Citation25,Citation28,Citation48–51].

Table 2. Effective Use of Learning Analytics by CCCs: Educator Development.

In terms of educator development, the 2021 JGME supplement [Citation22] proposes that new CCC member orientation include: 1) assessment and the use of the Milestones; 2) group decision making; 3) awareness of biases; and 4) the impact of CCC decisions on patient care outcomes [Citation12]. We propose that LA and data interpretation training be added to this orientation, along with ongoing educator development that promotes a shared mental model of how informatics can support CCC processes and decisions [Citation12,Citation52]. In addition, educators and trainees could be developed simultaneously by embedding ongoing, innovative learning experiences on data quality and processes into current educational routines [Citation12,Citation53].

In the longer term, GME would benefit from faculty trained in educational research and clinical informatics through formal programs sponsored by the National Library of Medicine [Citation44], the ACGME [Citation45], and/or biomedical training programs for healthcare professionals [Citation46]. This trained cadre could then ensure that CCC members are fully informed about practical LA principles. Lastly, more research is needed to improve the utility of LA and the long-term effectiveness of CCCs, using well-established outcomes (e.g., tracking GME outcomes [Citation23], board certification status, and disciplinary actions) [Citation19,Citation20,Citation54].

Next steps in supporting these considerable efforts

CCCs engage core GME faculty and program coordinators who balance multiple competing demands [Citation55]. Ideally, a well-designed analytics infrastructure allows programs to use more data with less effort [Citation32,Citation56]. Investing in additional team members, ideally education scientists, can accelerate the use of LA within CCCs [Citation27]. Options for gaining additional resources include: 1) negotiating with the sponsoring institution to obtain funds or share analytic personnel and resources; 2) applying for local, regional, or national funding; 3) using departmental funds; or 4) revising ACGME requirements [Citation57] to require this work.

Mechanisms for funding GME need to change to enable these kinds of innovations. Grants that support this work as educational innovation are possible, but they would not create a sustained infrastructure to support improvement [Citation58]. The availability of departmental funds will likely vary by discipline, as departments with high clinical revenue may be more likely to provide support. Similarly, large programs with research divisions, as well as university-based, university-affiliated, or university-administered programs, may find expertise in informatics departments, schools of public health, or schools of education to guide the integration of learning analytics into GME processes. Altering or adding program roles and full-time equivalent requirements in the ACGME Common Program Requirements [Citation57] may be complex because of employment laws; however, times are changing, and residency training funding mechanisms also need revision. In a recent personal communication (January 26, 2023), Eric Holmboe, MD, Chief Research, Milestones Development and Evaluation Officer at the ACGME, indicated that the ACGME is beginning a digital transformation and is exploring learning analytics as part of that transformation.

In summary, action is needed to fully realize CBME in residency training. Incorporating LA, along with educator and trainee development, into guiding documents such as the next CCC Guidebook [Citation1] and the ACGME Common Program Requirements [Citation57] would help advance these efforts. Collaborating with external stakeholders, including residency data management systems, to co-produce generalizable LA processes that support efficient CCC work will be essential to advance CBME.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Andolsek K, Padmore J, Hauer KE, et al. Accreditation Council for Graduate Medical Education (ACGME) Clinical Competency Committees: A Guidebook for Programs. 3rd ed. Published 2020. Accessed May 14, 2022. https://www.acgme.org/globalassets/acgmeclinicalcompetencycommitteeguidebook.pdf

- Ekpenyong A, Padmore JS, Hauer KE. The Purpose, Structure, and Process of Clinical Competency Committees: guidance for Members and Program Directors. J Grad Med Educ. 2021;13(2s):45–7.

- Frank JR, Snell LS, ten Cate O, et al. Competency-based medical education: theory to practice. Med Teach. 2010;32(8):638–645.

- van Melle E, Hall AK, Schumacher DJ, et al. Capturing outcomes of competency-based medical education: the call and the challenge. Med Teach. 2021;43(7):794–800. DOI:10.1080/0142159X.2021.1925640

- van Melle E, Frank JR, Holmboe ES, et al. A Core Components Framework for Evaluating Implementation of Competency-Based Medical Education Programs. Acad Med. 2019;94(7):1002–1009.

- Hauer KE, Chesluk B, Iobst W, et al. Reviewing Residents’ Competence: a Qualitative Study of the Role of Clinical Competency Committees in Performance Assessment. Acad Med. 2015;90(8):1084–1092. DOI:10.1097/ACM.0000000000000736

- Goldhamer MEJ, Martinez-Lage M, Black-Schaffer WS, et al. Reimagining the Clinical Competency Committee to Enhance Education and Prepare for Competency-Based Time-Variable Advancement. J Gen Intern Med. 2022;37(9):2280–2290. Published online April 20, 2022.

- Ekpenyong A, Edgar L, Wilkerson L, et al. A multispecialty ethnographic study of clinical competency committees (CCCs). Med Teach. Published online May 30, 2022:1–9. DOI:10.1080/0142159X.2022.2072281

- Batalden P, Leach D, Swing S, et al. General Competencies and Accreditation in Graduate Medical Education. Health Aff. 2002;21(5):103–111.

- Nasca TJ, Philibert I, Brigham T, et al. The Next GME Accreditation System — Rationale and Benefits. N Engl J Med. 2012;366(11). DOI:10.1056/NEJMsr1200117

- Pack R, Lingard L, Watling CJ, et al. Some assembly required: tracing the interpretative work of Clinical Competency Committees. Med Educ. 2019;53(7):723–734.

- Heath JK, Davis JE, Dine CJ, et al. Faculty Development for Milestones and Clinical Competency Committees. J Grad Med Educ. 2021;13(2s):127–131.

- Misra S, Iobst WF, Hauer KE, et al. The Importance of Competency-Based Programmatic Assessment in Graduate Medical Education. J Grad Med Educ. 2021;13(2s):113–119.

- Goldhamer MEJ, Pusic MV, Co JPT, et al. Can Covid Catalyze an Educational Transformation? Competency-Based Advancement in a Crisis. N Engl J Med. 2020;383(11):1003–1005.

- Thoma B, Ellaway RH, Chan TM. From Utopia Through Dystopia: charting a Course for Learning Analytics in Competency-Based Medical Education. Acad Med. 2021;96(7S):S89–95.

- Thoma B, Bandi V, Carey R, et al. Developing a dashboard to meet Competence Committee needs: a design-based research project. Can Med Educ J. Published online January 7, 2020. DOI:10.36834/cmej.68903

- Lockyer JM, Armson HA, Könings KD, et al. Impact of Personalized Feedback: the Case of Coaching and Learning Change Plans. In The Impact of Feedback in Higher Education. Springer International Publishing; 2019. DOI:10.1007/978-3-030-25112-3_11

- Ekpenyong A, Zetkulic M, Edgar L, et al. Reimagining Feedback for the Milestones Era. J Grad Med Educ. 2021;13(2s):109–112.

- Triola MM, Hawkins RE, Skochelak SE. The Time is Now: using Graduates’ Practice Data to Drive Medical Education Reform. Acad Med. 2018;93(6):826–828.

- Triola MM, Pusic MV. The Education Data Warehouse: a Transformative Tool for Health Education Research. J Grad Med Educ. 2012;4(1):113–115.

- Hamstra SJ, Yamazaki K. A Validity Framework for Effective Analysis and Interpretation of Milestones Data. J Grad Med Educ. 2021;13(2s):75–80.

- Andolsek KM, Jones MD, Ibrahim H, et al. Introduction to the Milestones 2.0: assessment, Implementation, and Clinical Competency Committees Supplement. J Grad Med Educ. 2021;13(2s):1–4.

- Burk-Rafel J, Marin M, Triola M, et al. The AMA Graduate Profile: tracking Medical School Graduates into Practice. Acad Med. 2021;96(11S):S178–179. DOI:10.1097/ACM.0000000000004315

- Yamazaki K, Holmboe E, Sangha S. Accreditation Council for Graduate Medical Education (ACGME) Milestones PPV National Report. Published 2021. Accessed May 14, 2022. https://www.acgme.org/globalassets/pdfs/milestones/acgme-ppv-ms-report—final-111921.pdf

- Eno C, Correa R, Stewart NH, et al. Milestones Guidebook for Residents and Fellows. Accessed July 9, 2022. https://www.acgme.org/globalassets/pdfs/milestones/milestonesguidebookforresidentsfellows.pdf

- Kogan JR, Conforti LN, Iobst WF, et al. Reconceptualizing variable rater assessments as both an educational and clinical care problem. Acad Med. 2014;89(5):721–727.

- Simpson D, Marcdante K, Souza KH, et al. Job Roles of the 2025 Medical Educator. J Grad Med Educ. 2018;10(3):243–246.

- Holmboe ES. Work-based Assessment and Co-production in Postgraduate Medical Training. GMS J Med Educ. 2017;34(5):Doc58. Published online November 15, 2017.

- Englander R, Holmboe E, Batalden P, et al. Coproducing Health Professions Education: a Prerequisite to Coproducing Health Care Services? Acad Med. 2020;95(7):1006–1013. DOI:10.1097/ACM.0000000000003137

- Batalden M, Batalden P, Margolis P, et al. Coproduction of healthcare service. BMJ Qual Saf. 2016;25(7):509–517. DOI:10.1136/bmjqs-2015-004315

- Lawlor KB, Hornyak MJ. SMART goals: how the application of SMART goals can contribute to achievement of student learning outcomes. Developments in Business Simulation and Experiential Learning. 2012;39:259–261.

- Boscardin C, Fergus KB, Hellevig B, et al. Twelve tips to promote successful development of a learner performance dashboard within a medical education program. Med Teach. 2018;40(8):855–861.

- Tolsgaard MG, Boscardin CK, Park YS, et al. The role of data science and machine learning in Health Professions Education: practical applications, theoretical contributions, and epistemic beliefs. Adv Health Sci Educ. 2020;25(5):1057–1086.

- Sebok-Syer SS, Goldszmidt M, Watling CJ, et al. Using Electronic Health Record Data to Assess Residents’ Clinical Performance in the Workplace. Acad Med. 2019;94(6):853–860.

- Schumacher DJ, Holmboe ES, van der Vleuten C, et al. Developing Resident-Sensitive Quality Measures. Acad Med. 2018;93(7):1071–1078.

- Asch DA. Evaluating Obstetrical Residency Programs Using Patient Outcomes. JAMA. 2009;302(12). DOI:10.1001/jama.2009.1356

- Smirnova A, Sebok-Syer SS, Chahine S, et al. Defining and Adopting Clinical Performance Measures in Graduate Medical Education. Acad Med. 2019;94(5):671–677. DOI:10.1097/ACM.0000000000002620

- Sebok-Syer SS, Shaw JM, Sedran R, et al. Facilitating Residents’ Understanding of Electronic Health Record Report Card Data Using Faculty Feedback and Coaching. Acad Med. 2022;97(11S):S22–28. DOI:10.1097/ACM.0000000000004900

- Ellaway RH, Topps D, Pusic MD. Big and Small. Acad Med. 2019;94(1):31–36.

- Ginsburg S, Watling CJ, Schumacher DJ, et al. Numbers Encapsulate, Words Elaborate: toward the Best Use of Comments for Assessment and Feedback on Entrustment Ratings. Acad Med. 2021;96(7S):S81–86.

- Li STT, Burke AE. Individualized Learning Plans: basics and Beyond. Acad Pediatr. 2010;10(5):289–292.

- Heath JK, Dine CJ, Burke AE, et al. Teaching the Teachers with Milestones: using the ACGME Milestones Model for Professional Development. J Grad Med Educ. 2021;13(2s):124–126.

- Holmboe ES, Yamazaki K, Nasca TJ, et al. Using Longitudinal Milestones Data and Learning Analytics to Facilitate the Professional Development of Residents. Acad Med. 2020;95(1):97–103.

- NIH National Library of Medicine. NLM’s University-based Biomedical Informatics and Data Science Research Training Programs. Accessed June 10, 2022. https://www.nlm.nih.gov/ep/GrantTrainInstitute.html

- Accreditation Council for Graduate Medical Education (ACGME). ACGME Program Requirements for Graduate Medical Education in Clinical Informatics. Published 2020. Accessed June 10, 2022. https://www.acgme.org/globalassets/PFAssets/ProgramRequirements/381_ClinicalInformatics_2020.pdf

- U.S. Department of Health and Human Services. HRSA-21-013i. Executive Summary. The Health Resources and Services Administration (HRSA), Ruth L. Kirschstein National Research Service Award. Published 2021. Accessed June 10, 2022. https://grants.hrsa.gov/2010/Web2External/Interface/Common/EHBDisplayAttachment.aspx?dm_rtc=16&dm_attid=5bfd05e2-a558-483f-bdb8-2462c5f18ea5

- Dickey CC, Thomas C, Feroze U, et al. Cognitive Demands and Bias: challenges Facing Clinical Competency Committees. J Grad Med Educ. 2017;9(2):162–164.

- Sargeant J, Armson H, Chesluk B, et al. The Processes and Dimensions of Informed Self-Assessment: a Conceptual Model. Acad Med. 2010;85(7). DOI:10.1097/ACM.0b013e3181d85a4e

- Dweck CS. Mindset: the New Psychology of Success. New York, NY: Random House Digital, Inc; 2008.

- Richardson D, Kinnear B, Hauer KE, et al. Growth mindset in competency-based medical education. Med Teach. 2021;43(7):751–757. DOI:10.1080/0142159X.2021.1928036

- Eva KW, Regehr G. Self-Assessment in the Health Professions: a Reformulation and Research Agenda. Acad Med. 2005;80(Supplement):S46–54.

- Edgar L, Jones MD, Harsy B, et al. Better Decision-Making: shared Mental Models and the Clinical Competency Committee. J Grad Med Educ. 2021;13(2s):51–58.

- Kuo AK, Wilson E, Kawahara S, et al. Meeting Optimization Program: a “Workshop in a Box” to Create Meetings That are Transformational Tools for Institutional Change. MedEdPORTAL. Published online April 13, 2017. DOI:10.15766/mep_2374-8265.10569

- Weinstein DF, Thibault GE. Illuminating Graduate Medical Education Outcomes in Order to Improve Them. Acad Med. 2018;93(7):975–978.

- Accreditation Council for Graduate Medical Education (ACGME) Data Resource Book Academic Year 2020-2021. Accessed May 18, 2022. https://www.acgme.org/globalassets/pfassets/publicationsbooks/2020-2021_acgme_databook_document.pdf

- Chan T, Sebok-Syer S, Thoma B, et al. Learning Analytics in Medical Education Assessment: the Past, the Present, and the Future. AEM Educ Train. 2018;2(2):178–187.

- Accreditation Council for Graduate Medical Education (ACGME) Common Program Requirements. Published 2021. Accessed May 16, 2022. https://www.acgme.org/globalassets/PFAssets/ProgramRequirements/CPRResidency2021.pdf

- Carney PA, Waller E, Green LA, et al. Financing Residency Training Redesign. J Grad Med Educ. 2014;6(4):686–693.