ABSTRACT

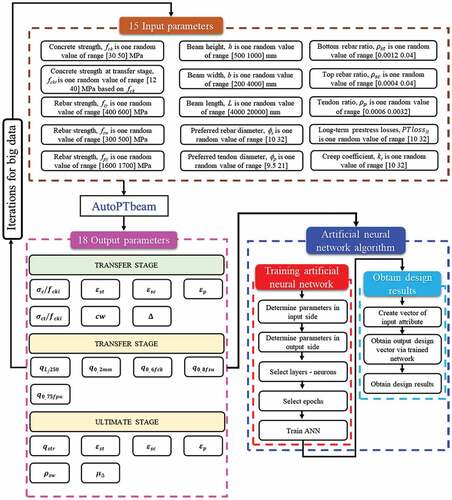

This research demonstrates how pre-tensioned concrete beams (PT beams) can be designed holistically using artificial neural networks (ANNs). To establish reverse design scenarios, large input and output datasets are generated using the mechanics-based software AutoPTbeam. Reverse-forward networks trained by ANNs are proposed to solve reverse designs with 15 input and 18 output parameters for engineers. ANNs for reverse designs of pre-tensioned concrete beams are formulated based on 15 input structural parameters to investigate the performance of PT beams with pin-pin boundaries. Useful reverse designs based on neural networks can be established by relocating preferable control parameters to the input side. For example, when four output parameters (qL/250, q0.2 mm, qstr, and μΔ) are reversely pre-assigned on the input side (reverse scenario), all associated design parameters, including crack width and rebar strains at the transfer load stage, and rebar strains and displacement ductility ratio at the ultimate load stage, are computed on the output side. When the deflection ductility ratios (μΔ) reversely pre-assigned on the input side lie within the generated big datasets, deep neural networks trained by the chained training scheme with revised sequence (CRS) for the reverse network of Step 1 show better design accuracies than ANNs trained by the parallel training method (PTM) and shallow neural networks trained by CRS.

GRAPHICAL ABSTRACT

1. Introduction

1.1. Previous studies

Several artificial neural network-based studies of prestressed beams have been conducted. Torky and Aburawwash (2018) presented a simple prestressed beam to demonstrate the viability of neural networks over traditional approaches. They proposed a deep learning approach to optimizing prestressed beams. Their data, however, are limited to beam depth, beam width, bending moment, eccentricity, and the number of strands.

Sumangala and Antony Jeyasehar (2011) also studied a damage assessment procedure using an artificial neural network (ANN) for prestressed concrete beams. They formulated the methodology using results from an experimental study conducted in the laboratory. The measured outputs from both static and dynamic tests were taken as inputs to train the neural network in MATLAB. The degree of damage could be evaluated quantitatively by the ANN using the natural frequency and stiffness in the post-cracking range. Their damage assessment procedure was validated using data available in the literature (Sumangala and Antony Jeyasehar 2011). Lee et al. (2019) proposed a novelty detection approach for tendons of PSC bridges based on a convolutional autoencoder (CAE). The proposed method used simulation data from nine different accelerometers. According to their research, the accuracies of CAEs for multi-vehicle cases are 79.5%–85.8% for 100% and 75% damage severities. Yang and Huang (2021) also identified damage in a prestressed concrete beam bridge based on a convolutional neural network. Alhassan, Ababneh, and Betoush (2020) studied an innovative model for accurate prediction of the transfer length of prestressing strands based on artificial neural networks. Tam et al. (2004) diagnosed prestressed concrete pile defects using probabilistic neural networks. Mo and Han (1995) investigated prestressed concrete frame behavior with neural networks. Slowik, Lehký, and Novák (2021) investigated reliability-based optimization of a prestressed concrete roof girder using a surrogate model and the double-loop approach, while Khandel et al. (2021) used a statistical damage detection and localization approach to evaluate the performance of prestressed concrete bridge girders using fiber Bragg grating sensors based on artificial neural networks. Solhmirzaei et al. (2020) investigated 360 test results of ultra-high-performance concrete beams using different machine learning algorithms, obtaining good accuracies in predicting beam failure mode and shear capacity.

However, these studies are not dedicated to a holistic design of prestressed beams for practical applications. In the current study, both forward and reverse designs of prestressed beams are possible, using big datasets of 50,000 data generated with full-scale structural mechanics-based software and trained with the previously developed training methods described below. Hong, Pham, and Nguyen (2021) proposed training methods based on feature selection for the reverse design of doubly reinforced concrete beams. They trained large datasets using the TED, PTM, CTS, and CRS methods that they developed. Hong, Nguyen, and Nguyen (2021) also presented artificial intelligence-based novel design charts for doubly reinforced concrete beams, and Hong, Nguyen, and Pham (2022) presented novel design methods for reinforced concrete columns. Hong and Pham (2021) performed reverse designs of doubly reinforced concrete beams using Gaussian process regression models enhanced by sequence training/designing techniques based on feature selection algorithms. The training methods developed in Hong, Pham, and Nguyen (2021), Hong, Nguyen, and Nguyen (2021), Hong, Nguyen, and Pham (2022), and Hong and Pham (2021) are used to map 15 input parameters to 18 output parameters for a holistic design of pre-tensioned concrete beams. The large datasets, which contain 33 parameters (15 input and 18 output parameters), aid in the accurate training of ANNs that represent prestressed beams.

1.2. Research significance

Numerous computer-aided engineering tools, including CAD packages, FEM software, and self-written calculation codes, are available to study the performance of PT structures. In this study, ANNs programmed with MATLAB Deep Learning Toolbox (MathWorks 2022a), MATLAB Parallel Computing Toolbox (MathWorks 2022b), MATLAB Statistics and Machine Learning Toolbox (MathWorks 2022c), and MATLAB R2022a (MathWorks) are used to design PT structures based on EC2 2004 (CEN (European Committee for Standardization) 2004) in both forward and reverse directions. When the big data are sufficient to capture the tendencies of PT beam behavior, a reverse solution can be generalized using ANNs trained on the big data. Based on large datasets, forward and reverse designs using ANNs are possible, with no need for primary engineering knowledge to determine design parameters effectively in practice. The proposed networks allow for both forward and reverse designs of PT beams, which is difficult to achieve with classic techniques. AI-based PT beam design with sufficient training accuracy can completely replace classical design software while exhibiting excellent productivity for both forward and reverse designs. Numerical examples are provided to demonstrate the design steps. Design charts are also constructed for design scenarios and can be extended as far as needed to meet the requirements of engineers.

The proposed method is advantageous in that it is less dependent on problem types such as column, beam, frame, seismic design, and so on, and instead relies on characteristics of large datasets of the considered problem. As a result, the proposed method’s applications are not limited to designing PT beams but can also be extended to other structures.

2. Formulations of forward design; description of input and output parameters

2.1. Description of input parameters

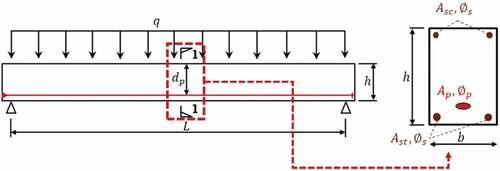

ANNs are implemented in designing bonded pre-tensioned beams with pin-pin ends, defined by beam width b (mm), beam depth h (mm), and beam length L (mm). Table 1 presents the fifteen input parameters used to generate big datasets with AutoPTbeam for an AI-based reverse design. Concrete properties include the compressive cylinder strength of concrete at 28 days (fck, MPa), the compressive cylinder strength of concrete at the transfer stage (fcki, MPa), and the long-term deflection factor considering the creep effect (Kc, normally 2.5–3.5). Reinforcing bar properties include the bottom and top rebar ratios (ρst and ρsc, respectively), the yield stress of rebar (fsy, MPa), the yield stress of stirrups (fsw, MPa), and the preferred rebar diameter (ϕs, mm). Tendon properties include the tendon ratio (ρp), the yield stress (fpy, MPa), and the preferred tendon diameter (ϕp, mm). The long-term prestress loss (PTLosslt) is also one of the fifteen input parameters. The tensile strength of the tendon, fpu, is fixed at 1860 MPa; hence, fpu is not included in the inputs of the big datasets.

Table 1. Fifteen input parameters to generate big datasets using AutoPTbeam for pre-tensioned beams.

2.2. Description of output parameters

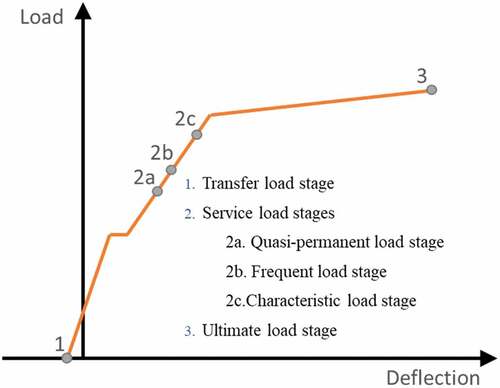

Three load stages are considered in prestressed designs. The present study investigates 18 output parameters, comprising seven at the transfer load stage, five at the service load limit stage, and six at the ultimate load limit stage, as shown in Table 2.

Table 2. Eighteen outputs of forward designs of a PT beam.

At the transfer stage, seven outputs of forward designs are considered. EC2 (CEN 2004) requires the concrete compressive stress to remain below a code-specified limit, crack widths to remain under a limit that depends on environmental conditions, and the top rebar stress to be less than the smaller of two code-specified values. EC2 (CEN 2004) determines crack limits using six classes of environmental exposure, which are classified based on the risk of attack by carbonation-induced corrosion, chloride-induced corrosion, freeze-thaw, or chemicals.

At the service load stage, five nominal strengths are obtained, corresponding to the maximum loads that can be applied to beams without violating the service limits required by EC2 (CEN 2004). The service load stage has three sub-stages, named quasi-permanent, frequent, and characteristic, in which EC2 (CEN 2004) requires PT beams to satisfy five design limits. First, deflections should not exceed L/250 at the quasi-permanent load stage. Second, crack widths under frequent load combinations should not exceed the applicable limit (uncracked, 0.1 mm, 0.2 mm, 0.4 mm, etc.). Third, concrete compressive stresses under characteristic load combinations should stay below a code-specified limit to avoid longitudinal cracks. Finally, rebar stresses are limited to the smaller of two code-specified values, whereas tendon stresses should not exceed the smaller of 0.75fpu and fpy, to avoid unacceptable cracks or deformations at characteristic load combinations.
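The service-stage checks described above can be sketched as a small helper. This is a minimal illustration, not part of AutoPTbeam: the function and argument names are hypothetical, the crack-width limit is passed in by the caller because it depends on the exposure class, and the tendon stress limit (the smaller of 0.75fpu and fpy) is taken from the description of q0.75fpu later in the paper.

```python
def deflection_limit(span_mm: float) -> float:
    """Deflection limit L/250 at the quasi-permanent load stage (mm)."""
    return span_mm / 250.0


def tendon_stress_limit(f_pu: float, f_py: float) -> float:
    """Smaller of 0.75*f_pu and f_py (MPa); an assumption inferred from the text."""
    return min(0.75 * f_pu, f_py)


def service_checks(span_mm, deflection_mm, crack_width_mm,
                   crack_limit_mm, tendon_stress, f_pu, f_py):
    """Return a pass/fail flag for each service limit covered by this sketch."""
    return {
        # Camber is negative, so compare the magnitude of the deflection.
        "deflection": abs(deflection_mm) <= deflection_limit(span_mm),
        "crack_width": crack_width_mm <= crack_limit_mm,
        "tendon_stress": tendon_stress <= tendon_stress_limit(f_pu, f_py),
    }


# Values below reuse numbers quoted in the paper (L = 10,000 mm,
# f_pu = 1860 MPa, f_py = 1650 MPa); the responses are made up.
checks = service_checks(10_000.0, 35.0, 0.15, 0.2, 1300.0, 1860.0, 1650.0)
```

For the 10,000 mm span used in the design examples, the deflection limit evaluates to 40 mm and the tendon stress limit to 1395 MPa.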

At the ultimate stage, six outputs of forward designs are obtained. AutoPTbeam calculates the maximum nominal strength, rebar strains, tendon strains, and deflection ductility at the ultimate stage, providing clear insight into structural behavior under fracture loads.

In total, 33 input and output parameters are used to generate large datasets for training ANNs. The large datasets are used for AI-based forward designs (Section 4) and reverse designs (Section 5) of simply supported bonded pre-tensioned beams at all stages.

2.3. Generation of large structural datasets and network training

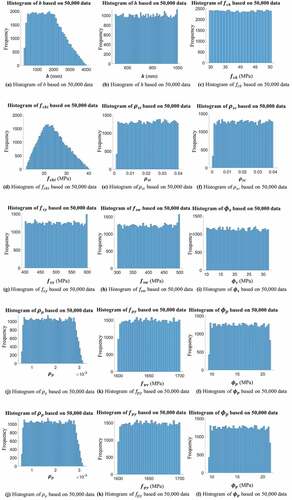

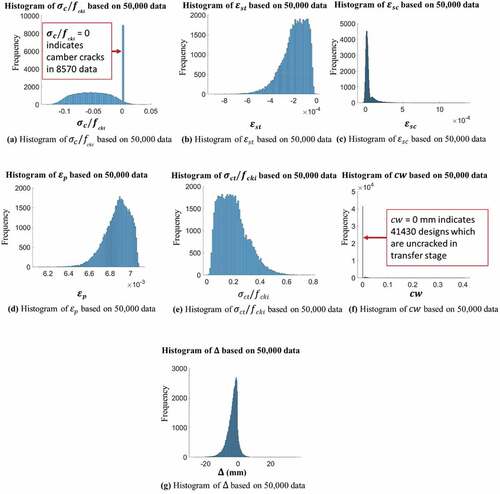

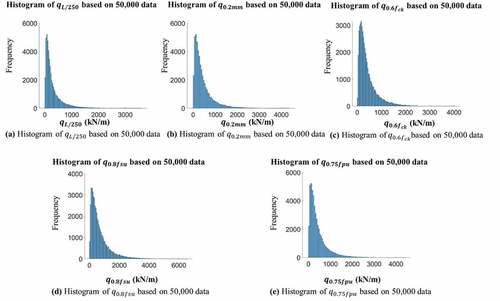

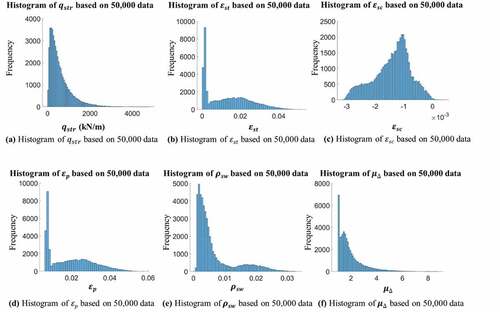

Table 3 and the accompanying histograms list the random variables, their corresponding ranges, and the data distributions of the large structural datasets. The AutoPTbeam algorithm shown in Figure 7(a) was developed by Nguyen and Hong (2021) for the forward design of bonded pre-tensioned beams. Figure 7(b) shows the flow chart for generating large structural datasets for training ANNs. The large structural datasets comprise the selected non-normalized fifteen inputs and eighteen outputs generated based on Figure 7(b).

Figure 6. Histogram of six outputs of an ultimate stage based on 50,000 data.

Figure 7. Generation of large datasets of bonded pre-tensioned beams for AI-based design (Nguyen and Hong 2021): (a) algorithm for designing bonded pre-tensioned beams (AutoPTbeam); (b) generation of large datasets.

Table 3. List of random variables and corresponding ranges of the big structural datasets.
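The data generation loop can be sketched as follows. This is only an illustration of the idea of sampling random inputs within prescribed ranges and passing them to a mechanics-based solver: the ranges below are hypothetical placeholders (the actual ranges are those of Table 3), only three of the fifteen inputs are shown, and `toy_solver` is a stand-in that has nothing to do with AutoPTbeam.

```python
import random

# Hypothetical sampling ranges for illustration only; see Table 3 for the real ones.
INPUT_RANGES = {
    "b": (300.0, 1500.0),      # beam width, mm (assumed range)
    "h": (400.0, 1500.0),      # beam depth, mm (assumed range)
    "rho_p": (0.0005, 0.005),  # tendon ratio (assumed range)
}


def sample_inputs(rng, ranges):
    """Draw one uniformly distributed input set within the given ranges."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}


def generate_dataset(solver, n_samples, ranges, seed=0):
    """Collect n_samples rows of inputs plus solver outputs.

    The solver returns None for an input set it cannot design; such sets are
    skipped, so a solver that always returns None would loop forever.
    """
    rng = random.Random(seed)
    rows = []
    while len(rows) < n_samples:
        inputs = sample_inputs(rng, ranges)
        outputs = solver(inputs)
        if outputs is None:
            continue
        rows.append({**inputs, **outputs})
    return rows


def toy_solver(inputs):
    """Placeholder for a mechanics-based solver (NOT AutoPTbeam)."""
    return {"area": inputs["b"] * inputs["h"]}


dataset = generate_dataset(toy_solver, n_samples=5, ranges=INPUT_RANGES, seed=1)
```

Fixing the seed makes the generated dataset reproducible, which is convenient when several networks are to be trained on the same data.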

Regression models are often evaluated using several common metrics, namely the Mean Absolute Error (MAE), Mean Absolute Percentage Error (MAPE), Root Mean Squared Error (RMSE), and Coefficient of Determination (R²) (Naser and Alavi 2020). The present paper employs the MAE and the correlation coefficient (R), calculated according to Equations (1) and (2), to preliminarily benchmark the performance of ANN models. However, the reliability of ANN models should only be concluded based on errors in practical designs. Engineers must always check the differences between ANN predictions and structural mechanics calculations. The present paper also suggests several methods to enhance design accuracy, such as adjusting the number of layers, adjusting the number of neurons, or using the CRS training scheme.

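$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|t_i - y_i\right| \tag{1}$$

$$R = \frac{\sum_{i=1}^{n}\left(t_i-\bar{t}\right)\left(y_i-\bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(t_i-\bar{t}\right)^2\,\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}} \tag{2}$$

where $n$ is the number of data samples, $t_i$ is the target value of the $i$-th sample, $y_i$ is the corresponding ANN prediction, and $\bar{t}$ and $\bar{y}$ are the mean values of the targets and predictions, respectively.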
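Assuming the standard definitions of the MAE and the Pearson correlation coefficient, the two benchmark metrics can be computed as in the following sketch. The function names are illustrative and the sample values are made up.

```python
import math


def mean_absolute_error(targets, predictions):
    """Average absolute difference between targets and predictions."""
    n = len(targets)
    return sum(abs(t - p) for t, p in zip(targets, predictions)) / n


def correlation_coefficient(targets, predictions):
    """Pearson correlation coefficient R between targets and predictions."""
    n = len(targets)
    mean_t = sum(targets) / n
    mean_p = sum(predictions) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(targets, predictions))
    var_t = sum((t - mean_t) ** 2 for t in targets)
    var_p = sum((p - mean_p) ** 2 for p in predictions)
    return cov / math.sqrt(var_t * var_p)


targets = [1.0, 2.0, 3.0, 4.0]
predictions = [1.1, 1.9, 3.2, 3.8]
mae = mean_absolute_error(targets, predictions)
r = correlation_coefficient(targets, predictions)
```

A value of R close to 1 together with a small MAE indicates a good fit on the test data, although, as stated above, design reliability still has to be judged from errors in practical designs.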

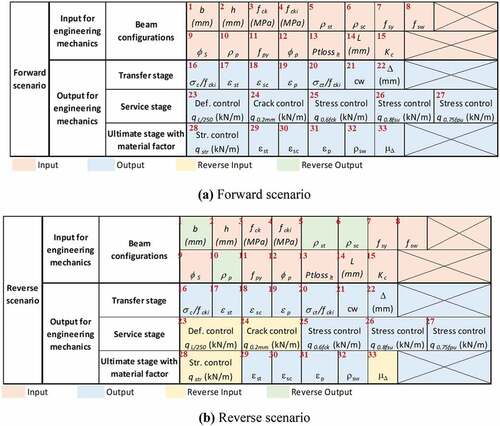

3. Design scenarios

One forward design and one reverse design are investigated. In the forward design, an ANN predicts eighteen outputs for a set of fifteen inputs. In the reverse scenario, four design parameters (b, ρst, ρsc, and ρp) are calculated on the output side when nominal capacities of pre-tensioned beams (qL/250, q0.2 mm, qstr, and μΔ) are prescribed on the input side. Here, q0.2 mm and μΔ represent the applied load at which the crack width reaches 0.2 mm and the deflection ductility ratio, respectively. Nominal capacities resisting an applied distributed load (qL/250) of 70 kN/m at the service load level (SLL), at which the deflection reaches L/250, and an applied distributed load (qstr) of 150 kN/m at the ultimate load level (ULL) are then calculated.
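Setting up the reverse scenario amounts to swapping columns between the input and output sides of the dataset. The sketch below illustrates this bookkeeping; the parameter names are ASCII stand-ins for the symbols in the text, and the input ordering is an assumption inferred from the box numbers quoted later (b in Box 1, ρst in Box 5, ρsc in Box 6, ρp in Box 10).

```python
# Fifteen forward inputs (Boxes 1 to 15); ordering is an assumption.
FORWARD_INPUTS = [
    "b", "h", "f_ck", "f_cki", "rho_st", "rho_sc", "f_sy", "f_sw",
    "phi_s", "rho_p", "f_py", "phi_p", "PTLoss_lt", "L", "K_c",
]

# The four forward outputs that are pre-assigned in the reverse scenario.
REVERSE_INPUTS = ["q_L250", "q_0.2mm", "q_str", "mu_delta"]

# The four forward inputs that become unknowns in the reverse scenario.
REVERSE_OUTPUTS = ["b", "rho_st", "rho_sc", "rho_p"]


def reverse_network_layout(forward_inputs, reverse_inputs, reverse_outputs):
    """Return (input names, output names) of the reverse network of Step 1."""
    remaining = [p for p in forward_inputs if p not in reverse_outputs]
    return remaining + list(reverse_inputs), list(reverse_outputs)


rev_in, rev_out = reverse_network_layout(
    FORWARD_INPUTS, REVERSE_INPUTS, REVERSE_OUTPUTS
)
```

With this layout the reverse network still has fifteen inputs (eleven ordinary inputs plus four pre-assigned capacities) and returns the four design parameters.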

4. Parallel training method (PTM)

4.1. Training accuracies and design tables

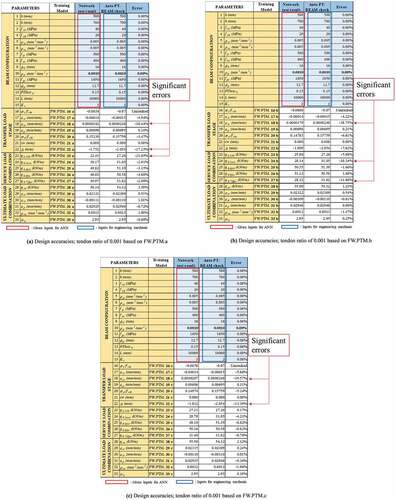

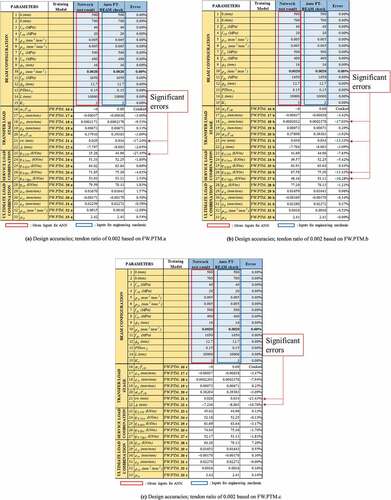

Table 4 shows a training summary of forward designs based on PTM. Each output parameter of the forward ANNs is mapped from the 15 input parameters separately, with 500 validation checks. The FW.PTM.a, FW.PTM.b, and FW.PTM.c models are based on 15 layers-15 neurons, 20 layers-20 neurons, and 25 layers-25 neurons, respectively. Each trained ANN is denoted as, for example, FW.PTM.16a, where FW.PTM means a forward ANN trained by PTM and 16a refers to the 16th output, σc/fcki. Training accuracies with the number of layers and neurons of forward designs based on PTM are summarized for the 18 output parameters, covering Outputs 16–22, Outputs 23–27, and Outputs 28–33.

Two designs with tendon ratios set to 0.001 and 0.002 are then performed, in which output parameters are obtained in Boxes 16 to 33 for the given input parameters in Boxes 1 to 15. Each design is performed with FW.PTM.a, FW.PTM.b, and FW.PTM.c, and the design accuracies are verified by the structural mechanics-based software AutoPTbeam. The largest errors for tendon ratios ρp of 0.001 and 0.002 are −19.57% and −21.43%, respectively, both for the upper rebar strain εst at the transfer stage when an ANN is trained based on FW.PTM.18c. Notably, design errors are reduced compared with those based on TED when networks are trained on each output parameter separately; however, the errors based on PTM are still significant. The CTS or CRS methods developed by Hong, Pham, and Nguyen (2021) and Hong (2021) can be used to improve design accuracy further. Lagrange-based optimizations, which demonstrate acceptable design accuracies for use in design applications, were also developed by Hong and Nguyen (2021).
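The defining feature of PTM, one network per output parameter with all inputs shared, can be sketched as below. This is a schematic only: a nearest-neighbour lookup is used as a toy stand-in for an ANN so that the example stays self-contained, and all names are hypothetical.

```python
def fit_nearest_neighbour(X, y):
    """Toy stand-in for training one ANN: predicts the target of the closest row."""
    def predict(x):
        closest = min(
            range(len(X)),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(X[i], x)),
        )
        return y[closest]
    return predict


def train_ptm(X, Y, output_names, fit=fit_nearest_neighbour):
    """Train one independent model per output column (parallel training)."""
    models = {}
    for j, name in enumerate(output_names):
        column = [row[j] for row in Y]  # targets of the j-th output only
        models[name] = fit(X, column)
    return models


def predict_ptm(models, x):
    """Evaluate every per-output model on the same input vector."""
    return {name: model(x) for name, model in models.items()}


# Made-up data: one input (tendon ratio) and two outputs.
X = [[0.001], [0.002]]
Y = [[1.0, 10.0], [2.0, 20.0]]
models = train_ptm(X, Y, ["output_16", "output_17"])
prediction = predict_ptm(models, [0.0019])
```

Because the models are independent, they can be trained in parallel, but no output can benefit from information carried by another output.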

Table 4. Training summary based on PTM for a forward design.

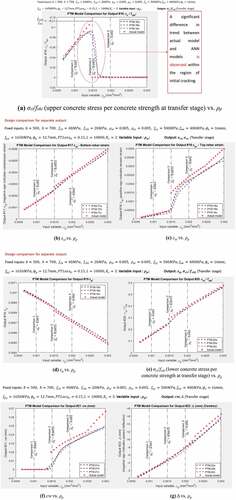

4.2. Design charts corresponding to ρp for forward design

The design charts are established based on the following pre-assigned input parameters: b = 500 mm, h = 700 mm, fck = 40 MPa, fcki = 20 MPa, ρst = 0.005, ρsc = 0.005, fsy = 500 MPa, fsw = 400 MPa, ϕs = 16 mm, fpy = 1650 MPa, ϕp = 12.7 mm, PTLosslt = 0.15, L = 10,000 mm, and Kc = 3. The output parameter is σc/fcki at the transfer stage.

In the design charts, design parameters (σc/fcki, εst, εsc, εp, and σct/fcki) are plotted on the Y-axis against the tendon ratio (ρp) on the X-axis. Here, σc/fcki (Output #16) represents the upper concrete stress normalized by the concrete strength at the transfer stage. The upper concrete is usually in tension during the transfer stage because the load acts upward. In actual concrete sections, tensile concrete stresses (Output #16 and Output #20) immediately fall to 0 on reaching the tensile concrete strength at the transfer stage (fcki), under the assumption that tensile concrete strength is lost after cracking. This assumption produces a discontinuity in tensile concrete sections, whereas a feed-forward ANN approximates the discontinuity with real-valued continuous functions. A significant difference in data tendency between tensile concrete sections and ANNs is therefore observed in the vicinity of initial cracking.

Design charts are also plotted based on the present design aids for εst, εsc, εp, and σct/fcki (Outputs #17, 18, 19, and 20, respectively) as functions of ρp. Design parameters (εst, εsc, εp, and σct/fcki) are obtained rapidly and accurately for a given tendon ratio (ρp). Plots of cw (Output #21) vs. ρp and Δ (Output #22) vs. ρp are also shown.

5. Reverse design based on artificial neural networks (ANNs)

5.1. Design based on PTM using deep neural networks (DNN)

5.1.1. Formulation of ANNs based on back-substitution (BS) applicable to reverse designs

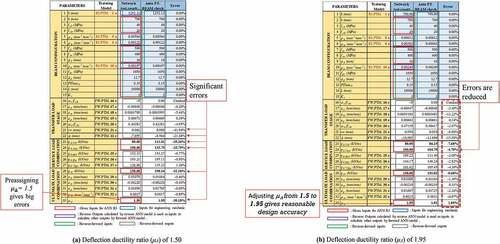

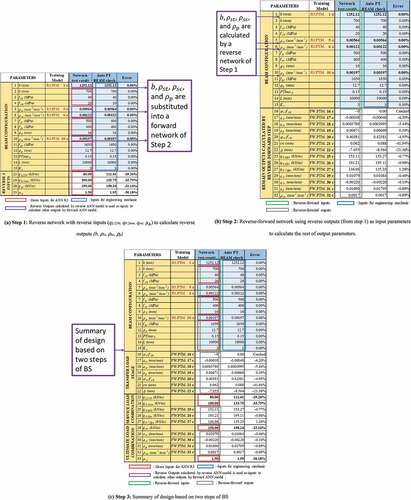

In the reverse scenario, design parameters (b, ρst, ρsc, and ρp, in green cells) targeting nominal capacities (qL/250, q0.2 mm, qstr, and μΔ, in yellow cells) are calculated on the output side of the reverse network of Step 1. Table 5 summarizes the training accuracy based on PTM. An ANN trained by R3.PTM.a based on 20 layers-20 neurons is used to calculate the design parameters (b, ρst, ρsc, and ρp) shown in Boxes 1, 5, 6, and 10 on the output side of Step 1.

Figure 12. Design results based on 20 layers-20 neurons for Step 1; qL/250 = 80 kN/m at SLL (at a deflection of L/250), qstr = 150 kN/m at ULL, and deflection ductility ratio (μΔ) of 1.50 at ULL.

Table 5. Training accuracies of a reverse scenario based on PTM; reverse network of Step 1.

An ANN with FW.PTM.c based on 40 layers-40 neurons is used for the forward network of Step 2, in which 18 outputs, including the reverse input parameters (qL/250, q0.2 mm, qstr, and μΔ, already prescribed in Boxes 23, 24, 28, and 33 in Step 1), are calculated in Boxes 16 to 33 on the output side of Step 2. The fifteen input design parameters, including b, ρst, ρsc, and ρp pre-calculated on the output side of the reverse network of Step 1, are used in Boxes 1 to 15 of the forward network of Step 2.
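The two-step back-substitution procedure can be sketched as a short pipeline. The networks are represented here by plain callables; `toy_reverse_net` and `toy_forward_net` are made-up stand-ins, not trained ANNs, and all names are hypothetical.

```python
def back_substitution(reverse_net, forward_net, fixed_inputs, reverse_inputs):
    """Run Step 1 (reverse network) and Step 2 (forward network).

    Returns the design parameters from Step 1, all forward outputs from
    Step 2, and (target, achieved) pairs for the pre-assigned quantities.
    """
    # Step 1: pre-assigned capacities plus ordinary inputs -> design parameters.
    design_params = reverse_net({**fixed_inputs, **reverse_inputs})
    # Step 2: back-substitute the design parameters into the forward network.
    outputs = forward_net({**fixed_inputs, **design_params})
    recovered = {name: (target, outputs[name])
                 for name, target in reverse_inputs.items()}
    return design_params, outputs, recovered


def toy_reverse_net(x):
    """Made-up reverse mapping: beam width from the target ultimate load."""
    return {"b": 4.0 * x["q_str"]}


def toy_forward_net(x):
    """Made-up forward mapping that is the exact inverse of toy_reverse_net."""
    return {"q_str": x["b"] / 4.0, "c_w": 0.05}


design, outputs, recovered = back_substitution(
    toy_reverse_net, toy_forward_net,
    fixed_inputs={"h": 700.0},
    reverse_inputs={"q_str": 150.0},
)
```

Comparing each target with the value recovered in Step 2 shows how consistently the pair of networks reproduces the pre-assigned quantities; verification against structural mechanics remains a separate check.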

5.1.2. Design accuracies

5.1.2.1. Adjusting reverse input parameters to enhance design accuracies

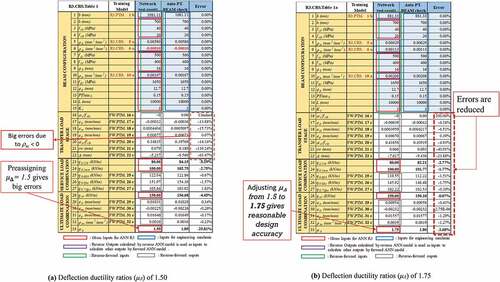

In the reverse scenario, the selected input parameters are h = 700 mm, fck = 40 MPa, fcki = 20 MPa, fsy = 500 MPa, fsw = 400 MPa, ϕs = 16 mm (diameter of rebar), fpy = 1650 MPa, ϕp = 12.7 mm (diameter of tendon), PTLosslt = 0.15, L = 10,000 mm, and Kc = 3, and the following reverse parameters are pre-assigned on the input side: qL/250 = 80 kN/m (SLL), q0.2 mm = 100 kN/m (SLL), qstr = 150 kN/m (ULL), and a deflection ductility ratio (μΔ) of 1.50.

When a deflection ductility ratio (μΔ) of 1.50 is used, the reverse network of Step 1 produces a beam width (b) of 1252.12 mm, a lower tensile rebar ratio (ρst) of 0.00564, a compressive rebar ratio (ρsc) of 0.00122, and a tendon ratio (ρp) of 0.00197 in Boxes 1, 5, 6, and 10 on the output side. Significant errors then arise in the forward network of Step 2, with the largest errors reaching −41.94% (0.062 mm vs. 0.088 mm) and −21.38% (−7.055 mm vs. −8.564 mm) for crack width and deflection (camber) at the transfer load limit state, respectively. These errors occur because the reverse input parameter, a deflection ductility ratio (μΔ) of 1.50 at ULL, is pre-assigned outside the data range in Step 1. When the deflection ductility ratio (μΔ) is adjusted from 1.50 to 1.95, the errors decrease: errors of crack width and deflection (camber) at the transfer load limit state reach −8.00% (0.050 mm vs. 0.054 mm) and 15.58% (−10.985 mm vs. −12.696 mm), respectively. The adjusted design parameters lead to the targeted nominal capacity resisting the applied distributed load (qL/250 = 80 kN/m) in Box 23 with an error of −7.68% (80 kN/m vs. 86.15 kN/m) when the deflection reaches L/250 at SLL, compared with an error of −39.26% for qL/250 when the deflection ductility ratio (μΔ) of 1.50 is used. The error of −0.68% (150 kN/m vs. 151.02 kN/m) for the nominal capacity of qstr = 150 kN/m in Box 28 is likewise improved compared with the error of −32.16% before the adjustment.
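The quoted percentages appear consistent with an error defined as the difference between the ANN value and the structural mechanics value, divided by the ANN value. This convention is inferred from the number pairs in the text rather than stated explicitly, so the sketch below should be read with that assumption in mind.

```python
def design_error_percent(ann_value: float, mechanics_value: float) -> float:
    """Relative error (%) of an ANN result against a structural mechanics result.

    Assumed convention: (ANN - mechanics) / ANN * 100.
    """
    return (ann_value - mechanics_value) / ann_value * 100.0


# Pairs quoted in the text, as (ANN value, structural mechanics value).
crack_width_error = design_error_percent(0.062, 0.088)   # about -41.94 %
ultimate_load_error = design_error_percent(150.0, 151.02)  # about -0.68 %
```

A negative value under this convention means that the structural mechanics result exceeds the value obtained from the ANN.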

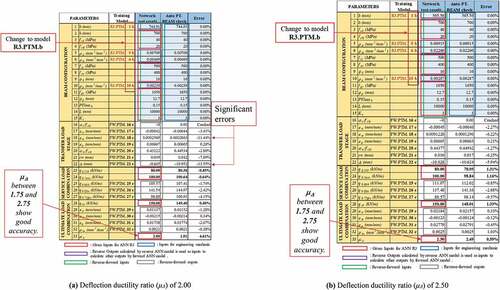

5.1.2.2. Influence of layer and neurons on design accuracies

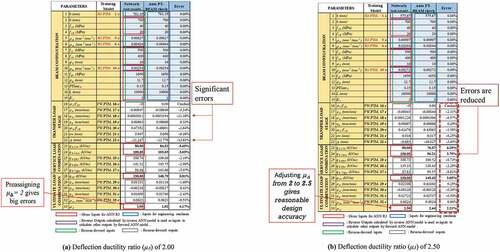

The number of hidden layers and neurons influences the accuracies of reverse designs. ANNs with R3.PTM.a, R3.PTM.b, and R3.PTM.c, based on 20 layers-20 neurons, 30 layers-30 neurons, and 40 layers-40 neurons, respectively, are implemented in the reverse network of Step 1, where the deflection ductility ratio (μΔ) of 2.00 is adjusted to 2.50. An ANN with FW.PTM.c based on 40 layers-40 neurons is used in the forward network of Step 2 to obtain 18 outputs in Boxes 16 to 33. The best design accuracies are found with ANNs using R3.PTM.c, based on 40 layers-40 neurons, with a deflection ductility ratio (μΔ) of 2.50. The network parameters depend on the number of hidden layers and neurons. Input conflicts should also be removed by selecting reversely pre-assigned input parameters that satisfy structural mechanics.

Figure 14. Design results based on 20 layers-20 neurons for Step 1; qL/250 = 80 kN/m at SLL (at a deflection of L/250), qstr = 150 kN/m at ULL, and deflection ductility ratio (μΔ) of 2.00 and 2.50 at an ultimate load limit state (ULL).

5.2. Design based on CRS using deep neural networks (DNN)

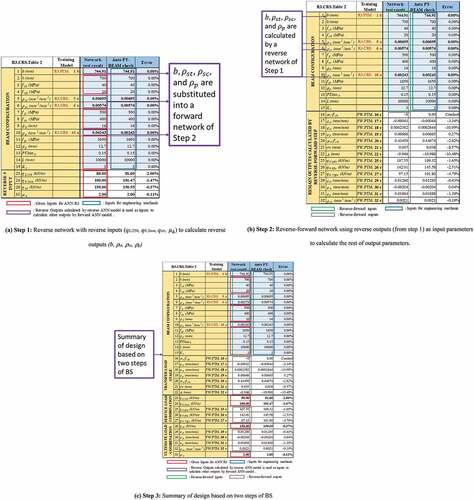

5.2.1. Formulation of ANNs based on back-substitution (BS) applicable to reverse designs

Readers are referred to Hong (2021) for a review of the CRS method, which is used in training the reverse network. The sequence of training networks should be determined based on feature scores. Reverse inputs (qL/250, q0.2 mm, qstr, and μΔ) are pre-assigned in the reverse network of Step 1 to calculate reverse outputs (b, ρst, ρsc, and ρp), as shown in Reverse Scenario 3 of Table 6, where training accuracies based on CRS with deep neural networks (DNN) are presented.

Table 6. Training sequence of a reverse scenario based on CRS (DNN).

Unlike the PTM described in Section 5.1, training a reverse ANN in Step 1 using the CRS method begins with beam width (b), because b can be trained without other output parameters, such as ρst, ρsc, and ρp, as feature indexes. Important feature indexes affecting beam width (b) include h (5.77), L (10.92), qL/250 (4.14), q0.2 mm (19.05), and qstr (3.04), without ρst and ρp. The numbers in parentheses are feature scores selected using the NCA method (Hong and Pham 2021). Beam width (b) is then used as a feature index to train ρst, resulting in higher training accuracy for ρst. Similarly, beam width (b) and ρst are used as feature indexes to train ρp, followed by training ρsc using all the other output parameters, beam width (b), ρst, and ρp, as feature indexes. By contrast, output parameters located on the output side cannot be used to train other output parameters when using the TED training method (Hong 2021).
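The chained sequence described above (b, then ρst, then ρp, then ρsc, each using the previously obtained outputs as extra features) can be sketched as follows. This is a structural illustration only: the sketch feeds every available feature to each model, whereas the paper selects features by their NCA scores, and the `fit` callable is a hypothetical stand-in for ANN training.

```python
CRS_SEQUENCE = ["b", "rho_st", "rho_p", "rho_sc"]  # training order from the text


def train_crs(rows, base_features, sequence, fit):
    """Train one model per target, appending each target to the feature list."""
    models = []
    features = list(base_features)
    for target in sequence:
        X = [[row[f] for f in features] for row in rows]
        y = [row[target] for row in rows]
        models.append((target, list(features), fit(X, y)))
        features.append(target)  # later targets may use this output as a feature
    return models


def predict_crs(models, inputs):
    """Predict targets in sequence, feeding earlier predictions to later models."""
    known = dict(inputs)
    for target, features, model in models:
        known[target] = model([known[f] for f in features])
    return {target: known[target] for target, _, _ in models}


def toy_fit(X, y):
    """Hypothetical trainer: ignores the data and returns the sum of features."""
    return lambda x: sum(x)


rows = [{"q": 1.0, "b": 1.0, "rho_st": 2.0, "rho_p": 4.0, "rho_sc": 8.0}]
models = train_crs(rows, ["q"], CRS_SEQUENCE, toy_fit)
chained = predict_crs(models, {"q": 1.0})
```

In contrast to PTM, the models must be evaluated in the training order, because each one depends on the outputs predicted before it.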

5.2.2. Design accuracies

The lower rebar ratio (ρst) has a feature score of 18.70 on ρp, whereas the upper rebar ratio (ρsc) is influenced by the lower tensile rebar ratio (ρst) and tendon ratio (ρp) with feature scores of 11.35 and 3.74, respectively. In the back-substitution method, ANNs with R3.CRS.a are trained based on 20 layers-20 neurons for the reverse network of Step 1, whereas an ANN with FW.PTM.c based on 40 layers-40 neurons is used for the forward network of Step 2, in which 18 outputs, including the reverse input parameters (qL/250, q0.2 mm, qstr, and μΔ, already reversely prescribed in Boxes 23, 24, 28, and 33 in Step 1), are calculated in Boxes 16 to 33. Note that the ANN for beam width b is trained based on R3.PTM.b with 30 layers-30 neurons.

Figure 17. Design accuracies of a reverse scenario based on CRS (DNN); qL/250 = 80 kN/m at SLL (at a deflection of L/250), qstr = 150 kN/m at ULL, deflection ductility ratio (μΔ) of 2.00 at ULL; 20 layers-20 neurons for Step 1.

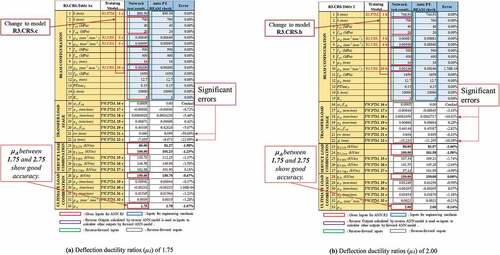

In these designs, qL/250 = 80 kN/m at a deflection of L/250 at SLL and qstr = 150 kN/m at ULL are reversely pre-assigned on the input side. Design accuracies based on the reversely pre-assigned deflection ductility ratio (μΔ) of 2.00, shown in Figure 17, are acceptable. Design accuracies based on a reversely pre-assigned deflection ductility ratio (μΔ) of 1.75, shown in Figure 18, are also acceptable, whereas those based on a pre-assigned deflection ductility ratio (μΔ) of 1.50 yield errors that are unacceptable for use in design practice. Input conflicts occur when the deflection ductility ratio (μΔ) is 1.50. The deflection ductility ratio (μΔ) is therefore adjusted from 1.50 to 1.75, as shown in Figure 18, to lie within the range of the large datasets.
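A simple pre-check of the reversely pre-assigned inputs against the range covered by the data can be sketched as below. The 1.75 to 2.75 interval for μΔ is the range the paper reports as giving good accuracy; the function name and dictionary layout are hypothetical, and a range check of this kind is only a necessary condition, since input conflicts can also arise from combinations of individually admissible values.

```python
def out_of_range_inputs(reverse_inputs, data_ranges):
    """Return {name: nearest in-range value} for inputs outside the data range."""
    suggestions = {}
    for name, value in reverse_inputs.items():
        lower, upper = data_ranges[name]
        if not lower <= value <= upper:
            # Clamp to the closest bound of the data range.
            suggestions[name] = min(max(value, lower), upper)
    return suggestions


DATA_RANGES = {"mu_delta": (1.75, 2.75)}  # range reported in the paper

adjusted = out_of_range_inputs({"mu_delta": 1.50}, DATA_RANGES)
unchanged = out_of_range_inputs({"mu_delta": 2.00}, DATA_RANGES)
```

For the example in the text, a pre-assigned μΔ of 1.50 is flagged and moved to 1.75, whereas 2.00 passes unchanged.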

Figure 18. Design accuracies of a reverse scenario based on CRS (DNN); qL/250 = 80 kN/m at SLL (at a deflection of L/250), qstr = 150 kN/m at ULL, deflection ductility ratio (μΔ) of 1.50 adjusted to 1.75 at ULL; 20 layers-20 neurons for Step 1.

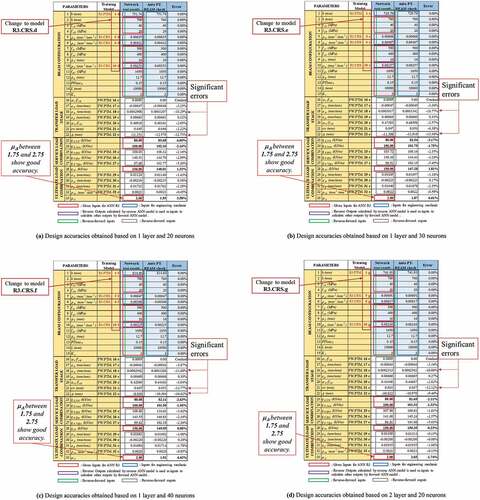

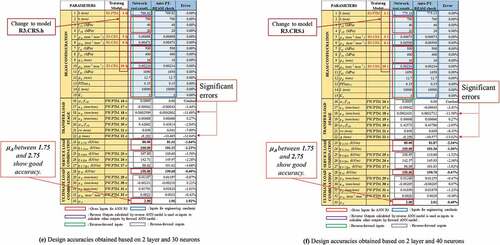

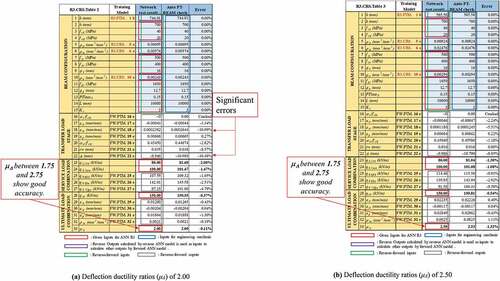

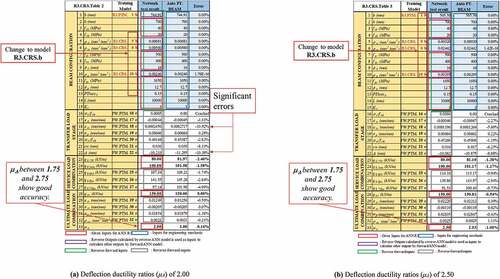

Figures 19 to 21 investigate the influence of the number of layers and neurons used for CRS in Step 1 on the design accuracies of ANNs for a reverse design. For the reverse networks of Step 1, ANNs with R3.CRS.a, R3.CRS.b, and R3.CRS.c are trained using 20 layers-20 neurons, 30 layers-30 neurons, and 40 layers-40 neurons, respectively, for reversely pre-assigned deflection ductility ratios (μΔ) of 2.00 and 2.50. When FW.PTM.c is used with R3.CRS.a, R3.CRS.b, and R3.CRS.c, similar design accuracies are found among the three networks. For the reverse network of Step 1 trained based on 40 layers-40 neurons (Figure 21), the largest error is −9.32% (91.98 kN/m vs. 100.56 kN/m calculated based on structural mechanics) for q0.75fpu, which represents the nominal strength when tendon stresses reach the smaller of 0.75fpu and fpy. The next largest error, for deflection (camber) at the transfer load limit state, reaches −7.27% (−9.896 mm vs. −10.616 mm calculated using structural mechanics). Good accuracies are obtained in all three designs when deflection ductility ratios (μΔ) within the range between 1.75 and 2.75 are pre-assigned. Fewer input conflicts occur with a deflection ductility ratio (μΔ) of 2.50 than with 2.00.

Figure 19. Design accuracies of a reverse scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.00, 2.50 at ULL; 20 layers-20 neurons for Step 1.

Figure 20. Design accuracies of a reverse scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.00, 2.50 at ULL; 30 layers-30 neurons for Step 1.

Figure 21. Design accuracies of a reverse scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.00, 2.50 at ULL; 40 layers-40 neurons for Step 1.

It should be noted that the networks used in the reverse network of Step 1 can be replaced by networks trained with CRS to improve design accuracy.

5.2.3. Over-fitting

Because over-fitting occurs during the training of ANNs, the design accuracies obtained using R3.CRS.c (40 layers-40 neurons) are slightly lower than those obtained using R3.CRS.a (20 layers-20 neurons) and R3.CRS.b (30 layers-30 neurons). Design accuracies with 20 layers-20 neurons are comparable to those with 30 layers-30 neurons.

5.2.4. Design charts

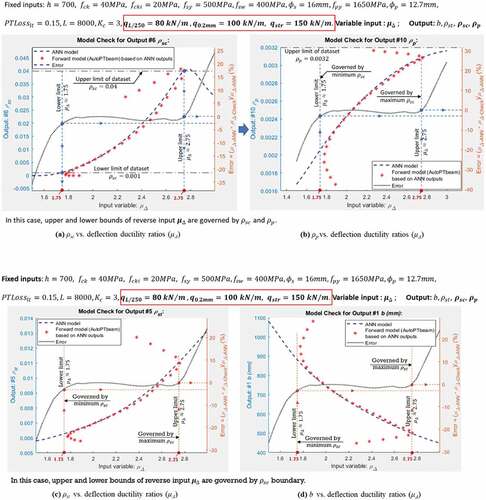

The reciprocal design charts present the design parameters ρsc, ρp, ρst, and b as functions of the deflection ductility ratio (μΔ). The design parameters ρsc, ρp, ρst, and b are determined accurately for deflection ductility ratios (μΔ) between 1.75 and 2.75, indicating that the upper and lower bounds of the deflection ductility ratio (μΔ) are governed by ρsc, ρp, ρst, and b. The design charts for determining ρsc, ρp, ρst, and b are therefore constructed within the bounds of deflection ductility ratios (μΔ) between 1.75 and 2.75. Errors increase rapidly outside this region, in which AutoPTbeam results also diverge. The charts are useful for calculating the design parameters ρsc, ρp, ρst, and b for specified deflection ductility ratios (μΔ) between 1.75 and 2.75.

5.2.5. Design examples

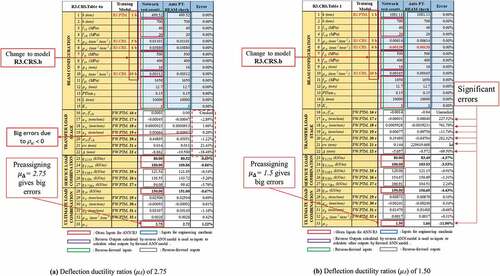

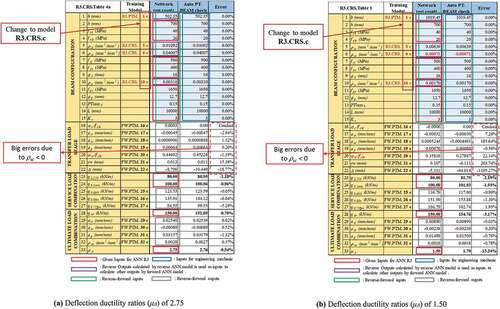

Figures 23 to 27 show additional design examples for a reverse scenario based on CRS (DNN) that investigate the influence of the number of layers and neurons for Step 1 on design accuracies. In Figures 23 and 24, ANNs with R3.CRS.b and R3.CRS.c are trained using 30 layers-30 neurons and 40 layers-40 neurons, respectively, for the reverse networks of Step 1 when deflection ductility ratios (μΔ) of 2.75 and 1.50 at ULL are reversely pre-assigned based on qL/250 = 80 kN/m at SLL and qstr = 150 kN/m at ULL. ANNs with FW.PTM.c based on 40 layers-40 neurons are used for the forward network of Step 2, in which 18 outputs, including the reverse input parameters (qL/250, q0.2 mm, qstr, and μΔ, already reversely prescribed in Boxes 23, 24, 28, and 33 in Step 1), are calculated in Boxes 16 to 33. However, design accuracies are not acceptable because the pre-assigned deflection ductility ratios (μΔ) are not within the range between 1.75 and 2.75.

Figure 23. Design accuracies of Reverse Scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.75, 1.50 at ULL; 30 layers-30 neurons for Step 1.

Figure 24. Design accuracies of Reverse Scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.75, 1.50 at ULL; 40 layers-40 neurons for Step 1.

Figure 25. Design accuracies of Reverse Scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.50, 3.00 at ULL; 30 layers-30 neurons for Step 1.

Figure 26. Design accuracies of Reverse Scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 2.50, 3.00 at ULL; 40 layers-40 neurons for Step 1.

Figure 27. Design accuracies of Reverse Scenario based on CRS (DNN); qL/250 = 80 kN/m at a deflection of L/250 at SLL, qstr = 150 kN/m at ULL, and deflection ductility ratios (μΔ) of 1.75, 2.00 at ULL; 40 layers-40 neurons and 30 layers-30 neurons for Step 1.

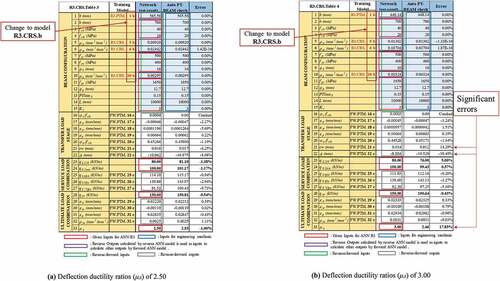

In , ANNs with R3.CRS.b and R3.CRS.c are trained using 30 layers-30 neurons and 40 layers-40 neurons, respectively, for the reverse networks of Step 1 when deflection ductility ratios (μΔ) of 2.50 and 3.00 at ULL are reversely pre-assigned together with qL/250 = 80 kN/m at SLL and qstr = 150 kN/m at ULL. ANNs with FW.PTM.c based on 40 layers and 40 neurons are used to train the forward network of Step 2, in which 18 outputs, including the reverse input parameters (qL/250, q0.2 mm, qstr, and μΔ, which are already reversely prescribed in Boxes 23, 24, 28, and 33 in Step 1), are calculated in Boxes 16 to 33 as shown in . Design accuracies based on the pre-assigned deflection ductility ratio (μΔ) of 2.50 are improved because this ratio is within the range between 1.75 and 2.75, whereas design accuracies based on the pre-assigned deflection ductility ratio (μΔ) of 3.00 are not improved as much. This is because the ductility ratio (μΔ) of 3.00 falls outside the range between 1.75 and 2.75 as shown in .

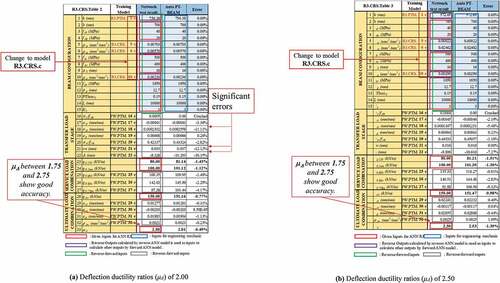

In ), ANNs with R3.CRS.c and R3.CRS.b are trained based on 40 layers-40 neurons and 30 layers-30 neurons, respectively, for the reverse networks of Step 1. The deflection ductility ratios (μΔ) of 1.75 at ULL for ) and 2.00 at ULL for ) are reversely pre-assigned together with qL/250 = 80 kN/m at SLL and qstr = 150 kN/m at ULL. ANNs with FW.PTM.c based on 40 layers and 40 neurons are implemented in training the forward network of Step 2, in which 18 outputs, including the reverse input parameters (qL/250, q0.2 mm, qstr, and μΔ, which are already reversely prescribed in Boxes 23, 24, 28, and 33 in Step 1), are calculated in Boxes 16 to 33 as shown in ). Design accuracies based on the pre-assigned deflection ductility ratio (μΔ) of 2.00 shown in ) are improved because the ductility ratio (μΔ) of 2.00 is within the range between 1.75 and 2.75, whereas design accuracies based on the ductility ratio (μΔ) of 1.75 shown in ) are not improved as much. This is due to the ductility ratio (μΔ) of 1.75 being on the data region’s boundary, as shown in .

5.3. Design based on CRS using shallow neural networks (SNN)

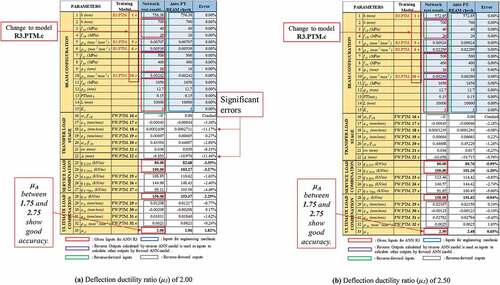

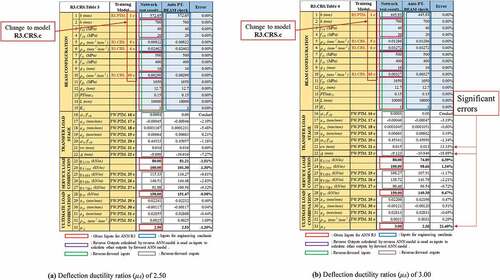

presents training accuracies of a reverse scenario based on CRS using three types of deep and six types of shallow layers implemented in obtaining the reverse outputs (b, ρst, ρsc, and ρp) in the reverse networks (based on DNN and SNN) of Step 1. The sequence of the training networks determined for deep layers is also used for shallow networks as can be seen in . The reverse output parameter, beam width b, is obtained first in Box 1 of ) based on R3.CRS.d. The rest of the reverse output parameters, ρst, ρsc, and ρp, based on R3.CRS.d are then obtained in Boxes 5, 6, and 10 of ), respectively. R3.CRS.a to R3.CRS.i, which use three types of deep and six types of shallow layers shown in , are tested to identify R3.CRS.d as the network that produces the best design accuracies for b, ρst, ρsc, and ρp. Deep ANNs trained by R3.PTM.a to R3.PTM.c, based on 20 to 40 hidden layers with 20 to 40 neurons, and shallow ANNs trained by R3.PTM.d to R3.PTM.i, based on one and two hidden layers with 20 to 40 neurons, are used to map input parameters to beam width b.

Table 7. Training accuracies of a reverse scenario based on deep and shallow ANNs; training accuracies obtained by mapping b based on PTM and ρst,ρsc, and ρp based on CRS.

Similarly, deep ANNs trained by R3.CRS.a to R3.CRS.c based on 20 to 40 hidden layers – 20 to 40 neurons and shallow ANNs trained by R3.CRS.d to R3.CRS.i based on 1 and 2 hidden layers – 20 to 40 neurons are used to map input parameters to the rest of the reverse output parameters, ρst, ρsc, and ρp, respectively. For example, R3.CRS.10e represents an ANN trained based on 1 hidden layer – 30 neurons to obtain ρp in the reverse network of Step 1 as shown in . ANNs with FW.CRS.c are implemented in determining 18 outputs in the forward network of Step 2 as shown in Boxes 16 to 33 of .

A subset amounting to 15% of the total large dataset, which is not used to train the networks, is used to verify training accuracies. Users can, however, specify a different dataset ratio for verification. ) investigate the impact of the number of hidden layers and neurons implemented in the Step 1 reverse networks on design accuracies obtained in the Step 2 forward networks. When using shallow ANNs trained by R3.CRS.d to R3.CRS.i, design accuracies are similar to those obtained by deep ANNs trained by R3.CRS.a to R3.CRS.c, implying that ANNs trained with the CRS method in the reverse network of Step 1 can determine accurate reverse outputs (b, ρst, ρsc, and ρp) with both deep and shallow layers as long as enough neurons are used. However, design accuracies depend heavily on the ranges of the datasets. Pre-assigning a deflection ductility ratio (μΔ) outside the range between 1.75 and 2.75 causes significant errors as shown in . The reversely pre-assigned input parameters should therefore be adjusted to lie within the range between 1.75 and 2.75 to reduce design errors.
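The verification split and the data-range check described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `split_holdout` and `in_data_range`, the sample count of 1000, and the fixed random seed are hypothetical, and the range of 1.75 to 2.75 for μΔ is taken from the discussion in the text.

```python
import random
from typing import List, Tuple


def split_holdout(n_samples: int, holdout_ratio: float = 0.15,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle sample indices and reserve a fraction for verification only."""
    if not 0.0 < holdout_ratio < 1.0:
        raise ValueError("holdout_ratio must be strictly between 0 and 1")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_holdout = round(n_samples * holdout_ratio)
    # The first n_holdout shuffled indices are never seen during training.
    return indices[n_holdout:], indices[:n_holdout]


def in_data_range(value: float, lower: float, upper: float) -> bool:
    """Return True when a pre-assigned value lies inside the data range."""
    return lower <= value <= upper


# Assumed range of deflection ductility ratios covered by the datasets.
MU_DELTA_RANGE = (1.75, 2.75)

train_idx, holdout_idx = split_holdout(1000)  # hypothetical dataset size
print(len(train_idx), len(holdout_idx))

# Ratios used in the design examples of this section.
for mu in (1.50, 2.00, 2.50, 3.00):
    status = "inside" if in_data_range(mu, *MU_DELTA_RANGE) else "outside"
    print(f"mu_delta = {mu:.2f}: {status} the data range")
```

A check of this kind, run before the reverse network is queried, flags pre-assigned targets for which the trained networks would have to extrapolate.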

In , design accuracies are compared with those based on structural calculations. Acceptable design accuracies are found as shown in ), where a deflection ductility ratio (μΔ) of 2.00 is reversely pre-assigned on an input side. Errors of upper rebar strains (εsc) and deflection (camber) at the transfer load limit state range from −10.77% to −11.68% and from −9.46% to −16.62%, respectively, when a reverse scenario is designed based on ANNs trained by R3.PTM.d, R3.CRS.e, R3.CRS.f, R3.CRS.g, R3.CRS.h, and R3.CRS.i with 1 layer – 20 neurons as shown in ), respectively, where a deflection ductility ratio (μΔ) of 2.00 is reversely pre-assigned.
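The comparison between ANN results and structural calculations can be expressed as a signed percentage error. The sketch below assumes the error is measured relative to the structural calculation, so that a negative value means the ANN underestimates the reference. This sign convention, the function name `percent_error`, and the sample values are assumptions for illustration and are not taken from the paper.

```python
def percent_error(predicted: float, reference: float) -> float:
    """Signed error of an ANN prediction relative to a reference value, in %.

    Assumed convention: (predicted - reference) / reference * 100.
    """
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return (predicted - reference) / reference * 100.0


# Hypothetical values: an ANN-predicted quantity and the corresponding
# value from a structural calculation.
ann_value = 90.0
structural_value = 100.0
print(f"error = {percent_error(ann_value, structural_value):.2f}%")
```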

5.4. Comparison of design based on CRS with those based on PTM

) based on deep neural networks trained by R3.CRS.b (30 layers-30 neurons for the reverse network of Step 1) shows higher design accuracies than those obtained in ) based on ANNs trained by R3.PTM.c (40 layers-40 neurons) and ) based on shallow neural networks trained by R3.CRS.h (2 layers-30 neurons) when a deflection ductility ratio (μΔ) of 2.00 is reversely pre-assigned on an input-side.

6. Conclusions

This study demonstrates how to design pre-tensioned concrete beams based on ANNs. To establish reverse design scenarios, large input and output datasets are generated. For engineers, reverse designs with 15 input and 18 output parameters are proposed using ANN-trained reverse-forward networks. The main findings are as follows.

(1) Two-step networks are formulated to solve reverse design scenarios. In the reverse network of Step 1, reverse output parameters are determined, which are then used as input parameters in the forward network of Step 2 to determine the design parameters.

(2) TED, PTM, and CRS can be used for both the reverse and forward networks with selected training parameters such as the number of hidden layers, neurons, and validation checks. Extra input features can be selected from the output-side when training ANNs based on CRS, which is not possible with TED, in which all inputs are mapped to the entire outputs simultaneously. For additional explanations of training methods such as CRS and TED, readers are referred to (Hong 2021) and (Hong 2019).

(3) Selection of training networks depends on many parameters such as feature scores, the volume of datasets, and types of big datasets. In particular, when the volume of datasets is insufficient, deep neural networks (DNN) provide lower design accuracies than shallow neural networks (SNN). In this study, deep neural networks trained with CRS outperform shallow neural networks trained with CRS and ANNs trained with PTM for the reverse network in Step 1.

(4) ANNs trained on reverse datasets generated from pre-tensioned concrete beams are adequate to produce acceptable design accuracies for use in practical designs. Design tables derived from reverse designs can be extended to plot design charts that connect all design parameters, so that entire designs can be completed in a single pass. The reverse design facilitates the rapid identification of design parameters, helping engineers make fast decisions based on acceptable accuracies.
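The two-step procedure in finding (1) and the use of output-side quantities as extra input features in finding (2) can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' networks: `reverse_step` and `forward_step` are hypothetical names, the lambda functions are toy stand-ins for trained ANNs with made-up formulas, and only two reverse outputs (b and ρst) and one forward output (μΔ) are included.

```python
import math
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]
Model = Callable[[Features], float]


def reverse_step(pre_assigned: Features,
                 chain: List[Tuple[str, Model]]) -> Features:
    """Step 1: predict reverse outputs one at a time, in a fixed sequence.

    Each predicted value is added to the feature set, so later models in
    the chain can use earlier predictions as extra input features.
    """
    features = dict(pre_assigned)
    reverse_outputs: Features = {}
    for name, model in chain:
        value = model(features)
        reverse_outputs[name] = value
        features[name] = value
    return reverse_outputs


def forward_step(design_inputs: Features,
                 models: Dict[str, Model]) -> Features:
    """Step 2: compute design outputs from ordinary forward inputs."""
    return {name: model(design_inputs) for name, model in models.items()}


# Toy stand-ins for trained networks (hypothetical formulas).
toy_chain: List[Tuple[str, Model]] = [
    ("b", lambda f: 4.0 * f["q_str"]),             # uses pre-assigned input only
    ("rho_st", lambda f: f["mu_delta"] / f["b"]),  # also uses the predicted b
]
toy_forward: Dict[str, Model] = {
    "mu_delta": lambda f: f["rho_st"] * f["b"],
}

# Targets reversely pre-assigned on the input side (values from the examples).
targets = {"q_str": 150.0, "mu_delta": 2.0}

reverse_outputs = reverse_step(targets, toy_chain)
recomputed = forward_step(reverse_outputs, toy_forward)

# Design accuracy check: the forward step should reproduce the target.
round_trip_ok = math.isclose(recomputed["mu_delta"], targets["mu_delta"],
                             rel_tol=1e-9)
print(reverse_outputs, recomputed, round_trip_ok)
```

Comparing the recomputed targets with the pre-assigned ones mirrors how design accuracies are assessed in the examples: the closer the forward outputs are to the pre-assigned values, the more reliable the reverse outputs.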

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT 2019R1A2C2004965).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes on contributors

Won-Kee Hong

Dr. Won-Kee Hong is a Professor of Architectural Engineering at Kyung Hee University. Dr. Hong received his Master’s and Ph.D. degrees from UCLA, and he worked for Englekirk and Hart, Inc. (USA), Nihon Sekkei (Japan) and Samsung Engineering and Construction Company (Korea) before joining Kyung Hee University (Korea). He also has professional engineering licenses from both Korea and the USA. Dr. Hong has more than 30 years of professional experience in structural engineering. His research interests include a new approach to construction technologies based on value engineering with hybrid composite structures. He has provided many useful solutions to issues in current structural design and construction technologies as a result of his research that combines structural engineering with construction technologies. He is the author of numerous papers and patents both in Korea and the USA. Currently, Dr. Hong is developing new connections that can be used with various types of frames including hybrid steel–concrete precast composite frames, precast frames and steel frames. These connections would help enable the modular construction of heavy plant structures and buildings. He recently published a book titled “Hybrid Composite Precast Systems: Numerical Investigation to Construction” (Elsevier).

Manh Cuong Nguyen

Manh Cuong Nguyen is currently enrolled as a master’s candidate in the Department of Architectural Engineering at Kyung Hee University, Republic of Korea. His research interests include AI and precast structures.

Tien Dat Pham

Tien Dat Pham is currently enrolled as a Ph.D. candidate in the Department of Architectural Engineering at Kyung Hee University, Republic of Korea. His research interests include AI and precast structures.

Thuc Anh Le

Thuc Anh Le is currently enrolled as a master’s candidate in the Department of Architectural Engineering at Kyung Hee University, Republic of Korea. Her research interests include AI and precast structures.

References

- Alhassan, M. A., A. N. Ababneh, and N. A. Betoush. 2020. “Innovative Model for Accurate Prediction of the Transfer Length of Prestressing Strands Based on Artificial Neural Networks: Case Study.” Case Studies in Construction Materials 12: e00312. doi:10.1016/j.cscm.2019.e00312.

- CEN (European Committee for Standardization). 2004. “Eurocode 2: Part 1-1: General Rules and Rules for Buildings.” In Eurocode 2: Vol. BS EN 1992.

- Hong, W. K. 2019. Hybrid Composite Precast Systems: Numerical Investigation to Construction. Elsevier: Woodhead Publishing.

- Hong, W. K. 2021. Artificial intelligence-based Design of Reinforced Concrete Structures. Daega.

- Hong, W. K., and M. C. Nguyen. 2021. “AI-based Lagrange Optimization for Designing Reinforced Concrete Columns.” Journal of Asian Architecture and Building Engineering 1–15. doi:10.1080/13467581.2021.1971998.

- Hong, W. K., V. T. Nguyen, and M. C. Nguyen. 2021. “Artificial intelligence-based Noble Design Charts for Doubly Reinforced Concrete Beams.” Journal of Asian Architecture and Building Engineering 1–23. doi:10.1080/13467581.2021.1928511.

- Hong, W. K., M. C. Nguyen, and T. D. Pham. 2022. “Optimized Interaction P-M Diagram for Rectangular Reinforced Concrete Column Based on Artificial Neural Networks.” Journal of Asian Architecture and Building Engineering. doi:10.1080/13467581.2021.2018697.

- Hong, W. K., and T. D. Pham. 2021. “Reverse Designs of Doubly Reinforced Concrete Beams Using Gaussian Process Regression Models Enhanced by Sequence training/designing Technique Based on Feature Selection Algorithms.” Journal of Asian Architecture and Building Engineering 1–26. doi:10.1080/13467581.2021.1971999.

- Hong, W. K., T. D. Pham, and V. T. Nguyen. 2021. “Feature Selection Based Reverse Design of Doubly Reinforced Concrete Beams.” Journal of Asian Architecture and Building Engineering 1–25. doi:10.1080/13467581.2021.1928510.

- Khandel, O., M. Soliman, R. W. Floyd, and C. D. Murray. 2021. “Performance Assessment of Prestressed Concrete Bridge Girders Using Fiber Optic Sensors and Artificial Neural Networks.” Structure and Infrastructure Engineering 17 (5): 605–619. doi:10.1080/15732479.2020.1759658.

- Lee, K., S. Jeong, S. H. Sim, and D. H. Shin. 2019. “A Novelty Detection Approach for Tendons of Prestressed Concrete Bridges Based on A Convolutional Autoencoder and Acceleration Data.” Sensors (Switzerland) 19 (7). doi:10.3390/s19071633.

- MathWorks, (2022a). Deep Learning Toolbox: User's Guide (R2022a). Retrieved July 26, 2022 from: https://www.mathworks.com/help/pdf_doc/deeplearning/nnet_ug.pdf

- MathWorks, (2022b). Parallel Computing Toolbox: Documentation (R2022a). Retrieved July 26, from: https://uk.mathworks.com/help/parallel-computing/

- MathWorks, (2022c). Statistics and Machine Learning Toolbox: Documentation (R2022a). Retrieved July 26, from: https://uk.mathworks.com/help/stats/

- MathWorks, (2022d). MATLAB (R2022a)

- Mo, Y. L., and R. H. Han. 1995. “Investigation of Prestressed Concrete Frame Behavior with Neural Networks.” Journal of Intelligent Material Systems and Structures 6 (4): 566–573. doi:10.1177/1045389X9500600414.

- Naser, M. Z., and A. Alavi. 2020. “Insights into Performance Fitness and Error Metrics for Machine Learning.” arXiv preprint arXiv:2006.00887.

- Nguyen, M. C., and W. K. Hong. 2021. “Analytical Prediction of Nonlinear Behaviors of Beams post-tensioned by Unbonded Tendons considering Shear Deformation and Tension Stiffening Effect.” Journal of Asian Architecture and Building Engineering 1–22. doi:10.1080/13467581.2021.1908299.

- Slowik, O., D. Lehký, and D. Novák. 2021. “Reliability-based Optimization of a Prestressed Concrete Roof Girder Using a Surrogate Model and the double-loop Approach.” Structural Concrete 22 (4): 2184–2201. doi:10.1002/suco.202000455.

- Solhmirzaei, R., H. Salehi, V. Kodur, and M. Z. Naser. 2020. “Machine Learning Framework for Predicting Failure Mode and Shear Capacity of Ultra High Performance Concrete Beams.” Engineering Structures 224 (August): 111221. doi:10.1016/j.engstruct.2020.111221.

- Sumangala, K., and C. Antony Jeyasehar. 2011. “A New Procedure for Damage Assessment of Prestressed Concrete Beams Using Artificial Neural Network.” Advances in Artificial Neural Systems 2011: 1–9. doi:10.1155/2011/786535.

- Tam, C. M., T. K. L. Tong, T. C. T. Lau, and K. K. Chan. 2004. “Diagnosis of Prestressed Concrete Pile Defects Using Probabilistic Neural Networks.” Engineering Structures 26 (8): 1155–1162. doi:10.1016/j.engstruct.2004.03.018.

- Torky, A. A., and A. A. Aburawwash. 2018. “A Deep Learning Approach to Automated Structural Engineering of Prestressed Members.” International Journal of Structural and Civil Engineering Research, January 2018: 347–352. doi:10.18178/ijscer.7.4.347-352.

- Yang, S., and Y. Huang. 2021. “Damage Identification Method of Prestressed Concrete Beam Bridge Based on Convolutional Neural Network.” Neural Computing & Applications 33 (2): 535–545. doi:10.1007/s00521-020-05052-w.