ABSTRACT

The concept of meaningful human control has taken centre stage in the debate on autonomous weapons systems (AWS). While the precise meaning of the concept remains contested, there is growing convergence around the notion that humans should retain control over the use of force. In this paper, I investigate how political actors make sense of meaningful human control as a response to the increasing use of autonomous functions in weapons, and how these processes intersect with imaginations of future warfare. I argue that these diverse visions of human control contribute to either the legitimisation or the delegitimisation of certain types of AWS and ways of conducting future warfare. The paper analyses the international debate on autonomous weapons systems at the United Nations and shows how the relevant actors imagine meaningful human control as a response to AWS. These visions also influence which options for political regulation become thinkable and viable.

Introduction

The integration of Artificial Intelligence (AI) and robotics into militaries worldwide, enabling increasingly autonomous functions in weapons systems, has accelerated in recent years. The first potential use of such an autonomous weapons system (AWS) that could search for and attack targets without human intervention was documented in 2020 (United Nations 2021). The Turkish Kargu-2 loitering munition, an uncrewed aerial vehicle that flies over a designated area and crashes into pre-defined targets without further human input, possibly hunted down and attacked armed forces in Libya autonomously (Kallenborn 2021). While the autonomous capabilities of that system remain uncertain, developments in autonomous technologies are ongoing, and the Russian war against Ukraine has once again prompted reports of the use of such systems (Kallenborn 2022). Military systems that could identify, track and attack targets without being directly controlled by a human are on the rise. Questions about the ethical, legal and security-related challenges such autonomous weapons systems pose, how they might be used in future warfare, and the options for their political regulation and governance have attracted increasing academic attention (see the introductory article of this special issue). Even though it is not uncommon to regulate technologies that can be used for warfare, as the examples of nuclear power or chemical and biological agents demonstrate, the pre-emptive regulation or prohibition of specific technologies before they are fully developed or fielded is rather exceptional (Carpenter 2014, 104; Garcia 2015; Rosert and Sauer 2021). Nevertheless, AWS have been on the agenda of the United Nations Convention on Certain Conventional Weapons (CCW) since 2014. While the political deliberations on regulating AWS were formalised through the Group of Governmental Experts (GGE) in 2017, the development of automated and autonomous features in weapons continues largely unchecked.

The ongoing political and academic debate on AWS is characterised by contested definitions and by disagreement on how to address the legal, ethical and security challenges such systems raise by reducing human reasoning and decision-making capacities. One of the few areas of convergence seems to be the notion that humans must remain in control of the use of force in warfare (Boulanin et al. 2020; Crootof 2016; Ekelhof 2019). The primacy of human decision-making and responsibility was also captured as political consensus in the 11 Guiding Principles adopted by the GGE in 2019, the first substantive results of the debate (UN-CCW 2019). The concept of meaningful human control (MHC) has emerged as a response to the multiple challenges posed by the increasing automatisation and autonomisation of warfare. However, there is little agreement on what human control means conceptually or in practice, and even less on what makes human control meaningful (International Panel on the Regulation of Autonomous Weapons 2019; 2021).

Research on autonomous weapons systems and algorithmic warfare has increasingly examined how politics and autonomous technologies are co-constituted in discourses and practices (Amoore 2009; Bode and Huelss 2018; Suchman 2020). Less attention has been paid, however, to how the actors involved in the political process themselves make sense of MHC in the debate on autonomous weapons systems. How, then, do actors imagine (meaningful) human control over autonomous weapons systems, and which options for regulating future warfare become viable or untenable through these visions? This matters because “the emergence of new weapons systems with autonomous features can shape the way force is used and affects our way of understanding of what is appropriate” (Bode and Huelss 2022, 8), thereby legitimising certain kinds of violence and warfare in the future. In this article, I advance two arguments: 1) the discursive and practical ambiguity surrounding the concept of MHC allows more leeway in the concrete policy response; 2) by investigating how policymakers, practitioners, activists and scientists create meaning and reconcile competing visions of MHC, I show how these imaginings of human control contribute to the legitimisation or delegitimisation of certain types of autonomous weapons systems and ways of waging future war.

The article proceeds as follows. First, I present an overview of how the debate on autonomous weapons systems became internationally relevant and of the challenges associated with increasing autonomy in weapons systems. The second section introduces the conceptual framework, which contributes to the growing theoretical attention to imaginations and visions of international security and warfare. Drawing on literature on the algorithmic turn in security studies (Bellanova, Jacobsen, and Monsees 2020; Leese and Hoijtink 2019; Suchman 2020), and specifically on recent research on imaginaries in international security (Csernatoni 2022; Martins and Mawdsley 2021; McCarthy 2021) and AI narratives (Cave, Dihal, and Dillon 2020a), this section builds a framework for analysing the different visions and narratives of human control advanced in the political discourse at the GGE. Methodological considerations on how to research visions in discourses are then introduced. The subsequent analysis proceeds in three steps: 1) mapping the relevant actors in the debate of the GGE on LAWS; 2) identifying the prevalent visions, translated into narratives of meaningful human control, across the clusters of actors; and 3) an in-depth discourse analysis of how these narratives contribute to the legitimisation or delegitimisation of certain autonomous technologies and their use in future warfare. The article concludes that the ambiguity and vagueness of the concept also risk turning human control into a panacea that will either be rendered meaningless or pave the way for the discursive legitimisation of certain forms of algorithmic warfare and violence.

The international debate on autonomous weapons systems

The debate on how to regulate autonomous weapons systems took off in the early 2010s, with the International Committee for Robot Arms Control (ICRAC), a network of academics concerned with the potential problems of the military use of autonomy, the first to address the issue (Sharkey 2012; Asaro 2012). In 2012 the non-governmental organisation (NGO) Human Rights Watch took an interest in the topic and published the first report on the matter (Human Rights Watch 2012), and a year later it formed the Campaign to Stop Killer Robots together with other NGOs and civil society actors (Carpenter 2014; Rosert and Sauer 2021). After the Special Rapporteur on Extrajudicial Killings, Christof Heyns (2013), raised the issue at the UN Human Rights Council, governments agreed to hold informal multilateral expert talks at the UN CCW starting in 2014. At the 2016 CCW Review Conference, the states parties to the CCW decided to establish a formal open-ended Group of Governmental Experts (GGE), focused specifically on lethal autonomous weapons systems (LAWS)[1], to further the discussion (UN-CCW 2016). The GGE remains the only international forum to discuss the different dimensions of LAWS, and it is mandated to reach consensus recommendations for states parties to negotiate. Proposals for the form of an outcome document range from a code of conduct, a legally binding treaty or an additional protocol to the CCW to a reaffirmation that existing international humanitarian law (IHL) is sufficient to govern applications of autonomy in weapons systems.

One of the most fundamental problems to this day has been finding a common understanding of what an autonomous weapons system is, what its characteristics are and how to differentiate between levels of automation or autonomy. A generally agreed definition matters because it delineates precisely what is under discussion (Ekelhof 2017; Horowitz 2016). Part of the definitional problem is where to draw the line between potentially legal technologies, which could include existing weapons technologies with certain autonomous functions, and those deemed wholly incompatible with international legal and ethical norms. Many existing weapons systems already rely on autonomous functions, especially defensive close-in weapon systems, surface-to-air missile systems and the above-mentioned loitering munitions (Bode and Watts 2021; Boulanin and Verbruggen 2017). The focus on the critical functions of selecting and engaging targets without immediate human decision-making or intervention has become the foundation of the international debate (ICRC 2021; United States Department of Defense 2012). It also allows the discussion to concentrate on the human element in the use of force, and on how to conceptualise meaningful human control specifically over these critical functions.

Many scholars perceive the development of autonomous weapons as a challenge to the future security and stability of the international system. Developments in autonomy could lead to arms races and escalation, to accidental warfare due to machine error, and to a lower threshold for the use of force if states can spare the lives of their own soldiers (e.g. Altmann and Sauer 2017; Horowitz 2019). On a legal level, many legal experts and scholars debate whether autonomous systems would be able to adhere to the basic IHL principles of distinction, proportionality and military necessity (Crootof 2015; Egeland 2016; Garcia 2016). Legal and ethical considerations also grapple with responsibility and accountability for the actions of autonomous machines (Saxon 2022). A further ethical question concerns human dignity and whether machines should be able to make life-and-death decisions over human beings in the first place (Asaro 2012; Rosert and Sauer 2019; Sharkey 2019). In response to these ethical, legal and security policy challenges, the concept of meaningful human control has gained widespread attention. From the very beginning, the question of how to ensure (meaningful) human control has been central to both the academic and the political discussion on AWS. Introduced by the NGO Article 36 (2013), the concept has been the subject of heated debate in the political process at the GGE on LAWS, and it remains contested and subject to diverging interpretations by the actors involved.

The co-constitution of autonomous weapons systems and political regulation

The previous section has highlighted the uncertainty, ambiguities and diverging interpretations of the potential future developments and impacts of autonomous weapons systems. How we understand these technologies, how meaning is constructed and how knowledge is produced, has repercussions for which political and social options become viable and intelligible. As Schwarz (2021) points out, much of the public debate on autonomous weapons systems, within and outside the UN, takes a clearly instrumentalist view of these technologies. This perspective understands technologies as mere instruments or tools in the hands of technologically advanced countries, and implies that humans retain full agency and decision-making capacities over their deployment. However, such accounts offer at best a partial picture, as they predominantly treat weapons technologies as black boxes separate from their social and political contexts (Drezner 2019). Theoretical developments at the intersection of critical security studies and science and technology studies (STS) emphasise that technologies are deeply embedded in social processes and in the contexts in which they are developed and applied. As sociotechnical systems, these technologies are co-constituted with international politics, not separate from it (Coker 2018; Leese and Hoijtink 2019).

Imaginaries and narratives of future warfare and autonomous technologies[2]

Reliance on imaginations and visions of technologies and future warfare characterises much of the academic and political debate on emerging technologies in the field of AWS. The uncertainty at the heart of the international system lends itself to imagining futures that influence present policymaking. Imagination is a very human way of dealing with unknowable futures and mitigating uncertainty. Politics is therefore always future-oriented, in that it attempts to regulate those imagined futures (Stevens 2016). Imagining the future is also an important (de-)legitimisation tactic, since these imaginations and ideas of the future influence what actors perceive as potential modes of warfare, as pathways for technological development and, finally, as viable options for dealing with them in the present: “visions of the future serve to regulate the present in historically unprecedented ways” (Stevens 2016, 95).

Imaginations are a constitutive element in the formation of social and political life. Scholars in security studies and International Relations (IR) are increasingly drawing on theoretical insights from STS on the concept of the imaginary.[3] Despite the variety of definitions and uses of the term “imaginary” (for an overview see McNeil et al. 2017), they share an understanding of imaginaries as the basis for collective interpretations of the social world. This is accompanied by a growing interest in IR in analysing how actors make sense of, or imagine, the future, and how this shapes present social and political life. The concept of sociotechnical imaginaries (STI) in particular has gained traction in the field of international security (Berling, Surwillo, and Sørensen 2022; Csernatoni 2022; Martins and Mawdsley 2021; McCarthy 2021). Because terms like (sociotechnical) imaginaries, visions, narratives and discourses are interrelated and often used interchangeably, some conceptual clarification is necessary. STI are produced through discourses and practices alike: they rely on language as well as material performance. They are “collectively held, institutionally stabilized, and publicly performed visions of desirable futures” (Jasanoff 2015, 4). Narratives play an important role in the formation of imaginaries, but they also portray a wider range of visions beyond the dominant vision that forms the STI (Cave, Dihal, and Dillon 2020b; McCarthy 2021). Visions, like narratives, are more diffuse and held by smaller groups. Processes of collective imaginary-building can turn a variety of visions into a stable and predominant imaginary (Csernatoni 2022; Hilgartner 2015).

Narratives lend themselves especially well to analysing different understandings of technology and how actors make sense of them in a messy world. The analysis of narratives as organising visions and specific representations of technology has been an integral part of the critical study of military technologies, e.g. in analyses of narratives around ballistic missile defence (Peoples 2010), of the gendered language of nuclear weapons (Cohn 1987) and, most recently, of imaginative thinking about AI technologies (Cave, Dihal, and Dillon 2020b). The way AI and autonomous technologies are portrayed and represented in narratives can help us understand their political, social and ethical consequences, as well as visions of meaningful human control as a response to these potential implications. These narratives also shape the socio-political possibilities for regulating AI-related technologies, and autonomous weapons systems specifically (Bareis and Katzenbach 2022). Narratives of violence and violent imaginaries can serve to examine how certain modes of warfare and uses of violent technologies are legitimised. Narratives produce and reproduce violence as social phenomena “which render violent conflict a legitimate and widely accepted mode of human conduct” (Jabri 1996, 1). Violent imaginaries are one such representation: they legitimise violence by making it imaginable in the first place (Demmers 2017; Schröder and Schmidt 2001). In this article, I analyse narratives as the discursive manifestation of visions that compete to become stabilised and collectively held imaginaries.

Methodological reflections

The study of imaginations and visions of future warfare and technologies lends itself particularly well to an interpretivist methodology that enquires how actors make sense of the social and political world (Berling, Surwillo, and Sørensen 2022, 8; Jasanoff 2015, 24; Schwartz-Shea and Yanow 2012). An interpretive approach allows me to analyse how visions of human control legitimise or delegitimise technological changes in the development of weapons with certain autonomous functions, as well as the social practices of using these military technologies in specific modes of warfare (Chenou 2019; Csernatoni 2022). Analysing the discourse on meaningful human control elucidates how contestation over the concept produces different narratives that can shape and manifest the imaginary of human control. While a collectively shared and stabilised imaginary of human control has not (yet) emerged, the narratives deployed shape it through ongoing reformulation and renegotiation.

Using critical discourse analysis (CDA), I seek to uncover the sense-making of sociotechnical futures and how it shapes socio-political order and options for regulation in the present (Bareis and Katzenbach 2022; Klimburg-Witjes 2023). CDA studies language as the conveyor of meanings and interpretations and focuses on how meaning-making reproduces structures of power and inequality. Its strength lies in situating discourse within the broader social and political structures in which actors are embedded (Fairclough, Mulderrig, and Wodak 2013; McCarthy 2021). It is therefore important to consider when the first visions were produced in the discourse, who was involved and how they saw the potential governance and regulation of new types of technology (Martins and Mawdsley 2021).

The analysis began with an abductive exploration of the primary sources of the GGE on LAWS debate to identify the main actors and their positions in the discursive space. The documents comprise official statements, working papers and written contributions by 34 actors in total, ranging from states to civil society organisations and other entities, from the start of the GGE in 2017 to 2022.[4] The actors were then clustered according to the narratives of meaningful human control they most frequently engage with and their position in the discourse relative to other actors. The next section presents the results of these first two steps of the analysis. Finally, the CDA unpacks the five prevalent narratives of meaningful human control, situates them within the wider political and academic contexts and examines how they are applied as (de-)legitimisation strategies. In this main section of the analysis, these diverging and somewhat contradictory narratives about human control over the use of force are illustrated with exemplary quotes from the respective actors. In this process of constant renegotiation of meaning, different visions of human control emerge that compete to become the overarching imaginary.

Meaningful human control between legitimisation and delegitimisation of autonomous weapons systems and future warfare

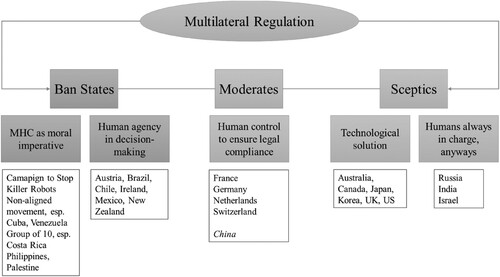

The mapping categorises the relevant actors, that is, those that contribute regularly and substantially to the deliberations at the GGE, into three groups: ban advocates, moderates and sceptics (based on Bode and Huelss 2022, 35). First, this mapping shows the diversity of positions on the possibilities of multilateral regulation of autonomous weapons. Second, it reveals a clear power divide: the countries more likely to develop these weapons technologies oppose regulation, while those more likely to be on the receiving end of them, especially countries from the Global South, advocate preventive regulation (Bode 2019). The figure above shows the categorisation of actors according to their imagination of MHC over autonomous weapons systems.

In the analysis of the international debate on AWS, I have identified five key narratives that exemplify the diverging visions of MHC. The group of states sceptical of a multilateral regime to regulate AWS can be divided into two subgroups according to the narratives of human control they employ. Countries like Australia, Canada, Japan, the Republic of Korea, the United Kingdom and the United States envision a technological solution to the issue of control over AWS. Others, most prominently Russia, Israel and India, imagine humans as always being in charge of the use of force in warfare, and therefore see no need to regulate AWS. Even though the sceptical countries never explicitly refer to MHC as a concept in the debate, instead using terms such as “human supervision”, “involvement” or “judgement”, or more generally “human command and control” (Australia et al. 2022), they do employ distinct narratives in response to MHC. At the other end of the spectrum, actors that advocate a legally binding prohibition of AWS can also be divided into two subgroups according to the narrative of MHC they place at the forefront of their reasoning. The first subgroup, consisting of the Campaign to Stop Killer Robots, the Non-Aligned Movement and the Group of 10[5], envisions MHC as a moral imperative based on ethical considerations of preserving human dignity in warfare. The second subgroup, comprising Austria, Brazil, Chile, Ireland, Mexico and New Zealand, also advocates legally binding regulation of AWS but predominantly imagines MHC as the primacy of human agency in decision-making on the use of force and the conduct of warfare. Lastly, the moderate group, consisting of Germany, France, the Netherlands, Switzerland and, to some degree, China, imagines human control as an assurance of legal compliance when using AWS.
China is difficult to pinpoint because it rarely refers to other states’ narratives, and its definition of AWS as self-evolving, “intelligent” weapons systems that evade all possibilities for human intervention (China 2018) differs greatly from common understandings. Yet its narrative of human control is also rather legalistic.

The mapping thus shows the different narratives of MHC that have emerged as reference points in the debate, while the concept itself remains rather vague. This inherent imprecision, some argue, can be beneficial because it leaves room for flexibility and interpretation that may persuade more states to agree on it (Crootof 2016). The NGO Article 36, which created the concept, acknowledges this openness, since the concept is not meant as an end in itself but as a way to open pathways for thinking about the need to regulate AWS (Moyes 2016). Furthermore, the qualifier “meaningful” has largely been dropped from the debate, as agreeing on what exactly it entails seems too contentious. In the following empirical analysis, I expand on the rationales behind these different visions and how they are embedded in distinct narratives in the debate. The ways in which the future is imagined and articulated in these narratives influence perceptions of technology in warfare, human-machine relations, and human agency in relation to AWS.

No need for human control: legitimising autonomous weapons systems through technical solutions

The sceptical group at the GGE advances specific visions of future warfare fought with autonomous technologies that do not require human control to be codified or formalised as a distinct concept. The US argument that “LAWS should effectuate the intent of commanders and operators” (United States 2021, 5) reinforces an instrumentalist narrative in which weapons technologies are seen as neutral tools in the hands of human operators (Schwarz 2021). This instrumentalist narrative underpins a vision in which a technological solution is all that is needed to address the problem of AWS. It can go as far as asserting that humans are always in charge regardless, so that codifying a new legal norm of human control becomes unnecessary. Israel, for instance, argues that “all weapons, including LAWS, are and will always be utilized by humans. (…) Humans are always those who decide, and LAWS are decided upon” (Israel 2018). By placing the human as the ultimate decision-maker in every instance of autonomous weapons use, this narrative forecloses any need to discuss meaningful human control.

The imagination of future warfare and human-machine relations is shaped by references to how control is exercised in warfare today. The distributed nature of control in military decision-making is probably best represented by air defence systems with automated and autonomous functions, as the United States argues:

In some cases, less human involvement might be more appropriate. For example, in certain defensive autonomous weapon systems, such as the Phalanx Close-In Weapon System, the AEGIS Weapon System, and Patriot Air and Missile Defense System, the weapon system has autonomous functions that assist in targeting incoming missiles or other projectiles. The machine can strike incoming projectiles with much greater speed and accuracy than a human gunner could achieve manually (United States 2018, 2).

The Russian Federation presumes that potential LAWS can be more efficient than a human operator in addressing the tasks by minimizing the error rate. (…) The use of highly automated technology can ensure the increased accuracy of weapon guidance on military targets, while contributing to lower rate of unintentional strikes against civilians and civilian targets (Russian Federation 2019, 4).

The imagination of future warfare as a combination of humans and machines legitimises warfare with very specific visions of how this technological solution will affect war in a positive way:

Warfare is highly complex and requires a high degree of human-machine teaming to support effective decision-making. (…) The effective combination of both will improve capability, accuracy, diligence and speed of decisions, whilst maintaining and potentially enhancing confidence in adherence to IHL. (United Kingdom 2018).

Meaningful human control as moral human agency: delegitimising autonomous weapons use in future warfare

Those who seek to regulate or pre-emptively ban AWS often invoke the need for human control as a moral and ethical consideration. The vision of human control as a moral imperative has gained widespread attention and is reproduced in the narrative of guaranteeing human dignity in the conduct of warfare. Even if autonomous weapons systems were better than human soldiers at complying with directives and distinguishing between combatants and civilians, should machines be allowed to make decisions over life and death (Rosert and Sauer 2019)? In this view, the threat to human dignity cannot be overcome by technological improvements in accuracy or predictability.

Allowing innate human qualities to inform the use of force is a moral imperative. Weapons systems do not possess compassion and empathy, key checks on the killing of civilians. Furthermore, as inanimate objects, machines cannot truly appreciate the value of human life and thus delegating life-and-death decisions to machines undermines the dignity of their victims (Human Rights Watch 2018).

Human decision-making capacities take centre stage in this narrative because “the more complex and speedy the digital back-end, the more limited are the human capacities, the greater the scope for mediation and the greater the capacity for the technology to direct the human’s practices and focus” (Schwarz 2021, 56). As the human increasingly becomes part of a human-machine complex through deeper technological integration and human-machine interaction, genuine human agency fades away. The lines between machine and human decision-making become increasingly blurred, especially in targeting practices, where human agency becomes ever more diffuse (Danielsson and Ljungkvist 2022).

Meaningful human control as moral human agency in decision-making delegitimises the use of certain autonomous technologies in warfare not only on ethical but also on legal grounds. Imagining human control as a way to ensure compliance with international law also rests on the centrality of human agency for assuming responsibility over the use of force and remaining accountable (Saxon 2022). The moderate group at the GGE frequently refers to a two-tier approach developed by France and Germany, which would “outlaw fully autonomous lethal weapons systems operating completely outside human control and a responsible chain of command, as well as regulate other lethal weapons systems featuring autonomy in order to ensure compliance with the rules and principles of international humanitarian law” (Finland et al. 2022, 1).

The significance of human agency in imagining algorithmic war has become more central in critical discourses. However, the narrative of moral human agency and MHC has a critical blind spot: who is the human in this debate, and who is regarded as a “meaningful human” able to exercise control over these systems? The debate over MHC reflects a specific Western narrative with an “idealized, rational, rights-holding, masculine individual at its core” (Williams 2021, 2). The meaningfulness of being human and the attribution of agency are therefore not a given: they are discursively produced within certain imaginations of future warfare and socio-technical systems. Moreover, the discourse itself exhibits epistemic power imbalances. Those against whom autonomous weapons systems will most likely be used have no voice in this discourse, and their knowledge is hardly taken into consideration. The imagination of future uses of AWS in war concerns not only those who wage war but also those who will become its victims: “We cannot but extrapolate that, in all probability, the Global South is where autonomous weapons systems will be initially tested and used by the developers of these systems” (Palestine 2021). Meaningful human control is imagined to provide a way out of the legacies of violence and war and to delegitimise certain ways of waging war in the future.

Conclusion

The concept of meaningful human control has emerged in both the political and the academic debate as a central response to growing autonomy in weapons systems. While questions of technology and international security have received ever more scholarly attention, the narratives and imaginations of emerging technologies, human-machine relations and future warfare have so far been understudied. In this article I have argued that the ways in which actors involved in the political debate on AWS make sense of intangible socio-technical systems and envision MHC as a response are essential to legitimising or delegitimising future warfare. Visions of the future are an important (de-)legitimisation tactic in the debate, and some imagined futures de facto decrease regulatory incentives for states.

The analysis has shown that, while the concrete definition and meaning of human control remain contested, human control is nonetheless imagined in myriad ways that coalesce into five distinct narratives in the debate. The visions of sceptical states highlight how certain types of AWS and ways of waging future warfare are increasingly being legitimised. Those that favour a political regulation of AWS envision MHC as a means to regulate AWS and to delegitimise their use in future war. These competing visions of MHC have normative and political implications. The narrative that humans are always in control not only renders multilateral regulation of AWS untenable but also legitimises these weapons systems per se. In contrast, narratives of MHC as a moral imperative prioritise ethical questions and counter that AWS cannot fulfil the requirements of human dignity, thereby rendering them illegitimate means of warfare. Narratives of increased accuracy and precision to protect civilians reflect a specific vision in which technological improvements are imagined as the solution to the problem of algorithmic warfare, so that regulation of AWS becomes unnecessary. Other actors contradict these narratives by emphasising that a mere technological fix is insufficient to preserve human agency in lethal decision-making. Lastly, moderates try to bridge the gap between these opposing imaginaries by drawing on legalistic visions of human control.

The imaginations at play here pertain to a distinct vision of future war that focuses mostly on the critical targeting functions of autonomous weapons systems. At the same time, focusing only on those critical functions legitimises other military applications of AI and renders only specific modalities of human control viable and meaningful. Mundane data practices, for instance, are neither questioned nor challenged; instead, they become increasingly legitimised (Suchman, Follis, and Weber 2017). The narratives and imaginations present in this debate are built on notions of techno-scientific objectivity that make the use of AWS in war thinkable and doable as a more legitimate way of warfare. Visions of how to ensure MHC as a response to the challenges posed by AWS make certain regulatory practices viable and tenable while potentially foreclosing other options. The openness of the concept of human control enables actors to negotiate differing understandings and meanings. This flexibility, however, also runs the risk of rendering human control void of any meaning or significance, or of making it impossible in the end (Bode and Watts 2021; Schwarz 2021). This analysis has shown that the legitimisation of certain types of algorithmic violence is already underway: by making them imaginable, it makes them viable.

Acknowledgements

I presented various draft versions of this article at different academic conferences and want to thank everyone for their valuable feedback. My special thanks go out to the convenors of this Special Issue. I want to specifically thank Ingvild Bode, Kate Chandler, Denise Garcia, Lauren Gould, Marijn Hoijtink, and Bao-Chau Pham. I also want to acknowledge the very constructive comments of the two anonymous reviewers, which made this a great experience for an early-career scholar.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 When explicitly referring to the debate at the GGE, I use the term “LAWS”; elsewhere in this article I use “AWS”.

2 I am sincerely grateful to the anonymous Reviewer 2 who has pushed me to clarify my conceptual framework and the distinction between the concepts of imaginaries, narratives, visions and discourses used in this article. Their comments and suggestions were tremendously helpful.

3 STS has a rich history of theoretical conceptualisations of the imaginary, ranging from the technoscientific imaginary as “future possibility through technoscientific innovation” (Marcus 1995, 4) and modern social imaginaries (Taylor 2004) to sociotechnical imaginaries as a way of understanding the relationship between national technoscientific policies and social order (Jasanoff and Kim 2009).

4 In 2021 and 2022, I conducted field research at the meetings of the GGE on LAWS at the UN in Geneva. Some of the observations in the analysis are therefore informed by my participation in these meetings.

5 The Group of 10 is a loose coalition of ten states from the Global South that have joined forces in the debate: Argentina, Costa Rica, Ecuador, El Salvador, Panama, the State of Palestine, Peru, the Philippines, Sierra Leone and Uruguay. In some instances other countries, namely Kazakhstan and Nigeria, have joined this group.

References

- Altmann, Jürgen, and Frank Sauer. 2017. “Autonomous Weapon Systems and Strategic Stability.” Survival 59 (5): 117–142. https://doi.org/10.1080/00396338.2017.1375263

- Amoore, Louise. 2009. “Algorithmic War: Everyday Geographies of the War on Terror.” Antipode 41 (1): 49–69. https://doi.org/10.1111/j.1467-8330.2008.00655.x

- Amoroso, Daniele, and Guglielmo Tamburrini. 2021. “Toward a Normative Model of Meaningful Human Control Over Weapons Systems.” Ethics & International Affairs 35 (2): 245–272. https://doi.org/10.1017/S0892679421000241

- Article 36. 2013. “Structuring Debate on Autonomous Weapons Systems: Memorandum for Delegates to the Convention on Certain Conventional Weapons (CCW).” Accessed March 18, 2022. https://article36.org/wp-content/uploads/2020/12/Autonomous-weapons-memo-for-CCW.pdf.

- Asaro, Peter. 2012. “On Banning Autonomous Weapon Systems: Human Rights, Automation, and the Dehumanization of Lethal Decision-Making.” International Review of the Red Cross 94 (886): 687–709. https://doi.org/10.1017/S1816383112000768

- Australia, Canada, Japan, Republic of Korea, United Kingdom, and United States. 2022. “Principles and Good Practices on Emerging Technologies in the Area of Lethal Autonomous Weapons Systems: 07.03.2022.” https://meetings.unoda.org/meeting/57989/documents.

- Bareis, Jascha, and Christian Katzenbach. 2022. “Talking AI Into Being: The Narratives and Imaginaries of National AI Strategies and Their Performative Politics.” Science, Technology, & Human Values 47 (5): 855–881. https://doi.org/10.1177/01622439211030007.

- Bellanova, Rocco, Katja L. Jacobsen, and Linda Monsees. 2020. “Taking the Trouble: Science, Technology and Security Studies.” Critical Studies on Security 8 (2): 87–100. https://doi.org/10.1080/21624887.2020.1839852

- Berling, Trine V., Izabela Surwillo, and Sandra Sørensen. 2022. “Norwegian and Ukrainian Energy Futures: Exploring the Role of National Identity in Sociotechnical Imaginaries of Energy Security.” Journal of International Relations and Development 25 (1): 1–30. https://doi.org/10.1057/s41268-021-00212-4

- Bode, Ingvild. 2019. “Norm-Making and the Global South: Attempts to Regulate Lethal Autonomous Weapons Systems.” Global Policy 10 (3): 359–364. https://doi.org/10.1111/1758-5899.12684

- Bode, Ingvild, and Hendrik Huelss. 2018. “Autonomous Weapons Systems and Changing Norms in International Relations.” Review of International Studies 44 (3): 393–413. https://doi.org/10.1017/S0260210517000614

- Bode, Ingvild, and Hendrik Huelss. 2022. Autonomous Weapons Systems and International Norms. Montreal & Kingston: McGill-Queen's University Press.

- Bode, Ingvild, and Tom Watts. 2021. Meaning-Less Human Control: Lessons from Air Defence Systems on Meaningful Human Control for the Debate on AWS. Joint Report, Drone Wars UK, Oxford and Centre for War Studies, Odense.

- Boulanin, Vincent, Neil Davison, Netta Goussac, and Moa P. Carlsson. 2020. Limits on Autonomy in Weapon Systems: Identifying Practical Elements of Human Control: Report, Stockholm International Peace Research Institute and International Committee of the Red Cross. Stockholm. https://www.sipri.org/sites/default/files/2020-06/2006_limits_of_autonomy.pdf.

- Boulanin, Vincent, and Maaike Verbruggen. 2017. Mapping the Development of Autonomy in Weapon Systems: Report, Stockholm International Peace Research Institute. Stockholm. https://www.sipri.org/sites/default/files/2017-11/siprireport_mapping_the_development_of_autonomy_in_weapon_systems_1117_1.pdf.

- Brazil, Chile, Mexico. 2021. “Elements for a Future Normative Framework Conducive to a Legally Binding Instrument to Address the Ethical, Humanitarian and Legal Concerns Posed by Emerging Technologies in the Area of (Lethal) Autonomous Weapons (LAWS): Written Commentary.” https://reachingcriticalwill.org/images/documents/Disarmament-fora/ccw/2021/gge/documents/Brazil-Chile-Mexico.pdf.

- Carpenter, R. C. 2014. Lost Causes: Agenda Vetting in Global Issue Networks and the Shaping of Human Security. Ithaca: Cornell University Press.

- Cave, Stephen, Kanta Dihal, and Sarah Dillon. 2020a. AI Narratives: A History of Imaginative Thinking About Intelligent Machines. Oxford: Oxford University Press.

- Cave, Stephen, Kanta Dihal, and Sarah Dillon. 2020b. “Introduction: Imagining AI.” In AI Narratives: A History of Imaginative Thinking About Intelligent Machines, edited by Stephen Cave, Kanta Dihal, and Sarah Dillon, 1–22. Oxford: Oxford University Press.

- Chenou, Jean-Marie. 2019. “Elites and Socio-Technical Imaginaries: The Contribution of an IPE-IPS Dialogue to the Analysis of Global Power Relations in the Digital Age.” International Relations 33 (4): 595–599. https://doi.org/10.1177/0047117819885161a

- China. 2018. “Position Paper, UN-CCW GGE on LAWS: 11.04.2018.” CCW/GGE.1/2018/WP.7.

- Cohn, Carol. 1987. “Sex and Death in the Rational World of Defense Intellectuals.” Signs: Journal of Women in Culture and Society 12 (4): 687–718. https://doi.org/10.1086/494362.

- Coker, Christopher. 2018. “Still ‘The Human Thing’? Technology, Human Agency and the Future of War.” International Relations 32 (1): 23–38. https://doi.org/10.1177/0047117818754640

- Crootof, Rebecca. 2015. “The Killer Robots Are Here: Legal and Policy Implications.” Cardozo Law Review 36 (5): 1837–1915.

- Crootof, Rebecca. 2016. “A Meaningful Floor for ‘Meaningful Human Control’.” Temple International & Comparative Law Journal 30 (1): 53–62.

- Csernatoni, Raluca. 2022. “The EU’s Hegemonic Imaginaries: From European Strategic Autonomy in Defence to Technological Sovereignty.” European Security 31 (3): 395–414. https://doi.org/10.1080/09662839.2022.2103370

- Cummings, M. L. 2019. “Lethal Autonomous Weapons: Meaningful Human Control or Meaningful Human Certification? [Opinion].” IEEE Technology and Society Magazine, 20–26. https://doi.org/10.1109/MTS.2019.2948438.

- Danielsson, Anna, and Kristin Ljungkvist. 2022. “A Choking(?) Engine of War: Human Agency in Military Targeting Reconsidered.” Review of International Studies, 1–21. https://doi.org/10.1017/S0260210522000353.

- Demmers, Jolle. 2017. Theories of Violent Conflict: An Introduction. 2nd ed. London: Routledge.

- Drezner, Daniel W. 2019. “Technological Change and International Relations.” International Relations 33 (2): 286–303. https://doi.org/10.1177/0047117819834629

- Egeland, Kjølv. 2016. “Lethal Autonomous Weapon Systems Under International Humanitarian Law.” Nordic Journal of International Law 85 (2): 89–118. https://doi.org/10.1163/15718107-08502001

- Ekelhof, Merel. 2017. “Complications of a Common Language: Why It Is so Hard to Talk About Autonomous Weapons.” Journal of Conflict and Security Law 22 (2): 311–331. https://doi.org/10.1093/jcsl/krw029

- Ekelhof, Merel. 2019. “Moving Beyond Semantics on Autonomous Weapons: Meaningful Human Control in Operation.” Global Policy 10 (3): 343–348. https://doi.org/10.1111/1758-5899.12665

- Fairclough, Norman, Jane Mulderrig, and Ruth Wodak. 2013. “Critical Discourse Analysis.” In Critical Discourse Analysis: Volume I: Concepts, History, Theory, edited by Ruth Wodak, 79–101. Los Angeles: Sage.

- Finland, France, Germany, the Netherlands, Norway, Spain, and Sweden. 2022. “Working Paper, UN-CCW GGE on LAWS: 13.07.2022.” https://documents.unoda.org/wp-content/uploads/2022/07/WP-LAWS_DE-ES-FI-FR-NL-NO-SE.pdf.

- Garcia, Denise. 2015. “Humanitarian Security Regimes.” International Affairs 91 (1): 55–75. https://doi.org/10.1111/1468-2346.12186

- Garcia, Denise. 2016. “Future Arms, Technologies, and International Law: Preventive Security Governance.” European Journal of International Security 1 (1): 94–111. https://doi.org/10.1017/eis.2015.7

- Heyns, Christof. 2013. “Report of the Special Rapporteur on Extrajudicial, Summary or Arbitrary Executions: General Assembly, Human Rights Council, Twenty-Third Session.” A/HRC/23/47.

- Hilgartner, Stephen. 2015. “Capturing the Imaginary: Vanguards, Visions and the Synthetic Biology Revolution.” In Science and Democracy: Making Knowledge and Making Power in the Biosciences and Beyond, edited by Stephen Hilgartner, Clark Miller, and Rob Hagendijk, 33–55. London: Routledge.

- Holland, Michel A. 2020. The Black Box, Unlocked: Predictability and Understandability in Military AI. Geneva: United Nations Institute for Disarmament Research.

- Holland, Michel A. 2021. Known Unknowns: Data Issues and Military Autonomous Systems. Geneva: United Nations Institute for Disarmament Research.

- Horowitz, Michael. 2016. “Why Words Matter: The Real World Consequences of Defining Autonomous Weapons Systems.” Temple International & Comparative Law Journal 30 (1): 85–98.

- Horowitz, Michael. 2019. “When Speed Kills: Lethal Autonomous Weapon Systems, Deterrence and Stability.” Journal of Strategic Studies 42 (6): 764–788. https://doi.org/10.1080/01402390.2019.1621174

- Horowitz, Michael, and Paul Scharre. 2015. “Meaningful Human Control in Weapon Systems: A Primer.” Working Paper.

- Human Rights Watch. 2012. “Losing Humanity: The Case Against Killer Robots.” Accessed May 17, 2019. https://www.hrw.org/report/2012/11/19/losing-humanity/case-against-killer-robots.

- Human Rights Watch. 2018. “Statements at the CCW GGE on LAWS on Agenda Item 6b: 11.04.2018.” https://docs-library.unoda.org/Convention_on_Certain_Conventional_Weapons_-_Group_of_Governmental_Experts_(2018)/2018_LAWS6b_HRW.pdf.

- ICRC. 2021. “ICRC Position on Autonomous Weapon Systems.” https://www.icrc.org/en/document/icrc-position-autonomous-weapon-systems.

- International Panel on the Regulation of Autonomous Weapons. 2019. “Focus on Human Control: Report No. 5.” www.ipraw.org.

- International Panel on the Regulation of Autonomous Weapons. 2021. “Building Blocks for a Regulation on LAWS and Human Control: Updated Recommendations to the GGE on LAWS.” www.ipraw.org.

- Israel. 2018. “Statement at the CCW GGE on LAWS on Agenda Item 6b: 11.04.2018.” https://docs-library.unoda.org/Convention_on_Certain_Conventional_Weapons_-_Group_of_Governmental_Experts_(2018)/2018_LAWS6b_Israel.pdf.

- Jabri, Vivienne. 1996. Discourses on Violence: Conflict Analysis Reconsidered. Manchester: Manchester University Press.

- Jasanoff, Sheila. 2015. “Future Imperfect: Science, Technology, and the Imaginations of Modernity.” In Dreamscapes of Modernity: Sociotechnical Imaginaries and the Fabrication of Power, edited by Sheila Jasanoff and Sang-Hyun Kim, 1–33. Chicago: The University of Chicago Press.

- Jasanoff, Sheila, and Sang-Hyun Kim. 2009. “Containing the Atom: Sociotechnical Imaginaries and Nuclear Power in the United States and South Korea.” Minerva 47 (2): 119–146. https://doi.org/10.1007/s11024-009-9124-4.

- Kallenborn, Zachary. 2021. “Was a Flying Killer Robot Used in Libya? Quite Possibly.” https://thebulletin.org/2021/05/was-a-flying-killer-robot-used-in-libya-quite-possibly/#post-heading.

- Kallenborn, Zachary. 2022. “Russia May Have Used a Killer Robot in Ukraine. Now What?” https://thebulletin.org/2022/03/russia-may-have-used-a-killer-robot-in-ukraine-now-what/.

- Klimburg-Witjes, Nina. 2023. “A Rocket to Protect? Sociotechnical Imaginaries of Strategic Autonomy in Controversies About the European Rocket Program.” Geopolitics. Online first.

- Leese, Matthias, and Marijn Hoijtink. 2019. “How (Not) To Talk About Technology: International Relations and the Question of Agency.” In Technology and Agency in International Relations, edited by Marijn Hoijtink and Matthias Leese, 1–23. London: Routledge.

- Marcus, George E. 1995. “Introduction.” In Technoscientific Imaginaries: Conversations, Profiles, and Memoirs, edited by George E. Marcus, 1–9. Late Editions 2. Chicago: University of Chicago Press.

- Martins, Bruno O., and Jocelyn Mawdsley. 2021. “Sociotechnical Imaginaries of EU Defence: The Past and the Future in the European Defence Fund.” JCMS: Journal of Common Market Studies 59 (6): 1–17. https://doi.org/10.1111/jcms.13197

- McCarthy, Daniel R. 2021. “Imagining the Security of Innovation: Technological Innovation, National Security, and the American Way of Life.” Critical Studies on Security, 196–211. https://doi.org/10.1080/21624887.2021.1934640

- McNeil, Maureen, Michael Arribas-Ayllon, Joan Haran, Adrian Mackenzie, and Richard Tutton. 2017. “Conceptualizing Imaginaries of Science, Technology, and Society.” In The Handbook of Science and Technology Studies, edited by Ulrike Felt, Rayvon Fouché, Clark A. Miller, and Laurel Smith-Doerr, 435–463. 4th ed. Cambridge, Massachusetts: The MIT Press.

- Moyes, Richard. 2016. “Key Elements of Meaningful Human Control.” Accessed May 20, 2022. https://www.article36.org/wp-content/uploads/2016/04/MHC-2016-FINAL.pdf.

- Palestine. 2021. “Statement at the CCW GGE on LAWS: 30.09.2021.” https://reachingcriticalwill.org/images/documents/Disarmament-fora/ccw/2021/gge/statements/30Sept_Palestine.pdf.

- Peoples, Columba. 2010. Justifying Ballistic Missile Defence: Technology, Security and Culture. Cambridge: Cambridge University Press.

- Riebe, Thea, Stefka Schmid, and Christian Reuter. 2020. “Meaningful Human Control of Lethal Autonomous Weapon Systems: The CCW-Debate and Its Implications for VSD.” IEEE Technology and Society Magazine, 36–51. https://doi.org/10.1109/MTS.2020.3031846

- Rosert, Elvira, and Frank Sauer. 2019. “Prohibiting Autonomous Weapons: Put Human Dignity First.” Global Policy 10 (3): 370–375. https://doi.org/10.1111/1758-5899.12691

- Rosert, Elvira, and Frank Sauer. 2021. “How (Not) To Stop the Killer Robots: A Comparative Analysis of Humanitarian Disarmament Campaign Strategies.” Contemporary Security Policy 42 (1): 4–29. https://doi.org/10.1080/13523260.2020.1771508.

- Russian Federation. 2019. “Potential Opportunities and Limitations of Military Uses of Lethal Autonomous Weapons Systems: CCW/GGE.1/2019/WP.1.” https://unoda-documents-library.s3.amazonaws.com/Convention_on_Certain_Conventional_Weapons_-_Group_of_Governmental_Experts_(2019)/CCW.GGE.1.2019.WP.1_R%2BE.pdf.

- Saxon, Dan. 2022. Fighting Machines: Autonomous Weapons and Human Dignity. Philadelphia: University of Pennsylvania Press.

- Schröder, Ingo, and Bettina Schmidt. 2001. “Introduction: Violent Imaginaries and Violent Practices.” In Anthropology of Violence and Conflict, edited by Bettina Schmidt and Ingo Schröder, 1–24. London: Routledge.

- Schwartz-Shea, Peregrine, and Dvora Yanow. 2012. Interpretive Research Design: Concepts and Processes. London: Routledge.

- Schwarz, Elke. 2021. “Autonomous Weapons Systems, Artificial Intelligence, and the Problem of Meaningful Human Control.” Philosophical Journal of Conflict and Violence 5 (1): 53–72. https://doi.org/10.22618/TP.PJCV.20215.1.139004

- Sharkey, Noel. 2012. “The Evitability of Autonomous Robot Warfare.” International Review of the Red Cross 94 (886): 787–799. https://doi.org/10.1017/S1816383112000732

- Sharkey, Amanda. 2019. “Autonomous Weapons Systems, Killer Robots and Human Dignity.” Ethics and Information Technology 21 (2): 75–87. https://doi.org/10.1007/s10676-018-9494-0

- Stevens, Tim. 2016. Cyber Security and the Politics of Time. Cambridge: Cambridge University Press.

- Suchman, Lucy. 2020. “Algorithmic Warfare and the Reinvention of Accuracy.” Critical Studies on Security 8 (2): 175–187. https://doi.org/10.1080/21624887.2020.1760587

- Suchman, Lucy, Karolina Follis, and Jutta Weber. 2017. “Tracking and Targeting.” Science, Technology, & Human Values 42 (6): 983–1002. https://doi.org/10.1177/0162243917731524

- Taylor, Charles. 2004. Modern Social Imaginaries. Durham: Duke University Press.

- UN-CCW. 2016. “Final Document of the Fifth Review Conference: Fifth Review Conference of the High Contracting Parties to the Convention on Prohibitions or Restrictions on the Use of Certain Conventional Weapons Which May Be Deemed to Be Excessively Injurious or to Have Indiscriminate Effects.” CCW/CONF.V/10.

- UN-CCW. 2019. “Report of the 2019 Session of the Group of Governmental Experts on Emerging Technologies in the Area of Lethal Autonomous Weapons Systems: CCW/GGE.1/2019/3.”

- United Kingdom. 2018. “Human Machine Touchpoints: The United Kingdom’s Perspective on Human Control over Weapon Development and Targeting Cycles: CCW/GGE.2/2018/WP.1.” https://docs-library.unoda.org/Convention_on_Certain_Conventional_Weapons_-_Group_of_Governmental_Experts_(2018)/2018_GGE%2BLAWS_August_Working%2BPaper_UK.pdf.

- United Nations. 2021. “Letter Dated 8 March 2021 from the Panel of Experts on Libya Established Pursuant to Resolution 1973 (2011) Addressed to the President of the Security Council: S/2021/229.” https://documents-dds-ny.un.org/doc/UNDOC/GEN/N21/037/72/PDF/N2103772.pdf?OpenElement.

- United States. 2018. “Human-Machine Interaction in the Development, Deployment and Use of Emerging Technologies in the Area of Lethal Autonomous Weapons Systems: CCW/GGE.2/2018/WP.4.” https://docs-library.unoda.org/Convention_on_Certain_Conventional_Weapons_-_Group_of_Governmental_Experts_(2018)/2018_GGE%2BLAWS_August_Working%2BPaper_US.pdf.

- United States. 2021. “U.S. Proposals on Aspects of the Normative and Operational Framework, UN-CCW GGE on LAWS: 27.09.2021.” CCW/GGE.1/2021/WP.3.

- United States Department of Defense. 2012. Directive 3000.09: Autonomy in Weapon Systems.

- Williams, John. 2021. “Locating LAWS: Lethal Autonomous Weapons, Epistemic Space, and ‘Meaningful Human’ Control.” Journal of Global Security Studies 6 (4).