ABSTRACT

Research projects that actively involve ‘crowds’ or non-professional ‘citizen scientists’ are attracting growing attention. Such projects promise to increase scientific productivity while also connecting science with the general public. We make three contributions. First, we argue that the largely separate literatures on ‘Crowd Science’ and ‘Citizen Science’ investigate strongly overlapping sets of projects but take different disciplinary lenses. Closer integration can enrich research on Crowd and Citizen Science (CS). Second, we propose a framework to profile projects with respect to four types of crowd contributions: activities, knowledge, resources, and decisions. This framework also accommodates machines and algorithms, which increasingly complement or replace professional and non-professional researchers as a third actor. Finally, we outline a research agenda anchored on important underlying organisational challenges of CS projects. This agenda can advance our understanding of Crowd and Citizen Science, yield practical recommendations for project design, and contribute to the broader organisational literature.

1. Opening the boundaries of project participation

Science has for a long time been dominated by professional scientists who work individually or in well-defined teams, operating within a distinctive institutional setting (Shapin Citation2008; Dasgupta and David Citation1994; Strasser et al. Citation2019; Sauermann and Stephan Citation2013). More recently, projects have emerged in which the ‘lead investigators’ who organise and lead projects involve ‘crowds’ of individuals who self-select in response to open calls for participation. Notably, these crowds consist not only of professional scientists but also non-professional ‘citizens’. Rationales for involving crowds include higher scientific productivity resulting from additional labour inputs and diverse knowledge, but also the potential to bridge between science and the broader public (Bonney et al. Citation2014; Van Brussel and Huyse Citation2018). In addition to being extremely open with respect to participation, such projects also tend to be more open than traditional projects with respect to the transparency of research processes and the disclosure of intermediate results and data (Franzoni and Sauermann Citation2014). As such, Crowd Science and Citizen Science (collectively abbreviated as ‘CS’) fall within the realm of Open Innovation in Science (Beck et al. Citation2021). To illustrate, consider just three examples:

The online platform Zooniverse currently hosts over 80 research projects in the natural and medical sciences, as well as the humanities. Projects are led by professional scientists who ask the general public to help with data generation and analysis by classifying or transcribing images as well as sound and video files. The platform has a crowd of over 2.2 million volunteers who have contributed a large volume of labour to data-related tasks but who have also made important discoveries in the process (Simpson, Page, and De Roure Citation2014; Sauermann and Franzoni Citation2015). Among others, contributors have transcribed ancient papyri (Williams et al. Citation2014), have made accessible U.S. Supreme Court Judges’ conference notes for legal studies (Black and Johnson Citation2018), and have discovered new planets and other astronomical objects (Lintott et al. Citation2009). Projects have resulted in over 100 scientific publicationsFootnote1 and have made data publicly available for other researchers to build on.

Just One Giant Lab (JOGL) provides an open platform for crowds to tackle research and innovation challenges, especially related to sustainable development goals. In 2020, JOGL started the OpenCovid19 Initiative, which allows anyone to propose research projects, recruit contributors, and solicit financial as well as non-financial resources (Kokshagina Citationforthcoming; Santolini Citation2020). Crowd members who wish to join can use listings and filtering mechanisms to find projects that best match their skills and interests, and team members can interact with each other using a range of communication tools. As of April 2021, the Initiative is hosting 123 projects in areas such as tracing and predicting the spread of Covid, studying the pandemic’s socio-economic and environmental consequences, developing diagnostic tests, as well as investigating potential Covid treatments.Footnote2

The project CurieuzeNeuzen enlisted a crowd of over 2,000 citizens of the city of Antwerp to collect data on air quality and to participate in data analysis, interpretation, and the diffusion of results. The team of lead investigators was composed of volunteers and professional scientists, holding expertise in diverse areas such as scientific research, computer programming, and communication. The data produced by the project were used to investigate predictors of variation in nitrogen dioxide concentrations in cities and to validate and improve existing computer models. Project results were also used to inform policy debates and to advocate for stricter regulations regarding traffic and air pollution. A survey among participants suggested that the project increased their awareness of environmental problems as well as their intentions to take personal action to improve air quality (Van Brussel and Huyse Citation2018; Irwin Citation2018). The original CurieuzeNeuzen project has been replicated and expanded in other regions of Belgium, drawing tens of thousands of participants (Weichenthal et al. Citation2021).

Policymakers and funding agencies support Crowd and Citizen Science efforts, hoping that CS can accelerate scientific knowledge production and increase the societal impact of research (European Commission Citation2018; US Congress Citation2016). There is also a growing community of practitioners and scholars who study CS initiatives from within particular scientific domains such as biology or astronomy (Sullivan et al. Citation2009; Ponciano et al. Citation2014; European Citizen Science Association Citation2015; Petridis et al. Citation2017). However, not all observers are optimistic: Many professional scientists remain sceptical about involving non-professionals in research (Burgess et al. Citation2017), and many CS projects face challenges in attracting enough contributors, coordinating project work, ensuring the quality of processes and outputs, and reconciling scientific as well as non-scientific project goals (Sauermann et al. Citation2020; Aceves‐Bueno et al. Citation2017; Druschke and Seltzer Citation2012).

We believe that management and innovation scholars are uniquely equipped to advance research on Crowd and Citizen Science, both to understand this emerging organisational form and to improve project design for the benefit of science and society. At the same time, CS can serve as an intriguing research context to study more general questions. To stimulate and accelerate such work, this article makes three main contributions. First, we discuss similarities and differences between two streams of research that have investigated CS using the terminology of ‘Citizen Science’ and ‘Crowd Science’, respectively (Section 2). Our conclusion is that these streams study strongly overlapping sets of projects but use different disciplinary lenses. We suggest that integrating aspects of both streams may yield fruitful avenues for future research on CS. Second, scholars have proposed several taxonomies of CS projects, focusing on different and often disconnected dimensions. Although this diversity may be beneficial to satisfy different descriptive and analytical needs, it risks a fragmentation of research. We propose a multi-dimensional framework that is grounded in prior research on knowledge production and organisational theory and that highlights four fundamental types of contributions that can be made by the crowd (Section 3). We illustrate how this framework can be used to create descriptive ‘profiles’ of CS projects and discuss how it can be extended to account for the increasing role of machines as an additional actor (Section 4). Building on this framework, we outline in Section 5 an agenda for future research on CS that is anchored on four underlying organisational challenges: The division of tasks, the allocation of tasks to different actors, the provision of rewards to motivate contributions, and the provision of information to enable successful collaboration and decision making (Puranam, Alexy, and Reitzig Citation2014). Finally, we argue that CS is not only an interesting phenomenon in itself, but that the open and transparent nature of CS projects makes them an ideal context to study related phenomena such as the digital transformation of research and the role of large platforms, the increasing size and specialisation of scientific teams, crowdsourcing, co-creation, as well as the gig economy and online labour markets (Section 6).

2. Two complementary lenses: Citizen Science and Crowd Science

2.1. Citizen Science

In its most common use, the term Citizen Science refers to projects that involve non-professional ‘citizens’ directly in scientific research (Haklay et al. Citation2021). Such projects are often led by professional scientists but can also be organised entirely by citizens.Footnote3 Some of these projects have published case studies and reports on their activities, often in outlets specific to the scientific fields in which they operate (Bonney et al. Citation2009; ExCiteS Citation2019; Druschke and Seltzer Citation2012; Willett et al. Citation2013). Scholars in science and technology studies as well as the information sciences have also investigated such projects (Wiggins and Crowston Citation2011; Ceccaroni et al. Citation2019; Ottinger Citation2010).

The involvement of citizens in research has two historical origins (Strasser et al. Citation2019). One is the long tradition of ‘amateur naturalists’ and ‘gentleman scientists’ who pursued research often out of their own interest and curiosity prior to the professionalisation of science in the mid-19th century (Shapin Citation2008). A second important influence are radical science movements of the 1960s and 70s that were critical of academic and corporate science and often responded to perceived threats resulting from science and innovation such as nuclear war, air pollution, or pesticides. The primary goal of these movements was to advocate for a science that serves the interests of the citizens (Strasser et al. Citation2019; Irwin Citation2001). Mechanisms to accomplish this goal included protests, public deliberations and engagement exercises, but also efforts of citizens to produce their own knowledge without depending on the professional system.

Partly reflecting these different historical origins, there are different views regarding the nature and goals of Citizen Science (Woolley et al. Citation2016). While one main tradition of Citizen Science focuses on the potential of citizens to contribute to academic knowledge production through activities such as data collection and environmental monitoring (Bonney Citation1996), the second tradition focuses on opportunities to make science more responsive to the needs of citizens and to incorporate their local knowledge and personal experience (Irwin Citation1995). In recent work, these different streams have been synthesised into contrasting ideal-type views of CS: a ‘productivity view’ focusing on scientific output, and a ‘democratization view’ considering scientific but also non-scientific goals such as citizen empowerment, education, awareness, and advocacy (Sauermann et al. Citation2020).

Some Citizen Science practitioners and scholars continue to challenge the scientific establishment, including its professional norms and standards. Some projects also see their primary purpose in societal change, inclusiveness, or advocacy rather than the generation of scientific knowledge in the traditional sense (Ottinger Citation2010). However, ‘mainstream’ Citizen Science – as advocated and shaped by its large membership organisations in both Europe and the USA – considers the goal of knowledge production as essential (ECSA Citation2020; Turrini et al. Citation2018).

2.2. Crowd Science

The term Crowd Science refers to projects that involve larger ‘crowds’ of individuals in research. This term has emerged more recently and is used primarily in the fields of management and economics (Franzoni and Sauermann Citation2014; Scheliga et al. Citation2018; Lyons and Zhang Citation2019; West and Sims Citation2018). The focus of this work is on the fact that project participants come from outside a focal lab or research organisation and respond to open calls for contributions; relationships are governed by general participation rules rather than individual contracts. Whereas Citizen Science focuses on the fact that contributors are not professional scientists, this aspect is less central in Crowd Science: Although most Crowd Science projects engage with citizen crowds, crowds may also consist of professional scientists and other experts. Examples include Harvard Medical School’s effort to crowdsource research problems from within the university community (Guinan, Boudreau, and Lakhani Citation2013), as well as a series of Polymath projects that require high levels of training in mathematics (Polymath Citation2012). Discussions of Crowd Science typically start from the vantage point of professional scientists who enlist crowds to advance their research. As such, there has been little consideration of projects that are driven entirely by crowd members.

Research on Crowd Science draws heavily on the literature in ‘crowdsourcing’ (e.g. Tucci, Afuah, and Viscusi Citation2018; Jeppesen and Lakhani Citation2010; Poetz and Schreier Citation2012) to understand both benefits and challenges of involving crowds. Compared to (firm-sponsored) crowdsourcing, however, Crowd Science projects tend to be more transparent with respect to their internal processes and also often disclose intermediate and final results (Franzoni and Sauermann Citation2014). This openness provides opportunities for collaboration and knowledge spillovers but also creates challenges regarding incentives and appropriability (see also Dasgupta and David Citation1994). Crowd Science projects also typically do not pay crowd members for their work and do not use tournament-based financial prizes (Scheliga et al. Citation2018).Footnote4

Given their disciplinary backgrounds, scholars using the Crowd Science lens focus on potential benefits in terms of knowledge production. Although this also includes greater alignment between research and the needs of the broader public (Beck et al. Citation2020), this literature has paid little attention to non-scientific project outcomes such as citizen learning, empowerment, or advocacy. As such, it is much more aligned with the ‘productivity view’ of Citizen Science than with the ‘democratization view’ (see Section 2.1).

Most of the projects investigated in the Crowd Science literature operate online. For example, Zooniverse projects use a sophisticated internet platform to involve crowd members in the classification of digital objects such as images, videos, or audio files (Lyons, Zhang, and Rosenbloom Citation2019; Sauermann and Franzoni Citation2015). The Ludwig Boltzmann Gesellschaft has completed two projects to crowdsource research questions in mental health and traumatology, using the website ‘Tell Us!’ to solicit contributions from a broad range of individuals across time and space (Beck et al. Citation2020). Epidemium, a collaborative effort to advance cancer research using big data, brings together crowd members with cancer knowledge and data science expertise using an online platform that hosts open data sets and serves as a repository for crowd contributions (Benchoufi et al. Citation2017). Crowd Science researchers’ focus on online projects is not surprising given that internet-based technologies are often necessary to reach and organise large and diverse crowds (Brabham Citation2008; Scheliga et al. Citation2018). In contrast, Citizen Science research has studied a broader range of projects in both online and offline contexts. Even in the latter, however, technological tools and infrastructure are often critical. For example, smartphones with GPS technology and cameras have significantly increased the scale and data quality of projects in environmental monitoring (Haklay Citation2013). We will return to the important role of technology in Section 4 below.

2.3. Integration

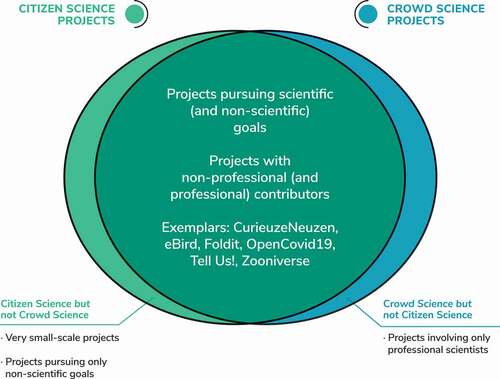

The prior discussion suggests that there are a few types of projects that would fall within the dominant definition of one of the literatures but not the other: Researchers studying Crowd Science would have little to say about small-scale projects taking place offline and pursuing primarily non-scientific goals such as advocacy, while scholars of Citizen Science would consider projects out of scope that solicit contributions only from professional scientists (see ). Upon closer inspection, however, it becomes clear that Crowd Science and Citizen Science scholars study a strongly overlapping set of projects, with landmark examples such as Zooniverse, eBird, and Foldit figuring prominently in both literatures (e.g. Sauermann and Franzoni Citation2015; Raddick et al. Citation2013). Thus, in the large majority of cases, ‘Crowd Science’ and ‘Citizen Science’ do not refer to different types of projects. Rather, these terms reflect different disciplinary lenses used to study the same general phenomenon, focusing on different features to describe projects, highlighting different mechanisms when analysing how projects operate, and using different criteria when discussing project success.Footnote5

Going forward, we expect that both streams of literature will continue to evolve and make important contributions in their respective domains.Footnote6 At the same time, there are opportunities for complementarities and convergence. For example, Crowd Science research could consider a broader range of outcomes, including scientific outputs such as data and publications but also non-scientific outcomes such as citizen learning (Beck et al. Citation2021). Similarly, Citizen Science research will increasingly investigate large-scale online projects (Newman et al. Citation2012), and theories as well as empirical methods used by scholars of Crowd Science may prove useful in the future. We hope that our discussion in subsequent sections of this article may be valuable for both communities. Indeed, we will continue to draw on both literatures when reviewing prior work and making our conceptual arguments, referring to Crowd Science and Citizen Science collectively as ‘CS’.

In the next section, we review existing taxonomies of CS and propose a more flexible multi-dimensional approach to ‘profile’ projects. This approach is useful for general descriptive purposes, while focusing on dimensions that should resonate particularly with scholars in management, economics, as well as science and innovation studies.

3. A multi-dimensional framework to profile CS projects

3.1. Limitations of existing taxonomies

Scholars have proposed a variety of taxonomies of CS projects (see for examples). Many of these have emerged for descriptive purposes or as part of evaluation practices carried out by lead investigators and funding agencies (Strasser et al. Citation2019). As such, they emphasise selected dimensions that were salient in particular contexts. Some taxonomies – especially those tied to the ‘democratization view’ of CS – involve implicit hierarchies that ascribe a higher value to projects that involve citizens more extensively (Strasser et al. Citation2019; Arnstein Citation1969).

Table 1. Examples of existing CS project taxonomies

As shown in , many taxonomies consider different underlying dimensions jointly to define mutually exclusive types of projects. While this approach can yield intuitive categories, it makes it difficult to account for less common combinations of project characteristics and to accommodate novel types of projects. For example, one prominent taxonomy distinguishes

Contributory projects that are ‘designed by scientists and for which members of the public primarily contribute data’,

Collaborative projects that are ‘designed by scientists and members of the public contribute data but also help to refine project design, analyze data, and/or disseminate findings’, and

Co-created projects that are ‘designed by scientists and members of the public working together and for which at least some of the public participants are actively involved in most or all aspects of the research process’ (Shirk et al. Citation2012).

This taxonomy originated in a context where the crowd contributed primarily data, and it is unable to accommodate prominent projects such as Foldit (where crowds help solve problems) or Tell Us! (where crowds identify research questions but are not involved in other stages of the research).

In the following, we propose to ‘profile’ CS projects using a multi-dimensional framework. The goal is not to classify projects in the sense of a taxonomy, but to describe projects flexibly with respect to the contributions that are made by the crowd at different stages of the research process. The framework highlights four types of contributions that feature prominently in research on knowledge production and organisational theory. This, in turn, enables us to analyse CS more deeply as a novel form to organise scientific research (Puranam, Alexy, and Reitzig Citation2014) and points to promising avenues for future work especially by management and innovation scholars (Section 5).

3.2. Multi-dimensional framework

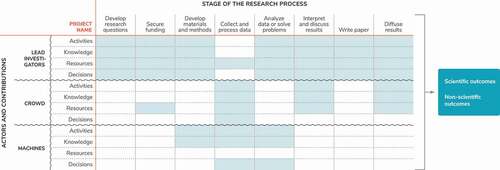

We start by conceptualising the research process as consisting of several different stages such as defining research questions, analysing data, and diffusing findings to the broader public (Beck et al. Citation2021; Shirk et al. Citation2012; Newman et al. Citation2012). These stages form the ‘backbone’ of our framework (visualised in ). Although we use a linear representation, this is clearly a simplification – research processes tend to be highly nonlinear and teams may iterate between stages. Moreover, not all projects involve all the activities depicted in and some projects may have additional stages. As such, analysts using the framework may modify the stages to ensure the inclusion of all relevant activities in a particular context and to achieve the appropriate balance between detail and parsimony. Journals and professional associations are currently going through a similar process in order to codify lists of research activities that can be used to define authorship and disclose author contributions (Sauermann and Haeussler Citation2017; CASRAI Citation2018).Footnote7

Building on this backbone, we can now characterise projects with respect to the contributions made by the crowd at each stage. In doing so, it is important to keep in mind that different projects can work with different crowds.Footnote8 For example, the crowd in the Zooniverse project Galaxy Zoo as well as in CurieuzeNeuzen included primarily regular citizens that were quite diverse with respect to the level of education, income, or demographic characteristics (Van Brussel and Huyse Citation2018; Raddick et al. Citation2013). In contrast, the crowd in a series of Polymath projects was much smaller, consisting of individuals with training in mathematics, including professionals as well as non-professionals (Franzoni and Sauermann Citation2014).Footnote9

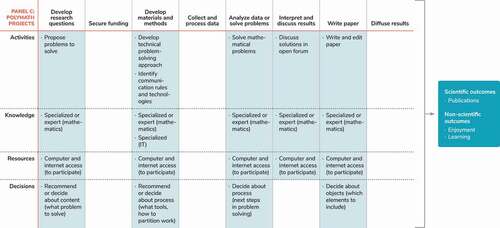

Our framework has four key dimensions: (1) the particular activities carried out by the crowd, (2) the knowledge contributed when performing these activities, (3) other resources contributed, and (4) the decisions made. shows how mapping crowd contributions along these four dimensions leads to distinct ‘profiles’ of crowd involvement, using the examples of Galaxy Zoo (Panel A), CurieuzeNeuzen (Panel B), and Polymath (Panel C). We now discuss each dimension in more detail.

Activities. The first dimension captures the particular activities performed by the crowd. This dimension is important because research activities are the essential building blocks of the knowledge production process (Haeussler and Sauermann Citation2020; Shibayama, Baba, and Walsh Citation2015). Engaging in different types of activities also allows crowd members to accomplish non-scientific goals such as learning and advocacy (Druschke and Seltzer Citation2012). At an aggregate level, information about activities is already captured by the process stages that form the backbone of the framework (e.g. developing research questions or collecting data). As such, entries for this dimension can convey more specific details about the role crowd members play. If the project profile is to be used for analytical rather than descriptive purposes, entries can also capture theoretically relevant constructs, e.g. the size or complexity of individual tasks (see Section 5 on future research opportunities regarding task division).

In Galaxy Zoo, contributors’ main task is to classify images of galaxies using a simple series of questions. Some crowd members also discuss galaxies and help others with difficult cases in a forum.Footnote10 Both of these activities fit within the broader stage of ‘collect and process data’ (, Panel A). The project CurieuzeNeuzen involved crowd members in several different stages of the research. Most importantly, they physically collected air samples outside their home windows in different locations and also provided data on traffic intensity as well as the geometrical configuration of their streets. Crowd members also participated in a presentation of results and flagged results that stood out. Based on their understanding of the local conditions, residents from particular areas of the city engaged in the verification of results and contributed insights to explain unexpected or deviant findings. Participants also contributed to the diffusion of results, e.g. by displaying posters with project results at their houses for neighbours and those passing by. In the project Polymath, crowd members participate in essentially all stages: They propose and select problems to solve, design methods through ongoing discussions, collaboratively solve problems, and help write the papers under the collective pseudonym D.H.J. Polymath (e.g. Polymath Citation2012).

Knowledge. Although some activities are primarily physical in nature (e.g. collecting air samples in CurieuzeNeuzen), almost all activities require crowd members to contribute different types of knowledge. Indeed, knowledge is a key input examined by scholars studying science and innovation processes (Singh and Fleming Citation2010; Gruber, Harhoff, and Hoisl Citation2013; Von Hippel Citation1994) and also figures prominently in discussions of crowdsourcing (Afuah and Tucci Citation2012). Within this dimension, analysts can describe concretely what particular knowledge crowd members bring to bear. For analytical purposes, it will often be useful to consider different types of knowledge that are practically or theoretically relevant. One possible distinction is that between common knowledge held in the general population, specialised knowledge held by a smaller part of the population, and domain-specific expert knowledge gained through formal education or long-term practice (Franzoni and Sauermann Citation2014).

shows the respective entries for our example projects. In Galaxy Zoo, most crowd members use common knowledge to judge basic characteristics of galaxies such as their shape. Some crowd members use specialised knowledge gained through experience on the platform to manage discussions and to help others with difficult cases. In CurieuzeNeuzen, crowd members used common knowledge to set up measurement devices outside their windows as part of the ‘collect and process data’ stage. However, they used common as well as specialised knowledge (in this case, their understanding of the local environment) when identifying interesting patterns in the data and coming up with explanations for unexpected results. They also used specialised knowledge regarding the project process and results when helping diffuse results. In Polymath, contributions require specialised or even expert knowledge in mathematics. Some crowd members also contribute specialised knowledge regarding how complex discussions can be organised effectively and what technical tools might be helpful.Footnote11

Resources other than effort and knowledge. Studies of science and knowledge production highlight that research also requires other types of resources such as money, materials, and equipment (Stephan Citation2012; Furman and Stern Citation2011). Contributions of such resources tend to be less visible in prior taxonomies of CS projects (). However, they are central to some projects such as SETI@home, where citizens contribute primarily computing power. Similarly, a growing number of projects involve crowd members primarily as providers of financial resources – e.g. on the crowdfunding platform Experiment.com (Sauermann, Shafi, and Franzoni Citation2019; Franzoni, Sauermann, and Di Marco Citation2021). Finally, some projects such as American Gut ask crowd members to collect and contribute specimens such as stool and blood samples or personal data generated via automatic tracking devices (Wiggins and Wilbanks Citation2019; McDonald et al. Citation2018; Newman et al. Citation2012).Footnote12

Even if such resources are not the crowd’s primary contribution to a project, crowd members often have to provide resources in order to perform research activities. For example, online CS projects typically require access to computers and the internet, while offline projects often require means of transportation to perform data collection or attend meetings (Newman et al. Citation2012). The lack of access to resources or the associated costs may prevent some individuals to contribute their effort and knowledge, increasing inequality and selection biases in project participation (Franzen et al. Citation2021).

To illustrate this dimension, consider again the examples in . In Galaxy Zoo, the primary resource required from contributors (other than their effort and knowledge) is computer and internet access. In CurieuzeNeuzen, crowd members provided space in front of their windows to collect air samples and contributed their own transportation to pick up and drop off collection devices and attend project meetings. Crowd members also contributed a share of the project budget through crowdfunding (Irwin Citation2018). Contributors in Polymath require primarily computer and internet access to participate in online discussions. Although we described resources quite specifically in , broader categories (e.g. tangible vs. intangible, or low vs. high cost) can also be useful.

Decisions. The fourth dimension captures to what extent crowd members contribute by taking decisions in different stages of the research. Decision-making is a pervasive but also challenging aspect of scientific research, and the decisions that are made shape both the rate and the direction of output (Latour and Woolgar Citation1979; Stephan Citation2012). Not surprisingly, decision-making is also a key process studied in the general organisational literature (Puranam, Alexy, and Reitzig Citation2014). The analyst completing a project profile may choose to describe decisions made by crowd members in specific detail, but may also indicate more generally participants’ level of involvement in decision making, ranging from none to providing input and recommendations for lead investigators (‘consultation’) to having full control over particular aspects of the project (see Arnstein Citation1969). It can also be useful to distinguish what the decisions are about, e.g. about project content (e.g. what to do), project processes (how to do), or about concrete study objects (e.g. is the bird in this image of species A or species B).

Many projects grant few decision rights to the crowd, while others allow crowd members a significant degree of influence. Indeed, decision-making is at the centre of discussions around the normatively ‘right’ degree of crowd involvement and shapes the degree to which CS can ‘democratise’ science (Arnstein Citation1969; Haklay Citation2013; Strasser et al. Citation2019).Footnote13 This final dimension also reveals important connections to prior dimensions. For example, different types of activities (dimension 1) tend to involve different types of decisions, and different types of decisions likely require different kinds of knowledge (dimension 2).

shows the key decisions made by crowd members in our three example projects. In Galaxy Zoo, crowd members have little control over the project content or process. The main decisions they make are tied directly to the primary task – classifying galaxies by deciding between different options (e.g. smooth vs. spiral galaxy). Participants in CurieuzeNeuzen were involved in a somewhat broader range of decisions such as where to locate collection devices (within guidelines set by the lead investigators) or providing recommendations regarding which data patterns to highlight in reports. In contrast, Polymath projects give contributors many opportunities to make recommendations and decisions: The initial problem to be solved was defined by mathematician Timothy Gowers. For subsequent Polymath projects, other lead investigators and ultimately crowd members proposed several alternative problems. Contributors decided which problems to pursue through blog discussions and informal polls.Footnote14 Both the direction of the work and the problem-solving approach were deliberately kept open at the outset and emerged through collective decisions as participants collaborated over time.Footnote15

These four primary dimensions of our framework – activities, knowledge, resources, and decisions – focus on the contributions made by the crowd. In addition, we also capture in the Outcomes that resulted from these various contributions and the projects more generally (see our related discussion of project goals in Section 2). The primary outcomes of Galaxy Zoo and Polymath were scientific (e.g. peer-reviewed publications and/or data for future research use), although the projects also provided enjoyment and learning to participants (Raddick et al. Citation2013). CurieuzeNeuzen resulted in publications and valuable data but also yielded important non-scientific outcomes such as citizens’ learning about their local environments, changes in citizens’ traffic-related attitudes and behaviours, as well as policy influence (Van Brussel and Huyse Citation2018).

3.3. Discussion of the framework

Before we build on our framework to discuss new developments in CS (Section 4) and to identify opportunities for future research (Section 5), we highlight a few important points.

First, the primary purpose of the framework is to create a conceptual and visual space that allows analysts to map the contributions of crowds to a research project. The creation of a project ‘profile’ per se is purely descriptive and should not be confused with the assessment and evaluation of the observed level of crowd involvement. In particular, evaluators may disagree over whether a particular profile of crowd contributions should qualify as ‘active involvement’ in research, and whether a particular project deserves the label of Citizen Science or Crowd Science. This question is not inconsequential, given that several funding agencies have dedicated programmes to run (and study) CS projects, or that framing projects as CS may seem to fulfill requirements regarding ‘broader impacts’ (Davis and Laas Citation2014; SwafS Citation2017).

Perhaps the most contentious question in this respect is whether crowd contributions of resources alone – without contributions of effort or knowledge – qualify as ‘active involvement’ in research (Del Savio, Prainsack, and Buyx Citation2016; Haklay et al. Citation2020). One view is that crowd members who only provide access to computing power or money do not play an active role in the research, and that citizens who donate personal specimens or data should be seen as study subjects rather than investigators. Others argue that such resource contributions can be significant enough to justify the label of Citizen Science (ECSA Citation2020; Socientize Consortium Citation2013). We do not think there is a general answer to this question. Rather than focusing on terminology, evaluators should assess projects based on the profile of crowd contributions and the goal they wish to accomplish. For example, a funding agency seeking to help democratise science may place a high emphasis on the breadth of citizen involvement and on the level of decision rights granted to participants. As such, this agency may not want to support a project that only asks the crowd to provide access to computing power. Another agency focusing on research output might support such a project because it efficiently leverages idle resources available from the crowd. Both agencies will benefit from a thorough understanding of the particular nature of crowd involvement. As such, funding agencies and other evaluators could routinely ask applicants to submit a project profile of expected crowd contributions as well as scientific and non-scientific outcomes. In a similar vein, CS projects could publish such profiles to help potential crowd participants determine whether there is a match in terms of the required levels of commitment, knowledge, access to resources, and opportunities to participate in decision making, but also in terms of the scientific and non-scientific goals a project seeks to accomplish.

Second, although we used the framework to profile projects with respect to contributions of the crowd, it can also be used to identify the contributions of other types of ‘actors’. Most importantly, it can be used to map the contributions made by lead investigators, i.e. the individuals who organise and lead CS projects. These lead investigators are often professional scientists (e.g. the organisers of Zooniverse projects) but can also include citizens with relevant expertise in different domains (e.g. in CurieuzeNeuzen). Considering the resulting profiles jointly will then allow for an explicit comparison of the roles of lead investigators and crowds in different stages of the research process.

A third important point is that the crowd contributions or project outcomes that were initially planned may not be realised. For example, some projects plan for extensive crowd involvement but fail to attract enough contributors, while others realise that contributors lack the knowledge and time required to perform the assigned activities (Crowston, Mitchell, and Østerlund Citation2019). Similarly, the contributions of the crowd in a particular project may change, e.g. when some key contributors start with narrow tasks but take on additional responsibilities over time (Lintott et al. Citation2009). Learning due to extended project participation can also allow crowd members who started with common knowledge to contribute specialised expert knowledge later in a project’s life (Crowston et al. Citation2019; Ponti et al. Citation2018). If such nuances are important for the analyst, they can be captured by creating multiple profiles for a given project, reflecting planned versus realised crowd contributions or contributions at different points in time.

Finally, we recognise that our multi-dimensional framework is more complex than prior taxonomies of CS projects. We believe that this complexity is justified given the greater ability to represent crowd contributions in a nuanced manner, to avoid confounding different dimensions, and to create a space for unusual and novel types of projects. For example, our framework can easily accommodate the growing number of projects that involve crowds only in generating research questions (Guinan, Boudreau, and Lakhani Citation2013; Beck et al. Citation2020), while such projects do not fit in the popular taxonomy distinguishing contributory, collaborative, and co-created projects (see ). That being said, we limited the framework to four dimensions that are firmly grounded in theories of innovation and organisation and that are also practically relevant.Footnote16

4. From tool to third actor: the rise of machines

Technological advances have fuelled an increase in the scale and scope of CS projects by providing powerful tools and infrastructure (Newman et al. Citation2012). As noted earlier, for example, smartphones with GPS have improved citizens’ ability to collect high quality observational data, and online platforms provide the core infrastructure for large projects such as Foldit and eBird. Digital technologies and algorithms also help lead investigators to ‘manage’ crowds, keeping contributors engaged through gamification (Waldispühl et al. Citation2020), verifying the accuracy of contributors’ submissions (Sullivan et al. Citation2018), correcting for cognitive or behavioural biases of the crowd (Kamar, Kapoor, and Horvitz Citation2015), providing just-in-time training and support (Katrak-Adefowora, Blickley, and Zellmer Citation2020), or assigning contributors to tasks based on their levels of expertise and prior performance (Kamar, Hacker, and Horvitz Citation2012; Trouille, Lintott, and Fortson Citation2019).

In addition to serving as tools and infrastructure that enable CS projects, advanced technologies such as artificial intelligence (AI) and robots increasingly perform core research activities themselves (Franzen et al. Citation2021; Ceccaroni et al. Citation2019). As such, it is useful to consider ‘machines’ as distinct actors that make their own contributions, augmenting and sometimes substituting for crowds and lead investigators (Raisch and Krakowski Citation2021; Brynjolfsson and Mitchell Citation2017). In this section, we briefly discuss some of these developments, show how they can be captured in the framework developed in Section 3, and consider implications for the future of CS.

Many well-known CS projects – including those on the platform Zooniverse – ask crowd members to classify images, videos, and other objects (see , Panel A). Human classifiers were especially important early-on, when computers were unable to recognise even relatively simple patterns with sufficient quality (Hopkin Citation2007). But lead investigators seized opportunities to use crowd-generated data to train and improve algorithms (Ceccaroni et al. Citation2019; Rafner et al. Citation2021). Once crowds have generated training data, algorithms are often more efficient, which is particularly important given the increasing scale and speed at which new raw data become available in many fields (Kuminski and Shamir Citation2016; Walmsley et al. Citation2021). Indeed, some Zooniverse projects were retired from the platform once citizens had generated enough training data, allowing algorithms to perform the remaining work (Trouille, Lintott, and Fortson Citation2019).

Although automated classifications can be interpreted as the substitution of crowds by machines, the initial need for crowd-generated training data also indicates a complementarity between crowds and machines. More importantly, advanced CS projects have moved beyond static function allocation to either crowds or machines (De Winter and Dodou Citation2014) to create hybrid systems that ‘augment’ humans and exploit new types of complementarities (Rafner et al. Citation2021; Raisch and Krakowski Citation2021). For example, the project eBird distributes individual classification tasks intelligently between citizens, algorithms, and experts, allowing humans and machines to learn from each other and to improve the performance of the overall system (Kelling et al. Citation2012). Some Zooniverse projects optimise the activities of humans and machines dynamically – volunteers who perform above certain thresholds are moved up to higher ‘workflow levels’ where they work on objects that could not be classified reliably by entry-level volunteers or algorithms, using more flexible interfaces and even creating new classification categories (Trouille, Lintott, and Fortson Citation2019; Walmsley et al. Citation2021). Although large and well-funded platforms such as eBird and Zooniverse are at the forefront of developing such hybrid systems, efforts to integrate crowds and machines (‘human-in-the-loop’) are quite common in projects focusing on data collection and processing (Franzen et al. Citation2021; Gander, Ponti, and Seredko Citation2021).

The roles of crowds and machines are also shifting in problem solving activities. Several early CS projects recognised the advantages humans had in solving complex problems that were challenging for the computational approaches available at the time. The project Foldit, for example, achieved significant successes in protein structure prediction (Cooper et al. Citation2010). However, Foldit players and lead investigators soon started to incorporate creative solution approaches into algorithms, dramatically increasing the effectiveness of automated problem-solving (Franzoni and Sauermann Citation2014). Modern algorithms are very effective (Yang et al. Citation2020; Senior et al. Citation2020) and it is perhaps not surprising that Foldit has gradually moved from protein structure prediction to more challenging problems such as protein design.Footnote17 Similarly, the platform Science at Home initially used crowds to help solve problems in quantum physics (Jensen et al. Citation2021) but has now started to additionally investigate crowd members’ human problem solving as a core area of research – creating new ambiguities regarding the role of citizens as active participants in research vs. study subjects (see Section 3.3).Footnote18 Finally, problem solving using analogies has long been considered too difficult for algorithms but recent research demonstrates that hybrid systems combining crowds and machines can leverage the respective strengths of both actors (Kittur et al. Citation2019).

Although some research activities are increasingly carried out by algorithms rather than crowds or professional scientists, there are other areas where humans continue to have distinct advantages. One is the ability to recognise important problems and to formulate research questions that should be studied in the first place (Beck et al. Citation2020; Brynjolfsson and Mitchell Citation2017). Another advantage is that humans are better at noticing and dealing with unexpected objects or events, a skill that remains difficult for algorithms trained using existing data (Heaven Citation2019; Trouille, Lintott, and Fortson Citation2019).Footnote19 A final advantage is humans’ ability to operate flexibly in physical space. For example, the platform iNaturalist uses mostly algorithms to classify images for environmental monitoring, but it still requires human crowd members to explore their physical natural environments and to capture and upload images (Weinstein Citation2018; Green et al. Citation2020). That being said, technologies are also making inroads in these areas. For example, automated camera traps help monitor wildlife populations, wireless sensor networks collect and transmit data for environmental monitoring, and robotic systems can carry out monitoring of plant growth in community gardens (Joshi et al. Citation2018; Swanson et al. Citation2015; Newman et al. Citation2012). Perhaps most strikingly, researchers are working on ‘robot scientists’ that perform all activities of the research process, starting from the generation of research questions (AI-based, using libraries of existing research), to the execution of physical experiments using robots, to the codification of results that are then fed back into the algorithm to derive new hypotheses (Sparkes et al. Citation2010; Shi et al. Citation2021).

What might the increasing capabilities of new technologies imply for the future development of CS? First and foremost, many of these technologies will likely benefit projects by providing better tools and infrastructure that enable increased participation and higher performance – in terms of scientific output but also other project outcomes such as citizen learning (Newman et al. Citation2012; Green et al. Citation2020). Second, even when taking over core research tasks, new technologies may have positive effects if they free crowds from the need to perform repetitive tasks and enable them to focus on more interesting and intellectually challenging activities and objects (Franzen et al. Citation2021; Trouille, Lintott, and Fortson Citation2019). However, to the extent that such work is limited in volume, or requires additional knowledge and resources that pose barriers for crowd participants, there is a risk that CS becomes less inclusive by focusing primarily on expert volunteers (Raisch and Krakowski Citation2021; Brynjolfsson and Mitchell Citation2017). This may not be a concern from a ‘productivity’ perspective, but may limit CS’s potential to advance the non-scientific goals highlighted by the ‘democratization view’ (Section 2). Our conjecture is that there may be a divergence in the CS landscape: Some projects may pursue primarily productivity goals by complementing crowds with new technologies but also letting machines do the work if that is more efficient than relying on the crowd. Other projects may use technologies to broaden citizen participation and improve non-scientific outcomes such as learning and awareness – continuing to rely heavily on crowds even if that means tradeoffs in scientific efficiency. Either way, the goals of the lead investigators as well as the guidance provided by policy makers and funding agencies will play an important role in shaping the future development of CS projects in light of the increasing capabilities of machines.

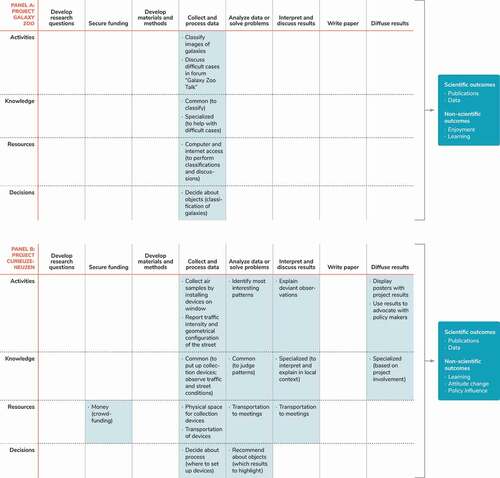

Although we cannot tell how exactly the roles of crowds, lead investigators, and machines will develop in the future, the framework developed in Section 3 can help to study such developments. We already discussed how our framework can be applied to crowds as well as lead investigators (Section 3.3); it is a small step to also profile projects with respect to the involvement of machines. provides a stylised example: It shows for a hypothetical project how each of the three types of actors is involved in different stages of the research process (blue cells indicate active involvement of a particular actor in a particular stage). Such project profiles are useful for descriptive purposes but can also provide the starting point for researchers studying Crowd and Citizen Science. As such, we now turn to the final part of this article: Outlining an agenda for future research on CS, focusing on key organisational questions that result from the collaboration between lead investigators, crowds, and machines.

Figure 2. Conceptual framework and illustrative ‘profiles’ of crowd contributions for Galaxy Zoo (Panel A), CurieuzeNeuzen (Panel B), and Polymath projects (Panel C).

Figure 3. Profile of project contributions made by lead investigators, crowd, and machines .

5. CS as a ‘new form of organizing’: opportunities for future research

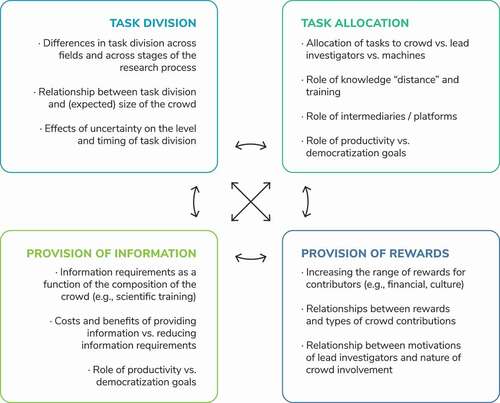

The descriptive framework developed in Sections 3 and 4 allows us to characterise CS projects comprehensively with respect to the activities, knowledge, resources, and decisions that are contributed by different actors – the crowd, lead investigators, as well as machines. To structure our discussion of future research opportunities, we additionally build on a second framework that enables a sharper focus on key organisational aspects that are underlying the observed CS project profiles. In particular, we conceptualise CS as a ‘new form of organizing’ scientific knowledge production that differs from traditional academic research with respect to four central aspects (Puranam, Alexy, and Reitzig Citation2014; Walsh and Lee Citation2015): The mapping of the overall project goal into component tasks (task division), the allocation of tasks to different actors (task allocation), mechanisms to motivate and incentivise contributions (provision of rewards), and mechanisms to supply contributors with the information required to perform their work and coordinate with each other (provision of information). We briefly discuss how CS differs from traditional research with respect to each of these organisational aspects,Footnote20 highlight specific research questions regarding each aspect, and show how the descriptive project profiles developed in Sections 3 and 4 can help study these organisational questions.

5.1. Task division

The first aspect of organising is that the overall project goals have to be mapped onto contributory tasks that can be performed by different actors. In traditional research projects, task division is often kept at a relatively high level of aggregation (e.g. broad research activities such as data collection versus data analysis), and these broader categories also serve to credit (and blame) professional contributors (CASRAI Citation2018). Depending on the size of the project, task division can be more or less formal and can emerge or change over the course of a project (see Haeussler and Sauermann Citation2020; Walsh and Lee Citation2015).

Task division at a high level of aggregation is still relevant in CS, especially as it relates to the division of labour between different types of actors such as crowds and lead investigators (as reflected in ). However, CS projects tend to go further by dividing tasks more finely within these broad categories in order to allow a large number of contributors to participate. Consider extreme examples such as Galaxy Zoo, which has broken the task ‘classify thousands of galaxies’ into thousands of micro-tasks that involve looking at a single galaxy and making a handful of simple classification decisions. Participants in the project Polymath work on larger sub-tasks, sometimes solving significant parts of the overall mathematical problem. However, considering that mathematics is a field where research has traditionally been done by individuals or very small teams (Wuchty, Jones, and Uzzi Citation2007), Polymath still involves a greater division of tasks than traditional projects. Another observation is that CS projects vary considerably with respect to the timing of task division. Large projects requiring common knowledge (e.g. Galaxy Zoo and CurieuzeNeuzen) often divide tasks ex ante, while smaller projects requiring specialised knowledge and longer-term involvement of crowd members tend to manage task division more flexibly (e.g. Polymath). Potential explanations are that large projects would face greater adjustment costs when changing task division, but also that their optimal task division may depend less on which particular crowd members join the project.

This discussion raises several interesting questions for future research: First, how does the level of task division depend on the nature of the overall problem (e.g. as reflected in differences across fields)? Relatedly, how does task division differ across activities within a project, e.g. data collection versus the formulation of research questions? Second, how does optimal task division depend on the size of the crowd? Finally, how do the level and timing of task division depend on uncertainty, both with respect to the research itself but also regarding the number and composition of crowd members who respond to the call for participation? Although motivated by the particular context of CS, questions such as these are quite general and are being tackled also in related contexts, most notably crowdsourcing (Afuah Citation2018) and traditional scientific teams (Haeussler and Sauermann Citation2020). As such, researchers can build on these existing literatures and may also be able to contribute insights back to those domains.

The multi-dimensional profiles developed in Sections 3 and 4 can serve as a starting point to investigate some of these questions empirically. For example, entries on the first dimension (activities) provide direct insights on the tasks performed by crowd members as well as by lead investigators and machines. When coded with respect to the ‘size’ of tasks (with smaller size indicating greater task division), the resulting data can be used to examine the relationships between task division and other interesting variables such as the scientific field and the number of crowd contributors.

5.2. Task allocation

After tasks have been divided, they need to be allocated to different contributors. In traditional research projects, tasks are typically assigned by lead investigators based on their understanding of team members’ interests and capabilities (Owen-Smith Citation2001; Freeman et al. Citation2001). In CS projects, individual contributors are not known ex ante and most participate only for a short time, making it difficult for lead investigators to learn about their skills (Sauermann and Franzoni Citation2015). As such, lead investigators typically broadcast an open call for contributions and crowd members self-select based on the perceived fit between their skills and interests on the one hand, and the tasks offered by the project on the other (see also Afuah Citation2018; Puranam, Alexy, and Reitzig Citation2014). However, some lead investigators also allocate tasks more explicitly based on contributors’ emerging track record, e.g. by promoting core contributors to deal with difficult cases (Trouille, Lintott, and Fortson Citation2019) or asking them to moderate a discussion forum (Rohden Citation2018).

Future research is needed on a first-order question: When and why do lead investigators assign activities to crowds in the first place – rather than to professional team members or machines? Following the literature on crowdsourcing and open innovation, one reason might be the crowd’s access to valuable knowledge. Among others, this might involve a greater diversity of knowledge inputs, specialised knowledge resulting from particular experiences (e.g. as patients), as well as knowledge of pre-existing problems and solutions (Beck et al. Citation2020; Afuah and Tucci Citation2012; Felin and Zenger Citation2014). A second reason is that CS contributors can contribute large volumes of effort that would be difficult or even impossible to mobilise within the traditional system (Sauermann and Franzoni Citation2015). The profiles developed in Section 3 can help us study when and why lead investigators allocate tasks to the crowd. For example, entries for the second and third dimensions in allow us to see what types of knowledge and other resources crowd members contribute. If the knowledge contributed is ‘common’, the primary rationale for allocating tasks to the crowd is likely related to effort and cost (e.g. image classification in Galaxy Zoo). If the knowledge contributed is ‘specialised’, lead investigators likely invite crowds also to access knowledge that may otherwise be difficult to get (e.g. residents’ knowledge about local conditions that helped interpret data in CurieuzeNeuzen). Indeed, explicitly comparing the profiles of contributions made by crowds vs. lead investigators vs. machines () may yield novel insights into the rationales for involving different types of actors, and into potential complementarities between the contributions these actors make at different stages of the research.Footnote21

A second area for future research on task allocation is the self-selection at the level of individual crowd members. In conventional crowdsourcing, organisations spend considerable time to clarify what particular inputs they are looking for, or what particular problems they are trying to solve (Lakhani Citation2011). Crowd members can then assess whether a particular project is a match for their own profile of skills or knowledge. While this idea should in principle also apply in the context of CS, self-selection may be difficult to the extent that there is a greater ‘distance’ between professional scientists and non-professional citizens with respect to knowledge about scientific processes and substance, making it difficult for citizens to see where they can best contribute (see Brossard, Lewenstein, and Bonney Citation2005; Bonney et al. Citation2016). As such, it would be interesting to study how citizens’ self-selection into CS projects depends on their levels of education, on the specific types of contributions requested (dimensions 1–4 of our framework), but also the outreach strategies used by lead investigators. Interestingly, while much of the current research on self-selection in crowdsourcing focuses on task characteristics and skill profiles in a static sense (Afuah Citation2018), many CS projects offer training opportunities and facilitate citizen involvement in order to overcome knowledge gaps (Druschke and Seltzer Citation2012; Van Brussel and Huyse Citation2018). Future research could study task allocation when projects can ‘upskill’ contributors, and whether self-selection matters not only with respect to current skills and knowledge but also the willingness to learn.

Finally, future research should study how intermediaries and new technologies can support projects with both task division and task allocation. For example, the Zooniverse platform offers sophisticated infrastructure to divide tasks and assign them to contributors using algorithms (see Section 4). Just One Giant Lab allows projects to post specific needs and enables volunteers to efficiently search for tasks that match their skills and interests (Kokshagina Citationforthcoming). The platform SciStarter uses AI to recommend new projects that are expected to be a good match based on crowd members’ characteristics, past project choices, as well as activity within projects (Zaken et al. Citation2021).

5.3. Provision of rewards

The third organisational challenge is to motivate contributors to perform their respective tasks (Puranam, Alexy, and Reitzig Citation2014). In traditional academic science, salient rewards include authorship and reputation among peers, which translate into other benefits such as job security (tenure) and income (Stephan Citation2012; Merton Citation1973). Curiosity and desires for social impact can also be important motivators (Cohen, Sauermann, and Stephan Citation2020).

CS projects typically do not provide any financial rewards for contributors (Sauermann and Franzoni Citation2015; Haklay et al. Citation2021). Given that crowd members are not socialised in the traditional institution of science and their number is large, there is also a limited role for authorship and peer recognition, although a few CS projects have granted authorship to individual crowd members or have recognised the crowd under a collective pseudonym (e.g. Polymath Citation2012; Khatib et al. Citation2011; Lintott et al. Citation2009). As such, CS projects appear to rely on a more limited range of motivators: One is crowd members’ intrinsic interest in particular topics such as astronomy or ornithology (Sauermann and Franzoni Citation2013). Another motivator is crowd members’ desire to contribute to science generally, or to help address grand challenges such as climate change and environmental pollution (Geoghegan et al. Citation2016). Some CS projects such as CurieuzeNeuzen or Tell Us! can have direct connections to contributors’ personal problems, offering opportunities for the ‘self-use’ of project outputs (e.g. learning about air quality in one’s own street) or promising longer-term personal benefits from scientific results (e.g. improved medical treatments). Finally, some projects motivate contributors through gamification (Khatib et al. Citation2011; Hamari, Koivisto, and Sarsa Citation2014) or by enabling personal interactions that create social rewards.

Future research could investigate if the range of rewards can be expanded. In particular, crowdsourcing projects in other domains typically rely on financial incentives, including effort-based pay but also sophisticated tournament mechanisms (Boudreau, Lacetera, and Lakhani Citation2011). Although there is a strong belief in the Citizen Science community that citizens should participate as unpaid ‘volunteers’ (perhaps to distinguish CS from commercial crowdsourcing), not paying contributors may result in systematic biases by excluding individuals who need to earn an income to support their livelihood (Haklay et al. Citation2021). As such, relying on unpaid volunteers may be particularly problematic for projects that seek to ‘democratise’ science by including citizens who are typically less represented in the scientific enterprise. A related important question – both from a functional and an ethical perspective – is whether and how crowd members should share in any economic payoffs that are generated by CS projects (Afuah Citation2018; Guerrini et al. Citation2018).Footnote22 Going beyond rewards in a narrow sense, prior research suggests that organisational culture and norms may also play an important role in motivating effort and in discouraging misconduct (Ouchi Citation1979; Rasmussen Citation2019). Whether and how project-level culture can ‘work’ in CS is a particularly interesting question given that projects such as Galaxy Zoo and Polymath operate for many years, but most contributors participate for only very short amounts of time (Sauermann and Franzoni Citation2015; Harrison and Carroll Citation1991).

Especially if the range of rewards can be expanded, future work should examine how the effectiveness of different reward mechanisms depends on the particular contributions that are requested from the crowd (dimensions 1–4 in ). Relatedly, we need to understand better how crowd members’ intrinsic rewards are shaped by task division and task allocation (see prior sections), and how new technologies can be employed to make CS projects more engaging and rewarding (Trouille, Lintott, and Fortson Citation2019; Hamari, Koivisto, and Sarsa Citation2014). However, our discussion in Section 4 suggests that machines may not only enhance crowd motivation but may also make it less important: Once the fixed costs of development have been incurred, machines often need little additional ‘reward’, providing an attractive outside option for lead investigators who find it difficult or costly to attract and retain crowd contributors (Hamid and Bonnie Citation2014). Thus, studying rewards for the crowd is not only interesting per se, but also has important implications for task allocation and the potential substitution between crowds and machines.

Finally, future research is needed on the rewards for lead investigators (Geoghegan et al. Citation2016). The question of motivation may be straightforward for citizen leaders who start projects addressing concrete local problems (e.g. CurieuzeNeuzen) or for professional scientists who can tap into the crowd to achieve vastly superior scientific performance (e.g. Galaxy Zoo). To understand the potential of CS beyond these more obvious cases, however, research is needed on the rewards that motivate a broader range of (potential) lead investigators. Among others, we need to understand how professional scientists assess the potential benefits (and costs) of using CS practices and how ‘accurate’ their estimates are. Research could also explore to what extent scientists value non-scientific in addition to scientific outcomes, and how such preferences differ across individuals and fields (Cohen, Sauermann, and Stephan Citation2020). Finally, it would be interesting to study how lead investigators’ different motivations predict which research activities they allocate to the crowd (dimension 1 in our framework), how much they involve the crowd in decision making (dimension 4), and whether they think of crowds and machines as complements or substitutes (Section 4).

5.4. Provision of information

The final organisational challenge is to supply contributors with the information they need to perform their tasks and coordinate with others. In traditional research, information is typically shared in team meetings or through individual interactions between the lead investigators and other team members (Owen-Smith Citation2001). Many CS projects also rely on meetings and physical co-location (e.g. CurieuzeNeuzen) as well as online discussion tools to share information (e.g. Polymath). Rather than providing information, however, other projects reduce the need for information by breaking activities into simple micro-tasks that require little information to perform, and by modularising tasks such that less coordination is needed between crowd members (e.g. Galaxy Zoo). Some projects also employ algorithms to coordinate crowd contributors without the need to actually share the necessary information with the individuals themselves (Kamar, Hacker, and Horvitz Citation2012; Trouille, Lintott, and Fortson Citation2019).

Existing research has studied the provision of information in traditional organisational contexts as well as in more novel contexts such as open source software development and distributed work (Dahlander and O’Mahony Citation2010; Shah Citation2006; Majchrzak, Malhotra, and Zaggl Citation2021; Okhuysen and Bechky Citation2009). Many of the insights from this work may generalise to CS as well. Nevertheless, information provision deserves more attention specifically in CS projects because contributors – especially non-professional citizens – may have a significantly larger information ‘deficit’ than the actors investigated in prior research. For example, while a distributed team of programmers working on open source software can rely on a shared understanding of how programming works, it will take more information to coordinate between a professional physicist and citizen scientists without training in physics. Especially given the short-term involvement of many contributors, this greater need for information may lead CS projects to primarily rely on modularisation as well as algorithms and project infrastructure, rather than trying to transfer information through training or direct communications. Future research could study such issues by relating the coordination mechanisms employed by projects to the levels of crowd involvement in research activities and decision making (dimensions 1 and 4), but also to the characteristics of the crowd. Similarly, the overlap in the activities performed by lead investigators and crowd members (visible in ) may shape coordination needs within and across groups of actors, and may be correlated with the use of different coordination mechanisms. Again, we suspect that the solutions that lead investigators employ to address this fourth organisational challenge depend heavily on the degree to which they pursue productivity versus democratisation goals.

summarises opportunities for future research on the four organisational aspects, while also highlighting important interdependencies. We believe that progress on this research agenda will help scholars better understand how Crowd and Citizen Science projects are currently organised but may also yield valuable recommendations for improving project design.

6. Summary and conclusion

Science is becoming increasingly open and collaborative (Beck et al. Citation2021). Although openness with respect to research outputs and data is now well established in many fields, an even more radical development is the opening of traditional organisational boundaries by involving crowds, including citizens who are not professional scientists. In this paper, we integrate and expand upon two related streams of research: Crowd Science and Citizen Science. Our discussion of differences and similarities (Section 2) concluded that the two streams study strongly overlapping sets of projects but do so focusing on different outcomes (related to ‘productivity’ vs. ‘democratisation’) and different defining mechanisms (e.g. self-selection in response to an open call vs. involvement of non-professionals). Although management and innovation scholars tend to follow the Crowd Science tradition, the two streams are complementary and can jointly advance our understanding of an important development in science.

In Section 3, we proposed a multi-dimensional framework that improves upon prior taxonomies of CS by providing a conceptual and visual space to flexibly characterise the different contributions made by the crowd. In Section 4, we additionally recognised the important role of technology, which is evolving from supporting infrastructure to an actor that takes over distinct roles in the research process. Our framework allows analysts to create comprehensive ‘profiles’ of the contributions that lead investigators, crowds, and machines make to particular projects. These profiles can serve both descriptive and analytical purposes and can be valuable for CS organisers as well as funding agencies seeking to assess the level and nature of crowd involvement. By highlighting empty cells in the profiles of current CS projects (see ), the framework may also reveal opportunities for a deeper integration of crowds into the research process.

The involvement of crowds in scientific research can yield significant benefits in terms of both productivity and democratisation, but it also poses important organisational challenges. Scholars with a background in management, economics, and innovation studies are well equipped to study these challenges and develop recommendations for lead investigators and policy makers. To stimulate such research, we conceptualised CS as a ‘new form of organizing’ (Puranam, Alexy, and Reitzig Citation2014) and derived pressing research questions related to task division, task allocation, provision of rewards, and provision of information (Section 5). This discussion can also be useful for CS practitioners and scholars in other fields by highlighting fundamental organisational issues that have been less explicit in prior work, and by linking to a rich body of research that can help understand and leverage organisational design parameters.

Although our focus has been on Crowd and Citizen Science as the phenomenon of interest, CS can also serve as an intriguing research context to examine a broad range of more general phenomena and research questions. We conclude by highlighting just a few of these opportunities. First, many CS projects are at the forefront of using digital technologies as enabling infrastructure but also to complement or replace human lead investigators and crowds (see Section 4). As such, CS may enable scholars to study the challenges and opportunities arising from digital transformation, including aspects such as virtual collaboration, gamification, and hybrid intelligence (Martins, Gilson, and Maynard Citation2004; Kittur et al. Citation2013; Hamari, Koivisto, and Sarsa Citation2014). Second, CS projects involve crowds of varying sizes and compositions, use crowds for a broad range of activities, and use many different mechanisms to organise distributed work (see ). Studying this heterogeneity may enable research in crowdsourcing to better understand the contingency factors involved in working with crowds, and to explore more extensive types of crowd involvement such as co-creation (Afuah Citation2018; Boudreau and Lakhani Citation2013; Poetz and Schreier Citation2012). Crowdsourcing scholars may also find it stimulating to study a context where crowds are not paid, raising a host of new challenges related to self-selection, motivation for sustained engagement, and goal (mis-)alignment. Third, although CS provides many benefits to participants and enables new types of research, some projects also raise concerns regarding the potential replacement of paid workers by unpaid crowds as well as the ethical treatment of contributors who volunteer to help create significant value (Hamid and Bonnie Citation2014). As such, scholars studying the gig economy and online labour markets may find CS interesting because it pushes the limits with respect to parameters such as the level of pay, the size of crowds, but also the scope and geographic distribution of projects and contributors (Kittur et al. Citation2013; Wood et al. Citation2019; Chen et al. Citation2019). Last but not least, studying CS may yield important insights into more general developments in science and innovation. For example, there is a clear trend towards larger teams and increasing specialisation in virtually all fields (Wuchty, Jones, and Uzzi Citation2007; Singh and Fleming Citation2010), raising new challenges regarding task allocation, coordination, and the sharing of rewards (Haeussler and Sauermann Citation2020; Teodoridis, Bikard, and Vakili Citation2019). Relatedly, growing team size and resource requirements in science may increase entry barriers for new Principal Investigators and create tensions between efficiency and diversity in research (Freeman et al. Citation2001; National Academies Citation2018). In many respects, large CS projects and global platforms such as Zooniverse, eBird, and ScienceAtHome represent extremes of these developments and may provide fruitful research settings for scholars interested in the future of the science and innovation enterprise.

An overarching appeal of Crowd and Citizen Science as a research setting is that projects are unusually open and transparent, allowing us to look deeply into their internal organisation and to measure typically unobserved processes and outcomes. The best way to start might be to just join one of the many projects inviting contributions in areas such as astronomy, biology, medicine, and even the social sciences.Footnote23

Acknowledgments

We thank Timothy Gowers (Polymath), Chris Lintott (Zooniverse), Filip Meysman (CurieuzeNeuzen), and Marc Santolini (OpenCovid19) for sharing insights on their projects and commenting on our article. We also thank Robin Brehm, Chris Hesselbein, Marisa Ponti, Julia Suess-Reyes, and Robbie Ward for extremely helpful feedback. We are grateful for funding from ESMT Berlin as well as the Austrian National Foundation for Research, Technology and Development, grant for Open Innovation in Science. All remaining errors are our own.

Notes

1 https:/www.zooniverse.org/about/publications; accessed 17 April 2021.

2 https://app.jogl.io/program/opencovid19; accessed 17 April 2021.

3 The literature struggles to clearly distinguish ‘professional scientists’ and ‘citizens’, e.g. because many Citizen Science participants have scientific training, including PhDs (Raddick et al. Citation2013). Some observers emphasise that such participants contribute outside of their roles as employees of scientific institutions, i.e. as private citizens (Strasser et al. Citation2019).