?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

In this article, an algorithm guaranteeing asymptotic stability for parametric model order reduction by matrix interpolation is proposed for the general class of high-dimensional linear time-invariant systems. In the first step, the system matrices of the high-dimensional parameter-dependent system are computed for a set of parameter vectors. The local high-order systems are reduced by a projection-based reduction method and stabilized, if necessary. Secondly, the low-order systems are transformed into a consistent set of generalized coordinates. Thirdly, a new procedure using semidefinite programming is applied to the low-order systems, converting them into strictly dissipative form. Finally, an asymptotically stable reduced order model can be calculated for any new parameter vector of interest by interpolating the system matrices of the local low-order models. We show that this approach works without any limiting conditions concerning the structure of the large-scale model and is suitable for real-time applications. The method is illustrated by two numerical examples.

1. Introduction

Accurate modelling of dynamical systems can lead to large-scale systems of ordinary differential equations. If these systems are used for the purpose of optimization, simulation or control, even powerful computer systems reach their limitations, especially concerning execution time and available memory. As a remedy, methods of model order reduction (MOR) have been developed which approximate the input–output behaviour of the original system by a low-order one [Citation1]. In addition, properties of the high-order system like stability, passivity or the Port-Hamiltonian structure should be preserved, see, e.g., [Citation2,Citation3]. For many engineering applications, the high-order system additionally depends on parameters, such as material parameters. For this case, methods of parametric MOR (pMOR) have been developed [Citation4]. They construct a low-order system that approximates the large-scale one for the entire parameter domain. Hence, one can obtain a reduced system for every parameter value of the domain without the need to repeat the reduction procedure.

Besides other pMOR methods, interpolation-based methods were suggested in the literature. One approach is the interpolation of reduced system matrices [Citation5–Citation10], which can handle arbitrary parametric dependencies and has a low computational effort. However, it does not necessarily lead to asymptotically stable interpolated systems. Hence, some stability-preserving approaches were proposed for matrix interpolation, most of them being restricted to systems showing certain conditions concerning the structure of the large-scale model. In [Citation5], stable interpolation on matrix manifolds is proposed for systems of ordinary differential equations in second-order form. Stability-preserving matrix interpolation for dissipative systems is suggested in [Citation11], for Port-Hamiltonian systems in [Citation12] and for passive systems in [Citation13,Citation14]. Concerning the general class of linear time-invariant (LTI) systems, a technique is suggested in [Citation15,Citation16] for passivity-preserving parameterized MOR which exploits the solution of high-order linear matrix inequalities (LMIs). Some stability-preserving methods are proposed in [Citation11,Citation17] for the general class of LTI systems. These approaches have in common that they modify one of the two reduced order bases (ROBs) of the local systems to enforce stability for the interpolated systems. The approach from [Citation11] requires the solution of high-dimensional generalized Lyapunov equations, which is computationally very expensive. The method from [Citation17] determines an optimal stabilizing solution with regard to a quality function that is found by solving high-order optimization problems. For reducing the computational effort, the authors also propose low-order but suboptimal optimization problems.

In the following, a procedure for stability-preserving pMOR for the general class of parameter-dependent, LTI systems is presented, where all steps of the (stability-preserving) matrix interpolation are exclusively performed in low-dimensional vectors and matrices. Preliminary studies for the proposed method were published in [Citation18], and a detailed description can also be found in the dissertation [Citation7]. The method starts with optimization steps similar to [Citation17]. However, it searches for the modified ROBs in the available low-order subspaces, and hence, the optimization steps can be done very efficiently.

A short overview of MOR and pMOR by matrix interpolation is given in Section 2. The new stability-preserving method is presented in Section 3 and is compared to an existing approach in Section 4. Numerical results are presented in Section 5.

2. Preliminaries

2.1. Parameter-dependent LTI systems

In this article,Footnote1 we consider a parameter-dependent, LTI and high-dimensional system of order n. Its representation in the time domain is

where ,

,

and

are the system matrices which depend on the parameter vector

with domain

. The vectors

,

and

denote the inputs, outputs and states of the system at time

, respectively. The initial value of the state vector is

. Performing a Laplace transformation on system (Equation (1)) and setting

, we obtain the representation of the system in the frequency domain.

where s ∈ μ is the complex frequency. When we refer to the system independent from its representation, it is denoted by G(p) in the following.

2.2. Asymptotic stability

Stability is an important property of system (Equation (1)). There exist various stability definitions [Citation1,Citation19,Citation20]. In this article, the following concept of stability is examined.

Definition 2.1: The system (Equation (1)) is said to be asymptotically stable if one finds for all initial conditions

.

The following theorem gives necessary and sufficient conditions.

Theorem 2.2 ([Citation21]): The system (Equation (1)) with non-singular is asymptotically stable if and only if

• S1: there exists a Lyapunov function, i.e. a function with

and

for all

.

• S2: the eigenvalues of the pencil lie in the open left half of the complex plane.

A special class of systems is of particular interest in this article.

Definition 2.3 ([Citation22]): The system (Equation (1)) is said to be strictly dissipative if it satisfies and

.

A strictly dissipative system possesses the Lyapunov function and hence is asymptotically stable [Citation23]. Strictly dissipative systems have the following interesting property which will be used in Section 3.3 for proposing a stability-preserving interpolation method.

Corollary 2.4 ([Citation11,Citation17]): Given the strictly dissipative systems with

and

for

, the system obtained by the superposition of the system matrices

and

with weighting functions

and at least one

possesses the Lyapunov function

and hence is asymptotically stable according to criterion S1 from Theorem 2.2.

2.3. Projection-based MOR

Imagine that we compute the parametric system for a set of grid points

with

. We denote

by

and similarly for the other matrices and the resulting non-parametric system is denoted by

with

and

. Then, the goal of MOR is to find a low-dimensional system

of order

whose output is a good approximation of the output of the original system

, i.e.,

when the same input is fed to the systems. For this, projection matrices

and

, which are referred to as ROBs, are calculated. The case

is called one-sided reduction and the case

is referred to as two-sided reduction. The ROBs span the right

and left subspace

, respectively. For the calculation of the ROBs, we can apply projection-based reduction methods such as Krylov subspace methods, truncated balanced realization (TBR) or proper orthogonal decomposition, see, e.g., [Citation1] and references therein. Using the approximation

with the reduced state vector

and enforcing the Petrov–Galerkin condition

leads to the reduced order model

where the reduced system matrices are given by

2.4. Parametric MOR by matrix interpolation

Methods of pMOR have been developed to approximate the parameter-dependent, high-order system while preserving the parameter-dependency in the reduced-order model. The method of interest in this article interpolates precomputed reduced system matrices. It was proposed in [Citation5,Citation6,Citation9,Citation10] and a unifying framework was suggested in [Citation7,Citation8]. We sum up four preprocessing steps (offline phase) and the interpolation step (online phase).

The domain μ is sampled for a set of vectors

with

. Then, the system

is computed for the vectors in the set

which results in a set of high-order systems

.

Each system in the set μ is reduced individually to order

, where any projection-based reduction method can be applied according to Section 2.3 resulting in a set of low-order systems

.

The reduced systems of the set

have different bases

. These right ROBs need to be adjusted to the basis of the reference subspace

. An approach that captures the most important directions of all subspaces and has a low computational effort uses the economy version of the SVD

(6)

(6)

The right ROBs are transformed for

with matrices

to

so that a quality function is minimized. A possible quality function is the modal assurance criterion (MAC) between the local basis

and the reference basis

which can be written according to [Citation7,Citation24]

(7)

(7)

The solution with the minimum norm value,

, is

. A second possible quality function is the distance (DS) between the local basis

and the reference basis

measured in the Frobenius norm [Citation5,Citation7,Citation24]

(8)

(8)

The solution with the minimum value of the cost function is

using the pseudoinverse

.

In analogy, the bases

need to be adjusted to the basis of the reference subspace

, which can, e.g., be calculated according to Equation (6) by replacing the respective values. The left ROBs are transformed for

with matrices

to

so that a quality function is minimized. For the MAC approach, we obtain the quality function

(9)

(9)

with the optimal solution . For the DS approach, we obtain

with the optimal solution .

The steps discussed so far are performed in the offline phase and have to be done only once. They result in a set of reduced-order systems with

Note that these systems differ from systems (Equation (4)) only in their state-space representation, but not in their eigenvalues or input–output behaviour.

In the online phase, i.e. when a reduced order model for the new parameter vector is supposed to be calculated, this is simply done by the interpolation of the precomputed matrices with weighting functions

. Then, the new reduced system for the parameter vector

is obtained by

with

The system matrices can also be interpolated element-wise and on matrix manifolds.

2.5. Problem formulation

The matrix interpolation (Equation (13)) does not always lead to an asymptotically stable system (Equation (12)), even if all reduced systems in the set are asymptotically stable. In other words, the reduction (Equations (12) and (13)) does not guarantee asymptotic stability of the reduced model.

Example: Consider the two asymptotically stable systems and

with matrices

For example, the interpolated matrix

has the spectrum {–4.5, 0.5} and the resulting system is therefore unstable according to criterion S2 of Theorem 2.2.

An idea to solve this problem is to minimally change the left ROBs such that the interpolated reduced system is guaranteed to be asymptotically stable.

3. Stability-preserving interpolation of reduced system matrices

3.1. Calculation of a set of asymptotically stable, reduced systems

The proposed method starts with a set of high-order systems . These systems are reduced individually to the order

according to Equation (4), and we assume that the reduced systems

are asymptotically stable. In order to ensure this stability, two general approaches are possible: (i) We can use stability-preserving reduction methods such as TBR or extended versions of Krylov subspace methods. (For the latter, the stability-preserving, adaptive rational Krylov (SPARK) from [Citation25] guarantees stability and the iterative rational Krylov algorithm (IRKA) from [Citation26] delivers asymptotically stable systems as long as it converges.) (ii) For MOR methods that do not implicitly guarantee stability, one can easily check if the reduced systems are asymptotically stable by examining their eigenvalues according to criterion S2 from Theorem 2.2; if a system is then found to be unstable, it can be stabilized via post-processing, e.g. by modifying the left or right ROBs [Citation27,Citation28].

3.2. Adjustment of the right ROBs

The reduced systems of the set have different bases

and it was shown in Section 2.4 that they need to be adjusted to the basis of the reference subspace for accuracy reasons. For this, the right ROBs are transformed for

to

with

using the MAC approach or with

using the DS approach as described in Section 2.4.

3.3. Stabilizing procedure using semidefinite programming

In this section, a stabilization method is described for the interpolation of reduced matrices. The demands that we impose on the method are – besides stability preservation – accuracy and the operation on low-order systems. The main idea is the following: starting from the transformed bases for

with

using the MAC approach or with

using the DS approach (cf. Section 2.4), we minimally modify the new left ROBs

until stability is guaranteed (again note that the eigenvalues and the input–output behaviour of the local models remain unchanged).

3.3.1. Feasibility problem

To begin with, an abstract optimization problem is formulated in which a convex objective function is minimized subject to the constraint that the interpolated system

is asymptotically stable for the domain

. According to criterion S1 from Theorem 2.2, this constraint is equivalent to the existence of a Lyapunov function

. Hence, we obtain the optimization problem

According to Corollary 2.4, the existence of a Lyapunov function is guaranteed for the superposition of strictly dissipative systems when non-negative weighting functions are applied. Hence, the optimization problem (Equation (16)) is rewritten as problems, one for each grid point [Citation17]. We introduce for each

the basis

as optimization variable and we aim to calculate this variable so that the system

is strictly dissipative and the objective function

reaches its minimum value:

Unfortunately, the matrices are of high-order, and hence, the optimization problems are intractable. As a remedy, the new bases

are searched in the available subspaces

. For this, the matrices

are introduced as new variables, which provide the new bases

and the optimization problems

which only contain low-order matrices. For solving these problems efficiently using interior point methods such as described, e.g., in [Citation29], they are transformed into semidefinite programs. These kinds of optimization problems deal with symmetric positive or negative (semi)definite matrices as variables. To get to this form, the change of variables

is introduced, where the new variables are symmetric matrices [Citation21]. Then, Equation (21) is inserted into inequality (Equation (20)), which gives the necessary constraint on the definiteness of the optimization variables

For Equation (19) it follows

Finally, the new optimization problems are

For assessing the solvability of problems (Equation (24)), the theory of convex optimization tells us that only the constraints have to be examined and the quality function can be set to 0 which is referred to as feasibility problem [Citation30]. In this case, the constraints are LMIs which are equivalent to the generalized Lyapunov inequality [Citation31]. This inequality and accordingly the optimization problem (Equation (24)) admit a solution because the eigenvalues of the pencil lie in the open left half of the complex plane as a result of Section 3.1.

3.3.2. Objective functions

In Section 3.3.1, the feasibility problem was introduced which guarantees stable matrix interpolation for all solutions satisfying the constraints. In this section, amongst these solutions, the optimal stabilizing one is determined with regard to an objective function. The objective function, which needs to be minimized, is chosen in such a way that we obtain an accurate matrix interpolation. For this, we can use the objective functions described in Equation (4) which adjust (for each ) the basis

to the basis of the reference subspace

by transforming it to

with

. For the MAC approach, we insert the new choice for

from Equation (21) into the objective function (Equation (9)) and we obtain

The matrices and

can be precomputed, and hence, the objective function that only comprises low-order matrices is obtained:

This objective function is convex as the composition of the Frobenius norm, which is a convex function, with the affine map convex [Citation30]. For the DS approach, we insert the new choice for

from Equation (21) into the objective function (Equation (10)):

Unfortunately, this objective function is of large order since the matrices have the size

. As a remedy, we take the square of the Frobenius norm, rewrite it using the trace and obtain

The low-order matrices ,

and

can again be precomputed. When the third, constant term is left out, the final low-order objective function is obtained:

The first term is convex because the composition of the square which is a convex, non-decreasing function for non-negative arguments and the convex Frobenius norm is convex. The entire quality function is convex as the sum of the first convex function and the trace, which is a linear function, is also convex [Citation30].

3.3.3. Algorithm

The proposed method modifies the left ROBs for an accurate interpolation while making the local reduced models’ representations strictly dissipative and thereby guaranteeing stability of any interpolated reduced model. For this, the objective function (Equation (26) or (29)) is minimized for every grid point subject to the constraint (Equations (22) and (23)). As the objective functions are convex and the constraints are linear, a convex optimization problem is obtained which is referred to as Stability Algorithm Based on Linear Matrix Inequalities (STABLE) and which is given in Algorithm 1.

Algorithm 1 STABLE

input: Matrices ,

for

, reference basis

output: Matrices for

1: for to

do

2: Compute ,

,

3: Solve the convex optimization problem (MAC or DS approach):

4: Compute

5: end for

As STABLE is a low-order optimization problem, it can easily be implemented and solved, e.g. in MATLAB, using convex optimization solver packages that rely on semidefinite programming such as CVX [Citation32,Citation33]. These packages are based on algorithms which solve an optimization problem iteratively. According to, e.g., [Citation29,Citation31,Citation34–Citation36], the complexity is at most per iteration and in practice the solvers perform much better. The required number of iterations can be considered in practice almost independent of the problem size, ranging between 5 and 50, as long as the numerics is kept well-conditioned. Hence, the solution of the semidefinite programs is currently restricted to sizes for the reduced order

up to some hundreds.

Then, STABLE delivers for each the transformation matrix

which results in the strictly dissipative reduced system

with

and

. This ensures asymptotic stability of any interpolated reduced model, according to Corollary 2.4. The price paid is a slight increase of the minimum value of the objective function since the left ROBs are distorted as little as possible compared to the method from Section 2.4. For the case that the feasibility problem (Equation (24)) only allows a very limited solution set, one might obtain a more distinct distortion of the left ROBs, which can lead to noticeable interpolation errors.

Remark 3.1 In a dual way, we could adjust the left ROBs for accuracy reasons such as described in Section 2.4 and solve an optimization problem in order to modify the right ROBs for an accurate interpolation while preserving the stability, see dissertation [Citation7] for detailed information.

3.4. Online interpolation process

In the online phase, the reduced order model for a new parameter

is computed by interpolation of the precomputed matrices as in Equation (13). Following Corollary 2.4, the interpolation process needs to take place with non-negative weighting functions

and a positive weighting function

for at least one

. One example for such weighting functions are linear basis functions. Then, the interpolated system

possesses the Lyapunov function

and hence is asymptotically stable for the entire domain

.

4. Comparison to an approach in the literature

A method is proposed in [Citation11] which guarantees stable interpolation of reduced system matrices for the special case of strictly dissipative high-order systems. It applies for each the one-sided reduction

, adjusts the right ROB with

and chooses for the left ROB the matrices

.

Proposition 4.1: STABLE comprises the method from [Citation11] as a special case for strictly dissipative systems and extends it to the general class of LTI systems.

Proof. For high-order system, with

and

perform a one-sided reduction method with

, which leads to a reduced system fulfilling

and

[Citation23]. For the adjustment of the right ROBs, just like in [Citation11], take the matrix

which corresponds to the MAC approach (Equation (27)). For adjusting the left ROBs, we consider the objective function (Equation (25))

which reaches its minimum value, , for

. The same holds true for the objective function of the DS approach (Equation (27)). The optimal solution belongs to the feasible set because it fulfils the first constraint (Equation (22)) owing to

and the second constraint (Equation (23)) because of

Hence, STABLE delivers the solution which is the result from [Citation11].

5. Numerical examples

5.1. Motivating example

Consider a high-order system with

. The domain

with

is sampled for two values

. The resulting systems

with

are reduced to order

using projection matrices

and

, where the columns are an orthonormal basis. This is supposed to result according to Equation (4) in the asymptotically stable systems

with

and

from Equation (14).

5.1.1. Matrix interpolation without using STABLE

Firstly, the interpolation procedure will be demonstrated without using STABLE. The respective values are labelled with without STABLE (WS). The transformation matrices are found to be according to Equations (7) or (8) and (9) or (10) with

. The adjusted ROBs are

and the transformed systems are

according to Equation (11). The interpolated system is

with

, where

are linear weighting functions. The maximum value of the real part of the eigenvalues

of

is positive for

and hence unstable systems are obtained.

5.1.2. Matrix interpolation using STABLE

Secondly, the proposed stability-preserving method is demonstrated. For adjusting the right ROBs, we obtain the transformation matrices according to objective functions (Equation (7) or (8)), which is equivalent to the approach without using STABLE. For adjusting the left ROBs, we use STABLE which is demonstrated graphically in the following. To begin with, we introduce the variables of the algorithm.

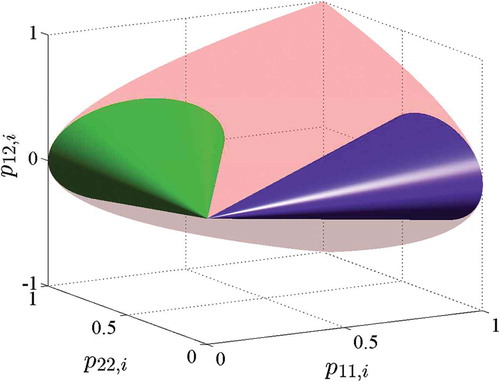

Then, the first constraint (Equation (22)) is described for the two systems by the convex cones . The intersection of the convex cone of positive definite matrices with the linear space from the second constraint (Equation (23)) provides for the two systems the convex cones

The convex cones

, which are non-empty since the systems

are asymptotically stable [Citation31], comprise all stability-preserving solutions

.

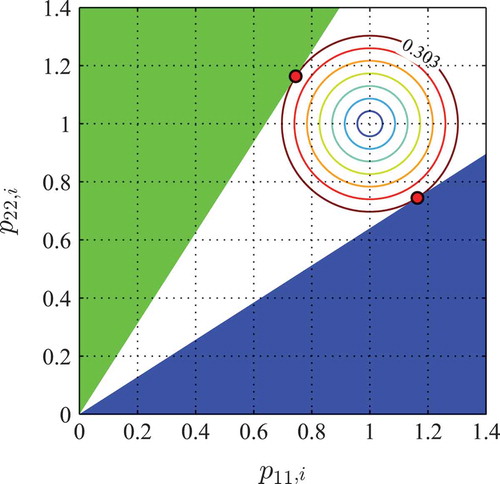

The convex cones are illustrated in and the axes show the entries of the matrices

. Consider the objective function (Equations (26) and (28))

Then, STABLE minimizes the objective function so that the optimal solution

is an element of the respective convex cone

:

For solving the convex program STABLE, the package CVX is used [Citation32,Citation33]. The results are obtained using solver SeDuMi [Citation37]. Operating on a 2.20-GHz processor, solving the semidefinite programs lasted s on average for each of the two grid points. A graphical interpretation of the optimization problem is given in . The axes show the entries

of the matrices

for

. The objective functions

from Equation (31) describe circles in the plane

with midpoint

and radius

. The convex cones

are shown as triangles which are the intersection of the convex cones from with the plane

. The algorithm STABLE searches for the solutions

with the minimum value of the objective functions

so that the solutions lie in the convex cones

. This is the case for the solutions (Equation (32)) which are the points where the circle with radius

touches the cones. This results in the transformation matrices

which lead to the reduced systems

. Then, the interpolated reduced system is

with

and

with linear weighting functions

. The interpolated system possesses the Lyapunov function

and hence is asymptotically stable for the entire domain

. The price paid is an increase of the minimum value of the objective functions

since the left ROBs are distorted as little as possible to

(see ).

Table 1. Comparison of the adjusted ROBs and of the respective minimum values of the objective functions for matrix interpolation with and without STABLE.

5.2. Anemometer

The anemometer is a flow sensing device [Citation38,Citation39]. It consists of a heater and two temperature sensors that are located before and after the heater. If there is no flow, the heat dissipates symmetrically into the fluid. This symmetry is disturbed if a flow is applied to the fluid, which leads to convection of the temperature field. Then, the fluid velocity can be determined from the difference between the temperatures measured with the sensors. The governing equation is the convection-diffusion partial differential equation

where denotes the mass density,

is the specific heat,

is the temperature and

is the heat flow into the system caused by the heater. The thermal conductivity varies in

and the fluid velocity in

. Finite element discretization of the convection-diffusion Equation (33), with zero Dirichlet boundary conditions, leads to the parameter-dependent state-space system of order

where is the state vector, containing the temperatures at the discretization nodes. The input is the heat source

and the output is the temperature difference between the sensors. The parameter vector is

and the parameter domain is normalized to

. For the sake of simplicity, the domain is sampled for the set of points of an equidistant grid

, which result in the set of

high-order systems

. The local systems are reduced to order

using IRKA, which result in a set of asymptotically stable reduced systems

according to Equation (4). For testing the systems

, we compute the error in

-norm at the points of the grid

using the formula

where is the largest singular value and

. For approximating this error, we choose

equally spaced frequencies

in the frequency range of interest

and we obtain the error

with

. We calculate the reference basis

using the non-weighted SVD approach (Equation (6)) and the reference basis

is determined in the dual way.

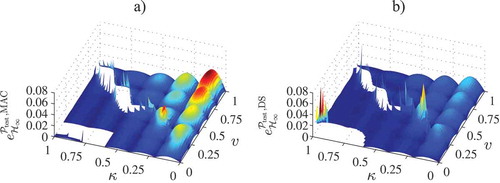

5.2.1. Matrix interpolation without using STABLE

Firstly, the interpolation procedure will be demonstrated without the stability-preserving method, which corresponds to the framework from Section 2.4. The respective values are labelled with WS. The transformation matrices for adjusting the right ROBs are calculated using the MAC or DS approach minimizing the objective function (Equation (7) or (8)) which results in or

for

. For adjusting the left ROBs, we obtain

or

using the MAC or DS approach minimizing the objective function (Equation (9) or (10)). The local systems are transformed according to Equation (11) and result in the systems

. The parametric reduced system

is obtained by interpolating the systems of the set

according to Equations (12) and (13) using bilinear weighting functions. For testing the system, we compute the error in

-norm at the points of a test grid

according to Equation (35). In , the error

is plotted for both quality functions. The reader can verify that there are regions in the domain – where the

-norm equals infinity – which exhibit instability of the interpolation procedure.

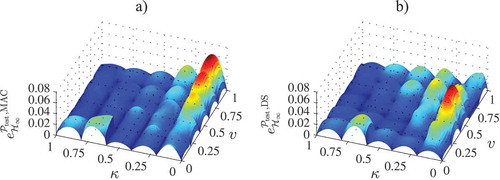

5.2.2. Matrix interpolation using STABLE

Secondly, the proposed stability-preserving method is applied. The transformation matrices for adjusting the right ROBs are for

for the MAC and DS approaches minimizing the objective function (Equation (7) or (8)). For adjusting the left ROBs, we calculate the transformation matrices

using STABLE with the MAC and DS approach. For specifying and solving the convex program STABLE, the package CVX is used [Citation32,Citation33]. The results are obtained using solver SeDuMi [Citation37] and verified with SDPT3 [Citation40]. Operating on a 2.20GHz processor, solving the semidefinite programs lasted

s on average for each of the

grid points. The local systems of the set

are transformed according to Equation (11) and result in the systems

. The parametric reduced system

is obtained by interpolating the systems of the set

according to Equations (12) and (13) using bilinear weighting functions. The error

according to Equation (35) of the reduced system

is shown in for the MAC approach and the DS approach. The MAC approach leads to the mean error

and the maximum error

. The DS approach has the mean error

and the maximum error

. The reader can verify that the proposed method preserves the stability of the interpolated system for the entire domain

. In addition, the method delivers nearly as accurate results as the method without stability preservation – compared for regions where the latter is asymptotically stable – since the left ROBs are distorted as little as possible.

6. Conclusions

In this article, a method for pMOR by matrix interpolation with stability preservation is presented. This approach makes neither demands on the structure of the original system nor needs post-processing during the online phase to achieve stability and therefore is suitable for real-time applications. The procedure is based on low-order convex optimization problems – so-called semidefinite programs – which can easily be implemented and solved.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes

1. The matrix 0n×n of size n × n contains only zeros. The matrix In is the identity matrix of size n. Symmetric matrices of size n are denoted by . Symmetric positive definite matrices of size n are denoted by

or

. Symmetric negative definite matrices of size n are denoted by

.

References

- A.C. Antoulas, Approximation of Large-Scale Dynamical Systems, SIAM, Philadelphia, 2005.

- S. Grivet-Talocia, Black-box passive macromodeling in electronics: trends and open problems, in ECMI Newsletter, Number 56, European Consortium for Mathematics in Industry, Helsinki, 2014, pp. 40–43.

- S. Gugercin, R.V. Polyuga, C. Beattie, and A. Van Der Schaft, Structure-preserving tangential interpolation for model reduction of Port-Hamiltonian systems, Automatica 48 (9) (2012), pp. 1963–1974. doi:10.1016/j.automatica.2012.05.052

- P. Benner, S. Gugercin, and K. Willcox, A survey of projection-based model reduction methods for parametric dynamical systems, SIAM Rev. 57 (4) (2015), pp. 483–531. doi:10.1137/130932715

- D. Amsallem and C. Farhat, An online method for interpolating linear parametric reduced-order models, SIAM J. Sci. Comput. 33 (5) (2011), pp. 2169–2198. doi:10.1137/100813051

- J. Degroote, J. Vierendeels, and K. Willcox, Interpolation among reduced-order matrices to obtain parameterized models for design, optimization and probabilistic analysis, Int. J. Numer. Methods Fluids 63 (2) (2010), pp. 207–230.

- M. Geuss. A Black-box method for parametric model order reduction based on matrix interpolation with application to simulation and control, PhD thesis, submitted, TU München, 2015.

- M. Geuss, H. Panzer, and B. Lohmann, On parametric model order reduction by matrix interpolation, Proceedings of the European Control Conference, Zurich, Switzerland, 2013, pp. 3433–3438.

- B. Lohmann and R. Eid, Efficient order reduction of parametric and nonlinear models by superposition of locally reduced models, in Methoden und Anwendungen der Regelungstechnik. Erlangen-Münchener Workshops 2007 und 2008, B. Lohmann and G. Roppenecker, eds., Shaker-Verlag, Aachen, 2009, pp. 1–20.

- H. Panzer, J. Mohring, R. Eid, and B. Lohmann, Parametric model order reduction by matrix interpolation, at-Automatisierungstechnik 58 (8) (2010), pp. 475–484. doi:10.1524/auto.2010.0863

- R. Eid, R. Castañé-Selga, H. Panzer, T. Wolf, and B. Lohmann, Stability-preserving parametric model reduction by matrix interpolation, Math. Comput. Model. Dyn. Syst. 17 (4) (2011), pp. 319–335. doi:10.1080/13873954.2011.547671

- M. Giftthaler, T. Wolf, and H. Panzer, Parametric model order reduction of Port-Hamiltonian systems by matrix interpolation, at-Automatisierungstechnik 62 (9) (2014), pp. 619–628. doi:10.1515/auto-2013-1072

- O. Farle, S. Burgard, and R. Dyczij-Edlinger, Passivity preserving parametric model-order reduction for non-affine parameters, Math. Comput. Model. Dyn. Syst. 17 (3) (2011), pp. 279–294. doi:10.1080/13873954.2011.562901

- F. Ferranti, G. Antonini, T. Dhaene, and L. Knockaert, Guaranteed passive parameterized model order reduction of the Partial Element Equivalent Circuit (PEEC) method, IEEE Trans. Electromagn. Compat. 52 (4) (2010), pp. 974–984. doi:10.1109/TEMC.2010.2051949

- L. Knockaert, T. Dhaene, F. Ferranti, and D. De Zutter, Model order reduction with preservation of passivity, non-expansivity and markov moments, Syst. Control. Lett. 60 (1) (2011), pp. 53–61. doi:10.1016/j.sysconle.2010.10.006

- E.R. Samuel, F. Ferranti, L. Knockaert, and T. Dhaene, Passivity-preserving parameterized model order reduction using singular values and matrix interpolation, IEEE Trans. Compon. Packag. Manuf. Technol. 3 (6) (2013), pp. 1028–1037. doi:10.1109/TCPMT.2013.2248196

- B.N. Bond and L. Daniel, Stable reduced models for nonlinear descriptor systems through piecewise-linear approximation and projection, IEEE Trans. Computer-Aided Des. Integr. Circuits Syst. 28 (10) (2009), pp. 1467–1480. doi:10.1109/TCAD.2009.2030596

- M. Geuss, H. Panzer, T. Wolf, and B. Lohmann, Stability preservation for parametric model order reduction by matrix interpolation, Proceedings of the European Control Conference, Strasbourg, France, 2014, pp. 1098–1103.

- T. Kailath, Linear Systems, Prentice Hall, Englewood Cliffs, 1980.

- H.K. Khalil, Nonlinear Systems, 3rd ed., Prentice Hall, Upper Saddle River, NJ, 2002.

- T. Stykel, Analysis and numerical solution of generalized Lyapunov equations, PhD thesis, TU Berlin, 2002.

- H. Panzer, B. Kleinherne, and B. Lohmann, Analysis, Interpretation and generalization of a strictly dissipative state space formulation of second order systems, in Methoden und Anwendungen der Regelungstechnik. Erlangen-Münchener Workshops 2011 und 2012, B. Lohmann and G. Roppenecker, eds., Shaker-Verlag, Aachen, 2013, pp. 59–75.

- R. Castañé-Selga, B. Lohmann, and R. Eid, Stability preservation in projection-based model order reduction of large scale systems, Eur. J. Control 18 (2) (2012), pp. 122–132. doi:10.3166/ejc.18.122-132

- M. Geuss, H. Panzer, I. Clifford, and B. Lohmann, Parametric model order reduction using pseudoinverses for the matrix interpolation of differently sized reduced models, Proceedings of the 19th IFAC World Congress, Cape Town, South Africa, 2014, pp. 9468–9473.

- H. Panzer, S. Jaensch, T. Wolf, and B. Lohmann, A greedy rational Krylov method for μ2-pseudooptimal model order reduction with preservation of stability. Proceedings of the American Control Conference, Washington, DC, 2013, pp. 5512–5517.

- S. Gugercin, A.C. Antoulas, and C. Beattie, μ2 model reduction for large-scale linear dynamical systems, SIAM J. Matrix Anal. Appl. 30 (2) (2008), pp. 609–638. doi:10.1137/060666123

- D. Amsallem and C. Farhat, Stabilization of projection-based reduced-order models, Int. J. Numer. Methods Eng. 91 (4) (2012), pp. 358–377. doi:10.1002/nme.v91.4

- B.N. Bond and L. Daniel, Guaranteed stable projection-based model reduction for indefinite and unstable linear systems, Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, San Jose, CA, 2008, pp. 728–735.

- L. Vandenberghe and S. Boyd, Semidefinite programming, SIAM Rev. 38 (1) (1996), pp. 49–95. doi:10.1137/1038003

- S. Boyd and L. Vandenberghe, Convex Optimization, Cambridge University Press, New York, NY, 2004.

- S. Boyd, L. El Ghaoui, E. Feron, and V. Balakrishnan, Linear matrix inequalities in system and control theory, in Studies in Applied Mathematics, SIAM, Philadelphia, PA, 1994.

- M. Grant and S. Boyd, CVX: MATLAB software for disciplined convex programming, version 2.0, Aug, 2012. Available at http://cvxr.com/cvx.

- M. Grant and S. Boyd, Graph implementations for nonsmooth convex programs, in Recent Advances in Learning and Control, Lecture Notes in Control and Information Sciences, V. Blondel, S. Boyd, and H. Kimura, eds., Springer, London, 2008, pp. 95–110.

- Y. Nesterov and A. Nemirovskii, Interior-point polynomial algorithms in convex programming, in Studies in Applied Mathematics, SIAM, Philadelphia, PA, 1994.

- M.J. Todd, Semidefinite optimization, Acta Numer. 10 (2001), pp. 515–560. doi:10.1017/S0962492901000071

- L. Vandenberghe and S. Boyd, A polynomial-time algorithm for determining quadratic Lyapunov functions for nonlinear systems. Proceedings of the European Conference Circuit Theory Design, Davos, Switzerland, 1993, pp. 1065–1068.

- J.F. Sturm, Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones, Optim. Methods Softw. 11 (1999), pp. 625–653. doi:10.1080/10556789908805766

- MOR Wiki. Available at http://www.modelreduction.org.

- C. Moosmann, E.B. Rudnyi, A. Greiner, J.G. Korvink, and M. Hornung, Parameter preserving model order reduction of a flow meter. Proceedings of the NSTI Nanotech, 3, Anaheim, CA, 2005, pp. 684–687.

- R.H. Tütüncü, K.C. Toh, and M.J. Todd, Solving semidefinite-quadratic-linear programs using SDPT3, Math. Programming Ser. B 95 (2003), pp. 189–217. doi:10.1007/s10107-002-0347-5