ABSTRACT

At present, China’s power grid has entered a new stage characterised by ultra-high voltage, long-distance transmission, large capacity, large generating units, and hybrid AC/DC interconnection, and the generator excitation system has an important impact on the stability of the power system. As the core component of the excitation system, the exciter plays a vital role in its safe operation. To diagnose exciter faults reliably, this paper proposes an improved convolutional neural network (CNN) method for exciter fault diagnosis. To learn richer fault features, the method first builds a dilated convolutional neural network (DCNN), whose dilated convolutions expand the receptive field of the convolution kernels. It then adds a Pythagorean triple spatial pyramid pooling (PTSPP) layer to further enhance the feature information extracted from the samples. The collected excitation voltage and excitation current signals are converted into two-dimensional matrix samples for model training. The experimental results show that the proposed PTSPP-DCNN method achieves high classification accuracy in exciter fault diagnosis, and the comparison results show that its fault classification accuracy is higher than that of the other deep learning methods compared.

1. Introduction

At present, China’s power grid has entered a new stage of ultra-high voltage, long-distance transmission, large capacity, large units, and hybrid AC/DC interconnection. The operating state of the generator units determines whether the power grid can operate safely and stably (Tao, Hao, Huan, et al. 2021), and the generator excitation system has an important impact on power system stability. The widespread use of large-capacity, high-parameter units in production and operation (Rui and Bin 2020) places higher demands on the reliability of the excitation system. Because the exciter is the core component of the generator excitation system, condition evaluation and fault analysis of the exciter are necessary (Xudong, Chuanbao, Lai, et al. 2020; Zhang et al. 2015; Ting-Jun 2019).

Many studies have addressed fault diagnosis of excitation systems and related equipment. Jiao Ningfei et al. (Ningfei, Han, and Weiguo 2019) simplified the modelling of an aircraft generator exciter using a neural network. Changcheng et al. (2008) combined a genetic algorithm with an extension neural network to diagnose excitation system faults, and their experiments showed that this method outperforms the traditional neural network method. To classify inrush current accurately, Zhang et al. (2020) carried out a least-squares-based simulation analysis of transformer ground-fault inrush current. In subsequent research, Dong et al. (2021) used an improved fuzzy control strategy to suppress rotor-side overcurrent and improve the stability of the rotor side of a doubly-fed hydropower unit. Wang Fuquan and his team (Fuquan et al. 2020) proposed an excitation current vector control strategy without current feedback. Although these methods can detect excitation system faults, they do not achieve intelligent fault diagnosis.

In recent years, deep learning has been widely used in fault diagnosis because of its efficiency, flexibility, and generality (Shouqiang et al. 2020; Yang et al. 2021; Yong, Min, and Zhiyi 2021). Compared with traditional data-driven methods, convolutional networks extract feature information by convolving local regions of the input with learned kernels and weights. Yang Yanjie et al. (Yanjie, Jinjin, and Zhang 2021) applied a convolutional neural network to excitation units and successfully classified thyristor faults and three-phase bus current identification faults. In subsequent research, the same team proposed a fault classification method for double-bridge parallel excitation power units that combines CNN and LSTM (Yanjie et al. 2021), and their experimental and simulation results show high classification accuracy. However, the convolution and pooling layer structures in these CNN methods are relatively fixed and cannot provide accurate classification results for input data of different lengths. In addition, conventional convolution may lose feature details.

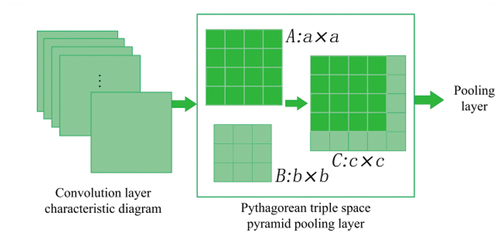

Spatial Pyramid Pooling (SPP) pools feature maps of different sizes into fixed-size feature vectors that can be fed to the fully connected layer, avoiding the information loss caused by cropping and warping the input. SPP has been used in action recognition, remote sensing hyperspectral image classification, and handwritten text image classification (Wu et al. 2021; Yuejuan and Xiaosan 2022; Yang et al. 2019). MA Liling (Liling et al. 2020) combined spatial pyramid pooling with a one-dimensional convolutional neural network to diagnose faults such as motor short circuits, which solved the problem of scale mismatch during diagnosis. LIU Xuting (Xuting et al. 2018) used spatial pyramid pooling to reduce the output dimension of the network and the computational cost of the classifier in chiller fault diagnosis. Although spatial pyramid pooling performs well in diagnosis, connecting the SPP layer directly to the fully connected layer does not make full use of the features SPP obtains and loses location information. It also increases the number of connections in the fully connected layer and therefore the number of training parameters. This paper therefore constructs a Pythagorean triple spatial pyramid pooling (PTSPP) layer that makes fuller use of the SPP features and addresses the loss of location information.
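A minimal NumPy sketch of the SPP idea, assuming max pooling and pyramid levels 4, 2 and 1. These levels and the helper names `adaptive_max_pool2d` and `spp_vector` are illustrative choices, not taken from the cited works. Whatever the spatial size of the input maps, the output length is channels × (16 + 4 + 1).

```python
import numpy as np


def _ceil_div(a, b):
    # Integer ceiling division for non-negative a and positive b
    return -(-a // b)


def adaptive_max_pool2d(x, n):
    """Max-pool a 2-D map x of any size into an n x n grid.

    Bin i covers rows floor(i*H/n) .. ceil((i+1)*H/n) - 1 (likewise for
    columns), so neighbouring bins may overlap when n does not divide H.
    """
    h, w = x.shape
    out = np.empty((n, n), dtype=x.dtype)
    for i in range(n):
        r0, r1 = (i * h) // n, _ceil_div((i + 1) * h, n)
        for j in range(n):
            c0, c1 = (j * w) // n, _ceil_div((j + 1) * w, n)
            out[i, j] = x[r0:r1, c0:c1].max()
    return out


def spp_vector(feature_maps, levels=(4, 2, 1)):
    """Concatenate the pooled bins of every level and channel.

    feature_maps has shape (channels, H, W); H and W may differ between
    inputs, but the result always has channels * sum(n * n) entries.
    """
    parts = [adaptive_max_pool2d(fm, n).ravel()
             for n in levels for fm in feature_maps]
    return np.concatenate(parts)
```

For example, a 3-channel map of size 13 × 17 and one of size 32 × 20 both yield a vector of length 63, so either can feed the same fully connected layer.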

Dilated convolution (Xin et al. 2020) is an effective deep learning technique. Without increasing the number of CNN parameters, it inserts holes into the original convolution kernel to enlarge the region the kernel covers. In dilated convolution, the effective size of the kernel depends on the dilation rate, which defines the spacing between the input values the kernel processes. ZHANG Tianqi (Tianqi, Haojun, and Shaopeng 2022) compared dilated and non-dilated convolution modules and showed that dilated convolution can selectively transmit features and capture more temporal correlation information; YU Tianwei (Tianwei et al. 2022) used different dilation rates to extract contextual features at multiple spatial scales, enhancing the feature extraction ability of the model and demonstrating the strong extraction ability of dilated convolution. This paper therefore uses dilated convolution to extract fault features from the exciter signals for diagnosis.

In summary, to diagnose exciter faults accurately, this paper improves both the pooling layer and the convolution layer of the CNN and proposes PTSPP-DCNN, an exciter fault diagnosis method that combines a Pythagorean triple spatial pyramid pooling layer with dilated convolution.

2. Basic theory

2.1. Convolutional neural network

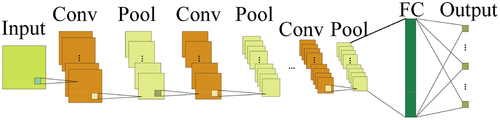

A convolutional neural network (CNN) is a variant of the multilayer neural network, usually built from alternating convolution and pooling layers. CNNs have three basic features: local receptive fields, shared weights, and pooling, which reduce the number of training parameters without losing expressive power. A CNN mainly consists of an input layer, convolution layers, pooling layers, a fully connected layer, and an output layer. The basic CNN framework is shown in the figure above.

In a convolution layer, each neuron is connected to a small region of the input, called its local receptive field. All neurons in the same feature map share the weights of the same kernel. The local region is convolved with the learned kernel, and a bias is added, to extract feature information. The convolution between the input feature maps and the convolution kernels can be expressed as:

$$x_j^{l} = f\left(\sum_{i} x_i^{l-1} * k_{ij}^{l} + b_j^{l}\right)$$

where $x_j^{l}$ is the $j$-th feature map of layer $l$, $x_i^{l-1}$ is the $i$-th input feature map of layer $l-1$, $k_{ij}^{l}$ is the convolution kernel connecting the $i$-th input feature map to the $j$-th output feature map, $b_j^{l}$ is the bias, and $*$ denotes two-dimensional convolution. $f(\cdot)$ is the ReLU activation function, defined as:

$$f(x) = \max(0, x)$$
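The convolution layer described above, $x_j^{l} = f\left(\sum_i x_i^{l-1} * k_{ij}^{l} + b_j^{l}\right)$ with ReLU as $f$, can be sketched in NumPy as follows. This is an illustrative, unoptimised implementation with hypothetical function names; like most deep learning frameworks, it computes cross-correlation (no kernel flip) for the operator $*$.

```python
import numpy as np


def relu(z):
    # f(x) = max(0, x), applied element-wise
    return np.maximum(0.0, z)


def conv2d_valid(x, k):
    # 'Valid' 2-D cross-correlation of map x with kernel k (no padding, stride 1)
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(x[r:r + kh, c:c + kw] * k)
    return out


def conv_layer(x_prev, kernels, biases):
    """x_j^l = f(sum_i x_i^{l-1} * k_ij^l + b_j^l).

    x_prev: (I, H, W) input maps of layer l-1
    kernels: (J, I, kh, kw); kernels[j, i] connects input map i to output map j
    biases: (J,)
    """
    n_in = x_prev.shape[0]
    return np.stack([
        relu(sum(conv2d_valid(x_prev[i], kernels[j, i]) for i in range(n_in))
             + biases[j])
        for j in range(kernels.shape[0])
    ])
```

With two all-ones 5 × 5 inputs and all-ones 3 × 3 kernels, every pre-activation equals 2 × 9 = 18 plus the bias, so a bias of −20 is clipped to 0 by ReLU.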

The pooling layer obtains representative feature information by summarising the feature responses of a neuron region in the previous layer. Its main roles are to reduce the number of network parameters and the resolution of the feature map. With max pooling, for the input feature map $x_i$ the output feature map $y_i$ is:

$$y_i(j) = \max_{(j-1)r < t \le jr} x_i(t)$$

where $r$ is the pooling size; each output is the maximum activation in its input region, and for two-dimensional feature maps the same operation is applied over $r \times r$ windows. After several stacked convolution and pooling layers, a fully connected layer follows, in which dropout is often used as a regularisation method to suppress overfitting. Finally, a classifier connects the fully connected layer to the output layer to complete the classification task.
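Non-overlapping max pooling with pool size $r$, where each output is the maximum activation of its input region, can be sketched in NumPy by reshaping the map into $r \times r$ windows. The function name is hypothetical, and dropping incomplete border windows is an assumption (it matches "valid" pooling in common frameworks).

```python
import numpy as np


def max_pool2d(x, r):
    """Non-overlapping r x r max pooling (stride r) of a 2-D feature map.

    Each output value is the maximum activation in its r x r input window;
    rows/columns that do not fill a complete window are discarded.
    """
    h, w = (x.shape[0] // r) * r, (x.shape[1] // r) * r
    windows = x[:h, :w].reshape(h // r, r, w // r, r)
    return windows.max(axis=(1, 3))
```

Applied three times with $r = 2$, a 64 × 64 map shrinks to 32 × 32, then 16 × 16, then 8 × 8.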

2.2. Dilated convolution

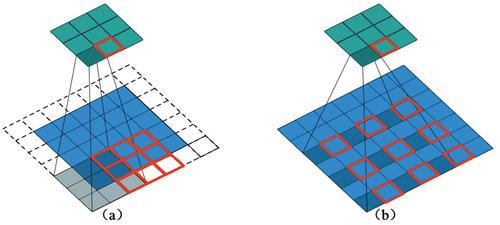

Dilated convolution is also called hole (atrous) convolution. It inserts holes into the original convolution kernel to enlarge the region the kernel covers without increasing the number of CNN parameters. The effective size of the dilated kernel depends on the dilation rate, which defines the spacing between the input values the kernel samples. Figure 2(a) and (b) show the ordinary CNN convolution (dilation rate 1) and the dilated convolution (dilation rate 2), respectively (the shaded portion of the image marks step 1 and the red box marks step 2).

Figure 2. Dilated convolution layers with different dilation rates: (a) dilation rate 1; (b) dilation rate 2.

The shaded points in Figure 2 are the sampling points on the image, and the sampled values are convolved with the convolution kernel. In dilated convolution, the output features depend on the dilation rate. Figure 2(a) shows the ordinary CNN convolution (dilation rate 1), and Figure 2(b) shows the dilated convolution (dilation rate 2). The figure shows that dilation increases the receptive field of a 3 × 3 kernel from 3 × 3 to 5 × 5. From the kernel's point of view, dilated convolution with rate $d$ inserts $d-1$ zeros between adjacent taps of a conventional kernel, so a $k \times k$ kernel covers $k + (k-1)(d-1)$ input positions along each axis; for $k = 3$ and $d = 2$ this gives 5. Because the receptive field grows without downsampling the input, the diversity of the extracted feature information increases, which is expected to improve both the generalisation of the CNN on fault data and its classification accuracy.
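A minimal NumPy sketch of dilated convolution, written by spreading the kernel taps $d$ apart with $d-1$ zeros in between; the function names are hypothetical. It reproduces the 3 × 3 to 5 × 5 growth in receptive field while keeping nine trainable weights.

```python
import numpy as np


def dilate_kernel(k, d):
    # Insert (d - 1) zeros between adjacent taps; d = 1 returns a copy of k
    kh, kw = k.shape
    out = np.zeros(((kh - 1) * d + 1, (kw - 1) * d + 1), dtype=k.dtype)
    out[::d, ::d] = k
    return out


def dilated_conv2d(x, k, d):
    """'Valid' dilated cross-correlation (stride 1) of map x with kernel k.

    The trainable taps are still those of k; only their spacing changes.
    """
    kd = dilate_kernel(k, d)
    kh, kw = kd.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(x[r:r + kh, c:c + kw] * kd)
    return out


k = np.ones((3, 3))
print(dilate_kernel(k, 2).shape)              # (5, 5): receptive field at rate 2
print(np.count_nonzero(dilate_kernel(k, 2)))  # 9: still nine weights
```

Building the zero-filled kernel explicitly is the clearest way to show the mechanism; practical frameworks instead index the input with stride $d$, which gives the same result without multiplying by zeros.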

2.3. Pythagorean triple spatial pyramid pooling layer

The structure of the PTSPP layer is illustrated in the corresponding figure. PTSPP first pools the same feature map at two pyramid levels of different sizes and then combines the two outputs into a new feature map, which can be passed to a convolution layer for another round of feature extraction. To make the two SPP outputs combine cleanly, the two pooling levels $a$ and $b$ ($a > b$) are chosen as the two smaller numbers of a Pythagorean triple, so that the $a \times a$ and $b \times b$ outputs together contain exactly enough values to fill the reconstructed $c \times c$ matrix $C$:

$$a^{2} + b^{2} = c^{2}, \qquad C \in \mathbb{R}^{c \times c}$$

For the levels (8, 6) used in Section 4.2, $8^2 + 6^2 = 100 = 10^2$, giving a 10 × 10 matrix.
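A hypothetical NumPy sketch of the PTSPP idea, assuming max pooling at both levels, the relation $a^2 + b^2 = c^2$, and that each channel's two pooled outputs are flattened and concatenated (the $a$-level first) before a row-major reshape into $c \times c$. The paper does not state the concatenation order or reshape convention, so these are assumptions, as are the names `adaptive_max_pool2d` and `ptspp`.

```python
import math

import numpy as np


def _ceil_div(a, b):
    # Integer ceiling division for non-negative a and positive b
    return -(-a // b)


def adaptive_max_pool2d(x, n):
    # Max-pool a 2-D map into an n x n grid using floor/ceil bin edges
    h, w = x.shape
    out = np.empty((n, n), dtype=x.dtype)
    for i in range(n):
        r0, r1 = (i * h) // n, _ceil_div((i + 1) * h, n)
        for j in range(n):
            c0, c1 = (j * w) // n, _ceil_div((j + 1) * w, n)
            out[i, j] = x[r0:r1, c0:c1].max()
    return out


def ptspp(feature_maps, a=8, b=6):
    """Hypothetical PTSPP sketch for maps of shape (channels, H, W).

    Each channel is max-pooled to a x a and b x b, the a*a + b*b values are
    concatenated (a-level first) and reshaped row-major into a c x c map,
    where a^2 + b^2 = c^2. The result can feed another convolution layer.
    """
    c = math.isqrt(a * a + b * b)
    if a <= b or c * c != a * a + b * b:
        raise ValueError("need a > b with a^2 + b^2 a perfect square")
    out = []
    for fm in feature_maps:
        flat = np.concatenate([adaptive_max_pool2d(fm, a).ravel(),
                               adaptive_max_pool2d(fm, b).ravel()])
        out.append(flat.reshape(c, c))
    return np.stack(out)
```

Unlike plain SPP, the output stays two-dimensional, which is what allows it to be passed to a further convolution layer instead of directly to the fully connected layer.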

3. PTSPP-DCNN fault diagnosis research method

To diagnose exciter faults in the excitation system effectively and classify their common fault characteristics, this paper presents an improved CNN fault diagnosis method. The convolution and pooling layers of the CNN are optimised, yielding a fault diagnosis method named PTSPP-DCNN.

3.1. Data Preprocessing

To keep the activations of the neural network in a suitable range, its inputs need to be normalised, which is important for effective model training. Local Response Normalization (LRN) was used earlier to obtain locally normalised features in small neighbourhoods. Batch Normalisation (BN) has since become a standard component of deep learning fault diagnosis frameworks, where it is used to improve the adaptability of the network (Zhuyun et al. 2020).
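For reference, training-mode batch normalisation standardises each feature with the mini-batch mean and variance and then applies a learnable scale $\gamma$ and shift $\beta$. The sketch below is a minimal NumPy version for inputs of shape (batch, features); the function name and `eps` default are illustrative, and for convolutional feature maps the statistics would instead be taken per channel over the batch and spatial positions.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalisation for x of shape (batch, features).

    Each feature is standardised with the mini-batch mean and variance,
    then scaled by gamma and shifted by beta (learnable in a real network).
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)  # biased (population) variance of the mini-batch
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta
```

At inference time, frameworks replace the mini-batch statistics with running averages collected during training; that part is omitted here.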

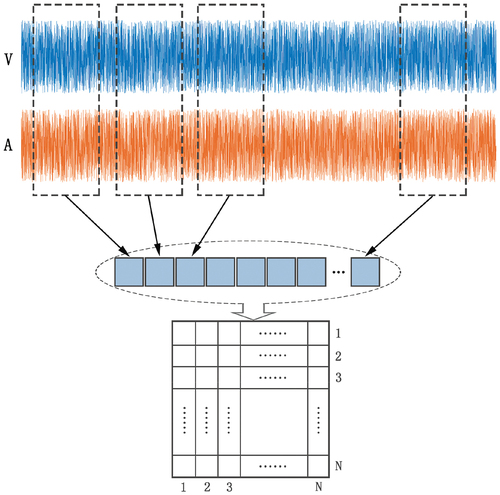

In this paper, the excitation voltage and excitation current signals collected from the excitation system are used as the input of the algorithm. First, the one-dimensional voltage signal and the one-dimensional current signal are combined into a two-dimensional matrix of size 2 × M, where M is the signal length. The two-dimensional matrix is then cut into samples of size N × N. This preprocessing is fast, suits the input format of a two-dimensional convolutional network, and largely preserves the characteristics of the signals. The signal preprocessing method is shown in the figure above.
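One possible reading of this step, sketched in NumPy under an explicit assumption: each group of voltage and current records is stacked into a 2 × M matrix and reshaped row-major into a single N × N sample with N² = 2M. This matches the sizes in Section 4 (2 × 2048 = 4096 = 64 × 64), but the exact cutting scheme is not specified, and `make_sample` is a hypothetical name.

```python
import math

import numpy as np


def make_sample(voltage, current):
    """Hypothetical sketch: stack 1-D voltage and current records into a
    2 x M matrix, then reshape it row-major into one N x N sample (N^2 = 2M)."""
    v = np.asarray(voltage, dtype=float)
    cur = np.asarray(current, dtype=float)
    if v.ndim != 1 or v.shape != cur.shape:
        raise ValueError("voltage and current must be 1-D and of equal length")
    stacked = np.vstack([v, cur])  # shape (2, M)
    n = math.isqrt(stacked.size)
    if n * n != stacked.size:
        raise ValueError("2 * M must be a perfect square")
    return stacked.reshape(n, n)
```

With M = 2048, the first 32 rows of the resulting 64 × 64 sample hold the voltage record and the last 32 rows hold the current record.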

3.2. Structure Framework of PTSPP-DCNN

This paper presents PTSPP-DCNN, an exciter fault diagnosis method that combines dilated convolution with a Pythagorean triple spatial pyramid pooling layer. Its flowchart is shown in the corresponding figure, and the steps are as follows:

(1) Collect the excitation voltage and current signals of the exciter;

(2) Preprocess the collected data to generate training and test samples of size N × N, which serve as the input of the CNN;

(3) Build the PTSPP-DCNN framework and train and test it on the samples;

(4) Verify the correctness and effectiveness of the proposed algorithm on experimental data and perform fault classification and diagnosis of the exciter system.

4. Case Analysis

4.1. Experimental setup

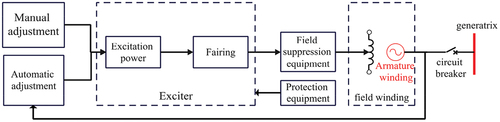

The data in this paper were collected from the exciter of an excitation system experimental platform built by a company. The circuit diagram of the excitation system is shown in the figure above.

The excitation system consists of a rectifier, a de-excitation device, an excitation power supply, and a protection device. A three-dimensional model of the exciter is shown in the corresponding figure; as it shows, the exciter consists of four parts: the rotor core, the magnetic bridge, the permanent magnet, and the excitation winding.

During the test, excitation voltage and current signals were collected under normal operation and four fault conditions; the five state labels are listed in Table 1. The normal working state of the exciter is labelled NO, the starting fault EF, the unstable fault EI, the loss-of-excitation fault FF, and the carbon-brush overtemperature fault CO. A total of 500 groups of excitation voltage and current signals at the unit terminals were collected, each of length 2048. The voltage and current signals of each group form a two-dimensional array that serves as one input sample of the proposed algorithm, giving 500 data samples in total, which are used to train and test the PTSPP-DCNN algorithm.

Table 1. Exciter fault type labels.

4.2. Parameter settings of the PTSPP-DCNN algorithm

The PTSPP-DCNN algorithm proposed in this paper consists of an input layer, four dilated convolution layers, three pooling layers, a Pythagorean triple spatial pyramid pooling (PTSPP) layer, a fully connected layer, and an output layer. The input samples are two-dimensional matrices of size 64 × 64. The dilation rates of the four dilated convolution layers are 1, 2, 3, and 5, and the padding of each layer equals its dilation rate. The kernel size of the dilated convolution layers is 3 × 3 and the stride is 1.
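The choice "padding equals dilation rate" can be checked with the standard output-size formula for a convolution along one axis, $\lfloor (H + 2p - d(k-1) - 1)/s \rfloor + 1$: with $k = 3$, $s = 1$ and $p = d$ it returns $H$, so each dilated layer preserves the map size. The sketch below also computes the receptive field of the four dilated layers stacked directly (ignoring the interleaved pooling, which would enlarge it further); the function names are hypothetical.

```python
def conv_out_size(size, kernel=3, stride=1, padding=0, dilation=1):
    # Output length along one axis for a (dilated) convolution or pooling window
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def stacked_receptive_field(dilations, kernel=3):
    # Receptive field of stride-1 convolutions stacked directly, no pooling between
    rf = 1
    for d in dilations:
        rf += d * (kernel - 1)
    return rf


rates = (1, 2, 3, 5)
print([conv_out_size(64, padding=d, dilation=d) for d in rates])  # [64, 64, 64, 64]
print(stacked_receptive_field(rates))  # 23
```

Because the convolutions keep the size unchanged, only the three 2 × 2 pooling layers reduce the 64 × 64 input (to 8 × 8 under this reading).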

The pooling layers use max pooling with a 2 × 2 kernel. The pooling levels of the PTSPP layer are set to (8, 6), and the fully connected layer has 50 neurons. Softmax regression is used between the fully connected layer and the output layer, and ReLU is the activation function of the convolution layers. The number of iterations is 100, the optimiser is Adam with a learning rate of 0.01, and the batch size is 100. The specific parameters of the PTSPP-DCNN algorithm are listed in Table 2.
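The softmax output stage maps the network's five scores to class probabilities. The NumPy sketch below pairs it with a cross-entropy loss; the loss is an assumption (the paper does not name one), and the mapping of the Table 1 labels to indices 0 to 4 is a hypothetical ordering.

```python
import numpy as np

LABELS = ("NO", "EF", "EI", "FF", "CO")  # hypothetical index order of the Table 1 labels


def softmax(logits):
    # Subtract the row maximum for numerical stability; each row sums to 1
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_entropy(probs, targets):
    # Mean negative log-likelihood of the integer class targets
    return float(-np.mean(np.log(probs[np.arange(len(targets)), targets])))
```

The predicted state is `LABELS[int(np.argmax(p))]` for a probability row `p`; equal scores give a uniform 0.2 per class and a loss of $\ln 5$.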

Table 2. PTSPP-DCNN parameter settings.

4.3. Test results and comparative analysis

To evaluate the proposed algorithm, it is applied to the test data and compared experimentally with four classification methods: a one-dimensional convolutional recurrent neural network (OCRNN), spatial pyramid pooling with CNN (SPP-CNN), CNN-LSTM, and the dilated convolutional neural network (DCNN). The main parameters of the four methods are as follows. CNN-LSTM and DCNN use the same parameters. OCRNN consists of an input layer, two one-dimensional convolution layers, a long short-term memory (LSTM) layer, and an output layer, with a convolution kernel size of 3 × 3. The LSTM layer has an input size of 4096 and 1024 hidden units.

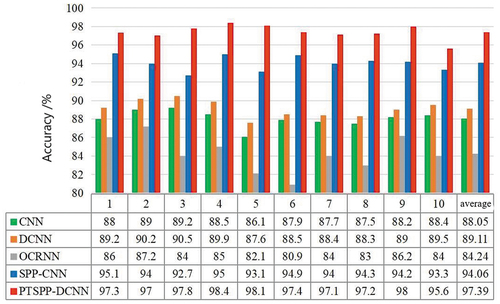

During the experiments, the numbers of training samples and test samples are both 500. The PTSPP-DCNN method proposed in this paper and the other four methods are each trained and tested, and the measured accuracies are shown in the figure above.

As the experimental results in the accuracy figure show, the OCRNN method performs worst, with an average test accuracy of 84.24%. DCNN reaches an average accuracy of 89.11%, and CNN-LSTM reaches 92.37%, higher than both DCNN and OCRNN. With spatial pyramid pooling, SPP-CNN reaches an average test accuracy of 94.06%. The proposed PTSPP-DCNN method, which combines dilated convolution with the improved pooling layer, reaches an average test accuracy of 97.39%, the best of all the methods compared, which supports the advantage of the proposed method.

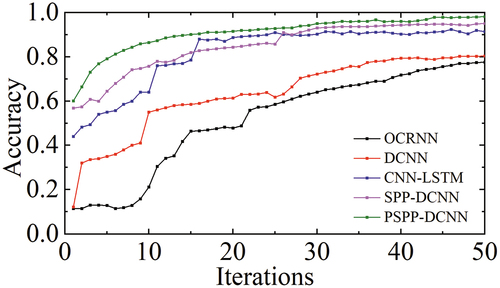

One run from the ten classification accuracy experiments above is selected to compare convergence speed, as shown in the figure above. The proposed PTSPP-DCNN converges fastest: within 30 iterations it converges faster than the other four diagnostic algorithms. In summary, the proposed PTSPP-DCNN method outperforms the compared methods in both diagnostic accuracy and convergence speed.

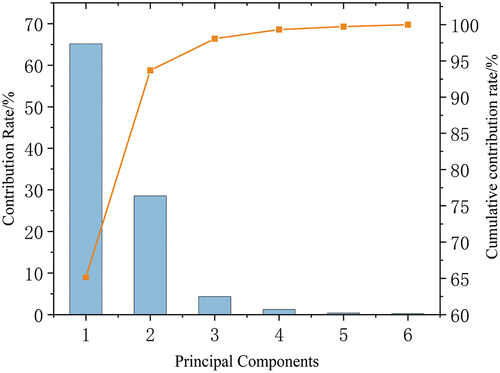

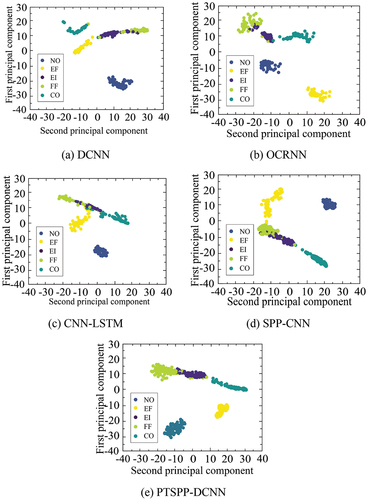

To show the classification of the exciter fault data more intuitively, t-distributed Stochastic Neighbour Embedding (t-SNE) is used for visual comparison. First, the contribution rate of each principal component is calculated for the features of each algorithm, as shown in the corresponding figure.

The contribution-rate results show that the cumulative contribution rate of the first and second principal components reaches 93.69%. This paper therefore uses the first two principal components for dimensionality-reduction visualisation; the results are shown in the corresponding figure. The CNN-LSTM, DCNN, OCRNN, and SPP-CNN methods show confusion among three or more classes, and their clustering is poor. The PTSPP-DCNN method shows only two confused results, and the five exciter state labels form more distinct clusters. PTSPP-DCNN therefore extracts more discriminative classification features, separates the faults more clearly, and is better suited to fault feature classification of the exciter system than the other four methods.
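The contribution rate of a principal component is its covariance eigenvalue divided by the sum of all eigenvalues, and the cumulative rate of the first two components is what decides whether a 2-D view is representative. A minimal NumPy sketch, assuming the input is a (samples, features) matrix of features extracted by a network (for example its fully connected layer outputs); the function names are hypothetical, and the t-SNE step itself is not reproduced.

```python
import numpy as np


def contribution_rates(features):
    """Per-component and cumulative variance contribution rates of PCA,
    from the eigenvalues of the feature covariance matrix."""
    eigvals = np.linalg.eigvalsh(np.cov(features, rowvar=False))[::-1]  # descending
    eigvals = np.clip(eigvals, 0.0, None)  # remove tiny negative round-off
    rates = eigvals / eigvals.sum()
    return rates, np.cumsum(rates)


def project_2d(features):
    # Project centred features onto the first two principal components
    x = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(x, full_matrices=False)
    return x @ vt[:2].T
```

If the second entry of the cumulative array is high (93.69% in the experiment above), the two-component projection retains most of the feature variance.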

5. Conclusion

To extract exciter fault characteristics accurately, this paper proposes an improved CNN fault diagnosis method for the exciter. The method first constructs dilated convolution layers to enlarge the receptive field of the convolution kernels and then builds a Pythagorean triple spatial pyramid pooling (PTSPP) layer to further enhance the feature information extracted from the samples. The experimental results show that the proposed PTSPP-DCNN method achieves a classification accuracy of 97.39% in exciter fault diagnosis, and the comparison results show that its fault classification accuracy is higher than that of the other deep learning methods compared.

Declarations

I would like to declare on behalf of my co-authors that the work described is original research that has not been published previously and is not under consideration for publication elsewhere, in whole or in part. All the listed authors have approved the enclosed manuscript. There are no competing interests to declare.

Acknowledgments

The project was supported by the Major Programme of the National Natural Science Foundation of China (Grant No. 51675350)

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Changcheng, Xiang, Huang Xiyue, Yin Lishen, and Yang Zuyuan. 2008. “Fault Diagnosis Based on Genetic Algorithm and Extension Neural Network.” Computer Simulation 25 (04): 249–252.

- Fuquan, Wang, Zhou Zhiyong, and Zhang Jun. 2020. “Vector Control of Excitation Current for Precision Air Flotation Turntable.” Chinese Journal of Scientific Instrument 41 (11): 66–73.

- Liling, Ma, Liu Xiaoran, Shen Wei, and Wang Junzheng. 2020. “Motor Fault Diagnosis Method Based on Improved One-Dimensional Convolutional Neural Network.” Transactions of Beijing Institute of Technology 40 (10): 1088–1093.

- Ningfei, Jiao, Xu Han, and Liu Weiguo. 2019. “Simplified Integrated Model of Aircraft Three-Stage Starter-Generator Based on Neural Network.” Micromotors 52 (01): 30–35.

- Rui, Yu, and Zhang Bin. 2020. “Analysis and Treatment of Low Current Coefficient Fault in Excitation System.” Fujian Hydropower 2020 (1): 33–34.

- Shouqiang, Kang, Zou Jiayue, Wang Yujing, J. Xie, and V. I. Mikulovich. 2020. “Fault Diagnosis Method of a Rolling Bearing Under Varying Loads Based on Unsupervised Feature Alignment.” Proceedings of the CSEE 40 (1): 274–281.

- Tao, Wu, Liang Hao, Xie Huan, Shi Yang, Zhao Yan, and Zhang Guangtao. 2021. “Key Technologies and Future Prospects of Excitation System Control.” Power Generation Technology 42 (02): 160–170.

- Tianqi, Zhang, Bai Haojun, and Ye Shaopeng. 2022. “Monaural Speech Enhancement Based on Attention-Gate Dilated Convolution Network.” Journal of Electronics & Information Technology 44 (09): 3277–3288.

- Tianwei, Yu, Zheng Enrang, Shen Junge, and Wang Kai. 2022. “Optical Remote Sensing Image Scene Classification Based on Multi-Level Cross-Layer Bilinear Fusion.” Acta Photonica Sinica 51 (02): 260–273.

- Ting-Jun, Li. 2019. “Analysis and Treatment of Generator Excitation System Faults.” Jilin Electric Power 47 (06): 54–56.

- Wenjun, Zhang, Zhang Wenbin, Yi Shanjun, Xu Liufei, and Yang Danlin. 2020. “Simulation Analysis of Inrush Current in Transformer Grounding Fault Based on Least Square Method.” Electronic Measurement Technology 43 (05): 47–50.

- Wu, Z. L., X. Wang, C. Chen, and J. Su. 2021. “Research on Lightweight Infrared Pedestrian Detection Model Algorithm for Embedded Platform.” Security and Communication Networks 2021: 1–7. doi:10.1155/2021/1549772.

- Xin, Wang, Jiang Zhixiang, Zhang Yangkou, Jinqiao,Chang, Xinxu, and Xu, Dongdong. 2020. “Deep Clustering Speaker Speech Separation Based on Temporal Convolutional Network.” Computer Engineering and Design 41 (09): 2630–2635.

- Xudong, Chen, Yi Chuanbao, Lai Lu, Gao Hong, Liang Tingting, Feng Yupeng, and Liu Ye. 2020. “Research on Parallel Control Strategy of the Power Unit Used in AC Excitation System.” Electric Drive 50 (08): 107–112.

- Xuting, Liu, Li Yiguo, Sun Shuanzhu, X. Liu, and J. Shen. 2018. “Fault Diagnosis of Chillers Using Sparsely Local Embedding Deep Convolutional Neural Network.” CIESC Journal 69 (12): 5155–5163.

- Yang, Bo, Yang Wenzhong, Yin Yabo, He Xueqin, Yuan Tingting, and Liu Zeyang. 2019. “Short Text Clustering Based on Word Vector and Incremental Clustering.” Computer Engineering and Design 40 (10): 2985–2990+3055.

- Yang, Xu, Li Zhixiong, Wang Shuqing, W. Li, T. Sarkodie-Gyan, and S. Feng. 2021. “A Hybrid Deep-Learning Model for Fault Diagnosis of Rolling Bearings.” Measurement 169: 108502. doi:10.1016/j.measurement.2020.108502.

- Yanjie, Yang, Yuan Jinjin, and He Zhang. 2021. “Fault Diagnosis of Excitation Unit Based on Improved Convolution Neural Network.” Journal of Computer Simulation 38 (06): 444–449.

- Yanjie, Yang, Dong Zhe, Yao Fang, S. Guangxi, and S. Ruoyu. 2021. “Fault Diagnosis of Double Bridge Parallel Excitation Power Unit Based on 1D-CNN-LSTM Hybrid Neural Network Model.” Power System Technology 45 (05): 2025–2032.

- Yong, Deng, Cao Min, and Lai Zhiyi. 2021. “Ultrasonic Detection Pattern Recognition Method for Natural Gas Pipeline Gas Pressure Based on Deep Learning.” Journal of Electronic Measurement and Instrumentation 35 (10): 176–183.

- Yuejuan, Ren, and Ge Xiaosan. 2022. “A Road Synthesis Extraction Method of Remote Sensing Image Based on Improved DeepLabv3+ Network.” Bulletin of Surveying and Mapping 2022 (06): 55–61.

- Zhang, Gu Liang, Lu Tang, and Tang Han. 2015. “Excitation System Dynamic Performance Evaluation of Generator Units Based on Intuitionistic Fuzzy Sets Strategy.” Proceedings of the CSEE 35 (22): 5749–5756.

- Zhe, Dong, Liu Yili, Zhu Wei, and Liu Shuyu. 2021. “Research and Design of Doubly-Fed Hydropower Rotor-Side Converter Based on ADRC and Fuzzy Virtual Resistance.” Foreign Electronic Measurement Technology 40 (11): 148–154.

- Zhuyun, Chen, Mauricio Alexandre, Li Weihua, and K. Gryllias. 2020. “A Deep Learning Method for Bearing Fault Diagnosis Based on Cyclic Spectral Coherence and Convolutional Neural Networks.” Mechanical Systems and Signal Processing 140: 106683. doi:10.1016/j.ymssp.2020.106683.