?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

In this article we study and classify optimal martingales in the dual formulation of optimal stopping problems. In this respect we distinguish between weakly optimal and surely optimal martingales. It is shown that the family of weakly optimal and surely optimal martingales may be quite large. On the other hand it is shown that the surely optimal Doob-martingale, that is, the martingale part of the Snell envelope, is in a certain sense robust under a particular random perturbation. This new insight leads to a novel randomized dual martingale minimization algorithm that doesn't require nested simulation. As a main feature, in a possibly large family of optimal martingales the algorithm may efficiently select a martingale that is as close as possible to the Doob martingale. As a result, one obtains the dual upper bound for the optimal stopping problem with low variance.

1. Introduction

The last decades have seen a huge development of numerical methods for solving optimal stopping problems. Such problems became very prominent in the financial industry in the form of American derivatives. For such derivatives one needs to evaluate the right of exercising (stopping) a certain cash-flow (reward) process Z at some (stopping) time τ, up to some time horizon T. From a mathematical point of view this evaluation comes down to solving an optimal stopping problem

Typically the cash-flow Z depends on various underlying assets and/or interest rates and as such is part of a high dimensional Markovian framework. Particularly for high dimensional stopping problems, virtually all generic numerical solutions are Monte Carlo based. Most of the first numerical solution approaches were of primal nature in the sense that the goal was to construct a ‘good’ exercise policy and to simulate a lower biased estimate of

In this respect we mention, for example, the well-known regression methods by Longstaff and Schwartz (Citation2001), Tsitsiklis and Van Roy (Citation2001), and the stochastic mesh approach by Broadie and Glasserman (Citation2004), and the stochastic policy improvement method by Kolodko and Schoenmakers (Citation2006). For further references we refer to the literature, for example Glasserman (Citation2003) and the references therein.

In this paper we focus on the dual approach developed by Rogers (Citation2002), and Haugh and Kogan (Citation2004), initiated earlier by Davis and Karatzas (Citation1994). In the dual method the stopping problem is solved by minimizing over a set of martingales, rather than a set of stopping times,

(1)

(1)

A canonical minimizer of this dual problem is the martingale part,

of the Doob(-Meyer) decomposition of the Snell envelope

which moreover has the nice property that

(2)

(2)

That is, if one would succeed in finding , the value of

can be obtained from one trajectory of

only.

Shortly after the development of the duality method in Rogers (Citation2002) and Haugh and Kogan (Citation2004), various numerical approaches for computing dual upper bounds for American options based on it appeared. May be one of the most popular methods is the nested simulation approach by Andersen and Broadie (Citation2004), who essentially construct an approximation to the Doob martingale of the Snell envelope via stopping times obtained by the Longstaff & Schwartz method (Longstaff and Schwartz Citation2001). A few years later, a linear Monte Carlo method for dual upper bounds was proposed in Belomestny et al. (Citation2009). In fact, as a common feature, both Andersen and Broadie (Citation2004) and Belomestny et al. (Citation2009) aimed at constructing (an approximation of) the Doob martingale of the Snell envelope via some approximative knowledge of continuation functions obtained by the method of Longstaff & Schwartz or in another way. Instead of relying on such information, the common goal in later studies (Desai et al. Citation2012, Belomestny Citation2013, Schoenmakers et al. Citation2013, Belomestny et al. Citation2014), was to minimize the expectation functional in the dual representation (Equation1(1)

(1) ) over a linear space of generic ‘elementary’ martingales. Indeed, by parameterizing the martingale family in a linear way and replacing the expectation in (Equation1

(1)

(1) ) by the sample mean over a large set of trajectories, the resulting minimization comes down to solving a linear program. However, it was pointed out in Schoenmakers et al. (Citation2013) that in general there may exist martingales that are ‘weakly’ optimal in the sense that they minimize (Equation1

(1)

(1) ), but fail to have the ‘almost sure property’ (Equation2

(2)

(2) ). As a consequence, the estimator for the dual upper bound due to such martingales may have high variance. Moreover, an example in Schoenmakers et al. (Citation2013) illustrates that a straightforward minimization of the sample mean corresponding to (Equation1

(1)

(1) ) may end up with a martingale that is asymptotically optimal in the sense of (Equation1

(1)

(1) ) but not surely optimal in the sense of (Equation2

(2)

(2) ), when the sample size tends to infinity. As a remedy to this problem, in Belomestny (Citation2013) variance penalization is proposed, whereas in Belomestny et al. (Citation2014) the sample mean is replaced by the maximum over all trajectories.

In this paper we first extend the study of surely optimal martingales in Schoenmakers et al. (Citation2013) to the larger class of weakly optimal martingales. As a principal contribution, we give a complete characterization of weakly and surely optimal martingales and moreover consider the notion of randomized dual martingales. In particular, it is shown that in general there may be infinitely martingales that are optimal but not surely optimal. In fact, straightforward minimization procedures based on the sample mean in (Equation1(1)

(1) ) may typically return martingales of this kind, even if the Doob martingale of the Snell envelope is contained in the martingale family (as illustrated already in Schoenmakers et al. (Citation2013), though at a somewhat pathological example with partially deterministic cash-flows). As another main contribution we will show that surely optimal martingales play a distinguished role within the family of all optimal martingales. Namely, it will be proved that by perturbing a surely optimal martingale by particular randomization it will remain surely optimal, while any other martingale under this particular randomization turns to a suboptimal one. In the context of the surely optimal Doob martingale, this turns out to be a very useful feature, since the corresponding ‘ideal’ randomization involves information of the Doob martingale itself that can be estimated from an estimate of the Snell envelope. More specifically, under this ‘ideal’ randomization the Doob martingale, perturbed with it, remains guaranteed (surely) optimal, while any other surely or weakly optimal martingale turns to a suboptimal one. Of course, as a rule, this ‘ideal’ randomization is not known or available in practical applications. But, fortunately, it turns out that by just incorporating some simple (‘naive’) randomization using uniform random variables, the sample-mean minimization may return a martingale that is closer to the Doob-martingale than the one obtained without randomization. We thus end up with a martingale having a lower variance, which in turn guarantees that the corresponding upper bound based on (Equation1

(1)

(1) ) is tight (see Belomestny Citation2013, Schoenmakers et al. Citation2013). Compared to Belomestny et al. (Citation2014) and Belomestny (Citation2013), the benefit of this new randomized dual approach is its computational efficiency related to the fact that the resulting optimization problem can be solved via linear programing (in the case of linear classes of martingales). We have carried out numerical experiments for a couple of stylized examples that are simple enough to be treated analytically but yet rich enough to exhibit most features in the context of our study.

Finally, we underline that in this paper our emphasis is on the theoretical aspects and classification of the optimal martingales with potential applications to randomization procedures for selecting martingales with low variance. An extensive numerical analysis of a randomized dual martingale approach (albeit with slightly different randomization) as well as a comprehensive convergence analysis based on the theory of empirical processes is available in the follow-up work (Belomestny et al. Citation2022).Footnote1

The structure of the paper is as follows. Section 2 carries out a systematic theoretical analysis of optimal martingales. In Section 3 we deal with randomized optimal martingales and the effect of randomizing the Doob-martingale. More technical proofs are given in Section 4 and some first numerical examples are presented in Section 5.

2. Characterization of optimal martingales

Since practically any numerical approach to optimal stopping is based on a discrete exercise grid, we will work within in a discrete time setup. That is, it is assumed that exercise (or stopping) is restricted to a discrete set of exercise times for some time horizon T and some

For notational convenience we will further identify the exercise times

with their index j, and thus monitor the reward process

at the ‘times’

J.

Let be a filtered probability space with discrete filtration

An optimal stopping problem is a problem of stopping the reward process

in such a way that the expected reward is maximized. The value of the optimal stopping problem with horizon J at time

is given by

(3)

(3)

provided that Z was not stopped before j. In (Equation3

(3)

(3) ),

is the set of

-stopping times taking values in

and the process

is called the Snell envelope. It is well known that

is a supermartingale satisfying the backward dynamic programing equation (Bellman principle):

Along with a primal approach based on the representation (Equation3

(3)

(3) ), a dual method was proposed in Rogers (Citation2002) and Haugh and Kogan (Citation2004). Below we give a short self-contained recap while including the notions of weak and sure optimality.

Let be the set of martingales M adapted to

with

By using the Doob's optimal sampling theorem one observes that

(4)

(4)

for any

see Rogers (Citation2002) and Haugh and Kogan (Citation2004). We will say that a martingale M is weakly optimal, or just optimal, at j, for some

if

(5)

(5)

The set of all martingales (weakly) optimal at j will be denoted by

The set of martingales optimal at j for all

is denoted by

We say that a martingale M is surely optimal at j, for some

if

(6)

(6)

The set of all surely optimal martingales at j will be denoted by

The set of surely optimal martingales at j for all

is denoted by

Note that, obviously,

⊂

⊂

Now there always exists at least one surely optimal martingale, the so-called Doob-martingale coming from the Doob decomposition of the Snell envelope Indeed, consider the Doob decomposition of

that is,

(7)

(7)

where

is a martingale with

and

is predictable with

It follows immediately that

(8)

(8)

and so

is non-decreasing due to the fact that

is a supermartingale. One thus has by (Equation7

(7)

(7) ) on the one hand

and due to (Equation4

(4)

(4) ) on the other hand

Thus, it follows that (Equation6

(6)

(6) ) holds for arbitrary j, hence

Furthermore we have the following properties of the sets

and

Proposition 1

The sets and

for

and

are convex.

As an immediate consequence of Proposition 1; if there exist more than one weakly (respectively surely) optimal martingale, then there exist infinitely many weakly (respectively surely) optimal martingales.

Proposition 2

It holds that M for some

if and only if for any optimal stopping time

satisfying

one has that

Proof.

Let be an optimal stopping time. Suppose that M

On the one hand, one trivially has

and on the other, since M

(see (Equation5

(5)

(5) )),

(9)

(9)

The converse follows from (Equation9

(9)

(9) ) by taking conditional

-expectations.

It will be shown below that the class of the optimal martingales may be considerably large. In fact, any such martingale can be seen as a perturbation of the Doob martingale

For this, let us introduce some further notation and define

with

by convention and let, for

be the first optimal stopping time strictly after

That is, if

we define recursively

where

There so will be a last number,

say, with

Further, the family

defined by

(10)

(10)

is a consistent optimal stopping family in the sense that

and that

implies

The next lemma provides a corner stone for an explicit structural characterization of (weakly) optimal martingales.

Lemma 3

if and only if M is a martingale with

such that the identities

hold.

The following lemma anticipates sufficient conditions for a martingale M to be optimal, that is, to be a member of

Lemma 4

Let be an adapted sequence with

and consider the ‘shifted’ Doob martingale

Let

be the unique number such that

for any

If

satisfies for all

(11)

(11)

(12)

(12)

for

and

, then M satisfies the identities (i)–(ii) in Lemma 3.

The next lemma is merely a reformulation of the previous one in terms of the increments of .

Lemma 5

Let us represent an (arbitrary) adapted with

by

(13)

(13)

where each

is a

-measurable random variable. Then the conditions (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ) are equivalent to the following ones.

| (i) | On the | ||||

| (ii) | On | ||||

Proof.

Indeed, take j such that

If

then

and (Equation14

(14)

(14) ) and (Equation15

(15)

(15) ) imply with i = j−1 via (Equation13

(13)

(13) ),

respectively, which in turn imply (Equation11

(11)

(11) ) (note that

) and (Equation12

(12)

(12) ), respectively. Further if

we have to distinguish between

and

In both cases (Equation11

(11)

(11) ) is trivially fulfilled, while (Equation12

(12)

(12) ) is void in the first case, and in the second case it reads,

which is implied by (Equation13

(13)

(13) ) and (Equation16

(16)

(16) ) for

The converse direction, that is from (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ) to (Equation14

(14)

(14) ), (Equation15

(15)

(15) ), (Equation16

(16)

(16) ), goes similarly and is left to the reader.

Corollary 6

Any martingale with

that satisfies (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ), or (i) and (ii) via (Equation13

(13)

(13) ), is an optimal martingale. (Note that

trivially satisfies these conditions.)

Interestingly, the converse to Corollary 6 is also true and we so have the following characterization theorem.

Theorem 7

It holds that if and only if

where

is a martingale with

that satisfies (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ) in Lemma 4.

The proofs of Lemmas 3–4 and Theorem 7 are given in Section 4. In fact, Theorem 7 reveals that in general, besides the Doob martingale, there may exist a large set of optimal martingales From Theorem 7 we also obtain a characterization of the surely optimal martingales which is essentially the older result in Schoenmakers et al. (Citation2013), Thm. 6 (see Section 4 for the proof).

Corollary 8

It holds that if and only if

with

represented by (Equation13

(13)

(13) ) with all

satisfying (Equation16

(16)

(16) ) for

, and

for

In applications of dual optimal stopping, hence dual martingale minimization, it is usually enough to find martingales M that are ‘close to’ surely optimal ones, merely at some specific point in time i, that is, M ∈. Naturally, since

we may expect that in general the family of undesirable (not surely) optimal martingales at a specific time may be even much larger than the family

characterized by Theorem 7. A characterization of

and

is given by the next theorem, where we take i = 0 without loss of generality. The proof is given in Section 4.

Theorem 9

The following statements hold.

| (i) |

| ||||

| (ii) |

| ||||

After dropping the nonnegative term in the right-hand-sides of (Equation18

(18)

(18) ) and (Equation20

(20)

(20) ) we may obtain tractable sufficient conditions for a martingale to be optimal or surely optimal at a single date, respectively. In the spirit of Lemma 5 they may be formulated in the following way.

Corollary 10

Let for some martingale

represented by (Equation13

(13)

(13) ), then

| (i) |

| ||||

| (ii) |

| ||||

Remark 11

While the class of optimal martingales may be quite large in general, it is still possible that it is just a singleton (containing the Doob martingale only). For example, let the cash-flow

be a martingale itself, then it is easy to see that the only optimal martingale (at 0) is

(the proof is left as an easy exercise).

3. Randomized dual martingale representations

Let be some auxiliary measurable space that is ‘rich enough’. Let us consider random variables on

that are measurable with respect to the σ-field

While abusing notation a bit,

and

are identified with

and

respectively. Let further

be the given ‘primary’ measure on

and

be an extension of

to

in the sense that

In particular, if

is

-measurable, then

for some

, that is, X does not depend on

We now introduce randomized or ‘pseudo’ martingales as random perturbations of

-adapted martingales

. Let

be random variables on

such that

for

Then

(23)

(23)

is said to be a pseudo martingale. As such,

is not an

-martingale but

is. The results below on pseudo-martingales provide the key motivation for randomized dual optimal stopping. All proofs in this section are deferred to Section 4.

Proposition 12

| (i) | For any | ||||

| (ii) | Suppose | ||||

| (iii) | If the mean zero random perturbations | ||||

The next theorem states that, loosely speaking, there exists a particular randomization of the form (Equation26(26)

(26) ) connected with

with the following property: Any martingale different from

fails to be optimal under this particular randomization.

Theorem 13

Now suppose that with

and

being some martingale of the form (Equation13

(13)

(13) ). Let

be a sequence of random variables given by

(29)

(29)

where the

are assumed to be i.i.d. distributed on

independent of

with

It is further assumed that the r.v.

have a density p that is continuous in the interval

, vanishes on

, and is such that

. As such the randomizers (Equation29

(29)

(29) ) satisfy (Equation26

(26)

(26) ). Proposition 12 provides an upper bound (Equation24

(24)

(24) ) due to the pseudo martingale

Now, for the randomized martingale

one has

(30)

(30)

if

that is, if

.

The following corollary states that any martingale

randomized with (Equation29

(29)

(29) ), which is suboptimal in the sense of (Equation30

(30)

(30) ), cannot have zero variance. The proof relies on Theorem 13.

Corollary 14

Let M and as in Theorem 13, and

. Then

if and only if

Randomizing the Doob the martingale

Proposition 12 shows that, in principle, there is a remarkable freedom of perturbing any surely optimal martingale randomly while (Equation28

(28)

(28) ) remains true. The message of Theorem 13 is, on the one hand, that a particular randomization, namely (Equation29

(29)

(29) ), of any martingale different from

results in a non optimal (pseudo) martingale in the sense of (Equation30

(30)

(30) ). On the other hand, any randomization of

under (Equation26

(26)

(26) ) remains surely optimal in the sense (Equation28

(28)

(28) ). However, when one thinks of implementing this idea in practice the question arises: which target martingale

(there may be infinitely many) do we have in mind? Of course, the canonical candidate is the Doob martingale. An approximation may be directly inferred, for instance from any accompanying standard primal algorithm such as the Longstaff-Schwartz method. With

the randomization (Equation29

(29)

(29) ) takes by (Equation7

(7)

(7) ) the form

(31)

(31)

Thus, if

are approximate continuation functions due to some underlying Markovian process X obtained by the method of Longstaff-Schwartz for example, one may consider the randomizations

(32)

(32)

As a bottomline, if the (surely optimal) Doob martingale is a member of some larger martingale family that includes many weakly optimal martingales, by randomizing with (Equation31

(31)

(31) ) (or (Equation32

(32)

(32) )) all (or approximately all) weakly optimal members of this family can be sorted out in a suitable dual expectation minimization procedure. For first numerical examples we refer to Section 5.

4. Proofs

4.1. Proof of Lemma 1

It is enough to show the convexity of and

for any j. For any

and

one has

while by (Equation4

(4)

(4) ),

Similarly, for any

and

we have

while by (Equation4

(4)

(4) ),

In both cases the sandwich property completes.

4.2. Proof of Lemma 3

Suppose that M is a martingale with such that Lemma 3-(i) and (ii) hold. Then (ii) implies for

that

(33)

(33)

Now take

arbitrarily, and let

be such that

(Note that

is unique and

measurable). Then due to Lemma 3-(i) and (Equation33

(33)

(33) ),

On the other hand, one has

(see (Equation10

(10)

(10) )). Thus, by Proposition 2,

and hence

since i was taken arbitrarily.

Conversely, suppose that So for any

by Proposition 2. For l = 1 one thus has

and for l>1 it holds that

That is, (i) is shown. Next, for any l>1 it holds

which implies (ii).

4.3. Proof of Lemma 4

Assume that is adapted with

and that

satisfies (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ). For l>1 and

we may write,

(34)

(34)

By taking

in (Equation34

(34)

(34) ) and using

we then get

and thus

So from (Equation11

(11)

(11) ) we obtain with

i.e. Lemma 3-(i) for

If l = 1 and

Lemma 3-(i) is trivially fulfilled. So let us consider l = 1 and

Analogously, we then may write for

(35)

(35)

It is easy to see that (Equation35

(35)

(35) ) is also valid for

due to our assumption

Thus, for l = 1 and taking

we get from (Equation35

(35)

(35) ),

whence (Equation35

(35)

(35) ) implies for

that is Lemma 3-(i) holds also for

Let us now consider (ii) and take Now for

(Equation34

(34)

(34) ) implies with

(36)

(36)

Hence, since always

(Equation12

(12)

(12) ) implies for

(37)

(37)

i.e. Lemma 3-(ii) is proved.

4.4. Proof of Theorem 7

If , where

is a martingale with

that satisfies (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ) in Lemma 4 then

due to Corollary 6.

Let us now consider the converse and assume that with

Then

is adapted and may be written in the form (Equation13

(13)

(13) ) where the

are

-measurable and

for

Since

Lemma 3-(i) implies that for

(38)

(38)

since for each r with

one has

because

We now show for any i with

that (Equation11

(11)

(11) ) holds with

by backward induction. For

it follows from (Equation38

(38)

(38) ). Now suppose that for some i with

it holds that

(39)

(39)

One has by construction

Hence, since

with

and

(!),

and taking

-conditional expectations,

using the induction hypothesis (Equation39

(39)

(39) ). In view of (Equation38

(38)

(38) ) it follows that (Equation11

(11)

(11) ) holds for

Next, on the other hand, implies by Lemma 3-(ii) that for any fixed l>1,

(40)

(40)

Suppose that

and hence

Then (Equation40

(40)

(40) ) implies by (Equation13

(13)

(13) ) after a few manipulations,

with the usual convention

Thus, either the last three sums are zero due to

or we may use that

for

We thus get for

(41)

(41)

In particular, due to

for

this gives

(42)

(42)

Let us now show that (Equation12

(12)

(12) ) holds for

and

by backward induction. For

it follows from (Equation42

(42)

(42) ) by

that

that is (Equation12

(12)

(12) ) for

Now suppose that for some i with

it holds that

One thus has by construction

It then follows similarly by taking

-conditional expectations that

by the induction hypothesis (note again that

). Thus, (Equation12

(12)

(12) ) holds for

and so (Equation12

(12)

(12) ) is proved. We thus conclude that

is a martingale that satisfies (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ). The theorem is proved.

4.5. Proof of Corollary 8

Suppose that for some martingale

represented by (Equation13

(13)

(13) ). Since

Theorem 7 implies (via Corollary 5) that the

satisfy (Equation16

(16)

(16) ) for

. Further, for any

one has

since

So

by Doob's sampling theorem. Hence, by the sandwich property,

for all

This implies for any i with

that

due to

Conversely, if the satisfy (Equation16

(16)

(16) ) for

and further

for any i with

they also trivially satisfy (Equation15

(15)

(15) ) and (Equation14

(14)

(14) ), and so one has

by Theorem 7 (via Corollary 5). Furthermore it follows that

for any i with

so by Proposition 2

Hence,

and so

since i was arbitrary.

4.6. Proof of Theorem 9

Due to Proposition 2,

if and only if

with

which is equivalent with

(43)

(43)

(44)

(44) Since

for

(Equation43

(43)

(43) ) reads

(45)

(45) which in turn is equivalent with (Equation17

(17)

(17) ). Indeed, suppose that (Equation45

(45)

(45) ) holds. Then (Equation17

(17)

(17) ) clearly holds for

Now assume that (Equation17

(17)

(17) ) holds for

Then, by backward induction,

By next taking

-conditional expectations we get (Equation17

(17)

(17) ) for

For the converse, just take

in (Equation17

(17)

(17) ). We next consider (Equation44

(44)

(44) ), which may be written as

Using the Doob decomposition of the Snell envelope (Equation7

(7)

(7) ),

and that

this is equivalent with (Equation18

(18)

(18) ).

Suppose that

One has that

if and only if

Since

a.s., this implies

a.s., and so by

that

by the sandwich property. Now note that

is also a martingale with

a.s. Let us write (assuming that

)

That is,

is

-measurable with

so

and thus

a.s. By proceeding backwards in the same way we see that

for all

which implies

whence

for

i.e. (Equation19

(19)

(19) ). Since

(Equation20

(20)

(20) ) follows from (Equation18

(18)

(18) ) with

Conversely, if (Equation19

(19)

(19) ) and (Equation20

(20)

(20) ) hold, then

and due to (Equation20

(20)

(20) ), for each

by (Equation7

(7)

(7) ). That is

and so

4.7. Proof of Proposition 12

It holds that

by duality, hence (Equation24

(24)

(24) ). Further, if

one has with (Equation7

(7)

(7) ), for

due to Theorem 9-(ii), hence (Equation25

(25)

(25) ). For

given by (Equation27

(27)

(27) ) with

satisfying (Equation26

(26)

(26) ), we thus have

(46)

(46)

Then (Equation28

(28)

(28) ) follows by (Equation24

(24)

(24) ) and the sandwich property.

As for the last statement: By (Equation26(26)

(26) ) one has

and by Theorem 9-(ii) and Doobs decomposition (Equation7

(7)

(7) ),

That is,

since

for

Then, since

it follows that

a.s.

4.8. Proof of Theorem 13

Let and

be as stated, and let us assume that for

one has

(47)

(47)

We then have to show that

i.e.

We may write

By (Equation47

(47)

(47) ) we must have

(48)

(48)

Let us observe that

(49)

(49)

using

due Theorem 9-(ii) and (Equation7

(7)

(7) ) and

and

due to Proposition 12. Since, by assumption,

is some martingale with

, we have

by Doob's sampling theorem, and so (Equation48

(48)

(48) ) and (Equation49

(49)

(49) ) imply by the sandwich property,

(50)

(50)

Now inserting (Equation29

(29)

(29) ) yields,

(51)

(51)

By applying Lemma 15 below to

and

it follows that

However,

is a martingale with

so by Doob's sampling theorem

i.e.

and we thus must have

Since j,

was arbitrary it now follows that

for all j, hence

Lemma 15

Let U, V, W be real valued random variables with ,

and

almost surely, and, U being independent of the pair

with

for any

. One then must have

a.s.

Proof.

For arbitrary and K>0 we have, since

a.s.,

using that U is independent of the pair

Hence

and it thus follows that the set

has probability zero.

4.9. Proof of Corollary 14

If one has

due to Proposition 12. Let us take some

and assume that

From here we will derive a contradiction. As in the proof of Theorem 13 we write

(52)

(52)

Now,

implies by Theorem 13 that

(53)

(53)

That is, due to (Equation52

(52)

(52) ) and (Equation53

(53)

(53) ), there exists a constant c>0 such that

Using (Equation29

(29)

(29) ) and the fact that for any j,

(see Proposition 12), and

this implies

(54)

(54)

Consider the stopping time

Then, using

and (Equation54

(54)

(54) ), we must have that

almost surely. Since

is a martingale, Doob's sampling theorem then implies

hence a contradiction. That is, the assumption

was false.

5. Stylized numerical examples

5.1. Simple stylized numerical example

We first reconsider the stylized test example due to Schoenmakers et al. (Citation2013, Section 8), also considered in Belomestny et al. (Citation2014), where J = 2, ,

, and

is a random variable which uniformly distributed on the interval

. The optimal stopping time

is thus given by

and the optimal value is

. Furthermore, it is easy to see that the Doob martingale is given by

As an illustration of the theory developed in Sections 2–3, let us consider the linear span

as a pool of candidate martingales and randomize it according to (Equation31

(31)

(31) ). We thus consider the objective function

(55)

(55)

for some fixed

where

are i.i.d. random variables with uniform distribution on

Note that for this example

and

is the non-decreasing predictable process from the Doob decomposition. Moreover, it is possible to compute (Equation55

(55)

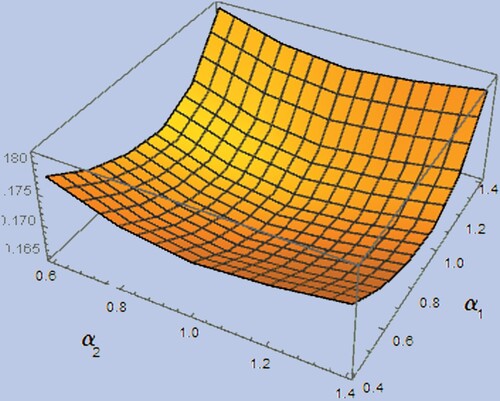

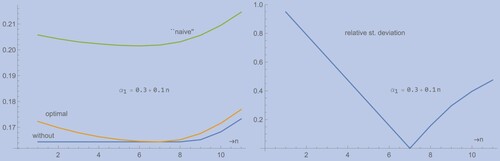

(55) ) in closed form (though we omit detailed expressions which can be conveniently obtained by Mathematica for instance). In figure (left panel) we have plotted (Equation55

(55)

(55) ) for

and

together with the objective function

due to a ‘naive’ randomization, not based on knowledge of the factor

Also, in figure (right panel), the relative standard deviations

of the corresponding random variables

are depicted as a function of α.

Figure 1. Left panel: objective functions (no randomization),

(optimal randomization), and

(‘naive’ randomization); right panel: relative deviations of

(without randomization),

(optimal randomization),

(‘naive’ randomization)

From Schoenmakers et al. (Citation2013, Section 8) we know that, and from the plot of in figure (left panel) we see that,

for

. On the other hand, the right panel plot shows that

may be relatively large for

and that the Doob martingale (i.e.

) is the only surely optimal one in our parametric family. Moreover, the objective function due to the optimal randomization attains its unique minimum at the Doob martingale, i.e. for

Further, the variance of the corresponding optimally randomized estimator attains its unique minimum zero also at

Let us note that these observations are anticipated by Theorem 13 and Corollary 14. The catch is that for each

the randomized

fails to be optimal in the sense of (Equation30

(30)

(30) ). We also see that both the optimal and the ‘naive’ randomization render the minimization problem to be strictly convex. Moreover, while the minimum due to the ‘naive’ randomization lies significantly above the true solution, the argument where the minimum is attained,

say, identifies nonetheless a martingale that virtually coincides with the Doob optimal one. That is,

and

is optimal corresponding to variance

, which can be seen in the right panel.

5.2. Stylized Bermudan call option

In order to exhibit the merits of randomization based on the theoretical results in this paper in a more realistic case, we have constructed an example that contains all typical features of a real life Bermudan option, but, is simple enough to be treated numerically in all respects on the other hand.

As in the previous example we take J = 2, and specify the (discounted) cash-flows as functions of the (discounted) stock prices

by

(56)

(56)

For S we take the log-normal dynamics

(57)

(57)

where

and

independent of

For the continuation function at j = 1 we thus have

(58)

(58)

where

is the standard normal density. While abusing notation a bit we will denote the cash-flows by

and

respectively. For the (discounted) option value at j = 0 one thus has

Further we obviously have

The Doob martingale for this example is thus given by

and the non-decreasing predictable component

is given by

For demonstration purposes we will quasi analytically compute the optimal randomization coefficient in (Equation31

(31)

(31) ),

by using a Black(-Scholes) type formula

and a numerical integration for obtaining the target value

. We now consider two martingale families.

| (M-Sty) | For any | ||||

| (M-Hermite) | Using that the (probabilistic) Hermite polynomials given by

| ||||

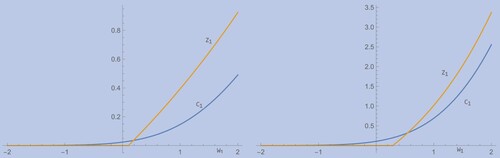

The parameters in (Equation56(56)

(56) ) and (Equation57

(57)

(57) ) are taken to be such that with a medial probability optimal exercise takes place at

In particular, we consider two cases specified with parameter sets

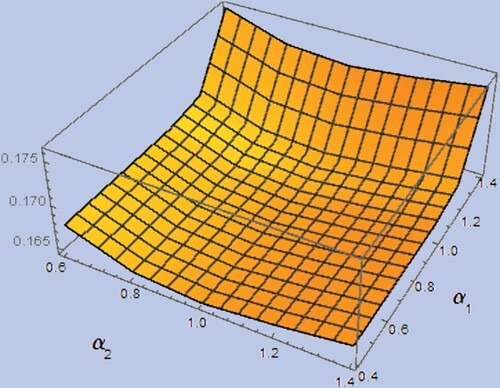

respectively. From figure we see that the probability of optimal exercise at j = 1 is almost 50% for (Pa1) and almost 30% for (Pa2). Let us visualize on the basis of martingale family (M-Sty) and parameters (Pa1) the effects of randomization. Consider the objective function

(61)

(61)

where θ scales the randomization due to i.i.d. random variables

uniformly distributed on

. I.e.for

there is no randomization and

gives the optimal randomization. Now restrict (Equation61

(61)

(61) ) to the sub domain

(while slightly abusing notation), i.e.

and

The function

i.e. (Equation61

(61)

(61) ) without randomization is visualized in figure , where expectations are computed quasi-analytically with Mathematica. From this plot we see that the true value

is attained on the line

for various

(i.e. not only in

). On the other hand,

i.e. (Equation61

(61)

(61) ) with optimal randomization, has a clear strict global minimum in

, see figure . Let us have a closer look at the map

for

and

respectively, and also at

due to the ‘naive’ randomization

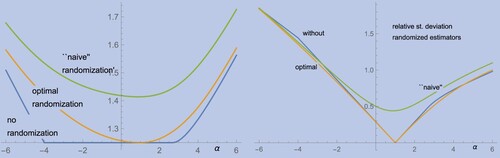

Figure 2. Cash-flow Z1 versus continuation value C1 as a function of W1 for (Pa1) (left) and (Pa2) (right).

where the scale parameter

is taken to be roughly the option value. (It turns out that the choice of this scale factor is not critical for the location of the minimum.) In fact, the results, plotted in figure , tell there own tale. The second panel depicts the relative deviation of

Figure 5. Left panel: objective functions of with

fixed, for BS-Call (Pa1) without, optimal, and ‘naive’ randomization; right panel: relative deviation of

(i.e. without randomization).

In fact, similar comments as for the example in Section 5.1 apply. The ‘naive’ randomization attains its minimum at

which we read off from the tables that generated this figure. We thus have found the martingale

which may be virtually considered surely optimal, as can be seen from the variance plot (second panel). Analog visualizations for the parameter set (Pa2) with analog conclusions may be given, though are omitted due to space restrictions.

Let us now pass on to a Monte Carlo setting, where we mimic the approach in real practice more closely. Based on N simulated samples of the underlying asset model, i.e.

we consider the minimization

(62)

(62)

for

(no randomization) and

(optimal randomization), along with the minimization

(63)

(63)

based on a ‘naive’ randomization where the coefficients

j = 0, 1, 2 are pragmatically chosen. In (Equation62

(62)

(62) ) and (Equation63

(63)

(63) ) M stands for a generic linearly structured martingale family, such as (Equation59

(59)

(59) ) and (Equation60

(60)

(60) ) for example. The minimization problems (Equation62

(62)

(62) ) and (Equation63

(63)

(63) ) may be solved by linear programing (LP). They may be transformed into a suitable form such that the (free) LP package in R can be applied. This transformation procedure is straightforward and spelled out in Desai et al. (Citation2012) for example. In the latter paper it is argued that the required computation time scales with N due to the sparse structure of the coefficient matrix involved in the LP setup. However, taking advantage of this sparsity requires a special treatment of the implementation of the linear program in connection with more advanced LP solvers (as done in Desai et al. Citation2012). Since this paper is essentially on the theoretical justification of the randomized duality problem (along with the classification of optimal martingales), we consider an in-depth numerical analysis beyond scope of this paper.

For both parameter sets (Pa1) and (Pa2), and both martingale families (Equation59(59)

(59) ) and (Equation60

(60)

(60) ) with

we have carried out the LP optimization algorithm sketched above. We have taken N = 2000 and for the ‘naive’ randomization

In the table , for (Pa1), and table , for (Pa2), we present for the minimizers

the in-sample expectation

, the in-sample standard deviation

and the path-wise maximum due to a single trajectory

followed by the corresponding ‘true’ values

based on a large ‘test’ simulation of

samples.

Table 1. LP minimization results due to and

for (Pa1).

Table 2. LP minimization results due to and

for (Pa2).

The results in tables tables – show that even a simple (naive) randomization at j = 0 leads to a substantial variance reduction (up to 10 times) not only on training samples but also on the test ones. We think that for more structured examples and more complex families of martingales even more pronounced variance reduction effect may be expected. For example, in general it might be better to take Wiener integrals, i.e. objects of the form where α runs through some linear space of basis functions, as building blocks for the martingale family. Also other types of randomization can be used, for example one may take different distributions for the r.v. ξ. However all these issues will be analyzed in a subsequent study.

Acknowledgments

J.S. gratefully acknowledges financial support from the German science foundation (DFG) via the cluster of excellence MATH+, project AA4-2.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 This work started after the first version of the present paper was available on arXiv:2102.01533, Febr. 2, 2021.

References

- Andersen, L. and Broadie, M., A primal-dual simulation algorithm for pricing multi-dimensional American options. Manage. Sci., 2004, 50(9), 1222–1234.

- Belomestny, D., Solving optimal stopping problems via empirical dual optimization. Ann. Appl. Probab., 2013, 23(5), 1988–2019.

- Belomestny, D., Bender, C. and Schoenmakers, J., True upper bounds for Bermudan products via non-nested Monte Carlo. Math. Finance, 2009, 19(1), 53–71.

- Belomestny, D., Hildebrand, R. and Schoenmakers, J., Optimal stopping via pathwise dual empirical maximisation. WIAS Preprint 2043, 2014.

- Belomestny, D., Bender, C. and Schoenmakers, J., Solving optimal stopping problems via randomization and empirical dual optimization. Math. Oper. Res., 2022. https://doi.org/10.1287/moor.2022.1306

- Broadie, M. and Glasserman, P., A stochastic mesh method for pricing high-dimensional American options. J. Comput. Finance, 2004, 7(4), 35–72.

- Davis, M.H.A. and Karatzas, I., A deterministic approach to optimal stopping. In Probability, Statistics and Optimisation. A Tribute to Peter Whittle, edited by F.P. Kelly, Wiley Series in Probability and Mathematical Statistics. Probability and Mathematical Statistics. pp. 455–466, 1994 (Wiley: Chichester).

- Desai, V.V., Farias, V.F. and Moallemi, C.C., Pathwise optimization for optimal stopping problems. Manage. Sci., 2012, 58(12), 2292–2308.

- Glasserman, P., Monte Carlo Methods in Financial Engineering, Vol. 53, 2003 (Springer Science & Business Media: New York).

- Haugh, M. and Kogan, L., Pricing American options: A duality approach. Oper. Res., 2004, 52(2), 258–270.

- Kolodko, A. and Schoenmakers, J., Iterative construction of the optimal Bermudan stopping time. Finance Stoch., 2006, 10(1), 27–49.

- Longstaff, F.A. and Schwartz, E.S., Valuing American options by simulation: A simple least-squares approach. Rev. Financ. Stud., 2001, 14(1), 113–147.

- Rogers, L.C.G., Monte Carlo valuation of American options. Math. Finance, 2002, 12(3), 271–286.

- Schoenmakers, J., Zhang, J. and Huang, J., Optimal dual martingales, their analysis, and application to new algorithms for Bermudan products. SIAM J. Financ. Math., 2013, 4(1), 86–116.

- Tsitsiklis, J. and Van Roy, B., Regression methods for pricing complex American style options. IEEE Trans. Neural. Net., 2001, 12(14), 694–703.