ABSTRACT

Our goal was to analyse the effects of performance funding under the specific conditions of Czech and Slovak institutions of higher education, based on the assumption that the key motive for introducing performance funding is to increase accountability. The article confirms that NPM tools still coexist with other reform trajectories and framings, and it explains in detail how performance funding schemes work in financing higher education-based science in selected transition countries. We find that the effects of performance funding on accountability differ depending on the funding parameters and context, and we identify forms of adaptation not previously reported in the literature.

1. Introduction

The paper focuses on performance funding and its impact on the accountability of Czech and Slovak universities in implementing public administration programmes. Hood (1991, 1995) and many other authors identified performance measures as a typical tool of New Public Management (NPM) as it developed in the UK. Public sector reform practice also implemented this NPM tool through performance funding systems. The tool did not disappear with the ‘end’ of NPM, because the ‘Adieu NPM’ narrative must itself be read with some relativity. As a result, many NPM-based tools and instruments are still used and optimized to support process improvements, and governments are still trying to optimize their internal workings (De Vries and Nemec 2013, 13).

Direct performance financing persists today, especially in the financing of university education. Many countries link allocations or subsidies from the state budget for universities to selected performance indicators in order to ‘reward’ better programmes and to stimulate social outcomes. However, the social outcomes of such systems appear to differ depending on many features of the local environment.

We can identify a long stream of literature that examines the impact of performance funding on universities in the US and other Western countries (Umbricht, Fernandez, and Ortagus 2017). These articles cover a wide variety of topics such as student admission, tuition fee increases (Kelchen and Stedrak 2016), impact on minorities (Chan, Mabel, and Mbekeami 2022), and interest groups (Tanberg 2010). Many articles also underline the unintended effects and the implications for the accountability of universities (Dougherty et al. 2014; Hillman and Corral 2017). However, much less can be found on the conditions of transitional and post-transitional economies, to which the Czech Republic and Slovakia belong. Existing articles deal primarily with problems such as academic inbreeding and internationalization (Macháček et al. 2022), low scientific publishing performance (Jurajda et al. 2017), and unethical behaviour and predatory publishing (Macháček and Srholec 2021; Pisár and Šipikal 2018). These topics are addressed mainly in bibliometric and data-analysis studies, whereas exploratory studies that would reveal the roots of the problem in the Central and Eastern European (CEE) context are missing entirely.

Our paper seeks to fill this gap by analysing the effects of performance funding on science under the specific conditions of Czech and Slovak higher education institutions (HEIs). The primary motivation of our paper lies in the assumption that the key purpose of introducing performance funding is to increase accountability (Favero and Rutherford 2020).

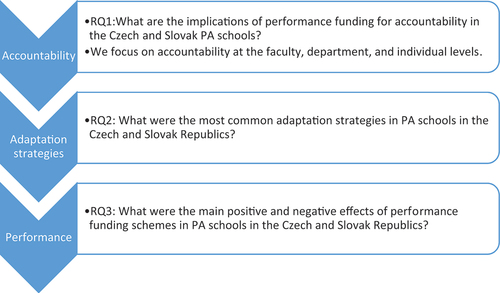

We use a research framework based on a layered approach (Porumbescu, Meijer, and Grimmelikhuijsen 2022) to analyse the change in accountability. The layered approach examines accountability at several levels: the macro level (faculty), the meso level (departments) and the micro level (individual scientists). The sub-objectives are to identify the impact of performance funding on scientific performance and the key adaptation strategies of the main actors. To achieve these objectives, we use case studies of the Czech and Slovak Republics. Within each case study, we focus on the field of public administration and public policy. To conduct the case studies, we use desk research, bibliometric analysis and structured interviews with key stakeholders within HEIs, namely deans, vice-deans, heads of departments and researchers.

The added value of our paper lies in a more nuanced analysis of the effects of NPM tools in science than bibliometric analysis alone can provide. Our results show that performance funding increases the production of scientific articles but, on the other hand, has adverse effects on accountability at all system levels, as the social dimension of accountability is reduced.

2. Theoretical background and conceptualization of research questions: centred on accountability

The introduction of performance funding in universities reflects broader changes in the public sector associated with the advent of New Public Management and Reinventing Government. This period can be described as one of distrust in the public sector and its organizations. The accountability of public institutions became the focus of policymakers’ attention; institutions must demonstrate that they create value for money.

Bovens (2006, 3) defines accountability as a relationship between an actor and a forum, in which the actor has an obligation to explain and justify his or her conduct, the forum can pose questions and pass judgment, and the actor may face consequences. Accountability is also used as a synonym for responsiveness, responsibility and effectiveness. Five dimensions of accountability are also distinguished: transparency, liability, controllability, responsibility and responsiveness (Bovens 2006, 8). Accountability mechanisms now focus on results instead of inputs, and institutions are beginning to collect and report data on their performance (Rutherford and Rabovsky 2014). In higher education, these data should reflect both academic impacts (various research outputs) and social impacts.

Part of New Public Management, in the spirit of the famous ‘let the managers manage’, is increasing institutions’ discretion and autonomy. Managers have greater freedom to apply their creativity and entrepreneurial spirit to complex problems; they are rewarded if their solution succeeds but are also held accountable if it proves dysfunctional (Rutherford and Rabovsky 2014, 188). Authors often refer to this situation as a ‘great bargain’: institutions are given greater autonomy and discretion in exchange for greater accountability and corresponding results in teaching and science (Herbst 2007).

Full-scale performance funding of universities was first adopted in Tennessee in 1979 (Rutherford and Rabovsky 2014, 187). In the 1980s and 1990s, it spread to Europe and other countries. In the initial phases, performance funding was mainly linked to accountability for undergraduate student outcomes. Subsequently, it also reached other areas such as science and research, opening up the debate on how to measure student success, quality, and performance in science and research (Rutherford and Rabovsky 2014). Dougherty and Natow (2020) note that the accountability movement in universities was partly rooted in the strong leaning of political and academic elites at the time towards neoliberal ideologies. New Public Management also promotes market principles in the university environment, in which universities compete for resources, which is expected to make the whole system more efficient. It also sees market or quasi-market mechanisms as a partial solution to the principal-agent problem in higher education (Dougherty and Natow 2020). Principal-agent theory was initially the key framework for analysing the effects of performance funding on universities. Over time, other theoretical approaches have emerged that reflect the complexity of the university environment and its multiple masters, or that question the assumption that actors in universities act only to pursue their own self-interest.

The literature is not uniform in identifying the impact of performance funding on the university environment. The positive effects of performance funding can be identified in two areas. The first comprises teaching and research (Texeira et al. 2023), rising student enrolment (Texeira et al. 2023), and curriculum improvement. The second comprises student services (Li 2018), better data collection and awareness of institutional performance (Li 2018), and student outcomes (Dougherty and Natow 2020). According to Li (2018), the negative effects include an increase in short-term certificates, more selective admission criteria, and falling academic quality and expectations. In research, the following results can be identified: an increase in the number of publications, higher scientific productivity, and a shift towards publishing in higher-quality journals (Dougherty and Natow 2020; Texeira et al. 2023). On the other hand, negative phenomena can also be observed, such as the creation of citation networks, publication in predatory journals, false authorship, a focus on short-term results, and a loss of willingness to take risks (Dougherty and Natow 2020; Texeira et al. 2023). The term ‘unintended effects’ (Dougherty and Natow 2020) can also be used for a more general analysis of the impact of public performance funding. This category includes the gaming of performance measures at the university level, rising compliance costs, reduced intake of less advanced students, the narrowing of institutional missions, the stratification of institutions and academic labour forces, and damaged motivation of higher education personnel (Dougherty and Natow 2020, 469).

Texeira et al. (2023) conclude that the differences in the impact of performance funding on teaching and research are due to the design of the performance-based funding schemes adopted by individual countries. Mizrahi (2021) distinguishes between the American and European models of performance funding. In the US, performance funding focuses primarily on aspects related to teaching, while research is funded by the government, private sources and foundations on a competitive and merit basis (Mizrahi 2021, 299). In Europe, however, performance funding is used for both teaching and research, and funding through public budgets is dominant, while private budgets play only a marginal role (Mizrahi 2021, 299). Texeira et al. (2023) show that European performance funding systems are also quite heterogeneous, identifying three models: the formula type with an output component, performance agreements, and assessment exercises. They also present the main groups of indicators used: the number of credits earned by students, the number of first- and second-cycle graduates, the number of Ph.D. graduates, third-party funding, the number of publications, the quality of publications, and peer review assessment. Texeira et al. (2023) emphasize the dynamic nature of performance funding: it has evolved differently in different countries, and several generations of performance funding models can be identified within individual countries.

The effects of implementing performance funding systems are also dynamic: the most significant effects are achieved during initial implementation and fade over time (Texeira et al. 2023).

We begin our conceptualization with Pollitt’s (2013) assumption that performance systems do not follow a single logic leading causally from the performance management system to performance; rather, alternative logics can shape the relationships between actors. In higher education, we can assume that actors combine goal-oriented instrumental/technical rationality, primarily motivated by pay and job security, with attempts to behave according to local norms, and that they may often be driven by altruistic or at least collective values. On the other hand, they may also try to ensure the survival of the organization, create a cushion, or demonstrate performance that is good enough to avoid naming and shaming (Pollitt 2013). These logics may compete with one another. No single logic necessarily prevails; on the contrary, several logics can operate side by side and alternate in dominance over time. A change in an actor’s behaviour over time aimed at meeting the requirements of a performance scheme can be regarded as an adaptation strategy. In some cases, the accountability mechanism may work and lead to the desired performance, but gaming and cheating may also occur. Gaming means bending the rules, and cheating means directly breaking them (Pollitt 2013, 354). Gaming and cheating can generate a performance paradox, in which performance indicators do not correlate with actual performance (Pollitt 2013).

In the Czech Republic and Slovakia, we do not yet have enough empirical evidence to describe the impact of NPM on the efficiency of the public sector. However, the available empirical studies (Plaček et al. 2021; Plaček et al. 2020; Plaček et al. 2021) are very sceptical about its positive effects. They conclude that the basic preconditions for successfully implementing NPM tools, such as the use of performance information (Nitzl, Sicilia, and Steccolini 2019), a balance between organizational autonomy and control (Yu 2023) and, of course, political accountability (Hong, Hyoung, and Son 2020), are not met.

If we focus on higher education in the Czech Republic and Slovakia, we can see very similar problems. Plaček et al. (2015) point out problems with implementing benchmarking in higher education. Nemec et al. (2012) describe an unstable, underfunded environment with a lack of accountability in the system. As mentioned in the introduction, several studies describe the impact of performance funding on scientific performance. Jurajda et al. (2017) note that the performance of Czech and Slovak scientists still lags far behind Western standards. Using Beall’s list, Macháček and Srholec (2021) point to the persistent practice of publishing in predatory or grey-area journals. Munich and Hrendash (2019) and Hrendash et al. (2018) also note that the dominant strategy in this context is to publish in domestic journals indexed in the Web of Science. Finally, Bajgar (2022) points to the low publication performance and citation impact of prominent scientists who review grant applications: according to the study, grant evaluators have much lower publication performance than grant applicants. However, these studies are purely scientometric and do not describe how accountability mechanisms work in the given system.

The critical role of accountability in the system is illustrated by evidence which, although anecdotal, demonstrates the significant impact of accountability on the science system and on society’s trust in science. In both the Czech and Slovak systems, there have been cases of prominent scientists, academic officials and politicians whose academic practices have shown signs of cheating and gaming, such as publishing an excessive number of papers in grey-zone journals or in outright predatory journals. Cases were also identified in which those involved published in journals far outside their expertise and their contribution to the article could not be identified. There have also been cases of falsification of scientific data in order to achieve prestigious publications and obtain research grants.

These cases indicate that the accountability problem is endemic and permeates the entire system, which is also why we adopt a layered approach to analysing accountability. It is important to examine what effect performance funding has on accountability at the faculty level (macro level), the department level (meso level), and the individual level (micro level), and how these levels relate to one another.

We start from the premise that it is first necessary to examine the implications of performance funding for accountability at the faculty, department and individual levels. The governments of the selected countries decided to stimulate accountability (the academic and social impacts of universities) through performance funding. To achieve this goal, they distribute public money to universities on the basis of criteria set by the performance scheme (e.g. the quality of publications, expressed in terms of the impact factor or the Article Influence Score of the journals in which they appear). ‘Publications can ultimately contribute to higher ratings and thus attract more public money’ (Musiał-Karg, Zamęcki, and Rak 2023). Changes in the values of the criteria provide information on whether the system works more or less effectively (Sulkowski 2016). ‘University and faculty authorities are very aware that points for publications determine the level of their government grants and/or the possibility of running courses’ (Musiał-Karg, Zamęcki, and Rak 2023).

Performance funding may have different impacts on actors at different levels. Indeed, performance funding very often does not translate to lower levels (departments, individuals).

These effects are reflected in the choice of adaptation strategies, which can include, for example, adapting the quality standards of scientific activity or gaming the system. At the end of the process, the results of performance funding in a given context become visible. The structure and logic of the research questions are shown in the following figure.

3. Methodology

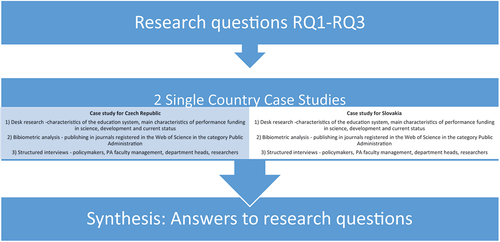

Our study is explanatory in nature, seeking to describe the mechanisms and factors at work in a given context (Grossi and Thomasson 2015). We follow the assumption that the effects of performance funding are highly context-dependent (Mizrahi 2021, 299; Texeira et al. 2023). The selection of the Czech Republic and Slovakia rests on the following assumptions: both states have implemented performance schemes in higher education; the scientific performance of both is still low compared with Western countries (Jurajda et al. 2017); both show similar problems with scientific accountability, as described in the conceptualization section; and documents on their performance schemes are available. We chose public administration programmes to reduce the complexity of the comparison: in both states, these programmes are relatively small compared with others (e.g. economics, engineering), which allowed us to map the problem in greater detail.

In 1990, the Czechoslovak Socialist Republic was renamed the Czech and Slovak Federative Republic, which existed until 1993, when it split into the Czech Republic and the Slovak Republic. Because of their shared history, both countries are suitable subjects for a comparative study. However, some recent studies show divergent trajectories in selected public policies, e.g. concerning the non-profit sector (Plaček et al. 2021) or cultural policy (Plaček et al. 2018).

We chose the same research strategy for each country case study in order to compare the results across countries. Each case study used desk research, bibliometric analysis and in-depth structured interviews. Desk research using official government documents was used to describe the performance funding system and the changes it has undergone.

The bibliometric analysis focuses on the research outputs of universities: it analyses articles published between 2009 and 2022 in journals indexed in the Web of Science in the public administration category. Articles were selected according to the following criteria: at least one of the authors must have an affiliation with a Czech or Slovak institution, and the ‘type of publication’ must contain ‘Article’, i.e. all entries marked ‘review’ or ‘editorial’ were excluded. Articles were then classified into quartiles 1 to 4 according to the impact factor of the journal. The number of articles in each quartile is a basic indicator of productivity within the performance scheme. The number of articles in first-quartile journals also reflects the quality of the output, based on the simple assumption that journals with high impact factors attract more submissions and have a more demanding peer review process. This makes it possible to assess whether universities use the public money allocated for scientific activities efficiently, which corresponds to responsibility, one of the dimensions of accountability.
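The selection and counting steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the pipeline used in the study: the `Record` fields, the country labels and the document-type strings are hypothetical stand-ins for a Web of Science export, and articles in journals without an impact factor are kept under a separate "no IF" label here, which may differ from how the study's chart groups them.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Hypothetical record layout; a real Web of Science export uses its own field tags.
@dataclass(frozen=True)
class Record:
    year: int
    doc_types: tuple          # e.g. ("Article",) or ("Review",)
    countries: frozenset      # countries in the authors' affiliations
    quartile: Optional[str]   # "Q1".."Q4", or None if the journal has no impact factor

CZ_SK = {"Czech Republic", "Slovakia"}          # assumed country labels
EXCLUDED = {"Review", "Editorial Material"}     # assumed labels for review/editorial

def is_eligible(r: Record) -> bool:
    """Apply the selection criteria described in the text."""
    return (
        2009 <= r.year <= 2022
        and bool(r.countries & CZ_SK)            # at least one CZ or SK affiliation
        and "Article" in r.doc_types             # document type contains "Article"
        and not (EXCLUDED & set(r.doc_types))    # reviews and editorials dropped
    )

def quartile_counts(records):
    """Count eligible articles per (year, quartile); no-IF journals get 'no IF'."""
    counts = Counter()
    for r in records:
        if is_eligible(r):
            counts[(r.year, r.quartile or "no IF")] += 1
    return counts

def q1_share(counts, year):
    """Share of a year's eligible articles in Q1 journals (a simple quality proxy)."""
    total = sum(n for (y, _), n in counts.items() if y == year)
    return counts[(year, "Q1")] / total if total else 0.0

# Invented example records, for illustration only.
records = [
    Record(2019, ("Article",), frozenset({"Czech Republic"}), "Q1"),
    Record(2019, ("Article",), frozenset({"Slovakia", "Austria"}), None),
    Record(2019, ("Review",), frozenset({"Czech Republic"}), "Q2"),  # excluded: review
    Record(2008, ("Article",), frozenset({"Slovakia"}), "Q1"),       # excluded: before 2009
    Record(2020, ("Article",), frozenset({"Poland"}), "Q1"),         # excluded: no CZ/SK author
]
counts = quartile_counts(records)
print(counts, q1_share(counts, 2019))
```

Counting per year and quartile keeps both readings mentioned in the text available: totals across quartiles serve as the productivity indicator, and the Q1 share serves as the quality proxy.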

After the bibliometric analysis, we conducted the in-depth structured interviews, using purposive sampling. We approached faculty representatives at the levels of dean, vice-dean, department head and individual researcher. Respondents were recruited from August to October 2022. Some of them identified themselves as representing more than one role, because their roles changed during the period under review and they gained personal experience with the described methodologies from different perspectives. Information on the structure of the respondents is provided in Annex 1.

All respondents were presented with the following definition of accountability at the beginning of the interview: ‘accountability is claimed to sustain or raise the quality of performance’ (Huisman and Currie 2004, 531). Faculties are accountable for the development of scientific knowledge (academic impact), the development of society as a whole and local development (social impact), integrity, ethics, and the transparency of all processes. Faculties are accountable to the state (Ministry of Education), to citizens and companies who provide resources, to students and their parents, and to local communities. Researchers are accountable to their supervisors (especially heads of departments) for academic impact, social impact, and the ethical delivery of outputs. We followed up on this definition in the interview with the following questions (see Table 1):

Table 1. Interview questions.

The interviews were recorded, transcribed and coded according to the respondents’ answers. Coding was performed in three rounds. In the first round, the interviewers coded their own interviews; in the second, team members who had not been involved in the interview checked the results in order to reduce subjective bias; in the third, we returned to the literature and bibliometric data and discussed the coding results within the whole team. We adopted an interpretivist position because we wanted to understand the meaning that actors attach to performance schemes (Grima, Georgescu, and Prud’homme 2020). ‘We drew on grounded theory to reveal how individuals interpret the reality of their work and adjust to it. It is a useful approach in cases where there is no explicit hypothesis to test’ (Grima, Georgescu, and Prud’homme 2020, 35).

An overview of our research strategy is shown in the accompanying figure.

It is also important to state the limitations of our research strategy. We are aware of several potential sources of bias. The first is social desirability bias: the statements of individual respondents may be subjective. Moreover, our sample is dominated by representatives of academia, who may perceive the effects of performance funding quite differently from other stakeholder groups such as the public and politicians. The second is selection bias: as mentioned, we used purposive sampling, which risks omitting some important respondents. A further potential problem is the limited generalizability of our results. The chosen exploratory strategy describes the functioning of factors and mechanisms at a specific time and in a specific context, yielding new assumptions that need to be verified by other empirical methods.

4. Results

In this section, we present the results of the case studies for the Czech Republic and Slovakia and answer the research questions.

4.1. Case study Czech Republic

The public performance-based funding of HEIs focuses on results in educational and creative activities and uses several indicators related to students, staff and R&D outputs. The performance-based component of HEI funding took R&D results into account for the first time in 2011. Since then, the rules have been the same for two consecutive years on only one occasion. The rules are based on several interrelated parameters. The first parameter is the share of the performance-based component in institutional funding. It was set at 10% in 2011 and 20% in the following year, and it was raised to 24% in 2015–16. It was reduced again after 2018 and has since fluctuated between 16.66% and 17.54%. The second parameter is the weight of R&D outputs in the performance-based component. It was 25% in 2011 and increased to 34.3% by 2016; in 2017, it was set at 30%. The performance indicators also include external income, i.e. income not from the Ministry of Education, Youth and Sports (6.5% since 2019), which mainly reflects R&D grant resources obtained from national and supranational providers. These resources then form the basis for setting the performance-based budget component in subsequent years.
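Because the first two parameters are nested, their combined effect can be illustrated with simple arithmetic. The sketch below assumes that the R&D weight applies multiplicatively within the performance-based component, which the text does not state explicitly; under that assumption, R&D outputs would directly drive roughly 2.5% of institutional funding in 2011 and roughly 8.2% in 2016. The function name is hypothetical.

```python
def rd_linked_share(performance_share: float, rd_weight: float) -> float:
    """Approximate share of institutional funding driven by R&D outputs.

    Assumes (hypothetically) that the R&D weight applies multiplicatively
    within the performance-based component.
    """
    return performance_share * rd_weight

# Parameter values reported in the text: (year, component share, R&D weight)
PARAMS = [(2011, 0.10, 0.25), (2016, 0.24, 0.343)]

for year, share, weight in PARAMS:
    print(year, f"{rd_linked_share(share, weight):.1%}")
# 2011 2.5%
# 2016 8.2%
```

Even at its 2016 peak, under this assumption, less than a tenth of institutional funding would hinge directly on R&D outputs, which is worth keeping in mind when reading the strong adaptation responses reported below.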

The third parameter is the way R&D outputs are reported, which was first anchored methodologically in 2004 and changed regularly until 2017. The research and development evaluation system (nicknamed ‘RIV’ after the Czech abbreviation for the Register of Results Information) has undergone a major transformation since 2004. The system was originally designed only to evaluate the number of research results of individual organizations, but over time it became an essential instrument of the performance component of higher education funding. The evaluation system assigned a number of ‘RIV points’ to each publication output. Some details on the development of the evaluation system are described in Table 2.

Table 2. Details of the research outcomes system evolution in the Czech Republic.

Only the introduction of the new science evaluation framework, Methodology 2017+, made it possible to shift gradually away from evaluation based on the number of publication points obtained. In 2019, for the first time, outputs were partly weighted by the quality of the journals, measured by the Article Influence Score (WoS) and the SCImago Journal Rank (Scopus).

4.1.1. Bibliometric analysis

The following chart (‘Results of bibliometric analysis’) presents the results of the bibliometric analysis for the years 2009 to 2022.

The chart shows an overall increase in articles in journals indexed in the Web of Science associated with the implementation of performance schemes. From 2018, the number of articles in journals that the Czech performance scheme considers high quality (Q1 and Q2) began to grow. On the other hand, articles published in journals without an impact factor remained dominant in the Q4 category.

4.1.2. Impact on accountability

The experts’ answers show that the transition from the RIV methodology to Methodology 2017+ has led to increased accountability of HEIs, especially in terms of strengthening the quality of research work and academic impact. The same applies to the ethics of scientific work. The assessment of societal impact is more restrained and ambiguous.

‘As far as our faculty is concerned, I can say that the quantity has clearly gone down in favour of quality, and this also applies to articles from Scopus journals to WoS journals and especially to journals in the 1st and 2nd quartiles’. (CZ4)

‘I saw the transition between these methodologies as a greater push for quality research in terms of impact journals. But the extent to which it’s going now, I see that as a bit of a detriment, because the pressure for those top outputs – the Q1s and things like that – that seems to me to be a bit out of touch with the reality of where those universities are or what the reality is’. (CZ10)

The reactions of HEIs so far indicate that accountability, understood as a commitment to producing high-quality, internationally relevant scientific outputs, has gradually improved. This improvement is evidenced by bibliometric data and by the results of previous evaluations of science in HEIs involving panels of renowned international evaluators.

Several experts (CZ1, CZ4, CZ5, CZ7) also highlighted problems arising from changes in interpersonal relationships within HEIs, which must strive for excellence not only in research but also in fulfilling their other functions. Consequently, the challenge for HEI management at all levels is to create an environment that also recognizes contributions other than pure research excellence.

‘The atmosphere at the faculty is not ideal because many people feel that they keep failing all the time. And yet, we all know how much work it takes to make a decent, even if not excellent, paper.’ (CZ5)

‘The system creates extremely skewing effects when it comes to the career progression of academics. Career progression is based on peer-review. It assesses whether these people are, generally speaking, good or bad. The moment there are sophisticated scientific metrics and very poor metrics on teaching, it naturally skews and protects the results of science and favours others. It forces academics to devote far more effort to science than is proportionately appropriate.’ (CZ4)

‘The new methodology (2017+) completely destroys the Humboldtian idea of the university.’ (CZ4)

4.1.3. Main adaptation strategies

Both schemes have understandably generated various adaptation strategies on the part of HEIs, their units, and individual researchers. The reaction to RIV was quite similar everywhere. Institutions generally produced a list of research outputs and publications that was, in essence, a price list. This price list allowed quality to be compensated by quantity. A widespread means of generating more outputs was to publish in indexed conference or similar proceedings and in local, scientifically low-impact journals with less demanding peer review procedures. Through a purposeful citation strategy, local journals increased their formal impact.

‘Of course, there were pressures to say, for example, that one good result = 10 stupid results…’ (CZ6)
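The compensation mechanism of such a price list can be made concrete with a toy calculation. The point values below are hypothetical, picked only to mirror the respondent’s "one good result = 10" remark rather than to reproduce actual RIV scores; the point is that any fixed per-output price makes a portfolio’s total depend on volume, so enough low-value outputs can match one high-value output.

```python
# Hypothetical point values chosen only to mirror the "1 good = 10" quote;
# they are NOT the actual RIV scores.
PRICE_LIST = {
    "top_journal_article": 10,
    "local_journal_article": 2,
    "proceedings_paper": 1,
}

def total_points(outputs: dict) -> int:
    """Sum of points for a portfolio given as {output type: count}."""
    return sum(PRICE_LIST[kind] * count for kind, count in outputs.items())

quality_portfolio = {"top_journal_article": 1}
quantity_portfolio = {"proceedings_paper": 10}
print(total_points(quality_portfolio), total_points(quantity_portfolio))  # 10 10
```

A linear sum was chosen deliberately: it is the simplest scoring rule under which quantity can fully substitute for quality, which is the behaviour the respondents describe.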

Under the later Methodology 2017+, we can observe more variability in the adaptation strategies adopted. Some may appear somewhat extreme: since the methodology values the proportion of publications placed in the first two quartiles, some institutions have banned publishing in Q3 and Q4 journals (CZ7).
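A ratio-based indicator explains why a ban on Q3 and Q4 publishing can look rational to an institution. Assuming, for illustration only, that the indicator is simply the share of a unit’s articles in Q1 and Q2 journals (the actual Methodology 2017+ formula may differ), removing Q3 and Q4 outputs raises the score without adding a single top-quartile article. The function name and the counts below are invented.

```python
def top_quartile_share(counts: dict) -> float:
    """Share of a unit's journal articles that appeared in Q1 or Q2 journals.

    Hypothetical simplification of a ratio-based indicator.
    """
    total = sum(counts.values())
    top = counts.get("Q1", 0) + counts.get("Q2", 0)
    return top / total if total else 0.0

before_ban = {"Q1": 2, "Q2": 3, "Q3": 5, "Q4": 10}
after_ban = {"Q1": 2, "Q2": 3}  # same Q1/Q2 output, Q3/Q4 publishing banned

print(f"{top_quartile_share(before_ban):.0%} -> {top_quartile_share(after_ban):.0%}")
# 25% -> 100%
```

The denominator is what makes the ban attractive under this assumption: a count-based indicator would reward the extra Q3 and Q4 papers, whereas a share-based one penalizes them.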

On the other hand, we more often encountered responses indicating that research institutes had not resorted to such a radical policy and viewed publications in the lower quartiles as a natural part of professional growth, especially for junior scholars. We can also see the emergence of schemes that enable and incentivize the hiring of top experienced researchers. Many HEIs and their units have begun establishing centres of research excellence to link individual researchers and create strong research teams capable of pursuing excellence in promising areas of study. However, recognizing that cutting-edge science cannot be done locally, institutions are also developing various tools to encourage international collaboration and involvement in international research programmes.

‘Only a few people are ready to publish in Q1 or Q2 right from the start of their career. If you don’t let them write elsewhere, they don’t have a chance to grow. If you’re managing something, you have to set achievable goals for people. We take that into account in our internal methodology, although Methodology 2017+ discourages it. We’ll pay four people from the outside to make A’s and B’s, and we’ll have an A on our evaluations.’ (CZ4)

‘You don’t just get Q1 here in Prague, Brno or Ostrava.’ (CZ9)

‘Locally relevant research can easily stay in Q4 or in a journal that is not in WoS, because we know that this might be an important part of your mission.’ (CZ2)

4.1.4. Negative and positive effects

As mentioned above, two different performance-based research funding systems have been used in the Czech Republic over the last twelve years: ‘RIV’ and ‘Methodology 2017+’. For both, we asked the experts we interviewed about their strengths and weaknesses.

Almost all interviewed experts (except CZ1 and CZ9) agreed that the main benefit of the first scheme was that it acted as an effective stimulus for academics to engage in their own creative work and to publish their results in addition to teaching. Counting virtually all outputs, regardless of their merit and quality, activated most scholars, including those who, for various reasons, could not aspire to placing their results in top journals. Moreover, in fields where the quality of results is measured exclusively by articles in respected impact-factor journals, the relative weighting of results has undeniably promoted the quality and international relevance of research.

‘Both schemes are beneficial in that they create pressure for internationally recognized performance. They provide some benchmarks. Where there is no measurement, there is no management. Measurement must exist – it leads to higher performance…’ (CZ4)

The RIV scheme was (and still is) heavily criticized for many reasons. Many blame it for the negative consequences it has caused, which persist to this day. In particular, it is blamed for causing the long-term erosion of the benchmarks for quality and useful scientific work. Non-standard forms of publishing, such as indexed proceedings, self-published monographs, and the like, have become common practice in many disciplines to compensate for the inability to break into respected journals. Mutually citing ‘fraternities’ have emerged.

‘I remember that, of course, quantity was very much preferred to quality. Many academics chose the easier path. That is, they looked for ways to score points and essentially contribute financially to the university and themselves by producing some “scientific” output.’ (CZ3)

With a few exceptions (CZ1, CZ10), the experts agreed that the new science evaluation system represents a significant shift away from ‘RIV’ towards the desired support of quality research and generates a range of important information needed for the effective strategic management of research institutions.

However, the experts interviewed also expressed some criticism. It was directed mainly at some of the technical details of the methodology and at its inability to capture the social relevance of research (CZ4, CZ5, CZ7, CZ9).

‘The 2017+ Methodology does introduce a category of societal impact, but if you look at what gets points there, it’s just not outputs that meet that characteristic, because ultimately, it’s being evaluated from a scientific level anyway. The assessors quite often give a mark for social relevance commensurate with where the work was published and whether it was at a sufficient theoretical level.’ (CZ5)

4.2. Case study Slovak Republic

As in the Czech Republic, public performance-based funding of HEIs in Slovakia focuses on results in educational and creative activities and is based mainly on indicators related to the number of students and R&D outputs. It was introduced after the new university law was amended in 2002. Formula-based allocation is used for two financing subprogrammes, which together represent approximately 90% of the total allocation:

- subprogramme 07711: Subsidy to cover educational costs of HEIs

- subprogramme 07712: Subsidy to cover R&D costs of HEIs

R&D results are included as a calculation criterion in both subprogrammes. The allocation via subprogramme 07711 is based mainly on the following parameters: the number of students, the number of graduates, a programme cost coefficient, R&D results, and the percentage of employed graduates. The institutional allocation via subprogramme 07712 is based entirely on R&D results.

The formulas for calculating the R&D part of the subsidies via both subprogrammes have remained largely the same from the beginning until today, but the partial weights and details change every year. The Ministry of Education prepares the formulas in early autumn, sends the proposal to the representative bodies of Slovak HEIs for consultation and, on the basis of their responses, finalizes the formula for the coming year.

The calculation details of the formulas are extremely complex, and it is not possible to present all their elements in a comprehensive, historical perspective. As an example, we provide the criteria used to allocate the R&D element within subprogramme 07711 for two different years (Table 3).

Table 3. R&D criteria of the allocation formula for subprogramme 07711, years 2015 and 2022.

From the point of view of our paper, the most interesting criterion is the HEI’s share of publishing activity, measured with a point system, which is an important basis for allocation in both subprogrammes and ‘produces’ a critical part of the total revenue of HEIs. Throughout the whole period of performance financing, all categories of publications have been included in the formula, with the highest scores given to books (monographs) and to articles in the WoS (Clarivate) database. The weight of these two types of outputs has increased continuously, especially in recent years, but a high number of ‘lower-rank’ publications can still generate substantial revenue.
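The share-based logic of this criterion, combined with a budget that is capped by the state allocation, can be illustrated with a small Python sketch. It is a stylized illustration under loudly stated assumptions, not the Ministry’s formula: the point weights, output counts, budget and the names `score`, `allocate` and `value_per_point` are all hypothetical. It only shows the mechanism that each HEI receives its share of total points times a fixed pool, so the money attached to one point falls whenever total output grows faster than the pool.

```python
"""Hypothetical sketch of a capped, share-based allocation of publication points.

All weights, output counts and the budget are invented for illustration; they are
not the actual parameters of subprogrammes 07711 or 07712.
"""

# Hypothetical point weights: monographs and WoS articles score highest,
# but every category of output still earns some points.
WEIGHTS = {"monograph": 10.0, "wos_article": 8.0, "other": 1.0}


def score(outputs: dict[str, int]) -> float:
    """Total publication points of one HEI."""
    return sum(WEIGHTS[kind] * count for kind, count in outputs.items())


def allocate(budget: float, points: dict[str, float]) -> dict[str, float]:
    """Split a fixed budget among HEIs in proportion to their share of all points."""
    total = sum(points.values())
    return {hei: budget * p / total for hei, p in points.items()}


def value_per_point(budget: float, points: dict[str, float]) -> float:
    """Money earned by a single point when the pool is capped."""
    return budget / sum(points.values())


BUDGET = 1_000_000.0  # hypothetical fixed pool, identical in both years

year1 = {
    "HEI_A": score({"wos_article": 50, "other": 100}),  # 500 points
    "HEI_B": score({"monograph": 5, "other": 300}),     # 350 points
}
# In year 2 both HEIs produce more, but the pool does not grow.
year2 = {
    "HEI_A": score({"wos_article": 60, "other": 150}),  # 630 points
    "HEI_B": score({"monograph": 5, "other": 450}),     # 500 points
}

if __name__ == "__main__":
    for label, points in (("year 1", year1), ("year 2", year2)):
        print(label, f"value per point: {value_per_point(BUDGET, points):.2f}")
        for hei, amount in allocate(BUDGET, points).items():
            print(f"  {hei}: {amount:,.0f}")
```

In this invented example, HEI_A raises its output (ten more WoS articles and fifty more other outputs) yet receives less money in year 2, because HEI_B’s larger increase in low-weight outputs dilutes the value of every point. Stylized as it is, this mirrors both the capped-budget dilution and the observation that ‘lower-rank’ publications can still generate substantial revenue.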

4.2.1. Bibliometric analysis

The following presents the results of the bibliometric analysis for the years 2009 to 2022.

A trend very similar to that in the Czech Republic can be observed in the Slovak data. The number of publications is growing significantly, but journals without an impact factor or journals in Q4 remain dominant.

4.2.2. Impact on accountability

The experts’ answers indicate that the system motivates academics mainly to increase the number of publications, with less regard for real quality and academic ethics.

‘The current system does not motivate accountable research, but rather the “management” of research outputs.’ (SK7)

‘By motivation I would understand that when a university teacher publishes, has projects – that is, has performance – he is adequately rewarded, which motivates him.’ (SK4)

In the experts’ view, the allocation system does not seem to generate real accountability for every type of social output that HEIs should deliver; its central effect is to push academics to publish more by any means.

‘Many researchers, but also “researchers”, have started to focus on “brands” (e.g. WoS or Scopus), regardless of real quality. It is precisely the offer of publishers such as MDPI and the focus on the aforementioned “brands” that has led in several cases to unethical behaviour, or behaviour that is contrary to the expected integrity of a scientist’. (SK6)

The main problem of the Slovak system is that it practically does not cover the non-academic (social) outputs and impacts of universities; its focus is on academic publications. It does not reflect contributions that may be critical for the country’s development.

‘The fact that I work (as a volunteer) at the level of a United Nations committee is reflected neither by the Slovak R&D evaluation system nor by the internal mechanisms of my university.’ (SK7)

4.2.3. Main adaptation strategies

The allocation system has prompted changes at all levels. At the faculty level, almost all HEIs, if not all, have introduced some form of internal performance management scheme. These schemes try to copy the central formula and, in most cases, serve to calculate and compare the performance of departments or other internal units as well as individual performance. Internal performance schemes have very different features. For example, the ways in which they reward publications and research grants differ significantly. In some faculties, there is no link between the amount of funding obtained through successful research grants and the salary of the successful researcher. Other faculties have transparent mechanisms by which the researcher is rewarded as the principal investigator, and principal investigators determine the rewards for team members.

Interesting answers were given at the department and individual teacher levels. According to these responses, the capacity of institutional performance financing systems to motivate accountability is very problematic (SK2–SK10). The main message is that the primary motivation is to publish (‘publish or perish’). However, bottom-up motivation still ‘survives’, not as the rule but rather as a matter of personal dignity, an aspect specifically mentioned by SK9.

The real ‘power’ of performance motivation is also limited by specific factors related to the Slovak environment. The main specific issue of the Slovak system is the limited resources available. According to the OECD (2022), Slovakia’s public spending on tertiary education is only 0.7% of its relatively low GDP, and private resources are meagre, as there are no tuition fees at public HEIs. The total amount of public funding for HEIs is capped by the state budget allocation, so when more outputs enter the formula, the value of each individual publication decreases (SK1 mentioned that the ‘value’ of a Q1 article was 13,000 EUR in 2021 but only 8,000 EUR in 2022). With decreasing numbers of students in most programmes, many deans and heads of departments have to cope with a critical trade-off:

‘I have two choices: to keep the current employment, or to allocate the needed amount of resources to the internal motivation system to make it functional.’ (SK1)

With limited resources, most deans prefer a ‘keeping employment’ strategy to performance-based management (this means that low-performing teachers are not in danger of losing their jobs). The resources available for performance rewards are marginal and cannot provide a meaningful financial incentive.

‘I can allocate only 5–6% of our total salary budget to the performance rewards system; the rest must be used to cover basic salaries, defined by the central scheme.’ (SK1)

4.2.4. Negative and positive effects

It is fully apparent that using the performance formula in financing Slovak HEIs generated adaptation strategies at all levels. HEIs, faculties, and departments introduced their own internal motivation systems, each in a different way. Some, but not all, researchers reacted by increasing the number of articles they published in indexed journals, and the total volume of research outputs increased visibly.

All interviewed experts agreed that the increased focus on research performance in the performance financing scheme is most likely the factor contributing most significantly to the visible growth in the number of publications, including higher-quality international publications:

‘… after the introduction of performance funding, the publishing activity of universities has increased. This increase was most significant for previously underperforming universities’. (SK8)

However, compared to many other countries, the growth may not be fast enough in relative terms:

‘In relative terms, it may not be such a significant shift and compared to the international environment Slovakia is lagging behind in many respects’. (SK6)

As a specific positive impact of the performance-based financing scheme, some experts (SK1, SK9) also mentioned that the new system supported internationalization:

‘The new system also motivated towards higher internationalization, especially in the form of participation in international research grants, because universities are very well rewarded by the allocation formula for this activity’. (SK1)

However, real accountability was not created, and many perverse effects were generated as a result of weak points of the existing scheme, which has remained almost unchanged for the last twenty years. The experts agree that the scheme does not motivate accountability but rather a mechanical, and often unethical, increase in publication output. Predatory publishing was mentioned by all interviewees as the main unethical practice.

There are also other gaming strategies visible in Slovakia. For example, the experts mentioned an increasing number of authors per publication, predatory conferences, publishing monographs in Slovak abroad (especially in the Czech Republic), publishing in authors’ own faculty journals (still very visible) or creating their own journal abroad.

4.3. Answers to the research questions

We observe that the effects on accountability differ depending on the performance funding parameters and context. In quantity-dominated systems, we can observe several perverse effects aimed at maximizing the amount of funding (predatory publishing, grey-zone journals, hiring foreign researchers just to improve the score). These practices are becoming part of the system at all levels. If performance funding also takes into account the quality of outputs, it has a positive effect on the system, as there is greater acceptance of standards of quality and ethical research.

Performance financing may produce a form of ‘virtual accountability’. Faculties, departments and individuals accept the system set-up externally. At the same time, they know that they cannot succeed in this system without internal changes, so they create schemes at the level of faculties and individual departments that take into account different criteria. Performance financing that focuses on academic outputs in high-level journals endangers the social accountability of HEIs. This is especially visible in the Slovak Republic, where social outputs are neglected and their delivery depends on the personal attitudes of scholars.

Performance financing also creates important dilemmas. At the faculty level, this is the dilemma between employment and performance appraisal. At the departmental level, it is the dilemma between supporting early career scholars, providing core teaching, and focusing purely on research. At the individual level, scientists must decide whether to focus on purely scientific criteria, which are reflected in the funding system, or on social impact, which is not. The instability of the whole system is also very significant: administrative and political decisions lead to frequent changes in the system, which reduce its accountability. It is also interesting to point out the differences and common elements between the two case studies.

We can identify several traces of gaming in all the contexts and funding schemes we have examined. Gaming at the surveyed institutions can be characterized as an effort to obtain as much funding as possible, as easily as possible, without major structural changes in the functioning of the faculties. In the early stages, it took the form of excessive publishing in the institutions’ own journals and conference proceedings and of tolerance for publishing in predatory journals, which is still the case in Slovakia. As the system shifts towards quality, we may observe an end to tolerance for predatory journals but continued tolerance for journals in the grey zone.

Other specific gaming strategies mentioned by respondents across both case studies are:

- hiring external researchers who are not involved in the actual running of the faculty but are only tasked with generating publications, because the internal members of the departments are not capable of doing so;

- an increase in the number of authors per publication and attempts to participate in international schemes from which authorship of quality publications can result;

- publishing in one’s ‘own’ journals (managed by the author’s own faculty or a consortium of faculties);

- using foreign publishers to publish national monographs and the like.

As the main benefit, we can observe a significant increase in the number of publications in Web of Science indexed journals, bringing publishing practices in the selected countries closer to those of developed Western countries. On the other hand, performance funding schemes can produce several perverse effects, especially if poorly designed (as in the Slovak case). First, they generate an orientation towards outputs rather than outcomes. Second, the relevant actors tend to focus only on meeting the criteria of performance funding schemes and to ignore important societal aspects of science or aspects outside the performance schemes. Third, faculty members are sometimes discouraged from publishing in lower-ranked journals that are relevant to their country’s community.

It is also interesting to highlight the key differences between the case studies. In Slovakia, more so than in the Czech Republic, the fiscal stress or underfunding of the entire system is palpable. The actors are more concerned with preserving jobs and keeping the whole system at an acceptable level. The Czech system, on the other hand, is more ambitious in terms of scientific demands, and one of its main problems is that actors within it are concerned that they will not be able to sustain this. We can also observe the persistence of unethical forms of publishing, although these are declining in the Czech Republic and are being replaced by more sophisticated forms of gaming. Overall, the Czech system shows a faster evolution of performance schemes, that is, a quicker reaction to the negative aspects associated with implementing performance schemes in science.

5. Conclusions

Our paper contributes both to the literature on performance funding and to the literature on the implications of New Public Management for different areas of the public sector. We show that full performance funding, as one of the NPM tools, remains alive in selected CEE countries, perhaps paradoxically in areas where its application is problematic from the outset due to the complexity and social dimension of the activities (Kukla-Acevedo, Streams, and Toma Citation2012).

Regarding the functionality of performance funding, if we were to give a clear answer to the question of whether performance schemes work in our case, we would have to say ‘Yes, but …’. We cannot blame performance systems for everything that is wrong; the causality of perverse effects must be established with better data. We must also point out that the main performance schemes coexist with the internal performance schemes of faculties and departments, as well as with internal promotion-track systems, which can also generate positive and negative effects that are not the subject of this study. In our opinion, however, the biggest risk of implementing performance schemes lies in eliminating the disruptive potential of science (Drechsler Citation2019). Drechsler (Citation2019, p. 132) describes this phenomenon as follows: ‘Scholarship today means that the incentive is to write replicative papers that state the same thing that we know already, for mainstream top journals, even high citation numbers do not count that much’.

Our article contributes to the now classic debate between Dan and Pollitt (Citation2015) and Drechsler and Randma-Liiv (Citation2016) about whether NPM can work in Central and Eastern Europe. We can see that the effects of NPM tools change over time. In addition, we can observe that ‘fine-tuning’ may improve their results: even incremental improvements to the original schemes can produce positive effects.

On the other hand, notorious problems still persist due to the implementation of NPM tools in systems without a solid ethical foundation and with organizations lacking sufficient capacity for such implementation. The causes can be traced back to the establishment of the administrative systems in each country (Van der Kolk Citation2022). In the context of Central and Eastern Europe, the application of market principles has clashed with the way the administrative systems were set up in the countries concerned (Bouckaert Citation2022a). In countries like the Czech Republic and Slovakia, ‘market mechanisms’ do not work in higher education. We may speak of a quasi-market environment, as the designs of performance funding systems do not let HEIs with poor performance disappear. Administrative and technical interventions in the system often reduce the differences between the best and the worst.

Our main findings are in line with authors who argue that, in European countries, performance schemes bring about an increase in the production of scientific articles. We also confirmed the finding in the literature (Dougherty and Natow Citation2020) that different performance schemes result in different forms of gaming. Our original contribution is that we have identified new, more sophisticated forms of gaming that respond to changes in the performance schemes (e.g. employing scientists for the sole purpose of generating publications). We have also identified new accountability dilemmas raised by performance schemes in science. At the faculty level, it is the trade-off between maintaining employment and providing basic teaching, on the one hand, and scientific performance, on the other. At the department head level, it is the need to support young scientists for whom publication in top journals is an elusive target. At the individual scientist level, there is a trade-off between scientific performance and the social relevance of research. As a result, HEIs accept performance schemes mainly virtually, fail to implement the necessary changes in their internal workings, and create their own internal performance schemes to mitigate the pressure and adverse effects of performance funding.

Bouckaert (Citation2022a) expresses concern that market and network schemes cannot adequately address contemporary society’s problems. This problem can also be seen in the Czech and Slovak performance systems in science. Due to the COVID-19 pandemic and climate change, society is experiencing a crisis of confidence in science; the performance funding system ignores this problem and instead pushes for publications in foreign journals indexed in the Web of Science. As a result, the social accountability of scientists is reduced. This runs contrary to the ideas of the Whole of Government (WoG) approach, which is embedded in the Whole of Society (WoS) context (Bouckaert Citation2022a, Citation2022b). Scientific output becomes less inclusive of the rest of society, which raises the question of whether science should also address real societal problems or just focus on meeting indicators and hope that these indicators were designed as proxies for societal relevance.

Our paper also opens new trajectories for further research. In the case of performance funding in HEIs, it is necessary to examine what new forms of gaming HEIs use in response to changes in these schemes, and whether performance funding creates conflicts with the social mission of HEIs. In the case of NPM, it would be worthwhile to focus on how the effects of NPM schemes change over time, and on whether the perverse effects and risks connected with their use increase in less developed countries, where implementation capacity might be limited or even lacking.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Bajgar, M. 2022. Publication Performance of Panelists of the Grant Agency of the Czech Republic (2019–2021). Praha, Czech Republic: Idea Cerge EI.

- Bouckaert, G. 2022a. “From NPM to NWS in Europe.” Transylvanian Review of Administrative Sciences 2022 (SI): 22–31. Special Issue. https://doi.org/10.24193/tras.SI2022.2.

- Bouckaert, G. 2022b. The Neo-Weberian State: From Ideal Type Model to Reality? Working Paper WP 2022/10. London: UCL Institute for Innovation and Public Purpose.

- Bovens, M. 2006. Analysing and Assessing Public Accountability. A Conceptual Framework. European Governance Papers, No. C-06-01. Utrecht: Utrecht University.

- Chan, M., Z. Mabel, and P. Mbekeami. 2022. Incentivizing Equity? The Effects of Performance-Based Funding on Race-Based Gaps in College Completion. Working Paper No. 20-270. Annenberg Institute at Brown University.

- Dan, S., and Ch. Pollitt. 2015. “NPM Can Work: An Optimistic Review of the Impact of New Public Management Reforms in Central and Eastern Europe.” Public Management Review 17 (9): 1305–1332. https://doi.org/10.1080/14719037.2014.908662.

- De Vries, M. S., and J. Nemec. 2013. “Public Sector Reform. An Overview of Recent Literature and Research on NPM and Alternative Paths.” International Journal of Public Sector Management 26 (1): 4–16. https://doi.org/10.1108/09513551311293408.

- Dougherty, K., M. S. Jones, S. R. Natow, L. Pheatt, and V. Reddy. 2014. Envisioning Performance Funding Impacts: The Espoused Theories of Action of the State Higher Education Performance Funding in Three States. New York: Columbia University.

- Dougherty, K. J., and S. R. Natow. 2020. “Performance-Based Funding for Higher Education: How Well Does Neoliberal Theory Capture Neoliberal Practice?” Higher Education 80 (3): 457–478. https://doi.org/10.1007/s10734-019-00491-4.

- Drechsler, W. 2019. “After Public Administration Scholarship.” In A Research Agenda for Public Administration, edited by A. Massey, 220–232. Edward Elgar. https://doi.org/10.4337/9781788117258.00019.

- Drechsler, W., and T. Randma-Liiv. 2016. “In Some Central and Eastern European Countries, Some NPM Tools May Sometimes Work: A Reply to Dan and Pollitt’s ‘NPM Can Work’.” Public Management Review 18 (10): 1559–1565. https://doi.org/10.1080/14719037.2015.1114137.

- Favero, N., and A. Rutherford. 2020. “Will the Tide Lift All Boats? Examining the Equity Effects of Performance Funding Policies in U.S. Higher Education.” Research in Higher Education 61: 1–25. https://doi.org/10.1007/s11162-019-09551-1.

- Grima, F., I. Georgescu, and L. Prud’homme. 2020. “How Physicians Cope with Extreme Overwork: An Exploratory Study of French Public Sector Healthcare Professionals.” Public Management Review 22 (1): 27–47. https://doi.org/10.1080/14719037.2019.1638440.

- Grossi, G., and A. Thomasson. 2015. “Bridging the Accountability Gap in Hybrid Organizations: The Case of Copenhagen Malmö Port.” International Review of Administrative Sciences 81 (3): 604–620. https://doi.org/10.1177/0020852314548151.

- Herbst, M. 2007. Financing Public Universities. The Case of Performance Funding. Dordrecht: Springer.

- Hillman, N., and D. Corral. 2017. “The Equity Implications of Paying for Performance in Higher Education.” American Behavioral Scientist 61 (14): 1757–1772. https://doi.org/10.1177/0002764217744834.

- Hong, S., S. H. Kim, and J. Son. 2020. “Bounded Rationality, Blame Avoidance, and Political Accountability: How Performance Information Influences Management Quality.” Public Management Review 22 (8): 1240–1263. https://doi.org/10.1080/14719037.2019.1630138.

- Hood, Ch. 1991. “A Public Management for All Seasons?” Public Administration 69 (1): 3–19. https://doi.org/10.1111/j.1467-9299.1991.tb00779.x.

- Hood, Ch. 1995. “Contemporary Public Management: A New Global Paradigm?” Public Policy and Administration 10 (2): 104–117. https://doi.org/10.1177/095207679501000208.

- Hrendash, T., S. Kozubek, and D. Munich. 2018. Publication Performance of Research Organization 2011-2015. Praha, Czech Republic: Idea Cerge EI.

- Huisman, J., and J. Currie. 2004. “Accountability in Higher Education: Bridge Over Troubled Water?” Higher Education 48 (4): 529–551. https://doi.org/10.1023/B:HIGH.0000046725.16936.4c.

- Jurajda, Š., S. Kozubek, D. Münich, and S. Škoda. 2017. “Scientific Publication Performance in Post-Communist Countries: Still Lagging Far Behind.” Scientometrics 112 (1): 315–328. https://doi.org/10.1007/s11192-017-2389-8.

- Kelchen, R., and L. T. Stedrak. 2016. “Does Performance-Based Funding Affect Colleges’ Financial Priorities?” Journal of Education Finance 41 (3): 302–321. https://doi.org/10.1353/jef.2016.0006.

- Kukla-Acevedo, S., M. E. Streams, and E. Toma. 2012. “Can a Single Performance Metric Do It All? A Case Study in Education Accountability.” The American Review of Public Administration 42 (3): 303–319. https://doi.org/10.1177/0275074011399120.

- Li, A. M. 2018. Lessons Learned: A Case Study of Performance Funding in Higher Education. Washington: Third Way.

- Macháček, V., and M. Srholec. 2021. “RETRACTED ARTICLE: Predatory Publishing in Scopus: Evidence on Cross-Country Differences.” Scientometrics 126 (3): 1897–1921. https://doi.org/10.1007/s11192-020-03852-4.

- Macháček, V., M. Srholec, R. M. Ferreira, N. Robinson-Garcia, and R. Costas. 2022. “Researchers’ Institutional Mobility: Bibliometric Evidence on Academic Inbreeding and Internationalization.” Science and Public Policy 49 (1): 85–97. https://doi.org/10.1093/scipol/scab064.

- Mizrahi, S. 2021. “Performance Funding and Management in Higher Education: The Autonomy Paradox and Failures in Accountability.” Public Performance & Management Review 44 (2): 294–320. https://doi.org/10.1080/15309576.2020.1806087.

- Munich, D., and T. Hrendash. 2019. A Comparison of Journal Citation Indices. Praha, Czech Republic: Idea Cerge EI.

- Musiał-Karg, M., L. Zamęcki, and J. Rak. 2023. “Debate: Publish or Perish? How Legal Regulations Affect Scholars’ Publishing Strategies and the Spending of Public Funds by Universities.” Public Money & Management: 1–2. https://doi.org/10.1080/09540962.2023.2213920.

- Nemec, J., D. Špaček, P. Suwaj, and A. Modrzejewski. 2012. “Public Management as a University Discipline in New European Union Member States.” Public Management Review 14 (8): 1087–1108. https://doi.org/10.1080/14719037.2012.657834.

- Nitzl, Ch., M. F. Sicilia, and I. Steccolini. 2019. “Exploring the Links Between Different Performance Information Uses, NPM Cultural Orientation, and Organizational Performance in the Public Sector.” Public Management Review 21 (5): 686–710. https://doi.org/10.1080/14719037.2018.1508609.

- Pisár, P., and M. Šipikal. 2018. “Negative Effects of Performance Based Funding of Universities: The Case of Slovakia.” The NISPAcee Journal of Public Administration and Policy 10 (2): 171–185. https://doi.org/10.1515/nispa-2017-0017.

- Plaček, M., C. del Campo, M. Půček, F. Ochrana, M. Křápek, and J. Nemec. 2021. “New Public Management and Its Influence on Museum Performance: The Case of Czech Republic.” Journal of East European Management Studies: 339–361.

- Plaček, M., M. Křápek, J. Čadil, and B. Hamerníková. 2020. “The Influence of Excellence on Municipal Performance: Quasi-Experimental Evidence From the Czech Republic.” SAGE Open. https://doi.org/10.1177/2158244020978232.

- Plaček, M., J. Nemec, F. Ochrana, M. Půček, M. Křápek, and D. Špaček. 2021. “Do Performance Management Schemes Deliver Results in the Public Sector? Observations from the Czech Republic.” Public Money & Management 41 (8): 636–645. https://doi.org/10.1080/09540962.2020.1732053.

- Plaček, M., F. Ochrana, and M. Půček. 2015. “Benchmarking in Czech Higher Education: The Case of Schools of Economics.” Journal of Higher Education Policy & Management 37 (4): 374–384. https://doi.org/10.1080/1360080X.2015.1056601.

- Plaček, M., F. Ochrana, M. Půček, J. Nemec, and M. Křápek. 2018. “Devolution in the Czech and Slovak Institutions of Cultural Heritage.” Museum Management & Curatorship 33 (6): 594–609. https://doi.org/10.1080/09647775.2018.1496356.

- Plaček, M., G. Vaceková, M. Murray Svidroňová, J. Nemec, and G. Korimová. 2021. “The Evolutionary Trajectory of Social Enterprises in the Czech Republic and Slovakia.” Public Management Review 23 (5): 775–794. https://doi.org/10.1080/14719037.2020.1865440.

- Pollitt, Ch. 2013. “The Logic of Performance Management.” Evaluation 19 (4): 346–363. https://doi.org/10.1177/1356389013505040.

- Porumbescu, G., A. Meijer, and S. Grimmelikhuijsen. 2022. Government Transparency: State of the Art and New Perspectives. Cambridge, United Kingdom: Cambridge University Press.

- Rutherford, A., and T. Rabovsky. 2014. “Evaluating Impacts of Performance Funding Policies on Student Outcomes in Higher Education.” The Annals of the American Academy of Political and Social Science 655 (1): 185–208. https://doi.org/10.1177/0002716214541048.

- Sułkowski, L. 2016. “Accountability of University: Transition of Public Higher Education.” Entrepreneurial Business and Economics Review 4 (1): 9–21. https://doi.org/10.15678/EBER.2016.040102.

- Tandberg, D. A. 2010. “Politics, Interest Groups and State Funding of Public Higher Education.” Research in Higher Education 51 (5): 416–450. https://doi.org/10.1007/s11162-010-9164-5.

- Teixeira, P., R. Biscaia, and V. Rocha. 2023. “Competition for Funding or Funding for Competition? Analysing the Dissemination of Performance-Based Funding in European Higher Education and Its Institutional Effects.” International Journal of Public Administration 45 (2): 94–106. https://doi.org/10.1080/01900692.2021.2003812.

- Umbricht, M. R., F. Fernandez, and J. C. Ortagus. 2017. “An Examination of the (Un)intended Consequences of Performance Funding in Higher Education.” Educational Policy 31 (5): 643–673. https://doi.org/10.1177/0895904815614398.

- Van der Kolk, B. 2022. “Performance Measurement in the Public Sector: Mapping 20 Years of Survey Research.” Financial Accountability & Management 38 (4): 703–729. https://doi.org/10.1111/faam.12345.

- Yu, J. 2023. “Agency Autonomy, Public Service Motivation, and Organizational Performance.” Public Management Review 25 (3): 522–548. https://doi.org/10.1080/14719037.2021.1980290.

Appendix

Table A1. Structure of the sample of respondents.