ABSTRACT

University rankings have gained prominence in tandem with the global race towards excellence and as part of the growing expectation of rational, scientific evaluation of performance across a range of institutional sectors and human activity. While their omnipresence is acknowledged, we know less empirically about whether and how rankings matter for higher education outcomes. Do university rankings, predicated on universalistic standards and shared metrics of quality, function meritocratically to level the impact of long-established reputations? We address this question by analysing the extent to which changes in the position of UK universities in ranking tables, beyond existing reputations, affect their strategic goal of international student recruitment. We draw upon an ad hoc dataset merging aggregate (university) level indicators of ranking performance and reputation with indicators of other institutional characteristics and international student numbers. Our findings show that recruitment of international students is primarily determined by university reputation, socially mediated and sedimented over the long term, rather than by universities’ yearly updated ranking positions. We conclude that while there is insufficient evidence that improving rankings changes universities’ international recruitment outcomes, rankings are nevertheless consequential for universities and students as strategic actors investing in rankings as purpose and identity.

University rankings are now ubiquitous in the ever-expanding higher education (HE) field.Footnote1 They are part of the broader move toward quantitative benchmarking and evaluation of performance across a range of institutional sectors and human activity (Berman and Hirschman 2018). Accounting for excellence is now a widely adopted practice in every organisational field, public or private. A growing literature, originating in North American and European scholarship, has paid both theoretical and empirical attention to rankings (see Lamont 2012 for a review). University rankings as a research topic have also attracted attention in recent years, with most of the empirical work conducted in the US and focusing on how rankings change university organisational behaviour, administration, college admission policies and student choices (Espeland and Sauder 2016; Monks and Ehrenberg 1999). In Europe, existing empirical studies are mostly descriptive, either focusing on the shortcomings of existing rankings and ways to improve them or taking a critical view that highlights their biases and their conflict with the fundamental missions of HE (Altbach 2012; Amsler and Bolsmann 2012; Dill and Soo 2005; Pusser and Marginson 2013; Shin, Toutkoushian, and Teichler 2011; Taylor and Braddock 2007). While their pervasiveness in the European context is now widely commented upon, systematic studies of whether, and how, university rankings matter for specific institutional outcomes are missing.

In this paper, we undertake an empirical analysis of the relationship between rankings and the internationalisation of UK universities, more specifically the internationalisation of their student body. Apart from its potential for generating revenue, the internationalisation of the student body is closely tied to the idea of a world-class university. Attracting students from abroad, or sending their own students abroad, is widely adopted as a priority and explicitly built into the strategies of governments, tertiary education sectors and institutions alike (Buckner 2019; IAU 2019).

What is the role of rankings in shaping the sorting of international students across universities? Unlike long-ingrained, socially mediated reputations, university rankings are designed to measure ‘objective’ changes in quality over time based on shared and standardised metrics. Thus, shifts in rankings should affect student recruitment over and above long-existing reputations. To investigate this, we use aggregate-level institutional data from 88 UK universities observed longitudinally and analyse changes over time in the stock of international students in relation to changes in rankings. The UK is the world’s second most popular destination for international students, after the US (Hubble and Bolton 2021). In 2019/20, international students (excluding those from the European Union) constituted 22 per cent of the total student population at UK universities, their numbers having trebled since the turn of the twenty-first century. Using organisational-level longitudinal data, and controlling for several university characteristics, we compare how well change in rankings and long-held reputation predict the variation over time in international student enrolment across universities.

How do university rankings work empirically?

The potency of rankings as a tool for evaluation has been highlighted extensively in the increasingly important field of the sociology of evaluation (Espeland and Stevens 2008; Fourcade and Healy 2017; Lamont 2012). The broader literature emphasises the rise of ‘new public management’ in a neoliberal environment, with frameworks and instruments of evaluation rapidly diffusing from the private to the public sector. Facing deregulation and ever greater budget cuts, governments and public institutions turn to the tools of new public management to ensure greater efficacy, thus blurring the boundaries between market and non-market evaluative schemes.

All evaluative schemes are based on classifications, and quantifiable indicators have become the ‘grammar’ underlying such classification practices (Berman and Hirschman 2018; Espeland and Stevens 2008; Fourcade 2016; Mau 2019). Quantified representations form part of assessments of performance, impact and risk in fields as wide-ranging as health, the economy, finance, education, human rights, corruption, the environment, food and leisure (Kevin et al. 2012). In the realm of HE governance, the culture of numerical evaluation manifests itself in university rankings but also in quality assessments of various kinds, such as the Research Excellence Framework in the UK and academic annual reviews and promotions in the US and other parts of the world (Ramirez 2013). University rankings are based on a number of rationalised, quantifiable performance metrics (combined through algorithms and weights) which act as ‘proxies for quality’. These include funding and citations (research excellence), entry levels (student selectivity), good honours and employability (student outcomes), student/staff ratio (teaching environment), and budget spent on academic services and facilities (quality of infrastructure). Although different ranking schemes weight these indicators differently, the main categories do not change significantly – research indicators, for example, have a significant place in all of them – affirming a convergent model of university excellence (Hazelkorn 2007, 3; Hazelkorn 2014; Usher and Savino 2006). More remarkably, like other educational indicators that underlie global evaluation schemes (promulgated by the UN, UNESCO and the OECD), these metrics are perceived as representing universalistic standards. They are expected to apply independently of local and national contexts: across HE systems, old or new, and across HE institutions, large or small, private or public.

The universalistic projection of standards differentiates university rankings from other evaluative schemes that have been extensively studied in the sociological literature, such as those in the agro-food sector, particularly the wine industry. In the wine industry, quality conventions (the measures mobilised to evaluate quality) take both product and producer categories into consideration, so there is a plurality of evaluative logics. Different classification conventions apply to large industrial estates and craft wineries (Diaz-Bone 2013). For the product, quality is linked to territorial and temporal anchorage: a certain region, a certain grape and a certain time period create the distinction in quality (Chauvin 2009). Tradition plays a role.

HE institutions have long had reputations based on tradition, age and even territory, which placed them at the top of the prestige hierarchy. ‘Ivy League’, ‘Oxbridge’ and ‘Russell Group’ are shorthand for such reputations. University rankings are supposed to go beyond such reputation-based categorisations and hierarchies by redistributing prestige based on universalistic standards and shared metrics of quality. They operationalise prestige as a metric system tied to ‘objective’ performance, not as long-held, often socially mediated, perceptions of the quality of the institution. They are not meant to bestow distinction based on tradition, foundation year or any other particularistic criteria.Footnote2

The differential allocation of institutional status via rankings is thus perceived as a meritocratic process based on demonstrable outcomes, which can be enhanced by strategic organisational decisions and investments (Baltaru 2018; Keith 2001). Rankings are expected to signal improvements in quality and to inform and influence a variety of university stakeholders (e.g. applicants, students, employers, funders, other universities, ministries), who should be responsive to status changes as reflected in rankings.

To what extent do university rankings deliver on their promise of a meritocratic mapping of quality and related status change? We investigate this question by examining whether changes in ranking performance affect universities’ recruitment of international students. International students, more so than domestic students, are expected to be aware of and sensitive to rankings, as rankings are the primary source of comparative information on higher education institutions beyond the national context (Mazzarol and Soutar 2002; Hazelkorn 2014). If rankings indeed distribute prestige meritocratically, we should find that shifts in rankings, as opposed to long-anchored ranking reputations, are consequential for international recruitment.

University rankings, reputation and sorting of international student flows

The relationship between rankings and reputation has not been explicitly theorised in the literature. Bourdieu has a prominent place in studies on university rankings, and on rankings in general (Heffernan 2022). From a Bourdieusian perspective, rankings are means of reconfiguring reputation in universities’ struggle for symbolic capital and, relatedly, other forms of capital. Universities that are successful at the ‘ranking game’ are expected to attract the best academics and students, win the most research funding and charge higher tuition. Yet universities do not enter the ranking competition from equal positions; they carry the ‘cumulated scores’ of the past, as reflected in long-anchored reputations (Brosnan 2017). Striving for distinction and relative advantage, potential students and their families pay attention to institutional reputation to benefit from the university’s symbolic capital and to improve their social position down the road. Marginson (2014) argues that, as a proxy for reputation, rankings have become an integral part of ‘status culture’.

Rankings also figure heavily in studies of higher education from a neo-institutionalist perspective. A particular argument concerns the cultural role of rankings in constituting strategic and competitive actors and identities at both organisational and individual levels (Sauder and Espeland 2009; Hasse and Krucken 2013; Ramirez 2013). A standardised conception of higher education students follows: those who pursue the best educational returns and who can easily move and learn across HE institutions and systems. The projected actorhood of the university and its students boosts the global prominence of rankings and their widespread currency, even in highly resource-limited higher education contexts. Neo-institutionalist scholars, however, also stress institutional path dependency and acknowledge the reputational structure of HE (Ramirez and Tiplic 2014). Once achieved, university prestige may exercise long-term influence on resources and legitimation. Thus, while starting from different vantage points, both Bourdieusian and neo-institutionalist scholarship suggest that institutional reputation can have an independent and inordinate influence on university outcomes such as attracting international students – itself an indicator of prestige.

Empirically, systematic studies of the relationship between rankings, reputation and student recruitment are scarce. Research conducted in the US shows that shifts in an institution’s position in rankings can cause changes in the number and characteristics of domestic applicants (Bowman and Bastedo 2009; Meredith 2004; Monks and Ehrenberg 1999). In the UK, research has found reputational signals to be important in decisions to study abroad (Findlay et al. 2010), and analysis of the Higher Expectations Survey shows rankings emerging as a significant driver of international students’ choice of university (Cebolla-Boado, Hu, and Nuhoḡlu Soysal 2017). Souto-Otero and Enders (2017), using micro-level data from the International Student Barometer survey, conclude that reputation (along with other factors such as fee levels and quality of teaching) is more important than rankings in informing and influencing students, the potential users of rankings.

Apart from the latter, these studies do not explicitly differentiate between ranking position and reputation, which is our analytical interest here. Furthermore, they are mainly conducted through the lens of individual students as the ‘consumers’ of rankings, focusing on how rankings shape student decision-making alongside other factors such as students’ personal environment. We supplement this literature by moving to an organisational-level analysis, asking whether aggregate international student flows continue to be sorted and stratified across universities by historically consolidated institutional reputations rather than by changes in rankings, as the meritocratic rationale would suggest.

Analysis

Data and variables

Our data are at the organisational level, and we analyse the extent to which university ranking position, in contrast to reputation, predicts the variation over time in international student recruitment. Our dependent variable is the number of international (non-EU) students enrolled in British universities, using the data published yearly by the Higher Education Statistics Agency (HESA). For our analysis, we constructed an ad hoc longitudinal dataset containing university-level data for four data points: 2010/2011, 2014/2015, 2016/2017 and 2018/2019. These four data points correspond to the available HESA tables providing longitudinal, comparable data across all our variables of interest.

Our analytical strategy requires operationalising ranking position and reputation, our key predictors, as variables distinct from each other. While rankings are expected to respond to yearly changes in performance, reputational prestige, once formed, has an anchoring effect, whether or not it is still deserved, and thus has long-term persistence (Baltaru 2018; Bowman and Bastedo 2011; Keith and Babchuk 1998; Schultz, Mouritsen, and Gabrielsen 2001; Taylor and Braddock 2007; see also Fine 2012). To capture these differences, we rely on data from two sources: data on university rankings from the Complete University Guide (CUG) and data on institutional reputation from historical ranking tables published by The Times and available in the Times Digital Archive. We chose CUG data, published since 2007, over other available rankings (such as The Guardian and Times Higher Education) for three reasons: its longitudinal rankings data are publicly accessible; it has consistently available data on all UK universities; and, importantly, it does not include subjective assessments of university reputation among its ranking criteria.Footnote3 The Times established the first ranking tables, as early as 1993; we use them to capture the initial formation of reputation about the UK’s ‘top’ universities.Footnote4 We are aware that some rankings, such as Times Higher Education, include international outlook (in terms of student and staff numbers) as a criterion; this is not the case for CUG or the early Times rankings, so we do not risk multicollinearity with our independent variables.

We constructed the variables for ranking and reputation as follows. The percentage change in university rank from 2010/2011 (the earliest publicly available CUG data) to 2018/2019 (the most recent cross-section in our data across all variables of interest) represents how much the university ‘objectively’ improved its position in rankings between these two time points. We used the widest available measure of change in rankings, from 2010 to 2018, rather than yearly changes, to allow as much variation in the changes as possible. In contrast, reputational prestige is time-invariant and operationalised as a binary variable on the basis of (a) the universities that consistently ranked in the Top 20 in the early years (1993, 1994, 1995 and 1996) of The Times tables and (b) the universities that were among the founders of the Russell Group in the 1990s or joined shortly after.Footnote5 These universities were coded ‘1’ and all other universities ‘0’. The percentage change in university rank on the one hand, and reputation on the other, allow us to differentiate between the effects of universities’ incremental successes in the ranking tables and broader perceptions of their previously built reputations.
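The two key predictors can be sketched in code to make the construction concrete. This is a minimal illustration under stated assumptions, not the procedure used to build our dataset: the `University` record and its fields are hypothetical, the sign convention (a positive percentage change means moving up the table, i.e. to a smaller rank number) is an assumption, and a university is assumed to be coded ‘1’ if it meets either criterion (a) or (b).

```python
from dataclasses import dataclass, field


@dataclass
class University:
    # Hypothetical record for illustration only; not the paper's data.
    name: str
    rank_start: int  # CUG table position in 2010/2011 (1 = top)
    rank_end: int    # CUG table position in 2018/2019
    early_times_ranks: list = field(default_factory=list)  # Times positions, 1993-1996
    early_russell_group: bool = False  # founder or early member of the Russell Group


def pct_rank_change(rank_start: int, rank_end: int) -> float:
    """Percentage change in table position.

    Assumed sign convention: positive = moved up the table (smaller rank number).
    """
    return 100.0 * (rank_start - rank_end) / rank_start


def reputation_dummy(u: University, top_n: int = 20, years: int = 4) -> int:
    """1 if the university was in the Times Top `top_n` in every early year,
    or is an early Russell Group member (assumed OR rule); otherwise 0."""
    consistently_top = (
        len(u.early_times_ranks) == years
        and all(r <= top_n for r in u.early_times_ranks)
    )
    return int(consistently_top or u.early_russell_group)


if __name__ == "__main__":
    unis = [
        University("Hypothetical A", 15, 12, [5, 8, 10, 12]),
        University("Hypothetical B", 50, 30, [18, 25, 19, 20]),
        University("Hypothetical C", 60, 66, [], early_russell_group=True),
    ]
    for u in unis:
        print(u.name, round(pct_rank_change(u.rank_start, u.rank_end), 1), reputation_dummy(u))
```

Because the rank change is expressed relative to the starting position, a move from 15th to 12th counts as a larger improvement than a move from 60th to 57th, which is one plausible reason to prefer a percentage over a raw difference in places.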

As control variables, we introduce several university level characteristics (data also from HESA) that might bolster the number of international students. Where applicable, we operationalise these indicators as proportions (in total students or in total income) to net out the effect of university size.

Firstly, having an international outlook may increase universities’ visibility and appeal to prospective international students. We operationalise this with two indicators: international research funding (from European Union and non-European Union sources) as a proportion of total funding from research grants and contracts (measured in thousands of pounds), and European Union (EU) students as a proportion of total students.

Secondly, universities increasingly invest in student-oriented services to attract and cater for international students. We operationalise universities’ service orientation as expenditure on student and staff facilities as a proportion of total expenditure (measured in thousands of pounds). These facilities include careers advisory services, grants to student societies, accommodation offices, athletic and sporting facilities, transport, chaplaincy, student counselling and health services. Although expenditure on student facilities is one of the CUG ranking criteria, we found no multicollinearity between these two independent variables.

Thirdly, we control for income from tuition fees and education contracts as a proportion of total income. This variable is measured in thousands of pounds and includes all income from student fees paid by individual students as well as by other sources such as the Student Loans Company, Local Education Authorities, the Student Awards Agency for Scotland and the Department for Education for Northern Ireland. Recruitment of international students is often seen as a survival strategy in an increasingly deregulated and underfunded HE sector, especially since international students pay higher fees than home students. If a university’s budget depends heavily on tuition fees, the university is likely to be more eager to accept international students; some universities may even lower their entry standards to increase their international intake. We do not include entry standards among the controls, as they are one of the criteria CUG uses in calculating university rank and the two variables are highly correlated (ρ > .80).

And lastly, we include the total number of students to control for university size, as well as the number of international students in the previous year. Large bodies of international students may help raise the profile and bolster the reputation of universities internationally.
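As an illustration of how size-netted controls and a lagged count of international students might be derived from a university-by-year panel, the sketch below uses hypothetical field names (`uni`, `year`, `intl`, `total_students`, `eu_students`, `tuition_income`, `total_income`) that are not HESA column names. It assumes the lag is the value at the university’s previous available observation in the panel; whether that is the previous calendar year or the previous wave depends on the data actually available.

```python
from collections import defaultdict


def add_shares_and_lag(rows):
    """Derive proportion controls and a lagged international-student count.

    rows: list of dicts with the hypothetical keys 'uni', 'year', 'intl',
    'total_students', 'eu_students', 'tuition_income', 'total_income'.
    Returns new dicts, ordered by university and then year.
    """
    by_uni = defaultdict(list)
    for r in rows:
        by_uni[r["uni"]].append(r)

    out = []
    for uni, obs in by_uni.items():
        obs.sort(key=lambda r: r["year"])  # chronological order within university
        prev_intl = None                   # no lag for the first observation
        for r in obs:
            out.append({
                "uni": uni,
                "year": r["year"],
                "intl": r["intl"],
                # Proportions net out the effect of university size.
                "eu_share": r["eu_students"] / r["total_students"],
                "tuition_share": r["tuition_income"] / r["total_income"],
                "total_students": r["total_students"],
                "intl_lag": prev_intl,
            })
            prev_intl = r["intl"]
    return out
```

Observations without a lag (the first wave for each university) would typically drop out of a model that includes the lagged dependent variable, which is worth bearing in mind when comparing sample sizes across the two models.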

All independent and control variables (except reputation, which is time-invariant) were measured longitudinally, in line with the dependent variable. Table A1 in the Appendix provides the descriptive statistics.

Method

We ran two models, the second including the lagged dependent variable (the number of international students in the previous year) as a predictor, to model inertia as proxied by previous levels of international student enrolment. The data cover 88 universities (approximately 70% of the UK university sector), observed at our four points in time. These are all the universities with available longitudinal data across all our variables of interest.Footnote6 The sample includes a range of universities, large and small, more and less international in their student body, and of different ranks and financial resources, as illustrated in Table A1 in the Appendix.

We opted for a longitudinal (time-series) model after confirming that the clustering of observations over time is statistically relevant.Footnote7 Regression models with random constant (random intercept) effects decompose the overall variation into a within-university (cross-time) component and a between-university component.
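One common descriptive way to gauge whether repeated observations cluster within universities is the one-way random-effects intraclass correlation, ICC(1). The sketch below is offered only as an illustration of that idea; it is an assumption that this resembles the check behind our modelling choice, and it requires a balanced panel (the same number of waves for every university).

```python
from statistics import mean


def icc1(groups):
    """One-way random-effects ICC(1) for a balanced panel.

    groups: one list per university, each holding its k repeated observations.
    Estimates the share of variance attributable to between-university
    differences; the estimator can be negative when that share is near zero.
    """
    n, k = len(groups), len(groups[0])
    if n < 2 or k < 2 or any(len(g) != k for g in groups):
        raise ValueError("need a balanced panel with at least 2 units and 2 waves")
    grand = mean(x for g in groups for x in g)
    ss_between = k * sum((mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)
```

A value close to 1 means that most variation in international student numbers lies between universities rather than within them over time, which is the situation a random-intercept specification is designed to handle.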

Our model specification includes both time-variant predictors (the percentage change in ranking position, number of students, expenditure) and our time-invariant predictor, reputation. Including lagged independent variables helps us predict whether changes in university-level characteristics nurture or inhibit the number of international students over and above university reputation. We additionally control for time (by including dummy variables for each year) to account for the overall growth in international students over the period.

In this specification, αi is the university-specific intercept, capturing the number of international students in each university, which is adjusted yearly by a residual ui(t-1). The slopes modelling the effects of our predictors, and the university-level residual εi(t-1), are interpreted as in a standard regression model.
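For readers who prefer the model in symbols, a generic random-intercept specification consistent with the description above might be written as follows. The notation is our illustrative assumption rather than a reproduction of the published equation: $y_{it}$ is the number of international students at university $i$ in wave $t$, $\mathrm{Rep}_i$ is the time-invariant reputation dummy, $\mathbf{x}_{i(t-1)}$ collects the lagged time-variant predictors (percentage change in rank and the controls), $D_{st}$ are year dummies equal to 1 when $t = s$ (one wave omitted as reference), and $u_{i(t-1)}$ and $\varepsilon_{i(t-1)}$ are the residuals named in the text. Model 2 would add $\rho\, y_{i(t-1)}$ to the right-hand side.

```latex
\[
\begin{aligned}
y_{it} &= \alpha_i + \beta\,\mathrm{Rep}_i
          + \boldsymbol{\gamma}^{\top}\mathbf{x}_{i(t-1)}
          + \sum_{s} \delta_s D_{st}
          + \varepsilon_{i(t-1)}, \\
\alpha_i &= \alpha + u_{i(t-1)} .
\end{aligned}
\]
```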

Last but not least, our VIF analysis indicates that multicollinearity is not a concern (VIF < 2.5).
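The variance inflation factor for predictor $j$ is $1/(1 - R_j^2)$, where $R_j^2$ comes from regressing that predictor on all the others; equivalently, it is the $j$-th diagonal element of the inverse of the predictors’ correlation matrix. The standard-library sketch below uses that second route. It is an illustration of the diagnostic, not the software we used, and the input columns in any example are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def invert(m):
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    n = len(m)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(columns):
    """VIF_j = j-th diagonal element of the inverse correlation matrix,
    equivalent to 1 / (1 - R_j^2) from regressing predictor j on the others."""
    k = len(columns)
    corr = [[1.0 if i == j else pearson(columns[i], columns[j]) for j in range(k)]
            for i in range(k)]
    inv = invert(corr)
    return [inv[j][j] for j in range(k)]
```

With two predictors correlated at r = 0.8, each VIF is 1/(1 − 0.64) ≈ 2.78, just above the 2.5 threshold cited above; uncorrelated predictors give VIFs of exactly 1.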

Findings

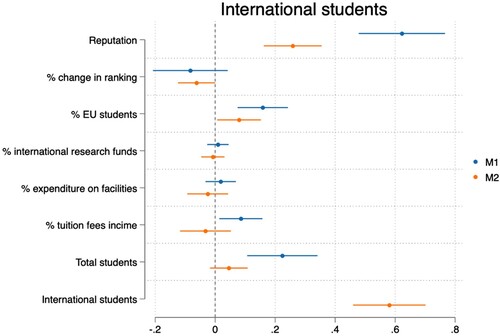

The results from the models predicting the number of international students are shown in Figure 1 (the table reporting exact estimates is presented as Table A2 in the Appendix). The figure shows the marginal effects with 95 per cent confidence intervals in each case. We find that the reputation of the university is the main factor boosting the number of international students. By contrast, improvements in ranking tables, measured as percentage changes in table position, have little to no impact (the corresponding estimate is fairly small in both models and not statistically significant at the 5% level). This confirms that the driver of university internationalisation, as measured by the number of international students, is long-term consolidated reputational prestige and not our alternative, short-term sensitive proxy (shift in ranking position).
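How a 95 per cent interval relates to significance at the 5% level can be shown with a small sketch. It assumes a normal approximation (estimate ± 1.96 × standard error) and that, in a linear model, the marginal effect of a predictor equals its coefficient; the names and numbers one would pass in are hypothetical and are not our estimates.

```python
from dataclasses import dataclass
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)  # two-sided 95% critical value, about 1.96


@dataclass
class Estimate:
    name: str
    coef: float  # in a linear model, the marginal effect equals the coefficient
    se: float    # standard error of the coefficient

    def ci95(self):
        """Normal-approximation 95% confidence interval."""
        half_width = Z_95 * self.se
        return self.coef - half_width, self.coef + half_width

    def significant_at_5pct(self) -> bool:
        """True when the 95% interval excludes zero."""
        low, high = self.ci95()
        return low > 0 or high < 0
```

An estimate whose interval straddles zero, as the ranking-change estimate does in both models, is read as not statistically significant at the 5% level.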

Our controls appear to play a limited role in predicting the stock of international students across time. In Model 1, we see that international students are especially concentrated in large universities that have a large share of EU students and are more reliant on tuition fees. However, university size and tuition fees are no longer statistically significant in Model 2, which controls for the previous level of international students. This is expected, as the stock of international students directly affects both the size of the student body and the magnitude of tuition fee income. The share of EU students remains a positive and statistically significant predictor in both models. It is possible that the presence of EU students helps define a university’s international outlook, making it an attractive destination for non-EU international students too.

Expenditure on student facilities, which universities invest in strategically, as they are thought of as draws for international students and students in general, does not have any consistent effect. Neither do international research funds.

Finally, after introducing the number of international students in the previous year into our models, it becomes clear that a kind of Matthew effect applies in attracting international students. Universities that attracted more international students in the past also attract more of them now: universities brand themselves as ‘internationalised’.

Further checks

We further explored alternative operationalisations of ranking position to check whether rankings become a significant predictor under a different model specification. Firstly, we operationalised changes in rankings using the international QS Rankings. This accounts for the possibility that international students consult international rankings more than national ones. We did not use the QS Rankings in the primary model because they cover only about half of our current sample, and we aim to explain variation in international student numbers across a representative sample of UK universities, not only those included in international rankings. Nevertheless, re-running the model on the limited sample afforded by international rankings (N = 46) replicated our results: university prestige was positive and statistically significant, while changes in QS Rankings were not a statistically significant predictor of international student numbers (p > .05).

Secondly, we added a binary indicator capturing universities whose ranking change pushed them into the ‘Top 10’. Being labelled ‘Top Tier’ might carry more weight than moving up the rankings in general (Meredith 2004). For these universities the ranking change was marginally significant, at the 10% level (p = .051), alongside reputation, whereas alternative operationalisations (e.g. Top 25) were not significant. The persistent effect of university reputation when controlling for the most substantial change in university rankings (entering the Top 10) confirms the important qualitative difference between university reputation and rankings. We find that reputation remains the strongest predictor of international student numbers across varied operationalisations of change in rankings.
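The ‘entered the Top 10’ indicator, and its Top 25 variant, can be sketched as a simple threshold-crossing flag. The function names are hypothetical, and the rule that a university must start outside the cut-off and finish inside it is our assumed reading of “whose ranking change pushed them into” the top group.

```python
def entered_top(rank_start: int, rank_end: int, cutoff: int = 10) -> int:
    """Hypothetical indicator: 1 if a university moved from outside the top
    `cutoff` table positions (1 = best) to inside them, otherwise 0."""
    return int(rank_start > cutoff and rank_end <= cutoff)


def flag_entrants(ranks: dict, cutoff: int = 10) -> dict:
    """ranks maps a university name to (start_rank, end_rank)."""
    return {name: entered_top(s, e, cutoff) for name, (s, e) in ranks.items()}
```

Under this rule a university already in the Top 10 at the start is coded 0, so the indicator isolates new entrants rather than sustained high performers, who are more likely to be captured by the reputation variable.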

Furthermore, we also ran the models with an indicator capturing a more direct, strategic attempt to signal internationalisation: having a Pro-Vice-Chancellor (PVC) International (coded ‘1’), as opposed to not having one (coded ‘0’). We did not include this indicator in the main analysis because of its limited availability; it is available only for 2017. The results showed no statistically significant effect of having a PVC International on the number of international students.

Finally, to rule out the possibility that the age of a university is the main driver of reputation, we estimated treatment effects models with reputation as the treatment variable, endogenised using foundation year. Our results show that reputation remains a significant predictor of our dependent variable (number of international students) beyond the time since foundation.

Discussion

Empirically focusing on international student recruitment, our findings point to a rather diffuse and symbolic functioning of university rankings. We show that international students are sorted across British universities based on long-anchored institutional reputations, more so than on the basis of objective, precise indicators of quality conveyed by changing institutional position in ranking tables. Universities’ reputational prestige, as opposed to their organisational performance as measured in rankings, is the main determinant of their recruitment success. This contrasts sharply with the perception that efforts to boost yearly changes in rankings are consequential, a view that underpins significant changes in the governance and organisation of universities. Rankings fail to deliver their meritocratic promise; if anything, they help to further legitimate reputation as a symbolic good. Institutions long reputed to be at the top of the ‘prestige hierarchy’ benefit both from socially mediated perceptions of reputation and from rankings that reinforce the symbolic value of reputation.

If this is so, why do HE institutions and the sector continue to engage with rankings? In relation to international student mobility, rankings’ persistent presence in HE is buttressed by widely adhered-to human capital theories, which propagate a vision of education as an investment with national and individual returns. This is the predominant framework that universities and policy makers use to make sense of international student mobility. The share of international students is viewed as a barometer of universities’ ability to generate human capital and global talent effectively by pursuing ‘world class education’. Concomitantly, international students are regarded as rational evaluators of universities’ performance, relying on rankings to compare the ‘university market’ and discern the worthiest universities at a particular point in time. Rankings technology, and its communication, makes it possible to compare institutions that are otherwise incomparable on national and world scales, while also providing a common definition of the most valuable and worthiest education to invest in (Espeland and Sauder 2012; Ramirez 2013).

More profoundly, rankings help perpetuate cognitive self-constructions of universities and students as rational, goal-oriented agents (Hasse and Krucken 2013; Marcelo and Powell 2020). University rankings hold sway not only because they project universal, quantifiable and comparable standards of worth, but also because investment in them conveys purposeful and capable actorhood with aspirations of mobility. For prospective students and graduates, pursuing rankings acts as a proxy to signal their proactive exercise of agency. From universities’ point of view, improving rankings is a strategic action, and in part a means of seeking legitimacy in the increasingly standardised and transnationalised sphere of higher education. In other words, rankings become HE purpose and identity in themselves, decoupled from the very goal of enhancing educational excellence and internationalisation (Baltaru and Soysal Nuhoḡlu 2018; Bromley and Powell 2012; Espeland and Sauder 2012). Universities explicitly integrate improving their standing in rankings into their mission statements, as an indicator of their ambition for excellence. The wider narratives around rankings and their publication in the media make it difficult for universities to ignore these listings. Small differences are magnified, and relative positioning is celebrated (Brankovic, Ringel, and Werron 2018; Fowles, Frederickson, and Koppell 2016), despite there being little empirical evidence of their impact on organisational outcomes. An annual cycle of key performance indicators (KPIs) takes hold (e.g. increased enrolments, graduation rates, research funding, citations, and engagement with society and businesses), tightly coupled with organisational strategies and action, while the KPIs themselves become increasingly removed from the goals and advancements they are supposed to signify.

As long as national governments are tied up with competitiveness in the global excellence race, HE systems are likely to continue relying on the universalistic logic of rankings to level the playing field for universities. Against the background of a manifestly uncertain future for the HE sector, narratives of excellence embodied in rankings serve as roadmaps that structure expectations and decisions (see Beckert and Bronk Citation2019). On the other hand, universities differ greatly in their resources and in their local and regional positioning; in an increasingly multi-polar world, such differentiation is likely to deepen. In such a context, to the extent that participation in rankings is ‘voluntary’, governments and universities may reconsider whether engaging with rankings is indeed a strategic choice. Such reconsideration may shake the HE imaginary based on meritocracy, but it may also create the conditions for new ways of fostering collective rationality across the HE field, possibly in a more productive manner than before.

Concluding thoughts

Despite their centrality for university identity and missions, our findings clearly point to the overestimated role of rankings in shaping international student mobility. We show that universities’ efforts to attract international students through both organisational strategies (e.g. international funding connections, diversification of services and facilities) and improvements in their ranking are not consequential. However, our analysis has some limitations.

While our results are based on the widest timespan used in empirical research on this topic to date, an analysis using data from a longer period might produce different results from those we report here. In the long run, rankings may indeed challenge the stability of the deeply ingrained HE status order and, consequently, the empirical impact of established reputations (Enders Citation2015; but see Fowles, Frederickson, and Koppell Citation2016). To give an example from world rankings, in the last decade universities from China, South Korea, Taiwan, Hong Kong and Singapore have moved dramatically upwards, putting these institutions visibly on the HE map. Future research should take into account the changes in the global HE field and cover a longer time frame as more data become available, in order to provide firmer conclusions.

Future research should also look beyond the UK (and the US), cross-nationally, to countries whose HE systems have historically been less stratified by long-term reputation and fee structures. University rankings may function differently in contexts where resources are not closely linked with reputation. Finally, our analysis explored the impact of rankings on international student recruitment, controlling for university reputation. Further research may employ the conceptual and empirical distinction we propose, between yearly updated rankings and historically consolidated reputation, to assess how rankings work for a wider range of organisational outcomes.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes

1 According to a 2013 survey by the European University Association, sixty per cent of European universities surveyed report that rankings play a role in their institutional strategies, eighty-six per cent monitor their position in rankings, and sixty per cent dedicate human resources to monitoring rankings through dedicated units or staff (EUA Citation2013).

2 Even when rankings are limited to specific groupings, on the basis of age and region for example (as in QS Top 50 Under 50, THE Asia University Rankings), the same quality conventions and benchmarks apply.

3 This is crucial as we are interested in operationalising reputation and rankings independently. The CUG criteria are: student-staff ratio, academic services expenditure, facilities expenditure, entry qualifications, degree classifications, degree completion (operationalised from the Higher Education Statistics Agency, HESA), graduate prospects, student satisfaction (operationalised from the National Student Survey, NSS), as well as research intensity and research quality (operationalised from the Research Excellence Framework, REF).

4 Note that the earlier ranking tables by the Times and the CUG ranking tables overlap substantially in the indicators they use, ensuring the comparability of our two variables. Like the CUG, the Times indicators included research and development (R&D) income; scores from the research assessment exercises conducted by the Higher Education Funding Council; teaching assessments conducted by the funding councils for England, Wales and Scotland; an employment ranking based on measures such as the proportion of graduates going on to permanent employment; as well as student-staff ratio, completion rates, the proportion of first-class degrees awarded, and expenditure on services such as library spending. The criteria vary somewhat from one year to another (e.g. an indicator for completion rates was introduced in 1994, and the indicator for R&D income was dropped in 1995), but overall the measures remain consistent over time.

5 The Russell Group, established in 1994, is a self-selected association of (initially 17) universities in the United Kingdom. Although the group comprises universities with rather mixed outcomes in teaching and research, the label has nevertheless come to be perceived as denoting a ‘distinctive elite tier’ (Boliver Citation2015).

6 Outliers have been excluded, e.g. the Open University, whose total number of students is about ten times larger than that of the average university in our sample, owing to the nature of distance education provision.

7 The Breusch and Pagan Lagrangian Multiplier test confirmed that observations are more similar within universities (χ² = 160.24, p < .001).

References

- Altbach, P. G. 2012. “The Globalization of College and University Rankings.” Change: The Magazine of Higher Learning 44 (1): 26–31.

- Amsler, S. S., and C. Bolsmann. 2012. “University Ranking as Social Exclusion.” British Journal of Sociology of Education 33 (2): 283–301.

- Baltaru, R. D. 2018. “Do Non-Academic Professionals Enhance Universities’ Performance? Reputation vs. Organisation.” Studies in Higher Education 44 (7): 1183–1196.

- Baltaru, R. D., and Y. Nuhoḡlu Soysal. 2018. “Administrators in Higher Education: Organisational Expansion in a Transforming Institution.” Higher Education 76 (2): 213–229. doi:10.1007/s10734-017-0204-3.

- Beckert, J., and R. Bronk. 2019. Uncertain Futures: Imaginaries, Narratives, and Calculative Technologies. MPIfG Discussion Paper 19/10. Cologne: Max Planck Institute for the Study of Societies.

- Berman, E. P., and D. Hirschman. 2018. “The Sociology of Quantification: Where Are We Now?” Contemporary Sociology 47 (3): 257–266.

- Boliver, V. 2015. “Are There Distinctive Clusters of Higher and Lower Status Universities in the UK?” Oxford Review of Education 41 (5): 608–627.

- Bowman, N. A., and M. N. Bastedo. 2009. “Getting on the Front Page: Organizational Reputation, Status Signals, and the Impact of U.S. News and World Report on Student Decisions.” Research in Higher Education 50 (5): 415–426.

- Bowman, N. A., and M. N. Bastedo. 2011. “Anchoring Effects in World University Rankings: Exploring Biases in Reputation Scores.” Higher Education 61 (4): 431–444.

- Brankovic, J., L. Ringel, and T. Werron. 2018. “How Rankings Produce Competition: The Case of Global University Rankings.” Zeitschrift für Soziologie 47 (4): 270–288.

- Bromley, P., and W. W. Powell. 2012. “From Smoke and Mirrors to Walking the Talk: Decoupling in the Contemporary World.” Academy of Management Annals 6 (1): 1–48.

- Brosnan, C. 2017. “Bourdieu and the Future of Knowledge in the University.” In Bourdieusian Prospects, edited by L. Adkins, C. Brosnan, and S. Threadgold. Abingdon: Routledge.

- Buckner, E. 2019. “The Internationalization of Higher Education: National Interpretations of a Global Model.” Comparative Education Review 63 (3): 315–336.

- Cebolla-Boado, H., Y. Hu, and Y. Nuhoḡlu Soysal. 2017. “Why Study Abroad? Sorting of Chinese Students Across British Universities.” British Journal of Sociology of Education 39 (3): 365–380. doi:10.1080/01425692.2017.1349649.

- Chauvin, P. 2009. “What Globalization Has Done to Wine?” Review Economy, 27 July.

- Diaz-Bone, R. 2013. “Discourse Conventions in the Construction of Wine Qualities in the Wine Market.” Economic Sociology – European Electronic Newsletter 14 (2): 46–53. http://econsoc.mpifg.de/archive/econ_soc_14-2.pdf.

- Dill, D. D., and M. Soo. 2005. “Academic Quality, League Tables, and Public Policy: A Cross-National Analysis of University Ranking Systems.” Higher Education 49 (4): 495–533.

- Enders, J. 2015. “The Academic Arms Race: International Rankings and Global Competition for World-Class Universities.” In The Institutional Development of Business Schools, edited by A. W. Pettigrew, E. Cornuel, and U. Hommel. Oxford: Oxford University Press.

- Espeland, W. N., M. Sauder, et al. 2012. “The Dynamism of Indicators.” In Governance by Indicators, edited by K. E. Davis. Oxford: Oxford University Press.

- Espeland, W. N., and M. Sauder. 2016. Engines of Anxiety: Academic Rankings, Reputation, and Accountability. New York: Russell Sage Foundation.

- Espeland, W. N., and M. L. Stevens. 2008. “A Sociology of Quantification.” Archives Européennes de Sociologie 49 (3): 401–436.

- EUA (European University Association). 2013. Internationalisation in European Higher Education: European Policies, Institutional Strategies and EUA Support. Brussels: EUA.

- Findlay, A. M., R. King, A. Geddes, F. Smith, M. A. Stam, M. Dunne, A. Skeldon, and J. Ahrens. 2010. Motivations and Experiences of UK Students Studying Abroad. BIS Research Paper No. 8. Dundee: University of Dundee.

- Fine, G. A. 2012. Sticky Reputations: The Politics of Collective Memory in Midcentury America. New York: Routledge.

- Fourcade, M. 2016. “Ordinalization.” Sociological Theory 34 (3): 175–195.

- Fourcade, M., and K. Healy. 2017. “Categories All the Way Down.” Historical Social Research 42 (1): 286–296.

- Fowles, J., H. G. Frederickson, and J. G. Koppell. 2016. “University Rankings: Evidence and a Conceptual Framework.” Public Administration Review 76 (5): 790–803.

- Hasse, R., and G. Krucken. 2013. “Competition and Actorhood: A Further Expansion of the Neo-Institutional Agenda.” Sociologia Internationalis 51 (2): 181–205.

- Hazelkorn, E. 2007. “The Impact of League Tables and Ranking Systems on Higher Education Decision-Making.” Higher Education Management and Policy 19 (2): 87–110.

- Hazelkorn, E. 2014. “The Effects of Rankings on Student Choices and Institutional Selection.” In Access and Expansion Post-Massification: Opportunities and Barriers to Further Growth in Higher Education Participation, edited by B. Jongbloed and H. Vossensteyn. London: Routledge.

- Heffernan, T. 2022. Bourdieu and Higher Education: Life in the Modern University. Springer.

- Hubble, S., and P. Bolton. 2021. International and EU students in Higher Education in the UK FAQs. Briefing Paper, CBP 7976, 15 February. House of Commons Library.

- IAU (International Association of Universities). 2019. 5th Global Survey on Internationalization of Higher Education. Paris: UNESCO.

- Keith, B. 2001. “Organizational Contexts and University Performance Outcomes: The Limited Role of Purposive Action in the Management of Institutions.” Research in Higher Education 42 (5): 493–516.

- Keith, B., and N. Babchuk. 1998. “The Quest for Institutional Recognition: A Longitudinal Analysis of Scholarly Productivity and Academic Prestige among Sociology Departments.” Social Forces 76 (4): 1495–1533.

- Davis, K. E., A. Fisher, B. Kingsbury, and S. E. Merry, eds. 2012. Governance by Indicators: Global Power Through Quantification and Rankings. Oxford: Oxford University Press.

- Lamont, M. 2012. “Toward a Comparative Sociology of Valuation and Evaluation.” Annual Review of Sociology 38 (1): 201–221.

- Marcelo, M., and J. W. Powell. 2020. “Ratings, Rankings, Research Evaluation: How do Schools of Education Behave Strategically Within Stratified UK Higher Education?” Higher Education 79: 829–846.

- Marginson, S. 2014. “University Rankings and Social Science.” European Journal of Education 49 (1): 45–59.

- Mau, S. 2019. The Metric Society. The Quantification of the Social World. Cambridge: Polity.

- Mazzarol, T., and G. N. Soutar. 2002. “‘Push-Pull’ Factors Influencing International Student Destination Choice.” The International Journal of Education Management 16 (2): 82–90.

- Meredith, M. 2004. “Why Do Universities Compete in the Ratings Game? An Empirical Analysis of the Effects of the U.S. News and World Report College Rankings.” Research in Higher Education 45 (5): 443–461.

- Monks, J., and R. G. Ehrenberg. 1999. “The Impact of U.S. News and World Report College Rankings on Admissions Outcomes and Pricing Policies at Selective Private Institutions.” NBER Working Paper Series. Cambridge, MA: National Bureau of Economic Research.

- Pusser, B., and S. Marginson. 2013. “University Rankings in Critical Perspective.” The Journal of Higher Education 84 (4): 544–568.

- Ramirez, F. O. 2013. “World Society and the University as Formal Organization.” SISYPHUS Journal of Education 1 (1): 124–153.

- Ramirez, F. O., and D. Tiplic. 2014. “In Pursuit of Excellence? Discursive Patterns in European Higher Education Research.” Higher Education 67 (4): 439–455.

- Sauder, M., and W. N. Espeland. 2009. “The Discipline of Rankings: Tight Coupling and Organizational Change.” American Sociological Review 74 (1): 63–82.

- Schultz, M., J. Mouritsen, and G. Gabrielsen. 2001. “Sticky Reputation: Analyzing a Ranking System.” Corporate Reputation Review 4 (1): 24–41.

- Shin, J. C., R. K. Toutkoushian, and U. Teichler, eds. 2011. University Ranking: Theoretical Basis, Methodology and Impacts on Global Higher Education. New York: Springer.

- Souto-Otero, M., and J. Enders. 2017. “International Students’ and Employers’ Use of Rankings: A Cross-National Analysis.” Studies in Higher Education 42 (4): 783–810.

- Taylor, P., and R. Braddock. 2007. “International University Ranking Systems and the Idea of University Excellence.” Journal of Higher Education Policy and Management 29 (3): 245–260.

- Usher, A., and M. Savino. 2006. A World of Difference: A Global Survey of University League Tables. Toronto: The Educational Policy Institute.

Appendix

Table A1. Descriptive statistics.