?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

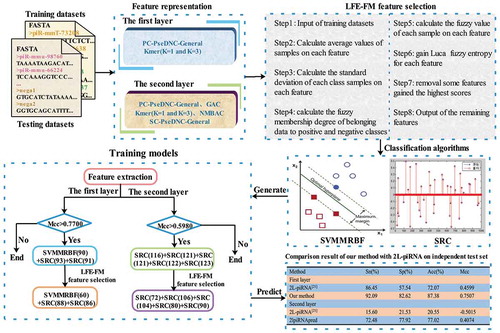

Piwi–interacting RNAs (piRNAs) are indispensable in the transposon silencing, including in germ cell formation, germline stem cell maintenance, spermatogenesis, and oogenesis. piRNA pathways are amongst the major genome defence mechanisms, which maintain genome integrity. They also have important functions in tumorigenesis, as indicated by aberrantly expressed piRNAs being recently shown to play roles in the process of cancer development. A number of computational methods for this have recently been proposed, but they still have not yielded satisfactory predictive performance. Moreover, only one computational method that identifies whether piRNAs function in inducting target mRNA deadenylation been reported in the literature. In this study, we developed a two-layered integrated classifier algorithm, 2lpiRNApred. It identifies piRNAs in the first layer and determines whether they function in inducting target mRNA deadenylation in the second layer. A new feature selection algorithm, which was based on Luca fuzzy entropy and Gaussian membership function (LFE-GM), was proposed to reduce the dimensionality of the features. Five feature extraction strategies, namely, Kmer, General parallel correlation pseudo-dinucleotide composition, General series correlation pseudo-dinucleotide composition, Normalized Moreau–Broto autocorrelation, and Geary autocorrelation, and two types of classifier, Sparse Representation Classifier (SRC) and support vector machine with Mahalanobis distance-based radial basis function (SVMMDRBF), were used to construct a two-layered integrated classifier algorithm, 2lpiRNApred. The results indicate that 2lpiRNApred performs significantly better than six other existing prediction tools.

1. Introduction

Piwi–interacting RNAs (piRNAs) are a distinct class of small noncoding RNAs (ncRNAs), which not only are significant functional RNA molecules but also bind to PIWI proteins [Citation1,Citation2]. piRNAs are usually expressed in germ cells and are approximately 26 to 32 nt long [Citation3–Citation5]. They share a strong preference for 5ʹ uridine residues in vertebrates and invertebrates [Citation6–Citation8]. They also play a significant role in transposon silencing, including in germ cell formation, germline stem cell maintenance, spermatogenesis, and oogenesis [Citation9–Citation13].

In fruit flies and humans, many transposons are present in the genome. These transposon elements may lead to genome instability by moving within genomes and induce mutations such as insertions, deletions, and substitutions. piRNA pathways are major genome defence mechanisms that maintain genome integrity. Many lines of evidence have shown that piRNAs have vital functions in tumorigenesis; for example, aberrantly expressed piRNAs have been indicated to play a role in the process of cancer development [Citation14–Citation16]. As such, predicting piRNAs and determining their functions are significant for drug development, as well as to clarify the biological significance of RNAs and obtain insight into piRNA pathways.

In recent years, several computational predictive methods have been developed to distinguish piRNAs from non-piRNAs. The first predictive technique was developed based on a support vector machine (SVM) and the k-mer feature encoding scheme [Citation17]. The second predictive technique, Piano, adopted a structure-sequence triple-element feature extraction strategy and SVM [Citation18]. Luo et al. [Citation19] developed an ensemble learning-based tool, Accurate piRNA prediction, based on the extraction of six different sequence-derived features. In 2016, Li et al. [Citation20] built a genetic algorithm-based weight ensemble predictor tool, GA-WE. Moreover, Liu et al. [Citation21] recently proposed 2 L-piRNA by incorporating PseKNC. To the best of our knowledge, 2 L-piRNA is currently the only two-layered ensemble classifier that can be utilized not only to identify piRNAs but also to determine whether they function in inducing target mRNA deadenylation. More recently, Wang et al. [Citation22] developed a program, piRNN, based a convolutional neural network classifier and k-mer encoding strategy to identify piRNA sequences.

The main contributions of this study are summarized as follows:

New technique: In the current study, a two-layered integrated classifier algorithm, namely 2lpiRNApred, integration of SRC and SVMMDRBF classifier algorithms was first employed to improve the forecasting accuracy of piRNAs and their functional types.

New method: A novel feature selection algorithm, LFE-GM, was proposed to decrease the dimensionality of the features with excellent performance, high computational efficiency.

Effective feature extraction strategies: A hybrid feature extraction strategies, composed by Kmer, General parallel correlation pseudo-dinucleotide composition, General series correlation pseudo-dinucleotide composition, Normalized Moreau–Broto autocorrelation, and Geary autocorrelation, were used to encode piRNAs and non-piRNAs, and choose the best combination in the first and second layers, respectively.

The rest of this study was organized as follows: In Section II, we introduced the preliminaries relative to the proposed LFE-GM feature selection algorithm and two-layered integrated classifier algorithm, namely 2lpiRNApred, including the collection and preprocessing of piRNAs and non-piRNAs related data sets, five types of feature extraction strategy, introducing two types of sub-classifiers to construct 2lpiRNApred integrated classifier algorithm, SRC and SVMMDRBF, the specific description of the proposed LFE-GM feature selection algorithm and the evaluation criteria of model construction and evaluation. The evaluation of 2lpiRNApred integrated classifier algorithm, the effectiveness of LFE-GM feature selection algorithm, and compared with existing tools, were introduced in Section III. The study ended with a conclusion in Section IV.

2. Materials and method

2.1. Data collection and preprocessing

In the present study, the datasets utilized to predict piRNAs and their functional types were constructed from NONCODE v3.0 [Citation23] and piRBase [Citation24]. From piRBase, sequences from mouse annotated as being piRNAs having the function of inducing target mRNA deadenylation (CPos+) and those without this function (CPos−) were collected. Additionally, from NONCODE v3.0, non-piRNA sequences from mouse (CNeg) were downloaded. For all collected sequences, we utilized the CD-HIT program [Citation25] with a threshold of 0.8 to remove the redundant samples. Finally, 798 CPos+, 1257 CPos−, and 2068 CNeg results were obtained and used to construct the benchmark dataset.

To further assess the performance of our predictor, we divided the benchmark dataset into two subsets, piRNA_tr and piRNA_te, which were utilized to train and test our predictive model, respectively. The training dataset piRNA_tr contained 709 CPos+, 709 CPos−, and 1418 CNeg, respectively, and the rest of the data: 90 CPos+, 548 CPos−, and 650 CNeg, none of which was in piRNA_tr, were used as the independent testing dataset. Considering CPos+ only have 90 in the testing dataset, all sequences from D.melanogaster annotated as being piRNAs having the function of inducing target mRNA deadenylation (CPos+) from piRBase were also collected as piRNA_te CPos+. For all collected D.melanogaster annotated CPos+ samples, we used CD-HIT program with a threshold of 0.8 to remove the redundant samples. Finally, the independent testing dataset piRNA_te included 109 CPos+(90 mouse annotated CPos+ and 19 D.melanogaster annotated CPos+), 548 CPos−, and 650 CNeg.

2.2. Feature extraction strategy

A significant issue in establishing a predictor is how to convert input peptide sequences into effective numerical features that can not only embody their intrinsic correlation but also be fed into a prediction model [Citation26–Citation30]. Herein, five types of features were applied to convert piRNAs into valid numerical vectors by using a web server ‘Pse-in-one’ developed by Liu et al., and its updated version ‘Pse-in-one 2.0’ [Citation31,Citation32]. These feature extraction strategies are as follows: basic kmer (Kmer), General parallel correlation pseudo-dinucleotide composition (PC), General series correlation pseudo-dinucleotide composition (SC), Normalized Moreau–Broto autocorrelation (NMBAC), and Geary autocorrelation (GAC). In this study, six different RNA physicochemical indices were utilized: rise, roll, shift, slide, tilt, and twist. The actual numerical values of the physicochemical indices are listed in . The extraction process is described below.

Table 1. The original values of the six physicochemical properties for dinucleotides in RNA.

2.2.1. Kmer

Kmer has been widely used to represent various DNA or RNA sequences [Citation33–Citation35]. In previous studies [Citation34,Citation35], Noble et al. and Gupta et al. successfully used this approach to represent human gene-regulated samples. In this study, we adopted Kmer to convert the variable lengths of piRNAs and non-piRNAs into fixed length vectors. To convert piRNAs and non-piRNAs into valid numerical vectors, we utilized 1–3-nt strings, including four kinds of 1-mer string: {i}(i = A/G/C/U), 16 kinds of 2-mer string: {ij} (i = A/G/C/U; j = A/G/C/U), and 64 kinds of 3-mer string: {ijt} (i = A/G/C/U; j = A/G/C/U; t = A/G/C/U), giving a total of 84 strings. A specific piRNA or non-piRNA sequence was represented by a numerical vector consisting of the occurrence frequencies of the 84 k-mer (k = 1, 2, 3) strings.

2.2.2. General parallel correlation pseudo-dinucleotide composition (PC)

Suppose an RNA sample D with L nucleic acid residues, that is:

where is the ith sequence position nucleotide along a given sequence. The PC measure [Citation36] of

is defined as follows:

where

represents the occurrence frequency of the kth dinucleotide (AA,AC,AG,AU,CA,CC,CG,CU,GA,GC,GG,GU,UA,UC, UG,UU) for a given RNA sequence,

,

and

The coupling factor is given by:

where indicates the numerical value of uth

physicochemical property for the dinucleotide

at position i.

2.2.3. General series correlation pseudo-dinucleotide composition (SC)

The SC strategy [Citation36] is just a variant of the PC strategy, which differs from it in terms of the method for calculating the correlation factors.

For the given RNA sequence of (1), the SC strategy of

can be defined as follows:

where

represents the occurrence frequency of the kth dinucleotide for a given RNA sequence, and

The coupling factor is given by

where ,

,

indicates the numerical value of uth

physicochemical property for the dinucleotide

in the ith position.

2.2.4. Normalized Moreau–Broto autocorrelation (NMBAC)

NMBAC [Citation36,Citation37] is a powerful feature extraction strategy that measures the correlation of the same physicochemical properties between two nucleotide residues separated by the distance of lag along the RNA sequence. NMBAC is defined as follows:

where is a dinucleotide,

indicates the value of

at the ith position for

,

represents the nearest neighbour correlations for an index

, while

considers the next second nearest neighbour for an index

, and so on.

2.2.5. Geary autocorrelation (GAC)

GAC [Citation36,Citation38] is a powerful feature extraction strategy that measures the correlation of the same physicochemical properties between two nucleotide residues separated by the distance of lag along the RNA sequence. GAC is defined as follows:

where is a dinucleotide,

indicates the value of

at the ith position for

,

represents the mean value for index u along the whole RNA sequence.

represents the nearest neighbour correlations for an index

, while

considers the next second nearest neighbour for an index

, and so on.

2.3. Two types of sub-classifier

2.3.1. The sparse representation classifier

The Sparse Representations Classifier (SRC) [Citation39] utilizes a distance metric to determine whether two samples are similar. It sparsely represents each test sample by linearly combining all of the training samples; each test sample can be assigned to the class producing the smallest residual. SRC and various algorithms improving on it, such as weighted SRC (WSRC), similarity WSRC, and local SRC (LSRC), have been widely used in various fields [Citation39–Citation45]. In this study, SRC was utilized to conduct our forecast model. The specific description of SRC is as follows:

First, input positive and negative training samples

any test sample

, where

,

represents the dimensionality of the extracted features, and

or

is the number of positive or negative training samples, respectively.

Normalize all columns of matrix

to become unit

-norm.

According to formula (12) or (13), solve the

-minimization problem:

(12)

(12)

or

(13)

(13)

where

is an optional error tolerance.

For test sample

, calculate the residue of the ith class

Here,

denotes a characteristic function choosing only coefficients related to the ith class for all of its nonzero entries;

, where 1 means positive class and 2 means negative class.

Finally, outputting

For the derivation and verification of the relevant formulas in the SRC classifier, please refer to a previous paper [Citation39].

2.3.2. SVMMDRBF classifier

Support vector machine (SVM) and metric learning have been demonstrated to be able to learn in many recent applications. The models obtained with SVM not only have the largest margin but also have a kernel trick. Furthermore, by utilizing a metric learning algorithm, a Mahalanobis distance function can be obtained, emphasizing relevant features and reducing the influence of uninformative features. Mei et al. [Citation46,Citation47] developed a new learning algorithm, SVM with Mahalanobis distance-based radial basis function kernel (SVMMRBF). In this study, SVMMRBF was been used to build our sub-classifier. In this part, the difference of SVM between Mahalanobis distance with Euclidean distance mainly was described.

Definition (Mahalanobis Distance): In the space H, suppose , where

represents the covariance matrix of the samples

,

, and

is the average value of the samples

. In that way,

is called Mahalanobis Distance in the space H.

For any training data set , where

, thus matrix

and each row of matrix

is a

dimensional sample vector

. Thus, matrix

. Suppose matrix

is a Nonsingular matrix, then the Mahalanobis Distance

can be easy to calculate. Other than this, the inverse of the matrix

cannot be calculated because it does not exist.

Suppose the rank of the matrix is

and

, pseudo-inverse of the matrix

can be denoted as

, thus

where represents an Elementary transformation matrix and

is the identity matrix.

represents a nonsingular diagonal matrix of rank

.

Then, can be represented as follows:

Nevertheless, the inverse of the matrix is usually exist .

After that, we will describe how to compute kernel functions with Mahalanobis distance in input space.

In general, the calculation of the kernel function is a calculation that transformed into the calculation of inner product for . Thus, the inner product based on Mahalanobis distance can be represented as follows:

where is the inverse for the covariance matrix of

. If the matrix

was a singular matrix, then change

as

.

Here, Gaussian-RBF was used as the kernel function:

The related results of five-fold cross-validation and independent test showed that the sub-classifier SVMMRBF was an effective algorithm at identifying piRNAs and determining whether they function in inducting target mRNA deadenylation. For a detailed description and the related Matlab codes of SVMMRBF, please visit our github: https://github.com/JianyuanLin/2lpiRNApred.

2.4. Feature selection based on Luca fuzzy entropy and Gaussian membership function

Feature selection has been viewed as a resource-intensive pretreatment step in many fields of bioinformatics [Citation48,Citation49]. In this study, a new feature selection algorithm, feature selection based on Luca fuzzy entropy and Gaussian membership function (LFE-GM), was proposed to reduce the dimensionality of the features and develop a thorough prediction model. The details of this approach are as follows:

First, calculate each feature’s mean value of positive or negative training sequences utilizing the following formula:

(14)

(14)

(15)

(15)

where

represent the numbers of positive/negative training sequences, and

indicate the jth feature values of the ith positive or negative training sequence; Dim means the number of extracted features.

Second, utilize formulas (16) and (17) to calculate each feature’s standard deviation of positive and negative training datasets,

(16)

(16)

(17)

(17)

respectively.

Construct fuzzy membership function of the positive or negative training dataset using formulas (14–17):

(18)

(18)

(19)

(19)

(20)

(20)

where

denotes the jth feature value of the ith training sample, and

indicates the jth feature fuzzy membership degree for the ith training sample

.

The jth feature fuzzy value [Citation50,Citation51] on the ith sample can be expressed as follows:

(21)

(21)

According to formula (22), Luca fuzzy entropy can be calculated [Citation52]:

(22)

(22)

It was worth nothing that, to work with Luca fuzzy entropy, we modified the values of

and

(when

, let

, and when

, let

;

).

Rearrange all features according to Luca fuzzy entropy H in ascending order, and remove some features that obtained the maximum Luca fuzzy entropy values.

2.5. Model Construction and Evaluation

To further improve the ability to identify piRNAs and their functional types, a new ensemble learning algorithm was proposed by using majority voting strategies to integrate the predictive results of SRC and SVMMRBF. The performance of 2lpiRNApred was evaluated utilizing the following four measurements:

where represents the number of positive sequences, while

indicates the total number of positive sequences that were incorrectly predicted as negative sequences;

represents the number of negative sequences, while

is the total number of negative sequences that were incorrectly predicted as positive sequences.

3. Results and discussion

3.1. Combining various features to improve predictive performance

To choose the optimal feature combination for identifying piRNAs and their functional types, two classifiers, SRC and SVMMRBF, were used to develop the optimal integration models. The SRC and SVMMRBF integration model in the first layer could be utilized to identify whether or not an RNA sequence is a piRNA, and the SRC integration model in the second layer was employed to further predict whether the identified piRNA sequences function in inducting target mRNA deadenylation. In the following section, only the specific process of constructing the optimal integration model in the first layer is considered.

To develop the optimal integration model, five feature extraction strategies were used to encode each RNA sequence. Five-fold cross-validation was utilized to train the SRC or SVMMRBF prediction model. The Matthews correlation coefficient (Mcc) was utilized as an evaluation measure to choose features because Mcc essentially reflects the correlation between the predicted result and the observed result; the higher the Mcc, the better the performance of the model.

Initially, PC was employed to encode piRNA and non-piRNA sequences, and PC in this work had two uncertain parameters: . The ranges considered for

were

and

; therefore, there were a total of

individual SRC or SVMMRBF prediction models. Three models for SVMMDRBF with Mcc values ≥0.6850 and 10 models for SRC with Mcc values ≥0.6840 were retained to further improve the predictive performance (see Tables Sa1–Sa3 in Supplementary Material for actual results). Then, all combinations of two models among the 10 models for SRC with three models selected for SVMMDRBF were further evaluated. Among the 45 (

) models, the Mcc value of the optimal integrated model (SVMMRBF1+ SVMMRBF2+ SVMMRBF3+ SRC7+ SRC8) was 0.6978 (Table Sa3). The results of models trained on the selected optimal integrated model and each sub-model using a hybrid feature extraction strategy in which PC was combined with any of Kmer, GAC, NMBAC, and SC encoding strategies on five-fold cross-validation are listed in Tables Sa4–Sa7. The top six models with Mcc values ≥0.7000 were combined with each other, and the combination of SVMMRBF3+ SRC7+ SRC8 for the PC+Kmer encoding strategies reached the best predictive performance, with Sn of 92.38%, Sp of 84.41%, Acc of 88.40%, and Mcc of 0.7704 for five-fold cross-validation (Table Sa8). Finally, the prediction results of the optimal model (SVMMRBF3+ SRC7+ SRC8) using the proposed LFE-GM feature selection algorithm are listed in Table Sa9; SVMMRBF3(60)+SRC7(88)+SRC8(86) obtained the best performance, with Sn of 91.89%, Sp of 85.54%, Acc of 88.72%, and Mcc of 0.7759. Here, SVMMRBF3(i) representing the top i features was chosen by using the proposed LFE-GM feature selection algorithm for improving prediction performance of sub-classifier SVMMRBF3, SRC7 (j) representing the top j features was chosen by using the proposed LFE-GM feature selection algorithm for improving prediction performance of sub-classifier SRC7, and so on.

Therefore, the optimal integration model in the first layer was built employing the feature combination PC+Kmer. Additionally, we utilized the same procedure to construct the SRC integration model in the second layer: SRC2(72)+SRC13(106)+SRC14(104)+SRC15(80)+SRC16(90), using the feature combination PC+Kmer+GAC+NMBAC+SC. The detailed results from constructing the SRC integration model in the second layer are listed in Supplementary Material (Tables Sb1–Sb9). It is worth noting that a majority voting strategy was used to construct the ensemble models in the first and second layers. shows a flow diagram of the construction of 2lpiRNApred.

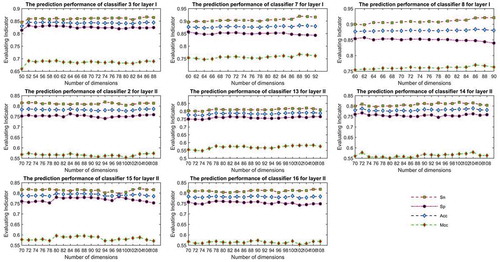

3.2. Effectiveness of LFE-GM feature selection

The LFE-GM feature selection results of the selected optimal models in the first and second layers are shown in and , respectively. The actual comparison results are listed in . As we can see from , utilizing LFE-GM, the features were rearranged for the selected optimal model SVMMRBF3+SRC7+SRC8 which obtained the Sp, Acc, and Mcc values of 84.41, 88.40, and 0.7704. The best-performing models were trained by SVMMRBF3(60)+ SRC7(88)+SRC8(86) which reached the best Sp, Acc, and Mcc values of 85.54, 88.72, and 0.775. The model SRC2(72)+SRC13(106)+SRC14(104)+SRC15(80)+SRC16(90) reached the best predictive performance in the second layer (see Supplementary Material Tables Sa9 and Sb9). Clearly, upon comparison with the optimal models without performing LFE-GM feature selection, both models after LFE-GM feature selection achieved better predictive performance.

Table 2. The results of LFE-GM feature selection utilizing PC+Kmer feature extracted strategies on our training dataset.

Table 3. The results of LFE-GM feature selection utilizing PC+Kmer(3)+GAC(9)+NMBAC(3)+SC (1,0.4) feature extracted strategies on our training dataset.

Table 4. Comparison of prediction results about whether the optimal models perming LFE-GM feature selection on our training dataset.

To further verify the validity of the proposed LFE-GM feature selection algorithm, shows the prediction performance of each sub-classifier using proposed LFE-GM feature selection algorithm by five-fold cross-validation in layers I and II. When identifying piRNA in the first layer, for sub-classifier SVMMRBF3, LFE-GM feature selection ranges from 50 to 88 with an interval of 2, and shows the corresponding values of Sn, Sp, Acc and Mcc, respectively. Clearly, when the feature number is 60, the sub-classifier SVMMRBF3 obtain the optimal prediction results; for sub-classifier SRC7 and SRC8, LFE-GM feature selection ranges from 60 to 92 and from 60 to 90 with an interval of 2, respectively. When the feature numbers are 88 and 86, the sub-classifiers SRC7 and SRC8 obtain the optimal prediction results, respectively. The optimal sub-classifiers SVMMRBF3(60), SRC7(88), and SRC8(86) generally led to 1.36%, 0.89% and 0.85% improvements with regard to no performing LFE-GM feature selection (i.e. SVMMRBF3(90), SRC7(93), and SRC8(91)), respectively.

When determines whether piRNAs function in inducting target mRNA deadenylation in the second layer, for sub-classifier SRC2, SRC13, SRC14, SRC15 and SRC16, LFE-GM feature selection ranges from 70 to 108 with an interval of 2, respectively. Clearly, when the feature numbers are 72, 106, 104, 80 and 90, the sub-classifiers SRC2, SRC13, SRC14, SRC15 and SRC16 obtain the optimal prediction results, respectively. Clearly, the optimal sub-classifiers SRC2(72), SRC13(106), SRC14(104), SRC15(80), and SRC16(90) are about 1.55%, 1.08%, 3.05%, 0.97% and 2.32% higher than no performing LFE-GM feature selection (i.e. SRC2(116), SRC13(121), SRC14(121), SRC15(122), and SRC16(123)), respectively.

Through the above analysis, the proposed LFE-GM feature selection algorithm is obviously effective for identifying piRNA in the first layer and determines whether piRNAs function in inducting target mRNA deadenylation in the second layer. In addition, as we can see from Supplementary Material Table Sa9 and Table Sb9, the integrated models SVMMRBF3(60)+SRC7(88)+SRC8(86) and SRC2(72)+ SRC13(106)+SRC14(104)+SRC15(80)+SRC16(90) obtained the best performance compared with all sub-classifiers, SVMMRBF3(90)+SRC7(93)+SRC8(91) and SRC2(116)+ SRC13(121)+RC14(121)+SRC15(122)+SRC16(123). This also shows the effectiveness of the integrated algorithm in identifying piRNA and determines whether piRNAs function in inducting target mRNA deadenylation.

3.3. Comparison with existing tools

To better test and verify the performance of 2lpiRNApred, we compared it with six other programs by using 5/10-fold cross-validation and an independent test. 2lpiRNApred was compared with GA-WE and 2 L-piRNA in their benchmark datasets using 5/10-fold cross-validation, in accordance with the comparable results listed in associated reports. The relevant results to identify piRNAs in the first layer by 5/10-fold cross-validation are shown in . 2lpiRNApred generally led to 1.29%–65.15% improvement with regard to accurate piRNA prediction, compared with GA-WE, Piano, k-mer, and 2 L-piRNA in our training dataset and the GA-WE human/mouse balanced benchmark dataset. Although the value of Sp obtained by 2lpiRNApred was about 6.3%–7.5%

Table 5. Comparison results on the same training dataset to identify piRNAs via 5/10-fold validation on our training dataset and GA-WE balanced benchmark dataset.

lower than that by k-mer, accurate piRNA prediction, and GA-WE, the values of Sn were about 1.8%–4.3% higher in the GA-WE Drosophila balanced benchmark dataset. Based on 5/10- fold cross-validation results, it can be concluded that the predictive tool was greatly affected by the selected benchmark dataset. However, 2lpiRNApred exhibited better robustness and its obtained accuracy ranged from 2.62% to 39.98%.

As can be seen in , 2lpiRNApred obtained the best Sn of 98.5%, which generally led to 3.3% and 3.6% improvements with regard to the second best accurate piRNA prediction and third best GA-WE, respectively. In terms of another evaluation criterion, Sp, 2lpiRNApred obtained the best average Sp of 88.65%, GA-WE obtained the second best average Sp of 85.48%, while accurate piRNA prediction obtained the third best average Sp of 83.55%. We also compared 2lpiRNApred with 2 L-piRNA, which is currently the only computational method for identifying whether piRNAs function in inducing target mRNA deadenylation; the comparison results are shown in . The comparison results clearly show that 2lpiRNApred was better than 2 L-piRNA.

Table 6. Comparison results with 2 L-piRNA [Citation21] via five-fold cross-validation on our training dataset.

To further test and verify the performance of 2lpiRNApred, we also compared it with piRNN and 2 L-piRNA, which the web-server or github was available in our independent test dataset. As can be seen in , 2lpiRNApred remarkably outperformed piRNN and 2 L-piRNA, and the values of Mcc by 2lpiRNApred were about 0.4875 and 0.2908 higher than those by piRNN and 2 L-piRNA when identifying piRNAs in the first layer. 2lpiRNApred furthered comparison with 2 L-piRNA, which was only one computational method that identifies whether piRNAs function in inducting target mRNA deadenylation, and the value of Acc by 2lpiRNApred was about 56.47% higher than that by 2 L-piRNA. These results indicated that 2lpiRNApred represented a remarkable improvement not only in identifying piRNAs, but also in determining whether piRNAs function in inducting target mRNA deadenylation.

Figure 2. The prediction performance of each sub-classifier using proposed LFE-GM feature selection algorithm by five-fold cross-validation in layer I and II.

Table 7. A comparison of the proposed predictor with 2 L-piRNA and piRNN on our independent test dataset.

Considering the similarity of the training dataset can influence the prediction results, we further investigated the performance of CarSite-II by setting thresholds from 0.8 to 0.5 with CD-HIT program. We reported the values of Sn, Sp, Acc, and MCC obtained on different thresholds in . The results showed that the performance was not sensitive to the similarity of the training samples from 0.8 to 0.5.

Table 8. Testing results using the CD-HIT Program to redundancy on our training datasets via five-fold cross-validation.

4. Conclusion

In this study, we proposed a novel feature selection algorithm, LFE-GM, to reduce the dimensionality of the features. Based on SRC and SVMMDRBF classifier algorithms and five feature extraction strategies (Kmer, PC, SC, NMBAC, and GAC), a two-layered integrated classifier algorithm, 2lpiRNApred, was constructed to identify piRNAs in the first layer and determine whether piRNAs function in inducing target mRNA deadenylation in the second layer. The main conclusions of this study are summarized as follows:

The proposed LFE-GM feature selection algorithm can reduce the dimensionality of the features with a high efficiency and accuracy.

According to the comprehensive performance of the two-layered integrated classifier algorithm, 2lpiRNApred, in this study, integration of SRC and SVMMDRBF classifier algorithms is perfectly tailored to identify piRNAs and determine whether piRNAs function in inducing target mRNA deadenylation, consequently obtaining satisfactory prediction results.

The proposed LFE-GM feature selection strategy in the feature selection module is proved to be an effective scenario to improve the performance of the proposed integrated algorithm.

The proposed 2lpiRNApred model solves the classification problem with three classes (class 1: piRNA involved in deadenylation, class 2: piRNA not involved in deadenylation, class 3: not a piRNA) with binary classifiers.

Availability and implementation

Disclosure of potential conflicts of interest

No potential conflicts of interest were disclosed.

Supplemental Material

Download Zip (243.9 KB)Supplementary material

Supplementary data for this article can be accessed here.

Additional information

Funding

Notes on contributors

Yun Zuo

Yun Zuo received the bachelor’s degree from Liaocheng University in 2015 and received the master’s degree from Dalian Maritime University with the Department of Mathematics in 2018. Currently, she is pursuing the Ph.D. degree in Department of Computer Science, Xiamen University. Her research is in the areas of bioinformatics and machine learning. Several related works have been published by Bioinformatics, Molecular BioSystems, Journal of Theoretical Biology etc.

Quan Zou

Quan Zou (M’13-SM’17) received the BSc, MSc and the PhD degrees in computer science from Harbin Institute of Technology, China, in 2004, 2007 and 2009, respectively. He worked in Xiamen University and Tianjin University from 2009 to 2018 as an assistant professor, associate professor and professor. He is currently a professor in the Institute of Fundamental and Frontier Sciences, University of Electronic Science and Technology of China. His research is in the areas of bioinformatics, machine learning and parallel computing. Several related works have been published by Science, Briefings in Bioinformatics, Bioinformatics, IEEE/ACM Transactions on Computational Biology and Bioinformatics, etc. Google scholar showed that his more than 100 papers have been cited more than 5000 times. He is the editor-in-chief of Current Bioinformatics, associate editor of IEEE Access, and the editor board member of Computers in Biology and Medicine, Genes, Scientific Reports, etc. He was selected as one of the Clarivate Analytics Highly Cited Researchers in 2018.

Jianyuan Lin

Jianyuan Lin graduated from Hangzhou University of electronic science and technology with a major in computer science and technology in 2017. He is currently studying for a master’s degree at Xiamen University. His current research interests include artificial intelligence, data mining, machine learning, and deep learning.

Min Jiang

Min Jiang received the bachelor’s and Ph.D. degrees in computer science from Wuhan University, Wuhan, China, in 2001 and 2007, respectively. He was a Post-Doctoral Research Fellow with the Department of Mathematics, Xiamen University, Xiamen, China, where he is currently a full Professor with the Department of Cognitive Science and Technology. His current research interests include machine learning, computational intelligence, neural-symbolic integration, dynamic multiobjective optimization, machine learning, software development, and basic theories of robotics.

Xiangrong Liu

Xiangrong Liu received his Ph.D. degree in control science and engineering from Huazhong University of Science and Technology at Wuhan, China in 2007. He finished his postdoctoral training in the school of Electronics Engineering and Computer Science at Peking University from 2007 to 2009. In 2009 he joined Xiamen University and is currently a professor in Department of Computer Science, Xiamen University. His research focuses on computational intelligence and bioinformatics.

References

- Claverie JM. Fewer genes, more noncoding RNA. Science. 2005;309(5740):1529.

- Mattick JS. The functional genomics of noncoding RNA. Science. 2005;309(5740):1527–1528.

- Aravin A, Gaidatzis D, Pfeffer S, et al. A novel class of small RNAs bind to MILI protein in mouse testes. Nature. 2006 Jul;442(7099):203–207.

- Lau NC, et al. Characterization of the piRNA complex from rat testes. Science. 2006 Jul;313(5785):363–367.

- Grivna ST, et al. A novel class of small RNAs in mouse spermatogenic cells. Gene Dev. 2006 Jul;20(13):1709–1714.

- Meenakshisundaram H. Existence of snoRNA, microRNA, piRNA characteristics in a novel non-coding RNA: x-ncRNA and its biological implication in homo sapiens. Washington D. 2009 Jan;1(2):31–40.

- Seto AG, Kingston RE, Lau NC, et al. The coming of age for piwi proteins. Mol Cell. 2007 Jun;26(5):603–609.

- Ruby JG, Jan C, Player C, et al. Large-scale sequencing reveals 21U-RNAs and additional microRNAs and endogenous siRNAs in C. elegans. Cell. 2006 Dec;127(6):1193–1207.

- Cox DN, Chao A, Baker J, et al. A novel class of evolutionarily conserved genes defined by piwi are essential for stem cell self-renewal. Gene Dev. 1998 Dec;12(23):3715–3727.

- Klattenhoff CA, Theurkauf W, et al. Biogenesis and germline functions of piRNAs. Development. 2008;135(1):3–9.

- Brennecke J, Aravin AA, Stark A, et al. Discrete small RNA-generating loci as master regulators of transposon activity in drosophila. Cell. 2007 Mar;128(6):1089–1103.

- Thomson T, Lin H, et al. The biogenesis and function of PIWI proteins and piRNAs: progress and prospect. Annu Rev Cell Dev Bi. 2009;25:355–376.

- Houwing S, Kamminga LM, Berezikov E, et al. A role for piwi and piRNAs in germ cell maintenance and transposon silencing in Zebrafish. Cell. 2007 Apr;129(1):69–82.

- Lu Y, Li C, Zhang K, et al. Identification of piRNAs in HeLa cells by massive parallel sequencing. Bmb Rep. 2010 Sep;43(9):635–641.

- Cheng J, Guo J-M, Xiao B-X, et al. piRNA, the new non-coding RNA, is aberrantly expressed in human cancer cells. Clin chim acta. 2011 Aug;412(17–18):1621–1625.

- Huang G, Hu H, Xue X, et al. Altered expression of piRNAs and their relation with clinicopathologic features of breast cancer. Clin Transl Oncol. 2013 Jul;15(7):563–568.

- Zhang Y, Wang X, Kang L, et al. A k-mer scheme to predict piRNAs and characterize locust piRNAs. Bioinformatics. 2011 Mar;27(6):771–776.

- Wang K, Liang C, Liu J, et al. Prediction of piRNAs using transposon interaction and a support vector machine. BMC Bioinformatics. 2014;15:419.

- Luo L, Li D, Zhang W, et al. Accurate prediction of transposon-derived piRNAs by integrating various sequential and physicochemical features. Plos One. 2016 Apr;11(4):e0153268.

- Li D, Luo L, Zhang W, et al. A genetic algorithm-based weighted ensemble method for predicting transposon-derived piRNAs. BMC Bioinformatics. 2016;17:329.

- Liu B, Yang F, Chou K-C, et al. 2L-piRNA: A two-layer ensemble classifier for identifying piwi-interacting RNAs and their function. Mol Ther-Nucl Acids. 2017;7:267–277.

- Wang K, Hoeksema J, Liang C, et al. piRNN: deep learning algorithm for piRNA prediction. Peerj. 2018;6:e5429.

- Bu D, Yu K, Sun S, et al. NONCODE v3. 0: integrative annotation of long noncoding RNAs. Nucleic Acids Res. 2012 Jan;40(D1):D210–D215.

- Zhang P, et al. piRBase: a web resource assisting piRNA functional study. Database-Oxford, no. bau110. 2014 Nov.

- Huang Y, Niu B, Gao Y, et al. CD-HIT suite: a web server for clustering and comparing biological sequences. Bioinformatics. 2010 Mar;26(5):680–682.

- Jia CZ, Zuo Y, et al. S-SulfPred: a sensitive predictor to capture S-sulfenylation sites based on a resampling one-sided selection undersampling-synthetic minority oversampling technique. J Theor Biol. 2017;422:84–89.

- Wu H-Y, Lu C-T, Kao H-J, et al. Characterization and identification of protein O-GlcNAcylation sites with substrate specificity. BMC Bioinformatics. 2014;15:S1.

- Shao J, Xu D, Tsai S-N, et al. Computational identification of protein methylation sites through Bi-profile bayes feature extraction. Plos One. 2009;4:e4920.

- Xie D, Li A, Wang M, et al. LOCSVMPSI: a web server for subcellular localization of eukaryotic proteins using SVM and profile of PSI-BLAST. Nucleic Acids Res. 2005 Jul;33(Web Server):W105–W110.

- Jones DT. Protein secondary structure prediction based on position-specific scoring matrices. J Mol Biol. 1999;292(2):195–202.

- Liu B, Liu, F., Wang, X, et al. Pse-in-one: a web server for generating various modes of pseudo components of DNA, RNA, and protein sequences. Nucleic Acids Res. 2015 Jul;43(W1):W65–W71.

- Liu B, Wu H, Chou K-C, et al. Pse-in-one 2.0: an improved package of web servers for generating various modes of pseudo components of DNA, RNA, and protein sequences. Nat Sci. 2017;9:67–91.

- Lee D, Karchin R, Beer MA, et al. Discriminative prediction of mammalian enhancers from DNA sequence. Genome Res. 2011 Dec;21(12):2167–2180.

- Noble WS, Kuehn S, Thurman R, et al. Predicting the in vivo signature of human gene regulatory sequences. Bioinformatics. 2005 Jun;21(1):I338–I343.

- Gupta S, Dennis J, Thurman RE, et al. Predicting human nucleosome occupancy from primary sequence. Plos Comput Biol. 2008;4:e1000134.

- Chen W, Zhang X, Brooker J, et al. PseKNC-general: a cross-platform package for generating various modes of pseudo nucleotide compositions. Bioinformatics. 2015 Jan;31(1):119–120.

- Feng Z-P, Zhang C-T, et al. Prediction of membrane protein types based on the hydrophobic index of amino acids. J Protein Chem. 2000 May;19(4):269–275.

- Sokal RR, Thomson BA, et al. Population structure inferred by local spatial autocorrelation: an example from an Amerindian tribal population. Am J Phys Anthropol. 2006 Jan;129(1):121–131.

- Wright J, et al. Demo: robust face recognition via sparse representation. Ieee Fg. 2008;1-2:942–943.

- Wright J, Ma Y, Mairal J, et al. Sparse representation for computer vision and pattern recognition. P Ieee. 2010 Jun;98(6):1031–1044.

- Deng W, Hu J, Guo J, et al. Extended SRC: undersampled face recognition via intraclass variant dictionary. IEEE T Pattern Anal. 2012 Sep;34(9):1864–1870.

- Yang J, Zhang L, Xu Y, et al. Beyond sparsity: the role of L1-optimizer in pattern classification. Pattern Recognition. 2012 Mar;45(3):1104–1118.

- Guo S, et al. Similarity weighted sparse representation for classification. ICPR, pp. 1241–1244, Nov. 2012.

- Lu CY, Min H, Gui J, et al. Face recognition via weighted sparse representation. J Vis Commun Image R. 2013 Feb;24(2):111–116.

- Li CG, et al. Local sparse representation based classification. IEEE, no. 11580253, Aug 2010.

- Mei JY, Liu M, Karimi HR, et al. LogDet divergence-based metric learning with triplet constraints and its applications. IEEE T Image Process. 2014 Nov;23(11):4920–4931.

- Mei JY, Liu M, Wang Y-F, et al. Learning a mahalanobis distance-based dynamic time warping measure for multivariate time series classification. IEEE T Cybern. 2016 Jun;46(6):1363–1374.

- Zuo Y, Jia C, Li T, et al. Identification of cancerlectins by split Bi-profile bayes feature extraction. Curr Proteomics. 2018;15(3):196–200.

- Tung CW. Prediction of pupylation sites using the composition of k-spaced amino acid pairs. J Theor Biol. 2013 Nov;336(25):11–17.

- Hosseinzadeh M, et al. Using fuzzy undersampling and fuzzy PCA to improve imbalanced classification through rotation forest algorithm. CSSE. Aug 2015.

- Jia CZ, Zuo Y, Zou Q, et al. O-GlcNAcPRED-II: an integrated classification algorithm for identifying O-GlcNAcylation sites based on fuzzy undersampling and a k-means PCA oversampling technique. Bioinformatics. 2018 Jun;34(12):2029–2036.

- Luca AD, Termini S, et al. A definition of a nonprobabilistic entropy in the setting of fuzzy sets theory. Inf Control. 1972;20(4):301–312.