ABSTRACT

Participatory Design (PD) is increasingly applied to tackle public health challenges, demanding new interdisciplinary collaborations and practices. In these contexts, any proposed intervention must be supported by evidence that it is likely to have the desired effect, particularly if it relies on the investment of public funds. An evidence base can include evidence and theory from prior research, evidence generated through primary research, and evaluation. PD research generates evidence by collaborating directly with people who may use or receive an intervention, understanding their experiences and aspirations in situated contexts, without using formal abstractions or assuming that evidence generated elsewhere will be directly applicable. Drawing on a case study of a collaboration with public health experts to develop an intervention using PD, we argue there is value in using existing evidence and theory to engage, inform, and inspire intended users of an intervention to participate in the design process. This article aims to help PD researchers and practitioners consider how evidence can be integrated and produced through PD, enabling collaboration with other disciplines to produce evidence-based and theory-informed interventions that address complex public health challenges.

1. Introduction

Participatory Design (PD) is increasingly applied in public health contexts (Driedger et al. 2007), where healthcare systems and policy makers struggle to manage rising demand driven by ageing demographics and the increasing prevalence of long-term conditions and communicable diseases (WHO 2020). Addressing these challenges requires new ways of encouraging citizens to change their behaviour, drawing on the combined expertise of the behavioural science, medical, and design communities (Mummah et al. 2016).

Design places value on innovative ideas that fulfil unmet needs; public health places importance on proven methods supported by rigorous evaluation. The process for designing new interventions is largely missing from intervention development guidance to date (Rousseau et al. 2019). Given the many persistent and complex public health challenges societies face, there is a role for design in developing innovative solutions (Rousseau et al. 2019; Bazzano and Martin 2017).

Ethical concerns are inherent in efforts to influence behaviour (Faden 1987; Niedderer et al. 2014), with the potential to impinge on citizens’ rights, control, or responsibility, raising the question of who determines what behaviour is desirable. These concerns can be mitigated by involving the people whose behaviour is targeted for change in intervention design, which also provides an opportunity to validate the applicability of evidence to the local context (O’Brien et al. 2016). Integrating stakeholder involvement in intervention development is recommended (Oliver et al. 2004), and public health researchers are increasingly collaborating with PD researchers to achieve this (Bowen et al. 2013). Integrating PD within intervention development enables insights gained from prior evidence to be aligned with input from people with lived experience (Hagen et al. 2012). However, there is a need to consider how evidence and theory can be integrated as inputs to PD, and how the evidence generated is documented and weighed alongside other forms of knowledge (Moffatt et al. 2006).

PD is premised on the active and democratised participation of the intended users of a design, without making assumptions based on prior knowledge, and is underpinned by a process of mutual learning (Bratteteig et al. 2013). By collaborating with users and stakeholders as experts in their own knowledge and ‘experience domain’ (Sleeswijk Visser 2009), designers can gain authentic understandings of their contexts and challenges while empowering them to play a central role in the design process. This situation-specific perspective suggests that evidence and theory generated elsewhere may not be applicable (Luck 2018) and that designs produced through PD may not be transferable to other contexts (Frauenberger et al. 2015). However, public health interventions aim to have an impact at population level, hence the need to understand how local PD participants’ views relate to the wider evidence base.

This article begins by exploring how designers and PD researchers currently use prior evidence as inputs to their design processes, and how the evidence they produce as outputs is communicated. We present a case study from an interdisciplinary project that incorporated PD to develop a social marketing intervention to encourage regular HIV testing. The approach was based on learning from previous multidisciplinary intervention development projects (e.g. O’Brien et al. 2016; Macdonald et al. 2012), in which PD practice was adapted to use evidence produced by collaborators from other disciplines and, in return, to generate evidence through PD that is of value to intervention development. We reflect on the value of integrating prior evidence and theory within PD, and of generating evidence through PD, to provide insights that support PD’s application in tackling public health challenges.

2. Integrating evidence and theory in PD

2.1. Inputs: evidence

PD practice often begins with contextualisation. This may include a literature review, primary research, or collaboration with subject experts to understand and frame a challenge and determine the best way to engage participants in the design process. Unlike the evidence-gathering processes used by public health researchers, this insight gathering is not intended to be exhaustive and would rarely be considered a research output in its own right.

While we acknowledge that many PD practitioners may use prior evidence within their process, we found few reported examples of such practice. Hagen et al. (2012) propose design artefacts and tools to integrate prior evidence with insights generated through PD for intervention development. Design artefacts, such as personas and design guidelines, ‘capture and communicate research findings in accessible ways’ (ibid., 7), providing a shared language for discussion among participants with lived experience, health professionals, and designers. Artefacts are both inputs and outputs of design processes, as they are iteratively validated and developed throughout.

Design artefacts and tools are widely discussed in the PD literature; however, we found few examples explicitly describing tools that integrate prior evidence. Experience-Based Co-Design (EBCD) is the most widely used approach in health contexts, offering a process for involving patients with lived experience of a health service in codesigning improvements (Donetto et al. 2015) and interventions (Tsianakas et al. 2015). EBCD uses filmed narratives of patients’ health service experiences as ‘triggers’ for dialogue between patients and staff (Bate and Robert 2006). Originally, the films featured patients involved in the project; the approach was later successfully adapted to use films from prior research to accelerate the process (Locock et al. 2014). Villalba et al. (2019) translated evidence derived from a literature review into ‘Health Experience Insight Cards’. The cards enabled health professionals to make practical use of experiences described in the literature, directing discussion towards real-life challenges to identify redesign opportunities.

2.2. Inputs: behaviour change theory

Design theory offers models (Niedderer et al. 2014) and processes that support designers to apply theory from the behavioural sciences within design processes (Cash, Hartlev, and Durazo 2017). These include toolkits (Hermsen, Renes, and Frost 2014) and design exemplars illustrating how theory has been applied in practice (Lockton, Harrison, and Stanton 2010).

van Essen, Hermsen, and Renes (2016) propose integrating Behaviour Change (BC) theory to increase design effectiveness and to provide a rationale for design decisions that builds a convincing case for commissioners. They caution that integrating prescriptive theory should not hamper the creative process by limiting designers’ freedom to experiment and ‘drift’ (Krogh, Markussen, and Bang 2015), encouraging reflexive modes of inquiry.

While acknowledging that theory can be generalised and abstract, Poggenpohl and Satō (2009) assert that theory can provide ‘building blocks’ from which better design emerges, suggesting designers ‘erase the mistaken notion that systematic knowledge and creativity are at odds’ (ibid., 10).

While the toolkits highlighted above were developed for designers to use within their own practice, they can also be used with participants in a PD process. O’Brien et al. (2016) created a deck of cards with simplified descriptions of BC techniques found to be effective in supporting retired people to undertake regular physical activity. Retired participants sorted through the cards to determine which techniques might be appropriate for the local context. However, the theoretical techniques were too abstract for participants to grasp; introducing BC techniques through prototypes later in the process allowed participants to critique them.

Hermsen et al. (2016) describe challenges in integrating theory from the behavioural sciences in design processes due to the ‘inaccessibility’ of scientific knowledge. They highlight difficulties for designers in selecting appropriate theory, due to a lack of awareness of the latest developments or a tendency to ‘cherry pick’ theory that suits their purposes regardless of conflicting evidence. They describe how designers tend to use evidence and theory as inspiration early in the design process but revert to ‘gut feelings’ to develop and evaluate emerging design ideas, which may conflict with prior evidence.

2.3. Outputs: producing evidence and theory

Evidence generated through PD is often embodied in collaboratively produced prototypes (Hagen et al. 2012). Prototypes can integrate different types of information, embody theory, and form a hypothesis to be tested (Koskinen et al. 2011). Creating prototypes can generate knowledge; however, this depends on careful documentation to ensure insights ‘do not disappear into the prototype, but are fed back into the disciplinary and cross-disciplinary platforms that can fit these insights into the growth of theory’ (Stappers 2007, 87). An explicit focus on theory and on evidencing design choices ‘does not rule out tacit moves or aesthetics; it is not an either/or situation but an intelligent understanding and integration of the two’ (Poggenpohl and Satō 2009, 6). Design is criticised for being slow to develop, position, and argue for the transferable knowledge it generates (Poggenpohl and Satō 2009). Limited documentation and description of how design has been applied prevent its wider application, particularly in public health contexts (Bazzano and Martin 2017).

The design rationale (Allan, Young, and Moran 1989) accompanying a prototype needs to articulate the options considered and the reasons for choices. Frauenberger et al. (2015) argue that rigour and accountability in PD are nuanced concepts, differing from other fields due to the ‘messy’ nature of the process. The need for ‘hard evidence’ of design decisions, and any claim to the generalisability of findings, do not align with PD’s underpinning philosophy (ibid.). Frauenberger et al. (2015) propose that rigour is achieved through critical reflection, using appropriate language to communicate judgements and process.

Within intervention development, there is an imperative to undertake robust evaluation to evidence that the proposed intervention addresses the challenge identified (Skivington et al. 2021). Designers evaluate prototypes but rarely document or communicate the knowledge gained (Stappers 2007). Finished designs are typically handed over to be implemented by domain experts, with designers rarely involved in evaluation (Bazzano and Martin 2017).

It is necessary to consider how PD processes and the evidence generated are communicated to support implementation, particularly when collaborating with other disciplines where evidence generated through PD needs to integrate with other forms of knowledge.

3. Case study

3.1. Context

Social marketing can be effective in changing HIV testing behaviour (McDaid et al. 2019). It brings together methods from behavioural theory, persuasion psychology, and marketing science to design the appropriate delivery and marketing mix (place, price, product, and promotion) of health behaviour messages, based on an understanding of how these messages may be interpreted by viewers (Evans 2006). Within social marketing development, few studies report formative research or pre-testing of interventions with intended recipients (Noar, Benac, and Harris 2007), despite evidence that pre-testing increases the likelihood of impact (Stead et al. 2007).

Within this context, public health and PD researchers collaborated on a series of interrelated projects that produced an evidence-based and theory-informed social marketing intervention brief to commission an intervention to encourage regular HIV testing.

3.2. Methods

Firstly, a systematic review (SR) was conducted of published evaluations of international social marketing interventions to encourage men who have sex with men (MSM) to undertake HIV testing (see McDaid et al. (2019) for a full description of the SR). Interventions were analysed using social marketing (Hastings 2007), social semiotic (Jewitt and Oyama 2001), and behaviour change (Michie et al. 2013) theories, translated into data extraction tools enabling content analysis, which identified consistent aspects of effective interventions (Flowers et al. 2019; Riddell et al. 2020). The evidence generated informed an intervention development project integrating a series of PD workshops (described here) with intervention Optimisation Workshops exploring views on theory and application (involving PD workshop participants and health professionals who deliver and manage sexual health services). The academic team included PD researchers and social scientists with expertise in sexual health, alongside health improvement practitioners from the sexual health service commissioning the intervention.

Sixteen potential intervention recipients were recruited into two phases of PD: Exhibition Workshops and a Codesign Workshop. Social scientists led recruitment through existing networks, targeting a diverse mix of gay, bisexual, and other MSM. Participants were given a £40 voucher for each workshop they attended. Workshops were audio recorded.

3.2.1. The exhibition workshop

The first workshop was conducted three times with different groups (of 4 to 6 participants) to enable depth of discussion while permitting a diverse group totalling 14 MSM participants. Two PD researchers and one social scientist facilitated each session. A health improvement expert joined one session.

An icebreaker activity introduced the context of social marketing, inviting participants to share an example of a marketing intervention that resonated with them. The second activity aimed to open a sensitive discussion of HIV testing and of the specific behaviour change the proposed intervention aimed to achieve. Four statements distilled from prior research (Flowers et al. 2017) were printed on a sheet as prompts, representing different reasons why people do not undertake HIV testing, alongside corresponding demographics (see ). For each statement, participants were asked to suggest possible barriers and facilitators for HIV testing. PD researchers recorded the discussion on the sheet.

The third activity sought to understand participants’ preferences and requirements for interventions. It aimed to build awareness of, and confidence in, critiquing social marketing through exposure to different design strategies and theories. An exhibition of intervention materials from the SR was curated: of the 19 analysed studies, visual materials from 11 were selected to showcase a range of different approaches. Intervention visuals were displayed on easels, wall-mounted shelves, a tablet computer (web-based intervention), and a TV screen (video-based interventions) (see ). Each intervention had a placard giving its country of origin, mode of delivery, applied design strategies, and theories. Placards were initially concealed so as not to influence participants’ first impressions, which they discussed as a group. The facilitator then read out the information on the placards, and participants discussed their views. Finally, participants were asked whether they thought the intervention would encourage them to test more frequently, before a sticker was removed to reveal whether the intervention had been found to achieve effective BC. The ‘reveal’ was intended to keep the activity engaging, reminiscent of a game. Each workshop finished with a group discussion of participants’ overall impressions of the interventions and any conflicting preferences.

Workshop data (transcribed from annotated materials and checked for completeness against the audio recordings) were analysed using design questions (see Table 1). The questions were based on the data extraction tool created for the SR (Riddell et al. 2020), which drew on social semiotic theory to deconstruct prior interventions’ design decisions and components. Findings from each workshop were tabulated, and campaign attributes highlighted by participants as desirable in all three workshops were identified, alongside any attributes that provoked differences of opinion. This process yielded design principles, based on participants’ commonly agreed attributes, and a set of conflicting requirements.

Table 1. Design questions distilled from the systematic review.

3.2.2. Codesign workshop

In the Codesign Workshop, 13 gay, bisexual, and other men who have sex with men (GBMSM) took part (11 who had attended the Exhibition Workshops and two new participants). They used bespoke design tools underpinned by BC techniques and design questions (see ) to design social marketing interventions.

An icebreaker activity invited participants to introduce themselves and reflect on the interventions critiqued in the Exhibition Workshops (on display), highlighting one memorable intervention. Three statements used in the previous workshops were reintroduced (see ), and participants were asked to choose a statement that resonated with them. This split participants into three groups, each supported by a PD researcher and a sexual health expert. The statements were then used as prompts for the groups to build personas based on their collective experiences without explicitly talking about themselves.

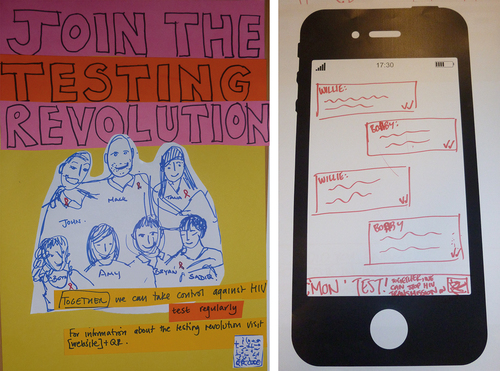

The design principles and conflicting requirements distilled from the Exhibition Workshops were introduced to stimulate discussion among the groups. The design activity was structured using questions (see Table 1) printed on a tool (see ), which the PD researcher annotated to capture the conversation. BC techniques identified in the SR were printed on cards (see ), supported by applied examples drawn from the Exhibition Workshops. A storyboard template asked participants to think through how their persona would engage with the intervention and what its behavioural impact would be. PD researchers supported participants to create a visual prototype of their intervention (see ).

3.2.3. Intervention designs

‘Join the Testing Revolution’ aimed to change behaviour by emphasising collective responsibility and caring for others through a personal commitment to regular testing. This included the message that, through regular testing, HIV could be eradicated. Participants wanted to target a broader audience beyond MSM, so the intervention featured a diverse friendship group with various genders, ethnicities, and body types. The intervention would be delivered as posters on public transport, on social media, and in pubs over an extended period, with QR codes linking to more targeted information.

‘C’Mon Test’ [local slang meaning Come on, Test] provided a light-hearted way of conveying the need for regular testing, alongside practical information on where and how to test. Posters featured a running dialogue between two characters, visually similar to social media messaging. The intervention was longitudinal, with the characters’ story and relationship unfolding over a series of iterations. By placing posters in public places (rather than only in venues GBMSM were likely to frequent), participants aimed to remove the stigma of HIV testing by communicating the positive consequences of knowing your status.

The third intervention (untitled) provided a message of hope for those who may be afraid of a potential diagnosis, represented by imagery of a hand reaching out. Messages were intended to dispel deep-rooted beliefs about HIV, using relatable examples of real testing experiences and a reassuring tone about a positive outcome regardless of the test result (i.e. a focus on the treatments available). The intervention was suitable for various settings (e.g. schools, prisons, and community venues), thus normalising testing without emphasising the risk to specific populations. Interestingly, this group included older participants than the other groups, with lived experience of the negatively framed social marketing interventions of the 1980s (Kershaw 2018).

3.2.4. Analysis and integration

Data from the Codesign Workshop included the transcribed workshop tools, audio recordings (used to check for additional content not annotated on tools), and prototypes. Each PD researcher produced a narrative description of their group’s intervention, including the rationale for design decisions. Transcribed tools were tabulated by question so responses across groups could be compared, and intervention designs were compared to the design principles and conflicting requirements distilled from Exhibition Workshops. Validated design principles and new design requirements common to the groups were combined to form a list of undisputed requirements (). All but one of the conflicting requirements were resolved, as the groups made the same decisions about what would work best in practice, as evidenced in their intervention designs. The remaining conflict was whether the intervention should explicitly target MSM. Two groups chose to target the general population to avoid stigmatising MSM as at risk of HIV, with one group explicitly targeting MSM. This was resolved through discussion in the Optimisation Workshops, resulting in this requirement within the intervention brief:

Intervention materials should provide clear information about the consequences of undiagnosed HIV infection and HIV transmission risks; this should be conveyed in ways which do not stigmatise all MSM and clarify that it is behaviours and not identity that confers HIV risk.

Social scientists conducted secondary analysis of tabulated data and narrative descriptions to identify: i) marketing mix key components: product, place, promotion, and price, producing a narrative summary; ii) BC techniques applied (using the itemised BC Taxonomy devised by Michie et al. (2013)). Individual BC techniques and examples of their application identified from the SR and PD findings were discussed by health practitioners and MSM to explore acceptability, viability, and optimal application in Optimisation Workshops. These workshops additionally gathered data on systemic barriers and facilitators to implementation.

Consistent use of three underpinning theories (social marketing, social semiotics, and BC) enabled data to be integrated and compared. This highlighted differences between the SR and PD findings (McDaid et al. 2019; Langdridge et al. 2020; Riddell et al. 2020). PD participants’ negative views of explicitly sexual and naked imagery (i.e. they disliked these interventions and thought this kind of imagery would not encourage BC) could be viewed as contradictory, considering the common use of such imagery in interventions judged effective within the existing literature. In addition, participants disliked interventions that included stereotypes of MSM or used attractive actors with unrealistic physiques, despite these interventions being judged effective in previous studies.

3.2.5. Output

Findings of the SR, Exhibition, Codesign, and Optimisation Workshops, all underpinned by social marketing, social semiotic, and BC theories, were integrated into an evidence-based and theory-informed brief for commissioning. The brief described: background and research process, overall requirements (including behavioural goal, tone, mode of delivery, visual design, placement, and duration), detailed intervention requirements, and issues for implementation. Based on the insight gained into PD participants’ varying HIV literacy and the idea proposed in the ‘C’Mon Test’ prototype, a sequential intervention was outlined, aiming to build knowledge over time, with detailed proposed content for each stage. The brief was subsequently translated into an invitation to tender by health improvement specialists from the service, and a design agency was commissioned.

4. Discussion

Our discussion will consider how evidence was integrated through activities and tools, reflecting on the resulting impact on participation and the outputs of PD and the interdisciplinary process.

4.1. Validating the evidence base for the local context

Exhibition Workshops invited participants to share their knowledge of the context and critique interventions from the SR. This was carefully framed, explaining that placards contained researchers’ interpretations, and that we sought participants’ views as experts in the context. This missing perspective was identified as a limitation of the SR, in that the researchers were not representative of the intended recipients (McDaid et al. 2019).

PD participants’ negative views on the use of explicitly sexual and naked imagery, stereotyping, and attractive models may reflect that 12 of the 19 interventions reviewed were from outside the UK and dated from 2009 to 2016, and so may not reflect local participants’ current views. The design process and rationale for interventions were rarely reported, leaving us unable to determine whether the consistent use of these aspects in prior interventions was due to evidence of effectiveness (e.g. through pre-testing with intended recipients comparing sexualised and non-sexualised imagery), assumed effectiveness because of common use, or a lack of originality (see Langdridge et al. (2020) for a more nuanced discussion on the use of sexualised imagery in interventions). Our PD findings are supported by Drumhiller et al. (2018), who found MSM prefer images that are not identifiable as MSM, to avoid stigmatising this group as solely affected by HIV.

This highlights the value of integrating PD outputs with other forms of knowledge generated during intervention development and the importance of including PD as one component of a multi-stage, multi-method design. SR findings were filtered through PD, outputting validated, updated, and supplemented evidence. Optimisation Workshops gave a further opportunity to iterate findings, discussing contradictory evidence with all stakeholders to determine where priority should be given. The multi-stage process enabled evidence outputs from each stage to form inputs to the next, resulting in an iterated and integrated evidence base validated for the local context.

4.2. Making complex evidence and theory tangible, engaging and informative

The exhibition of intervention materials made complex theory tangible through applied examples. This resonates with the challenge O’Brien et al. (2016) found in conveying BC theory to participants; however, our example illustrates a way to achieve this before codesign activities. Participants responded well to the activity’s playful nature; the ‘reveal’ (of whether the intervention was effective) engaged participants in a rich discussion about whether strategies used in other countries would work for them.

Involvement of team members with sexual health expertise in the workshops was vital in answering participants’ questions and clarifying misconceptions or uncertainties around HIV testing and prognosis. It was an opportunity to appreciate the wide variation in existing knowledge about HIV: the key insight inspiring the sequential intervention design concept. Wider team involvement in the workshops gave them experience of the PD process: witnessing how engaged participants were and understanding the discussion’s nuance and the resulting designs’ rationales.

4.3. Building confidence and critique

Reflecting on the efficacy of the Exhibition Workshop format, this initial stage of mutual learning (Bratteteig et al. 2013) provided participants with a space to consider their preferences concerning design strategies and theory, and gave them a shared language and reference points to enable collaboration in the Codesign Workshop. Researchers shared technical knowledge about different design strategies drawn from the SR, and participants related this to their own lived experiences to articulate their design requirements. The decision to repeat workshop one with three smaller groups was crucial for nurturing this capacity building and developing participants’ confidence in an intimate environment. By separating the Exhibition and Codesign activities into two distinct stages, participants had time to reflect on what they had seen and learned before applying this knowledge in a codesign setting.

By introducing prior evidence, it could be argued that we led participants rather than being open to their views and ideas. However, participants’ perspectives take precedence; where this is made clear to participants through careful facilitation, prior evidence can be useful to open up and deepen debate. Furthermore, it provided participants with an opportunity to share fresh insight or dispute the validity of evidence generated in other contexts. The facilitator needs to encourage this kind of critique, presenting evidence with the caveat that it may or may not be relevant. We would argue that evidence empowered participants rather than led them. By informing them about different design strategies and theories, providing practical information and the latest advice about testing, and breaking down the process into clear steps, we empowered participants to design interventions.

PD was not intended to produce the final design, instead being used to elicit requirements. It could be argued intended intervention recipients should be involved in PD following development of the evidence-based and theory-informed brief. We argue this earlier participation is vital to validate the evidence base for the local context and ensure intended recipients have a role in setting the agenda.

4.4. Integrating the outputs of PD with other forms of knowledge

Three underpinning theories (BC taxonomy, social marketing, social semiotics) functioned as ‘anchoring mechanisms’ (Hermsen et al. 2016) and ensured the findings of each stage could be separated into their component parts and consistently compared. There is concern in the design field that this approach from behavioural science is reductionist, oversimplifying complex acts to establish the influence of single factors (ibid.). This difference in perspective can be seen in the approaches used in the Codesign and Optimisation Workshops: participants considered intervention design holistically during codesign and discussed BC techniques separately during the Optimisation Workshops. While designers are comfortable with the messiness of the design process (Frauenberger et al. 2015), theory can provide structure to make it more accessible to collaborators from other disciplines (Hermsen et al. 2016). Working collaboratively with social scientists ensured robust theory selection and supported theory to guide the development and evaluation of emerging design ideas (ibid.).

As described by Hagen et al. (Citation2012), evidence-based and theory-informed design artefacts created as inputs for PD workshops (e.g. the exhibition placards and BC cards) also evidence what was considered and taken forward (or not) into codesigned intervention prototypes, and how design decisions were informed by theory and prior evidence. In hindsight, including design artefacts and prototypes as outputs alongside the brief may have helped design agencies to understand and respond to the brief, and would have demonstrated the robustness of the process to support investment.

4.5. Validating constraints

Despite the importance placed on evidence in intervention development, evidence will always need to be weighed against current financial, staff, and service resource constraints to ensure the service can deliver the intervention. Working in partnership from the outset with the sexual health service commissioning the intervention ensured the brief was feasible. Service constraints were explored with participants in the final stage of the Optimisation Workshops; however, we reflect that, just as prior evidence needed to be validated by intended intervention recipients, constraints should have been introduced and discussed before codesign, allowing participants to understand and challenge them. Introducing constraints requires the same careful consideration as prior evidence and theory, to ensure they inspire rather than hamper creativity (Krogh, Markussen, and Bang Citation2015).

4.6. Limitations

While this case study provides an example of how evidence and theory can be applied in PD research, together with the researchers’ reflections on the value this brought, comparative research would be required to establish whether this approach results in enhanced outcomes from PD.

5. Conclusions

As PD is increasingly applied in public health contexts, it is necessary to consider how to draw upon and generate compelling evidence to demonstrate to funders, policy makers, and practitioners that proposed intervention designs will have the desired outcomes. This article demonstrated how evidence and theory can be applied in PD, and considered the value this brought in validating an evidence base locally, engaging and informing participants, building confidence, and providing a shared language for codesign. We reflected on the value of theory to support integration of evidence produced through PD with other forms of knowledge, how the multi-stage, multi-method approach resulted in an iterated and integrated evidence base, and how design artefacts can evidence the robustness of the process.

While the tools and activities presented are bespoke to the context, this article offers researchers possible strategies for drawing on prior evidence and theory within PD. There is an opportunity for further debate on using and producing evidence through PD. Given the pressing need for innovation in tackling complex public health challenges, new approaches that involve people with lived experience alongside published knowledge of what can work are vital. By collaborating with and learning from other disciplinary perspectives, we can strengthen PD approaches and secure investment for implementation.

Ethical approval

Ethical approval for the codesign was granted by the University of Glasgow Ethics Committee (application no. 400170069).

Acknowledgments

We would like to thank our workshop participants for sharing their experiences and ideas.

Disclosure statement

No potential competing interest was reported by the authors.

Additional information

Funding

References

- Allan, M., R. M. Young, and T. P. Moran. 1989. “Design Rationale: The Argument Behind the Artifact.” ACM SIGCHI Bulletin 20 (SI): 247–252. doi:10.1145/67450.67497.

- Bate, P., and G. Robert. 2006. “Experience-Based Design: From Redesigning the System Around the Patient to Co-Designing Services with the Patient.” BMJ Quality & Safety 15 (5): 307–310. doi:10.1136/qshc.2005.016527.

- Bazzano, A. N., and J. Martin. 2017. “Designing Public Health: Synergy and Discord.” The Design Journal 20 (6): 735–754. doi:10.1080/14606925.2017.1372976.

- Bowen, S., K. McSeveny, E. Lockley, D. Wolstenholme, M. Cobb, and A. Dearden. 2013. “How Was It for You? Experiences of Participatory Design in the UK Health Service.” CoDesign 9 (4): 230–246. doi:10.1080/15710882.2013.846384.

- Bratteteig, T., K. Bødker, Y. Dittrich, P. H. Mogensen, and J. Simonsen. 2013. “Methods: Organising Principles and General Guidelines for Participatory Design Projects.” In Routledge International Handbook of Participatory Design, 117–144. New York: Routledge.

- Cash, P. J., C. G. Hartlev, and C. B. Durazo. 2017. “Behaviour Change and Design.” Design Studies 48: 96–128. doi:10.1016/j.destud.2016.10.001.

- Donetto, S., P. Pierri, V. Tsianakas, and G. Robert. 2015. “Experience Based Co-Design and Healthcare Improvement: Realizing Participatory Design in the Public Sector.” The Design Journal 18 (2): 227–248. doi:10.2752/175630615X14212498964312.

- Driedger, S. M., A. Kothari, J. Morrison, M. Sawada, E. J. Crighton, and I. D. Graham. 2007. “Using Participatory Design to Develop (Public) Health Decision Support Systems Through GIS.” International Journal of Health Geographics 6 (1): 53. doi:10.1186/1476-072X-6-53.

- Drumhiller, K., A. Murray, Z. Gaul, T. M. Aholou, M. Y. Sutton, and J. Nanin. 2018. “‘We Deserve Better!’: Perceptions of HIV Testing Campaigns Among Black and Latino MSM in New York City.” Archives of Sexual Behavior 47 (1): 289–297. doi:10.1007/s10508-017-0950-4.

- Evans, W. D. 2006. “What Social Marketing Can Do for You.” BMJ 333 (7562): 299.1. doi:10.1136/bmj.333.7562.299.

- Faden, R. R. 1987. “Ethical Issues in Government Sponsored Public Health Campaigns.” Health Education Quarterly 14 (1): 27–37. doi:10.1177/109019818701400105.

- Flowers, P., C. Estcourt, P. Sonnenberg, and F. Burns. 2017. “HIV Testing Intervention Development Among Men Who Have Sex with Men in the Developed World.” Sexual Health 14 (1): 80–88. doi:10.1071/SH16081.

- Flowers, P., J. Riddell, N. Boydell, G. Teal, N. Coia, and L. McDaid. 2019. “What are Mass Media Interventions Made Of? Exploring the Active Content of Interventions Designed to Increase HIV Testing in Gay Men Within a Systematic Review.” British Journal of Health Psychology. doi:10.1111/bjhp.12377.

- Frauenberger, C., J. Good, G. Fitzpatrick, and O. Sejer Iversen. 2015. “In Pursuit of Rigour and Accountability in Participatory Design.” International Journal of Human Computer Studies 74: 93–106. doi:10.1016/j.ijhcs.2014.09.004.

- Hagen, P., P. Collin, A. Metcalf, M. Nicholas, K. Rahilly, and N. Swainston. 2012. Participatory Design of Evidence-Based Online Youth Mental Health Promotion, Prevention, Early Intervention and Treatment. Melbourne: Young and Well Cooperative Research Centre.

- Hastings, G. 2007. Social Marketing: Why Should the Devil Have All the Best Tunes? Amsterdam: Butterworth-Heinemann.

- Hermsen, S., R. J. Renes, and J. Frost. 2014. “Persuasive by Design: A Model and Toolkit for Designing Evidence-Based Interventions.” In Proceedings of the CHI Sparks 2014 Conference, 74–77. The Hague University of Applied Sciences.

- Hermsen, S., R. van der Lugt, S. Mulder, and R. J. Renes. 2016. “How I Learned to Appreciate Our Tame Social Scientist: Experiences in Integrating Design Research and the Behavioural Sciences.” DRS2016: Future-Focused Thinking 4. doi:10.21606/drs.2016.17.

- Jewitt, C., and R. Oyama. 2001. “Visual Meaning: A Social Semiotic Approach.” In Handbook of Visual Analysis, edited by T. Van Leeuwen and C. Jewitt, 134–156. London: Sage Publications.

- Kershaw, H. 2018. “Remembering the ‘Don’t Die of Ignorance’ Campaign.” London School of Hygiene and Tropical Medicine. https://placingthepublic.lshtm.ac.uk/2018/05/20/remembering-the-dont-die-of-ignorance-campaign/

- Koskinen, I., J. Zimmerman, T. Binder, J. Redstrom, and S. Wensveen. 2011. Design Research Through Practice: From the Lab, Field and Showroom. Boston, MA: Morgan Kaufmann.

- Krogh, P. G., T. M. Markussen, and A. L. Bang. 2015. “Ways of Drifting—Five Methods of Experimentation in Research Through Design.” In ICoRD’15 – Research into Design Across Boundaries, edited by A. Chakrabarti, Vol. 1, 39–50. Bangalore, India, January 7–9. New Delhi: Springer. doi:10.1007/978-81-322-2232-3_4.

- Langdridge, D., P. Flowers, J. Riddell, N. Boydell, G. Teal, N. Coia, and L. McDaid. 2020. “A Qualitative Examination of Affect and Ideology Within Mass Media Interventions to Increase HIV Testing with Gay Men Garnered from a Systematic Review.” British Journal of Health Psychology, August: 1–29. doi:10.1111/bjhp.12461.

- Lockton, D., D. Harrison, and N. A. Stanton. 2010. “The Design with Intent Method: A Design Tool for Influencing User Behaviour.” Applied Ergonomics 41 (3): 382–392. doi:10.1016/j.apergo.2009.09.001.

- Locock, L., G. Robert, A. Boaz, S. Vougioukalou, C. Shuldham, J. Fielden, S. Ziebland, M. Gager, R. Tollyfield, and J. Pearcey. 2014. “Using a National Archive of Patient Experience Narratives to Promote Local Patient-Centred Quality Improvement: An Ethnographic Process Evaluation of ‘Accelerated’ Experience-Based Co-Design.” Journal of Health Services Research & Policy 19 (4): 200–207. doi:10.1177/1355819614531565.

- Luck, R. 2018. “What is It That Makes Participation in Design Participatory Design?” Design Studies 59: 1–8. doi:10.1016/j.destud.2018.10.002.

- Macdonald, A. S., G. Teal, C. Bamford, and P. J. Moynihan. 2012. “Hospitalfoodie: An Inter-Professional Case Study of the Redesign of the Nutritional Management and Monitoring System for Vulnerable Older Hospital Patients.” Quality in Primary Care 20 (3): 169–177.

- McDaid, L., J. Riddell, G. Teal, N. Boydell, N. Coia, and P. Flowers. 2019. “The Effectiveness of Social Marketing Interventions to Improve HIV Testing Among Gay, Bisexual and Other Men Who Have Sex with Men: A Systematic Review.” AIDS and Behavior. doi:10.1007/s10461-019-02507-7.

- Michie, S., M. Richardson, M. Johnston, C. Abraham, J. Francis, W. Hardeman, M. P. Eccles, J. Cane, and C. E. Wood. 2013. “The Behavior Change Technique Taxonomy (V1) of 93 Hierarchically Clustered Techniques: Building an International Consensus for the Reporting of Behavior Change Interventions.” Annals of Behavioral Medicine 46 (1): 81–95. doi:10.1007/s12160-013-9486-6.

- Moffatt, S., M. White, J. Mackintosh, and D. Howel. 2006. “Using Quantitative and Qualitative Data in Health Services Research – What Happens When Mixed Method Findings Conflict?” BMC Health Services Research 6 (1): 28. doi:10.1186/1472-6963-6-28.

- Mummah, S. A., T. N. Robinson, A. C. King, C. D. Gardner, and S. Sutton. 2016. “IDEAS (Integrate, Design, Assess, and Share): A Framework and Toolkit of Strategies for the Development of More Effective Digital Interventions to Change Health Behavior.” Journal of Medical Internet Research 18 (12): e317. doi:10.2196/jmir.5927.

- Niedderer, K., R. Cain, S. Clune, D. Lockton, G. Ludden, J. Mackrill, and A. Morris. 2014. Creating Sustainable Innovation Through Design for Behaviour Change: Full Project Report.

- Noar, S. M., C. N. Benac, and M. S. Harris. 2007. “Does Tailoring Matter? Meta-Analytic Review of Tailored Print Health Behavior Change Interventions.” Psychological Bulletin 133 (4): 673–693. doi:10.1037/0033-2909.133.4.673.

- O’Brien, N., B. Heaven, G. Teal, E. H. Evans, C. Cleland, S. Moffatt, F. F. Sniehotta, M. White, J. C. Mathers, and P. Moynihan. 2016. “Integrating Evidence from Systematic Reviews, Qualitative Research, and Expert Knowledge Using Co-Design Techniques to Develop a Web-Based Intervention for People in the Retirement Transition.” Journal of Medical Internet Research 18 (8). doi:10.2196/jmir.5790.

- Oliver, S., L. Clarke-Jones, R. Rees, R. Milne, P. Buchanan, J. Gabbay, G. Gyte, A. Oakley, and K. Stein. 2004. “Involving Consumers in Research and Development Agenda Setting for the NHS: Developing an Evidence-Based Approach.” Health Technology Assessment 8 (15). doi:10.3310/hta8150.

- Poggenpohl, S., and K. Satō. 2009. Design Integrations: Research and Collaboration. Bristol, UK: Intellect Books.

- Riddell, J., G. Teal, P. Flowers, N. Boydell, N. Coia, and L. McDaid. 2020. “Mass Media and Communication Interventions to Increase HIV Testing Among Gay and Other Men Who Have Sex with Men: Social Marketing and Visual Design Component Analysis.” Health 26 (3): 338–360. doi:10.1177/1363459320954237.

- Rousseau, N., K. M. Turner, E. Duncan, A. O’Cathain, L. Croot, L. Yardley, and P. Hoddinott. 2019. “Attending to Design When Developing Complex Health Interventions: A Qualitative Interview Study with Intervention Developers and Associated Stakeholders.” PLoS ONE 14 (10): 1–20. doi:10.1371/journal.pone.0223615.

- Skivington, K., L. Matthews, S. A. Simpson, P. Craig, J. Baird, J. M. Blazeby, K. A. Boyd, N. Craig, D. P. French, E. McIntosh, and M. Petticrew. 2021. “A New Framework for Developing and Evaluating Complex Interventions: Update of Medical Research Council Guidance.” BMJ 374: n2061. doi:10.1136/bmj.n2061.

- Sleeswijk Visser, F. 2009. “Bringing the Everyday Life of People into Design.” Delft, The Netherlands: Delft University of Technology.

- Stappers, P. J. 2007. “Doing Design as a Part of Doing Research.” In Design Research Now: Essays and Selected Projects, edited by R. Michel, 81–91. Basel: Birkhäuser Verlag AG.

- Stead, M., R. Gordon, K. Angus, and L. McDermott. 2007. “A Systematic Review of Social Marketing Effectiveness.” Health Education 107 (2): 126–191. doi:10.1108/09654280710731548.

- Tsianakas, V., G. Robert, A. Richardson, R. Verity, C. Oakley, T. Murrells, M. Flynn, and E. Ream. 2015. “Enhancing the Experience of Carers in the Chemotherapy Outpatient Setting: An Exploratory Randomised Controlled Trial to Test Impact, Acceptability and Feasibility of a Complex Intervention Co-Designed by Carers and Staff.” Supportive Care in Cancer 23 (10): 3069–3080. doi:10.1007/s00520-015-2677-x.

- van Essen, A., S. Hermsen, and R. J. Renes. 2016. “Developing a Theory-Driven Method to Design for Behaviour Change: Two Case Studies.” In DRS2016: Future-Focused Thinking Design Research Society 50th Anniversary Conference. doi:10.21606/drs.2016.71.

- Villalba, C., A. Jaiprakash, J. Donovan, J. Roberts, and R. Crawford. 2019. “Testing Literature-Based Health Experience Insight Cards in a Healthcare Service Co-Design Workshop.” CoDesign. doi:10.1080/15710882.2018.1563617.

- WHO. 2020. “Ten Threats to Global Health in 2019.” Accessed April 21. https://www.who.int/news-room/feature-stories/ten-threats-to-global-health-in-2019