Abstract

The present commentary aims to extend the work conducted by Karhulahti et al. (2022) and, more specifically, to follow one of the research directions that they suggested but did not preregister: using network analysis (an item-based psychometric approach) to reinforce or, in contrast, to nuance the view that the four gaming disorder measurement tools that they scrutinized actually assess ontologically distinct constructs. Thanks to the open science approach endorsed by Karhulahti and colleagues, we were able to perform a network analysis that encompassed all items from the four gaming disorder assessment tools used by the authors. Because of the very high density of connections among all available items, this analysis suggests that these instruments are not reliably distinct and that their content strongly overlaps, meaning that they measure substantially homogeneous constructs after all. Although not aligned with the main conclusions drawn by Karhulahti and colleagues, these exploratory results make theoretical sense and call for further elaboration of what is meant by ‘ontological diversity’ in the context of gaming disorder assessment and diagnosis.

Karhulahti et al. (2022) are to be complimented for their recent article addressing ontological diversity among gaming disorder (GD) measurements. Their research is timely because, although GD has been recognized as a mental condition and listed in the ICD-11 (see Billieux et al., 2021; Reed et al., 2022), uncertainty still abounds regarding its optimal identification and diagnosis (King et al., 2020). In particular, available epidemiological studies have reported disparate prevalence rates (from 1% to more than 10–15%; see Karhulahti et al., 2022). This wide variation arises mainly because GD research has relied on heterogeneous assessment and sampling strategies, which complicates comparisons among studies. Improving and harmonizing assessment strategies is thus an absolute priority in this field (Carragher et al., 2022; Király et al., 2022).

Karhulahti and colleagues postulated that ontological diversity in GD measurement is likely to account for the existing variation in reported prevalence rates, the potential (lack of) overlap between measurement instruments, or differential relationships with health-related indicators (e.g., psychopathological symptoms, disability). They used the new Peer Community in Registered Reports platform to test their research questions through a well-designed, preregistered study conducted in a nationally representative sample. They also shared all their code, data, and other relevant materials via the following Open Science Framework (OSF) repository: https://osf.io/v4cqd/. Through this novel, rigorous, and open approach, Karhulahti and colleagues showed that four different GD measurement tools (i.e., GAS7, IGDT10, GDT, and THL1; see the original paper for more details), which the authors describe as having ‘developed from diverse ontological grounds’, produce significantly different prevalence rates and varying degrees of group overlap depending on the tool used (Note 1). From these results, they recommend that researchers ‘clearly define their construct of interest’ in future GD-related empirical studies and meta-analyses. This conclusion aligns with the view that (problematic) technology usage scales too often measure poorly defined constructs (see Davidson et al., 2022, for a critical demonstration and discussion).

Here, we aim to extend the work conducted by Karhulahti and colleagues and, more specifically, to follow one of the research directions that they suggested (but did not preregister), that is, to ‘chart further ontological differences and similarities between constructs and/or instrument’ by using an item-based network model. Thanks to the open science approach endorsed by Karhulahti and colleagues, we were able to perform a network analysis that encompassed all items of the four GD assessment tools used by the authors (i.e., GAS7, IGDT10, GDT, and THL1).

Network analysis is a data-driven approach designed to investigate relationships (‘edges’) between variables (‘nodes’). From a psychopathological point of view, this approach no longer considers syndromes or disorders as latent constructs, but rather as networks of symptoms that dynamically interact (Borsboom, 2017). Crucially for our present aim, such analysis can also be used to detect potential communities among the items incorporated in the network, that is, subsets of items that are more strongly connected to one another than to the rest of the network (community detection analysis; see Traag & Bruggeman, 2009).
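To make nodes and edges concrete, the Python sketch below estimates an item network as a matrix of partial correlations, where each edge is the association between two items after controlling for all other items, obtained from the inverse of the covariance matrix. It is a minimal sketch under stated assumptions: items are treated as continuous, no regularization is applied, and the three simulated ‘items’ are hypothetical. Published psychometric networks usually rely on regularized estimators suited to ordinal data, and this sketch does not reproduce the analysis available at https://osf.io/p4x6t/.

```python
import random
from math import sqrt


def covariance_matrix(data):
    """Sample covariance matrix for rows = respondents, columns = items."""
    n, p = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(p)]
    return [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in data) / (n - 1)
             for j in range(p)]
            for i in range(p)]


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    size = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(size)]
           for i, row in enumerate(matrix)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [value / scale for value in aug[col]]
        for r in range(size):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[size:] for row in aug]


def partial_correlation_network(data):
    """Edge weight (i, j) = partial correlation of items i and j given all other items."""
    precision = invert(covariance_matrix(data))
    p = len(precision)
    return [[0.0 if i == j else -precision[i][j] / sqrt(precision[i][i] * precision[j][j])
             for j in range(p)]
            for i in range(p)]


# Hypothetical chain of three items: item 0 -> item 1 -> item 2.
rng = random.Random(1)
respondents = []
for _ in range(2000):
    item0 = rng.gauss(0, 1)
    item1 = item0 + rng.gauss(0, 1)
    item2 = item1 + rng.gauss(0, 1)
    respondents.append([item0, item1, item2])

network = partial_correlation_network(respondents)
# Population values for this chain: edge 0-1 = 0.5, edge 1-2 ≈ 0.71, edge 0-2 = 0
```

Items 0 and 2 are correlated in the raw data (through item 1), yet their estimated edge is close to zero: this is the sense in which a network separates direct from indirect associations.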

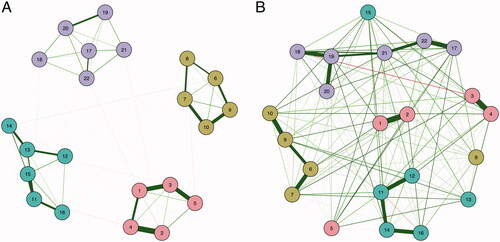

For example, in a recent study, Baggio et al. (2022) used network analysis to show that different types of problematic online behaviors (i.e., online gaming, cyberchondria, problematic cybersex, problematic online shopping, problematic use of social networking sites, and problematic online gambling) constitute distinct entities (or constructs) with little overlap. According to Baggio et al. (2022), one implication of this finding is that ‘Internet addiction’ does not constitute a unitary umbrella construct encompassing different problematic online behaviors, and that researchers should instead focus on the specific activities performed online. The first network in Figure 1 was simulated to illustrate high modularity (i.e., strong within-community relationships and weak between-community relationships), implying that the (hypothesized) distinctness between entities is reliable, in line with the network results obtained by Baggio et al. (2022). In contrast, the second network in Figure 1 was simulated to illustrate low modularity (i.e., comparable within-community and between-community relationships), implying that the (hypothesized) distinctness between entities is unreliable.

Figure 1. Simulated network analyses. The first network was simulated to illustrate reliable distinctness between assumed entities (high modularity, i.e., strong within-community relationships and weak between-community relationships). The second network was simulated to illustrate unreliable distinctness between assumed entities (low modularity, i.e., comparable within-community and between-community relationships). Circles represent items, and circle color indicates community membership. Green lines represent positive pairwise associations between items, whereas red lines represent negative pairwise associations. Line thickness reflects the magnitude of the association between items. The approach used to simulate these two networks and the related code are available from https://osf.io/p4x6t/.
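Modularity (Q) formalizes the contrast between the two simulated networks: it compares the weight of within-community edges with what would be expected if edges were spread at random, given each node's total connection strength. The Python sketch below computes Newman's modularity for a weighted, undirected network and applies it to two hypothetical six-item networks split into the same two communities. The edge weights (0.5 within and 0.05 between, versus 0.3 everywhere) are illustrative values, not the parameters used to simulate Figure 1, and the sketch handles only non-negative weights, whereas the approach of Traag & Bruggeman (2009) also accommodates negative edges.

```python
def modularity(weights, labels):
    """Newman's modularity for an undirected network with non-negative edge weights.

    Q = (1 / 2m) * sum over pairs (i, j) in the same community of
        [w_ij - k_i * k_j / 2m], where k_i is node strength and 2m the total strength.
    """
    n = len(weights)
    strength = [sum(row) for row in weights]
    two_m = sum(strength)
    q = 0.0
    for i in range(n):
        for j in range(n):
            if labels[i] == labels[j]:
                q += weights[i][j] - strength[i] * strength[j] / two_m
    return q / two_m


def two_block_network(size, within, between):
    """Two equal communities of `size` items with uniform within/between edge weights."""
    labels = [0] * size + [1] * size
    weights = [[0.0 if i == j else (within if labels[i] == labels[j] else between)
                for j in range(2 * size)]
               for i in range(2 * size)]
    return weights, labels


# Hypothetical weights: strong within / weak between versus uniform everywhere.
distinct, labels = two_block_network(3, within=0.5, between=0.05)
homogeneous, _ = two_block_network(3, within=0.3, between=0.3)
q_distinct = modularity(distinct, labels)        # about 0.37
q_homogeneous = modularity(homogeneous, labels)  # about -0.1
```

The same partition yields a Q of about 0.37 in the first network and a negative value in the second, which is why a low Q for a hypothesized partition (e.g., one community per instrument) argues against distinct communities.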

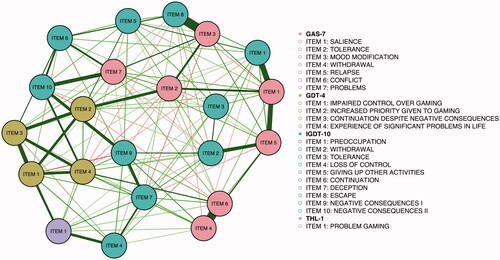

Against this background, a network analysis of the data provided by Karhulahti and colleagues could further demonstrate, and thus reinforce, the view that the four GD measurement tools that they scrutinized actually assess distinct constructs. If this were the case, the network of GD items should resemble the first network in Figure 1 (high modularity) more than the second (low modularity), with items from a single measurement tool (e.g., IGDT10) potentially clustering into a distinct community. Yet, as displayed in Figure 2, the network analysis that we performed on the data provided by Karhulahti and colleagues instead suggests that the various GD measurement tools are not reliably distinct and that their content strongly overlaps. Because of the very high density of connections among all available GD items, the community detection analysis (see Traag & Bruggeman, 2009) failed to identify reliable communities of items (modularity value <0.3; see Clauset et al., 2004), which does not support the account of multiple distinct constructs. The complete description of the analysis that we computed and the code to reproduce it are available from the following OSF link: https://osf.io/p4x6t/.

Figure 2. Network analyses. The network was estimated from the data provided by Karhulahti et al. (2022). Its graphical behavior resembles the second network in Figure 1, illustrating unreliable distinctness between assumed entities (low modularity, i.e., comparable within-community and between-community relationships). Circles represent items, and circle color indicates community membership. Green lines represent positive pairwise associations between items, whereas red lines represent negative pairwise associations. Line thickness reflects the magnitude of the association between items. The approach used to estimate this network and the related code are available from https://osf.io/p4x6t/.
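Community detection searches for the partition of nodes that maximizes modularity, and the result can then be judged against the rule of thumb reported by Clauset et al. (2004), under which values above about 0.3 indicate meaningful community structure. The Python sketch below makes this logic explicit by searching every possible partition, which is feasible only for a handful of nodes. It is not the approach described by Traag & Bruggeman (2009), which optimizes a related quality function heuristically and also handles negative edges. The two six-node networks (two triangles joined by a single edge, and a fully connected network with equal weights) are hypothetical.

```python
def modularity(weights, labels):
    """Newman's modularity for an undirected network with non-negative edge weights."""
    strength = [sum(row) for row in weights]
    two_m = sum(strength)
    n = len(weights)
    return sum(weights[i][j] - strength[i] * strength[j] / two_m
               for i in range(n) for j in range(n)
               if labels[i] == labels[j]) / two_m


def set_partitions(items):
    """Yield every partition of `items` into non-empty blocks (Bell-number many)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block in turn, then into a block of its own.
        for k in range(len(partition)):
            yield partition[:k] + [[first] + partition[k]] + partition[k + 1:]
        yield [[first]] + partition


def best_partition(weights):
    """Exhaustive modularity maximization; only practical for very small networks."""
    n = len(weights)
    best_q, best_blocks = float("-inf"), None
    for blocks in set_partitions(list(range(n))):
        labels = [0] * n
        for index, block in enumerate(blocks):
            for node in block:
                labels[node] = index
        q = modularity(weights, labels)
        if q > best_q:
            best_q, best_blocks = q, blocks
    return best_q, best_blocks


def from_edges(n, edges):
    """Symmetric weight matrix from (i, j, weight) triples."""
    weights = [[0.0] * n for _ in range(n)]
    for i, j, w in edges:
        weights[i][j] = weights[j][i] = w
    return weights


RELIABLE_Q = 0.3  # rule-of-thumb cutoff reported by Clauset et al. (2004)

clustered = from_edges(6, [(0, 1, 1), (0, 2, 1), (1, 2, 1),
                           (3, 4, 1), (3, 5, 1), (4, 5, 1), (2, 3, 1)])
dense = from_edges(6, [(i, j, 1) for i in range(6) for j in range(i + 1, 6)])

q_clustered, blocks_clustered = best_partition(clustered)  # Q = 5/14, about 0.36
q_dense, blocks_dense = best_partition(dense)              # Q about 0: one community
print(q_clustered > RELIABLE_Q, q_dense > RELIABLE_Q)      # True False
```

In the clustered network, the best partition recovers the two triangles with Q above 0.3; in the densely and evenly connected network, no split beats keeping every node together, which is the kind of pattern that a modularity value below 0.3 signals.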

The present results call into question the view that instruments such as GAS7, IGDT10, GDT, and THL1 measure separate constructs. Although not aligned with the main conclusions drawn by Karhulahti et al. (2022), these exploratory, non-preregistered results make theoretical sense and call for further elaboration of what is meant by ‘ontological diversity’.

In our view, exploring ontological diversity in GD measurement primarily requires taking into account the conceptual and etiological frameworks that have guided the development of these instruments. Crucially, research on excessive online behaviors has often been criticized for applying a confirmatory approach, which consisted of recycling existing criteria to define GD, these criteria themselves being derived from those used to define substance use disorders (Billieux et al., 2015; van Rooij et al., 2018). This is typically the case for measurement instruments such as the GAS7 or the IGDT10, which are clearly built on common grounds in terms of the symptoms covered (e.g., tolerance, withdrawal). This confirmatory approach to assessment and diagnosis has proven problematic, as the criteria used to define substance use disorders are not all valid for defining GD (Castro-Calvo et al., 2021; Charlton & Danforth, 2007; Kardefelt-Winther et al., 2017). The GDT, although similarly anchored within a substance use disorder framework (GD is listed as a “disorder due to addictive behaviours” in ICD-11), follows a more conservative approach and only includes criteria that assess dysregulated or functionally impairing gaming patterns. The IGDT10, GAS7, and GDT thus share an important ontological ground: they all aim to measure an addictive disorder. Far from being unproblematic from a theoretical point of view, this shared ground reflects the fact that the field has too often overlooked alternative etiological models that could account for problematic video-gaming symptoms (Kardefelt-Winther, 2014; Starcevic & Aboujaoude, 2017).

Without a doubt, the heterogeneity in prevalence and group overlap identified by Karhulahti and colleagues is striking and further emphasizes that the assessment and screening of GD need real improvement and harmonization (see also Carragher et al., 2022). Yet, we contend that the prevalence and overlap results reported by Karhulahti and colleagues reflect diversity in scoring methods or diagnostic systems rather than construct-related ontological diversity per se. For example, ICD-11 follows a monothetic approach (all criteria have to be present to diagnose GD, which is not the case in polythetic DSM-based approaches) and does not include overpathologizing criteria such as tolerance (see Castro-Calvo et al., 2021), thus producing a lower and more realistic prevalence rate. Similarly, the suggested scoring method for the GAS7 (a criterion is endorsed if the answer is “sometimes”) is clearly laxer than that adopted for the IGDT10 (a criterion is endorsed if the answer is “often”), which accounts for the large difference in the prevalence rates observed. When it comes to group overlap, it is crucial to keep in mind that item formulation (or response modality) is likely to produce inconsistent answers from the same participant across two instruments. For example, the “loss of control” criterion refers to “time spent playing” in the IGDT10, but not in the GDT (see Table 1). This makes the two items hardly comparable and suggests that the GDT item has better content validity (it covers “loss of control” more broadly), rather than that the two items measure different constructs.

Table 1. Item formulation differences.
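The scoring argument above can be checked with a few lines of Python. The sketch applies different decision rules to one hypothetical respondent's answers on seven criteria: endorsing a criterion from “sometimes” versus from “often”, and a polythetic rule (at least four criteria) versus a monothetic rule (all criteria). The shared five-point scale, the number of criteria, and the threshold of four are illustrative assumptions, not the official scoring of the GAS7, IGDT10, or GDT, whose response options differ.

```python
# Hypothetical five-point frequency scale, shared here by all scoring rules.
SCALE = ["never", "rarely", "sometimes", "often", "very often"]


def endorsed(answers, endorse_from):
    """Number of criteria answered at or above the endorsement level."""
    cutoff = SCALE.index(endorse_from)
    return sum(SCALE.index(answer) >= cutoff for answer in answers)


def meets_threshold(answers, endorse_from, min_criteria=None):
    """Polythetic rule if `min_criteria` is given; monothetic (all criteria) if None."""
    required = len(answers) if min_criteria is None else min_criteria
    return endorsed(answers, endorse_from) >= required


# One hypothetical respondent answering seven criteria.
respondent = ["sometimes", "sometimes", "sometimes", "sometimes", "often", "rarely", "never"]

lax = meets_threshold(respondent, "sometimes", min_criteria=4)  # True: 5 criteria endorsed
strict = meets_threshold(respondent, "often", min_criteria=4)   # False: 1 criterion endorsed
monothetic = meets_threshold(respondent, "sometimes")           # False: 5 of 7, not all
```

Identical answers thus lead to opposite classifications depending only on the scoring rule, consistent with the argument that scoring rules alone can shift prevalence estimates regardless of the construct the items measure.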

The current commentary aimed to complement the findings obtained by Karhulahti and colleagues in their well-designed study, which combined two advantages: full endorsement of open science principles and a large, representative sample. From a meta perspective, our data-driven commentary also shows how the endorsement of open science practices promotes cumulative and complementary research efforts on a single high-quality data set.

Funding statement

Loïs Fournier is supported by the Swiss National Science Foundation (SNSF) under a Doc.CH Doctoral Fellowship [Grant ID: P000PS_211887]. The SNSF had no role in the study design, collection, analysis, or interpretation of the data, writing the manuscript, or the decision to submit the paper for publication.

Open Scholarship

This article has earned the Center for Open Science badges for Open Data and Open Materials through Open Practices Disclosure. The data and materials are openly accessible at https://osf.io/p4x6t/. To obtain the author's disclosure form, please contact the Editor.

Disclosure statement

The authors have no conflict of interest to declare.

Notes

1 Other issues and results reported in Karhulahti et al. (2022), such as mischievous responding, are beyond the scope of the current commentary and are thus not discussed.

References

- Baggio S, Starcevic V, Billieux J, King DL, Gainsbury SM, Eslick GD, Berle D. 2022. Testing the spectrum hypothesis of problematic online behaviors: A network analysis approach. Addict Behav. 135:107451.

- Billieux J, Schimmenti A, Khazaal Y, Maurage P, Heeren A. 2015. Are we overpathologizing everyday life? A tenable blueprint for behavioral addiction research. J Behav Addict. 4(3):119–123.

- Billieux J, Stein DJ, Castro-Calvo J, Higuchi S, King DL. 2021. Rationale for and usefulness of the inclusion of gaming disorder in the ICD-11. World Psychiatry. 20(2):198–199.

- Borsboom D. 2017. A network theory of mental disorders. World Psychiatry. 16(1):5–13.

- Carragher N, Billieux J, Bowden Jones H, Achab S, Potenza MN, Rumpf H, Long J, Demetrovics Z, Gentile D, Hodgins D, et al. 2022. Brief overview of the WHO Collaborative Project on the Development of New International Screening and Diagnostic Instruments for Gaming Disorder and Gambling Disorder. Addiction. 117(7):2119–2121.

- Castro-Calvo J, King DL, Stein DJ, Brand M, Carmi L, Chamberlain SR, Demetrovics Z, Fineberg NA, Rumpf H, Yücel M, et al. 2021. Expert appraisal of criteria for assessing gaming disorder: An international Delphi study. Addiction. 116(9):2463–2475.

- Charlton JP, Danforth IDW. 2007. Distinguishing addiction and high engagement in the context of online game playing. Comput Human Behav. 23(3):1531–1548.

- Clauset A, Newman MEJ, Moore C. 2004. Finding community structure in very large networks. Phys Rev E Stat Nonlin Soft Matter Phys. 70(6 Pt 2):066111.

- Davidson BI, Shaw H, Ellis DA. 2022. Fuzzy constructs in technology usage scales. Comput Human Behav. 133:107206.

- Kardefelt-Winther D. 2014. A conceptual and methodological critique of internet addiction research: Towards a model of compensatory internet use. Comput Human Behav. 31:351–354.

- Kardefelt-Winther D, Heeren A, Schimmenti A, van Rooij A, Maurage P, Carras M, Edman J, Blaszczynski A, Khazaal Y, Billieux J. 2017. How can we conceptualize behavioural addiction without pathologizing common behaviours? Addiction. 112(10):1709–1715.

- Karhulahti V-M, Vahlo J, Martončik M, Munukka M, Koskimaa R, von Bonsdorff M. 2022. Ontological diversity in gaming disorder measurement: A nationally representative registered report. Addict Res Theory. Ahead of print. 1–11.

- King DL, Chamberlain SR, Carragher N, Billieux J, Stein D, Mueller K, Potenza MN, Rumpf HJ, Saunders J, Starcevic V, et al. 2020. Screening and assessment tools for gaming disorder: A comprehensive systematic review. Clin Psychol Rev. 77:101831.

- Király O, Potenza MN, Demetrovics Z. 2022. Gaming disorder: Current research directions. Curr Opin Behav Sci. 47:101204.

- Király O, Sleczka P, Pontes HM, Urbán R, Griffiths MD, Demetrovics Z. 2017. Validation of the Ten-Item Internet Gaming Disorder Test (IGDT-10) and evaluation of the nine DSM-5 Internet Gaming Disorder criteria. Addict Behav. 64:253–260.

- Pontes HM, Schivinski B, Sindermann C, Li M, Becker B, Zhou M, Montag C. 2021. Measurement and conceptualization of gaming disorder according to the World Health Organization Framework: The development of the Gaming Disorder Test. Int J Ment Health Addict. 19(2):508–528.

- Reed GM, First MB, Billieux J, Cloitre M, Briken P, Achab S, Brewin CR, King DL, Kraus SW, Bryant RA. 2022. Emerging experience with selected new categories in the ICD-11: Complex PTSD, prolonged grief disorder, gaming disorder, and compulsive sexual behaviour disorder. World Psychiatry. 21(2):189–213.

- Starcevic V, Aboujaoude E. 2017. Internet gaming disorder, obsessive-compulsive disorder, and addiction. Curr Addict Rep. 4(3):317–322.

- Traag VA, Bruggeman J. 2009. Community detection in networks with positive and negative links. Phys Rev E Stat Nonlin Soft Matter Phys. 80(3 Pt 2):036115.

- van Rooij AJ, Ferguson CJ, Colder Carras M, Kardefelt-Winther D, Shi J, Aarseth E, Bean AM, Bergmark KH, Brus A, Coulson M, et al. 2018. A weak scientific basis for gaming disorder: Let us err on the side of caution. J Behav Addict. 7(1):1–9.