ABSTRACT

The purpose of this work is to present a novel mode of convergence, complete second-order moment convergence with a rate, which implies almost complete convergence and yields a smaller rate of convergence. This mode is easier to establish than almost complete convergence and performs better for the kernel nonparametric estimators of the density function, the distribution function and the quantile function. A major advantage of the proposed approach is that fewer conditions are imposed on the kernel function, thanks to the use of the mean squared error expression.

1. Introduction

The rate of convergence is an important concept that describes the speed at which a sequence converges to its limit. In this setting, a fundamental question is how fast the convergence is, and many theoretical studies addressing this question have proposed important new results, algorithms and applications. The purpose of this work is to present a novel mode of convergence, namely complete second-order moment convergence with a rate. The novelty lies in the introduction of the complete second-order convergence rate, and we prove several properties of this kind of convergence. Almost complete convergence has been derived from second-order convergence in various contexts (see [Citation1–3] and, more recently, Yu et al. [Citation29]). The concept of complete convergence was introduced by Hsu and Robbins [Citation4] and has since been used by several authors, such as Gut and Stadtmüller [Citation5], Gut [Citation6,Citation7], Li et al. [Citation8], Sung [Citation9], and Sung and Volodin [Citation10]. The interest of this notion lies in the fact that almost complete convergence (a.c.) implies almost sure convergence (a.s.) by the Borel–Cantelli lemma. However, in some situations it is much easier to obtain complete second-order moment convergence (c.s.m.) than almost complete convergence.

As a practical framework, we establish rates of complete second-order moment convergence for the kernel estimators of the probability density, distribution and quantile functions, and we discuss the speed at which these estimators converge. First, many studies using different methods have been proposed for estimating a probability density function. The kernel method is one of the best of these methods: it is convenient and does not require choosing many parameters. Rosenblatt [Citation11] pioneered the class of kernel density estimators, which depend on two parameters, namely the kernel and the bandwidth. For this estimator, convergence in probability was established by Parzen [Citation12]. Habbema et al. [Citation13], Hall and Kang [Citation14], Hall and Wand [Citation15], Ghosh and Chaudhuri [Citation16] and Ghosh and Hall [Citation17] can be consulted for various works on the subject, in particular density estimation with classical kernels. In the case of independent observations, optimal rates of convergence to zero for the mean square error and the bias of kernel estimators have been addressed by several authors under various conditions on the kernel (K) and the density (f). As a contribution, we introduce for the first time a complete second-order moment convergence with a rate, in order to improve the rates of convergence for the bias and the mean square error (MSE) of kernel density estimators. We then obtain the complete convergence of the kernel density estimator under weaker conditions on the density function than those proposed in the literature. As a consequence of complete second-order moment convergence, almost complete convergence is obtained with a better rate.

Second, the proposed mode of convergence is applied to the kernel estimators of the distribution function and of quantiles. For the distribution function, the kernel estimator was proposed by Nadaraya [Citation18], while Parzen [Citation19] revisited the framework of Nadaraya [Citation18] and constructed kernel quantile estimators, for which we establish the rate of almost complete convergence. To the best of our knowledge, this is a new result obtained from the proposed convergence mode. A major advantage of the proposed approach is that fewer conditions are imposed on the kernel function, thanks to the use of the mean squared error expression.

2. Complete second-order moment convergence with a rate

Throughout this paper, real-valued random variables are defined on a fixed probability space $(\Omega, \mathcal{F}, \mathbb{P})$.

Let $(a_n)$ and $(b_n)$ be two sequences of real numbers, and assume that $b_n$ does not vanish beyond a certain rank. We say that $(a_n)$ is dominated by $(b_n)$ if there exist a real number $M$ and an integer $n_0$ such that, for all $n \ge n_0$, we have $|a_n| \le M |b_n|$, and we write $a_n = O(b_n)$.

Definition 2.1

A sequence of random variables $(X_n)_{n \ge 1}$ is said to be complete second-order moment convergent (c.s.m.) to the random variable $X$, with the convergence rate

, if

where

is a sequence of positive numbers. We denote this mode by

The following theorem shows that if $(X_n)$ converges in complete second-order moment to $X$ with a rate

, then it is almost completely convergent with a rate of

Theorem 2.1

If a sequence of random variables $(X_n)$ satisfies

then

And for

,

and the sequence

we obtain

Proof.

Suppose that the hypothesis of Theorem 2.1 holds. Then, by the Markov inequality, we obtain

so

Now,

is a rate of convergence, so

which is equivalent to the following: for each

, there exists

such that for all

we have

. So

which implies
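The Markov step used in this proof can be written out for a generic positive sequence $(v_n)$. This is our own notation: the exact normalisation used in Definition 2.1 and Theorem 2.1 did not survive extraction, so the display below illustrates the mechanism rather than restating the theorem. Applied to the nonnegative variable $(X_n - X)^2$, Markov's inequality gives, for every $\varepsilon > 0$,

```latex
\begin{aligned}
\mathbb{P}\bigl(|X_n - X| > \varepsilon v_n\bigr)
  &= \mathbb{P}\bigl((X_n - X)^2 > \varepsilon^2 v_n^2\bigr)
   \le \frac{\mathbb{E}\bigl[(X_n - X)^2\bigr]}{\varepsilon^2 v_n^2},\\
\sum_{n \ge 1} \frac{\mathbb{E}\bigl[(X_n - X)^2\bigr]}{v_n^2} < \infty
  \;&\Longrightarrow\;
  \sum_{n \ge 1} \mathbb{P}\bigl(|X_n - X| > \varepsilon v_n\bigr) < \infty .
\end{aligned}
```

Summability of the normalised second moments therefore yields almost complete convergence of $X_n - X$ to 0 at the rate $v_n$, for every $\varepsilon > 0$.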

The following proposition gives some elementary calculus rules.

Proposition 2.1

Assume and

where

are real numbers. We have

(1)

(2)

(3)

Proof.

This follows immediately from the following inequality:

We have

then

For

so

We now state two properties that follow from the previous calculus rules.

Corollary 2.1

Assume ,

and

where

is a real number. We have

(1)

(2)

Proof.

This follows directly from Proposition 2.1.
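Calculus rules of this type typically rest on the elementary inequality $(a+b)^2 \le 2a^2 + 2b^2$. As an illustration only (the sequences $(X_n)$, $(Y_n)$, limits $X$, $Y$, constants $\alpha$, $\beta$ and positive weights $(w_n)$ are our own hypothetical notation, not the exact statement of Proposition 2.1), taking expectations gives

```latex
\begin{aligned}
\mathbb{E}\bigl[(\alpha X_n + \beta Y_n - \alpha X - \beta Y)^2\bigr]
  &\le 2\alpha^2\,\mathbb{E}\bigl[(X_n - X)^2\bigr] + 2\beta^2\,\mathbb{E}\bigl[(Y_n - Y)^2\bigr],\\
\sum_{n} w_n\,\mathbb{E}\bigl[(\alpha X_n + \beta Y_n - \alpha X - \beta Y)^2\bigr]
  &\le 2\alpha^2 \sum_{n} w_n\,\mathbb{E}\bigl[(X_n - X)^2\bigr]
     + 2\beta^2 \sum_{n} w_n\,\mathbb{E}\bigl[(Y_n - Y)^2\bigr].
\end{aligned}
```

Hence any series of weighted second moments that converges for both sequences also converges for their linear combination.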

3. Theoretical applications

In this section, we establish rates of complete second-order moment convergence for the kernel estimators of the probability density, distribution and quantile functions. The following remark is essential for establishing these rates.

Remark 3.1

In all that follows, the assumption of the convergence of or

is justified by the fact that, if

we have

, so the convergence of

implies the convergence of

. If

and

while

, the convergence is obtained.

3.1. Kernel density estimator

Let $X_1$, $X_2$, …, $X_n$ be independent and identically distributed copies of a random variable $X$ with an unknown continuous probability density function $f$. The kernel density estimator $\hat f_n$ of the unknown density $f$, defined by Parzen [Citation12] and Rosenblatt [Citation11], is given by

$$\hat f_n(x) = \frac{1}{n h_n} \sum_{i=1}^{n} K\left(\frac{x - X_i}{h_n}\right),$$

where $(h_n)$ is a sequence of positive numbers, usually called the bandwidth or smoothing parameter, and $K$ is an integrable Borel measurable function satisfying

and

, called the kernel. Assume that the kernel $K$ and the density $f$ satisfy the following conditions:

(H1)

(H2)

(H3)

(H4)
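The Parzen–Rosenblatt estimator defined above is straightforward to evaluate. The following Python sketch assumes a Gaussian kernel for $K$ (one admissible choice) and a user-supplied bandwidth $h$; the function names `gaussian_kernel` and `kde` are illustrative, and the sketch does not check conditions (H1)–(H4).

```python
import math
import random
from typing import Callable, Sequence


def gaussian_kernel(u: float) -> float:
    """Standard normal density, used here as an illustrative kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kde(x: float, sample: Sequence[float], h: float,
        kernel: Callable[[float], float] = gaussian_kernel) -> float:
    """Parzen-Rosenblatt estimate f_n(x) = (1 / (n h)) * sum_i K((x - X_i) / h)."""
    if h <= 0.0:
        raise ValueError("the bandwidth h must be positive")
    if len(sample) == 0:
        raise ValueError("the sample must be non-empty")
    n = len(sample)
    return sum(kernel((x - xi) / h) for xi in sample) / (n * h)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 500
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    h = 1.06 * n ** (-1 / 5)  # normal-reference bandwidth for unit-variance data (assumption)
    # For N(0, 1) data the true density at 0 is 1 / sqrt(2 * pi), about 0.399.
    print(f"f_n(0) = {kde(0.0, data, h):.4f}")
```

With a nonnegative kernel that integrates to one, the estimate is itself a probability density, which is why the Riemann sum of $\hat f_n$ over a wide grid is close to 1.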

Theorem 3.1

Under (H1)–(H4) and supposing that or

converges (see Remark 3.1), we have

(1)

Proof.

To prove (1), notice first that the mean squared error (MSE) of

, defined by

can be written as

(2)

Hence, to prove (1), it suffices to show that

(3)

and

(4)

Consider first inequality (3).

Using condition (H2) on the kernel K, it follows that

Therefore

Since

converges, it follows that

On the other hand, for inequality (4), we have

Using a Taylor expansion of f about the point x up to order 3, together with (H3), (H1) and (H4), we obtain

where θ is a real number between x and

. Hence

Thus

The proof is completed.
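The decomposition of the MSE into squared bias plus variance, on which this proof relies, can be checked numerically. The Python sketch below is a Monte Carlo illustration under our own assumptions (N(0, 1) data, Gaussian kernel, fixed bandwidth); it is not the simulation design of Section 4. Because the variance is computed with divisor equal to the number of replications, the empirical identity MSE = bias² + variance holds exactly, up to floating-point rounding.

```python
import math
import random
import statistics
from typing import Sequence, Tuple


def gaussian_kernel(u: float) -> float:
    """Standard normal density, used as the kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kde(x: float, sample: Sequence[float], h: float) -> float:
    """Parzen-Rosenblatt estimate with the Gaussian kernel."""
    return sum(gaussian_kernel((x - xi) / h) for xi in sample) / (len(sample) * h)


def mc_mse_decomposition(x: float, n: int, h: float, reps: int = 200,
                         seed: int = 0) -> Tuple[float, float, float]:
    """Monte Carlo MSE, squared bias and variance of f_n(x) for N(0, 1) samples of size n."""
    rng = random.Random(seed)
    true_f = gaussian_kernel(x)  # the N(0, 1) density coincides with the Gaussian kernel
    estimates = []
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        estimates.append(kde(x, sample, h))
    mse = statistics.fmean([(e - true_f) ** 2 for e in estimates])
    bias = statistics.fmean(estimates) - true_f
    variance = statistics.pvariance(estimates)  # divisor reps, matching the MSE average
    return mse, bias ** 2, variance


if __name__ == "__main__":
    for n in (100, 400, 1600):
        h = 1.06 * n ** (-1 / 5)  # normal-reference bandwidth (assumption)
        mse, bias2, var = mc_mse_decomposition(0.0, n, h)
        print(f"n={n:5d}  MSE={mse:.2e}  bias^2={bias2:.2e}  var={var:.2e}")
```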

Remark 3.2

If K is a symmetric, compactly supported kernel, we obtain the complete second-order moment convergence of $\hat f_n$ under (H4).

The choices of and

are not arbitrary: they must be chosen so that the resulting series converge.

Example 3.1

By choosing and

, where

and

, we obtain

This Riemann series converges if

, thus if

. And

the right-hand side converges if

.

Consequently, combining the convergence conditions of the two right-hand side series, we obtain (1) for

Indeed, it can be checked that

if

, then

;

if

, then

;

if

, then

;

if 2s = 1−4s, then

.

Hence, the condition is always satisfied.

Corollary 3.1

Under (H1)–(H4), we have

Proof.

For the optimal bandwidth and a rate of convergence

, which satisfies the inequality

, where

is the rate of almost complete convergence of the kernel density estimator, we obtain

and

Combining the convergence conditions of the two right-hand side series, we obtain (1) for

.
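For orientation, the usual first-order asymptotic MSE of the kernel density estimator and the bandwidth that minimises it are recalled below. This is the textbook pointwise expansion for a symmetric second-order kernel, written in our notation $R(K) = \int K^2(u)\,du$ and $\mu_2(K) = \int u^2 K(u)\,du$, and assuming $f(x) > 0$ and $f''(x) \neq 0$; the extracted text does not let us confirm that it matches the exact constants of the proof above.

```latex
\begin{aligned}
\mathrm{AMSE}\bigl(\hat f_n(x)\bigr)
  &= \underbrace{\frac{f(x)\,R(K)}{n h_n}}_{\text{variance}}
   + \underbrace{\frac{h_n^{4}}{4}\,\mu_2(K)^{2} f''(x)^{2}}_{\text{squared bias}},\\
\frac{d\,\mathrm{AMSE}}{d h_n} = 0
  \;&\Longrightarrow\;
  h_n^{\mathrm{opt}} = \left(\frac{f(x)\,R(K)}{\mu_2(K)^{2} f''(x)^{2}}\right)^{1/5} n^{-1/5},\\
\mathrm{AMSE}\bigl(h_n^{\mathrm{opt}}\bigr)
  &= \frac{5}{4}\,\bigl(f(x)\,R(K)\bigr)^{4/5}\bigl(\mu_2(K)^{2} f''(x)^{2}\bigr)^{1/5} n^{-4/5}.
\end{aligned}
```

At the optimum the variance term is four times the squared-bias term, which is where the factor 5/4 comes from, and the optimal MSE is of order $n^{-4/5}$.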

3.2. Kernel distribution function estimator

Let $X_1$, $X_2$, …, $X_n$ be independent and identically distributed copies of a random variable $X$ with unknown continuous probability density function $f$ and distribution function $F$. The kernel distribution function estimator $\hat F_n$, proposed by Nadaraya [Citation18], is obtained by integrating the kernel density estimator $\hat f_n$ as follows:

$$\hat F_n(x) = \int_{-\infty}^{x} \hat f_n(t)\,dt = \frac{1}{n} \sum_{i=1}^{n} H\left(\frac{x - X_i}{h_n}\right),$$

where the function $H$ is defined from the kernel $K$ as

$$H(x) = \int_{-\infty}^{x} K(u)\,du.$$

The function $H$ is a cumulative distribution function because $K$ is a probability density function.
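The Nadaraya-type estimator only requires the cumulative kernel $H$. In the Python sketch below we assume a Gaussian kernel, so that $H$ is the standard normal distribution function, computed with `math.erf`; the function names are illustrative, and the empirical distribution function is included only for comparison.

```python
import math
from typing import Sequence


def gaussian_cdf(u: float) -> float:
    """H(u) for the Gaussian kernel: the standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def kernel_cdf(x: float, sample: Sequence[float], h: float) -> float:
    """Nadaraya-type estimate F_n(x) = (1 / n) * sum_i H((x - X_i) / h)."""
    if h <= 0.0:
        raise ValueError("the bandwidth h must be positive")
    return sum(gaussian_cdf((x - xi) / h) for xi in sample) / len(sample)


def empirical_cdf(x: float, sample: Sequence[float]) -> float:
    """Step-function empirical distribution, shown for comparison."""
    return sum(1 for xi in sample if xi <= x) / len(sample)


if __name__ == "__main__":
    data = [-1.3, -0.4, 0.2, 0.9, 1.7]
    for x in (-2.0, 0.0, 2.0):
        print(x, round(kernel_cdf(x, data, 0.5), 4), empirical_cdf(x, data))
```

Because $H$ is a distribution function, the kernel estimate is a smooth, nondecreasing function with values in $[0, 1]$; as $h \to 0$ it approaches the empirical distribution function at every point that is not a sample value.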

Assume that the following hypotheses are satisfied:

(H5)

(H6)

(H7)

(H8)

Theorem 3.2 states the complete second-order moment convergence of $\hat F_n$ to $F$.

Theorem 3.2

Under (H1), (H3), (H5)–(H8) and supposing that or

converges (see Remark 3.1), we have

(5)

Proof.

To prove (5), we use the same argument as for (1). First, for the bias, we have

Using integration by parts, the substitution

, a Taylor expansion of F about the point x up to order 2, together with (H1), (H3) and (H8), we obtain

Now, for the variance,

Using (H1), (H5)–(H8), integration by parts and the substitution

we have

where

and

are two constants. Since

converges to 0, for every

there exists

such that

for all

, then

and the desired result follows.

Remark 3.3

When K is symmetric with compact support, the properties of H given in Baszczynska [Citation20] ensure that hypotheses (H5) and (H7) are satisfied. We can then obtain the MSE of the kernel distribution estimator

under the assumptions used by Azzalini [Citation21]. For a kernel satisfying the above assumptions, the squared bias is

and the variance is

Then the MSE is given by

Hence, under (H8), we obtain

The next corollary gives a new rate of almost complete convergence of $\hat F_n$ to $F$.

Corollary 3.2

Under (H1), (H3), (H5)–(H8), we have

Proof.

Noting that , where

is the rate of the almost complete convergence of

to F, we get

The last series converges for

.
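The optimal bandwidth used in Example 3.2 can be motivated by the classical first-order expansion of the MSE of the kernel distribution estimator, in the spirit of Azzalini [Citation21]. The display below uses our own notation, $\mu_2(K) = \int u^2 K(u)\,du$ and $\psi(K) = \int u K(u) H(u)\,du$ (which is positive for a symmetric kernel), and assumes $f'(x) \neq 0$; it may differ in form from the expressions of Remark 3.3, which were lost in extraction.

```latex
\begin{aligned}
\mathrm{Bias}\bigl(\hat F_n(x)\bigr)
  &= \frac{h_n^{2}}{2}\,\mu_2(K)\, f'(x) + o(h_n^{2}),\\
\mathrm{Var}\bigl(\hat F_n(x)\bigr)
  &= \frac{F(x)\bigl(1 - F(x)\bigr)}{n} - \frac{2 h_n}{n}\, f(x)\,\psi(K)
     + o\!\left(\frac{h_n}{n}\right),\\
h_n^{\mathrm{opt}}
  &= \arg\min_{h}\Bigl\{-\frac{2h}{n} f(x)\psi(K) + \frac{h^{4}}{4}\mu_2(K)^{2} f'(x)^{2}\Bigr\}
   = \left(\frac{2 f(x)\,\psi(K)}{\mu_2(K)^{2} f'(x)^{2}}\right)^{1/3} n^{-1/3}.
\end{aligned}
```

For the Gaussian kernel, $\psi(K) = \int u\,\varphi(u)\Phi(u)\,du = 1/(2\sqrt{\pi})$. The leading variance term $F(x)(1 - F(x))/n$ does not depend on $h_n$, so smoothing only affects second-order terms.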

Example 3.2

For the optimal bandwidth and

where

, we obtain

and

The two right-hand side series converge simultaneously if

3.3. Kernel quantile function estimator

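Let $X_1, \dots, X_n$ be independent and identically distributed copies of a random variable $X$ with absolutely continuous distribution function $F$, and denote by $X_{(1)} \le X_{(2)} \le \dots \le X_{(n)}$ the corresponding order statistics. The quantile function $Q$ is defined as the left-continuous inverse of $F$, given by

$$Q(p) = \inf\{x \in \mathbb{R} : F(x) \ge p\}, \qquad 0 < p < 1.$$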

A kernel quantile estimator, based on the Nadaraya [Citation18] kernel distribution function estimator

, is defined as

and given by

where K is a density function, while

as

.
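The displayed formula of the kernel quantile estimator did not survive extraction. One simple way to compute a quantile estimate based on the Nadaraya-type estimator is to invert it numerically, following the left-continuous-inverse definition of $Q$: find the smallest $x$ with $\hat F_n(x) \ge p$. The Python sketch below does this by bisection, assuming a Gaussian kernel (under which $\hat F_n$ is continuous and strictly increasing). The Sheather–Marron estimator [Citation22] is instead a kernel-weighted average of order statistics, so this sketch illustrates the inversion idea and is not claimed to be the exact estimator analysed in Theorem 3.3.

```python
import math
from typing import Sequence


def gaussian_cdf(u: float) -> float:
    """H(u) for the Gaussian kernel: the standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def kernel_cdf(x: float, sample: Sequence[float], h: float) -> float:
    """Kernel distribution estimate F_n(x) = (1 / n) * sum_i H((x - X_i) / h)."""
    return sum(gaussian_cdf((x - xi) / h) for xi in sample) / len(sample)


def kernel_quantile(p: float, sample: Sequence[float], h: float,
                    iterations: int = 100) -> float:
    """Approximate inf{x : F_n(x) >= p} by bisection on a bracketing interval."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in (0, 1)")
    if h <= 0.0:
        raise ValueError("the bandwidth h must be positive")
    lo, hi = min(sample) - h, max(sample) + h
    # Widen the bracket until F_n(lo) < p <= F_n(hi).
    while kernel_cdf(lo, sample, h) >= p:
        lo -= 10.0 * h
    while kernel_cdf(hi, sample, h) < p:
        hi += 10.0 * h
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if kernel_cdf(mid, sample, h) < p:
            lo = mid  # the quantile lies to the right of mid
        else:
            hi = mid  # mid already satisfies F_n(mid) >= p
    return hi


if __name__ == "__main__":
    data = [-2.0, -1.0, 0.0, 1.0, 2.0]
    for p in (0.25, 0.5, 0.75):
        print(p, round(kernel_quantile(p, data, 0.5), 6))
```

Bisection is used because it needs only the monotonicity of $\hat F_n$, which holds for any nonnegative kernel, and it keeps the invariant $\hat F_n(\text{lo}) < p \le \hat F_n(\text{hi})$ at every step.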

Our convergence result is based on the expression of the MSE of the kernel quantile estimator given by Sheather and Marron [Citation22] (Theorem 1, p. 5), for p in the interior of $(0, 1)$, under the conditions that the kernel K is symmetric about 0 with compact support and that

is continuous in a neighbourhood of p.

Theorem 3.3

Supposing that or

converges (see Remark 3.1), we have

(6)

Proof.

Building on Falk [Citation23] and David [Citation24], Sheather and Marron [Citation22] give the expressions of the bias and variance of the kernel quantile estimator as

and

So

and

Finally, we have obtained the almost complete convergence and the complete second-order moment convergence of the kernel quantile estimator

.

Example 3.3

Proceeding as in Example 3.2 and using the same and

we obtain

4. Simulation study

In this section, we carry out a simulation study to assess the performance of the new rate of convergence for finite samples. We give an impression of the quality of convergence by computing the corresponding MSE together with the rate of complete second-order moment convergence and the rate of almost complete convergence, based on two kernel density estimators: the Gamma kernel density estimator and the more recent Laplace kernel density estimator developed by Khan and Akbar [Citation25], inspired by Chen's idea [Citation26], defined by

In the second part, Normal and Epanechnikov kernel distribution estimators are used with the optimal bandwidth and normal and exponential samples of different sizes to assess the performance of the new rate of convergence. Finally, under the normal model, we compute the quantile estimates (25%, 50%, 75%) for the same sample sizes.

4.1. Kernel density MSE

We consider two kernel estimation schemes, the Laplace and Gamma kernel density estimators, with the optimal bandwidth. We simulate samples from the Exponential and Gamma densities with sample sizes n = 100, 150, 200, 250, 300, 500, 800 and 1000. The numerical results are summarized in Table 1.

Table 1. Mean simulated values and rates of convergence for the density.

According to the results in Table 1, the values of the complete second-order moment convergence (CSMC) rate of the kernel density estimator with the Laplace kernel (resp. Gamma kernel) are closer to the Exp MSE (resp. Gamma MSE) values than those of the almost complete convergence (ACC) rate.
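To illustrate how a Monte Carlo MSE of the kind reported in Table 1 can be computed, the following Python sketch implements Chen's first gamma kernel estimator [Citation26], $\hat f(x) = n^{-1}\sum_i K_{x/b+1,\,b}(X_i)$, where $K_{\alpha,\beta}$ is the gamma density with shape $\alpha$ and scale $\beta$, and evaluates its pointwise MSE at $x = 1$ for Exponential(1) samples. The smoothing parameter $b = n^{-2/5}$, the evaluation point and the number of replications are our own illustrative choices, not the settings behind Table 1, and the Laplace kernel of Khan and Akbar [Citation25] is not reproduced.

```python
import math
import random
import statistics
from typing import Dict, Sequence


def gamma_kernel_density(x: float, sample: Sequence[float], b: float) -> float:
    """Chen's gamma kernel estimate at x > 0: mean of Gamma(shape=x/b+1, scale=b) densities at X_i."""
    if x <= 0.0 or b <= 0.0:
        raise ValueError("this sketch requires x > 0 and b > 0")
    shape = x / b + 1.0
    log_norm = shape * math.log(b) + math.lgamma(shape)  # log(b**shape * Gamma(shape))
    total = 0.0
    for t in sample:
        if t > 0.0:  # the gamma density vanishes at t = 0 when shape > 1
            total += math.exp((shape - 1.0) * math.log(t) - t / b - log_norm)
    return total / len(sample)


def mc_mse_exponential(x: float, sizes: Sequence[int], reps: int = 100,
                       seed: int = 0) -> Dict[int, float]:
    """Monte Carlo MSE of the gamma kernel estimate at x for Exponential(1) samples."""
    rng = random.Random(seed)
    true_f = math.exp(-x)  # Exponential(1) density at x
    result: Dict[int, float] = {}
    for n in sizes:
        b = n ** (-2.0 / 5.0)  # illustrative smoothing parameter of order n^(-2/5)
        errors = []
        for _ in range(reps):
            sample = [rng.expovariate(1.0) for _ in range(n)]
            errors.append((gamma_kernel_density(x, sample, b) - true_f) ** 2)
        result[n] = statistics.fmean(errors)
    return result


if __name__ == "__main__":
    for n, mse in mc_mse_exponential(1.0, (100, 400, 1600)).items():
        print(f"n={n:5d}  MSE={mse:.2e}")
```

The gamma kernel has support $[0, \infty)$, so, unlike a symmetric kernel, it places no mass on negative values; this is why it is a natural choice for Exponential and Gamma data.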

4.2. Kernel distribution MSE

Here, the Normal and Epanechnikov kernel distribution estimators with the optimal bandwidth are compared on normal samples, with sizes ranging from 100 to 1000. The numerical results are summarized in Table 2.

Table 2. Mean simulated values and rates of convergence for the distribution function.

The results in Table 2 show that, for both the normal and Epanechnikov kernel distribution estimators, the CSMC rate values of the kernel distribution estimator are closer to the normal MSE and Exp MSE values, respectively, than those of the ACC rate.
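A Monte Carlo computation in the spirit of Table 2 can be sketched in Python for the Gaussian-kernel distribution estimator at a single point with N(0, 1) samples. The bandwidth $h = n^{-1/3}$ (the order of the asymptotically optimal bandwidth for this estimator), the evaluation point and the number of replications are illustrative assumptions, not the settings used for Table 2; the empirical distribution function is included only as a reference.

```python
import math
import random
import statistics
from typing import Dict, Sequence, Tuple


def normal_cdf(u: float) -> float:
    """Standard normal distribution function; also H for the Gaussian kernel."""
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def kernel_cdf(x: float, sample: Sequence[float], h: float) -> float:
    """Kernel distribution estimate F_n(x) = (1 / n) * sum_i H((x - X_i) / h)."""
    return sum(normal_cdf((x - xi) / h) for xi in sample) / len(sample)


def mc_mse_cdf(x: float, sizes: Sequence[int], reps: int = 200,
               seed: int = 0) -> Dict[int, Tuple[float, float]]:
    """Monte Carlo MSE at x of the kernel and empirical cdf estimates for N(0, 1) samples."""
    rng = random.Random(seed)
    true_F = normal_cdf(x)
    out: Dict[int, Tuple[float, float]] = {}
    for n in sizes:
        h = n ** (-1.0 / 3.0)  # illustrative bandwidth of order n^(-1/3)
        kernel_err, ecdf_err = [], []
        for _ in range(reps):
            sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
            kernel_err.append((kernel_cdf(x, sample, h) - true_F) ** 2)
            ecdf = sum(1 for xi in sample if xi <= x) / n
            ecdf_err.append((ecdf - true_F) ** 2)
        out[n] = (statistics.fmean(kernel_err), statistics.fmean(ecdf_err))
    return out


if __name__ == "__main__":
    for n, (mse_kernel, mse_ecdf) in mc_mse_cdf(0.0, (100, 400, 1000)).items():
        print(f"n={n:5d}  kernel MSE={mse_kernel:.2e}  empirical MSE={mse_ecdf:.2e}")
```

Both MSEs are dominated by the term $F(x)(1 - F(x))/n$ and therefore decrease roughly like $1/n$.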

4.3. Kernel quantile MSE

We now compute the MSE of the normal kernel quantile estimator for sample sizes ranging from 100 to 1000. The numerical results are summarized in Table 3.

Table 3. Mean simulated values and rate of convergence for quantiles.
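Similarly, a Monte Carlo MSE for kernel quantile estimates, as in Table 3, can be sketched in Python by inverting the Gaussian-kernel distribution estimator numerically. Inversion by bisection, the bandwidth $h = n^{-1/3}$, the quartile levels and the replication count are illustrative assumptions; Table 3 may rely on a different form of the kernel quantile estimator. The true N(0, 1) quantiles come from `statistics.NormalDist().inv_cdf`.

```python
import math
import random
import statistics
from statistics import NormalDist
from typing import Dict, List, Sequence


def normal_cdf(u: float) -> float:
    """Standard normal distribution function, i.e. H for the Gaussian kernel."""
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def kernel_cdf(x: float, sample: Sequence[float], h: float) -> float:
    """Kernel distribution estimate F_n(x) = (1 / n) * sum_i H((x - X_i) / h)."""
    return sum(normal_cdf((x - xi) / h) for xi in sample) / len(sample)


def kernel_quantile(p: float, sample: Sequence[float], h: float,
                    iterations: int = 40) -> float:
    """Approximate inf{x : F_n(x) >= p} by bisection (40 halvings shrink the bracket ~1e12-fold)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in (0, 1)")
    lo, hi = min(sample) - h, max(sample) + h
    while kernel_cdf(lo, sample, h) >= p:  # widen until F_n(lo) < p
        lo -= 10.0 * h
    while kernel_cdf(hi, sample, h) < p:  # widen until F_n(hi) >= p
        hi += 10.0 * h
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if kernel_cdf(mid, sample, h) < p:
            lo = mid
        else:
            hi = mid
    return hi


def mc_mse_quantiles(sizes: Sequence[int], levels: Sequence[float] = (0.25, 0.5, 0.75),
                     reps: int = 50, seed: int = 0) -> Dict[int, Dict[float, float]]:
    """Monte Carlo MSE of kernel quantile estimates for N(0, 1) samples of each size."""
    rng = random.Random(seed)
    truth = {p: NormalDist().inv_cdf(p) for p in levels}  # exact N(0, 1) quantiles
    out: Dict[int, Dict[float, float]] = {}
    for n in sizes:
        h = n ** (-1.0 / 3.0)  # illustrative bandwidth choice
        sq_err: Dict[float, List[float]] = {p: [] for p in levels}
        for _ in range(reps):
            sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
            for p in levels:
                sq_err[p].append((kernel_quantile(p, sample, h) - truth[p]) ** 2)
        out[n] = {p: statistics.fmean(errs) for p, errs in sq_err.items()}
    return out


if __name__ == "__main__":
    for n, mses in mc_mse_quantiles((100, 400), reps=20).items():
        print(n, {p: f"{m:.2e}" for p, m in mses.items()})
```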

We conclude that, in all cases, the CSMC rate of the kernel density, distribution and quantile estimators gives better results than the ACC rate of the same estimators.

5. Real data analysis

Female infertility and BMI: this study investigates the body mass index (BMI) of infertile women of childbearing age. We use data collected in 2018 from 200 participants at the Ben Badis University Hospital Centre of Constantine, Algeria.

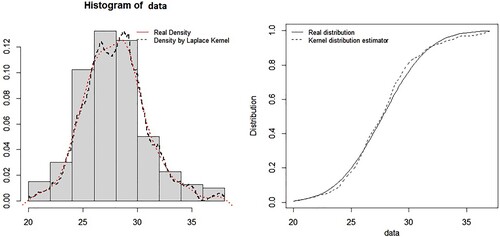

The first step is to test the goodness of fit of the sample to a normal distribution. We obtain D = 0.079226, which is smaller than the p-value; this justifies the use of the normal density and distribution. The second step is to estimate the density and distribution functions and to plot them (Figure 1). The MSE between the real density and the normal kernel density estimate is 0.003128583, the rate of CSM convergence is 0.0008729775, and the rate of AC convergence is

For the distribution function, we obtain MSE = 0.003128583, and the rate of CSM convergence is

and the rate of AC convergence is

For the same rate of convergence, the MSEs of the quantile estimates are (25%, 0.0260281), (50%, 0.04186125) and (75%, 0.058767206). The real-data results thus support those of the simulation study.

6. Conclusion

The present work proposes a new method for obtaining the convergence rate of the MSE, which is much more efficient in the CSMC case: the CSM convergence rate gives better results than the AC convergence rate. These results indicate that, for kernel estimators, this type of convergence is a good alternative to almost complete convergence. It can be applied to any estimation problem that requires the study of the MSE, such as neural networks, the least squares method, …, and, following the suggestion, to the neutrosophic statistics developed by Smarandache [Citation27] and Afzal et al. [Citation28]. Moreover, this type of convergence might be used to extend the law of large numbers.

Disclosure statement

No potential conflict of interest was reported by the authors.

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

References

- Chow D. On the rate of moment convergence of sample function of a random variables with bounded support. Bull Inst Math Acad Sin. 1988;16:177–201.

- Liang H, Li D, Rosalsky A. Complete moment and integral convergence for sums of negatively associated random variables. Acta Math Sin (Engl Ser). 2010;26(3):419–432.

- Qiu D, Chen P. Complete moment convergence for i.i.d random variables. Statist Probab Lett. 2014;91:76–82.

- Hsu P, Robbins H. Complete convergence and the law of large numbers. Proc Natl Acad Sci USA. 1947;33(2):25–31.

- Gut A, Stadtmüller U. An intermediate Baum–Katz theorem. Statist Probab Lett. 2011;81(10):1486–1492.

- Gut A. Marcinkiewicz laws and convergence rates in the law of large numbers for random variables with multidimensional indices. Ann Probab. 1978;6:469–482.

- Gut A. Convergence rates for probabilities of moderate deviations for sums of random variables with multidimensional indices. Ann Probab. 1980;8(2):298–313.

- Li D, Rao MB, Jiang T, et al. Complete convergence and almost sure convergence of weighted sums of random variables. J Theoret Probab. 1995;8(1):49–76.

- Sung SH. Complete convergence for weighted sums of random variables. Statist Probab Lett. 2007;77(3):303–311.

- Sung SH, Volodin A. On the rate of complete convergence for weighted sums of arrays of random elements. J Korean Math Soc. 2006;43(4):815–828.

- Rosenblatt M. Remarks on some nonparametric estimates of a density function. Ann Math Statist. 1956;27(3):832–837.

- Parzen E. On estimation of a probability density function and mode. Ann Math Stat. 1962;33(3):1065–1076.

- Habbema JDF, Hermans J, van den Broek K. A stepwise discriminant analysis program using density estimation. In: Bruckmann G, editor. COMPSTAT 1974, Proceedings in Computational Statistics. Vienna: Physica Verlag; 1974. p. 101–110.

- Hall P, Kang K-H. Bandwidth choice for nonparametric classification. Ann Stat. 2005;33:284–306.

- Hall P, Wand MP. On nonparametric discrimination using density differences. Biometrika. 1988;75(3):541–547.

- Ghosh AK, Chaudhuri P. Optimal smoothing in kernel discriminant analysis. Stat Sin. 2004;14:457–483.

- Ghosh AK, Hall P. On error-rate estimation in nonparametric classification. Stat Sin. 2008;18:1081–1100.

- Nadaraya EA. Some new estimates for distribution functions. Theory Probab Appl. 1964;9:497–500.

- Parzen E. Nonparametric statistical data modelling. J Amer Stat Assoc. 1979;74(365):105–131.

- Baszczynska A. Kernel estimation of cumulative distribution function of a random variable with bounded support. Statist Trans. 2016;17:541–556.

- Azzalini A. A note on the estimation of a distribution function and quantiles by a kernel method. Biometrika. 1981;68(1):326–328.

- Sheather SJ, Marron JS. Kernel quantile estimators. J Amer Statist Assoc. 1990;85(410):410–416.

- Falk M. Relative deficiency of Kernel type estimators of quantiles. Ann Statist. 1984;12:261–268.

- David HA. Order statistics. 2nd ed. New York: John Wiley; 1981.

- Khan JA, Akbar A. Density estimation by Laplace kernel. Working paper. Department of Statistics, Bahauddin Zakariya University, Multan, Pakistan. 2021.

- Chen SX. Probability density function estimation using gamma kernels. Ann Inst Statist Math. 2000;52(3):471–480.

- Smarandache F. Neutrosophic statistics vs. classical statistics, section in Nidus Idearum/superluminal physics. Vol. 7, 3rd ed. 2019. p. 117.

- Afzal U, Alrweili H, Ahamd N, et al. Neutrosophic statistical analysis of resistance depending on the temperature variance of conducting material. Sci Rep. 2021;11(1):Article ID 23939.

- Yu Y, et al. On the complete convergence for uncertain random variables. Soft Comput. 2022;26(3):1025–1031.