ABSTRACT

This paper constructs an unconditionally stable explicit difference scheme, marching backward in time, that can solve a limited but important class of time-reversed 2D Burgers' initial value problems. Stability is achieved by applying a compensating smoothing operator at each time step to quench the instability. This leads to a distortion away from the true solution. However, in many interesting cases, the cumulative error is sufficiently small to allow for useful results. Effective smoothing operators based on (−Δ)^p, with real p>2, can be efficiently synthesized using FFT algorithms, and this may be feasible even in non-rectangular regions. Similar stabilizing techniques were successfully applied in other ill-posed evolution equations. The analysis of numerical stability is restricted to a related linear problem. However, extensive numerical experiments indicate that such linear stability results remain valid when the explicit scheme is applied to a significant class of time-reversed nonlinear 2D Burgers' initial value problems. As illustrative examples, the paper uses fictitiously blurred pixel images, obtained by using sharp images as initial values in well-posed, forward 2D Burgers' equations. Such images are associated with highly irregular underlying intensity data that can seriously challenge ill-posed reconstruction procedures. The stabilized explicit scheme, applied to the time-reversed 2D Burgers' equation, is then used to deblur these images. Examples involving simpler data are also studied. Successful recovery from severely distorted data is shown to be possible, even at high Reynolds numbers.

1. Introduction

Following the 1951 seminal paper by Cole [Citation1], a large literature on the 2D Burgers' equation has developed, spawned by significant applications in science and engineering. Numerous references may be found in recent papers [Citation2–7]. As is often stressed, the 2D Burgers' coupled system is a useful simplification of the 2D incompressible Navier-Stokes equations, providing valuable insight into the behaviour of complex flows, together with the expected behaviour of possible numerical methods for computing such flows. Accordingly, numerical methods for the well-posed forward Burgers' initial value problem have been actively investigated.

On the other hand, very little seems known about possible numerical computation of the ill-posed time-reversed 2D Burgers' equation. The backward problem is of considerable interest, as it may enable computation of initial conditions that can produce desired flow patterns, as well as reconstructing the genesis of undesired flows. Such inverse design problems are actively being studied for the 1D Burgers' equation [Citation8–10]. The inverse Burgers' problem also plays an important role in studies of data assimilation for nonlinear geophysical fluid dynamics [Citation11–14]. Error estimates of logarithmic convexity type have been obtained for backward recovery in the 1D problem [Citation15, Citation16], but are not yet known in the 2D Burgers' problem. For the Navier-Stokes equations, backward error estimates are given in [Citation17, Citation18]. Further information about ill-posed continuation in partial differential equations may be found in [Citation19–22].

The present self-contained paper constructs an unconditionally stable explicit difference scheme, marching backward in time, that can solve an important but limited class of time-reversed 2D Burgers' initial value problems. Stability is achieved by applying a compensating smoothing operator at each time step to quench the instability. Eventually, this leads to a distortion away from the true solution. However, in many interesting cases, the cumulative effect of these errors is sufficiently small to allow for useful results. Effective smoothing operators based on (−Δ)^p, with real p>2, can be efficiently synthesized using FFT algorithms. Similar stabilizing techniques were successfully applied in other ill-posed evolution equations [Citation23–26]. As was the case in these papers, the analysis of numerical stability given in Sections 3 and 4 below is restricted to a related linear problem. However, extensive numerical experiments indicate that these linear stability results remain valid when the explicit scheme is applied to a significant class of time-reversed nonlinear Burgers' initial value problems.

Following [Citation1], we define the Reynolds number RE as follows

(1)

where A is the area of the flow domain, ν is the kinematic viscosity and

is the maximum value of the initial velocity. In many numerical experiments on the well-posed forward 2D Burgers' equation, the objective is to demonstrate accurate calculation of an exact solution, known analytically together with its specific initial and time-dependent boundary values. Typically, with

, computations are carried out up to some fixed time

. In the present time-reversed context, where only approximate values are generally available at some positive time

, the objective is to demonstrate useful backward reconstruction from noisy data. As is well known, there is a necessary uncertainty in ill-posed backward recovery from imprecise data at

. For the 1D Burgers' equation, the error estimates at t=0 given in [Citation15, Citation16], contain a factor

. For the Navier-Stokes equations, the corresponding uncertainty may be significantly larger [Citation17, Citation18]. Taken together, these results indicate that successful backward recovery in the 2D Burgers' equation, with

, may not be feasible unless

.
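A natural way to combine the three quantities named above into a Reynolds number takes √A as the characteristic length. The display below is a sketch under that assumption; the symbol U_max for the maximum value of the initial velocity is introduced here for illustration only.

```latex
RE = \frac{\sqrt{A}\, U_{\max}}{\nu}
```

Under this reading, the elliptical domain of Section 5.3, with A=0.544, would have characteristic length √A ≈ 0.738.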

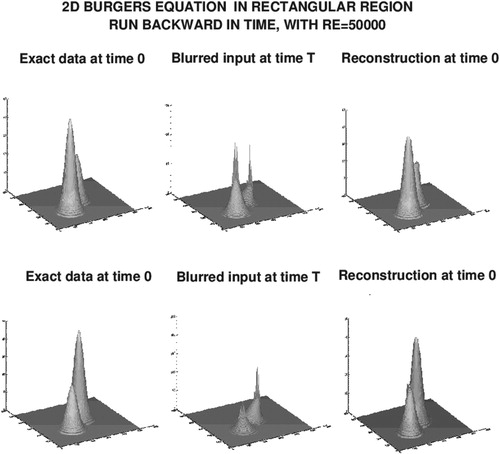

In Section 5 below, three instructive numerical experiments are discussed. In these experiments, the solutions have the value zero on the domain boundary. Interesting initial values are propagated forward up to a time , by numerically solving the well-posed forward 2D Burgers' equation. Despite the small value of

, considerable distortion of the initial values occurs. These limited precision numerical values are then used as input data at

, for the backward computation with the stabilized explicit scheme. In the first experiment, relatively simple data are used, involving two Gaussians on the unit square, and

with

. The second experiment involves images on the unit square, defined by highly non-smooth intensity data. Here,

and

. The last experiment, with

and

, involves non-smooth image data in an elliptical region.

2. 2D Burgers' equation

Let Ω be the unit square in R² with boundary

. Let

and

, respectively, denote the scalar product and norm on

. With

the kinematic viscosity, we consider the following 2D Burgers' system for

(2)

together with periodic boundary conditions on

, and the initial values

(3)

The well-posed forward initial value problem in Equation (2) becomes ill-posed if the time direction is reversed, and one wishes to recover

, from given approximate values for

. We contemplate such time-reversed computations by allowing for possible negative time steps

in the explicit time-marching finite difference scheme described below. With a given positive integer N, let

be the time step magnitude, and let

, denote the intended approximation to

, and likewise for

. It is helpful to consider Fourier series expansions for

, on the unit square Ω,

(4)

with Fourier coefficients

given by

(5)

and similarly for

. With given fixed

and p>1, define

, as follows

(6)

For any

, let

be its Fourier coefficients as in Equation (5). Using Equation (6), define the linear operators P and S as follows

(7)

As in [Citation23–26], the operator S is used as a stabilizing smoothing operator at each time step, in the following explicit time-marching finite difference scheme for the system in Equation (2), in which only the time variable is discretized, while the space variables remain continuous,

(8)

The analyses presented in Sections 3 and 4 below are relevant to the above semi-discrete problem. In Section 5, where actual numerical computations are discussed, the space variables are also discretized, and FFT algorithms are used to synthesize the smoothing operator S.
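The FFT synthesis of a smoothing operator of this kind can be sketched in NumPy as follows. This is a minimal illustration rather than the paper's implementation. It assumes periodic data on an n × n grid over the unit square, eigenvalues λ = 4π²ν(j² + k²) for −νΔ, and a Fourier multiplier of the form exp(−2ω|Δt|λ^p). The function names, the constants in the multiplier and all parameter values are assumptions made for this example.

```python
import numpy as np

def smoothing_multiplier(n, nu, omega, p, dt):
    """Assumed Fourier multiplier exp(-2*omega*|dt|*lam**p) on an n x n periodic grid."""
    freqs = np.fft.fftfreq(n, d=1.0 / n)             # integer wavenumbers in FFT order
    jj, kk = np.meshgrid(freqs, freqs, indexing="ij")
    lam = 4.0 * np.pi**2 * nu * (jj**2 + kk**2)      # eigenvalues of -nu*Laplacian (assumed form)
    return np.exp(-2.0 * omega * abs(dt) * lam**p)

def apply_smoothing(f, sigma):
    """Apply S by damping each Fourier coefficient of the real grid function f."""
    return np.real(np.fft.ifft2(sigma * np.fft.fft2(f)))

# Hypothetical parameter values, chosen only to exercise the code.
n = 64
sigma = smoothing_multiplier(n, nu=0.05, omega=1.0e-6, p=3.0, dt=-1.0e-5)

x = np.arange(n) / n
X, Y = np.meshgrid(x, x, indexing="ij")
smooth_mode = np.sin(2 * np.pi * X)                                   # low wavenumber
rough_mode = np.sin(2 * np.pi * 30 * X) * np.sin(2 * np.pi * 30 * Y)  # high wavenumber

low_ratio = np.abs(apply_smoothing(smooth_mode, sigma)).max() / np.abs(smooth_mode).max()
high_ratio = np.abs(apply_smoothing(rough_mode, sigma)).max() / np.abs(rough_mode).max()
print(f"low-wavenumber mode retained:  {low_ratio:.6f}")
print(f"high-wavenumber mode retained: {high_ratio:.6f}")
```

With these values the low-wavenumber mode passes essentially unchanged while the high-wavenumber mode is visibly damped in a single step, which is the selective behaviour a compensating smoother needs.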

3. Fourier stability analysis in linearized problem

As in [Citation23–26], useful insight into the behaviour of the nonlinear scheme in Equation (8) can be gained by analysing a related linear problem with constant coefficients. With positive constants

consider the initial value problem on the unit square Ω,

(9)

together with periodic boundary conditions on

. Unlike the case in Equation (8), the stabilized marching scheme

(10)

with the linear operator L, is susceptible to Fourier analysis. If

, then the Fourier coefficients

satisfy

, where, with

as in Equation (6),

(11)

Let R be the linear operator

. Then,

(12)

on using Parseval's formula.

Lemma 3.1

Let be as in Equation (6), and let

be as in Equation (11). Choose a positive integer J such that if

we have

(13)

With

choose

in Equation (6). Then,

(14)

Hence, from Equation (12),

and

(15)

Therefore, with this choice of

the explicit linear marching scheme in Equation (10) is stable.

Proof.

We first show how to find a positive integer J such that Equation (13) is valid. We have

where

. Choose a positive integer J such that

. Then,

which implies Equation (13). Next, the inequality in Equation (14) is valid whenever

, since

. For

we have

and

. Hence

(16)

since

. Also,

, since

for real x. Hence, with

for

we find

. Next, using Equation (14) in Equation (12) leads to

, which implies Equation (15). QED.
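The effect of the smoothing factor on per-step growth can be checked numerically. The sketch below is illustrative only and rests on several assumptions: the linear operator is taken as L = νΔ − a ∂/∂x − b ∂/∂y with Fourier symbol g = −λ − 2πi(aj + bk), where λ = 4π²ν(j² + k²); the multiplier is taken as exp(−2ω|Δt|λ^p); and the threshold λ_J = (a² + b²)/ν with ω = λ_J^(1−p) is one convenient choice for which |g| ≤ 2λ whenever λ ≥ λ_J. With these choices the per-step amplification is bounded by 1 + 2|Δt|λ_J. All names and parameter values are hypothetical.

```python
import numpy as np

def max_amplification(n, nu, a, b, dt, omega=None, p=3.0):
    """Largest |sigma * (1 + dt*g)| over the modes resolved on an n x n periodic grid.

    g is the assumed Fourier symbol of L = nu*Laplacian - a*d/dx - b*d/dy.
    omega=None means no smoothing (sigma = 1).
    """
    freqs = np.fft.fftfreq(n, d=1.0 / n)
    jj, kk = np.meshgrid(freqs, freqs, indexing="ij")
    lam = 4.0 * np.pi**2 * nu * (jj**2 + kk**2)
    g = -lam - 2.0 * np.pi * 1j * (a * jj + b * kk)
    sigma = 1.0 if omega is None else np.exp(-2.0 * omega * abs(dt) * lam**p)
    return np.abs(sigma * (1.0 + dt * g)).max()

# Hypothetical parameter values.
nu, a, b, p = 0.05, 1.0, 1.0, 3.0
dt, steps = -1.0e-6, 1000             # negative dt: marching backward in time
lam_J = (a**2 + b**2) / nu            # assumed threshold; |g| <= 2*lam above it
omega = lam_J ** (1.0 - p)

raw = max_amplification(256, nu, a, b, dt)
stabilized = max_amplification(256, nu, a, b, dt, omega=omega, p=p)
print(f"unsmoothed: {raw:.6f} per step, {raw**steps:.3e} over {steps} steps")
print(f"smoothed:   {stabilized:.6f} per step, {stabilized**steps:.3e} over {steps} steps")
```

Without smoothing, the worst mode grows by many orders of magnitude over the run; with smoothing, the growth stays within the bound (1 + 2|Δt|λ_J)^N, independently of the mesh size.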

For functions on

, define the norm

as follows

(17)

Lemma 3.2

Let be the exact solution in Equation (9). Let

be as in Equation (6). Let P and S be as in Equation (7), and let L be the linear operator in Equation (9). Then,

where

is the truncation error. With the norm definition in Equation (17), and

(18)

Proof.

The inequality for the truncation error in Equation (18) follows naturally from a truncated Taylor series expansion. Using the inequality

for all real x, together with Parseval's formula, we have

(19)

This proves the second inequality in Equation (18). The last inequality is a corollary of the second. QED.

In Lemma 3.1, the finite difference approximation satisfies Equation (10), whereas the exact solution

in Equation (9), satisfies

, where

is the truncation error. We need to estimate the error

.

Theorem 3.1

With let

be the unique solution of Equation (9) at

. Let

be the corresponding solution of the forward explicit scheme in Equation (10), and let

be as in Lemma 3.1. If

denotes the error at

we have

(20)

Proof.

With

(21)

let R be the linear operator defined in Equation (12). Then,

, while

. Therefore

(22)

Let

. Using Lemma 3.1, and letting

(23)

We now proceed to estimate

. From Equation (21) and Lemma 3.2, we find for

,

(24)

Therefore, Equation (20) follows from Equation (23). QED.

4. The stabilization penalties in the forward and backward linearized problem in Equation (9)

The stabilizing smoothing operator S in the explicit scheme in Equation (10) leads to unconditional stability, but at the cost of introducing a small error at each time step. We now assess the cumulative effect of that error.

In the forward problem in Theorem 3.1, we may assume the given initial data to be known with sufficiently high accuracy that one may set

in Equation (20). Choosing

in Lemma 3.1, and putting

, Equation (20) reduces to

(25)

Therefore, when using the explicit scheme in Equation (10), there remains the non-vanishing residual error

, as

. This is the stabilization penalty, which results from smoothing at each time step, and grows monotonically as

. Recall that

must be chosen large enough to satisfy Equation (13) in Lemma 3.1. Clearly, if

is large, the accumulated distortion may become unacceptably large as

, and the stabilized explicit scheme is not useful in that case. On the other hand, if

is small, as is the case in problems involving small values of t, it may be possible to choose p>2 and sufficiently large

, yet with small enough

that

is quite small. In that case, the stabilization penalty remains acceptable on

. As an example, with

, and

, we find

. For this important but limited class of problems, the absence of restrictive Courant conditions on the time step

in the explicit scheme in Equation (10), provides a significant advantage in well-posed forward computations of two-dimensional problems on fine meshes.

However, there is an additional penalty in the ill-posed problem of marching backward from , in that solutions exist only for a restricted class of data satisfying certain smoothness and other constraints. These data are seldom known with sufficient precision. We shall assume that the given data

at

, differ from such unknown exact data by small errors

:

(26)

Theorem 4.1

With let

be the unique solution of the forward well-posed problem in Equation (9) at

. Let

be the corresponding solution of the backward explicit scheme in Equation (10), with initial data

as in Equation (26). Let

be as in Lemma 3.1. If

denotes the error at

we have, with δ as in Equation (26),

(27)

Proof.

Let . Let R be the linear operator in Equation (12). Then,

, while

. Therefore

(28)

Let

. Using Lemma 3.1, and letting

,

(29)

As in the preceding Theorem, we may now use Lemma 3.2 to estimate

and obtain Equation (27) from Equation (29). QED.

It is instructive to compare the results in the well-posed case in Equation (25), with the ill-posed results implied by Equation (27). For this purpose, we must reevaluate Equation (27) at the same t values that are used in Equation (25). With

,

, and

let

now denote the previously computed backward solution evaluated at

. With

, let

denote the error at

. Again, choosing

, we get from Equation (27),

(30)

Here, the stabilization penalty is augmented by an additional term, resulting from amplification of the errors

in the given data at

, as indicated in Equation (26). Both of the first two terms on the right in Equation (30) grow monotonically as

, reflecting backward in time marching from

.

Let the exact solution at t=0, satisfy a prescribed

bound,

(31)

Again, with large

, and

large enough to satisfy Equation (13) in Lemma 3.1, the non-vanishing residuals in Equation (30) lead to large errors, and the backward explicit scheme is not useful in that case. However, if

is small enough that

(32)

with

as in Equations (26) and (31), we find, with

,

(33)

The second term on the right in Equation (33) represents the fundamental uncertainty in ill-posed backward continuation from noisy data, for solutions satisfying the prescribed bounds

in Equations (26) and (31). That uncertainty is known to be best-possible in the case of autonomous selfadjoint problems. Therefore, in a limited but potentially significant class of problems, the stabilized backward explicit scheme for the linearized problem in Equation (9), can produce results differing from what is best-possible only by a small stabilization penalty as

.
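To give a feel for how such a fundamental uncertainty behaves, the sketch below evaluates the classical logarithmic-convexity interpolation bound M^(1−t/T) δ^(t/T), which equals δ at the data time t=T and degrades to M at t=0. Taking the second term of Equation (33) to have this form, up to a constant factor, is an assumption made here for illustration; the function name and the sample values of M, δ and T are hypothetical.

```python
def backward_uncertainty(t, T, M, delta):
    """Classical interpolation bound M**(1 - t/T) * delta**(t/T) for 0 <= t <= T."""
    if not 0.0 <= t <= T:
        raise ValueError("t must lie in [0, T]")
    return M ** (1.0 - t / T) * delta ** (t / T)

# Hypothetical values: a-priori bound M at t=0 and data error delta at t=T.
M, delta, T = 10.0, 1.0e-3, 1.0
for t in (1.0, 0.5, 0.1, 0.0):
    print(f"t = {t:4.2f}   bound = {backward_uncertainty(t, T, M, delta):.4g}")
```

The bound loses accuracy steadily as t decreases from T toward 0, which is why backward recovery over long time intervals is not feasible no matter which algorithm is used.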

For example, with parameter values such as we have

and

. Hence, with

we find

. We would then obtain from Equation (33),

(34)

Remark 1

In most practical applications of ill-posed backward problems, the values of M and δ in Equation (34) are seldom known accurately. In many cases, interactive adjustment of the parameter pair

, in the definition of the smoothing operator S in Equation (7), based on prior knowledge about the exact solution, is crucial in obtaining useful reconstructions. This process is similar to the manual tuning of an FM station, or the manual focusing of binoculars, and likewise requires user recognition of a ‘correct’ solution. There may be several possible good solutions, differing slightly from one another. Typical values of

lie in the range

. A useful strategy, which avoids oversmoothing, is to begin with smaller values of ω and p, possibly leading to instability. Gradually increasing these quantities will eventually locate useful pairs

. An important advantage of the unconditional stability in the compensated explicit schemes, is the ability to conduct rapid trial computations, using relatively large values of

. Having located potentially useful pairs

, fine tuning these parameters, using smaller values of

, can then be considered.

Remark 2

As previously noted in [Citation24], for the class of problems where it is useful, the present methodology offers significant advantages over the Quasi-Reversibility Method, or QR method, developed in [Citation20]. Applied to the irreversible evolution equation the QR method alters that equation by adding the higher order spatial differential operator

, so that the new evolution equation is well-posed backward in time. However, explicit schemes for

are impractical on fine meshes, as they require a stringent Courant stability condition

if L is an elliptic differential operator of order

. On the other hand, for nonlinear multidimensional problems on fine meshes, implicit schemes require computationally intensive iterative solution of large nonlinear systems of difference equations at every time step. The present methodology does not alter the original evolution equation. Rather, it is based on a pre-designed stabilized explicit backward marching scheme, which is used directly on nonlinear problems by simply lagging the nonlinearity at the previous time step. Computational examples involving the explicit leapfrog scheme on

images are discussed in [Citation25]. Most importantly, a wide choice of compensating smoothing operators S in Equation (7), involving

with possibly non-integer values of p, can be accessed within the same single computational procedure. This substantially increases the likelihood of achieving useful backward reconstructions.

5. Behavior in the nonlinear stabilized explicit scheme in Equation (8)

In the nonlinear system in Equation (2), the ‘coefficients’ for the first-order derivative terms are

and

, rather than the positive constants a and b, which is the case in the linearized problem in Equation (9). However, if the solution to the nonlinear problem satisfies

for

, and suitable positive

, the stability analyses given in Sections 3 and 4 may be applicable. Theorems 3.1 and 4.1, and Equations (25) and (33), may provide helpful insight into the behaviour of the nonlinear explicit scheme in Equation (8). In particular, as discussed in Remark 1, we may expect to obtain useful results by interactive adjustment of the parameter pair

in Equation (6), in backward in time computations with the nonlinear scheme in Equation (8). As will be shown below, numerical experiments with examples similar to that discussed in Equation (34), appear to confirm such expectations.

5.1. Two Gaussians experiment at

With Ω the unit square in R² with boundary

and the kinematic viscosity

, consider the following initial value problem

(35)

together with homogeneous boundary conditions on

, and the initial values

(36)

where

(37)

Plots of the initial values

are shown in the first column of Figure 1. From Equation (1), with

, we have

in this experiment. We shall apply the previously discussed nonlinear explicit scheme

(38)

With

, and S chosen as the identity operator in Equation (38), we first consider the forward problem. We use centred finite differencing for the spatial derivatives in

on a uniform grid with

. The results of this stable forward computation are shown in the middle column in Figure 1. Note that the vertical scales in the middle column differ from those in the other two columns. Indeed, in the middle column, the computed maximum values for

on the

spatial grid, are, respectively, 144.54, and 209.25.
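One step of an explicit scheme of this type, with centred differences in space and the nonlinearity lagged at the current time level, might be sketched as follows. The update w ← S(w + Δt·Lw) with Lw = νΔw − u w_x − v w_y is an assumed form of the scheme, and the grid size, viscosity, time step and Gaussian data are hypothetical values chosen only so that the forward run is stable with S the identity. The `smooth` argument is a hook for an FFT-based smoother, which a backward run with Δt<0 would require.

```python
import numpy as np

def _pad(w):
    return np.pad(w, 1)  # zero values just outside the grid (homogeneous boundary data)

def ddx(w, h):
    wp = _pad(w)
    return (wp[2:, 1:-1] - wp[:-2, 1:-1]) / (2.0 * h)

def ddy(w, h):
    wp = _pad(w)
    return (wp[1:-1, 2:] - wp[1:-1, :-2]) / (2.0 * h)

def laplacian(w, h):
    wp = _pad(w)
    return (wp[2:, 1:-1] + wp[:-2, 1:-1] + wp[1:-1, 2:] + wp[1:-1, :-2] - 4.0 * w) / h**2

def explicit_step(u, v, nu, h, dt, smooth=lambda w: w):
    """One step w <- S(w + dt*L(w)), nonlinearity evaluated at the current level.

    dt > 0 marches forward; dt < 0 marches backward and needs a genuine smoother.
    """
    lu = nu * laplacian(u, h) - u * ddx(u, h) - v * ddy(u, h)
    lv = nu * laplacian(v, h) - u * ddx(v, h) - v * ddy(v, h)
    return smooth(u + dt * lu), smooth(v + dt * lv)

# Hypothetical smooth data on the interior points of the unit square.
n, nu, dt = 64, 0.05, 1.0e-4
h = 1.0 / (n + 1)
x = np.arange(1, n + 1) * h
X, Y = np.meshgrid(x, x, indexing="ij")
u0 = np.exp(-((X - 0.4) ** 2 + (Y - 0.5) ** 2) / 0.02)
v0 = np.exp(-((X - 0.6) ** 2 + (Y - 0.5) ** 2) / 0.02)

u, v = u0.copy(), v0.copy()
for _ in range(50):                       # forward run with S = identity
    u, v = explicit_step(u, v, nu, h, dt)
print(f"max u: {u0.max():.4f} -> {u.max():.4f}")
```

For these hypothetical values the time step satisfies the usual explicit stability restriction, so the forward run needs no smoothing. The point of the compensating operator S is that the same step can then be taken with negative Δt, or with much larger |Δt|, without blow-up.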

Figure 1. Using precomputed input data at time , shown in middle column, nonlinear explicit scheme in Equation (8), run backward in time, seeks to recover true initial data shown in leftmost column. Actually recovered data are shown in rightmost column. Note that maximum values in middle column are much larger than in leftmost column, and maximum values in the rightmost column are slightly smaller than in leftmost column.

Next, we consider the backward problem. Here, we use the previously computed values at time as input data, and apply the stabilized explicit scheme in Equation (38). With a uniform grid on the unit square Ω, FFT algorithms are the natural tool to use in synthesizing the smoothing operator S which is based on

. After a few interactive parameter trials, values of

, together with p=3.0, were arrived at. Because of the S-induced unconditional stability, it was possible to use a value of

five times larger in the backward computation, namely,

. The resulting recovered initial values are plotted in the right column of Figure 1. The maximum values in the recovered data are 46.7, as compared to the true values of 50.0.

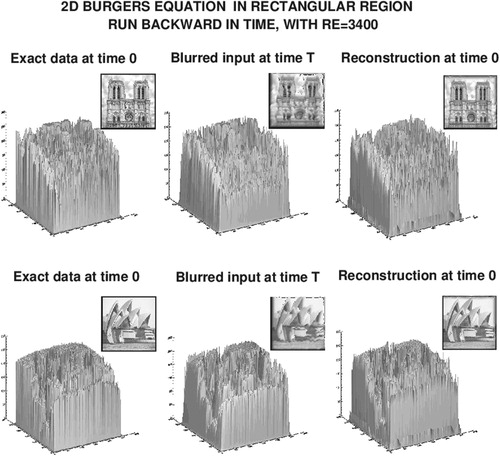

5.2. Image experiment at

Our next experiment, illustrated in Figures 2 and 3, involves pixel grey scale images, defined on the unit square Ω. As is well known, many natural images are generated by highly non-smooth intensity data. Use of such data in ill-posed time-reversed evolution equations presents significant challenges to any reconstruction algorithm. At the same time, the use of images is particularly instructive as it enables visualizing the distortion produced by the forward evolution, together with the subsequent attempt at undoing that distortion by marching backward in time.

Figure 2. Using precomputed input data at time , shown in middle column, nonlinear explicit scheme in Equation (8), run backward in time, seeks to recover the true images shown in leftmost column. Actually recovered images are shown in rightmost column. Notice severe wavy distortion of sea wall in blurred Sydney Opera House image, shown at bottom in middle column, and its successful reconstruction in bottom rightmost image.

Figure 3. Backward recovery of the underlying intensity data that generate the images in the reconstruction experiment shown in Figure 2.

Here, the 2D Burgers' system in Equation (35) is used, with

, the kinematic viscosity

, and homogeneous boundary conditions on

. The initial values are the intensity data, shown in the leftmost column of Figure 3, that define the images shown in the leftmost column of Figure 2. These intensities range from 0 to 255. Accordingly, with

we have

in this experiment.

With , and S chosen as the identity operator in Equation (38), stable computation of the forward problem on a uniform grid with

, produced the blurred images shown in the middle column of Figure 2.

In the absence of the leftmost and rightmost columns in Figure 2, the images in the middle column of that figure, when magnified, are almost unrecognizable, due to the severe blurring caused by the forward evolution. In particular, the sea wall surrounding the Sydney Opera House develops a striking wavy pattern in the bottom middle image, reflecting some form of turbulence associated with Burgers' equation. Such severe distortion is noteworthy, as each of and RE, are one order of magnitude smaller than was the case in the previous Gaussian data experiment.

Next, using the intensity data in the middle column of Figure 3 as input at , we apply the stabilized explicit scheme in Equation (38) to march backward in time. With

, in the FFT-synthesized smoothing operator S, and a value of

three times larger than in the forward problem, we obtain the images shown in the rightmost column of Figure 2. These are the images defined by the recovered intensity data shown in the rightmost column of Figure 3. Evidently, credible reconstruction has been achieved, although the recovered images and data differ qualitatively and quantitatively from the exact values. However, such discrepancies are to be expected in ill-posed continuation from noisy data.

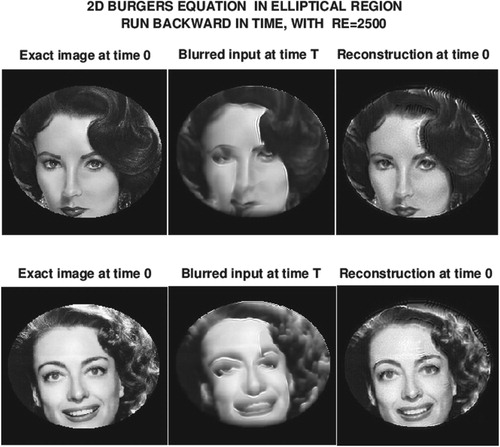

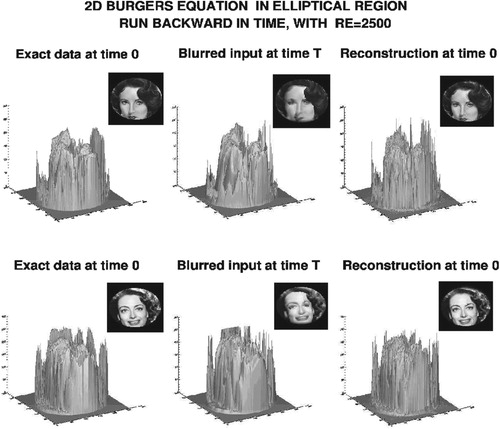

5.3. Image experiment in non-rectangular region at

In rectangular regions Ψ, the Fast Fourier Transform is an efficient tool for synthesizing (−Δ)^p for positive non-integer p. This was used to advantage in the previous two experiments. However, as was shown in [Citation25, Citation26], and will be shown again below, FFT Laplacian smoothing may be feasible for the 2D Burgers' equation in non-rectangular regions Ω, with zero Dirichlet data on an assumed smooth boundary

. Enclosing Ω in a rectangle Ψ, a uniform grid is imposed on Ψ, fine enough to sufficiently well approximate

The discrete boundary

consisting of the grid points closest to

is then used in place of

. We use centred finite differencing for the spatial derivatives in

in Equation (35). At each time step n in Equation (38), after applying the operators

to

respectively, on

, these discrete functions are then extended to all of Ψ by defining them to be zero on the grid points in

. FFT algorithms are then applied on Ψ to synthesize S in Equation (38), and produce

Retaining only the values of these discrete functions on Ω, the process is repeated at the next time step.
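The extend, smooth and restrict cycle described above can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: the multiplier has the assumed form exp(−2ω|Δt|λ^p) with λ = 4π²ν(j² + k²), the region is a hypothetical ellipse whose semi-axes are chosen so that its area is close to 0.544, and the random field stands in for the result of the explicit update on Ω.

```python
import numpy as np

def elliptical_mask(n, a, b):
    """Boolean mask of cell centres inside an ellipse centred in the unit square."""
    x = (np.arange(n) + 0.5) / n
    X, Y = np.meshgrid(x, x, indexing="ij")
    return ((X - 0.5) / a) ** 2 + ((Y - 0.5) / b) ** 2 < 1.0

def masked_smooth(w, mask, sigma):
    """Zero-extend w to the enclosing square, smooth by FFT, keep values in the region."""
    extended = np.where(mask, w, 0.0)
    smoothed = np.real(np.fft.ifft2(sigma * np.fft.fft2(extended)))
    return np.where(mask, smoothed, 0.0)

# Hypothetical parameter values.
n, nu, omega, p, dt = 128, 0.05, 1.0e-6, 3.0, -1.0e-5
freqs = np.fft.fftfreq(n, d=1.0 / n)
jj, kk = np.meshgrid(freqs, freqs, indexing="ij")
sigma = np.exp(-2.0 * omega * abs(dt) * (4.0 * np.pi**2 * nu * (jj**2 + kk**2)) ** p)

mask = elliptical_mask(n, a=0.45, b=0.385)      # pi*a*b is about 0.544
rng = np.random.default_rng(0)
w = rng.uniform(0.0, 255.0, size=(n, n))        # stand-in for the updated field on the region
w_next = masked_smooth(w, mask, sigma)

area = mask.mean()                              # fraction of the unit square covered
print(f"discrete area of region: {area:.3f}")
```

Because the multiplier never exceeds 1 and the restriction discards values, the cycle cannot increase the discrete L2 norm of the field on Ω.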

The last experiment, illustrated in Figures 4 and 5, involves recognizable face images defined on an elliptical domain Ω, with area A=0.544, enclosed in the unit square Ψ. As before, the kinematic viscosity and

With

, we now have

. A

grid was placed on Ψ. As in the previous experiment, with S the identity operator,

, stable forward computation in Equation (38), using the intensity data shown in first column of Figure 5, produced the blurred images in the middle column of Figure 4, and the intensity data in the middle column of Figure 5. Although not as severe as in Figure 2, quite noticeable blurring is evident in Figure 4.

Figure 4. Using precomputed input data at time , shown in middle column, nonlinear explicit scheme in Equation (8), run backward in time, seeks to recover the true images shown in leftmost column. Actually recovered images are shown in rightmost column.

Figure 5. Backward recovery of the underlying intensity data that generate the images in the reconstruction experiment shown in Figure 4.

Next, using the intensity data in the middle column of Figure 5 as input at , we apply the stabilized explicit scheme in Equation (38) to march backward in time, using the FFT strategy outlined in the first paragraph. With

in the smoothing operator S, and a value of

10 times larger than in the forward problem, we obtain the images shown in the rightmost column of Figure 4. These are the images defined by the recovered intensity data shown in the rightmost column of Figure 5. Again, quite good reconstructions are obtained, despite inevitable discrepancies between the exact and recovered data and images in Figures 4 and 5.

6. Concluding remarks

To the author's knowledge, successful backward in time computations in the 2D Burgers' equation, have not previously appeared in the literature. The results obtained here offer a glimpse of what might be feasible, although the stabilized explicit scheme in Equation (8) will generally be useful only in a limited class of problems. The successful first experiment in Section 5, at

, is noteworthy even though relatively simple data were involved. The second experiment, at a much smaller Reynolds number, was instructive in highlighting the severe distortions that can occur with highly complex non-smooth data, and the remarkable backward recovery that yet remains possible. The last experiment is important in illustrating the possible use of FFT-synthesized smoothing operators in non-rectangular regions.

Theoretical error estimates for backward reconstruction from noisy data, such as are given in [Citation15–19], necessarily reflect worst-case error accumulation scenarios, and may be too pessimistic in individual situations. As in [Citation23–26], the use of 8 bit grey scale images provides challenging test examples, as well as an instructive way of exploring the feasibility of backward recovery with various types of complicated non-smooth data.

Disclosure statement

No potential conflict of interest was reported by the author.

References

- Cole JD. On a quasi-linear parabolic equation occurring in aerodynamics. Quart Appl Math. 1951;3:225–236. doi: 10.1090/qam/42889

- Abazari R, Borhanifar A. Numerical study of the solution of the Burgers and coupled Burgers equations by a differential transformation method. Comput Math Appl. 2010;59:2711–2722. doi: 10.1016/j.camwa.2010.01.039

- Zhu H, Shu H, Ding M. Numerical solutions of two-dimensional Burgers' equations by discrete Adomian decomposition method. Comput Math Appl. 2010;60:840–848. doi: 10.1016/j.camwa.2010.05.031

- Srivastava VK, Tamsir M, Bhardwaj U, et al. Crank-Nicolson scheme for numerical solutions of two-dimensional coupled Burgers' equations. Int J Sci Eng Res. 2011;2:1–7.

- Khan M. A novel technique for two dimensional Burgers equation. Alexandria Eng J. 2014;53:485–490. doi: 10.1016/j.aej.2014.01.004

- Wang Y, Navon IM, Wang X, et al. 2D Burgers equation with large Reynolds number using POD/DEIM and calibration. Int J Numer Meth Fluids. 2016;82:909–931. doi: 10.1002/fld.4249

- Zhanlav T, Chuluunbaatar O, Ulziibayar V. Higher-order numerical solution of two-dimensional coupled Burgers' equations. Am J Comput Math. 2016;6:120–129. doi: 10.4236/ajcm.2016.62013

- Ou K, Jameson A. Unsteady adjoint method for the optimal control of advection and Burgers' equation using high order spectral difference method. 49th AIAA Aerospace Science Meeting, 2011 January 4–7; Orlando, FL.

- Allahverdi N, Pozo A, Zuazua E. Numerical aspects of large-time optimal control of Burgers' equation. ESAIM Math Model Numer Anal. 2016;50:1371–1401. doi: 10.1051/m2an/2015076

- Gosse L, Zuazua E. Filtered gradient algorithms for inverse design problems of one-dimensional Burgers' equation. In: Gosse L, Natalini R, editors. Innovative algorithms and analysis. SINDAM Series. Springer; 2017. p. 197–227. doi: 10.1007/978-3-319-49262-9_7

- Lundvall J, Kozlov V, Weinerfelt P. Iterative methods for data assimilation for Burgers' equation. J Inv Ill-Posed Problems. 2006;14:505–535. doi: 10.1515/156939406778247589

- Auroux D, Blum J. A nudging-based data assimilation method for oceanographic problems: the back and forth nudging (BFN) algorithm. Proc Geophys. 2008;15:305–319. doi: 10.5194/npg-15-305-2008

- Auroux D, Nodet M. The back and forth nudging algorithm for data assimilation problems: theoretical results on transport equations. ESAIM:COCV. 2012;18:318–342. doi: 10.1051/cocv/2011004

- Auroux D, Bansart P, Blum J. An evolution of the back and forth nudging for geophysical data assimilation: application to Burgers' equation and comparison. Inverse Probl Sci Eng. 2013;21:399–419. doi: 10.1080/17415977.2012.712528

- Carasso A. Computing small solutions of Burgers' equation backwards in time. J Math Anal App. 1977;59:169–209. doi: 10.1016/0022-247X(77)90100-7

- Hào DN, Nguyen VD, Nguyen VT. Stability estimates for Burgers-type equations backward in time. J Inverse Ill Posed Probl. 2015;23:41–49. doi: 10.1515/jiip-2013-0050

- Knops RJ, Payne LE. On the stability of solutions of the Navier-Stokes equations backward in time. Arch Rat Mech Anal. 1968;29:331–335. doi: 10.1007/BF00283897

- Payne LE, Straughan B. Comparison of viscous flow backward in time with small data. Int J Nonlinear Mech. 1989;24:209–214. doi: 10.1016/0020-7462(89)90039-5

- Knops RJ. Logarithmic convexity and other techniques applied to problems in continuum mechanics. In: Knops RJ, editor. Symposium on non-well-posed problems and logarithmic convexity. Vol. 316, Lecture notes in mathematics. New York (NY): Springer-Verlag; 1973. p. 31–54.

- Lattès R, Lions JL. Méthode de Quasi-Réversibilité et Applications [The method of quasi-reversibility and applications]. Paris: Dunod; 1967.

- Ames KA, Straughan B. Non-standard and improperly posed problems. New York (NY): Academic Press; 1997.

- Carasso AS. Reconstructing the past from imprecise knowledge of the present: effective non-uniqueness in solving parabolic equations backward in time. Math Methods Appl Sci. 2012;36:249–261. doi: 10.1002/mma.2582

- Carasso AS. Stable explicit time-marching in well-posed or ill-posed nonlinear parabolic equations. Inverse Probl Sci Eng. 2016;24:1364–1384. doi: 10.1080/17415977.2015.1110150

- Carasso AS. Stable explicit marching scheme in ill-posed time-reversed viscous wave equations. Inverse Probl Sci Eng. 2016;24:1454–1474. doi: 10.1080/17415977.2015.1124429

- Carasso AS. Stabilized Richardson leapfrog scheme in explicit stepwise computation of forward or backward nonlinear parabolic equations. Inverse Probl Sci Eng. 2017;25:1–24. doi: 10.1080/17415977.2017.1281270

- Carasso AS. Stabilized backward in time explicit marching schemes in the numerical computation of ill-posed time-reversed hyperbolic/parabolic systems. Inverse Probl Sci Eng. 2018;1:1–32. doi: 10.1080/17415977.2018.1446952