Abstract

In this paper, the inverse problem of identifying the initial value of a time-fractional diffusion-wave equation on a spherically symmetric region is considered. The exact solution of this problem is obtained by using the method of separation of variables and the properties of the Mittag–Leffler functions. This problem is ill-posed, i.e. the solution (if it exists) does not depend continuously on the measured data. Three different Landweber iterative methods are used to solve this problem. Under a priori and a posteriori regularization parameter choice rules, error estimates between the exact solution and the regularization solutions are obtained. Several numerical examples are given to demonstrate the effectiveness of these regularization methods.

1. Introduction

Nowadays, fractional derivatives are found to have many advantages in solving practical problems, for example in medical engineering [Citation1], chemistry and biochemistry [Citation2], finance and economics [Citation3–6], and inverse scattering [Citation7]. Many results have been obtained for the direct problems [Citation8–12] of fractional differential equations. However, in practical problems, the initial value, the source term, the diffusion coefficient, or part of the boundary value [Citation13,Citation14] is unknown and has to be recovered from measurement data, which leads to the inverse problem for the fractional diffusion equation. The study of the fractional diffusion equation is still at an early stage; its direct problem has been studied in [Citation15–17].

For the inverse problem of the time-fractional diffusion equation, there are many research results. For identifying the unknown source, see [Citation18–26]. For the backward heat conduction problem, see [Citation27–34]. For identifying the initial value, see [Citation35–37]. For an inverse unknown coefficient problem of a time-fractional equation, see [Citation38,Citation39]. For identifying the source term and the initial data simultaneously in a time-fractional diffusion equation, see [Citation40,Citation41]. For identifying some unknown parameters in a time-fractional diffusion equation, see [Citation42]. For the inverse problem for the heat equation on a columnar axis-symmetric domain, see [Citation43–48]. In [Citation43–45], a Landweber regularization method, a simplified Tikhonov regularization method and a spectral method are used to identify the source term on a columnar axis-symmetric domain. In [Citation46–48], the authors considered a backward problem on a columnar axis-symmetric domain. In [Citation46], Yang et al. used the quasi-boundary value regularization method to determine the initial value of the heat equation with an inhomogeneous source on a columnar symmetric domain; error estimates between the regularized solution and the exact solution were obtained under the corresponding regularization parameter selection rules, and a numerical example verified that the method is effective for this inverse problem. In [Citation47], Cheng et al. used the modified Tikhonov regularization method to deal with the inverse time problem for an axisymmetric heat equation and obtained a Hölder-type error estimate between the approximate solution and the exact solution. In [Citation48], Djerrar et al. used the standard Tikhonov regularization method to deal with an axisymmetric inverse problem for the heat equation inside a cylinder, and numerical examples were used to show that the method is effective and stable. For the inverse problem for the time-fractional diffusion equation on columnar and spherically symmetric domains, see [Citation49,Citation50]. In [Citation49], Xiong proposed a backward problem model of a time-fractional diffusion-heat equation on a columnar axis-symmetric domain. Yang et al. in [Citation50] used the Landweber iterative regularization method to identify the initial value of a time-fractional diffusion equation on a spherically symmetric domain.

Compared with the inverse problem for the time-fractional diffusion equation, there are few research results on the inverse problem for the time-fractional diffusion-wave equation. Šišková et al. in [Citation51] used the regularization method to solve the inverse source problem of a time-fractional diffusion-wave equation. Liao et al. in [Citation52] used the conjugate gradient method combined with Morozov's discrepancy principle to identify the unknown source for the time-fractional diffusion-wave equation. Šišková et al. in [Citation53] used the regularization method to deal with an inverse source problem for a time-fractional wave equation. Gong et al. in [Citation54] used a generalized Tikhonov method to identify the time-dependent source term in a time-fractional diffusion-wave equation.

In recent years, the inversion of initial boundary value problems has been a hot topic in physical oceanography and global meteorology. To increase the accuracy of numerical weather prediction, the model is usually combined with observation data through the inversion of initial boundary value problems, which provides a reasonable initial field for the prediction model. At present, the initialization of many domestic and foreign ocean circulation models, atmospheric general circulation models, numerical weather prediction models and torrential rain forecasting models belongs to the inversion of initial boundary value problems, so the application prospects of research on such problems are very broad. Yang et al. in [Citation55] used the truncated regularization method to solve the inverse initial value problem of the time-fractional inhomogeneous diffusion-wave equation. Yang et al. in [Citation56] used the Landweber iterative regularization method to identify the initial value of a space time-fractional diffusion-wave equation. Wei et al. in [Citation57] used the Tikhonov regularization method to solve the inverse initial value problem of the time-fractional diffusion-wave equation. Wei et al. in [Citation58] used the conjugate gradient algorithm combined with the Tikhonov regularization method to identify the initial value of the time-fractional diffusion-wave equation. Until now, there are few papers on the inverse problem for the time-fractional diffusion-wave equation on a columnar axis-symmetric domain or a spherically symmetric domain. In [Citation59], the authors used the Landweber iterative method to solve an inverse source problem of the time-fractional diffusion-wave equation on a spherically symmetric domain. It is assumed that the grain has a spherically symmetric diffusion geometry, as illustrated in Figure (a-b), which is consistent, from a physical point of view, with laboratory measurements of helium diffusion from apatite. As a consequence of radiogenic production and diffusive loss, the unknown function, which depends only on the spherical radius r and the time t, denotes the concentration of helium. For the inverse problem of identifying the initial value on a spherically symmetric region, there are few research results at present. Therefore, in this paper, we consider the inverse problem of identifying the initial value of the time-fractional diffusion-wave equation on a spherically symmetric region and give three regularization methods for this inverse problem, in order to find an effective regularization method.

In this paper, we consider the following problem:

(1)

(1) where

is the radius,

is the Caputo fractional derivative

, it is defined as

(2)

The existence and uniqueness of the solution of the direct problem have been proved in [Citation60]. The inverse problem is to use the measurement data

and the known function

to identify the unknown initial data

,

. The inverse initial value problem can be transformed into two cases:

| (IVP1): | Assuming | ||||

| (IVP2): | Assuming | ||||

Because the measurements contain errors, we denote the measurements with error by and

and satisfy

(3)

(3)

(4)

(4) where

. In this paper,

represents the Hilbert space of Lebesgue measurable functions with weight

on the interval

.

and

represent the inner product and norm of the space of

, respectively.

is defined as follows:

(5)

This paper is organized as follows. In Section 2, we recall and state some preliminary theoretical results. In Section 3, we analyse the ill-posedness of the problem (IVP1) and the problem (IVP2), and give the conditional stability results. In Section 4, we give the a priori and a posteriori error estimates for the three regularization methods. In Section 5, we conduct some numerical tests to show the validity of the proposed regularization methods. Most solutions of fractional partial differential equations contain special functions (Mittag–Leffler functions), whose calculation is quite difficult; in this paper, these difficulties are overcome by means of [Citation61,Citation62]. Finally, we give some concluding remarks.
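As a rough illustration of why these functions need care, the following minimal sketch evaluates the two-parameter Mittag–Leffler function, defined by the series of z^k / Γ(αk + β) over k ≥ 0, with a truncated power series. It is not the algorithm of [Citation61,Citation62]; the function name `mittag_leffler`, the truncation parameters and the restriction to real arguments of moderate size are assumptions made here purely for illustration.

```python
import math


def mittag_leffler(alpha, beta, z, max_terms=200, tol=1e-15):
    """Truncated series E_{alpha,beta}(z) = sum_k z**k / Gamma(alpha*k + beta).

    Assumes alpha > 0, beta > 0 and a real z of moderate size. For large |z|
    the alternating series loses accuracy, which is one reason why dedicated
    algorithms are used in practice.
    """
    if alpha <= 0 or beta <= 0:
        raise ValueError("this sketch assumes alpha > 0 and beta > 0")
    total = 0.0
    for k in range(max_terms):
        if z == 0.0:
            term = 1.0 / math.gamma(beta) if k == 0 else 0.0
        else:
            # Work with logarithms so that Gamma(alpha*k + beta) cannot overflow.
            log_mag = k * math.log(abs(z)) - math.lgamma(alpha * k + beta)
            sign = -1.0 if (z < 0 and k % 2 == 1) else 1.0
            term = sign * math.exp(log_mag)
        total += term
        # Stop once the current term no longer changes the partial sum.
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total
```

Two classical special cases give a quick sanity check: for α = β = 1 the series is the exponential function, and for α = 2, β = 1 with argument −z² it is cos z.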

2. Preliminary results

In this section, we give some important lemmas.

Lemma 2.1

[Citation57]

If , and

be arbitrary. Suppose μ satisfies

. Then there exists a constant

such that

(6)

(6)

Lemma 2.2

[Citation57]

For ,

and

, we have

(7)

(7)

Lemma 2.3

For ,

and

are relaxation factors and satisfy

,

,

,

, we have

(8)

(8)

(9)

(9)

Proof.

Refer to the appendix for the details of the proof.

Lemma 2.4

For ,

,

,

,

,

, we have

(10)

(10)

(11)

(11) where the constant

is given by

and

is given by

.

Proof.

Refer to the appendix for the details of the proof.

Lemma 2.5

For and any fixed T>0, there is at most a finite index set

such that

for

and

for

. Meanwhile there is at most a finite index set

such that

for

and

for

.

Proof.

From Lemma 2.2, we know that there exists such that

for

, thus we know

only if

. Since

, there are only finitely many

satisfying

. The proof for

is similar.

Remark 2.1

The index sets and

may be empty, which means that the singular values of the operators

and

are nonzero. Here and below, the results for

and

are regarded as special cases.

Lemma 2.6

[Citation57]

For and

, there exist positive constants

,

depending on α, T such that

(12)

(12)

(13)

(13)

Lemma 2.7

For ,

,

,

,

,

, we have

(14)

(14)

(15)

(15) where

and

.

Proof.

Refer to the appendix for the details of the proof.

Lemma 2.8

For ,

,

,

,

,

, we have

(16)

(16)

(17)

(17) where

and

.

Proof.

Refer to the appendix for the details of the proof.

Lemma 2.9

For ,

,

,

,

, we have

(18)

(18)

(19)

(19)

Proof.

Refer to the appendix for the details of the proof.

Lemma 2.10

For ,

, p>0,

,

, we have

(20)

(20)

(21)

(21) where

and

.

Proof.

Refer to the appendix for the details of the proof.

Lemma 2.11

For ,

, p>0,

,

, we have

(22)

(22)

(23)

(23) where

and

.

Proof.

Refer to the appendix for the details of the proof.

3. The ill-posedness and the conditional stability

Define

(24)

(24) where

is the inner product in

, then

is a Hilbert space with the norm

and

Theorem 3.1

Let ,

, then there exists a unique weak solution of (1), and it is given by

(25)

(25) where

and

are the Fourier coefficients.

Letting t = T in (25), we have

Denote

and

Then we have

(26)

(26) and

(27)

(27) Now we put the definitions of

and

into (26) and (27); then the problems (IVP1) and (IVP2) become the following integral equations

(28)

(28) where the integral kernel is

(29)

(29) And

(30)

(30) where the integral kernel is

(31)

By [Citation59], we know that the linear operators

and

are compact from

to

. Since the inverse of a compact operator on an infinite-dimensional space is unbounded, the problems (IVP1) and (IVP2) are ill-posed.

Let be the adjoint of

and

be the adjoint of

. Since

is an orthonormal system with weight

in

, it is easy to verify

and

Hence, the singular values of

are

. Define

(32)

(32) It is clear that

are orthonormal in

, and we can verify

(33)

(33)

(34)

(34) Therefore, the singular system of

is

.

By a similar verification, we know that the singular system of is

, where

and

(35)

In the following, the integral kernels given in (29) and (31) are rewritten as

(36)

(36)

(37)

(37) It is not hard to prove that the kernel spaces of the operators

and

are

and the ranges of the operators

and

are

Therefore, we have the following existence of the solutions for the integral equations:

Theorem 3.2

If , for any

, there exists a unique solution in

for the integral equation (28), given by

(38)

(38) If

, for any

, there exist infinitely many solutions of the integral equation (28), but only one best approximate solution in

as

(39)

(39)

Proof.

Suppose , put

into (28); by the orthonormality of

, it is not hard to obtain the results.

Theorem 3.3

If , for any

, there exists a unique solution in

for the integral equation (30), given by

(40)

(40) If

, for any

, there exist infinitely many solutions of the integral equation (30), but only one best approximate solution, given by

(41)

(41)

Proof.

The proof is similar to that of Theorem 3.2.

We have the following theorem on conditional stability:

Theorem 3.4

When satisfies the a-priori bound condition

(42)

(42) where

and p are positive constants, we have

(43)

(43) where

is a constant.

Proof.

By (39) and the Hölder inequality, we have

(44)

Applying Lemma 2.6 and (39), we obtain

(45)

Combining (44) and (45), we get

Theorem 3.5

As satisfies a-priori bound condition

(46)

(46) where

and p are positive constants, then

(47)

(47) where

is a constant.

Proof.

The proof is similar to that of Theorem 3.4, so it is omitted.

4. Regularization method and error estimation

Referring to [Citation59,Citation64,Citation65], we find a classical Landweber regularization method and two fractional Landweber iterative regularization methods, but it has not been explained which of these methods is better. So, in this section, we use the two fractional Landweber regularization methods and the classical Landweber regularization method to solve the problems (IVP1) and (IVP2). The error estimates between the exact solution and the corresponding regularization solutions are given for each method. By applying the three regularization methods to the same problem, we can compare them and identify the most effective one.

By [Citation64], the fractional Landweber regularization solution is given as follows:

(48)

(48) and

(49)

(49) By [Citation59], the Landweber regularization solution is given as follows:

(50)

(50) and

(51)

(51) By [Citation65], the modified iterative regularization solution is given as follows:

(52)

(52) and

(53)

(53) where

,

are relaxation factors and satisfy

,

,

.
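To make the structure of such iterations concrete, the sketch below runs the classical Landweber iteration, x ← x + a K*(g − K x), for an operator that has already been diagonalised by its singular system, and compares the result with the equivalent closed-form filter. The zero starting guess, the names `sigma`, `g_coeffs`, `a`, `m` and the exponent `gamma` are assumptions of this sketch; in particular, the filter with `gamma` different from 1 is shown only as one possible 'fractional' variant and is not claimed to reproduce formulas (48)–(53).

```python
def landweber_spectral(sigma, g_coeffs, a, m):
    """Run m Landweber steps x <- x + a*K^*(g - K x) in a singular basis.

    sigma    : singular values sigma_n > 0 of the diagonalised operator K
    g_coeffs : expansion coefficients of the data in the singular basis
    a        : relaxation factor, assumed to satisfy 0 < a * max(sigma)**2 < 1
    m        : number of iterations (the regularization parameter)
    """
    x = [0.0] * len(sigma)  # zero starting guess (an assumption of this sketch)
    for _ in range(m):
        x = [xn + a * s * (gn - s * xn) for xn, s, gn in zip(x, sigma, g_coeffs)]
    return x


def landweber_filter(sigma, g_coeffs, a, m, gamma=1.0):
    """Closed form of the iterate: [1 - (1 - a*s**2)**m]**gamma * g_n / s.

    gamma = 1 reproduces the classical Landweber iterate exactly; any other
    value is a purely illustrative fractional filter.
    """
    return [(1.0 - (1.0 - a * s * s) ** m) ** gamma * gn / s
            for s, gn in zip(sigma, g_coeffs)]
```

In this form the role of the iteration number is visible directly: each coefficient of the data is multiplied by a factor that tends to 1/σ as m grows, and components with small singular values are recovered last, which is what damps the noise for moderate m.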

4.1. The priori error estimate

Lemma 4.1

Suppose and

be

, there is a constant

to make:

where

.

Proof.

From Lemma 2.1, we have

Thus,

where

. We complete the proof of Lemma 4.1.

Remark 4.1

Suppose

From Lemma 4.1, (3) and (4), we easily know

.

Define an orthogonal projection operator , combining Equations (3) and (4), we have:

Define an orthogonal projection operator

, combining Equations (3) and (4), we have:

Theorem 4.1

Let . If the a priori condition (42) and Lemma 4.1 hold, we have the following convergence estimate

(54)

(54) where

, and

denotes the largest integer smaller than or equal to x.

Proof.

Using the triangle inequality, we have

From (8) and Remark 4.1, we have

Then we can get

(55)

(55) For the second part,

(56)

(56) where

.

From (10), we have

(57)

Combining (55), (56) and (57), Theorem 4.1 is proved.

Theorem 4.2

Let . If the a priori condition (46) and Lemma 4.1 hold, we have the following convergence estimate

(58)

(58) where

, and

denotes the largest integer smaller than or equal to x.

Proof.

The proof process is similar to Theorem 4.1, so it is omitted.

Theorem 4.3

Let . If the a priori condition (42) and Lemma 4.1 hold, we have the following convergence estimate

(59)

(59) where

, and

denotes the largest integer smaller than or equal to x.

Proof.

By the triangle inequality, we have

On the one hand, from Lemma 4.1, we have

where

.

From Bernoulli inequality, we can deduce that

Thus

(60)

(60) On the other hand, using the a-priori bound condition, we can deduce that

(61)

(61) where

.

From (16), we have

(62)

Combining (60), (61) and (62), Theorem 4.3 is proved.

Theorem 4.4

Let . If the a priori condition (46) and Lemma 4.1 hold, we have the following convergence estimate

(63)

(63) where

, and

denotes the largest integer smaller than or equal to x.

Proof.

The proof process is similar to Theorem 4.3, so it is omitted.

Theorem 4.5

Let . If the a priori condition (42) and Lemma 4.1 hold, we have the following convergence estimate

(64)

(64) where

, and

denotes the largest integer smaller than or equal to x.

Proof.

By the triangle inequality, we have

(65)

(65) On the one hand, we have

By (18) and Lemma 4.1, we have

(66)

(66) On the other hand, we have

From (16), we have

(67)

Combining (65), (66) and (67), Theorem 4.5 is proved.

Theorem 4.6

Let . If the a priori condition (46) and Lemma 4.1 hold, we have the following convergence estimate

(68)

(68) where

, and

denotes the largest integer smaller than or equal to x.

Proof.

The proof process is similar to Theorem 4.5, so it is omitted.

4.2. The posteriori error estimate

Assume be the constant, the selection of

is that when

satisfying

(69)

(69) appears for the first time, the iteration stops, where

.
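A stopping rule of this kind can be sketched in a few lines: iterate, monitor the residual, and stop at the first index for which it drops below a multiple of the noise level. The example below does this for a classical Landweber iteration written in a singular basis. It assumes the usual Morozov form of the criterion (residual at most τδ with τ > 1); the names `sigma`, `g_delta`, `tau`, `delta` and the zero starting guess are assumptions of the sketch rather than the notation of (69).

```python
import math


def landweber_discrepancy(sigma, g_delta, a, delta, tau=1.1, max_iter=100000):
    """Classical Landweber iteration stopped by a discrepancy criterion.

    Returns (x, m), where m is the first iteration index at which the
    residual ||K x_m - g_delta|| is at most tau * delta.
    """
    x = [0.0] * len(sigma)  # zero starting guess (an assumption of this sketch)
    for m in range(max_iter + 1):
        residual = math.sqrt(sum((s * xn - gn) ** 2
                                 for s, xn, gn in zip(sigma, x, g_delta)))
        if residual <= tau * delta:
            return x, m
        # One Landweber step in the singular basis.
        x = [xn + a * s * (gn - s * xn) for xn, s, gn in zip(x, sigma, g_delta)]
    raise RuntimeError("discrepancy criterion not reached within max_iter steps")
```

Because the criterion only uses the noisy data and the noise level, no smoothness information about the exact solution is needed to choose the iteration number, which is the practical appeal of an a posteriori rule.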

Lemma 4.2

Assume , then

is a continuous function;

;

;

is strictly decreasing for any

.

Theorem 4.7

If (3) and (4) hold, then the regularization parameter

satisfies

(70)

(70) where

.

Proof.

From (48), we have

For

, we have

Since

, we have

.

From the Morozov's discrepancy principle, we obtain

Using the a priori bound condition, we have

where

.

So we have . From (20), we obtain

So we have

Then we obtain

The convergence result is given in the following theorem.

Theorem 4.8

Assuming that Lemma 4.1 and (39) are valid, and that the regularization parameter is given by (70), then

(71)

(71) where

.

Proof.

Using the triangle inequality, we have

(72)

(72) From Theorem 4.1, we have

(73)

For the second part, combining (33) and

, we have

From (3), (4) and Morozov's discrepancy principle, we have

Since

Combining Theorem 3.4 and (70), we have

(74)

Combining (72), (73) and (74), we obtain the convergence estimate.

Assuming that is the given constant, the selection of

is that when

satisfying

(75)

(75) appears for the first time, the iteration stops, where

.

Lemma 4.3

Assume , then

is a continuous function;

;

;

is strictly decreasing for any

.

Theorem 4.9

If (3) and (4) hold, then the regularization parameter

satisfies:

(76)

(76) where

.

Proof.

The proof process is similar to Theorem 4.7, so it is omitted.

Theorem 4.10

Assuming that (3), (4) and (41) are valid, and that the regularization parameter is given by (76), then we have

(77)

(77) where

.

Proof.

The proof process is similar to Theorem 4.8, so it is omitted.

Assuming that is the given constant, the selection of

is that when

satisfying

(78)

(78) appears for the first time, the iteration stops, where

.

Lemma 4.4

Assuming , then

is a continuous function;

;

;

is strictly decreasing for any

.

Theorem 4.11

If (3) and (4) hold, then the regularization parameter

satisfies:

(79)

(79) where

.

Proof.

From (50), we have

For

, we have

Since

, we have

.

On the one hand, we have

On the other hand, from the a-priori bound condition, we know

where

.

So, we have . Thus we have

and

The convergence result is given in the following theorem.

Theorem 4.12

Assuming that (3), (4) and (39) are valid, and that the regularization parameter is given by (79), then we have

(80)

(80) where

.

Proof.

By the triangle inequality, we have

(81)

For the first part, combining Theorem 4.3 and (79), we have

(82)

(82) For the second part, we have

From (3), (4) and Morozov's discrepancy principle, we have

Since

Under the a priori bound condition of

, we obtain

(83)

Combining (81), (82) and (83), we obtain the convergence estimate.

Assuming that is the constant, the selection of

is that when

satisfying

(84)

(84) appears for the first time, the iteration stops, where

.

Lemma 4.5

Assuming , then

is a continuous function;

;

;

is strictly decreasing for any

.

Theorem 4.13

If (3) and (4) hold, then the regularization parameter

satisfies

(85)

(85) where

.

Proof.

The proof process is similar to Theorem 4.11, so it is omitted.

Theorem 4.14

Assuming that (3), (4) and (41) are valid, and that the regularization parameter is given by (85), then we have

(86)

(86) where

.

Proof.

The proof process is similar to Theorem 4.12, so it is omitted.

Assuming that is the given constant, the selection of

is that when

satisfying

(87)

(87) appears for the first time, the iteration stops, where

.

Lemma 4.6

Assuming , then

is a continuous function;

;

;

is strictly decreasing for any

.

Theorem 4.15

For ,

,

, then we hold

(88)

(88) where

.

Proof.

From (52), we have

then

which implies

.

On the one hand, we have

On the other hand, we obtain

where

.

From Lemma 2.6, we have

From (22), we know

Then we can deduce that

Theorem 4.16

Assuming that (3), (4) and (39) are valid, and that the regularization parameter is given by (88), then

(89)

(89) where

.

Proof.

By the triangle inequality, we have

(90)

(90) For the first part, combining Theorem 4.5, we have

(91)

(91) For the second part, we have

From (3), (4) and Morozov's discrepancy principle, we have

Since

Under the a priori bound condition of

, we have

(92)

Combining (90), (91) and (92), we obtain the convergence estimate.

Assuming that is the given constant, the selection of

is that when

satisfies

(93)

(93) appears for the first time, the iteration stops, where

.

Lemma 4.7

Assuming , then

is a continuous function;

;

;

is strictly decreasing for any

.

Theorem 4.17

If (3) and (4) hold, then the regularization parameter

satisfies

(94)

(94) where

.

Proof.

The proof process is similar to Theorem 4.15, so it is omitted.

Theorem 4.18

Assuming that (3), (4) and (41) are valid, and that the regularization parameter is given by (94), then we have

(95)

(95) where

.

Proof.

The proof process is similar to Theorem 4.16, so it is omitted.

5. Numerical implementation and numerical examples

In this section, we present numerical examples, computed in Matlab [Citation70,Citation71], to illustrate the usefulness of the proposed methods. Since the analytic solution of (1) is difficult to obtain, we construct the final data

by solving the forward problem with the given data

and

by the finite difference method.

We choose T = 1, . Let

and

be the step sizes for time and space variables, respectively. The grid points in the time interval are labelled

,

, the grid points in the space interval are

,

, and set

.

Based on the idea in [Citation61–63,Citation66–69], we approximate the time-fractional derivatives by

(96)

(96) where

and

We approximate the space derivatives by

(97)

(97)

(98)

(98) Next, the measured data is given by the following random form

(99)

(99)

(100)

(100) In order to make the sensitivity analysis for numerical results, we calculate the

error by

(101)

(101) The relative

error is defined by

(102)

(102) And

(103)

(103) The relative

error is defined by

(104)

(104) Denote

. Then we obtain the following iterative scheme

(105)

(105) where

,

, and

is a tridiagonal matrix, here

where

,

, and

,

.

Thus we can obtain the final data by the iterative scheme (105).
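For readers who want to experiment with the time discretization, here is a minimal sketch of one common finite-difference approximation of the Caputo derivative of order α in (1, 2): the second time derivative is replaced by central second differences, and the weakly singular kernel is integrated exactly over each time step. It is not claimed to coincide with formula (96); the function name, the ghost-point treatment of the initial velocity `v0` and the uniform grid are assumptions of this sketch.

```python
import math


def caputo_between_1_and_2(u, tau, alpha, v0):
    """Approximate the Caputo derivative of order alpha in (1, 2) at t_n = n*tau.

    u   : samples u(t_0), ..., u(t_n) on a uniform grid with step tau
    v0  : initial velocity u'(0), used to build the ghost value u(-tau)
    """
    if not 1.0 < alpha < 2.0:
        raise ValueError("alpha must lie strictly between 1 and 2")
    n = len(u) - 1
    if n < 1:
        raise ValueError("at least two samples are required")
    ghost = u[1] - 2.0 * tau * v0      # u(-tau) from a central difference at t_0
    ext = [ghost] + list(u)            # ext[j + 1] holds u(t_j)
    total = 0.0
    for j in range(n):
        # Central second difference at t_j, used on the interval [t_j, t_{j+1}].
        second_diff = (ext[j + 2] - 2.0 * ext[j + 1] + ext[j]) / tau ** 2
        k = n - j - 1
        # Exact integral of the kernel (t_n - s)**(1 - alpha) over that interval,
        # up to the common factor tau**(2 - alpha) / (2 - alpha).
        weight = (k + 1) ** (2.0 - alpha) - k ** (2.0 - alpha)
        total += weight * second_diff
    return tau ** (2.0 - alpha) / math.gamma(3.0 - alpha) * total
```

For the test function u(t) = t², whose second differences are exact, the sketch reproduces the known value 2 t^(2−α) / Γ(3−α) up to rounding, which is a convenient way to check an implementation before using it inside a scheme for the forward problem.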

For the regularized problem, we can also use the finite difference scheme to discretize (48).

In the computational procedure, we take p = 1. In the discretization, we take M = 100, N = 50 to compute the direct problem. We use the dichotomy (bisection) method to solve (69) and obtain the a posteriori regularization parameter, where

.
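Since the residual is decreasing in the iteration number (see Lemma 4.2), the first admissible index can be located by bisection instead of stepping through every iterate. The following sketch illustrates this for the classical Landweber filter written in a singular basis, where the residual after m steps has a closed form. The function names, the threshold τδ and the search bound `m_max` are assumptions made for illustration and are not the authors' Matlab implementation.

```python
import math


def residual(sigma, g_delta, a, m):
    """Closed-form Landweber residual ||K x_m - g_delta|| in a singular basis.

    With a zero starting guess, each data coefficient g_n is damped by the
    factor (1 - a * sigma_n**2)**m after m iterations.
    """
    return math.sqrt(sum(((1.0 - a * s * s) ** m * gn) ** 2
                         for s, gn in zip(sigma, g_delta)))


def first_index_by_bisection(sigma, g_delta, a, delta, tau=1.1, m_max=10 ** 6):
    """Smallest integer m with residual(m) <= tau * delta, found by bisection."""
    target = tau * delta
    if residual(sigma, g_delta, a, 0) <= target:
        return 0
    if residual(sigma, g_delta, a, m_max) > target:
        raise ValueError("no admissible index up to m_max")
    lo, hi = 0, m_max  # invariant: residual(lo) > target >= residual(hi)
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if residual(sigma, g_delta, a, mid) <= target:
            hi = mid
        else:
            lo = mid
    return hi
```

The bisection needs only about log2(m_max) residual evaluations, which matters when a single evaluation requires solving a discretized forward problem rather than evaluating a closed-form expression as in this sketch.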

Example 5.1

Take function .

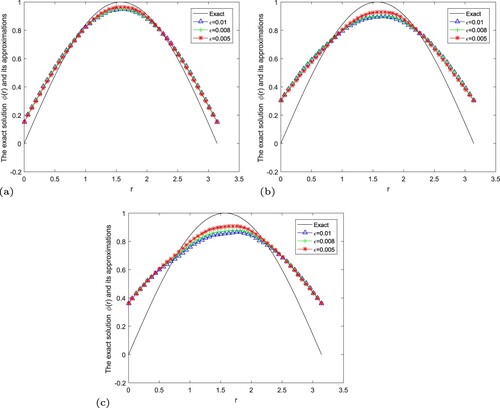

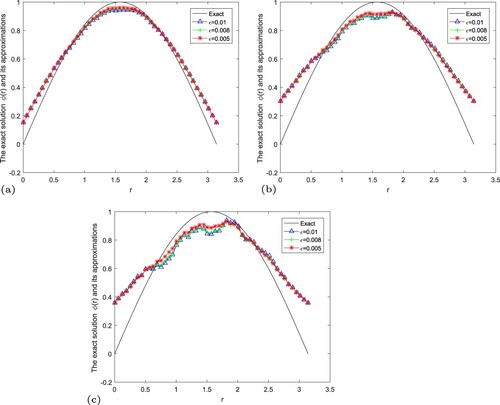

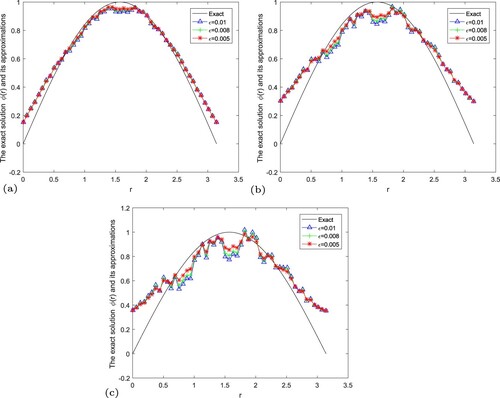

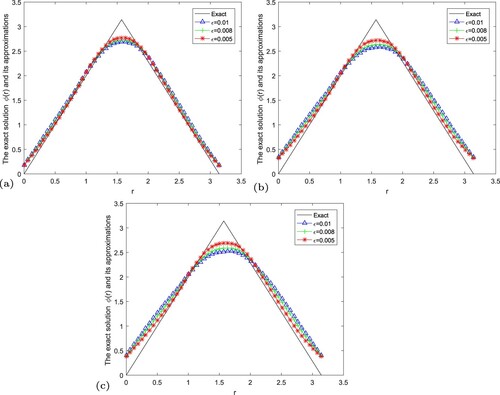

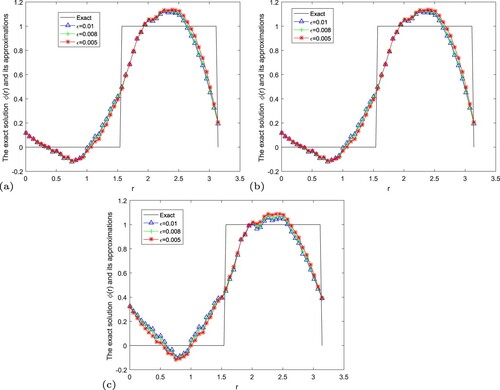

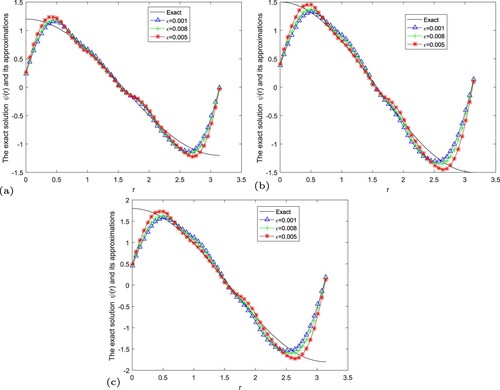

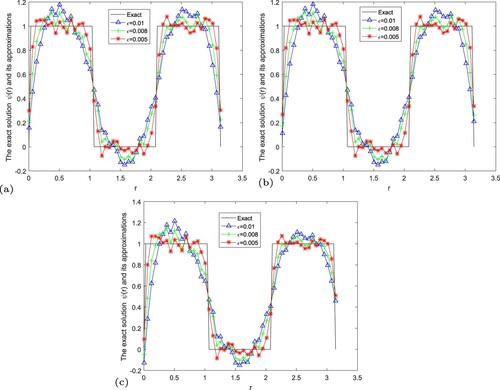

In Figures –, we give numerical results of Example 5.1 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

Figure 1. The exact solution and regular solution of Landweber regularization method by using the a posteriori parameter choice rule for Example 5.1. (a) , (b)

, (c)

.

Figure 2. The exact solution and regular solution of fractional Landweber regularization method by using the a posteriori parameter choice rule for Example 5.1. (a) , (b)

, (c)

.

Figure 3. The exact solution and regular solution of modified iterative regularization method by using the a posteriori parameter choice rule for Example 5.1. (a) , (b)

, (c)

.

Example 5.2

Take function

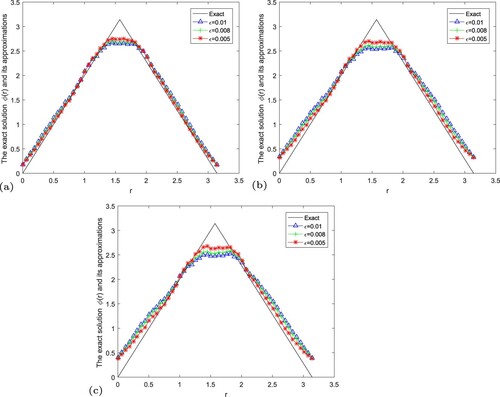

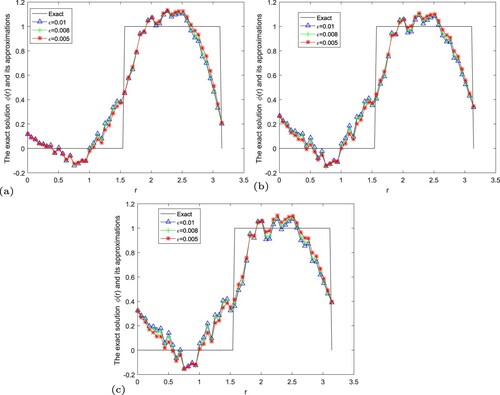

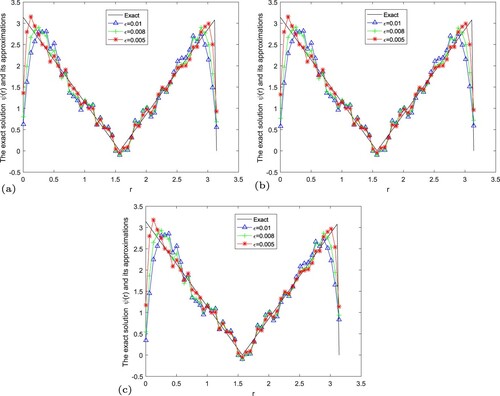

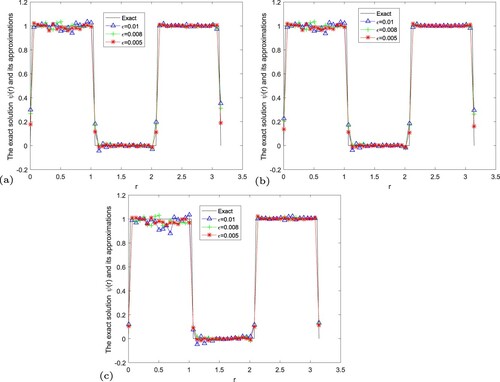

In Figures –, we give numerical results of Example 5.2 under the a posteriori parameter choice rule for various noise levels

in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

Figure 4. The exact solution and regular solution of Landweber regularization method by using the a posteriori parameter choice rule for Example 5.2. (a) , (b)

, (c)

.

Figure 5. The exact solution and regular solution of fractional Landweber regularization method by using the a posteriori parameter choice rule for Example 5.2. (a) , (b)

, (c)

.

Figure 6. The exact solution and regular solution of modified iterative regularization method by using the a posteriori parameter choice rule for Example 5.2. (a) , (b)

, (c)

.

We fix . For Table , when

, the iterative steps of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 597, 75 and 137. When

, the iterative steps of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 272, 41 and 67. When

, the iterative steps of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 262, 42 and 67. We can deduce that, when

is fixed, the fractional Landweber regularization method requires the fewest iteration steps. For

and

, the same result is obtained.

Table 1. The iteration steps of Example 5.1 for different regularization methods.

We fix . When

, the iterative steps of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 597, 75 and 137. When

, the iterative steps of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 766, 93 and 170. When

, the iterative steps of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 1362, 138 and 261. We can deduce that, when

is fixed, the fractional Landweber regularization method requires the fewest iteration steps. For and

, the same result is obtained.

In summary, the fractional Landweber regularization method requires the fewest iteration steps.

In Table , we use a computer with Intel(R) Core(TM) i5-6200U CPU @ 2.30 GHz 2.40 GHz and RAM of 4.00 GB to calculate the CPU time. The specific analysis is as follows: we fix . For Table , when

, the CPU time of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 11.9304 s, 1.7742 s and 2.8694 s. When

, the CPU time of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 15.4281 s, 1.5056 s and 3.4975 s. When

, the CPU time of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 27.4445 s, 2.4426 s and 5.4024 s. We can deduce that, when

is fixed, the fractional Landweber regularization method requires the least CPU time. For

and

, the same result is obtained.

Table 2. The CPU time of Example 5.1 for different regularization methods.

We fix . When

, the CPU time of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 11.9304 s, 1.7742 s and 2.8694 s. When

, the CPU time of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 5.8485 s, 0.6786 s and 1.6987 s. When

, the CPU time of Landweber regularization method, fractional Landweber regularization method and modified iterative method are 5.7179 s, 0.7475 s and 1.7893 s. We can deduce that, when

is fixed, the fractional Landweber regularization method requires the least CPU time. For

and

, the same result is obtained. In summary, the fractional Landweber regularization method requires the least CPU time.

By Table , we can deduce that, for fixed α, the error between the exact solution and the approximate solution decreases as the measurement error decreases. We also infer that, for fixed α and ε, the error between the exact solution and the approximate solution of the fractional Landweber method is smaller than that obtained by the Landweber regularization method and the modified iterative method.

Table 3. Error behaviour of Example 5.1 for different α with .

From Table 4, we see that, for fixed α and ε, the error between the exact solution and the approximate solution of the fractional Landweber method is smaller than that obtained by the Landweber regularization method and the modified iterative method.

Table 4. Error behaviour of Example 5.2 for different α with .

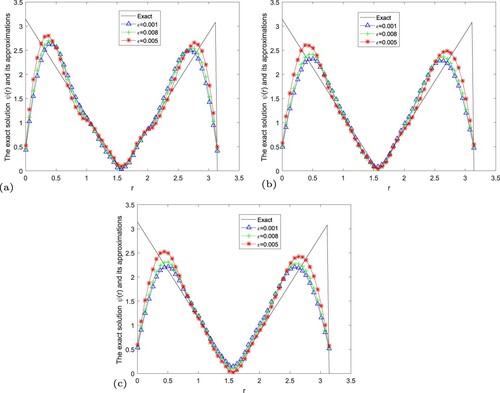

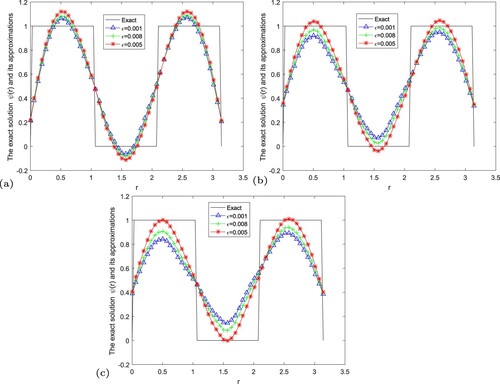

In Figures –, we give numerical results of Example 5.1 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

In Figures –, we give numerical results of Example 5.2 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

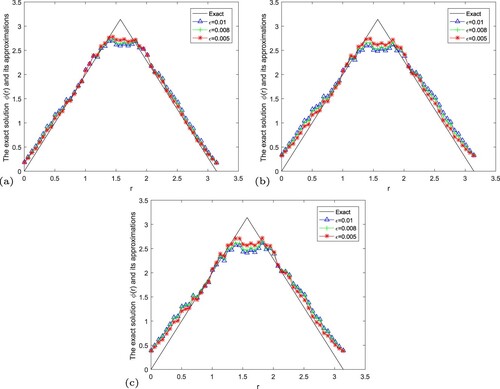

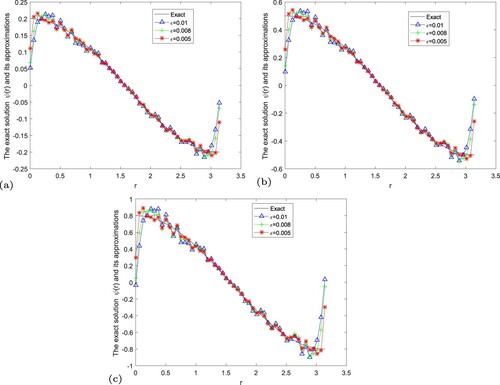

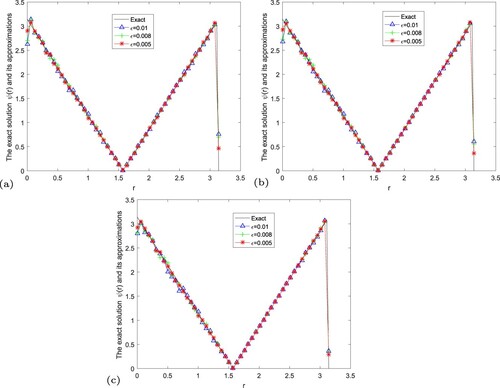

In Figures 7–9, we give numerical results of Example 5.3 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

Figure 7. The exact solution and the regularization solution of the Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.3, panels (a)–(c).

Figure 8. The exact solution and the regularization solution of the fractional Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.3, panels (a)–(c).

Figure 9. The exact solution and the regularization solution of the modified iterative regularization method obtained with the a posteriori parameter choice rule for Example 5.3, panels (a)–(c).

Example 5.3

Take function

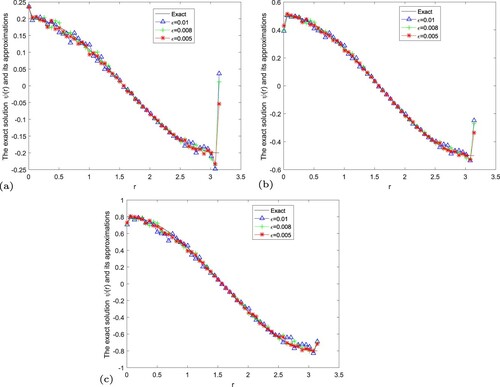

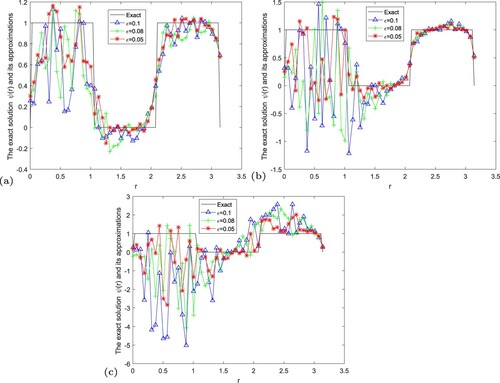

In Figures 10–12, we give numerical results of Example 5.4 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

Figure 10. The exact solution and the regularization solution of the Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.4, panels (a)–(c).

Figure 11. The exact solution and the regularization solution of the fractional Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.4, panels (a)–(c).

Figure 12. The exact solution and the regularization solution of the modified iterative regularization method obtained with the a posteriori parameter choice rule for Example 5.4, panels (a)–(c).

In Figures 13–15, we give numerical results of Example 5.5 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

Figure 13. The exact solution and the regularization solution of the Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.5, panels (a)–(c).

Figure 14. The exact solution and the regularization solution of the fractional Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.5, panels (a)–(c).

Figure 15. The exact solution and the regularization solution of the modified iterative regularization method obtained with the a posteriori parameter choice rule for Example 5.5, panels (a)–(c).

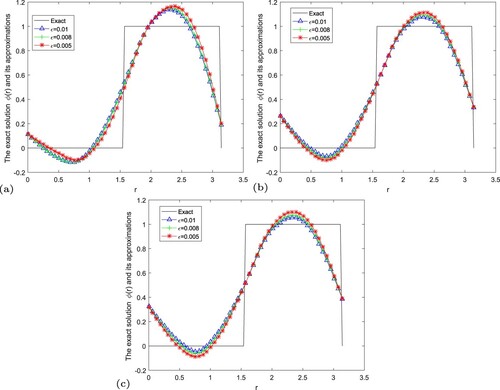

In Figures 16–18, we give numerical results of Example 5.6 under the a posteriori parameter choice rule for various noise levels in the case of

. It can be seen that the numerical error decreases as the noise level is reduced. Moreover, the smaller α is, the better the approximation.

Figure 16. The exact solution and the regularization solution of the Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.6, panels (a)–(c).

Figure 17. The exact solution and the regularization solution of the fractional Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.6, panels (a)–(c).

Figure 18. The exact solution and the regularization solution of the modified iterative method obtained with the a posteriori parameter choice rule for Example 5.6, panels (a)–(c).

Example 5.4

Take function .

Example 5.5

Take function

Example 5.6

Take function

From the above results, we see that the fractional Landweber regularization method is the most efficient and accurate of the three methods. Next, Figure 19 shows the error between the regularization solution and the exact solution under the a posteriori regularization parameter choice rule when we choose

, respectively. If the noise level becomes larger, the error between the exact solution and the regularization solution obtained with the a posteriori parameter choice rule also becomes larger.

Figure 19. The exact solution and the regularization solution of the fractional Landweber regularization method obtained with the a posteriori parameter choice rule for Example 5.6, panels (a)–(c).

We can deduce that when ε and α are larger, the error between the exact solution and the regular solution by using the a posteriori parameter choice rule is greater.
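The dependence of the error on the noise level can be reproduced qualitatively on a toy problem. The following is a minimal sketch, not the scheme used for the examples above: it applies the classical Landweber iteration with a discrepancy-principle stopping rule to a hypothetical diagonal operator with singular values 1/n², rather than to the spherically symmetric diffusion-wave operator. The fractional and modified variants are not shown. All names, the step size `a`, the constant `tau`, and the test function are assumptions made for illustration.

```python
import numpy as np


def landweber_discrepancy(sigma, g_delta, delta, a=0.9, tau=1.1, max_iter=200_000):
    """Classical Landweber iteration for a diagonal operator K = diag(sigma).

    The iteration stops at the first index m for which the residual satisfies
    ||K f_m - g_delta|| <= tau * delta (discrepancy principle).
    The step size a must satisfy 0 < a < 1 / max(sigma)**2.
    """
    f = np.zeros_like(g_delta)
    for m in range(max_iter):
        residual = g_delta - sigma * f
        if np.linalg.norm(residual) <= tau * delta:
            return f, m
        f = f + a * sigma * residual  # f_{m+1} = f_m + a K^*(g_delta - K f_m)
    return f, max_iter


# Toy setting (assumed): 50 modes, singular values decaying like 1/n^2.
N = 50
n = np.arange(1, N + 1)
sigma = 1.0 / n**2
f_exact = 1.0 / n**2           # coefficients of a smooth "exact solution"
g = sigma * f_exact            # exact data

# Fixed noise direction, rescaled so that ||g_delta - g|| equals delta exactly.
rng = np.random.default_rng(0)
direction = rng.standard_normal(N)
direction /= np.linalg.norm(direction)

results = []
for delta in (1e-1, 1e-2, 1e-3):
    g_delta = g + delta * direction
    f_reg, stop_index = landweber_discrepancy(sigma, g_delta, delta)
    error = np.linalg.norm(f_reg - f_exact)
    results.append((delta, stop_index, error))
    print(f"delta = {delta:.0e}: stopped after {stop_index} iterations, "
          f"error = {error:.4f}")
```

In this toy setting a smaller noise level leads to a later stopping index and a smaller reconstruction error, which matches the trend described above.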

6. Conclusion

An inverse problem for the time-fractional diffusion-wave equation on a spherically symmetric domain is considered. Based on the conditional stability, we propose the Landweber regularization method, the fractional Landweber regularization method and the modified iterative method to deal with the problem, and we derive a priori and a posteriori convergence estimates. In addition, numerical examples verify that the fractional Landweber iterative regularization method is more efficient and accurate than the other two methods. Future work will continue to study inverse problems for equations on special regions, such as cylindrically symmetric or spherically symmetric regions, and will use appropriate regularization methods to solve them.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Hall MG, Barrick TR. From diffusion-weighted MRI to anomalous diffusion imaging. Magnet Reson Med. 2008;59(3):447–455.

- Yuste SB, Acedo L, Lindenberg K. Reaction front in an A+B→C reaction-subdiffusion process. Phys Rev E. 2004;69(3):036126.

- Scalas E, Gorenflo R, Mainardi F. Fractional calculus and continuous-time finance. Phys A. 2000;284(1):376–384.

- Kohlmann M, Tang T. Fractional calculus and continuous-time finance III: the diffusion limit. In: Mathematical finance. Basel-Boston-Berlin: Birkhäuser Verlag; 2001. p. 171–180.

- Meerschaert MM, Scalas E. Coupled continuous time random walks in finance. Phys A. 2006;370(1):114–118.

- Mainardi F, Raberto M, Gorenflo R, et al. Fractional calculus and continuous-time finance II: the waiting-time distribution. Phys A. 2000;287(3-4):468–481.

- Li ZX. An iterative ensemble Kalman method for an inverse scattering problem in acoustics. Mod Phys Lett B. 2020;34(28):12.

- Luchko Y. Initial-boundary-value problems for the generalized multi-term time-fractional diffusion equation. J Math Anal Appl. 2011;374(2):538–548.

- Luchko Y. Some uniqueness and existence results for the initial-boundary value problems for the generalized time-fractional diffusion equation. Comput Math Appl. 2010;59(5):1766–1772.

- Luchko Y. Initial-boundary-value problems for the one-dimensional time-fractional diffusion equation. Fract Calc Appl Anal. 2012;15(1):141–160.

- Luchko Y. Maximum principle for the generalized time-fractional diffusion equation. J Math Anal Appl. 2009;351(1):218–223.

- Li ZY, Luchko Y, Yamamoto M. Asymptotic estimates of solutions to initial-boundary-value problems for distributed order time-fractional diffusion equations. Fract Calc Appl Anal. 2014;17(4):1114–1136.

- Hossein J, Afshin B, Seddigheh B. A novel approach for solving an inverse reaction-diffusion-convection problem. J Optim Theory Appl. 2019;183(2):688–704.

- Afshin B, Seddigheh B. Reconstructing unknown nonlinear boundary conditions in a time-fractional inverse reaction-diffusion-convection problem. Numer Meth Part D E. 2019;35(3):976–992.

- Luchko Y. Boundary value problems for the generalized time-fractional diffusion equation of distributed order. Fract Calc Appl Anal. 2009;12(4):409–422.

- Bai ZB, Qiu TT. Existence of positive solution for singular fractional differential equation. Appl Math Comput. 2008;215(7):2761–2767.

- Kemppainen J. Existence and uniqueness of the solution for a time-fractional diffusion equation. Fract Calc Appl Anal. 2011;14(3):411–417.

- Wang JG, Wei T. Quasi-reversibility method to identify a space-dependent source for the time-fractional diffusion equation. Appl Math Model. 2015;39(20):6139–6149.

- Zhang ZQ, Wei T. Identifying an unknown source in time-fractional diffusion equation by a truncation method. Appl Math Comput. 2013;219(11):5972–5983.

- Wang JG, Zhou YB, Wei T. Two regularization methods to identify a space-dependent source for the time-fractional diffusion equation. Appl Numer Math. 2013;68(68):39–57.

- Yang F, Fu CL, Li XX. A quasi-boundary value regularization method for determining the heat source. Math Method Appl Sci. 2015;37(18):3026–3035.

- Yang F, Fu CL. The quasi-reversibility regularization method for identifying the unknown source for time fractional diffusion equation. Appl Math Model. 2015;39(5-6):1500–1512.

- Yang F, Fu CL, Li XX. Identifying an unknown source in space-fractional diffusion equation. Acta Math Sci. 2014;34(4):1012–1024.

- Yang F, Fu CL, Li XX. The inverse source problem for time fractional diffusion equation: stability analysis and regularization. Inverse Probl Sci Eng. 2015;23(6):969–996.

- Yang F, Ren YP, Li XX, et al. Landweber iterative method for identifying a space-dependent source for the time-fractional diffusion equation. Bound Value Probl. 2017;2017(1):163.

- Yang F, Liu X, Li XX. Landweber iterative regularization method for identifying the unknown source of the time-fractional diffusion equation. Adv Differ Equ. 2017;2017(1):388.

- Zheng GH, Wei T. Two regularization methods for solving a Riesz–Feller space-fractional backward diffusion problem. Inverse Probl. 2010;26:115017(22pp).

- Cheng H, Fu CL. An iteration regularization for a time-fractional inverse diffusion problem. Appl Math Model. 2012;36(11):5642–5649.

- Liu JJ, Yamamoto M. A backward problem for the time-fractional diffusion equation. Appl Anal. 2010;89(11):1769–1788.

- Yang F, Ren YP, Li XX. The quasi-reversibility method for a final value problem of the time-fractional diffusion equation with inhomogeneous source. Math Method Appl Sci. 2018;41(5):1774–1795.

- Wang LY, Liu JJ. Data regularization for a backward time-fractional diffusion problem. Comput Math Appl. 2012;64(11):3613–3626.

- Wang JG, Zhou YB, Wei T. A posteriori regularization parameter choice rule for the quasi-boundary value method for the backward time-fractional diffusion problem. Appl Math Lett. 2013;26(7):741–747.

- Tuan NH, Long LD, Nguyen VT, et al. On a final value problem for the time-fractional diffusion equation with inhomogeneous source. Inverse Probl Sci Eng. 2017;25(9):1367–1395.

- Taghavi A, Babaei A, Mohammadpour A. A stable numerical scheme for a time fractional inverse parabolic equation. Inverse Probl Sci Eng. 2017;25(10):1474–1491.

- Yang F, Zhang Y, Li XX. The quasi-boundary value regularization method for identifying the initial value with discrete random noise. Bound Value Probl. 2018;2018(1):108.

- Yang F, Zhang Y, Liu X, et al. The quasi-boundary value method for identifying the initial value of the space-time fractional diffusion equation. Acta Math Sci. 2020;40B(3):641–658.

- Yang F, Pu Q, Li XX. The fractional Tikhonov regularization methods for identifying the initial value problem for a time-fractional diffusion equation. J Comput Appl Math. 2020;380:112998.

- Ozbilge E, Demir A. Inverse problem for a time-fractional parabolic equation. J Inequal Appl. 2015;2015(1):81.

- Babaei A, Banihashemi S. A stable numerical approach to solve a time-fractional inverse heat conduction problem. Iran J Sci Technol A. 2018;42(4):2225–2236.

- Ruan ZS, Yang J, Lu XL. Tikhonov regularisation method for simultaneous inversion of the source term and initial data in a time-fractional diffusion equation. E Asian J Appl Math. 2015;5(3):273–300.

- Yang F, Zhang P, Li XX, et al. Tikhonov regularization method for identifying the space-dependent source for time-fractional diffusion equation on a columnar symmetric domain. Adv Differ Equ. 2020;2020:128.

- Li GS, Zhang DL, Jia XZ, et al. Simultaneous inversion for the space-dependent diffusion coefficient and the fractional order in the time-fractional diffusion equation. Inverse Probl. 2013;29:065014.

- Yang F, Ren YP, Li XX. Landweber iteration regularization method for identifying unknown source on a columnar symmetric domain. Inverse Probl Sci Eng. 2018;26(8):1109–1129.

- Cheng W, Zhao LL, Fu CL. Source term identification for an axisymmetric inverse heat conduction problem. Comput Math Appl. 2010;59(1):142–148.

- Cheng W, Ma YJ, Fu CL. Identifying an unknown source term in radial heat conduction. Inverse Probl Sci Eng. 2012;20(3):335–349.

- Yang F, Sun YR, Li XX. The quasi-boundary value method for identifying the initial value of heat equation on a columnar symmetric domain. Numer Algor. 2019;82(2):623–639.

- Cheng W, Fu CL. A modified Tikhonov regularization method for an axisymmetric backward heat equation. Acta Math Sin. 2010;26(11):2157–2164.

- Djerrar I, Alem L, Chorfi L. Regularization method for the radially symmetric inverse heat conduction problem. Bound Value Probl. 2017;2017(1):159.

- Xiong XT, Ma XJ. A backward identifying problem for an axis-symmetric fractional diffusion equation. Math Model Anal. 2017;22(3):311–320.

- Yang F, Wang N, Li XX. A quasi-boundary regularization method for identifying the initial value of time-fractional diffusion equation on spherically symmetric domain. J Inverse Ill-Posed Probl. 2019;27(5):609–621.

- Šišková K, Slodička M. Identification of a source in a fractional wave equation from a boundary measurement. J Comput Appl Math. 2019;349:172–186.

- Liao KF, Li YS, Wei T. The identification of the time-dependent source term in time-fractional diffusion-wave equations. E Asian J Appl Math. 2019;9(2):330–354.

- Šišková K, Slodička M. Recognition of a time-dependent source in a time-fractional wave equation. Appl Numer Math. 2017;121:1–17.

- Gong XH, Wei T. Reconstruction of a time-dependent source term in a time-fractional diffusion-wave equation. Inverse Probl Sci Eng. 2019;27(11):1577–1594.

- Yang F, Pu Q, Li XX, et al. The truncation regularization method for identifying the initial value on non-homogeneous time-fractional diffusion-wave equations. Mathematics. 2019;7(11):1007.

- Yang F, Zhang Y, Li XX. Landweber iterative method for identifying the initial value problem of the time-space fractional diffusion-wave equation. Numer Algor. 2020;83(4):1509–1530.

- Wei T, Zhang Y. The backward problem for a time-fractional diffusion-wave equation in a bounded domain. Comput Math Appl. 2018;75(10):3632–3648.

- Xian J, Wei T. Determination of the initial data in a time-fractional diffusion-wave problem by a final time data. Comput Math Appl. 2019;78(8):2525–2540.

- Yang F, Wang N, Li XX. Landweber iterative method for an inverse source problem of time-fractional diffusion-wave equation on spherically symmetric domain. J Appl Anal Comput. 2020;10(2):514–529.

- Sakamoto K, Yamamoto M. Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J Math Anal Appl. 2011;382(1):426–447.

- Murio DA. Implicit finite difference approximation for time fractional diffusion equations. Comput Math Appl. 2008;56(4):1138–1145.

- Zhuang P, Liu F. Implicit difference approximation for the time fractional diffusion equation. J Appl Math Comput. 2006;22(3):87–99.

- Klann E, Ramlau R. Regularization by fractional filter methods and data smoothing. Inverse Probl. 2008;24(2):025018.

- Han YZ, Xiong XT, Xue XM. A fractional Landweber method for solving backward time-fractional diffusion problem. Comput Math Appl. 2019;78(1):81–91.

- Xiong XT, Xue XM, Qian Z. A modified iterative regularization method for ill-posed problems. Appl Numer Math. 2017;122:108–128.

- Abbaszadeh M, Dehghan M. Numerical and analytical investigations for neutral delay fractional damped diffusion-wave equation based on the stabilized interpolating element free Galerkin (IEFG) method. Appl Numer Math. 2019;145:488–506.

- Dehghan M, Abbaszadeh M. A finite difference/finite element technique with error estimate for space fractional tempered diffusion-wave equation. Comput Math Appl. 2018;75(8):2903–2914.

- Dehghan M, Abbaszadeh M. A Legendre spectral element method(ESM) based on the modified bases for solving neutral delay distributed-order fractional damped diffusion-wave equation. Math Method Appl Sci. 2018;41(9):3476–3494.

- Abbaszadeh M, Dehghan M. An improved meshless method for solving two-dimensional distributed order time-fractional diffusion-wave equation with error estimate. Numer Algor. 2016;75(1):173–211.

- Irfan T. Fundamentals of MATLAB language; 2019.

- Irfan T. Introduction to MATLAB; 2019.

Appendix

Proof of Lemma 2.3

We define two functions with :

and

Obviously

. These two functions are continuous when

.

For and

, using Lemma 3.3 in [Citation63], we have

. Therefore,

The proof of (9) is similar, so it is omitted.

Proof of Lemma 2.4

We introduce a new variable and define a function

. It is easy to verify that there exists a unique

with

such that

, we then have

The proof of (11) is similar, so it is omitted.

Proof of Lemma 2.7

From Lemma 2.6, we have

Define

,

it is easy to find the unique point

such that

. Therefore

The proof of (15) is similar, so it is omitted.

Proof of Lemma 2.8

For the first inequality, we argue as follows. From Lemma 2.6, we have

Define

,

it is easy to find the unique point

such that

. Therefore

For the second inequality, we argue in the same way. From Lemma 2.6, we have

Define

,

it is easy to find the unique point

such that

. Therefore

Proof of Lemma 2.9

Let and

,

, then

and

.

Since , we have

. Hence from

,

and the following inequality holds:

(A1)

Due to for

and

, we have

(A2)

Combining (A1) and (A2), we have

The proof of (19) is similar, so it is omitted.

Proof of Lemma 2.10

If satisfies

, we obtain

therefore, we have

The proof of (21) is similar, so it is omitted.

Proof of Lemma 2.11

If satisfies

, we obtain

therefore, we have

The proof of (23) is similar, so it is omitted.