ABSTRACT

This article analyses and discusses attitudes and practices concerning verification among Swedish journalists. The research results are based on a survey of more than 800 Swedish journalists about their attitudes towards verification (Journalist 2018) and a design project where a prototype for verification in newsrooms – the Fact Check Assistant (FCA) – was developed and evaluated. The results of the survey show a lack of routines when it comes to verifying content from social media and blogs and considerable uncertainty among journalists about whether this kind of verification is possible.

The development of the prototype initially aroused interest and curiosity among newsroom staff. Gradually, however, scepticism about its usability increased. Lack of time and lack of knowledge were two of the obstacles to introducing new verification routines. According to the journalists, introducing new digital tools is not enough; management must also allocate time for training. The paper concludes that changing journalists’ and editors’ attitudes towards verification in the digital age appears to depend more on newsroom culture than on technical solutions.

Introduction

The discussion about fake news and disinformation has made fact-checking and verification a focus of journalism research. Fact-checking has developed into a new genre of news journalism, both within established news organisations and in separate entities such as PolitiFact in the US and Faktisk.no in Norway (Graves 2016).

However, there is an important difference between the notions of “fact-checking” and “verification” (Wardle 2018; Allern 2019). Fact-checking is the process of assessing the validity of claims that have already reached the public domain through some form of media. For example, journalists may use political fact-checking to assess statements made by politicians and public figures during election campaigns, whether these statements are broadcast, published in traditional media, or posted online. The results of a fact-check are often published with a judgement on a graded scale from “completely false” to “completely true”. This form of fact-checking requires the ability to cross-check claims against previously established facts.

Verification, by contrast, has been part of journalists’ working process ever since modern forms of journalism developed. The verification tools used in newsrooms have evolved to account for digitalization and the proliferation of unverified multimedia content on social media. Verification is a basic norm in journalism and is traditionally carried out before publication or during it (e.g., in live reporting). Kovach and Rosenstiel define verification as the crucial divide between journalism and other kinds of media content, such as entertainment, propaganda, and fiction: “the essence of journalism is a discipline of verification” (2001, 71). To make verification applicable in daily work, many countries have codified it in formal ethical principles. In Sweden, “Accurate News” is the title of the first paragraph of the professional ethical rules for journalists (see Footnote 1).

Verification of digital content is often associated with assessing the validity of images, videos, and texts circulating on social media. This kind of verification is largely technical and requires a special set of skills in open-source intelligence (OSINT). Political fact-checking and digital content verification can sometimes overlap, particularly when politicians support their claims with multimedia content circulating on social media. This creates a demand for technical verification experts and traditional political fact-checkers to work more closely together (International Fact-Checking Network 2020).

Furthermore, this professional commitment to verification is challenged by both external and internal factors in media development (Ekström 2020). Externally, professional sources use sophisticated news-management methods and generate a large flow of information. There are also sources that circulate “news” on various websites and social media platforms, and these are often hard to evaluate.

Internally, journalists experience increasing pressure in their daily work. Structural changes in the market have led to fewer journalists producing more content for multiple platforms, reducing the space for critical investigation and verification (Witschge and Nygren 2009). In addition, accuracy ideals are challenged by 24-hour rolling news and so-called liquid news, in which verification becomes part of the publishing process rather than something that precedes it (Karlsson 2012; Widholm 2016).

The purpose of this article is to examine verification in today’s digital environment in more depth. The article describes a joint project between academics and leading Swedish media companies that aimed to develop and test a digital tool for verifying digital content and evaluating sources, the Fact Check Assistant (FCA). It also presents a recent survey of Swedish journalists’ attitudes towards verification.

This leads us to four research questions:

What are the attitudes of Swedish journalists towards verification?

Are there established routines for verification in the newsrooms?

How can a digital verification tool improve these routines, and what functions should be included in the tool?

What kinds of problems arise from the introduction of new tools and routines for assessing digital sources and verifying digital content?

Background

Verification in the Journalistic Process

There is broad agreement that verification is a professional norm in journalism. However, it is difficult to study the journalistic process to see how verification is done in practice. Verification is a fluid and contested practice, and studies show a lack of consensus on how to verify (Hermida 2015). Only a small part of journalistic work is devoted to cross-verification, the most common form of verification. Cross-verification entails having at least two independent sources for a claim, “the juxtaposition of two news sources / … / against each other with the express intention of ascertaining the information’s reliability” (Godler and Reich 2017, 567). A German study shows that only 5.5 per cent of working time is devoted to cross-verification (Machill and Beiler 2009). In an Israeli study, about half of the articles contained any cross-verification (Godler and Reich 2017). In local journalism, cross-verification seems to be even rarer; in a Swedish study of coverage of local authorities and politics, only one-third of the articles had at least two independent sources (Nygren 2003).

Qualitative interviews with Canadian journalists show that verification is part of the whole working process. The need for cross-verification depends on how sensitive the issue is, and journalists draw on their experience when deciding what to check (Shapiro et al. 2013). A common solution is to use well-known and authoritative sources in cases where verification is not possible. The degree of cross-verification is much lower if the source has been used frequently before (Godler and Reich 2017).

The conflict between speed and the need for verification is as old as journalism itself. Digitalization of the media system has taken this tension to a new level, and there are signs of softer attitudes towards verification among journalists. In a comparative study of journalists in Sweden, Poland, and Russia, about one-third of the participants agreed that verification is not needed before publishing online because it can be done during the process. Forty-two per cent believed that the online audience has lower demands on verification than print and broadcast audiences (Nygren and Widholm 2018).

Transparency has increasingly become important as a professional norm, not to replace verification but to open the process to the audience. In live reporting, verification becomes a collaborative effort between journalists and the audience, and facts become less fixed and more fluid (Hermida 2015). Even so, the journalist is still regarded as a truth-seeker, albeit often in collaboration with the audience. Another important strategy is to place responsibility for accuracy on the source by only reporting what is being said (Ekström 2020).

In this digital flow of information, social media platforms are important sources for journalists. Interviews with online journalists in Western Europe show great ambivalence towards these sources regarding how to evaluate and verify facts and pictures from social media (Brandtzaeg et al. 2016). Journalists often stick to trusted official online sources, and they often use traditional methods such as calling sources by telephone to verify what they find on social media. The level of knowledge about digital verification tools is low, and only a few journalists use them. What online journalists ask for are tools that help them carry out basic checks of, for example, accounts and their history, geolocation, and time stamps.

Attitudes towards verification are an important part of the different journalistic cultures that exist today. These cultures differ between media systems, media types, and media organisations (Zelizer 2005). According to Hanitzsch (2007), there are two main epistemological dimensions in journalistic cultures: objectivism and empiricism. The first is a philosophical question: is it possible to reach an objective “truth”, or are statements the result of subjective judgements? The second concerns how journalists justify their claims: empirically, by means of facts, or on the basis of opinions, analyses, and values? How much importance journalists attach to verification depends on how they answer these questions.

When it comes to media systems, earlier research observed a clear difference between the fact-based Anglo-Saxon tradition and the more subjective and analytical journalism of continental Europe (Chalaby 1996). However, this has been changing. Over the last decade, fact-based journalism has been challenged by more subjective and values-based journalism, as exemplified by Fox News. As a reaction to this development, fact-checking has emerged as a new genre within news journalism (cf. Graves 2016).

Methods

Action Research and a Survey

In her keynote at the Future of Journalism conference in 2017, Claire Wardle called for more cooperation between academics and practitioners in researching information disorder (Wardle 2018). One example is her own organisation, First Draft, which is based at Harvard. Through experimental projects that bring together media companies and journalism educators, the organisation tests new forms of fact-checking and verification.

The Fact-Check Assistant (FCA) is a similar project based in Sweden. In 2017, Swedish public service television (SVT) and Swedish public radio (SR), along with two large quality newspapers (Dagens Nyheter and Svenska Dagbladet), received a grant from Vinnova, the Swedish authority for research and innovation. The purpose of the grant was to develop a tool for verifying digital sources in daily newsroom work: the FCA. Södertörn University was the academic partner responsible for development and coordination. The aim of the project was to design a prototype in collaboration with journalists and tailor it to their specific needs, so that they could adopt a systematic and transparent method of verifying claims.

The development part of the Fact-Check Assistant can be described as action research in the sense originally proposed by Lewin (1946). Action research is “research on the conditions and effects of various forms of social action, and research leading to action” (Lewin 1946, 35). Galtung (2002) describes the methodology as a way not only to understand a problem but also to solve it together with the people involved. Kemmis, McTaggart, and Nixon (2014) argue that all action research shares some key features, including the rejection of conventional research approaches “where an external expert enters a setting to record and represent what is happening” (Kemmis, McTaggart, and Nixon 2014). According to Kemmis et al., two features are apparent:

the recognition of the capacity of people living and working in particular settings to participate actively in all aspects of the research process; and

the research conducted by participants is oriented to making improvements in practices and their settings by the participants themselves (Kemmis, McTaggart, and Nixon 2014).

The case study presented in this article uses methods common in interaction design, more specifically goal-directed design as described by Cooper et al. (2014), which has previously been used in a large number of media studies to implement and test new technical tools. In one such study, described by Thurman, Dörr, and Kunert (2017), an automated news-writing robot was introduced to British journalists. In another study using interaction design, described by Stray (2019), AI was introduced to investigative reporters in the United States and Britain. In a third study, a creativity support tool was introduced to journalists in Norway (Maiden et al. 2018). In line with the methodology of interaction design, these studies introduce, develop, test, and implement innovative digital tools for journalists and evaluate the results of the implementation.

The development part of our project began with workshops where journalists and researchers discussed the purpose and idea of a fact-checking tool. A prototype fact-checking tool was then developed based on the feedback received during the workshops. Journalists and researchers tested and evaluated the prototype, and it was further developed in an iterative process that started in late 2018 and ended in the spring of 2020. (The iterative development process is described in more detail later in this article, as it forms part of the case study results.)

Goal-directed design (Cooper et al. 2014) entails engaging the users, in this case journalists and researchers, in the technical development of the tool. The main idea is to take the users’ needs as the starting point and build the tool in direct relation to those needs. Development usually takes place in a number of iterations with the users. Between tests, the prototype is developed further, and after a number of iterations it is ready to be launched as a new product.

The other part of our study draws on empirical material from the survey “Journalist 2018”, conducted by JMG at the University of Gothenburg, Sweden. This broad survey covers the ideals and daily work of Swedish journalists. Part of it included questions on verification; we found the answers particularly relevant to this study, and they are presented here for the first time. The survey was sent to a random sample of members of the Swedish Union of Journalists, which organises about 85–90 per cent of all journalists in the country. The response rate was 52 per cent, and the maximum number of respondents was 1,151. The questions on verification were not put to freelancers, so the number of respondents to these particular questions was 876. We include the survey results to analyse the point of departure for the FCA project: journalists’ existing attitudes towards verification of digital sources and the standards and routines in their newsrooms.

This study is limited to one country, Sweden, whose media system comparative journalism research describes as “democratic corporatist” (Hallin and Mancini 2004). The system is characterized by strong journalistic professionalism and a low degree of political influence over the media. This should be kept in mind when comparing the results with those from other countries.

Results

A Need for Verification Routines

According to the survey, very few Swedish journalists work in newsrooms with clear routines for verifying content from social media and blogs. Only 17 per cent agree that there are clear routines, while 43 per cent disagree. A large group also expressed no opinion or gave no answer, probably a sign of uncertainty in this area. There are some differences between media types: a slightly larger share of the journalists working in public service radio and television are aware of routines for verifying content from social media. This difference is also visible in other survey questions concerning verification. For example, journalists in public service media put more emphasis on equal verification for publishing on all platforms: 57 per cent of journalists in public service agree, compared with 49 per cent in newspapers.

Table 1. In my newsroom, there are clear routines in verifying content from social media and blogs (per cent on a scale of 1–5, where 1 is agree completely)

Is it possible to completely verify content on social media? Journalists showed considerable uncertainty on this question. Fewer than one-third agree that it is possible, and an equal share disagree. Many respondents either have no opinion or do not answer the question.

There are also large differences between age groups on this question. Young journalists have greater confidence in the verification of digital content, while their older colleagues are more sceptical. Thirty-seven per cent of the young journalists agree that this type of verification is possible, and only 20 per cent disagree. Among journalists over the age of 50, the pattern is reversed: over 40 per cent feel that this kind of verification is not possible.

Table 2. It is possible to completely verify news and other information found in social media and websites (per cent by age group on a scale of 1–5)

A deeper analysis shows a strong correlation between the answers to these two questions (Pearson .983, significant at the 0.01 level). Journalists who answer that there are clear routines for verifying digital sources also believe that this type of verification is possible. Conversely, a lack of routines goes together with lower confidence in the prospect of verifying digital sources. To summarise, the survey shows few established routines for verifying digital sources and a mixed picture of whether this kind of verification is possible in daily work.
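For readers less familiar with the statistic, the Pearson coefficient can be sketched as follows. This is a minimal illustration, not the analysis actually run on the survey: the paired answers below are hypothetical 1–5 responses invented for the example, and respondents with no opinion or no answer are assumed to have been excluded beforehand. Significance testing is not shown.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two paired samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (n >= 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Numerator: sum of products of deviations from the two means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Denominator terms: sums of squared deviations for each sample.
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when one sample is constant")
    return cov / sqrt(ss_x * ss_y)


# Hypothetical paired answers (1 = agree completely, 5 = disagree completely):
# "clear routines in my newsroom" vs "complete verification is possible".
routines = [1, 2, 2, 3, 4, 5, 5]
possible = [1, 1, 2, 3, 4, 4, 5]
print(round(pearson_r(routines, possible), 3))
```

Python 3.10 and later also provide `statistics.correlation`, which computes the same coefficient by default.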

The survey results were also confirmed by the newsroom study conducted in the first step of the FCA project. One of the university researchers visited three newsrooms: local, regional, and national. Through a combination of interviews and observations, he found that journalists were interested in digital verification and that there was a need for tools to support it. There was general agreement that a digital assistant could function as a support mechanism to systematise the process and share knowledge with colleagues in the newsroom. At the same time, journalists were clear about the lack of time in their daily work, and some said a digital tool could be too much when they could instead use traditional methods such as picking up the phone (Larssen 2020). He also found considerable resistance to verification as a general concept; one journalist was provoked and concluded, “it might give the impression that regular journalism does not do fact-checking. But that is exactly what we do, it’s what we are good at and what journalism is all about” (Larssen 2020, 209).

A Three-pillar Model

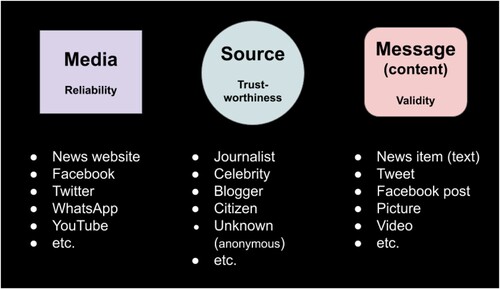

Because the iterative development process forms part of this case study’s results, as is common in goal-directed design studies (Cooper et al. 2014), we describe the process in this section. The theoretical starting point for the development process is a model for assessing news credibility based on previous research by Metzger et al. (2003), which shows that a three-pillar model can be applied by integrating a comprehensive evaluation of (1) the medium, (2) the source, and (3) the message.

This model, shown in Figure 1, served as our theoretical foundation for building a systematic verification process to assess news credibility in a digital environment. To assess each of the three pillars, the user answers a set of questions in a methodical and consistent manner, as described below.

Figure 1. The three-pillar model for assessing news credibility (Metzger et al. 2003).
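The model can be read as a structured checklist. The Python sketch below is a hypothetical illustration of that idea, not the FCA’s actual code: the class and method names are our own, and only one sample question per pillar (taken from the lists that follow) is included. Each answer is recorded as yes, no, or not yet answered, which makes it easy to list the open questions, that is, the gaps in an investigation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

PILLARS = ("medium", "source", "message")


@dataclass
class Assessment:
    """Checklist answers for one claim, grouped by pillar (hypothetical)."""
    # pillar -> {question: True/False, or None while still unanswered}
    answers: Dict[str, Dict[str, Optional[bool]]] = field(default_factory=dict)

    def add_question(self, pillar: str, question: str) -> None:
        if pillar not in PILLARS:
            raise ValueError(f"unknown pillar: {pillar}")
        self.answers.setdefault(pillar, {})[question] = None

    def answer(self, pillar: str, question: str, value: bool) -> None:
        if question not in self.answers.get(pillar, {}):
            raise KeyError(f"{question!r} is not on the {pillar} checklist")
        self.answers[pillar][question] = value

    def open_questions(self) -> List[str]:
        """Questions still unanswered, in pillar order."""
        return [f"{p}: {q}" for p in PILLARS
                for q, v in self.answers.get(p, {}).items() if v is None]


a = Assessment()
a.add_question("medium", "Are there technical security concerns?")
a.add_question("source", "Does the source have verifiable credentials?")
a.add_question("message", "Has the image been fact-checked before?")
a.answer("medium", "Are there technical security concerns?", False)
print(a.open_questions())
```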

Assessing the Medium

A medium can be an app, a website or any other service through which content can be posted or shared. To evaluate a medium, there are a number of questions that the journalist can answer, such as

Has the medium had a good reputation in the past (or is it blacklisted)?

Are there any technical security concerns (e.g., no SSL access)?

Does the WHOIS database entry support the details the medium provides publicly?

Is the medium known to be free of any particular bias? Does it have a poor ranking, low popularity, or limited reach?

Does the medium have liability terms (i.e., is it legally accountable)? Does it allow readers to flag or report content?

To answer these questions, journalists can use a number of digital tools commonly employed to investigate and assess media platforms and applications. Two examples are WHOIS and reverse DNS lookups to verify the authenticity of websites and Google Maps/Earth to verify geographic information. The tools suitable for scrutinising a particular medium may vary depending on the type of medium and the journalist’s skill level.
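Some of these checks lend themselves to simple automation. As a minimal sketch, assuming the medium is identified by a URL and the newsroom keeps its own blocklist of domains previously found unreliable (the blocklist, its entry, and the function name are hypothetical), the function below flags two of the concerns listed above: no HTTPS (SSL/TLS) access and a blocklisted domain. It uses only the Python standard library and makes no network requests, so a WHOIS lookup, which needs an external service, is deliberately left out.

```python
from urllib.parse import urlparse

# Hypothetical newsroom blocklist of domains previously found unreliable.
BLOCKLIST = {"fake-news.example"}


def medium_red_flags(url, blocklist=BLOCKLIST):
    """Return warning strings for the medium behind `url` (offline checks only)."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    flags = []
    # Only the URL as given is inspected; the site may still support HTTPS.
    if parsed.scheme != "https":
        flags.append("no HTTPS (SSL/TLS) access in the given URL")
    # Match the listed domain itself and any of its subdomains.
    if any(host == d or host.endswith("." + d) for d in blocklist):
        flags.append("domain is on the newsroom blocklist")
    if not host:
        flags.append("no host name could be parsed")
    return flags


print(medium_red_flags("http://www.fake-news.example/story"))
print(medium_red_flags("https://www.svt.se/nyheter/"))
```

Because only the URL as given is examined, an `http://` link to a site that also serves HTTPS is still flagged, which is meant as a prompt for manual follow-up rather than a verdict.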

Assessing the Source

The source of a piece of content is the individual(s) or entity(-ies) that published or shared the content being fact-checked. Questions that help assess a source include

Does the source have verifiable credentials?

Has the source ever been caught publishing dis/misinformation?

Does the source have a history of quality content published by reputable media?

Can you check the source's network of influence?

Is it difficult to authenticate information about the source?

As with media, journalists can scrutinise sources using various tools that are available online, either free of charge or for a fee. Examples of tools that can provide insights into the identity and background of a source are LinkedIn, Facebook, and Pipl.
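Whether a source has been caught spreading dis/misinformation can also be checked against the newsroom’s own earlier reviews. A hypothetical Python sketch, assuming past verdicts are stored as (source, verdict) pairs using the verdict labels described later in this article; the account names, the archive, and the function name are invented for illustration:

```python
from collections import Counter

# Hypothetical archive of earlier reviews: (source, verdict) pairs.
PAST_REVIEWS = [
    ("@account_a", "false"),
    ("@account_a", "mostly false"),
    ("@account_a", "true"),
    ("@account_b", "true"),
]

FALSE_LABELS = {"false", "mostly false"}


def source_track_record(source, reviews=PAST_REVIEWS):
    """Summarise earlier verdicts on claims attributed to `source`."""
    verdicts = Counter(v for s, v in reviews if s == source)
    total = sum(verdicts.values())
    flagged = sum(n for v, n in verdicts.items() if v in FALSE_LABELS)
    return {
        "reviewed_claims": total,
        "false_or_mostly_false": flagged,
        # None rather than 0.0 when there is no history to judge from.
        "share_false": flagged / total if total else None,
    }


print(source_track_record("@account_a"))
```

An empty history is reported as `None` rather than as a clean record, since the absence of earlier reviews says nothing about a source’s reliability.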

Assessing the Message (Content)

Finally, journalists can dig deeper into the actual message to verify the content that has been posted or published. When evaluating the validity of specific content, it is important to consider its format, because the format dictates which verification tools are most suitable. For example, the authenticity of an image-based claim can be checked with reverse image search tools, whereas a text-based statement is better assessed by consulting a reliable online source. The content should be investigated by answering a set of predefined questions that depend on whether it is an image, a video, text, or a combination of these. For example, if the claim is based on an image or a video, questions to assess the content’s validity include

Was the image used in a misleading context? Has it been manipulated/doctored?

Does the image contain mismatching metadata?

Has the audio been manipulated in any way?

Has the image been fact-checked before?

Are dates, names, numbers, etc., correct?

Does the image rely on references that are verifiable?

Reporters often use a range of free and commercial tools to carry out advanced OSINT and verify the authenticity and validity of particular content (Hayden 2019). Examples of tools for reverse-searching multimedia content are Google Images and TinEye for images, and the InVID browser plug-in and YouTube DataViewer for video. There are also tools for investigating whether an image has been doctored or manipulated, such as Forensically, which can be used to magnify and analyse images and to extract metadata such as the place and time the image was captured. Google and archive.org are examples of services that provide cached copies of content that existed on the web but was later removed.
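The question of mismatching metadata can be partly illustrated in code. The sketch below assumes the EXIF `DateTimeOriginal` value has already been extracted with a tool such as Forensically and follows the EXIF format `YYYY:MM:DD HH:MM:SS`; the function name and the one-day tolerance (to allow for time zones) are hypothetical choices. It only compares the capture date with the date a claim attributes to the image. Metadata can be stripped or edited, so a match does not prove authenticity, while a mismatch is a reason for further checks.

```python
from datetime import date, datetime


def capture_date_mismatch(exif_datetime_original, claimed_date, tolerance_days=1):
    """Compare an EXIF capture timestamp with the date claimed for an image.

    Returns True if the dates differ by more than `tolerance_days`, False if
    they agree within the tolerance, and None if no usable metadata exists.
    """
    if not exif_datetime_original:
        return None  # metadata missing or stripped: nothing to compare
    try:
        captured = datetime.strptime(exif_datetime_original, "%Y:%m:%d %H:%M:%S")
    except ValueError:
        return None  # malformed value: treat as unusable, not as evidence
    return abs((captured.date() - claimed_date).days) > tolerance_days


# Claim: the photo shows an event on 12 March 2020.
print(capture_date_mismatch("2016:07:04 18:22:10", date(2020, 3, 12)))  # True
print(capture_date_mismatch("2020:03:12 09:15:00", date(2020, 3, 12)))  # False
print(capture_date_mismatch("", date(2020, 3, 12)))                     # None
```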

Issuing a Verdict on a Claim's Validity

To document and collect results from the verification process, the FCA project adopted a model developed in the new genre of fact-checking. Based on the answers to the questions about the medium, the source, and the message, the journalist makes an overall assessment of the claim according to the degree of confidence they have in the final judgement. The available scores are false, mostly false, mixed, mostly true, true, and no decision. The journalist should justify the score with a thorough, evidence-based rationale. Notably, the verdict is meant as the journalist’s interpretation and not an objective fact in itself, since not all fake news can be considered false. According to Molina et al. (2019), false news is but one of seven types of online content that could be labelled “fake news”; the others are polarized content, satire, misreporting, commentary, persuasive information, and citizen journalism.
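The verdict scale can be encoded so that a score cannot be recorded without its rationale. A minimal Python sketch: the six labels come from the text, while the class names and the rule that every verdict, including “no decision”, needs a non-empty rationale are our own illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Score(Enum):
    FALSE = "false"
    MOSTLY_FALSE = "mostly false"
    MIXED = "mixed"
    MOSTLY_TRUE = "mostly true"
    TRUE = "true"
    NO_DECISION = "no decision"


@dataclass(frozen=True)
class Verdict:
    claim: str
    score: Score
    rationale: str  # evidence-based justification shown alongside the score

    def __post_init__(self):
        # Refuse to store a score without a justification.
        if not self.rationale.strip():
            raise ValueError("a verdict must be justified with a rationale")


v = Verdict(
    claim="Photo shows flooding in Stockholm this week",
    score=Score.MOSTLY_FALSE,
    rationale="Reverse image search finds the same photo published in 2016.",
)
print(v.score.value)
```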

While it cannot guarantee a conclusive verdict, the three-pillar approach allows journalists to show consistently and transparently how the various questions were answered and where gaps remain in the investigation. It is important to document the process from start to finish so that it can be integrated into a sustainable knowledge base for future reference, potential distribution, and sharing.

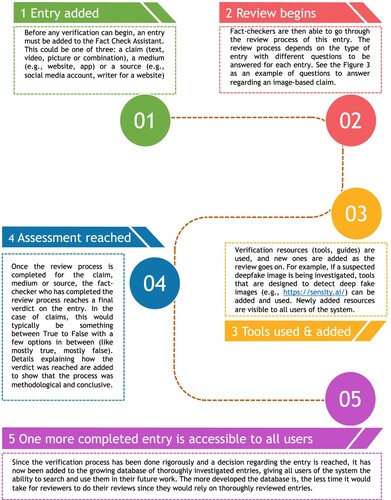

Figure 2 summarizes the steps of the FCA verification process. All testers were guided through the process and were also offered extensive tutorials on the topic.

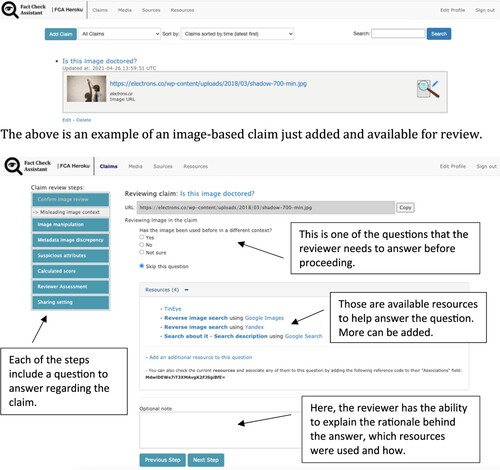

Figure 3 shows an example of how an image-based claim is reviewed. The questions on the left are linked to resources that can be used to answer them, and these resources can be added and updated continuously.

The figure above shows an image-based claim that has just been added and is available for review.

Workshops and a Prototype

Once the theoretical framework of the three-pillar model had been developed, it was important to put it to the test, not only by training a group of journalists to use it and observing their use but also by getting their feedback as part of a goal-directed design process (cf. Cooper et al. 2014). A total of fifteen journalists and editors from six Stockholm-based Swedish media corporations and three journalists from other parts of the country took part in two full-day workshops. Most participants expressed interest in implementing the model and felt it would help speed up the process and make it consistent and systematic. They suggested simplifying and structuring the process so that the work of verification could be broken down into separate tasks: for example, reviewing a particular medium would be one task and assessing the validity of a picture-based claim another.

Participants recommended a system that would allow them to work on certain verification tasks, temporarily leave their desks, and later pick up where they had left off without losing the work already done. In other words, they requested a memory-based system that would let them organise their time and work at their own pace. The request reflected how busy they were with daily journalistic routines.
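The requested memory-based behaviour amounts to persisting a task’s progress and restoring it later. A minimal Python sketch, assuming progress is stored as one JSON file per task; the file layout, function names, and task identifier are hypothetical, and the actual prototype may well have stored this state differently.

```python
import json
import tempfile
from pathlib import Path


def save_progress(task_dir: Path, task_id: str, answers: dict) -> Path:
    """Write the answers recorded so far for one verification task."""
    task_dir.mkdir(parents=True, exist_ok=True)
    path = task_dir / f"{task_id}.json"
    path.write_text(json.dumps(answers, ensure_ascii=False, indent=2), encoding="utf-8")
    return path


def resume_progress(task_dir: Path, task_id: str) -> dict:
    """Reload earlier answers, or start afresh if the task has no saved state."""
    path = task_dir / f"{task_id}.json"
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = Path(tmp)
        save_progress(store, "claim-042-medium",
                      {"Are there technical security concerns?": False})
        # ...the journalist leaves the desk and returns later...
        print(resume_progress(store, "claim-042-medium"))
```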

Another strong request that emerged from the workshops was to have a library or toolbox of all the available digital tools that would help them answer the questions in the three-pillar model. According to some of the attendees, each tool should be easy to learn, perhaps through a tutorial and documentation. Furthermore, they suggested allowing the toolbox to be customised with additional tools that could be added as needed.
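The customisable toolbox can be pictured as a small registry that groups tools by the pillar they help assess, each with an optional link to a tutorial. The Python sketch below is hypothetical: the class names and pillar grouping are ours, the tool names are among those mentioned earlier in this article, and tutorial links are left empty rather than guessed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tool:
    name: str
    purpose: str
    tutorial: Optional[str] = None  # link to a tutorial, added by the newsroom


class Toolbox:
    """Registry of verification tools, grouped by the pillar they help assess."""

    def __init__(self):
        self._tools = defaultdict(list)

    def add(self, pillar: str, tool: Tool) -> None:
        # Newsrooms can extend the toolbox at any time; duplicates are ignored.
        if tool not in self._tools[pillar]:
            self._tools[pillar].append(tool)

    def for_pillar(self, pillar: str) -> list:
        return list(self._tools.get(pillar, []))


box = Toolbox()
box.add("medium", Tool("WHOIS lookup", "check domain registration details"))
box.add("source", Tool("LinkedIn", "check professional background"))
box.add("message", Tool("TinEye", "reverse image search"))
box.add("message", Tool("InVID", "analyse and reverse-search video"))
print([t.name for t in box.for_pillar("message")])
```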

The project team also arranged a number of field visits to present the prototype and get initial reactions from editorial managers in the media companies. Although the general reaction was one of interest and curiosity, there was noticeable scepticism about the tool’s usability within newsrooms and about the notion of sharing work with journalists at other media outlets. In one meeting, the discussion about the prototype was highly critical, with the editorial managers arguing that the prototype was not actually what was needed. Instead, they suggested creating a tool to detect disinformation that is poised to go viral. Since this was beyond the scope of the project, we did not pursue it.

After this initial step, the developers created a prototype online application. The prototype was based on the assumption that for every piece of online content there is at least one digital medium where that content was published or shared and at least one source that actively put that content, the message, on the medium. Hence, the role of the prototype was to guide journalists through the verification process by evaluating the reliability of the medium, the trustworthiness of the source, and the validity of the content. Through the prototype, journalists would be able to add specific claims to fact-check. Each claim would reference the medium where it was published and the source that published it.
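The prototype’s central assumption, that every claim is tied to at least one medium and at least one source, can be expressed as a small data model. The Python sketch below is only an analogue of how such a model might look (the prototype itself was built in Ruby on Rails); the class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Medium:
    name: str  # app, website, or other service where content is posted or shared
    url: str = ""


@dataclass
class Source:
    name: str  # individual(s) or entity(-ies) that published or shared the content


@dataclass
class Claim:
    text: str
    content_type: str  # e.g. "image", "video", "text"
    media: List[Medium] = field(default_factory=list)
    sources: List[Source] = field(default_factory=list)

    def __post_init__(self):
        # Mirrors the stated assumption: no claim without a medium and a source.
        if not self.media or not self.sources:
            raise ValueError("a claim needs at least one medium and one source")


claim = Claim(
    text="Video shows a riot in central Stockholm",
    content_type="video",
    media=[Medium("YouTube", "https://www.youtube.com/")],
    sources=[Source("@uploader_account")],
)
print(len(claim.media), len(claim.sources))
```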

Additionally, the prototype was meant to harness Web 2.0-style interactivity by allowing multiple journalists to verify and cross-validate the same claims. This approach, which relies on the wisdom of the crowd, has been shown to improve the reliability of the outcome, particularly when those involved in the process are vetted in advance (Kittur and Kraut 2008; Ullrich et al. 2008).
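One simple way to combine several journalists’ assessments of the same claim is to take the median of their scores on the ordinal verdict scale, counting only reviewers vetted in advance. This is our own illustrative choice of aggregation rule, not necessarily the one used in the cited studies or in the FCA; reviewer names and the vetted set are hypothetical, and “no decision” votes are left out of the median.

```python
from statistics import median_low

SCALE = ["false", "mostly false", "mixed", "mostly true", "true"]


def aggregate_verdicts(votes, vetted):
    """Combine verdicts from several reviewers into one label.

    votes:  mapping reviewer -> verdict label (may include "no decision")
    vetted: set of reviewers approved in advance
    """
    ranks = [SCALE.index(label) for reviewer, label in votes.items()
             if reviewer in vetted and label in SCALE]
    if not ranks:
        return "no decision"
    # median_low always returns an actual scale position, never a midpoint.
    return SCALE[median_low(ranks)]


votes = {
    "reporter_1": "mostly false",
    "reporter_2": "false",
    "reporter_3": "mixed",
    "anonymous": "true",  # not vetted, so ignored
    "reporter_4": "no decision",
}
print(aggregate_verdicts(votes, vetted={"reporter_1", "reporter_2", "reporter_3", "reporter_4"}))
```

With an even number of votes, `median_low` leans towards the more sceptical of the two middle verdicts, a conservative choice for a verification tool.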

We chose Ruby on Rails to develop the prototype because it is a proven, efficient, and scalable open-source web development framework with an object-oriented structure. Its layered Model-View-Controller architecture made it easy to scale and to integrate new services (Bächle and Kirchberg 2007). We also chose it for its rapid development process and high server performance, as well as its large community and thorough documentation.

Feedback and Testing in the Newsrooms

In April 2019, the first version of the prototype was completed and ready for testing. Initial testing involved students at the university’s journalism department, and in May 2019 a workshop gave journalists from the Stockholm-based media a hands-on trial. During this workshop, the participants made important comments on various functions, and the developers subsequently revised the prototype for more extensive testing. During the development stage, three trainees who had participated in the earlier workshops volunteered to be “test pilots” for the prototype and provide feedback. Later, another five journalists from outside the nine Stockholm-based media companies also tested and evaluated the prototype.

The test sessions and evaluations were recorded, and the test persons’ reactions and comments were collected and analysed. Semi-structured interviews were conducted with journalists in the newsrooms as a follow-up to the test sessions and evaluations. The analysis of the workshop material aimed to identify and organise emerging patterns and themes in the respondents’ answers in relation to our research questions, following the analytical approach described by McCracken (Citation1988).

The testers reported that they had experimented with the prototype as part of their daily verification routines. Since taking full advantage of the tool's features and functions required time and effort, the testers reverted to their traditional methods of working. To them, this did not mean that the tool was not useful, but rather that it was complex and demanding in terms of time and energy compared to traditional methods. The testers indicated that the fast-paced work environment and the lack of time for capacity building meant that they could not afford to acquire the necessary skills and practise using the tool enough to leverage it in their daily routines. Part of the reason for this was that they had started with an empty database: the tool contained no prior reviews of media, sources, or content. The assumption was that as more content was added, journalists would find the tool less time-consuming and more intuitive. A review of the entries that the various testers added to the prototype database showed that the likelihood of misinformation in a particular message (claim) increased if the medium or source had previously been found to be unreliable. There was consensus among the testers that it would have been important for editors and managers to allocate sufficient time and resources for training in the tool and to add content to its database on a continuous basis. “How I wish if someone else did the work by filling in the fact-checks so all I would do is search and know whether a claim is true or not,” one of the testers said.

The evaluation also found that the most effective way to encourage journalists to use the prototype was to fill the database with as many previously fact-checked entries as possible, contributed by as many journalists as possible. For one of the testers, this was a fundamental requirement, since he did not have time to carry out the verification and populate the database himself. It was also unclear whether learning a new tool was a good investment of time when many other tools were already part of daily work.

Overall, the journalists who were introduced to the prototype expressed strong support for it, particularly because it could help them follow well-defined routines and share information with and learn from one another. However, the limitations of the tool also became clear in interviews with fact-checking specialists in the participating newsrooms. At one of the newspapers, a journalist noted that the tool was useful only to the extent that he had the time to learn and work with it. This was confirmed by a journalist at public service radio, who concluded that the tool is important but difficult to use straight away. It required time to learn and practise, time that she and other journalists did not necessarily have.

It is not enough to introduce new digital tools, according to the journalists. It is also necessary to “allocate hours and invest some effort in training in how to use it”. In the short term, one solution would be to push for specialisation in advanced verification. At one of the newspapers, a dedicated fact-checker holds this role in the newsroom and carries out verification when it is needed.

The journalists also concluded that time was not the only issue; newsroom culture also played a role. As one of them noted, “the cultural mindset of most journalists not used to online verification is that they would rather make traditional phone calls”. Changing this culture requires support from a higher level, as one of the journalists emphasised:

“Higher management needs to put policies in place to ensure that journalists are given the time and resources to equip themselves with the knowledge and tools to properly verify digital content. This requires changes in culture and decisions that embrace the verification of digital content and make it a priority.”

Conclusions

Verification of facts is a basic norm in professional journalism (Kovach and Rosenstiel Citation2001; Hanitzsch Citation2007). Today, this norm is challenged by a dual pressure: higher speed and greater demands in news production on the one hand, and an increasing flow of information from different digital sources on the other. To deal with this challenge and respond to the public’s declining trust in news media, fact-checking has grown into a new genre in journalism (Graves Citation2016).

The results of the Journalist 2018 survey show a lack of routines in Swedish newsrooms when it comes to verifying digital sources. Only one in six journalists agrees that there are clear routines in their newsroom. There is also considerable uncertainty about whether it is even possible to verify news and other information found on social media and websites: only one-third believe that it is.

Altogether, the results of the survey indicate that Swedish journalists are uncertain about how to verify digital sources. This provided a strong rationale for developing a prototype tool for the systematic verification of digital sources. In cooperation with Swedish news media, and with the three-pillar model as the theoretical framework, the prototype was developed through a goal-directed design process (Metzger et al. Citation2003; Cooper et al. Citation2014).

The reporters gave positive feedback during the workshops, but it soon became apparent that introducing this new form of systematic verification into their daily work in the newsrooms would be difficult. The user tests and evaluations showed that systematic verification took too much time and was too complicated for journalists in their everyday work. It was easier to rely on old, familiar methods and strategies, such as using the telephone and well-established sources (cf. Brantzaeg et al. Citation2016).

Consequently, it is important that any technical tool for fact-checking and verification is easy to use and integrates seamlessly into newsroom editorial systems if it is to become a vital part of the working process. Traditional newsroom cultures that rely mainly on personal contacts to verify information also need to evolve, so that journalists are introduced to new digital tools and trained to acquire the technical know-how needed to verify digital content effectively. However, it is not enough to introduce new digital tools to the staff; management also needs to allocate the necessary time for training, as other recent studies have shown. For example, Maiden and his co-authors argue that newsrooms are difficult environments for introducing digital innovations. The reasons they give are decreased newsroom autonomy, a newsroom work culture that is closed to innovation, a lack of management support for journalist training and for setting up the conditions for successful uptake, the irrelevance of the new technologies, and the absence of innovative individuals (Maiden et al. Citation2019, 223).

Our study shows that new digital tools alone are not enough to improve verification routines in journalists’ daily work. It is also vital to change the newsroom culture related to verification, which brings us to the discussion of fact-based journalism in relation to values-based and subjective journalism (Hanitzsch Citation2007). Ultimately, the importance given to verification depends on the values within a specific journalistic culture, and management must acknowledge these values for verification to work well.

While the prototype's original goal was to improve verification processes in Swedish newsrooms, the preliminary positive reactions from some IFCN members indicate that it may be worthwhile to seek feedback from professional fact-checkers internationally, particularly as the global fact-checking movement continues to grow (Graves Citation2016) and needs new and innovative tools to confront the growing challenges of online disinformation.

Limits of the Study and Future Research

Verification is a difficult area to research. It covers both professional ideals and less-than-ideal daily work, and especially the tensions between them. The results of the project are limited: they cover only one attempt to introduce new methods for verifying digital sources in Swedish newsrooms. Whether these results are specific to a strong professional journalistic culture such as Sweden's, or typical of journalism in general, remains to be researched. Future comparative studies could examine attitudes towards verification in different journalistic cultures.

Another area for future research is the relationship between traditional forms of verification and the use of new digital tools to verify digital content, for example, the extent to which different methods are used and in which situations. How factors such as age, media format, and tradition influence the choice of verification methods would also be worth investigating. All these questions require more in-depth studies using methods other than those employed in the project described in this article.

The clash between the project's ambition to systematise verification and newsroom conditions and professional culture is one of the more important results of this study. We hope that scholars in other countries will follow with action research projects that test how verification can be improved, as verification is still “the essence of journalism”, in the words of Kovach and Rosenstiel (Citation2001).

Acknowledgements

The authors wish to thank all media representatives and university students participating in this study. We appreciate your valuable input to this article.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Notes

1 The full code is available at: www.po.se/english/code-of-ethics

References

- Allern, Sigurd. 2019. “Journalistikk som ‘Faktasjekking’.” In Journalistikk, Profesjon og Endring, edited by Paul Bjerke, Birgitte Kjos Fonn, and Birgit Røe Mathisen, 167–187. Stamsund: Orkana forlag.

- Bächle, Michael, and Paul Kirchberg. 2007. “Ruby on Rails.” IEEE Software 24 (6): 105–108.

- Brantzaeg, Petter Bae, Marika Lüders, Jochen Spangenberg, Linda Rath-Wiggins, and Asbjørn Følstad. 2016. “Emerging Journalistic Verification Practices Concerning Social Media.” Journalism Practice 10 (3): 323–342.

- Chalaby, Jean K. 1996. “Journalism as an Anglo-American Invention.” European Journal of Communication 11 (3): 303–326.

- Cooper, Alan, Robert Reimann, David Cronin, Christopher Noessel, Jason Csizmadi, and Doug LeMoine. 2014. About Face. The Essentials of Interaction Design. Indianapolis: Wiley.

- Ekström, Mats. 2020. “Vad är Sanning i Journalistiken? [What is Truth in Journalism?].” In Sanning, Förbannad Lögn och Journalistik. [Truth, Lies and Journalism], edited by Lars Truedson, 37–55. Stockholm: Institutet för mediestudier.

- Galtung, Johan. 2002. “Peace Journalism: A Challenge.” In Journalism and the New World Order, edited by Wilhelm Kempf, and Heikki Luostarinen, 259–272. Göteborg: Nordicom.

- Godler, Yigal, and Zvi Reich. 2017. “Journalistic Evidence: Cross-Verification as a Constituent of Mediated Knowledge.” Journalism 18 (5): 558–574.

- Graves, Lucas. 2016. Deciding What’s True. The Rise of Political Fact-Checking in American Journalism. New York: Columbia University Press.

- Hallin, Daniel C., and Paolo Mancini. 2004. Comparing Media Systems. Three Models of Media and Politics. Cambridge: Cambridge University Press.

- Hanitzsch, Thomas. 2007. “Deconstructing Journalism Culture: Toward a Universal Theory.” Communication Theory 17: 367–385.

- Hayden, Michael E. 2019. Guide to Open Source Intelligence (OSINT). The Tow Center for Digital Journalism. New York: Columbia University.

- Hermida, Alfred. 2015. “Nothing but the Truth: Redrafting the Journalistic Boundary of Verification.” In Boundaries of Journalism, edited by Matt Carlson, and Seth C. Lewis, 37–50. London: Routledge.

- International Fact-Checking Network. 2020. The Elephant in the Room: Fact-Checking vs Verification. YouTube. https://youtube.com/watch?v=GrpSwGj2p18.

- Karlsson, Michael. 2012. “Charting the Liquidity of Online News: Moving Towards a Method for Content Analysis of Online News.” International Communication Gazette 74 (4): 385–402.

- Kemmis, Stephen, Robin McTaggart, and Rhonda Nixon. 2014. The Action Research Planner – Doing Critical Participatory Action Research. Singapore: Springer, 167–187. doi:10.1007/978-981-4560-67-2.

- Kittur, Aniket, and Robert E. Kraut. 2008. “Harnessing the Wisdom of Crowds in Wikipedia: Quality Through Coordination.” Proceedings of the 2008 ACM Conference on Computer Supported Cooperative Work, 37–46.

- Kovach, Bill, and Tom Rosenstiel. 2001. The Elements of Journalism. New York: Crown Publishers.

- Larssen, Urban. 2020. “‘But Verifying Facts Is What We Do!’: Fact-Checking and Journalistic Professional Autonomy.” In Democracy and Fake News: Information Manipulation and Post-Truth Politics, edited by Serena Giusti, and Elisa Piras, 199–213. London and New York: Routledge.

- Lewin, Kurt. 1946. “Action Research and Minority Problems.” Journal of Social Issues 2 (4): 34–46.

- Machill, Marcel, and Markus Beiler. 2009. “The Importance of the Internet for Journalistic Research.” Journalism Studies 10 (2): 178–203.

- Maiden, Neil, Konstantinos Zachos, Amanda Brown, George Brock, Lars Nyre, Aleksander Nygård Tonheim, Dimitris Apostolou, and Jeremy Evans. 2018. “Making the News: Digital Creativity Support for Journalists.” CHI ’18: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Paper 475. doi:10.1145/3173574.3174049.

- Maiden, Neil, Konstantinos Zachos, Amanda Brown, Lars Nyre, Balder Holm, Aleksander Nygård Tonheim, Claus Hesseling, Andrea Wagemans, and Dimitri Apostolou. 2019. “Evaluating the use of Digital Creativity Support by Journalists in Newsrooms.” Proceedings of the 2019 Conference on Creativity and Cognition, 222–232. doi:10.1145/3325480.3325484.

- McCracken, Grant. 1988. The Long Interview. Newbury Park, CA: Sage.

- Metzger, Miriam J., Andrew J. Flanagin, Keren Eyal, Daisy R. Lemus, and Robert M. McCann. 2003. “Credibility for the 21st Century: Integrating Perspectives on Source, Message, and Media Credibility in the Contemporary Media Environment.” Annals of the International Communication Association 27 (1): 293–335.

- Molina, Maria D., S. Shyam Sundar, Thai Le, and Dongwon Lee. 2019. “‘Fake News’ Is Not Simply False Information: A Concept Explication and Taxonomy of Online Content.” American Behavioral Scientist 65 (2): 180–212.

- Nygren, Gunnar. 2003. Granskning med Förhinder: Lokaltidningarnas Granskning i Fyra Kommuner. [Scrutiny with Prevention: The Local Newspapers’ Scrutiny in Four Municipalities]. Rapportserie nr. 4 ed. Sundsvall: Demokratiinstitutet.

- Nygren, Gunnar, and Andreas Widholm. 2018. “Changing Norms Concerning Verification. Towards a Relative Truth in Online News?” In Trust in Media and Journalism, edited by Kim Otto, and Andreas Köhler, 39–59. Wiesbaden: Springer. doi:10.1007/978-3-658-20765-6_3.

- Shapiro, Ivor, Colette Brin, Isabelle Bédard-Brûlé, and Kasia Mychajlowycz. 2013. “Verification as a Strategic Ritual: How Journalists Retrospectively Describe Processes for Ensuring Accuracy.” Journalism Practice 7 (6): 657–673. doi:10.1080/17512786.2013.765638.

- Stray, Jonathan. 2019. “Making Artificial Intelligence Work for Investigative Journalism.” Digital Journalism 7 (8): 1076–1097. doi:10.1080/21670811.2019.1630289.

- Thurman, Neil, Konstantin Dörr, and Jessica Kunert. 2017. “When Reporters get Hands-on with Robo-Writing: Professionals Consider Automated Journalism’s Capabilities and Consequences.” Digital Journalism 5 (10): 1240–1259. doi:10.1080/21670811.2017.1289819.

- Ullrich, Carsten, Kerstin Borau, Heng Luo, Xiaohong Tan, Liping Shen, and Ruimin Shen. 2008. “Why Web 2.0 Is Good for Learning and for Research: Principles and Prototypes.” Proceedings of the 17th International Conference on World Wide Web, 705–714.

- Wardle, Claire. 2018. “The Need for Smarter Definitions and Practical, Timely Empirical Research on Information Disorder.” Digital Journalism 6 (8): 951–963.

- Widholm, Andreas. 2016. “Tracing Online News in Motion.” Digital Journalism 4 (1): 24–40.

- Witschge, Tamara, and Gunnar Nygren. 2009. “Journalism: A Profession Under Pressure?” Journal of Media Business Studies 6 (1): 37–59.

- Zelizer, Barbie. 2005. “The Culture of Journalism.” In Mass Media and Society, edited by James P. Curran, and Michael Gurevitch, 198–214. London: Hodder Arnold.