ABSTRACT

Scholars are divided over whether communicating to the public the existence of scientific consensus on an issue influences public acceptance of the conclusions represented by that consensus. Here, we examine the influence of four messages on perception and acceptance of the scientific consensus on the safety of genetically modified organisms (GMOs): two messages supporting the idea that there is a consensus that GMOs are safe for human consumption and two questioning that such a consensus exists. We found that although participants concluded that the pro-consensus messages made stronger arguments and were likely to be more representative of the scientific community’s attitudes, those messages did not abate participants’ concern about GMOs. In fact, people’s pre-manipulation attitudes toward GMOs were the strongest predictor of our outcome variables (i.e. perceived argument strength, post-message GMO concern, and perceived percentage of scientists who agree). Thus, the results of this study do not support the hypothesis that consensus messaging changes the public’s hearts and minds; instead, they provide more support for the strong role of motivated reasoning.

Introduction

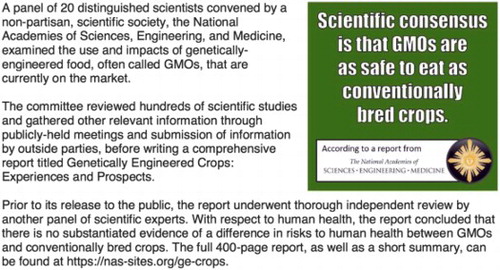

In May of 2016, the National Academies of Sciences, Engineering, and Medicine (NASEM) released its report, “Genetically Engineered Crops: Experiences and Prospects.” Written by a panel of experts from diverse disciplines relevant to the issue, the consensus statement included a comprehensive evaluation of the literature, as well as expert and public input. As part of this process, the panelists examined the purported risks and benefits associated with genetically engineered crops and foods (more colloquially known as genetically modified organisms, or GMOs) across a range of issues, including their environmental effects (e.g. crop yields, abundance and diversity of insects, insecticide use, herbicide use), human health effects (e.g. nutrient composition, influence on allergies, incidence of health problems), and other social and economic effects. The NASEM committee concluded that there was “no substantiated evidence of a difference in risks to human health between currently commercialized genetically engineered (GE) crops and conventionally bred crops,” and “no conclusive cause-and-effect evidence of environmental problems from the GE crops.” Media outlets such as The New York Times translated that carefully composed language to mean that GE crops are safe (e.g. Pollack, 2016), and the Pew Research Center similarly interpreted the finding as suggesting that GM foods are safe to eat (Kennedy & Funk, 2016).

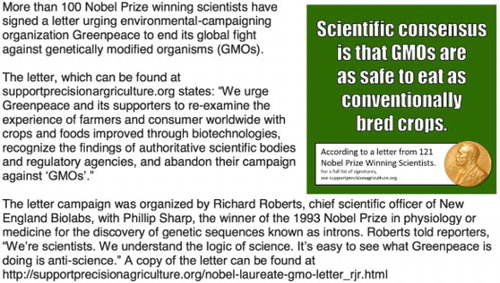

The NASEM is not the only “expert voice” to have spoken on the issue of GMOs. More than 100 Nobel Prize winners signed an open letter to the environmental advocacy organization Greenpeace, castigating the organization’s anti-GMO campaign (Agre et al., 2016). In the body of their June 29, 2016 letter, the Nobel Laureates wrote:

Scientific and regulatory agencies around the world have repeatedly and consistently found crops and foods improved through biotechnology to be as safe as, if not safer than those derived from any other method of production. There has never been a single confirmed case of a negative health outcome for humans or animals from their consumption. Their environmental impacts have been shown repeatedly to be less damaging to the environment, and a boon to global biodiversity.

Although their statement is consistent with that of the NASEM panel, and the Nobel Laureate signatories are esteemed experts in their respective fields of chemistry, physics, literature, medicine, and economics, only some have expertise in biology, and fewer still are experts in agricultural biotechnology. As such, the Nobel Laureates’ stated position might reasonably be seen as less authoritative than that of the NASEM panel of experts.

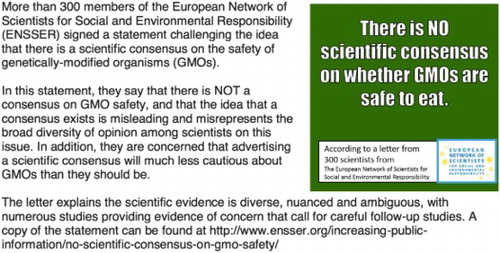

In contrast to the consensus statements by the NASEM panel and the Nobel Laureates concluding that GMOs are safe, other scientists with varying domains of expertise have argued that there is considerable uncertainty about the risks and benefits of GMOs and, therefore, that there can be no valid scientific consensus about their safety. For example, more than 300 members of the European Network of Scientists for Social and Environmental Responsibility (ENSSER) signed a statement, dated October 21, 2013, that challenges the assertion that there is scientific consensus regarding the impacts of GMOs on human health and the environment (Hilbeck et al., 2015). “This claimed consensus on GMO safety does not exist …,” ENSSER chairperson Dr. Angelika Hilbeck stated. “Such claims may place human and environmental health at undue risk and create an atmosphere of complacency.” However, like the Nobel Laureates’ letter, the ENSSER statement was signed by experts from a multitude of disciplines, many of them unrelated to GMOs, and it may similarly be viewed as carrying less authoritative weight.

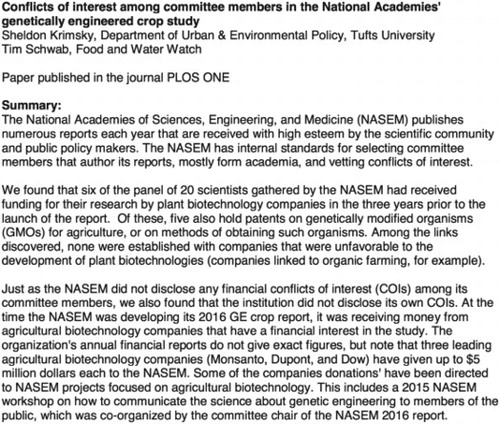

More recently, Sheldon Krimsky and Tim Schwab, a scholar and a journalist known for taking strong positions against GMOs, challenged the NASEM consensus panel’s statement by questioning the impartiality of the panelists who wrote the report (Krimsky & Schwab, 2017). They asserted that among the 20 panelists, six received funding from plant biotechnology companies in the three years prior to the launch of the report, five held patents on GMOs or on methods of obtaining GMOs, and none had relationships with companies critical of GMO technology (such as those involved with organic farming). Krimsky and Schwab contended that these circumstances reflect undisclosed conflicts of interest that render the panel’s conclusions suspect (Footnote 1).

The messages from the NASEM, the Nobel Laureates, the ENSSER, and Krimsky and Schwab clearly differ as to whether they support or reject a scientific consensus surrounding the safety of GMOs. Perhaps less apparent to the public is that the relevant expertise these sources bring to the question also varies significantly. Does the public differentiate among these competing voices when considering the messages about GMOs? Are public attitudes influenced by messages about scientific consensus or are they primarily driven by their preexisting views?

In this study, we ask (RQ1) whether there are differences in how these real-world messages are evaluated, focusing on whether participants believe each message is representative of the scientific community as a whole (and thus describes the “scientific consensus”) and how strong they perceive its argument to be. In addition, we ask (RQ2) whether, and if so, to what extent, these messages influence participants’ concern about GMOs, including public perceptions of how many scientists agree that GMOs are as safe as their more conventionally grown counterparts.

Does consensus messaging influence public opinion?

To what extent do messages from such varied experts influence public opinion on GMOs? Most work examining the effectiveness of messaging about scientific consensus has focused on climate change. Some have argued that highlighting the scientific consensus on a particular issue can thwart political polarization of that issue (e.g. van der Linden, Clarke, & Maibach, 2015; van der Linden, Leiserowitz, Feinberg, & Maibach, 2015). This theory seems reasonable. After all, our society is one that relies on a division of cognitive labor (e.g. Kitcher, 1990; Weisberg & Muldoon, 2009). We typically trust experts to produce accurate and reliable knowledge in their respective fields (Hardwig, 1991; Hendriks, Kienhues, & Bromme, 2016). Thus, it ought to follow that we (as non-expert publics) will defer to in-domain experts’ positions once we know what those positions are.

Yet, public opinion surveys appear to show that members of the public do not know what those positions are, sometimes misunderstanding the consensus surrounding an issue or whether a consensus even exists (e.g. Pew, 2016). These misunderstandings have been attributed to exposure to false media balance, a situation in which issues are presented as a two-sided debate even when the preponderance of evidence is on one side (e.g. Boykoff, 2013; see also Dixon & Clarke, 2013), as well as to the spread of misinformation by those opposed to specific proposed policy responses (Oreskes & Conway, 2010). Therefore, some theorize that correcting misperceptions that surround people’s understanding of consensus can act as a “gateway belief” that leads to positive changes in public attitudes and support (e.g. van der Linden, Leiserowitz et al., 2015). In sum, these scholars argue that communicating with the public about scientific consensus can help increase public acceptance and support for positions and actions aligned with that consensus.

However, there are both theoretical and empirical reasons to believe that consensus messaging is ineffective (e.g. Pearce et al., 2017). It is widely known, for example, that people engage in motivated reasoning (Kunda, 1990) and that information is processed through audience members’ “values filters” (e.g. Brossard, Scheufele, Kim, & Lewenstein, 2009; Dunlap & McCright, 2008; Kahan, Braman, Slovic, Gastil, & Cohen, 2009). Importantly, those who discover that scientists disagree with their own opinions can be motivated to reject scientific consensus to resolve cognitive dissonance (see Pasek, 2017). For example, individuals may doubt the credibility of those crafting consensus statements or even interpret consensus as collusion rather than as a reflection of the actual weight of evidence. Research has shown that the perceived credibility of environmental and climate scientists is polarized along party lines (Gauchat & O’Brien, 2017; Pew, 2016), and although the issue of GMOs is not politically polarized, only 35% of U.S. adults trust scientists to give full and accurate information about GMOs (Kennedy & Funk, 2016). Moreover, recent work by Pasek (2017) found support for the hypothesis that recognizing that a scientific consensus exists is influenced by an individual’s worldview (Kahan, 2015, 2017), and that people are “perfectly comfortable” asserting that their views differ from those of most scientists (Pasek, 2017). Therefore, those who are motivated by their prior values and beliefs to reject the conclusion that GMOs are safe can comfortably counterargue either that the message is not representative of scientists’ views in general or, like Krimsky and Schwab (2017), question the motivations or credibility of the scientists involved.

What is meant by “scientific consensus”?

Before forming conclusions about whether scientific consensus influences public opinion, scholars must first agree on how to operationalize awareness of scientific consensus, as public knowledge (or opinion) of scientific consensus has been measured in different ways. On one hand, many large-scale public opinion surveys on climate change have assessed awareness of scientific consensus by simply asking participants whether or not scientists agree on the issue (e.g. APPC, 2015; Pew, 2014; PRRI, 2014; see Footnote 2). On the other hand, van der Linden and colleagues have measured awareness of scientific consensus by asking participants to estimate, from 0 to 100 percent, what percent of climate scientists agree that climate change is happening and is human caused (e.g. van der Linden, Leiserowitz et al., 2015; van der Linden, Leiserowitz, Rosenthal, & Maibach, 2017). Because the latter measure is not bound by the same statistical restrictions as binary or categorical response options, it allows researchers to capture more variance among responses. However, the measure also raises an important question: in the public view, what percentage of scientists must agree for scientific consensus to be established? A change in perceived consensus found using this measure may not indicate that people are moving from rejecting to accepting that there is a consensus. Instead, it could reflect people who already perceive a consensus shifting from their own initial estimate of the percentage of scientists who agree on an issue (e.g. 75%) to the one specified by the consensus message (e.g. 97%; van der Linden, Leiserowitz et al., 2015; van der Linden et al., 2017).
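To make this measurement concern concrete, the short Python sketch that follows contrasts two summaries of percentage estimates collected before and after a consensus message: the mean shift in estimates, and the number of respondents whose estimate crosses their own threshold for what counts as a consensus. The function name, the per-respondent thresholds, and all numbers are hypothetical illustrations, not data from this or any cited study.

```python
from statistics import mean


def summarize_shift(pre, post, thresholds):
    """Contrast the average change in estimated agreement with the number of
    respondents who move from 'no consensus' to 'consensus' according to
    their own (hypothetical) threshold for what percentage counts."""
    mean_shift = mean(after - before for before, after in zip(pre, post))
    # Below their threshold before the message, at or above it afterwards.
    crossed = sum(1 for before, after, t in zip(pre, post, thresholds)
                  if before < t <= after)
    # Already perceived a consensus before the message.
    already = sum(1 for before, t in zip(pre, thresholds) if before >= t)
    return mean_shift, crossed, already


# Hypothetical 0-100 estimates for four respondents.
pre = [75, 80, 70, 90]
post = [97, 97, 90, 97]
thresholds = [70, 70, 95, 80]
print(summarize_shift(pre, post, thresholds))  # (16.5, 0, 3)
```

In this made-up example the mean estimate rises by 16.5 points, yet no respondent moves from rejecting to accepting a consensus: three already perceived one, and the fourth remains below their threshold.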

We should also ask what establishes consensus in the minds of the public. Before describing the current experiment, we first discuss three potential methods of establishing scientific consensus that are relevant to the messages used in the current study.

Scientists’ opinions

As highlighted by Powell (2016), the Oxford English Dictionary defines consensus as “agreement in opinion; the collective unanimous opinion of a number of persons.” Thus, one definition of scientific consensus is scientists’ opinion, and when communicators invoke scientific consensus with the intent to persuade, they hope that audiences will defer to that opinion. That is, the public will defer to what scientists collectively believe to be true without requiring the scientists to produce evidence to back those judgments.

In this context, appeals such as “4 out of 5 dentists recommend flossing” or “97% of climate scientists have concluded that human-caused global warming is happening” (e.g. Cook et al., 2013; Oreskes, 2004) might be interpreted as an appeal to the public to accept these scientists’ personal beliefs. It is important to note that this theory is reminiscent of the deficit/diffusion model of science communication. In diffusion models, communication channels are unidirectional: Information flows from experts to uninformed publics who are expected to defer to scientific authority. However, as Pearce et al. (2017) stated: “focusing on consensus amongst experts as a route to policy progress misunderstands the role of scientific knowledge in public affairs and policymaking.” Over and over again, communication research has found that deficit/diffusion models of communication are overly simplistic and fail to consider how deeply political many of these issues can be (Hansen, Holm, Frewer, Robinson, & Sandøe, 2003; Nisbet & Mooney, 2007; Sturgis & Allum, 2004). As a result, efforts have been made by science communication scholars to push beyond deficit/diffusion models toward more participatory ones (e.g. Palmer & Schibeci, 2014).

Also crucial is whose opinion counts when establishing consensus. For example, in the messages about GMOs discussed in this introduction, we described different types of expert voices on the issue, ranging from the expert panelists convened for the NASEM committee to Nobel Laureates and ENSSER signatories, many of whom have expertise not relevant to GMOs. Do the Nobel Laureates’ or ENSSER signatories’ opinions on GMOs count as demonstrations of scientific consensus? Arguably they should not, given that the majority of those involved do not have expertise relevant to agricultural biotechnology. However, both groups leverage their presumed credibility as scientists and scholars (broadly construed) to imply authority on the issue.

The question of who counts as a scientist also matters in establishing scientific consensus. For example, a Pew Research Center study that aimed to compare the public’s and scientists’ views on science issues operationalized “scientists” as anyone who was a member of the American Association for the Advancement of Science (AAAS; Pew, 2015). Pew found that only 87% of AAAS members say that climate change is mostly due to human activity (Pew, 2015; see Footnote 3). Importantly, however, members of AAAS range from academics actively publishing in various scientific domains to members of the public who are merely curious about science. Should all of these individuals count as “scientists”? Who does the public think counts as a scientist (see Suldovsky, Landrum, & Stroud, under review)? In fact, using AAAS members as a proxy for scientists and concluding that 87% agree that climate change is mostly anthropogenic in origin undermines the AAAS’s own statement, reported on the climate change page of its website (whatweknow.aaas.org), that “Based on the evidence, about 97% of climate scientists agree that human-caused climate change is happening” (see also van der Linden, Leiserowitz, Feinberg, & Maibach, 2014). Arguably, the ability of scientific consensus to influence public opinion depends, at least in part, on whom the public considers to be a scientist, as well as on the expertise and trustworthiness that they afford individuals within that domain (e.g. Landrum, Eaves, & Shafto, 2015).

Weight of evidence

In contrast to interpreting scientific consensus as the percentage of scientists who agree on an issue, another possibility is that scientific consensus is perceived to exist based on the weight of evidence. Indeed, the original study that produced the 97% figure used by AAAS and by van der Linden and colleagues (as well as numerous others) did not describe the percentage of scientists who stated on a survey that they believed in human-caused climate change. Instead, Cook et al. (2013), from whom this percentage was derived, published a review of the titles and abstracts of all peer-reviewed articles from 1991 to 2011 that appeared in a Web of Science search using the terms “global climate change” and “global warming” (Cook et al., 2013; Powell, 2016). In their analysis, the authors included all of the articles whose abstracts explicitly took the stance that climate change exists and is human caused.

This review article was not strictly based on weight of evidence, but it was not strictly a report of opinions either. Powell (2016) argues that Cook et al. (2013) underestimated the percentage of authors who accept anthropogenic climate change by discounting those who did not explicitly endorse its existence but instead implicitly endorsed it by examining its effects. For example, Powell (2016) argues, 100 recent articles on lunar craters neither endorse nor reject the idea that those craters were created by meteorite impacts; nonetheless, this does not mean that the authors of these articles deny the craters’ existence.
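Part of the disagreement between Cook et al. (2013) and Powell (2016) is a question of denominators. The Python sketch that follows uses made-up abstract counts, not figures from either paper, to compute the endorsement share among abstracts that state a position and among all abstracts, and to show how reclassifying implicit endorsements (Powell's point) changes both. The function and parameter names are hypothetical.

```python
def endorsement_shares(endorse, reject, uncertain, no_position, implicit=0):
    """Return (share among position-stating abstracts, share among all abstracts).

    `implicit` moves that many abstracts from `no_position` to `endorse`,
    mimicking the argument that implicit endorsements should be counted.
    """
    if implicit > no_position:
        raise ValueError("cannot reclassify more abstracts than have no position")
    endorse += implicit
    no_position -= implicit
    stated = endorse + reject + uncertain
    total = stated + no_position
    return endorse / stated, endorse / total


# Hypothetical counts: 97 endorse, 2 reject, 1 uncertain, 200 state no position.
print(endorsement_shares(97, 2, 1, 200))                # roughly 0.97 and 0.32
print(endorsement_shares(97, 2, 1, 200, implicit=150))  # roughly 0.99 and 0.82
```

The same hypothetical corpus yields a figure near 97% or near 32% depending on whether abstracts without an explicit position are excluded, which is why the choice of denominator matters when such percentages are read as "agreement."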

For a true weight of evidence argument, meta-analyses and reviews of all literature relevant to climate change would need to be compiled and summarized. However, the kind of data on which climate scientists rely does not lend itself well to meta-analysis. The effects of climate change are measured in diverse ways by different disciplines, including atmospheric sciences, agriculture, ecology, entomology, geology, geography, medicine, microbiology, soil science, and others. This expansive literature can be difficult to aggregate, and thus, it is understandable that climate change communicators may prefer to simplify the consensus argument by referring to the estimated proportion of scientists who agree that climate change is real and is human-caused.

Statements from elite scientific organizations

Another basis for establishing scientific consensus is the existence of statements published by consensus panels convened by respected scientific organizations, such as NASEM. The NASEM convenes panels of top experts on an issue (from a variety of disciplinary perspectives) to evaluate the relevant existing literature on that issue to identify what science knows, what is unknown, and steps for future research. With regard to climate change, NASA’s global climate change website (climate.nasa.gov/scientific-consensus/) includes appeals to multiple scientific societies such as AAAS, NASEM, the American Chemical Society, the American Geophysical Union, the American Medical Association, the American Meteorological Society, the American Physical Society, and the Geological Society of America.

Consensus appeals using statements from scientific societies can be perceived as combining scientific opinion with weight of evidence, particularly when these statements are crafted as part of a report on a review of the existing literature by experts with relevant expertise (as is the case with the NASEM panels and reports from the Intergovernmental Panel on Climate Change, or IPCC). Moreover, as a collective group of scientists, each institution likely carries more credibility and social power than any individual scientist or a survey of multiple scientists.

Current study

Here, we focus on the effects of some of the recent messages about the scientific consensus surrounding GMOs discussed above: the report from the National Academies of Sciences, Engineering, and Medicine (NASEM), the letter from the Nobel Laureates (NOBEL), the signed statement by members of the European Network of Scientists for Social and Environmental Responsibility (ENSSER), and the article by Sheldon Krimsky and Tim Schwab (KRIM). In our view, the NASEM panel establishes one type of scientific consensus on GMOs (i.e. a statement from an elite organization following a review of the evidence by relevant experts), whereas the other messages merely support or challenge the existence of a consensus. However, the public may interpret these messages differently than we do. Nobel Laureates are arguably perceived as elite scientists by the public; thus, the public could respect Nobel Laureates’ opinions on GMOs and interpret their statement as a demonstration of “scientific consensus,” even though the vast majority are not experts on the topic. Similarly, the public could interpret the ENSSER signatories’ statement as representing a scientific consensus that there is no consensus on GMOs. The ENSSER signatories purport to hold positions as scientists, and the organization’s emphasis on environmental responsibility in challenging the existence of a scientific consensus on “new genetic modification techniques” (Footnote 4) could make the signatories appear to have topical, if not directly relevant, expertise. Finally, Krimsky and Schwab composed and published a research article that directly challenges the credibility of the NASEM panel in order to contest the establishment of a scientific consensus on GMOs. Because they publish about GMOs, these authors may be interpreted as experts on the topic, but they also demonstrate a method by which people can reject scientific consensus if they are ideologically predisposed to do so.

How are these different real-world messages interpreted, and are they likely to influence public opinion on GMOs? Here, we examine differences in how each message is evaluated (RQ1), looking at whether participants believe the messages are representative of the scientific community and how strong they perceive the arguments to be. In addition, we examine whether, and if so, to what extent, these messages influence participants’ concern about GMOs (RQ2), including their perceptions of how many scientists agree that GMOs are as safe as their more conventionally grown counterparts.

Method

We conducted a randomized online survey experiment with a sample of participants from Amazon’s Mechanical Turk (MTurk, N = 1,983; see Footnote 5). Participants ranged in age from 18 to 81 years (M = 36.13, Median = 33, SD = 11.8). Regarding self-identified race and ethnicity, about 8% of the sample were Black or African American and 7.6% were Hispanic (see Table 1 for more detail); 57.5% identified as female, and the median years of education (excluding kindergarten) was 16 (i.e. a 4-year college degree; M = 15.13, SD = 2.48). The sample leaned liberal (Median = “somewhat liberal”): 52% reported being very or somewhat liberal, 23% reported being moderate, and about 25% identified as very or somewhat conservative. Table 1 compares our sample to census estimates.

Table 1. Our sample demographics compared to the 2015 ACS demographic and housing estimates (https://factfinder.census.org).

Messages tested

Five messages were used in the current study. Two of these, the NASEM and NOBEL messages, contended that scientists agree that GMOs are as safe to eat as conventionally bred crops. Two other messages, ENSSER and KRIM, stated instead that there is no scientific consensus surrounding GMOs. The fifth message, which acted as our control, was not about GMOs at all but briefly described the history of baseball. Importantly, while the messages in this study retained the relevant main arguments of their original sources, they were not identical to those written by the organizations. Instead, the language in each message was simplified and abbreviated to increase the likelihood that study participants would read the entire text. In addition, each message was presented with a graphic that helped highlight the bottom-line argument (Footnote 6). The language of the messages and associated images can be found in the attached Appendix and on the study’s page on osf.io (https://osf.io/4tue8/).

Experiment design

Participants were randomly assigned to read a single message: one of the pro-consensus messages (i.e. NASEM, NOBEL), one of the anti-consensus messages (i.e. ENSSER, KRIM), or the control message (i.e. history of baseball). All participants answered a series of questions about the message they read, their views about GMOs, and scientific consensus more broadly (including what percent of scientists must agree on an issue for a “consensus” to be established). These items are described in more detail below.

Reported frequency of purchasing non-GMO labeled foods

Because we aimed to measure any effects of the messages on GMO concern, it was important to have a pre-experiment measurement of attitudes towards GMOs. However, we were concerned that asking about GMO attitudes prior to the experimental manipulation would bias our sample. Instead, participants were asked about the frequency with which they purchase foods with the non-GMO label (on a scale from 1 = Never, even if available, to 5 = Always, when available), an item that was embedded in a series of 9 other shopping behaviors, such as whether the participants use reusable grocery bags, purchase bottled water, use coupons, and/or shop at grocery stores that specialize in organic and natural food. Having the non-GMO item embedded in a series of shopping behaviors helped to conceal our aim to establish a preliminary measurement of participants’ anti-GMO attitudes.

To test the item’s effectiveness as a proxy for anti-GMO attitudes, we compared it to the items asked later in the survey that comprise our GMO concern index, conducting this comparison only in the control condition, in which GMO concern could not be influenced by the experimental manipulation. In the control condition (n = 100), the average anti-GMO proxy score was 2.77 out of 5 (Median = 3, or “Sometimes select foods with non-GMO label”; SD = 1.09). A Cronbach’s alpha analysis of inter-item reliability showed that the anti-GMO proxy item is moderately and positively correlated with the rest of the GMO concern scale (correlation with the scale when the item is dropped from it: r = 0.44). When the item is included with the others to form the GMO concern index, Cronbach’s alpha is 0.85; dropping the item slightly increases alpha to 0.90. Thus, although the item is not a perfect approximation of general anti-GMO attitudes, it provides a workable approximation for the purpose of this study.
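The reliability statistics reported above (Cronbach’s alpha, the correlation of an item with the rest of the scale, and alpha with an item dropped) follow from their textbook definitions. The Python sketch that follows is a minimal, standard-library-only illustration; it is not the authors’ R code, it computes raw (unstandardized) alpha, and the function names and example scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def cronbach_alpha(items):
    """Raw Cronbach's alpha; `items` is a list of columns, one per item."""
    k = len(items)
    totals = [sum(row) for row in zip(*items)]  # each respondent's summed score
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def item_diagnostics(items):
    """For each item: (correlation with the sum of the other items,
    alpha of the scale with that item dropped)."""
    results = []
    for i, col in enumerate(items):
        rest = [c for j, c in enumerate(items) if j != i]
        rest_totals = [sum(row) for row in zip(*rest)]
        alpha_if_dropped = cronbach_alpha(rest) if len(rest) > 1 else float("nan")
        results.append((pearson(col, rest_totals), alpha_if_dropped))
    return results


# Hypothetical scores for six respondents: a proxy item plus three concern items.
proxy = [2, 3, 1, 4, 3, 5]
safe = [2, 4, 1, 5, 3, 6]
health = [3, 3, 2, 4, 3, 5]
risk = [2, 4, 1, 5, 2, 5]
scale = [proxy, safe, health, risk]
print(round(cronbach_alpha(scale), 3))
for name, (r_rest, a_drop) in zip(["proxy", "safe", "health", "risk"],
                                  item_diagnostics(scale)):
    print(name, round(r_rest, 3), round(a_drop, 3))
```

An item whose removal raises alpha, as with the proxy item in the control condition, is less consistent with the remaining items than they are with one another.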

Dependent variables

Interpretation of the messages (manipulation check).

To check that participants interpreted the consensus and anti-consensus messages as intended (i.e. to serve as a manipulation check), we asked whether the message that they saw presented a case that: (a) people should be concerned that the GMOs currently on the market are not safe, (b) people should withhold judgement about whether the GMOs currently on the market are safe or not safe, or, (c) people should not be concerned, the GMOs that are currently on the market are safe. The two consensus messages should have been interpreted as (c), whereas the two anti-consensus messages should be interpreted as (b). This item was not displayed to participants in the control condition in which the message did not discuss GMOs.

Representative of the scientific community

Participants were also asked how likely it is, in their opinion, that the position on GMOs taken by the organization (as described in the statements) represents the position of the scientific community as a whole on the issue. Participants used a 6-point Likert-type response scale from 1 (extremely unlikely) to 6 (extremely likely). This item was not displayed to the participants in the control condition.

Argument strength

To capture participants’ perceptions of the strength of each message’s argument, we asked a series of four items that have been used to test argument strength in previous literature (Zhao, Strasser, Cappella, Lerman, & Fishbein, 2011): how believable participants found the argument to be, how convincing they found the argument made by the statement, how much more confident the statement made them feel about eating GMOs, and how much more anxious the statement made them feel about eating GMOs. Each item was scored on a 5-point Likert-type scale. A parallel analysis suggested that the scale breaks down into two separate factors (Footnote 7). Thus, we calculated argument strength using only the “believable” and “convincing” items (Footnote 8). These items also were not displayed to participants in the control condition.
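Parallel analysis (Horn’s method) retains a factor only if its eigenvalue in the observed correlation matrix exceeds the corresponding eigenvalue expected from random data of the same size. The Python sketch that follows is a minimal, standard-library-only illustration of that idea: it uses principal-component eigenvalues (computed with the Jacobi method) and compares them with the mean eigenvalues from simulated normal data. This is one common variant; the R implementation the authors used may differ in details such as the percentile or the type of eigenvalues compared. All function names and the simulated data are hypothetical.

```python
import random
from math import copysign, sqrt


def correlation_matrix(data):
    """Pearson correlation matrix; `data` is a list of respondent rows."""
    cols = list(zip(*data))
    n, p = len(data), len(cols)
    means = [sum(c) / n for c in cols]
    dev = [[v - m for v in c] for c, m in zip(cols, means)]
    norms = [sqrt(sum(d * d for d in col)) for col in dev]
    return [[sum(a * b for a, b in zip(dev[i], dev[j])) / (norms[i] * norms[j])
             for j in range(p)] for i in range(p)]


def jacobi_eigenvalues(matrix, tol=1e-18, max_sweeps=100):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations, descending."""
    a = [row[:] for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = copysign(1.0, theta) / (abs(theta) + sqrt(theta * theta + 1))
                c = 1 / sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # rotate columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # rotate rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


def parallel_analysis(data, n_iter=100, seed=0):
    """Return (factors retained, observed eigenvalues, mean random eigenvalues)."""
    n, p = len(data), len(data[0])
    observed = jacobi_eigenvalues(correlation_matrix(data))
    rng = random.Random(seed)
    sums = [0.0] * p
    for _ in range(n_iter):
        noise = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(n)]
        for k, ev in enumerate(jacobi_eigenvalues(correlation_matrix(noise))):
            sums[k] += ev
    random_means = [total / n_iter for total in sums]
    retained = 0
    for obs, rnd in zip(observed, random_means):
        if obs <= rnd:
            break
        retained += 1
    return retained, observed, random_means


# Hypothetical data: three items driven by one latent factor plus noise.
gen = random.Random(42)
rows = []
for _ in range(300):
    f = gen.gauss(0, 1)
    rows.append([f + 0.5 * gen.gauss(0, 1) for _ in range(3)])
print(parallel_analysis(rows, n_iter=50)[0])  # expected: 1 factor
```

Applied to items that split into two clusters, as the argument strength items did here, the same comparison would retain two components.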

Concern about GMOs

As alluded to earlier, we wanted to capture participants’ concern about GMOs to measure the direct and indirect influences of the messages on this variable. To do this, we asked three questions about GMOs that were scored so that higher scores represented stronger anti-GMO attitudes (i.e. greater concern about GMOs). One item measured agreement with the statement that “GMOs are as safe as conventionally-grown crops” on a scale from 1 (strongly agree) to 6 (strongly disagree). Another asked whether foods with GMOs are (1) much better for your health than foods with no GMOs, (2) a little better, (3) neither better nor worse, (4) a little worse, or (5) much worse for your health. A third asked how risky participants perceived eating GMOs to be, from 1 (very low risk) to 5 (very high risk). The order of these items was randomized among participants. A parallel analysis (using the nFactors package in R) indicated that these items load onto one factor, and their inter-item reliability is α = 0.89 (95% CI = 0.89, 0.90). To create an index of GMO concern, we standardized and averaged these three variables (i.e. GMOConcern) and then standardized the average (i.e. ZGMOConcern) (Footnote 9). In the control condition, in which participants could not be influenced by the manipulation, the average GMO concern score was 0.03 (Median = −0.9, SD = 1.05, n = 101), and scores ranged from −2.09 to 2.15.
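The index construction described above (standardize each item, average the standardized items, then standardize the average) can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ R code; the helper and parameter names and the five hypothetical respondents are assumptions, and the sketch uses the sample standard deviation.

```python
from statistics import mean, stdev


def zscores(values):
    """Standardize a list of scores to mean 0 and (sample) SD 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def gmo_concern_index(safe_disagree, health_worse, risk):
    """Hypothetical helper: standardize each concern item (higher = more
    concern), average the z-scores per respondent (GMOConcern), then
    re-standardize that average (ZGMOConcern)."""
    z_items = [zscores(item) for item in (safe_disagree, health_worse, risk)]
    gmo_concern = [mean(scores) for scores in zip(*z_items)]
    return zscores(gmo_concern)


# Five hypothetical respondents; items use 1-6, 1-5, and 1-5 scales.
safe_disagree = [1, 3, 6, 4, 2]
health_worse = [2, 3, 5, 4, 1]
risk = [1, 3, 5, 4, 2]
print([round(z, 2) for z in gmo_concern_index(safe_disagree, health_worse, risk)])
```

Standardizing first keeps the 6-point item from outweighing the 5-point items; re-standardizing is needed because the average of correlated z-scores generally has an SD below 1.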

Percent of scientists who agree

Because other studies of consensus (though primarily focused on climate change) measured participants’ estimates of the percent of scientists who agree with a statement (e.g. that anthropogenic climate change is happening; that GMOs are as safe as non-GMO counterparts for human consumption), we measured this variable in the same way. Participants were told:

Scientific consensus is the collective judgment of a community of scientists in a particular field on a particular issue. The existence of scientific consensus implies that scientists agree on the position, but it does not mean that all scientists agree. As far as you know, what percent of scientists (who are working in GMO-relevant fields) agree with the statement that “the GMOs currently on the market are as safe as their non-GMO counterparts”? If you aren’t sure, take your best guess.

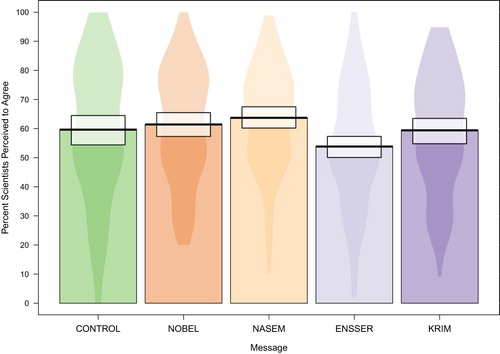

Participants responded using a slider on which they could choose any whole number between 0 and 100. This item was also displayed to participants in the control condition, where the average estimated percent of scientists in agreement was about 60% (Median = 60%, SD = 24%, n = 101).

Results

This study focuses on the five conditions in which participants were shown only one message: the National Academies of Sciences, Engineering, and Medicine message (i.e. NASEM), the Nobel Prize-winning scientists’ message (i.e. NOBEL), the anti-consensus message from the European Network of Scientists for Social and Environmental Responsibility (i.e. ENSSER), a summary of Krimsky and Schwab’s paper (i.e. KRIM) challenging the findings of the NASEM panel, and the control message (i.e. CONTROL) briefly describing the history of baseball. Analyses were conducted in R (R Core Team, 2017), and the results of the ANOVAs are reported using Type III sums of squares with partial eta squared as the measure of effect size (see Table 3). R code is available in the supplementary materials.
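Partial eta squared is a simple ratio once the sums of squares are available. The sketch below shows the ratio and its equivalent form in terms of F and its degrees of freedom. Obtaining the Type III sums of squares themselves requires fitting the model (typically with sum-to-zero contrasts) in a statistics package, which this standalone sketch does not do; the function names are illustrative.

```python
def partial_eta_squared(ss_effect: float, ss_error: float) -> float:
    """Partial eta squared: SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


def partial_eta_squared_from_f(f: float, df_effect: int, df_error: int) -> float:
    """Equivalent form using the F statistic and its degrees of freedom.

    Follows from F = (SS_effect / df_effect) / (SS_error / df_error).
    """
    return (f * df_effect) / (f * df_effect + df_error)


# Effect of message on perceived consensus reported later: F(4, 472) = 0.64.
print(round(partial_eta_squared_from_f(0.64, 4, 472), 4))  # 0.0054
```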

Preliminary analysis

Before testing our research questions, we wanted to ensure that the messages were interpreted as intended (that is, that the consensus messages communicated that there is a scientific consensus that GMOs are safe for human consumption and the anti-consensus messages communicated that people should withhold judgment about the safety of GMOs) and to test whether interpretation of the messages varied based on people’s attitudes toward GMOs (i.e. the frequency with which people purchase non-GMO labeled foods).

Interpretation of the message

The majority of participants interpreted the messages as intended: 95% of those who saw the NASEM message and 83% of those who saw the NOBEL message interpreted those messages as meaning that GMOs are safe, whereas 77% of those who saw the ENSSER message and 62% of those who saw the KRIM message interpreted those messages as meaning that people should withhold judgment (“wait and see”).Footnote10

To test the influence of anti-GMO attitudes on interpretation of the message, we conducted a multinomial logistic regression comparing the probabilities that participants said the message meant “not safe” or “wait and see” with the probability that they said it meant “safe”. Each message was compared with the NASEM message.Footnote11 As stated above, participants in the NASEM and NOBEL conditions were most likely to interpret the messages as stating that GMOs are safe. Although the ENSSER and KRIM messages were generally perceived as telling participants to withhold judgment (i.e. “wait and see”), as the anti-GMO proxy (i.e. participants’ frequency of purchasing non-GMO labeled food) increased, so did the likelihood that they interpreted the messages as saying GMOs are not safe, b = 0.20, p = .011 (for the effect of the anti-GMO proxy, see Table 2).
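In a baseline-category (multinomial) logit, each coefficient is a change in the log-odds of one category relative to the reference, so b = 0.20 corresponds to multiplying the odds of “not safe” versus “safe” by exp(0.20) ≈ 1.22 per unit of the proxy. The sketch below illustrates this under explicit assumptions: the reported 0.20 is taken to be the proxy coefficient for “not safe” versus “safe”, the message terms are omitted, and the intercepts and the “wait and see” slope are hypothetical values, not estimates from the study.

```python
import math

# Reported slope (b = 0.20), assumed here to be the proxy coefficient for
# "not safe" vs. the reference category "safe".
B_PROXY_NOT_SAFE = 0.20
# Hypothetical values for illustration only; not estimates from the study.
INTERCEPT_NOT_SAFE = -2.0
INTERCEPT_WAIT = 1.0
B_PROXY_WAIT = 0.05


def category_probs(proxy: float) -> dict:
    """Baseline-category logit with "safe" as the reference category."""
    etas = {  # log-odds of each category relative to "safe"
        "not safe": INTERCEPT_NOT_SAFE + B_PROXY_NOT_SAFE * proxy,
        "wait and see": INTERCEPT_WAIT + B_PROXY_WAIT * proxy,
    }
    denom = 1.0 + sum(math.exp(eta) for eta in etas.values())
    probs = {"safe": 1.0 / denom}
    probs.update({cat: math.exp(eta) / denom for cat, eta in etas.items()})
    return probs


def odds_vs_safe(probs: dict, category: str) -> float:
    return probs[category] / probs["safe"]


# A one-unit increase in the proxy multiplies the odds of "not safe" (vs. "safe")
# by exp(0.20), whatever the intercepts are.
p_low, p_high = category_probs(2.0), category_probs(3.0)
ratio = odds_vs_safe(p_high, "not safe") / odds_vs_safe(p_low, "not safe")
print(round(ratio, 3), round(math.exp(B_PROXY_NOT_SAFE), 3))
```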

Table 2. Coefficients (b) and averaged predicted probabilities (pp) from multinomial logistic regression.

For a statistical summary of the following results, see Table 3. Type III sums of squares are reported with partial eta squared as the measure of effect size.

Table 3. Summary of study results.

RQ1. How are the messages evaluated?

Is the message representative of the scientific community?

Our first analyses examined whether the perception that the message was representative of “the scientific community as a whole” varied based on message (i.e. condition), frequency with which one purchases non-GMO labeled food, and/or an interaction between the two. Participants in the control condition were not asked this question.

Our analysis found that participants generally perceived the two consensus messages as more representative of the scientific community than the two anti-consensus messages (NASEM vs. ENSSER: diff = 0.56, 95% CI[0.10, 1.01], p = .009; NASEM vs. KRIM: diff = 0.54, 95% CI[0.06, 1.02], p = .022; NOBEL vs. ENSSER: diff = 0.51, 95% CI[0.05, 0.97], p = .025; NOBEL vs. KRIM: diff = 0.49, 95% CI[0.00, 0.98], p = .050). The two consensus messages did not differ significantly from one another (NASEM vs. NOBEL: diff = 0.05, 95% CI[–0.43, 0.53], p = .993), and the two anti-consensus messages also did not differ significantly from each other (ENSSER vs. KRIM: diff = 0.02, 95% CI[–0.44, 0.48], p = 1.00).

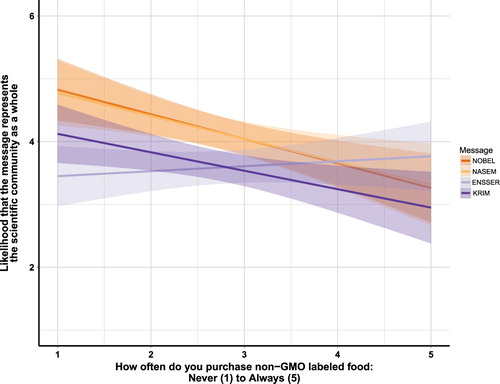

Moreover, people who were more frequent purchasers of non-GMO labeled foods perceived the messages as being less likely to be representative of the scientific community (B = –0.39). More importantly, however, there was an interaction effect suggesting that while the perception that the NASEM, NOBEL, and KRIMFootnote12 messages represent the scientific community drops as the frequency with which people report purchasing non-GMO labeled foods increases, for the ENSSER anti-consensus message, the relationship is a slightly positive one (see ).
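An interaction of this kind means that the slope of the anti-GMO proxy differs by message. Under treatment (dummy) coding with one message as the reference level, each message's simple slope is the proxy coefficient plus that message's interaction coefficient. The coefficients below are hypothetical, chosen only to mimic the qualitative pattern described (negative slopes for NASEM, NOBEL, and KRIM; a slightly positive slope for ENSSER); they are not the study's estimates.

```python
# Hypothetical coefficients (NOT the study's estimates) under treatment coding,
# with NASEM as the reference message. Chosen only to mimic the described pattern.
B_PROXY = -0.45  # proxy slope for the reference message (NASEM)
B_PROXY_X_MESSAGE = {"NASEM": 0.0, "NOBEL": 0.05, "KRIM": 0.10, "ENSSER": 0.50}


def simple_slope(message: str) -> float:
    """Predicted change in perceived representativeness per one-unit
    increase in the anti-GMO proxy, for a given message."""
    return B_PROXY + B_PROXY_X_MESSAGE[message]


for msg in B_PROXY_X_MESSAGE:
    direction = "falls" if simple_slope(msg) < 0 else "rises"
    print(f"{msg}: slope {simple_slope(msg):+.2f} (representativeness {direction})")
```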

How strong are the arguments made by the messages?

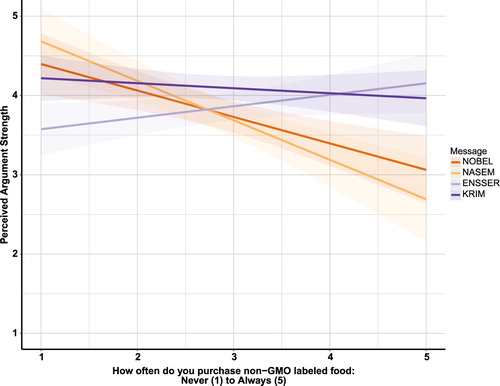

We were also interested in whether the messages were perceived to differ in argument strength. To examine this, we conducted a GLM on the argument strength score, using message, frequency of purchasing non-GMO labeled foods, and the interaction between the two as predictors. Despite a small main effect of message (see ), post-hoc tests with Tukey correction showed no significant pairwise differences between conditions, apart from a marginal difference between the KRIM and NOBEL messages (diff = 0.32, 95% CI[–0.02, 0.68], p = .078), with participants rating the NOBEL message (M = 3.79, SD = 0.99) lower on average than the KRIM message (M = 4.11, SD = 0.7).

People who reported purchasing non-GMO labeled food more often rated the messages as lower in argument strength (B = –0.33; raw correlation: Pearson’s r = –0.17, 95% CI[–0.27, –0.07], p < .001). Moreover, there was an interaction effect: for the two pro-consensus messages, perceived argument strength decreased as anti-GMO proxy scores increased. In contrast, perceived argument strength for the two anti-consensus messages stayed relatively stable across all levels of the anti-GMO proxy, with argument strength for the ENSSER message increasing slightly (see ).
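Confidence intervals for a raw Pearson correlation like the ones reported here are commonly obtained with the Fisher z transformation. The sketch below shows that standard method; it is not necessarily the procedure used in the authors' R code, and the sample size in the usage line is an assumption (roughly the four message conditions, 485 minus the 101 control participants), since the exact n for this correlation is not reported.

```python
import math
from statistics import NormalDist


def pearson_r_ci(r: float, n: int, level: float = 0.95) -> tuple:
    """Confidence interval for Pearson's r via the Fisher z transformation."""
    if not -1 < r < 1 or n <= 3:
        raise ValueError("need -1 < r < 1 and n > 3")
    z = math.atanh(r)                  # Fisher z
    se = 1 / math.sqrt(n - 3)          # approximate standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Hypothetical n: the four message conditions (485 minus 101 controls).
lo, hi = pearson_r_ci(-0.17, 384)
print(f"[{lo:.2f}, {hi:.2f}]")
```

Because the interval is symmetric on the z scale and then back-transformed, it is asymmetric around r on the correlation scale.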

RQ2. Do the messages differentially influence beliefs about GMOs?

To examine this question, we first looked at concern about GMOs and then examined beliefs about the scientific consensus surrounding GMOs (as measured by the perceived proportion of scientists who agree that GMOs are as safe as their non-GMO counterparts).

Do the messages influence concern about GMOs?

One of the purposes of consensus messaging – at least as it is described in the gateway belief model – is to influence attitudes towards the topic. Therefore, an important question for the current research is whether the messages used had an impact on concern about GMOs. To examine this question, we compared GMO concern in the four single message conditions (i.e. NOBEL, NASEM, ENSSER, & KRIM) to GMO concern in the control condition. As with the previous two analyses, we conducted a GLM analysis with message, frequency with which people purchase non-GMO labeled food, and the interaction between the two as predictors.

Unlike for the previous variables, we found no statistically significant effect of message on GMO concern. Unsurprisingly, people who reported purchasing non-GMO labeled food more frequently were also more concerned about GMOs (B = 0.43; raw correlation: r = .53, 95% CI[0.46, 0.60], p < .001). However, there was no significant interaction of message and frequency of purchasing non-GMO labeled foods on GMO concern. See .

Do the messages influence perceptions of scientific consensus?

Our last dependent variable is modeled after the measure in van der Linden et al. (2017), which operationalizes participants’ identification or understanding of consensus as their estimate of what percentage of scientists agree. Here, we asked participants what percentage of scientists (who are working in GMO-relevant fields) agree with the statement that “the GMOs currently on the market are as safe as their non-GMO counterparts.” Again, it is important to note that in the control condition, for which there was no message about GMO consensus, participants on average estimated that 59.64% of scientists agree that GMOs are as safe as their non-GMO counterparts. We found no effect of message, F(4, 472) = 0.64, p = .632.Footnote13 See Figure 3.

Figure 3. Violin plot of the percent of scientists perceived to agree, by condition. The “violins” illustrate kernel probability density (i.e. width represents the proportion of responses located at that value on the Y-axis). The thick black line marks the mean value for each message, and the error, illustrated by the white box, is the Bayesian highest density interval.
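A highest density interval is the narrowest interval that contains a given share of a posterior distribution. The caption does not say how each condition's posterior was obtained or what mass was used, so the sketch below assumes a list of posterior draws is available and a 95% mass; the function name is illustrative, and the simulated draws are a stand-in, not study data.

```python
import math
import random


def highest_density_interval(samples, mass=0.95):
    """Narrowest interval containing `mass` of the samples (e.g. posterior draws).

    Assumes a unimodal distribution; for multimodal posteriors the true HDI
    can be a union of disjoint intervals, which this sketch does not handle.
    """
    xs = sorted(samples)
    n = len(xs)
    k = math.ceil(mass * n)  # number of draws the interval must contain
    if not 1 <= k <= n:
        raise ValueError("mass must be in (0, 1] and samples non-empty")
    # Slide a window of k consecutive sorted draws and keep the narrowest one.
    start = min(range(n - k + 1), key=lambda i: xs[i + k - 1] - xs[i])
    return xs[start], xs[start + k - 1]


# Stand-in draws for one condition's mean (simulated, not study data).
random.seed(1)
draws = [random.gauss(60, 2.5) for _ in range(10_000)]
print(highest_density_interval(draws))
```

Unlike an equal-tailed interval, the HDI shifts toward the bulk of a skewed distribution, which is why the window search is used instead of fixed percentiles.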

Participants who reported purchasing more non-GMO labeled foods reported lower percentages of scientists who would be in agreement (B = –5.92, raw correlation: r = –0.24, 95% CI[–0.32, –0.16], p < .001), but there were no interactions between purchasing non-GMO labeled foods and message read (i.e., condition). See .

Discussion

This study was designed to evaluate four different messages about the consensus (or lack thereof) regarding GMOs, each from a different “expert” voice: the National Academies of Sciences, Engineering, and Medicine consensus panelists’ message (i.e. NASEM), the Nobel Prize-winning scientists’ consensus message (i.e. NOBEL), the anti-consensus message from the European Network of Scientists for Social and Environmental Responsibility (i.e. ENSSER), and a summary of Krimsky and Schwab’s anti-consensus paper (i.e. KRIM) challenging the findings of the NASEM panel.

Generally, participants interpreted the messages as intended: the two consensus messages were interpreted as saying that the GMOs currently on the market are safe, and the two anti-consensus messages were seen as saying that judgment of the safety of GMOs should be withheld. Participants saw the two consensus messages as more representative of the scientific community and as stronger arguments than the two anti-consensus messages. Importantly, however, there were interaction effects with our proxy for anti-GMO attitudes, measured before participants saw the messages: the more frequently participants reported purchasing non-GMO labeled food items, the less the consensus messages were seen as representative of the scientific community and as strong arguments. People’s reported frequency of purchasing non-GMO labeled foods did not seem to influence evaluations of the two anti-consensus messages in the way that it influenced evaluations of the consensus messages.

Moreover, neither the consensus nor the anti-consensus messages seemed to assuage or provoke GMO concern compared with the control condition. Although this contrasts with what the gateway belief model would predict (van der Linden, Leiserowitz et al., 2015), an experiment conducted by the same authors found results similar to ours (also see Kahan, 2017). In a study that used the same data reported in support of their gateway belief model, and that appeared as van der Linden et al. (2014), the authors report finding no significant effects of their consensus messaging treatments on important climate change attitudes,Footnote14 such as climate change belief and support for action.

Prior work has also examined the influence of consensus messaging on the estimated percentage of scientists who agree. In those cases, the consensus statements explicitly stated a percentage of scientists in agreement (e.g. 97% of scientists). However, the consensus messages about GMOs currently in the media do not mention a specific percentage of scientists who agree that GMOs are safe. So, we were interested in whether reading a consensus message would increase participants’ estimates of what percent of scientists agree in the absence of an anchoring point. Yet, as with GMO concern, participants’ estimates of what percent of scientists agree that GMOs are safe were predicted only by the anti-GMO attitudes proxy (i.e. the frequency with which they purchased non-GMO labeled foods, measured before reading the messages), and not by either the pro- or anti-consensus messages.

There is some debate over the power of consensus messaging to move people’s opinions on issues such as climate change (e.g. Pearce et al., 2017) and over whether knowledge about scientific consensus is actually a “gateway cognition” to public support (van der Linden, Leiserowitz et al., 2015; Kahan, 2017). Whereas most of this work has focused on climate change, our research examines consensus messaging in a different setting: the safety of genetically-modified organisms. Unlike public opinion on climate change, public opinion surrounding GMOs is not polarized along lines of political ideology, even though GMOs are a controversial issue.

We also differ from previous work in that the consensus messages used in this experiment did not mention a specific percentage of scientists in agreement but spoke about GMOs and consensus more broadly. Although we find some differences by condition in how messages are evaluated (e.g. whether they are representative of the scientific community as a whole, the strength of the arguments they make), we find no evidence that the existing consensus messages on GMOs (namely the NASEM consensus report) affect people’s concern about GMOs. In fact, the only significant predictor of GMO concern is the pre-message measurement of anti-GMO attitudes, that is, the frequency with which people purchase non-GMO labeled food.

As with most studies, ours has limitations that should be taken into consideration when interpreting the results. First, our participants were recruited from Amazon’s Mechanical Turk (MTurk) rather than from a more nationally representative panel. MTurk samples tend to be somewhat more liberal and more highly educated than the nation as a whole (see ). However, this matters less for studying GMOs than for studying climate change (the latter is highly politically polarized, with most liberals agreeing that anthropogenic climate change is real and problematic,Footnote15 whereas the issue of GMOs is not politically polarized;Footnote16 see Pew, 2015), and it matters less for experimental studies that use random assignment, like ours. Second, rather than an easily digestible graphic that quantifies consensus (e.g. a pie chart showing that 97% of scientists agree that climate change is real and human-caused; e.g. van der Linden et al., 2014), the consensus messages we used were edited versions of existing, lengthy messages. We anticipated that participants would not read, for example, the 420-page NASEM consensus report or the 17-page Krimsky and Schwab (2017) article in its entirety. We did, however, use existing short descriptions where possible (e.g. the abstract from Krimsky & Schwab, 2017). Thus, care should be taken when generalizing our results beyond our edited versions of the original messages.

We would also like to point out limitations of research on consensus messaging more generally. Indeed, some of the research on the influence of consensus messaging relies on a series of untested assumptions that we briefly described in the introduction. First, some of this work has assumed that the best way to measure perceptions of consensus is to ask participants what percentage of scientists agree. Second, it has assumed that the communicators of scientific consensus will be seen as credible by all audiences and that domain-specific scientists (e.g. climate scientists, GMO scientists) are perceived as being as credible as scientists broadly speaking (though evidence suggests some people are skeptical of the scientists who study climate change and those who study GMOs; e.g. Kennedy, 2016; Kennedy & Funk, 2016). Third, scholars studying this issue assume that even people who are ideologically predisposed to reject the consensus on a specific issue believe that they should, and will, update their views to align with scientific consensus. Although we have touched on some of these assumptions in the current research, they should be addressed by future research conducted with nationally representative populations.

Moreover, if studies continue to ask people what proportion of scientists agree on an issue as a measure of whether they understand that a consensus exists, it is vital also to ask participants what percentage of scientists must agree for a consensus to exist. Although we did not have a nationally representative sample, we asked our participants what percent of scientists must agree on an issue for consensus to be established. Interestingly, our sample reported a mean of about 62%, with wide variation in individual responses (Median = 64.5, SD = 21.48). Thus, it is possible that effects found by studies such as van der Linden et al. (2014), which examined changes in the estimated percent of scientists in agreement from an average of 67% to 97%, simply reflect moving people who already believe that climate change is a serious issue to the more accurate percentage (97%), while those who reject climate change continue to give lower estimates. It is also worth noting that we asked our sample to what extent they agreed with the statement “people should update their views to match what scientific consensus says” (1 = strongly disagree to 6 = strongly agree). On average, participants agreed only somewhat with this statement (M = 3.80, Median = 4 or “somewhat agree”, SD = 1.32), which leads us to question whether, and to what extent, scientific consensus even matters to the public.

Aggregating findings on a topic and determining where the science currently stands are clearly important responsibilities of the scientific community, and scientists should continue to communicate what they know to the public. However, it should not be assumed that such messaging about scientific consensus will change the hearts and minds of those who are ideologically or otherwise predisposed to reject conclusions that challenge their views. Current methods of communicating consensus rely on diffusion/deficit models of communication, assuming that rejection of scientific consensus occurs because people are misinformed about it (e.g. Oreskes & Conway, 2010), instead of recognizing that people may have ideological reasons to reject it (e.g. Kahan, 2015; Pasek, 2017). Our study supports the latter view. At least in the short term, appeals to experts and presentation of hard facts about the safety of GMOs do not appear to be effective in changing attitudes.

Supplemental Material

Acknowledgements

The authors would like to thank Brianne Suldovsky for her feedback on the experimental design and the staff of the Annenberg Public Policy Center for their help and support.

Disclosure Statement

No potential conflict of interest was reported by the authors.

ORCID

Asheley R. Landrum http://orcid.org/0000-0002-3074-804X

William K. Hallman http://orcid.org/0000-0002-4524-9876

Kathleen Hall Jamieson http://orcid.org/0000-0002-4167-3688

Notes

1. The NASEM disagrees and responded to the allegations made by Krimsky and Schwab in a press release (see http://www8.nationalacademies.org/onpinews/newsitem.aspx?RecordID=312017b) defending the rigor of its process, but it is also reportedly open to reviewing its criteria for what constitutes a conflict of interest (Basken, 2017).

2. Recent Pew Research Center surveys have, however, asked participants to estimate how many climate scientists say that human behavior is mostly responsible for climate change, with the following response options: “almost all”, “more than half”, “about half”, “fewer than half”, or “almost none” (Pew, 2016).

3. Relevant to the current study, 88% of AAAS members surveyed in the Pew study reported that they believe it is safe to eat GMOs.

5. Note that the experiment consisted of 20 between-participants conditions that made up three studies. Each condition was seen by approximately 100 participants. Due to length restrictions, we discuss only the first of the studies in this manuscript, which uses conditions 1 through 4 as well as the control condition. The total N for this study is 485.

6. Unlike the other messages, the KRIM message was not presented with a graphic as the message was originally part of a separate study. However, we thought it would be useful to examine any potential effects of the KRIM message on participants’ GMO concern in the current study. Despite not being presented with an image, the KRIM message did not differ in the analyses from the other anti-consensus message (see Results).

7. This solution is confirmed by other non-graphical solutions to the scree test, including eigenvalues (> mean), optimal coordinates, and the acceleration factor. See supplementary materials for more detail.

8. In the original manuscript draft, we ran the analyses with all four items combined. A reviewer suggested that we ought to separate these out, which was consistent with the parallel analyses (see above). Importantly, the results do not change significantly as a result of this edit.

9. The GMO concern index was re-standardized after the three contributing variables were averaged so that participants’ scores on the index would reflect their relative standing within the sample, which we believe gives the scores more meaning than an average of three standardized numbers.

10. For NASEM, 2% said “not safe” and 3% said “wait”. For NOBEL, 3% said “not safe” and 14% said “wait”.

11. Overall, there was a significant effect of Message (LR Chisq = 319.03, p < .001) and a significant effect of the anti-GMO proxy (LR Chisq = 8.24, p = .016).

12. At first it may seem counterintuitive that the KRIM message should show the same pattern as the NASEM and NOBEL messages rather than the ENSSER message. Although our question was meant to ask whether the message written by the authors was representative of the scientific community as a whole, it is possible that participants in the KRIM condition thought we were asking about the NASEM message. If so, what we are seeing is that participants who buy more non-GMO products are more likely to believe the KRIM argument that the NASEM consensus message does not represent the scientific community as a whole.

13. In the gateway belief model, perceptions of scientific agreement mediate the relationship between whether people saw a consensus statement (versus a control) and concern about GMOs. This requires, of course, a positive relationship between presence of a consensus message and the percent of scientists presumed to agree. As we found no significant relationship between these two variables, it is unnecessary to run the full model, although we include this analysis in our supplemental materials. The absence of this relationship is likely due, at least in part, to the type of consensus message used: the National Academies consensus report and the Nobel Prize winners’ letter did not mention a specific percentage of scientists who agreed with a conclusion, which could have helped anchor the consensus estimates.

14. The authors clarify in their response to Kahan that overall main effects (when collapsing across treatment conditions) were found for two of the four attitude variables (see van der Linden, Leiserowitz, & Maibach, 2017). The authors attribute the non-significance of the “climate change is happening” and the “public support for action” variables to the unbalanced sample sizes (989 in the collapsed treatment conditions and 115 in the control condition; van der Linden et al., 2017).

15. Therefore, it makes little sense to use MTurkers in a study that is supposed to change their beliefs about climate change when they already accept climate change.

16. When asked about the safety of consuming genetically modified foods, 37% of conservatives, 36% of moderates, and 41% of liberals report that they are safe to eat (Pew, 2015).

References

- Agre et al. (2016). Laureates letter supporting precision agriculture (GMOs). Retrieved from http://supportprecisionagriculture.org/nobel-laureate-gmo-letter_rjr.html.

- APPC. (2015). Annenberg Public Policy Center Papal Encyclical Survey.

- Basken, P. (2017). Under fire, National Academies Toughen Conflict-of-Interest Policies. Chronicle of Higher Education. Retrieved from https://www.chronicle.com/article/Under-Fire-National-Academies/239885.

- Boykoff, M. T. (2013). Public enemy No. 1? Understanding media representations of outlier views on climate change. American Behavioral Scientist, 57(6), 796–817. doi: 10.1177/0002764213476846

- Brossard, D., Scheufele, D. A., Kim, E., & Lewenstein, B. V. (2009). Religiosity as a perceptual filter: Examining processes of opinion formation about nanotechnology. Public Understanding of Science, 18(5), 546–558. doi: 10.1177/0963662507087304

- Cook, J., Nuccitelli, D., Green, S. A., Richardson, M., Winkler, B., Painting, R., … Skuce, A. (2013). Quantifying the consensus on anthropogenic global warming in the scientific literature. Environmental Research Letters, 8(2), 024024. doi: 10.1088/1748-9326/8/2/024024

- Dixon, G. N., & Clarke, C. E. (2013). Heightening uncertainty around certain science: Media coverage, false balance, and the autism-vaccine controversy. Science Communication, 35(3), 358–382. doi: 10.1177/1075547012458290

- Dunlap, R. E., & McCright, A. M. (2008). A widening gap: Republican and democratic views on climate change. Environment: Science and Policy for Sustainable Development, 50(5), 26–35.

- Gauchat, G., O’Brien, T., & Mirosa, O. (2017). The legitimacy of environmental scientists in the public sphere. Climatic Change, 143(3-4), 297–306. doi: 10.1007/s10584-017-2015-z

- Hansen, J., Holm, L., Frewer, L., Robinson, P., & Sandøe, P. (2003). Beyond the knowledge deficit: Recent research into lay and expert attitudes to food risks. Appetite, 41(2), 111–121. doi: 10.1016/S0195-6663(03)00079-5

- Hardwig, J. (1991). The role of trust in knowledge. The Journal of Philosophy, 88(12), 693–708. doi: 10.2307/2027007

- Hendriks, F., Kienhues, D., & Bromme, R. (2016). Trust in science and the science of trust. In B. Blöbaum (Ed.), Trust and communication in a digitized world. Models and concepts of trust research (pp 143–159). Berlin: Springer.

- Hilbeck, A., et al. (2015). No scientific consensus on GMO safety. Environmental Sciences Europe, 27(4), doi: 10.1186/s1230

- Kahan, D. M. (2015). Climate-science communication and the measurement problem. Advances in Political Psychology, 36(S1), 1–43. doi: 10.1111/pops.12244

- Kahan, D. M. (2017). The “gateway belief” illusion: Reanalyzing the results of a scientific-consensus messaging study. The Journal of Science Communication, 16(05), A03. Retrieved from https://jcom.sissa.it/sites/default/files/documents/JCOM_1605_2017_A03.pdf

- Kahan, D., Braman, D., Slovic, P., Gastil, J., & Cohen, G. (2009). Cultural cognition of the risks and benefits of nanotechnology. Nature Nanotechnology, 4, 87–90. doi: 10.1038/nnano.2008.341

- Kennedy, B. (2016). Conservative Republicans especially skeptical of climate scientists’ research and understanding. Fact Tank: News in Numbers. Pew Research Center. Retrieved from http://www.pewresearch.org/fact-tank/2016/10/04/conservative-republicans-especially-skeptical-of-climate-scientists-research-and-understanding/.

- Kennedy, B., & Funk, C. (2016). Many Americans are skeptical about scientific research on climate and GM foods. Fact Tank: News in Numbers. Pew Research Center. Retrieved from http://www.pewresearch.org/fact-tank/2016/12/05/many-americans-are-skeptical-about-scientific-research-on-climate-and-gm-foods/.

- Kitcher, P. (1990). The division of cognitive labor. The Journal of Philosophy, 87(1), 5–22. doi: 10.2307/2026796

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498. doi: 10.1037/0033-2909.108.3.480

- Krimsky, S., & Schwab, T. (2017). Conflicts of interest among committee members in the National Academies’ genetically engineered crop study. PLoS ONE, 12(2), e0172317. doi: 10.1371/journal.pone.0172317

- Landrum, A. R., Eaves, B. S., & Shafto, P. (2015). Learning to trust and trusting to learn: A theoretical framework. Trends in Cognitive Sciences, 19(3), 109–111. doi: 10.1016/j.tics.2014.12.007

- National Academies of Sciences, Engineering, and Medicine. (2016). Genetically engineered crops: Experiences and prospects. Washington, DC: The National Academies Press. doi: 10.17226/23395

- Nisbet, M., & Mooney, C. (2007). Framing Science. Science, 316(5821), 56. doi: 10.1126/science.1142030

- Oreskes, N. (2004). Beyond the ivory tower: The scientific consensus of climate change. Science, 306(5702), 1686. doi:10.1126/science.11036

- Oreskes, N., & Conway, E. M. (2010). Merchants of doubt: How a handful of scientists obscured the truth on issues from tobacco smoke to global warming. New York, NY: Bloomsbury.

- Palmer, S. E., & Schibeci, R. A. (2014). What conceptions of science communication are espoused by science research funding bodies? Public Understanding of Science, 23(5), 511–527. doi: 10.1177/0963662512455295

- Pasek, J. (2017). It’s not my consensus: Motivated reasoning and the sources of scientific illiteracy. Public Understanding of Science. Manuscript in press.

- Pearce, W., Grundmann, R., Hulme, M., Raman, S., Kershaw, E. H., & Tsouvalis, J. (2017). Beyond counting climate consensus. Environmental Communication, 11, 723–730. doi: 10.1080/17524032.2017.1333965

- Pew Research Center. (2014). General public science survey final questionnaire August 15-25, 2014.

- Pew Research Center. (2015). Public and scientists views on science and society. Retrieved from http://www.pewinternet.org/interactives/public-scientists-opinion-gap/

- Pew Research Center. (2016). The politics of climate. Retrieved from http://www.pewinternet.org/2016/10/04/the-politics-of-climate/

- Pollack, A. (2016). Genetically engineered crops are safe, analysis finds. The New York Times. Retrieved from https://www.nytimes.com/2016/05/18/business/genetically-engineered-crops-are-safe-analysis-finds.html.

- Powell, J. L. (2016). The consensus on anthropogenic global warming matters. Bulletin of Science, Technology, & Society, 36(3), 157–163. doi: 10.1177/0270467617707079

- PRRI. (2014). PRRI/AAR 2014 religion, values, & climate change survey: September 18-October 8, 2014.

- R Core Team (2017). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

- Sturgis, P., & Allum, N. (2004). Science in society: Re-evaluating the deficit model of public attitudes. Public Understanding of Science, 13(1), 55–74. doi: 10.1177/0963662504042690

- Suldovsky, B., Landrum, A. R., & Stroud, N. (under review). Public perceptions of who counts as a scientist for controversial science. Manuscript under review.

- van der Linden, S. L., Clarke, C. E., & Maibach, E. W. (2015). Highlighting consensus among medical scientists increases public support for vaccines: Evidence from a randomized experiment. BMC Public Health, 15(1207), 352. doi: 10.1186/s12889-015-2541-4

- van der Linden, S. L., Leiserowitz, A. A., Feinberg, G. D., & Maibach, E. W. (2014). How to communicate the scientific consensus on climate change: Plain facts, pie charts, or metaphors. Climatic Change, 126(1-2), 255–262. doi: 10.1007/s10584-014-1190-4

- van der Linden, S. L., Leiserowitz, A. A., Feinberg, G. D., & Maibach, E. W. (2015). The scientific consensus on climate change as a gateway belief: Experimental evidence. PLoS ONE, 10(2), e0118489. doi: 10.1371/journal.pone.0118489

- van der Linden, S. L., Leiserowitz, A. A., & Maibach, E. W. (2017). Gateway illusion or cultural cognition confusion? Journal of Science Communication, 16(5), A04. doi:10.17863/CAM.22574

- van der Linden, S. L., Leiserowitz, A., Rosenthal, S., & Maibach, E. (2017). Inoculating the public against misinformation about climate change. Global Challenges, 1(2), 1600008. doi: 10.1002/gch2.201600008

- Weisberg, M., & Muldoon, R. (2009). Epistemic landscapes and the division of cognitive labor. Philosophy of Science, 76(2), 225–252. doi: 10.1086/644786

- Zhao, X., Strasser, A. A., Cappella, J. N., Lerman, C., & Fishbein, M. (2011). A measure of perceived argument strength: Reliability and validity in health communication contexts. Communication Methods and Measures, 5(1), 48–75. doi: 10.1080/19312458.2010.547822