ABSTRACT

Growing demand for seafood and declining fishery harvests have driven the intensive farming of marine aquaculture in coastal regions, which may cause severe coastal water problems without adequate environmental management. Effective mapping of mariculture areas is therefore essential for protecting coastal environments. However, because mariculture areas have limited spatial coverage and complex structures, it remains challenging for traditional methods to extract them accurately from medium spatial resolution (MSR) images. To solve this problem, we propose the full resolution cascade convolutional neural network (FRCNet), which maintains full-resolution features throughout the network, to identify mariculture areas from MSR images. Specifically, FRCNet uses a sequential full resolution neural network as the first-level subnetwork and gradually aggregates higher-level subnetworks in a cascade way. Meanwhile, we adopt a repeated fusion strategy so that features receive information from different subnetworks simultaneously, leading to rich and representative features. As a result, FRCNet can effectively recognize different kinds of mariculture areas from MSR images. Results show that FRCNet outperforms other classical and recently proposed methods. The proposed method can provide valuable datasets for large-scale, intelligent modeling in support of marine aquaculture management and coastal zone planning.

1. Introduction

Marine aquaculture has become an essential agricultural sector in China’s coastal areas, offering significant potential for food production and economic development (Campbell and Pauly 2013; Burbridge et al. 2001; Gentry et al. 2017). China’s mariculture production increased from 10.6 million tons in 2000 (Bureau of Fisheries of the Ministry of Agriculture 2001) to 20.7 million tons in 2019 (Bureau of Fisheries of the Ministry of Agriculture 2020), accounting for nearly 60% of global aquaculture production. However, such rapid and excessive development has released large amounts of farm chemicals, fertilizer, residual feed, and excrement into the marine environment, severely polluting the water (Tovar et al. 2000), sediment (Rubio-Portillo et al. 2019), and marine organisms (Rigos and Katharios 2010). Accurate mapping of marine aquaculture areas is therefore important for protecting the marine environment and coastal resources: it supports understanding of the spatio-temporal distribution of mariculture areas, estimation of mariculture production, and assessment of the carrying capacity of the coastal environment.

For monitoring large-scale mariculture areas in complex marine environments, remote sensing is well suited because of its broad image coverage, regular revisit period, and low cost (El Mahrad et al. 2020; Kachelriess et al. 2014). Methods proposed to identify mariculture areas from remote sensing images fall into four groups: visual interpretation, spectral or spatial feature analysis, object-based image analysis (OBIA), and deep learning. Visual interpretation is less often adopted because it is labor-intensive and time-consuming. Spectral or spatial feature analyses, such as Otsu thresholding or texture-enhanced methods (Fan et al. 2015; Lu et al. 2015), are commonly used in pixel-based mapping. OBIA is generally adopted to interpret detailed information from high-spatial-resolution (HSR) images (Fu et al. 2019; Wang et al. 2017; Zheng et al. 2017; Duan et al. 2020). Because all of these methods rely on hand-crafted features, they cannot achieve robustness and high accuracy at the same time (Zhang, Zhang, and Du 2016). Researchers have therefore turned to deep learning approaches (Yuan et al. 2020; Ekim and Sertel 2021; Castelo-Cabay, Piedra-Fernandez, and Ayala 2022), especially fully convolutional neural networks (FCN), to automatically extract high-level semantic information from HSR images (Cui et al. 2019; Fu et al. 2019; Shi et al. 2018; Sui et al. 2020).

Currently, most mariculture extraction methods, especially FCN-based models, are designed for HSR images. However, nearly all HSR images are commercial data and are therefore costly for large-scale studies. In contrast, the large amount of free, publicly available medium-spatial-resolution (MSR) imagery, such as multispectral images from the Landsat series, Sentinel-2, and GaoFen-1 (GF-1), has received less attention. Developing a mariculture detection system based on such MSR images is therefore necessary, as it can provide more economical and reliable spatial distribution information over large areas.

However, conventional FCN-based methods face several limitations in accurately detecting mariculture areas from MSR images. First, because of the down-sampling operations in the network, the bottom feature maps are generally much smaller than the input images. It is therefore difficult to delineate mariculture areas, which cover only small extents in the original MSR images. To address this problem, researchers have used skip connections (Ronneberger, Fischer, and Brox 2015), up-sampling algorithms (Shelhamer, Long, and Darrell 2017), and deconvolutions (Noh, Hong, and Han 2015). However, it remains difficult for such methods to recover the detailed information in MSR images through the learning process of FCN-based models. Although some researchers use the boundaries of image objects as auxiliary inputs, acquiring accurate boundary information takes considerable effort and time (Fu et al. 2019; Zhang et al. 2018). Second, various complex structures of marine aquaculture areas coexist over large areas, making it difficult for an FCN-based model to focus on a suitable receptive field. One commonly used approach is to feed a series of images at different resolutions into separate subnetworks (Eigen and Fergus 2015; Liu et al. 2016; Zhao and Du 2016). Although this makes different parts of objects prominent in different subnetworks, preprocessing and training on multi-scale images take additional time and computation. Another approach is a multi-scale structure that arranges pooling (He et al. 2015; Zhao et al. 2017) or dilated convolution (Chen et al. 2018) operations in parallel to emphasize different parts of objects in a feature pyramid. However, arranging the convolution or pooling operations in parallel loses the interactions between different scales.

In summary, because of the down-sampling operations and the complex structures of mariculture areas, mapping mariculture areas from MSR images remains challenging. To overcome these limitations, we propose the full resolution cascade convolutional neural network (FRCNet), which keeps the full resolution of feature maps throughout the model, to accurately extract mariculture areas from MSR images. We also compare FRCNet with other state-of-the-art methods. The main contributions of our study are as follows:

We present an end-to-end FCN-based model for marine aquaculture extraction from medium spatial resolution images, termed FRCNet.

A feature pyramid structure is designed to preserve the most detailed information in the feature maps, which effectively enriches the details of multi-scale features.

The multi-resolution feature fusion structure is constructed to fuse and enhance the multi-scale information of different levels, which can produce more effective and representative features.

2. Materials and methods

2.1. Dataset and preprocessing

A marine aquaculture dataset collected by the wide-field-of-view sensors of the Gaofen-1 satellite (GF-1 WFV) was built for this study. The original images were first orthorectified and projected to the Universal Transverse Mercator (UTM) coordinate system. To reduce the influence of atmospheric conditions on the samples, we then performed atmospheric correction using the FLAASH module in ENVI (v5.3.1).

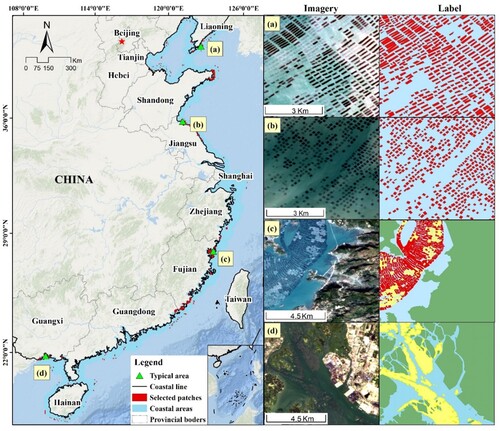

The dataset contains 705 non-overlapping patches of 256 × 256 pixels with corresponding labels. Like the original images, the patches have a spatial resolution of 16 m and four multispectral bands: B1 (450–520 nm, blue), B2 (520–590 nm, green), B3 (630–690 nm, red), and B4 (770–890 nm, near infrared). As shown in , the patches are randomly distributed along China’s coastal regions and contain typical marine plant culture areas (MPC) and marine animal culture areas (MAC) in China.

Figure 1. Location of the selected patches in China’s coastal region, all within 30 km of the coastline. Images and corresponding labels of typical areas (a), (b), (c), and (d) are shown in the right columns, with red for marine plant culture areas (MPC) and yellow for marine animal culture areas (MAC).

The MAC consist of large numbers of fish cages built with wooden blocks and bamboo. As most of them are constructed without standard sizes or materials, MAC show complex spectra and shapes in remotely sensed images.

The MPC consist of cultivated plants twined on fixed and floating ropes. Because these plants float below the sea surface, the characteristics of MPC in remote sensing images are influenced by the density of the cultivated plants and by the sea environment, such as waves or water transparency.

2.2. Full resolution cascade convolutional neural network

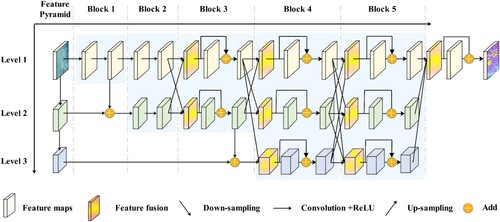

In this study, we map marine aquaculture areas from MSR images using the proposed FRCNet. As shown in , FRCNet mainly consists of three cascade subnetworks. Specifically, we first use a sequential full resolution neural network as the first-level subnetwork, which is expected to preserve the most detailed information. On top of this network, we gradually add higher-level subnetworks in a cascade way. Meanwhile, we adopt a repeated fusion strategy so that multi-scale information can be fully exchanged across subnetworks. The following parts describe three important structures in our study: (1) the sequential full resolution neural network, (2) multi-scale cascade information aggregation, and (3) the multi-resolution features fusion strategy.

2.2.1. Sequential full resolution neural network

As shown in , we chose the widely adopted VGG-16 model as the first-level subnetwork of FRCNet because of its high performance. VGG-16 is built by connecting five blocks of convolutional layers and three fully connected layers in series. Each block, composed of two or three convolutional layers, produces feature maps with the same spatial resolution, and a pooling layer between adjacent blocks halves the resolution. Detailed structures of VGG-16 are described in Karen and Andrew (2014). To keep feature maps at full resolution and preserve detailed information, we removed all the pooling and fully connected layers. The first subnetwork of FRCNet is therefore built by connecting these convolutional blocks in series, so the first-level feature maps keep the same resolution as the input images.
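The effect of removing the pooling layers can be checked with simple bookkeeping. The sketch below traces the spatial size and the theoretical receptive field through the 13 convolutional layers of VGG-16 (3 × 3 kernels, stride 1, padding 1, grouped 2-2-3-3-3), with and without the 2 × 2 max-pooling layer that standard VGG-16 places after each block. The 256 × 256 input and 16 m pixel size come from Section 2.1; `trace` is a hypothetical helper, and the receptive-field recurrence is the standard one for stacked convolutions rather than anything specific to FRCNet.

```python
# Number of 3x3 convolutional layers in each of the five VGG-16 blocks.
VGG16_BLOCKS = [2, 2, 3, 3, 3]
PIXEL_SIZE_M = 16  # GF-1 WFV ground sampling distance (Section 2.1)


def trace(input_size, keep_pooling):
    """Return (output_size, receptive_field_px, stride_px) after the conv blocks.

    A 3x3 conv with stride 1 and padding 1 keeps the spatial size and widens the
    receptive field by (3 - 1) * stride. A 2x2 max-pool with stride 2 halves the
    size, widens the receptive field by (2 - 1) * stride, and doubles the stride
    (the input-pixel spacing between neighbouring feature-map positions).
    """
    size, rf, stride = input_size, 1, 1
    for n_convs in VGG16_BLOCKS:
        for _ in range(n_convs):
            rf += 2 * stride
        if keep_pooling:
            size //= 2
            rf += stride
            stride *= 2
    return size, rf, stride


for keep in (True, False):
    size, rf, stride = trace(256, keep)
    label = "with pooling   " if keep else "without pooling"
    print(f"{label}: {size}x{size} map, receptive field {rf} px "
          f"({rf * PIXEL_SIZE_M} m), one position per {stride * PIXEL_SIZE_M} m")
```

Under these assumptions the pooled stack ends at 8 × 8 positions spaced 512 m apart, whereas the pooling-free stack keeps one position per 16 m pixel but sees only a 27-pixel (432 m) window, which is why wider context has to come from the coarser subnetworks described next.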

2.2.2. Multi-scale cascade information aggregation

Convolutional neural network (CNN)-based methods can obtain representative and extensive features by increasing network depth, benefiting from more non-linear operations and larger receptive fields (Zeiler and Fergus 2014). However, features generated by such linearly stacked structures may focus only on a specific scale, making it difficult to discriminate confusing objects with complex structures. In addition, deeper neural networks generally require large amounts of training samples, which are difficult to collect in environmental remote sensing (Yuan et al. 2020). Multi-scale features, which can effectively capture contextual information, are therefore important for improving classification performance.

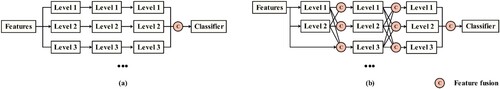

However, it is difficult to make full use of multi-scale information with direct fusion methods (as illustrated in (a)). For example, if a CNN model adopts the direct fusion strategy with three subnetworks, the extracted multi-scale features are fused only once (see (a)), losing the interactions between different scales. As a result, as the number of scales increases, features at lower levels obtain limited semantic information while those at higher levels lose too much detailed information.

Figure 3. Typical multi-scale information fusion strategies: the parallel way (a) versus our proposed cascade way (b).

To solve these problems, we propose a novel multi-scale cascade architecture (as shown in ). Based on the sequential full resolution neural network, we gradually add subnetworks with larger receptive fields one by one and arrange them in a cascade way. The feature pyramid, which consists of the first feature map of each level, is built by applying a strided 3 × 3 convolution to the feature maps of the previous level. Each block at a later stage is fed with features from the previous subnetworks, so multi-scale information is fused in different-level subnetworks again and again throughout the network. For instance, an FCN-based module with three cascade subnetworks has at least seven fusion opportunities, as illustrated in (b). In this case, features at lower levels can integrate more semantic information from higher-level subnetworks, and features at higher levels can receive more detailed information from lower-level subnetworks. In contrast, traditional parallel subnetworks are fused only once. The proposed cascade structure also benefits model training, as the fully connected subnetworks allow gradients to propagate directly to shallow layers.

As a result, feature maps from the first-level subnetwork obtain fully fused multi-scale information while maintaining full spatial resolution throughout the process, producing the richest and most detailed multi-scale features. The process can be formulated as follows:

(1)

(2)

where the first-level output features of block m have the spatial resolution O; the full set of convolutional operations of block m is applied in each subnetwork, with l denoting the l-level subnetwork of FRCNet; R(·) denotes the resampling method that resamples features to the spatial resolution O; and Quo(·) and MOD(·) denote the quotient and remainder of m divided by 2, respectively. The detailed fusion process is described in Section 2.2.3.

The work most relevant to our multi-scale cascade architecture is that of Wang et al. (2021); however, our approach differs from it to a large extent. First, our strategy focuses on multi-scale information extraction for a specific task, mariculture area mapping from MSR images of coastal areas. Specifically, as shown in , a feature pyramid structure is designed for this extraction, and this structure allows more subnetworks, which contain blurrier representations of the scene, to be incorporated. Second, our multi-scale cascade architecture works together with our multi-resolution features fusion strategy, described in Section 2.2.3.

2.2.3. Multi-resolution features fusion

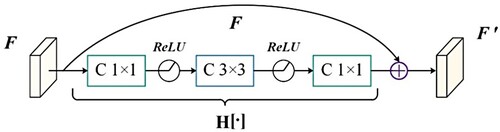

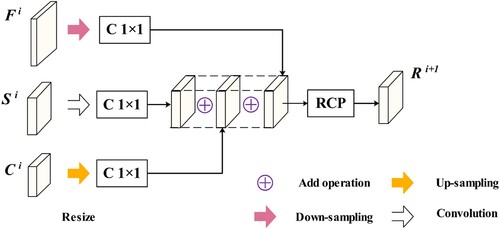

As illustrated in , multi-scale information is aggregated by fusing finer and coarser feature maps in a cascade way. However, because of inherent semantic gaps, directly stacking features from different-level subnetworks may not be efficient. To solve this problem, we perform the aggregation with a multi-resolution features fusion strategy, as shown in .

Figure 4. Typical process of the multi-resolution features fusion. ‘C 1 × 1’ represents the convolution operation with a kernel size of 1 × 1 followed by ReLU activation. ‘RCP’ represents the residual correction process.

Specifically, we first resample the multi-resolution features from previous blocks to the same resolution as the current block: a strided 3 × 3 convolution down-samples features from lower (finer) levels, and nearest-neighbor interpolation up-samples features from higher (coarser) levels. Features from different subnetworks are then added and refined by the residual correction process. In this way, feature maps with different resolutions are integrated at a uniform resolution and the multi-scale information is fused. Each refinement step is depicted in , and can be formulated as:

(3)

where the left-hand side denotes the refined features obtained from three inputs: the previous features at the same resolution, the coarser features, and the finer features. The up-sampling and strided convolution operations resample the coarser and finer features, respectively, and two convolutional weights are associated with the inputs being fused. A convolutional layer with a kernel size of 1 × 1 is used to control the model size in this study. The terms are combined with ‘multiply’ and ‘add’ operations, and the residual correction process, described in Section 2.2.4, refines the result.
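A minimal single-channel sketch of the resample-then-add fusion described in this section, written with plain Python lists so that it runs without a deep learning framework. Several simplifications are assumptions rather than details from the paper: the learned strided 3 × 3 convolution is replaced by a fixed 2 × 2 average with stride 2, each 1 × 1 convolution is reduced to a scalar weight (`w_coarse` and `w_fine` are hypothetical names), and the residual correction step is omitted.

```python
def upsample_nearest(x, factor=2):
    """Nearest-neighbour up-sampling of a 2-D list by an integer factor."""
    return [[row[j // factor] for j in range(len(row) * factor)]
            for row in x for _ in range(factor)]


def downsample_avg(x, factor=2):
    """Stand-in for a learned strided convolution: average each factor x factor window."""
    h, w = len(x) // factor, len(x[0]) // factor
    return [[sum(x[i * factor + di][j * factor + dj]
                 for di in range(factor) for dj in range(factor)) / factor ** 2
             for j in range(w)]
            for i in range(h)]


def fuse(current, coarser, finer, w_coarse=1.0, w_fine=1.0):
    """Bring coarser and finer maps to the current grid, weight them, and add.

    Scalar weights stand in for the 1x1 convolutions of a single-channel map.
    """
    up = upsample_nearest(coarser)
    down = downsample_avg(finer)
    return [[c + w_coarse * u + w_fine * d
             for c, u, d in zip(row_c, row_u, row_d)]
            for row_c, row_u, row_d in zip(current, up, down)]


current = [[0.0] * 4 for _ in range(4)]                        # 4x4 map at this level
coarser = [[1.0, 2.0], [3.0, 4.0]]                             # 2x2 map, next coarser level
finer = [[float(i + j) for j in range(8)] for i in range(8)]   # 8x8 map, next finer level
fused = fuse(current, coarser, finer)
print(fused[0])
```

In FRCNet each of these operations is learned and multi-channel; the sketch only shows how three maps at different resolutions are brought onto one grid before they are summed.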

2.2.4. Residual correction

In our study, FRCNet exchanges and fuses multi-scale information repeatedly throughout the whole process. To aggregate this information effectively and collaboratively into a single-scale subnetwork, the architecture must address two challenging issues. First, as the network gets deeper, it becomes hard for a CNN to fit the desired classification results during training, especially with multi-scale structures (He et al. 2016). Second, when multi-scale semantic features are aggregated, potential fitting residuals between different subnetworks may cause a lack of gradient information. Inspired by residual learning (He et al. 2016), we propose a residual correction architecture to correct the potential fitting residual during multi-resolution feature exchange in FRCNet, as shown in .

Specifically, we let the stacked multi-scale features learn another target, which can be formulated as:

$$\mathcal{F}(x) = \mathcal{H}(x) - x \tag{4}$$

where $\mathcal{F}(x)$ denotes the target residual, which is designed to compensate for the information lost through the potential fitting residual, and $x$ and $\mathcal{H}(x)$ denote the original and expected fused features, respectively.
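The residual idea can be illustrated with a toy, pixel-wise example. It assumes that residual correction adds a learned correction branch to its input through an identity shortcut, as in He et al. (2016); `residual_correct` and the lambda branches are hypothetical stand-ins for the learned layers of FRCNet. The branch only has to learn the difference between the expected and the original fused features, and a zero branch leaves the features unchanged.

```python
def residual_correct(x, correction):
    """Return x + F(x), where `correction` plays the role of the residual branch F."""
    return [xi + fi for xi, fi in zip(x, correction(x))]


# Original fused features x and (here, known) expected fused features H(x).
x = [0.5, -1.0, 2.0]
h = [0.75, -0.5, 2.0]

# The branch only needs to learn the target residual H(x) - x.
target_residual = [hi - xi for hi, xi in zip(h, x)]
print(target_residual)                                   # [0.25, 0.5, 0.0]

# Adding the learned residual back recovers the expected features.
print(residual_correct(x, lambda v: target_residual))    # [0.75, -0.5, 2.0]

# With a zero residual, the block reduces to the identity mapping.
print(residual_correct(x, lambda v: [0.0] * len(v)))     # [0.5, -1.0, 2.0]
```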

2.3. Experimental details

As shown in , the first subnetwork of FRCNet is a sequential full resolution neural network constructed from only five blocks of convolutional layers. Two higher-level subnetworks were then added in a cascade style, and FRCNet used nine multi-resolution features fusion structures to fully exchange multi-scale information. To speed up inference and control model size, the numbers of channels of the convolutional layers in the three subnetworks were set to 32, 64, and 128, respectively. We also used convolutional layers with a stride of 2 × 2 or 4 × 4 to reduce the resolution of features.
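The feature-map shapes implied by these settings can be worked out with the standard convolution output-size formula. This is a sketch under assumptions the paper does not state: a 256 × 256 input, a padding of 1 for every 3 × 3 convolution, and levels that sit at strides 1, 2, and 4 relative to the input with 32, 64, and 128 channels. `conv_out` is a hypothetical helper.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output length along one spatial axis: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


INPUT = 256
CHANNELS = [32, 64, 128]   # channel widths of the three subnetworks (Section 2.3)
STRIDES = [1, 2, 4]        # assumed stride of each level relative to the input

shapes = []
for stride, channels in zip(STRIDES, CHANNELS):
    side = conv_out(INPUT, stride=stride)
    shapes.append((side, side, channels))

for level, (h, w, c) in enumerate(shapes, start=1):
    print(f"level {level}: {h} x {w} x {c} = {h * w * c:,} activations")

# Two stride-2 convolutions reach the same grid as one stride-4 convolution.
print(conv_out(conv_out(INPUT, stride=2), stride=2))
```

Even with the narrowest channel width, the full-resolution level holds the most activations under these assumptions, which illustrates why a small width at that level helps keep the model manageable.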

For the dataset, we randomly chose 705 paired samples of size 256 × 256 × 4 from GF-1 images for training and testing. The corresponding pixel-wise labels were interpreted by visual inspection. Of these patches, 80% were randomly selected to build the training dataset. We then applied data augmentation, including mirroring and rotation, to prevent overfitting during training. The final training dataset contains over 4650 patches.
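A sketch of the split and augmentation steps using only the Python standard library. The eight-variant set (four 90-degree rotations, each with and without a left-right mirror) is an assumption: the paper names mirroring and rotation but does not list the exact transforms or how many variants were kept. The seed and helper names are hypothetical, and in practice the same transform must be applied to all four image bands and to the label patch.

```python
import random

N_PATCHES = 705
n_train = N_PATCHES * 80 // 100   # 80% for training: 564 patches
n_test = N_PATCHES - n_train      # remaining 20% for testing: 141 patches

rng = random.Random(0)            # hypothetical fixed seed for reproducibility
indices = list(range(N_PATCHES))
rng.shuffle(indices)
train_idx, test_idx = indices[:n_train], indices[n_train:]


def rotate90(patch):
    """Rotate a 2-D list 90 degrees clockwise."""
    return [list(row) for row in zip(*patch[::-1])]


def mirror(patch):
    """Flip a 2-D list left to right."""
    return [row[::-1] for row in patch]


def dihedral_variants(patch):
    """Four 90-degree rotations of `patch`, each with and without mirroring."""
    variants, current = [], patch
    for _ in range(4):
        variants.extend([current, mirror(current)])
        current = rotate90(current)
    return variants


patch = [[1, 2], [3, 4]]             # toy single-band patch; apply the same
variants = dihedral_variants(patch)  # transform to every band and the label
print(len(train_idx), len(test_idx), len(variants))
```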

We built FRCNet with Keras (v2.2.4) on top of TensorFlow (v1.8.0). For training, we used the Adam optimizer with a batch size of 20, a learning rate of 0.0001, β1 = 0.9, and β2 = 0.999. Training ran for 60 epochs.
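To show what these optimizer settings do, the sketch below applies one textbook Adam update to a scalar weight with a learning rate of 0.0001, β1 = 0.9, and β2 = 0.999. The value ε = 1e-7 is an assumption (the paper does not state it), and framework implementations may place ε slightly differently; `adam_step` is a hypothetical helper, not the Keras API.

```python
import math

LR, BETA1, BETA2 = 1e-4, 0.9, 0.999   # values reported in Section 2.3
EPS = 1e-7                            # assumed; not stated in the paper


def adam_step(param, grad, m, v, t):
    """One Adam update of a scalar parameter; t is the 1-based step count."""
    m = BETA1 * m + (1 - BETA1) * grad           # running mean of gradients
    v = BETA2 * v + (1 - BETA2) * grad ** 2      # running mean of squared gradients
    m_hat = m / (1 - BETA1 ** t)                 # bias corrections for the
    v_hat = v / (1 - BETA2 ** t)                 # zero-initialised moments
    param -= LR * m_hat / (math.sqrt(v_hat) + EPS)
    return param, m, v


# On the first step the update is about LR in size, whatever the gradient scale.
for grad in (0.5, 100.0):
    new_param, _, _ = adam_step(1.0, grad, 0.0, 0.0, t=1)
    print(f"grad={grad}: step = {1.0 - new_param:.3e}")
```

With a learning rate of 0.0001, each weight therefore moves by roughly 1e-4 on the first step, regardless of how large its gradient is.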

2.4. Comparison methods

To evaluate the advantages and effectiveness of FRCNet, we provided a quantitative and visual comparison with several state-of-the-art methods, which achieved great success in remote sensing fields. Specifically, the FCN-32s, UNet, Deeplab V2, HCNet, and HCHNet were selected as the comparison methods. Apart from their excellent performance, all these selected models are built based on VGG-16 or similar structures, which are ideal comparison models for our study. We briefly describe the selected comparison models as follows.

FCN-32s is the earliest FCN-based model, originally proposed for the semantic segmentation of natural images (Long, Shelhamer, and Darrell 2015). Based on the original VGG-16 network, its authors replaced the fully connected layers with convolutional layers for semantic segmentation, and FCN-32s uses up-sampling to recover the resolution of features. As the simplest and earliest FCN-based method, FCN-32s was chosen as the baseline in this study.

Deeplab V2 is another typical FCN-based model, which uses the atrous spatial pyramid pooling (ASPP) architecture to acquire multi-scale information for semantic segmentation (Chen et al. 2018). ASPP arranges a series of atrous convolutional layers in parallel, each with a different sampling rate and corresponding receptive field. In addition, the model improves classification performance by combining the network with a probabilistic graphical model, a fully connected conditional random field (CRF). Because of its representative structure for producing multi-scale information, we chose Deeplab V2 for comparison in this paper.
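The link between sampling rate and receptive field in ASPP can be made concrete with the effective size of a dilated kernel, k + (k - 1)(r - 1). The rates 6, 12, 18, and 24 below are those of the ASPP-L variant described by Chen et al. (2018); `effective_kernel` is a hypothetical helper.

```python
def effective_kernel(kernel=3, rate=1):
    """Side length of the window covered by a dilated (atrous) kernel."""
    return kernel + (kernel - 1) * (rate - 1)


for rate in (1, 6, 12, 18, 24):
    k = effective_kernel(3, rate)
    # The kernel still has 3 x 3 = 9 weights; only the spacing between taps grows.
    print(f"rate {rate:2d}: 9 weights spread over a {k} x {k} window")
```

Because these branches run side by side and are fused only at the end, they do not exchange information during feature extraction, which is the limitation of parallel multi-scale structures discussed in the Introduction.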

UNet is a widely used, typical encoder–decoder FCN-based model, originally proposed for the semantic segmentation of medical images (Ronneberger, Fischer, and Brox 2015). Its encoder has a structure similar to VGG-16. In the decoder, the model gradually recovers the resolution of feature maps using long-span connections, through which detailed, multi-scale feature maps from the encoder are integrated into the decoder; this is expected to improve performance on MSR images. Because the flow of feature maps in UNet is completely different from that in FRCNet, UNet was chosen for comparison in this study.

HCNet was proposed by Fu et al. (2019) to extract mariculture areas from HSR images, combining the advantages of encoder–decoder and multi-scale structures. Like the encoder–decoder structure described above, HCNet uses a modified VGG-16 as the encoder and gradually recovers the resolution of feature maps using long-span connections. Most importantly, HCNet uses a hierarchical cascade structure to produce multi-scale features, which increases the sampling rate and receptive field. HCNet also uses an attention mechanism to refine the feature space within the long-span connections. Because HCNet targets the same classification objects as our study, namely various marine aquaculture areas, we chose it for comparison.

HCHNet was proposed by Fu et al. (2021) for large-scale segmentation of marine aquaculture areas from MSR images and has been successfully applied in the coastal regions of China. To overcome the limited spatial resolution of MSR images, HCHNet uses a modified VGG-16 to extract high-dimensional, abstract features. HCHNet also employs the hierarchical cascade structure to obtain multi-scale information. Because it has the same classification targets as our model, HCHNet is an ideal model for comparison.

2.5. Accuracy assessment

To evaluate the classification performance of FRCNet, we conducted an accuracy assessment on the testing dataset, which accounts for 20% of the total dataset. All labels were obtained by visual interpretation and validated using HSR images from Google Earth or field surveys from our previous study (Fu et al. 2021). We then calculated the commonly used accuracy metrics as follows:

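$$\text{Precision} = \frac{TP}{TP + FP} \tag{5}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{6}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{7}$$

$$\text{IoU} = \frac{|S_p \cap S_t|}{|S_p \cup S_t|} \tag{8}$$

where TP denotes true positives, FP denotes false positives, and FN denotes false negatives. $S_p$ and $S_t$ denote the sets of predicted and truth pixels, respectively. ‘IoU’ denotes Intersection over Union, and ‘$\cap$’ and ‘$\cup$’ denote the intersection and union of pixel sets, respectively.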

To assess the classification performance of different kinds of mariculture areas, we calculated these accuracy values for MPC and MAC, respectively. Meanwhile, we used the average accuracy values of MPC and MAC to represent the overall performance of different models.
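The metrics and the per-class averaging can be sketched in plain Python as follows, assuming the four equations above are precision, recall, F1, and IoU computed per class from flattened label maps. The class names and the toy label vectors are hypothetical.

```python
def class_scores(pred, truth, cls):
    """Precision, recall, F1 and IoU for one class over flattened label maps."""
    pairs = list(zip(pred, truth))
    tp = sum(p == cls and t == cls for p, t in pairs)
    fp = sum(p == cls and t != cls for p, t in pairs)
    fn = sum(p != cls and t == cls for p, t in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # For one class, |Sp ∩ St| = TP and |Sp ∪ St| = TP + FP + FN.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


truth = ["MAC", "MAC", "MPC", "MPC", "sea", "sea", "land", "MAC"]
pred = ["MAC", "MPC", "MPC", "MPC", "sea", "MAC", "land", "MAC"]

# Per-class scores for the two mariculture classes, then their average.
scores = {cls: class_scores(pred, truth, cls) for cls in ("MPC", "MAC")}
mean_f1 = sum(s["f1"] for s in scores.values()) / len(scores)
mean_iou = sum(s["iou"] for s in scores.values()) / len(scores)
print(scores)
print(f"mean F1 = {mean_f1:.3f}, mean IoU = {mean_iou:.3f}")
```

Computing IoU as TP / (TP + FP + FN) is equivalent to the set form in Equation (8) when the predicted and reference pixel sets belong to the same class.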

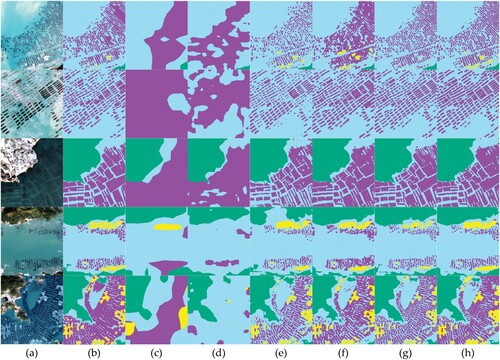

3. Results and comparison

In this study, the proposed FRCNet was compared with several widely used FCN-based models. As shown in , FCN-32s and Deeplab V2 identify only blurry boundaries of mariculture areas and fail to extract individual MAC or MPC. With long-span connections, UNet and HCNet identify more detailed boundaries of mariculture areas, but some confusing land or mariculture areas are still misclassified. Benefiting from its homogeneous neural network, HCHNet delineates more details of MAC. Compared with these state-of-the-art models, FRCNet achieves the best visual performance, successfully identifying and discriminating MAC and MPC in typical mariculture areas.

Figure 6. Visual comparison of our proposed FRCNet with other models: (a) Image; (b) Ground truth; (c) FCN-32s; (d) Deeplab V2; (e) UNet; (f) HCNet; (g) HCHNet; (h) our proposed FRCNet. The red, yellow, green, and blue color in the classification results represent MAC, MPC, land, and sea areas, respectively.

To provide a quantitative comparison, we calculated the commonly used F1 and IoU values for MAC and MPC, respectively. As shown in , FCN-32s, the baseline model in our study, achieves the lowest accuracy, with both F1 and IoU values below 0.5. Owing to the additional multi-scale information from the ASPP structure, Deeplab V2 identifies more mariculture areas at different scales. Benefiting from more detailed information through long-span connections, UNet and HCNet obtain similar classification performance, with IoU values of nearly 0.56. HCHNet and HRNet also obtain relatively high accuracy values because they maintain full-resolution features. FRCNet achieves the best accuracy, with an average IoU of 0.65, because it maintains full-resolution features while incorporating multi-scale information throughout the process.

Table 1. Quantitative comparison of our proposed FRCNet with other FCN-based methods.

4. Discussion

4.1. Advantages of our approach compared with other FCN-based methods

FCN has become the most powerful and popular method for semantic segmentation of HSR images in recent studies (Zang et al. 2021). However, mapping marine aquaculture areas from remotely sensed images, especially MSR images, remains challenging.

First, it is difficult for traditional FCN-based models to recover the detailed information of mariculture areas in MSR images. Existing models generally use a series of down-sampling operations to acquire larger receptive fields, which produces smaller feature maps; the output features are then usually recovered using deconvolution or interpolation. As in signal sampling, there is an upper limit to how much lost detail up-sampling methods can recover (Kotaridis and Lazaridou 2021; Nyquist 1928). Meanwhile, marine aquaculture areas in GF-1 WFV images occupy much smaller areas than objects in natural or HSR images. It is therefore difficult for traditional FCN-based methods to accurately delineate marine aquaculture areas. To solve this problem, we removed all the pooling layers of the original VGG-16 model to avoid losing detailed information in the first-level subnetwork. As shown in , full resolution representations are preserved over the whole process, so FRCNet can use more detailed information to map mariculture areas.
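A one-dimensional toy example of this detail loss, under stated assumptions: max-pooling by a factor of 32 stands in for five 2 × 2 pooling stages, nearest-neighbor interpolation stands in for the up-sampling step, and the 64-pixel transect with two narrow rafts is hypothetical. At 16 m per pixel, each pooled cell covers 512 m, so two rafts 6 pixels (96 m) apart fall into the same cell and interpolation cannot separate them again.

```python
def max_pool_1d(row, factor):
    """Keep the maximum of each non-overlapping window of length `factor`."""
    return [max(row[i:i + factor]) for i in range(0, len(row), factor)]


def upsample_nearest_1d(row, factor):
    """Repeat each value `factor` times (nearest-neighbour interpolation)."""
    return [value for value in row for _ in range(factor)]


# 64-pixel transect (16 m pixels): 1 = raft, 0 = sea.
row = [0] * 64
for start in (10, 18):          # two 2-pixel rafts with a 6-pixel gap of sea
    row[start:start + 2] = [1, 1]

factor = 2 ** 5                 # five 2x2 pooling stages, as in VGG-16
recovered = upsample_nearest_1d(max_pool_1d(row, factor), factor)

print(sum(row), "raft pixels before,", sum(recovered), "after")
print("gap preserved:", recovered[12:18] == row[12:18])
```

Learned up-sampling (deconvolution or skip connections) can sharpen such a result, but it has to infer the discarded positions from other features, whereas a pooling-free first-level subnetwork never discards them.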

Second, it is also hard to accurately identify mariculture areas with complex structures. A straightforward way is to make full use of multi-scale features, which can effectively capture the relationships between objects and their surrounding environments. Researchers generally feed multi-resolution images or features to parallel subnetworks to obtain such features and then fuse them through an add or multiply operation. However, the features produced by such methods are independent of each other. Unlike previous methods, we kept the full resolution of feature maps in the first-level subnetwork and gradually fused multi-scale features in a cascade way. Most importantly, we repeated the multi-scale aggregation throughout the network so that each subnetwork can fully exchange and absorb multi-scale information from the others. As a result, FRCNet can effectively identify and discriminate different mariculture areas.

4.2. Ablation analysis

In this study, we carefully designed three different structures to build the FRCNet, including the sequential full resolution neural network, the multi-scale cascade architecture, and the residual correction structure. To quantitatively assess the benefits brought by different components, we conducted the ablation experiments on FRCNet.

In the experiments, we used the sequential full resolution neural network, which is composed of a series of full resolution convolutional blocks (see the first-level subnetwork in ), as the baseline model. Variants of FRCNet were then constructed by gradually adding different structures.

As shown in , the traditional multi-scale structure performs only slightly better than the baseline model, improving the average IoU by two percentage points. In contrast, our multi-scale cascade structure significantly improves accuracy, raising the average IoU by five percentage points. The multi-scale cascade structure also identifies more MPC areas, which make up a relatively small proportion of mariculture areas. Classification performance improves further when the residual correction strategy is added.

Table 2. Experimental results of ablation analysis on the proposed FRCNet.

5. Conclusions

This study proposed an end-to-end neural network, FRCNet, to identify marine aquaculture areas from MSR images. Experimental results show that FRCNet can successfully delineate mariculture areas, with an average F1 value of nearly 0.80. Compared with other widely used state-of-the-art FCN-based methods, FRCNet achieves the best performance for three reasons: (1) a full resolution variant of VGG-16 serves as the first subnetwork of FRCNet, which allows the model to maintain the most detailed information throughout the network; (2) a multi-scale cascade architecture fully aggregates and exchanges information among different scales; and (3) the multi-resolution features fusion and residual correction structures effectively fuse and refine the multi-scale features.

Future studies may test the performance of FRCNet in extracting other easily confused land use and land cover types from MSR images. In addition, training such deep FCN-based models still requires large numbers of labels, which are costly and labor-intensive to produce. It is therefore also worth investigating unsupervised methods for training such models.

Acknowledgements

We gratefully acknowledge the support of the Open Fund of State Laboratory of Agricultural Remote Sensing and Information Technology of Zhejiang Province, the Fundamental Research Program of Shanxi Province (grant number 201901D211407), and the Service of National Major Food Crop Interpretation Data Set Collection.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are openly available at [https://doi.org/10.5281/zenodo.3881612].

References

- Burbridge, P., V. Hendrick, E. Roth, and H. Rosenthal. 2001. “Social and Economic Policy Issues Relevant to Marine Aquaculture.” Journal of Applied Ichthyology 17: 194–206. doi:10.1046/j.1439-0426.2001.00316.x.

- Bureau of Fisheries of the Ministry of Agriculture. 2001. China Fishery Statistical Yearbook 2001. Beijing: China Agriculture Press.

- Bureau of Fisheries of the Ministry of Agriculture. 2020. China Fishery Statistical Yearbook 2020. Beijing: China Agriculture Press.

- Campbell, Brooke, and Daniel Pauly. 2013. “Mariculture: A Global Analysis of Production Trends Since 1950.” Marine Policy 39: 94–100. doi:10.1016/j.marpol.2012.10.009.

- Castelo-Cabay, M., J. A. Piedra-Fernandez, and R. Ayala. 2022. “Deep Learning for Land Use and Land Cover Classification from the Ecuadorian Paramo.” International Journal of Digital Earth 15 (1): 1001–1017. doi:10.1080/17538947.2022.2088872.

- Chen, Liang-Chieh, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. 2018. “DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs.” IEEE Transactions on Pattern Analysis and Machine Intelligence 40 (4): 834–848. doi:10.1109/TPAMI.2017.2699184.

- Cui, Binge, Dong Fei, Guanghui Shao, Yan Lu, and Jialan Chu. 2019. “Extracting Raft Aquaculture Areas from Remote Sensing Images via an Improved U-Net with a PSE Structure.” Remote Sensing 11 (17): 2053. doi:10.3390/rs11172053.

- Duan, Y. Q., X. Li, L. P. Zhang, D. Chen, S. A. Liu, and H. Y. Ji. 2020. “Mapping National-Scale Aquaculture Ponds Based on the Google Earth Engine in the Chinese Coastal Zone.” Aquaculture 520: 734666. doi:10.1016/j.aquaculture.2019.734666.

- Eigen, David, and Rob Fergus. 2015. “Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-Scale Convolutional Architecture.” In 2015 IEEE International Conference on Computer Vision (ICCV), 2650–2658. Santiago, Chile: IEEE.

- Ekim, B., and E. Sertel. 2021. “Deep Neural Network Ensembles for Remote Sensing Land Cover and Land Use Classification.” International Journal of Digital Earth 14 (12): 1868–1881. doi:10.1080/17538947.2021.1980125.

- El Mahrad, B., A. Newton, J. D. Icely, I. Kacimi, S. Abalansa, and M. Snoussi. 2020. “Contribution of Remote Sensing Technologies to a Holistic Coastal and Marine Environmental Management Framework: A Review.” Remote Sensing 12 (14): 2313. doi:10.3390/rs12142313.

- Fan, J., J. Chu, J. Geng, and F. Zhang. 2015. “Floating Raft Aquaculture Information Automatic Extraction Based on High Resolution SAR Images.” In 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), 3898–3901. Milan, Italy: IEEE.

- Fu, Y. Y., J. S. Deng, H. Q. Wang, A. Comber, W. Yang, W. Q. Wu, S. X. You, Y. Lin, and K. Wang. 2021. “A New Satellite-Derived Dataset for Marine Aquaculture Areas in China's Coastal Region.” Earth System Science Data 13 (4): 1829–1842. doi:10.5194/essd-13-1829-2021.

- Fu, Y. Y., K. K. Liu, Z. Q. Shen, J. S. Deng, M. Y. Gan, X. G. Liu, D. M. Lu, and K. Wang. 2019. “Mapping Impervious Surfaces in Town-Rural Transition Belts Using China’s GF-2 Imagery and Object-Based Deep CNNs.” Remote Sensing 11 (3): 280. doi:10.3390/rs11030280.

- Fu, Yongyong, Ziran Ye, Jinsong Deng, Xinyu Zheng, Yibo Huang, Wu Yang, Yaohua Wang, and Ke Wang. 2019. “Finer Resolution Mapping of Marine Aquaculture Areas Using WorldView-2 Imagery and a Hierarchical Cascade Convolutional Neural Network.” Remote Sensing 11 (14): 1678. doi:10.3390/rs11141678.

- Gentry, Rebecca R., Halley E. Froehlich, Dietmar Grimm, Peter Kareiva, Michael Parke, Michael Rust, Steven D. Gaines, and Benjamin S. Halpern. 2017. “Mapping the Global Potential for Marine Aquaculture.” Nature Ecology and Evolution 1: 1317–1324. doi:10.1038/s41559-017-0257-9.

- He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. “Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition.” IEEE Transactions on Pattern Analysis and Machine Intelligence 37 (9): 1904–1916. doi:10.1109/TPAMI.2015.2389824.

- He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. “Deep Residual Learning for Image Recognition.” In IEEE Conference on Computer Vision and Pattern Recognition, 770–778. Las Vegas, NV: IEEE.

- Kachelriess, D., M. Wegmann, M. Gollock, and N. Pettorelli. 2014. “The Application of Remote Sensing for Marine Protected Area Management.” Ecological Indicators 36: 169–177. doi:10.1016/j.ecolind.2013.07.003.

- Simonyan, Karen, and Andrew Zisserman. 2014. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” arXiv, 1–14. doi:10.48550/arXiv.1409.1556.

- Kotaridis, Ioannis, and Maria Lazaridou. 2021. “Remote Sensing Image Segmentation Advances: A Meta-Analysis.” ISPRS Journal of Photogrammetry and Remote Sensing 173: 309–322. doi:10.1016/j.isprsjprs.2021.01.020.

- Liu, Yanfei, Yanfei Zhong, Feng Fei, and Liangpei Zhang. 2016. “Scene Semantic Classification Based on Random-Scale Stretched Convolutional Neural Network for High-Spatial Resolution Remote Sensing Imagery.” In 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), 763–766. Beijing, China: IEEE.

- Long, Jonathan, Evan Shelhamer, and Trevor Darrell. 2015. “Fully Convolutional Networks for Semantic Segmentation.” In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 3431–3440. Boston, MA: IEEE.

- Lu, Yewei, Qiangzi Li, Xin Du, Hongyan Wang, and Jilei Liu. 2015. “A Method of Coastal Aquaculture Area Automatic Extraction with High Spatial Resolution Images.” Remote Sensing Technology & Application 30 (3): 486–494. doi:10.11873/j.issn.1004-0323.2015.3.0486.

- Noh, Hyeonwoo, Seunghoon Hong, and Bohyung Han. 2015. “Learning Deconvolution Network for Semantic Segmentation.” In 2015 IEEE International Conference on Computer Vision (ICCV), 1520–1528. Santiago, Chile: IEEE.

- Nyquist, H. 1928. “Certain Topics in Telegraph Transmission Theory.” Transactions of the American Institute of Electrical Engineers 47 (2): 617–644. doi:10.1109/T-AIEE.1928.5055024.

- Rigos, George, and Pantelis Katharios. 2010. “Pathological Obstacles of Newly-Introduced Fish Species in Mediterranean Mariculture: A Review.” Reviews in Fish Biology and Fisheries 20: 47–70. doi:10.1007/s11160-009-9120-7.

- Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. 2015. “U-Net: Convolutional Networks for Biomedical Image Segmentation.” In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), 234–241. Munich, Germany: Springer.

- Rubio-Portillo, Esther, Adriana Villamor, Victoria Fernandez-Gonzalez, Josefa Antón, and Pablo Sanchez-Jerez. 2019. “Exploring Changes in Bacterial Communities to Assess the Influence of Fish Farming on Marine Sediments.” Aquaculture 506: 459–464. doi:10.1016/j.aquaculture.2019.03.051.

- Shelhamer, E., J. Long, and T. Darrell. 2017. “Fully Convolutional Networks for Semantic Segmentation.” IEEE Transactions on Pattern Analysis and Machine Intelligence 39 (4): 640–651. doi:10.1109/TPAMI.2016.2572683.

- Shi, Tianyang, Qizhi Xu, Zhengxia Zou, and Zhenwei Shi. 2018. “Automatic Raft Labeling for Remote Sensing Images via Dual-Scale Homogeneous Convolutional Neural Network.” Remote Sensing 10 (7): 1130. doi:10.3390/rs10071130.

- Sui, B. K., T. Jiang, Z. Zhang, X. L. Pan, and C. X. Liu. 2020. “A Modeling Method for Automatic Extraction of Offshore Aquaculture Zones Based on Semantic Segmentation.” ISPRS International Journal of Geo-Information 9 (3): 145. doi:10.3390/ijgi9030145.

- Tovar, Antonio, Carlos Moreno, Manuel P. Mánuel-Vez, and Manuel García-Vargas. 2000. “Environmental Impacts of Intensive Aquaculture in Marine Waters.” Water Research 34 (1): 334–342. doi:10.1016/S0043-1354(99)00102-5.

- Wang, Min, Qi Cui, Jie Wang, Dongping Ming, and Guonian Lv. 2017. “Raft Cultivation Area Extraction from High Resolution Remote Sensing Imagery by Fusing Multi-Scale Region-Line Primitive Association Features.” ISPRS Journal of Photogrammetry and Remote Sensing 123: 104–113. doi:10.1016/j.isprsjprs.2016.10.008.

- Wang, J., K. Sun, T. Cheng, B. Jiang, C. Deng, Y. Zhao, D. Liu, et al. 2021. “Deep High-Resolution Representation Learning for Visual Recognition.” IEEE Transactions on Pattern Analysis and Machine Intelligence 43 (10): 3349–3364. doi:10.1109/TPAMI.2020.2983686.

- Yuan, Q. Q., H. F. Shen, T. W. Li, Z. W. Li, S. W. Li, Y. Jiang, H. Z. Xu, et al. 2020. “Deep Learning in Environmental Remote Sensing: Achievements and Challenges.” Remote Sensing of Environment 241: 111716. doi:10.1016/j.rse.2020.111716.

- Zang, N., Y. Cao, Y. B. Wang, B. Huang, L. Q. Zhang, and P. T. Mathiopoulos. 2021. “Land-Use Mapping for High-Spatial Resolution Remote Sensing Image Via Deep Learning: A Review.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 14: 5372–5391. doi:10.1109/JSTARS.2021.3078631.

- Zeiler, Matthew D., and Rob Fergus. 2014. “Visualizing and Understanding Convolutional Networks.” In Computer Vision – European Conference on Computer Vision 2014, 818–833. Zurich, Switzerland: Springer.

- Zhang, C., I. Sargent, X. Pan, H. P. Li, A. Gardiner, J. Hare, and P. M. Atkinson. 2018. “An Object-Based Convolutional Neural Network (OCNN) for Urban Land Use Classification.” Remote Sensing of Environment 216: 57–70. doi:10.1016/j.rse.2018.06.034.

- Zhang, Liangpei, Lefei Zhang, and Bo Du. 2016. “Deep Learning for Remote Sensing Data: A Technical Tutorial on the State of the Art.” IEEE Geoscience and Remote Sensing Magazine 4 (2): 22–40. doi:10.1109/MGRS.2016.2540798.

- Zhao, Wenzhi, and Shihong Du. 2016. “Learning Multiscale and Deep Representations for Classifying Remotely Sensed Imagery.” ISPRS Journal of Photogrammetry and Remote Sensing 113: 155–165. doi:10.1016/j.isprsjprs.2016.01.004.

- Zhao, Hengshuang, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. 2017. “Pyramid Scene Parsing Network.” In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 6230–6239. Honolulu, HI: IEEE.

- Zheng, Yuhan, Jiaping Wu, Anqi Wang, and Jiang Chen. 2017. “Object-and Pixel-Based Classifications of Macroalgae Farming Area with High Spatial Resolution Imagery.” Geocarto International 33 (10): 1048–1063. doi:10.1080/10106049.2017.1333531.