ABSTRACT

Previous research has suggested that the perception of emotional images may also activate brain regions involved in preparing motor plans. However, little research has investigated whether these emotion-movement interactions occur at early or late stages of visual perception. In the current research, event-related potentials (ERPs) were used to examine the time course of the independent and combined effects of perceiving emotion and implied movement. Twenty-five participants viewed images from four categories: 1) emotional with implied movement, 2) emotional with no implied movement, 3) neutral with implied movement, and 4) neutral with no implied movement. Both emotional stimuli and movement-related stimuli elicited larger N200 (200–300 ms) amplitudes. Furthermore, there was a marginal interaction between emotion and implied movement: at frontal sites, negative stimuli elicited greater N200 amplitudes than neutral stimuli, but only for images with implied movement. At posterior sites, a similar, marginal effect was observed for images without implied movement. The late positive potential (LPP; 500–1000 ms) was modulated by emotion (at frontal sites), by movement (at frontal, central, and parietal sites), and by their interaction (at parietal sites), with larger LPPs for negative than for neutral images only when the images implied movement. Together, these results suggest that the perception of emotion and movement interact at later stages of visual perception.

Emotional stimuli receive preferential processing by sensory and attentional systems in the brain, with numerous studies reporting interactions between cortical sensory regions and subcortical structures involved in emotional perception, including the amygdala and pulvinar nucleus (see Oliveira et al., 2013; LeDoux & Brown, 2017, for reviews). Previous research has also identified reciprocal circuits linking cortical sensory areas with the prefrontal cortex and insula, regions involved in the emotional evaluation of stimuli and in embodied emotional responses (see Pessoa & Adolphs, 2010, for a detailed review). However, although the neural substrates underlying emotional perception are well established, many previous studies, particularly those involving emotional scenes, conflate two processes: the perception of emotion and the perception of movement. For example, a photograph of two people fighting depicts emotion (e.g., anger and/or fear) as well as movement (e.g., fists moving toward the other individual). The purpose of the current study is to delineate the discrete and interactive effects of perceiving emotion and implied movement on neural activity during visual perception.

The potential link between the perception of movement and the perception of emotion has been investigated using a number of methodologies, each with advantages and disadvantages. For example, transcranial magnetic stimulation (TMS) has been used to study the effects of different types of emotional stimuli on the excitability of the corticospinal white-matter pathway connecting the motor cortex to the peripheral nervous system (Oliveri et al., 2003). In a typical TMS study, focal TMS is applied to the left primary motor cortex while participants are exposed to emotional or neutral stimuli. The magnitude of the motor-evoked potential (MEP) in a muscle of the right hand, typically the abductor pollicis brevis or the first dorsal interosseous, is then measured using electromyography. Numerous studies have found that MEPs recorded during the perception of emotional stimuli are larger than those recorded during the perception of neutral stimuli. This emotional modulation of motor activity has been reported for emotional faces (Schutter et al., 2008), body postures (Borhani et al., 2015), scenes (Coelho et al., 2010; Hajcak et al., 2007; van Loon et al., 2010), and music (Giovannelli et al., 2013). This research suggests that perceiving emotional stimuli facilitates motor planning, thus mobilizing the body to act in response to affective items or situations (Blakemore & Vuilleumier, 2017; Lang et al., 1993). However, although this line of research provides important insights into movement-emotion interactions, TMS research typically focuses on a single brain region (e.g., the primary motor cortex) rather than examining the activity of multiple neural regions simultaneously.

In contrast, functional magnetic resonance imaging (fMRI) allows researchers to measure neural activity throughout the brain. This technique has been used in several studies investigating potential interactions between the perception of emotion and movement. Specifically, previous research has demonstrated that emotional stimuli elicit activity in motor regions of the cortex, including the supplementary motor cortex and midcingulate gyrus (Oliveri et al., 2003; Pereira et al., 2010; Portugal et al., 2020). These results suggest that emotion-movement interactions likely involve midline brain areas in the frontal and parietal lobes. This possibility was directly tested in an fMRI study that examined the discrete and combined effects of perceiving emotion and implied movement using images from the International Affective Picture System (Kolesar et al., 2017). The emotional stimuli in this study were limited to negative scenes (e.g., having a door close on one’s hand), and the movement depicted in the implied-movement stimuli, for both emotional and neutral images, was limited to the upper limbs (e.g., slicing bread or hitting one’s finger with a hammer). As expected, the main effect of implied movement included activity in areas related to the perception of movement, including the left putamen and the posterior cingulate gyrus. Activity was also observed in the superior frontal gyrus and in the right insula, a region related to interoception (Craig, 2009). The main effect of emotion also included activity in the right insula, albeit to a lesser extent than the response to implied movement, as well as in the right middle frontal gyrus and the left lingual gyrus. Finally, the interaction between emotion and implied movement included activity in several sensorimotor regions, including the bilateral insula, left middle frontal gyrus, right caudate, and left angular gyrus (Kolesar et al., 2017).
These results demonstrate that although the perception of emotion and the perception of implied movement involve several distinct brain regions, several areas are sensitive to both types of information, particularly in medial prefrontal and parietal regions. However, the relatively poor temporal resolution of fMRI prevented the researchers from identifying the temporal sequence of the neural activity associated with perceiving emotion and implied movement.

The goal of the current study is to extend this earlier research using event-related potentials (ERPs), a neuroimaging tool with millisecond-level temporal resolution that allows us to precisely characterize the time course of these emotion-movement interactions. We focused on three ERP components that previous research suggested were likely to be modulated by both emotion and movement: the N200, the P300, and the late positive potential (LPP).

The N200 is associated with early perceptual processing of sensory stimuli. Carretie et al. (2004) reported that the N200 peaked at 240 ms in response to emotional photographs. Specifically, LORETA analyses showed that, during the first 240 ms after stimulus onset, decreased activation in the visual cortices was followed by increased activity in the anterior cingulate cortex (ACC), an area involved in both emotional responses and attentional control (Allman et al., 2001), suggesting that additional cognitive resources were being allocated to specific stimuli. Similar frontal/central N200 responses have been observed in a number of paradigms assessing the emotional modulation of attention (e.g., Balconi & Vanutelli, 2016; Glaser et al., 2012; Hot & Sequeira, 2013). Importantly, biological motion also elicits an N200 response, peaking at approximately 200 ms over occipito-temporal regions (Hirai et al., 2003). Together, these results suggest that emotion and implied movement could both modulate neural activity in midline regions (e.g., at electrodes Fz, Cz, and Pz) at this early stage of visual perception.

The P300 is a positive ERP component that reaches its maximum amplitude at midline parietal sites between 300 and 500 ms after stimulus onset (Sutton et al., 1965). The P300 typically arises when individuals are asked to detect infrequent target stimuli among more frequent ‘standard’ stimuli (Sutton et al., 1965). For example, in an auditory oddball task, the target tone may be presented on 20% of trials and the non-target tone on the remaining 80%; the P300 is larger for the infrequent targets (e.g., Johnson, 1986; Magliero et al., 1984). Some researchers have suggested that the intrinsic motivational significance of emotional stimuli may lead them to be processed similarly to task-relevant targets (see Hajcak et al., 2010). Consistent with this hypothesis, larger P300 amplitudes have been reported for positive and negative images than for neutral images (e.g., Mini et al., 1996; Palomba et al., 1997). Midline occipital activity during a similar time frame has also been observed for images that imply movement (Lorteije et al., 2007). We therefore predicted that activity at posterior sites (P1, Pz, P2) would be more pronounced for negative than for neutral stimuli, and that this effect would be larger for emotional images that implied movement.
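To make the oddball manipulation concrete, the following minimal Python sketch builds a randomized trial sequence with the 20% target rate used in the example above. The function name and labels are hypothetical, and this paradigm was not part of the current study, which used passive viewing.

```python
import random

def make_oddball_sequence(n_trials, p_target=0.2, seed=None):
    """Return a shuffled list of 'target'/'standard' labels.

    The number of targets is fixed at round(n_trials * p_target), so the
    target rate is exact rather than merely expected.
    """
    rng = random.Random(seed)
    n_targets = round(n_trials * p_target)
    sequence = ["target"] * n_targets + ["standard"] * (n_trials - n_targets)
    rng.shuffle(sequence)  # random order, fixed proportions
    return sequence

seq = make_oddball_sequence(200, p_target=0.2, seed=1)
print(seq.count("target"), seq.count("standard"))  # 40 160
```

Many oddball designs also constrain the order (for example, avoiding consecutive targets); that refinement is omitted here for brevity.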

Finally, the LPP is associated with responding to intense emotional stimuli (Hajcak et al., 2010). Pleasant and unpleasant pictures (Foti et al., 2009), faces (Schupp et al., 2004), and words (Dillon et al., 2006) elicit larger LPPs than neutral stimuli (for a review, see Hajcak & Nieuwenhuis, 2006). For example, when participants viewed faces or more complex scenes, the LPP was greater for emotional than for neutral images, and negative images elicited a larger LPP than positive images (Foti et al., 2009). The LPP has also been studied in response to point-light animations depicting movement: Jahshan et al. (2015) presented complete displays (i.e., no point-lights missing) or displays that were 85% or 70% complete, and found larger LPPs when participants viewed the 100% complete biological motion. The LPP has been reported to have its greatest amplitudes at temporal and anterior electrode sites (Mehmood & Lee, 2016), centro-parietal sites (Schupp et al., 2004), and occipital to central sites (Hajcak et al., 2010). We therefore predicted that the largest LPP responses to emotion, implied movement, and their interaction would be observed at midline sites Fz, Cz, and Pz.

In the current study, the N200, P300, and LPP were measured in response to four categories of images: 1) negative emotional images containing implied movement; 2) negative emotional images without implied movement; 3) neutral images containing implied movement; and 4) neutral images without implied movement. Based on the research described above, we hypothesized that the N200, which reflects early stages of visual perception, would be larger for emotional than for neutral stimuli and for stimuli with implied movement than for stimuli without it (i.e., two main effects). For the P300 and LPP, we predicted that emotional stimuli would elicit larger responses than neutral stimuli (with or without implied movement), as both components are associated with emotional responses (Hajcak et al., 2010). Finally, we predicted that emotional stimuli with implied movement would generate larger P300 and LPP effects than emotional stimuli without implied movement; these interactions should be particularly apparent at posterior sites (e.g., Pz), as these regions were active both in previous fMRI research (Kolesar et al., 2017) and in numerous ERP studies of emotional perception (see Hajcak et al., 2010). Critically, such results would indicate that the perception of emotion and movement interact at later stages of processing.

Method

Participants

Twenty-five participants (18 females: 15 right-handed; 7 males: 4 right-handed, 1 ambidextrous, 1 unspecified) aged 18 to 27 years (age M = 19.58, SD = 2.14; age unspecified for 1 participant) with normal or corrected-to-normal vision were recruited from the University of Winnipeg, Canada. Previous research has shown that this sample size is adequate to detect significant effects in the components of interest (see Jensen & MacDonald, 2023). Exclusion criteria were a history of concussion and/or the presence of an affective disorder. Participants received course credit for their participation. Data from six participants were excluded because of equipment/technical issues (n = 3) or alpha-wave distortion in the participant’s average waveform (n = 3). The final sample (N = 19; age M = 19.79, SD = 2.27) consisted of 15 females (13 right-handed) and 4 males (all right-handed).
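The sample counts above also fix the degrees of freedom that appear in the Results. As a small arithmetic check (variable and function names are illustrative only), the retained sample of 19 gives the uncorrected F(1, 18) for two-level within-subject effects and F(3, 54) for effects involving the four-level anterior-to-posterior factor, and 15 of 19 participants being female gives the 78.9% cited in the Limitations.

```python
recruited = 25
excluded = {"equipment/technical issues": 3, "alpha-wave distortion": 3}
n_final = recruited - sum(excluded.values())   # participants analyzed

females, males = 15, 4
assert females + males == n_final

pct_female = 100 * females / n_final           # share of females in the final sample

def repeated_measures_df(*levels, n):
    """(effect df, error df) for a within-subject effect in a one-group
    repeated-measures ANOVA, before any sphericity correction.
    Pass the number of levels of each factor in the effect."""
    df_effect = 1
    for k in levels:
        df_effect *= k - 1
    return df_effect, df_effect * (n - 1)

print(n_final, f"{pct_female:.1f}")               # 19 78.9
print(repeated_measures_df(2, n=n_final))         # (1, 18): movement or emotion
print(repeated_measures_df(2, 2, 4, n=n_final))   # (3, 54): movement x emotion x anterior-posterior
```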

All participants provided informed, written consent prior to the beginning of the experiment. All procedures in this experiment were performed in accordance with the ethical standards of the institutional research ethics board, the national research committee ethics requirements, and the 1964 Declaration of Helsinki and its later amendments.

Stimuli

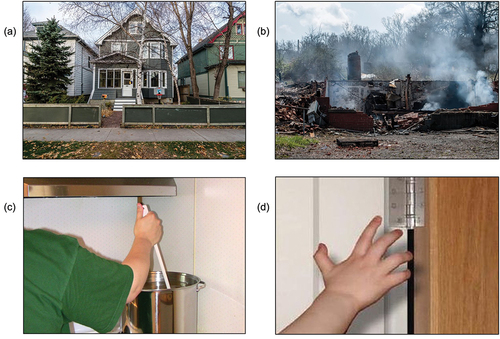

The stimuli consisted of 180 color photographs, identical to those used in our earlier fMRI study (Kolesar et al., 2017), which allowed us to compare activity across experiments. Consequently, emotional stimuli were limited to negative emotions. The set comprised 45 emotional scenes containing implied movement, 45 emotional images without implied movement, 45 neutral scenes that implied movement, and 45 neutral images without implied movement (see Figure 1). The movements depicted in the implied-movement conditions were limited to the upper limbs in order to increase the homogeneity of motor activity in the brain and spinal cord (McIver et al., 2013). All stimuli were 155 × 115 mm in size and were presented at the center of the screen, surrounded by a white background. Stimuli were presented on a 27” NEC EA273WMI-BK computer monitor (resolution 1920 × 1080) using E-Prime 2.0 software (Psychology Software Tools, Inc., Pittsburgh, PA).

Procedure

Each participant’s head was measured in centimeters according to standard practice (i.e., using the nasion and inion as landmarks), and participants were fitted with the 64-channel EEG cap that best matched their head measurement. Electrodes were embedded in each cap after measurement to ensure that each participant wore the correct cap size. Participants were then seated in a Whisper Room sound-attenuating booth (Whisper Room Inc., Knoxville, Tennessee). Participants were asked to passively view the images, rather than make a motor response that might interact differently with images that did, or did not, depict movement. The images were divided into three blocks of 60 trials, each block containing 15 stimuli from each of the four stimulus types in randomized order. Participants were offered a short break between blocks.

On each trial, a fixation cross appeared for 1000 ms, followed by an image from one of the four categories presented for 2000 ms; participants did not make any response while viewing the images. A blank screen then appeared for 2000, 3000, or 4000 ms before the next trial.
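The block and trial structure described above can be sketched as follows. This is a minimal Python illustration, not the E-Prime script used in the study: the category labels are hypothetical, and drawing each blank interval uniformly at random from 2000, 3000, or 4000 ms is an assumption, since the scheduling rule for the blank screen is not specified.

```python
import random

# Hypothetical labels for the four stimulus categories.
CATEGORIES = ("emotion_movement", "emotion_no_movement",
              "neutral_movement", "neutral_no_movement")
N_BLOCKS = 3
PER_CATEGORY_PER_BLOCK = 15              # 4 x 15 = 60 trials per block
FIXATION_MS, IMAGE_MS = 1000, 2000
BLANK_CHOICES_MS = (2000, 3000, 4000)    # assumed: sampled uniformly per trial

def build_session(seed=None):
    """Return a list of blocks; each block is a shuffled list of trial dicts."""
    rng = random.Random(seed)
    session = []
    for _block in range(N_BLOCKS):
        categories = [c for c in CATEGORIES for _ in range(PER_CATEGORY_PER_BLOCK)]
        rng.shuffle(categories)          # randomize category order within the block
        session.append([
            {"category": c,
             "fixation_ms": FIXATION_MS,
             "image_ms": IMAGE_MS,
             "blank_ms": rng.choice(BLANK_CHOICES_MS)}
            for c in categories
        ])
    return session

def trial_duration_ms(trial):
    """Total time for one trial: fixation + image + blank screen."""
    return trial["fixation_ms"] + trial["image_ms"] + trial["blank_ms"]

session = build_session(seed=42)
print(len(session), [len(block) for block in session])   # 3 [60, 60, 60]
```

Under these timings, each trial lasts between 5000 and 7000 ms (1000 + 2000 + 2000 to 4000 ms).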

ERP recording & preprocessing parameters

Participants were fitted with a 64-channel Ag/AgCl electrode Brain Products EasyCAP (actiCHamp Plus, Brain Products GmbH, Gilching, Germany), and horizontal and vertical eye movements were recorded from four electrodes placed around the eyes (HEOG and VEOG). Data were recorded using Brain Vision Pycorder and amplified via an actiCHamp amplifier at a sampling rate of 500 Hz, with Cz as the online (digital) reference. Data were re-referenced offline to TP9/TP10 (located approximately at the mastoids). Impedances were kept below 20 kΩ, in accordance with the manufacturer’s guidelines.
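Because Cz served as the online reference yet is among the analyzed sites, re-referencing is what makes its activity visible: a channel measured against itself is 0 µV, and subtracting the new reference turns that zero into a meaningful value. The sketch below illustrates this for a single time point, assuming the new reference is the average of TP9 and TP10 (a common linked-mastoid convention) and that Cz is restored as an all-zero implicit reference channel; the function name and dictionary layout are hypothetical and are not the recording or analysis software’s API.

```python
def rereference(sample_uv, new_ref=("TP9", "TP10"), implicit_ref="Cz"):
    """Re-reference one time point of EEG data.

    sample_uv maps channel name -> voltage (µV) relative to the online reference.
    The online reference channel is restored as 0 µV (its value relative to
    itself) before the average of the new reference channels is subtracted.
    """
    data = dict(sample_uv)
    data.setdefault(implicit_ref, 0.0)
    ref_value = sum(data[ch] for ch in new_ref) / len(new_ref)
    return {ch: v - ref_value for ch, v in data.items()}

point = {"Fz": 4.0, "Pz": 6.0, "TP9": 1.0, "TP10": 3.0}
print(rereference(point))
# {'Fz': 2.0, 'Pz': 4.0, 'TP9': -1.0, 'TP10': 1.0, 'Cz': -2.0}
```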

The processing parameters and order of operations followed Luck (2014). A 0.01 Hz high-pass filter (24 dB/octave) and a 60 Hz notch filter (to remove electrical line noise) were applied to the data. Data were then re-referenced to the approximate left and right mastoids (TP9/TP10). The data were segmented into epochs from −100 to 1000 ms relative to stimulus onset for each of the four conditions, and each epoch was baseline-corrected to the pre-stimulus interval (−100 to 0 ms). Next, ICA-based ocular correction was applied to remove blink artifacts. Trials containing excessive blinks or other artifacts (i.e., voltages exceeding ±85 µV) were rejected (approximately 8% of all trials). The number of retained trials did not differ significantly among the emotional-no-movement, emotional-implied-movement, and neutral-implied-movement conditions (p > .2). However, there was a significant difference between the neutral conditions with (M = 41.53, SD = 5.24) and without implied movement (M = 40.79, SD = 5.98), t(18), p = .049.
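The epoching, baseline-correction, and amplitude-threshold steps can be sketched as below. This is a simplified plain-Python illustration rather than the pipeline actually used (filtering and ICA are omitted), and it assumes that the ±85 µV criterion is applied to the absolute amplitude of the baseline-corrected epoch on any channel; the function names are hypothetical.

```python
SFREQ_HZ = 500                  # sampling rate reported above
TMIN_MS, TMAX_MS = -100, 1000   # epoch limits relative to stimulus onset
REJECT_UV = 85.0                # amplitude threshold

def epoch_times_ms():
    """Sample times for one epoch (2 ms steps at 500 Hz; TMAX_MS is exclusive)."""
    step_ms = 1000 / SFREQ_HZ
    n_samples = int((TMAX_MS - TMIN_MS) / step_ms)
    return [TMIN_MS + i * step_ms for i in range(n_samples)]

def baseline_correct(signal_uv, times_ms):
    """Subtract the mean of the pre-stimulus samples (t < 0) from every sample."""
    baseline = [v for v, t in zip(signal_uv, times_ms) if t < 0]
    offset = sum(baseline) / len(baseline)
    return [v - offset for v in signal_uv]

def reject_epoch(epoch_by_channel, threshold_uv=REJECT_UV):
    """True if any channel exceeds +/- threshold_uv at any sample."""
    return any(abs(v) > threshold_uv
               for signal in epoch_by_channel.values()
               for v in signal)

times = epoch_times_ms()
flat = baseline_correct([10.0] * len(times), times)   # constant offset removed
noisy = {"Fz": flat, "Pz": [0.0] * 300 + [120.0] + [0.0] * 249}
print(len(times), times[0], max(abs(v) for v in flat), reject_epoch(noisy))
# 550 -100.0 0.0 True
```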

Data analysis

Grand-average ERPs were computed across participants for each stimulus category, time-locked to stimulus onset, and plotted. Visual inspection revealed differences between categories from 200–300 ms and from 500–1000 ms. Analyses included two movement conditions (movement/no movement), two emotion conditions (negative/neutral), four levels of anterior-to-posterior scalp distribution (frontal: F1, Fz, F2; central: C1, Cz, C2; parietal: P1, Pz, P2; occipital: O1, Oz, O2), and three levels of left-to-right distribution (left, midline, right). A separate 2 (movement) × 2 (emotion) × 4 (anterior-to-posterior distribution) × 3 (left-to-right distribution) repeated-measures ANOVA was conducted for each component of interest: the N200 (200–300 ms), the P300 (350–500 ms), and the LPP (500–1000 ms). These scalp sites were chosen because past research indicates that the N200 and LPP tend to peak at one or more of them during passive picture viewing (e.g., the N200 can be frontally or posteriorly distributed depending on the stimulus type). For example, previous studies have reported the N200 at both frontal (Balconi & Vanutelli, 2016; Glaser et al., 2012; Hot & Sequeira, 2013) and parieto-occipital sites (White et al., 2014), and the LPP at central and superior recording sites (Foti et al., 2009; Hajcak et al., 2010). The Huynh-Feldt correction was applied to the ANOVAs to correct for violations of sphericity, and a Bonferroni correction for multiple comparisons was applied to the post hoc paired-samples t-tests. Post hoc t-tests compared [emotional vs. neutral images with no movement], [emotional vs. neutral images with movement], [emotional images with vs. without implied movement], and [neutral images with vs. without implied movement].
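The dependent measure and the post hoc logic can be sketched as follows: each component is scored as the mean amplitude within its time window, condition means are compared with paired-samples t statistics, and p-values are Bonferroni-adjusted by multiplying by the number of comparisons (capped at 1). This is a minimal plain-Python illustration under stated assumptions: the function names are hypothetical, the family size of four (one per listed post hoc comparison) is assumed, and converting t to p would require a t-distribution routine that is not shown.

```python
import math
import statistics

WINDOWS_MS = {"N200": (200, 300), "P300": (350, 500), "LPP": (500, 1000)}

def mean_amplitude(erp_uv, times_ms, window_ms):
    """Average voltage over samples whose time falls inside [start, end]."""
    start, end = window_ms
    values = [v for v, t in zip(erp_uv, times_ms) if start <= t <= end]
    return sum(values) / len(values)

def paired_t(cond_a, cond_b):
    """Paired-samples t statistic and df from per-participant condition means."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1

def bonferroni(p_values):
    """Multiply each p by the number of tests, capping at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Toy waveform (made-up values, not study data): -5 µV only inside the N200 window.
times = list(range(-100, 1000, 2))
erp = [-5.0 if 200 <= t <= 300 else 0.0 for t in times]
print(mean_amplitude(erp, times, WINDOWS_MS["N200"]))   # -5.0
print(bonferroni([0.01, 0.02, 0.30, 0.50]))
```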

Results

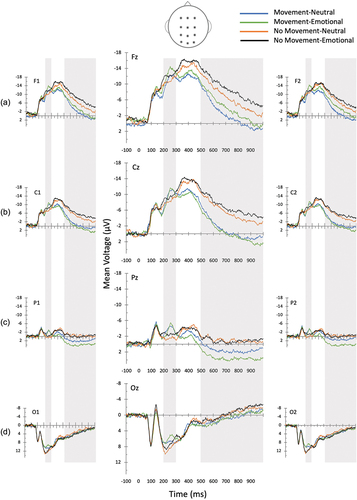

The data for the frontal (F1, Fz, F2), central (C1, Cz, C2), parietal (P1, Pz, P2), and occipital (O1, Oz, O2) electrodes are depicted in Figure 2. For the N200, within-subjects repeated-measures ANOVAs were performed on the mean voltage from 200–300 ms, comparing movement (implied or not implied) and emotion (negative or neutral) across four levels of anterior-to-posterior scalp distribution and three levels of left-to-right distribution (left, midline, right). There were significant main effects of implied movement (F(1, 18) = 5.61, p = 0.029, η² = .238) and of emotion (F(1, 18) = 5.29, p = 0.034, η² = .227). The interaction among anterior-posterior scalp distribution, movement, and emotion approached significance, F(3, 54) = 2.41, p = 0.098, η² = .118. Post hoc analyses showed that at frontal locations, the N200 was larger for negative images depicting movement than for neutral images depicting movement (M = −12.60 µV vs. M = −10.99 µV, p = .005), whereas no difference was observed between negative and neutral stimuli that did not imply movement (M = −11.89 µV vs. M = −10.76 µV, p = .166). Conversely, at posterior locations, there was a marginal difference between negative and neutral stimuli for images without movement (M = −1.43 µV vs. M = −0.39 µV, p = .053), but not for images with movement (M = −2.41 µV vs. M = −2.65 µV, p = .632).

Figure 2. Grand-average ERP amplitudes at (A) frontal (F1, Fz, F2), (B) central (C1, Cz, C2), (C) parietal (P1, Pz, P2), and (D) occipital (O1, Oz, O2) electrodes, depicting the N200 and LPP (analyzed time windows shaded in gray).

Similar analyses were performed for the P300 (350–500 ms). There was a main effect of movement (F(1, 18) = 22.24, p < 0.001, η² = .553), with more positive-going P300s for images with implied movement (M = 9.24 µV) than for those without (M = 7.72 µV), p < .001. However, there was no main effect of emotion (F(1, 18) = 1.84, p = 0.191, η² = .093) and no interaction between emotion and movement (F(1, 18) = 0.48, p = 0.497, η² = .026). Nor was there an interaction among movement, emotion, and anterior-posterior scalp distribution (F(3, 54) = 1.329, p = 0.264, η² = .069).

Finally, a repeated-measures ANOVA was performed on the LPP (500–1000 ms). This analysis revealed a main effect of movement (F(1, 18) = 32.35, p < 0.001, η² = .642) and an interaction between anterior-to-posterior scalp distribution and movement (F(3, 54) = 33.07, p < 0.001, η² = .648), such that the LPP was greater for images with movement than without movement at frontal (M = −2.65 µV vs. M = −7.11 µV), central (M = −0.45 µV vs. M = −5.50 µV), and parietal sites (M = 2.62 µV vs. M = −0.53 µV, p < .001), but not at occipital sites (M = 2.50 µV vs. M = 1.92 µV, p = .243). There was no main effect of emotion (F(1, 18) = 0.05, p = .828, η² = .003); however, there was an interaction between anterior-to-posterior scalp distribution and emotion (F(3, 54) = 10.26, p < 0.001, η² = .363), such that the LPP was greater for negative than for neutral images at frontal sites (M = −5.74 µV vs. M = −4.02 µV, p = .02). A marginal effect was also observed at parietal sites, with greater LPPs for negative than for neutral images (M = 1.62 µV vs. M = 0.47 µV, p = .059). The emotion × movement × scalp distribution interaction was significant (F(3, 54) = 3.21, p = 0.044, η² = .151). At parietal sites, the LPP was more positive-going for negative than for neutral images with movement (M = 3.70 µV vs. M = 1.55 µV, p = .019), whereas no difference between the two levels of emotion was observed for images without movement (M = −0.46 µV vs. M = −0.61 µV, p = .804).
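The reported effect sizes are consistent with partial eta squared, which can be recovered from each F ratio and its degrees of freedom as η²p = (F × df_effect) / (F × df_effect + df_error). The short Python check below recomputes several of them from the reported F values; it assumes this is the statistic that was reported, and differences of up to 0.001 are expected because the F values are themselves rounded.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)

# (label, F, df_effect, df_error, reported effect size), taken from the Results.
REPORTED = [
    ("N200 movement",                  5.61, 1, 18, 0.238),
    ("N200 emotion",                   5.29, 1, 18, 0.227),
    ("N200 distribution x mov x emo",  2.41, 3, 54, 0.118),
    ("P300 movement",                 22.24, 1, 18, 0.553),
    ("LPP movement",                  32.35, 1, 18, 0.642),
    ("LPP distribution x movement",   33.07, 3, 54, 0.648),
    ("LPP distribution x emotion",    10.26, 3, 54, 0.363),
    ("LPP distribution x mov x emo",   3.21, 3, 54, 0.151),
]

for label, f, d1, d2, reported in REPORTED:
    recomputed = partial_eta_squared(f, d1, d2)
    print(f"{label:32s} recomputed={recomputed:.3f} reported={reported:.3f}")
```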

Discussion

The purpose of the current study was to examine the effects of perceiving emotion and implied movement, alone and in combination, on neural activity. This experiment extends earlier fMRI research (Kolesar et al., 2017) by using the identical stimulus set while measuring brain activity with ERPs, a method with superior temporal resolution. The N200 was significantly modulated by both movement and emotion, with greater negativity for images with implied movement and for images with negative emotional valence. In addition, there was a marginal interaction between movement and emotion: at frontal locations, the N200 was more negative for negative than for neutral images in the movement condition, but not for images with no implied movement. For the LPP, there was a significant interaction among anterior-posterior scalp location, movement, and emotion; at parietal sites, the LPP was increased by the presence of implied movement, particularly for negative images. Together, these results suggest that emotion and implied movement have both discrete and interactive effects on neural activity.

We had hypothesized that the N200 would be larger for stimuli that implied movement. As predicted, implied movement modulated the N200 from 200–300 ms at posterior scalp sites. The posterior distribution of this movement effect is not surprising, given that posterior brain regions are typically associated with orienting movements and sensorimotor integration (e.g., Brodmann Area 7; see Whitlock, 2017, for a review), as well as with the perception of visual motion (e.g., V5; Martinez-Trujillo et al., 2007). Furthermore, past ERP studies have identified significant N200 modulations for biological motion at posterior scalp sites (e.g., Hirai et al., 2003). Importantly, this posterior ERP activity is consistent with the parietal activity detected in our previous fMRI study (Kolesar et al., 2017).

Our results also indicated that the N200 was influenced by emotion. The frontally distributed effect of emotion is consistent with previous ERP studies of emotional perception (e.g., Balconi & Vanutelli, 2016; Glaser et al., 2012), as well as with the right middle frontal gyrus activity observed in our earlier research (Kolesar et al., 2017). However, because participants were not asked to perform a specific task while viewing the stimuli, it is unclear whether this activity reflects emotion regulation processes, active attentional examination of the stimuli, or a combination of the two; future ERP studies could distinguish between these possibilities. The emotion-movement interaction for the N200 approached significance at parietal sites. The dissociation between the scalp distributions for the perception of emotion and of implied movement suggests that different brain regions simultaneously process distinct elements of the stimuli at a relatively early stage of visual perception.

We had predicted that emotional stimuli would modulate the P300; however, this hypothesis was not supported. Although Mehmood and Lee (2015) found a P300 response at parietal and occipital locations during passive viewing of emotional pictures, the P300 has been studied more thoroughly in ‘oddball’ tasks, in which participants respond to target stimuli (Hajcak et al., 2010). If the current study were modified to include such a manipulation, an effect of emotion on the P300 might be observed.

We expected emotional stimuli to elicit greater LPP amplitudes than neutral or implied-movement stimuli because the LPP correlates positively with the intensity of perceived emotional stimuli (Hajcak et al., 2010). Indeed, effects of emotion on the LPP were observed, and these were most prominent at frontal sites. This result is consistent with previous investigations of emotion-dependent neural activity suggesting that the LPP is associated with suppressing later visual cortex activity in order to optimize the encoding of emotional items (Brown et al., 2012). It is also congruent with the right middle frontal gyrus activity reported by Kolesar et al. (2017). Interestingly, however, the LPP was also modulated by implied movement, with significant effects at frontal, central, and parietal sites. Although this finding was unexpected, it is consistent with Jahshan et al.’s (2015) study of point-light animations depicting movement. Given the broad distribution of the effects on the LPP, multiple perceptual and motor processes may be occurring at this later stage of visual perception (e.g., emotion regulation, attentional control, motor planning, and response inhibition, to name a few). Future studies isolating each of these functions during the perception of emotional and neutral stimuli would help clarify the source of the effects observed here.

The LPP was also sensitive to the interaction between emotion and implied movement, with significant effects at parietal sites. Although it is problematic to link data from specific ERP electrodes to activity in precise brain regions, the neural generators of the LPP overlap with the brain regions that process body representations and visual representations of actions (Chaminade et al., 2005; Marrazzo et al., 2021). Given that parietal activity has also been linked to judgments about the emotional content of body movements (Libero et al., 2014), the emotion × implied movement interaction detected in the current study may reflect a detailed analysis of the potential threat depicted in some of the stimuli.

One advantage of the current study is that the stimuli and experimental procedures were identical to those used in a previous fMRI experiment, making it possible to compare the current results with the clusters of cortical activity reported by Kolesar et al. (2017) and to identify key trends related to emotion-movement interactions. Although it was difficult to link the subcortical activity observed in the fMRI study with the current ERP results, there were noteworthy similarities in cortical activity across the two experiments. In our earlier fMRI study, the main effect of perceiving implied movement included activity in the posterior cingulate cortex; the current study found movement-related activity at parietal sites in all three time windows. In the fMRI study, the main effect of emotion was associated with activity in the right middle frontal gyrus and left lingual gyrus; our current results showed greater responses at frontal sites for the N200 and LPP. Finally, both the fMRI and ERP studies showed emotion × implied movement interactions in frontal and parietal regions. Together, these results suggest that although the perception of these two characteristics activates some unique structures, the brain areas involved in perceiving emotion and movement also overlap. Therefore, researchers should control for implied movement when studying emotional perception.

Limitations

Although the current study provides new insights into the interaction between emotion and implied movement during early stages of perception, it has some limitations. First, the stimulus set was limited to negative emotions (mainly sadness, disgust, and fear) and to movements of the upper limbs. This choice of emotional stimuli was made to allow direct comparisons with previous fMRI research (Kolesar et al., 2017). Future research should include a wider variety of emotions, particularly happiness, to examine whether positive and negative emotional stimuli differentially affect the amplitude and latency of different ERP components (see Hot & Sequeira, 2013). Additionally, the implied movements could be expanded to whole-body movements. Spinal fMRI research suggests that neural activity differs when individuals view emotional movements by the upper and lower limbs (McIver et al., 2013); however, the poor temporal resolution of spinal fMRI did not allow for the assessment of the rapid (millisecond-level) neural responses detected in ERP studies.

An additional limitation was that participants passively perceived the images rather than making a stimulus-relevant response. The rationale for using a simple perception task was that we were unsure whether the different types of stimuli (i.e., movement vs. non-movement) would be differentially affected by the addition of a cognitive or motoric task. That said, having participants make an overt behavioral response, such as rating the emotionality of the images, may have increased the ERP effects, particularly for the P300 and LPP. The lack of behavioral responses also leaves open the possibility that some categories of stimuli (e.g., implied movement) received more attention from participants than others (e.g., neutral, no-movement). Future studies should include occasional attention checks (e.g., pressing the ‘G’ key) to ensure that participants remain on-task.

The final two limitations relate to our participant sample. First, a larger sample would have increased statistical power, potentially allowing some of the marginally significant effects to reach statistical significance. Second, the sample in our study was heavily skewed toward females (78.9%). Given that previous research has shown sex differences in neural responses to emotional stimuli (see Proverbio, Citation2023; Whittle et al., Citation2011, for reviews), it is possible that the current results better represent female than male responses to affective and movement-related stimuli. A sample with equal numbers of male and female participants would also have allowed us to statistically assess whether there are sex differences in emotion-movement interactions.

Conclusions

The purpose of the current study was to delineate the distinct roles of emotion and implied movement in the time course of visual perception using ERPs. Our results replicate previous findings that the N200 and LPP respond to emotional and movement-related images. The N200 showed significant main effects of both emotion and implied movement; the fact that these effects were strongest at different electrode sites suggests that emotion and implied movement were processed simultaneously at this stage of perception. The LPP was modulated by implied movement at all frontal, central, and parietal sites and by emotion at frontal sites (with marginal effects at parietal sites). Finally, the LPP showed a significant emotion × implied movement interaction at parietal sites. Overall, these results demonstrate that there are distinct patterns of neural responses to emotional and movement-related information at very early stages of perception, and that the perception of emotion and movement interact within the first 1000 ms of encoding. When combined with previous fMRI research using the same stimuli (Kolesar et al., Citation2017), the current data provide a novel window into the time course of the perception of emotional and movement-related visual stimuli. They also highlight the importance of controlling for implied movement when developing stimulus sets for affective neuroscience research.

Open practices statement

The data and materials for this study are available by request to the corresponding author.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Allman, J. M., Hakeem, A., Erwin, J. M., Nimchinsky, E., & Hof, P. (2001). The anterior cingulate cortex. The evolution of an interface between emotion and cognition. Annals of the New York Academy of Sciences, 935(1), 107–117. https://doi.org/10.1111/(ISSN)1749-6632

- Balconi, M., & Vanutelli, M. (2016). Hemodynamic (fNIRS) and EEG (N200) correlates of emotional inter-species interactions modulated by visual and auditory stimulation. Scientific Reports, 6, 23083. https://doi.org/10.1038/srep23083

- Blakemore, R. L., & Vuilleumier, P. (2017). An emotional call to action: Integrating affective neuroscience in models of motor control. Emotion Review, 9, 299–309. https://doi.org/10.1177/1754073916670020

- Borhani, K., Ladavas, E., Maier, M., Avenanti, A., & Bertini, C. (2015). Emotional and movement-related body postures modulate visual processing. Social Cognitive and Affective Neuroscience, 10, 1092–1101. https://doi.org/10.1093/scan/nsu167

- Brown, S. B. R. E., van Steenbergen, H., Band, G. P. H., de Rover, M., & Nieuwenhuis, S. (2012). Functional significance of the emotion-related late positive potential. Frontiers in Human Neuroscience, 6, 33. https://doi.org/10.3389/fnhum.2012.00033

- Carretie, L., Hinojosa, J., Martin-Loeches, M., Mercado, F., & Tapia, M. (2004). Automatic attention to emotional stimuli: Neural correlates. Human Brain Mapping, 22(4), 290–299. https://doi.org/10.1002/hbm.20037

- Chaminade, T., Meltzoff, A. N., & Decety, J. (2005). An fMRI study of imitation: Action representation and body schema. Neuropsychologia, 43(1), 115–127. https://doi.org/10.1016/j.neuropsychologia.2004.04.026

- Coelho, C., Lipp, O., Marinovic, W., Wallis, G., & Riek, S. (2010). Increased corticospinal excitability induced by unpleasant visual stimuli. Neuroscience Letters, 481, 135–138. https://doi.org/10.1016/j.neulet.2010.03.027

- Craig, A. D. (2009). How do you feel — now? The anterior insula and human awareness. Nature Reviews Neuroscience, 10(1), 59–70. https://doi.org/10.1038/nrn2555

- Dillon, D., Cooper, J., Grent-‘t-Jong, T., Woldorff, M., & LaBar, K. (2006). Dissociation of event-related potentials indexing arousal and semantic cohesion during emotional word encoding. Brain and Cognition, 62, 43–57. https://doi.org/10.1016/j.bandc.2006.03.008

- Foti, D., Hajcak, G., & Dien, J. (2009). Differentiating neural responses to emotional pictures: Evidence from temporal-spatial PCA. Psychophysiology, 46, 521–530. https://doi.org/10.1111/j.1469-8986.2009.00796.x

- Giovannelli, F., Banfi, C., Borgheresi, A., Fiori, E., Innocenti, I., Rossi, S., Zaccara, G., Viggiano, M. P., & Cincotta, M. (2013). The effect of music on corticospinal excitability is related to the perceived emotion: A transcranial magnetic stimulation study. Cortex, 49(3), 702–710. https://doi.org/10.1016/j.cortex.2012.01.013

- Glaser, E., Mendrek, A., Germain, M., Lakis, N., & Lavoie, M. E. (2012). Sex differences in memory of emotional images: A behavioral and electrophysiological investigation. International Journal of Psychophysiology, 85, 17–26. https://doi.org/10.1016/j.ijpsycho.2012.01.007

- Hajcak, G., & Nieuwenhuis, S. (2006). Reappraisal modulates the electrocortical response to unpleasant pictures. Cognitive, Affective & Behavioral Neuroscience, 6, 291–297. https://doi.org/10.3758/CABN.6.4.291

- Hajcak, G., MacNamara, A., & Olvet, D. M. (2010). Event-related potentials, emotion, and emotion regulation: An integrative review. Developmental Neuropsychology, 35, 129–155. https://doi.org/10.1080/87565640903526504

- Hajcak, G., Molnar, C., George, M. S., Bolger, K., Koola, J., & Nahas, Z. (2007). Emotion facilitates action: A transcranial magnetic stimulation study of motor cortex excitability during picture viewing. Psychophysiology, 44(1), 91–97. https://doi.org/10.1111/j.1469-8986.2006.00487.x

- Hirai, M., Fukushima, H., & Hiraki, K. (2003). An event-related potentials study of biological motion perception in humans. Neuroscience Letters, 344, 41–44. https://doi.org/10.1016/S0304-3940(03)00413-0

- Hot, P., & Sequeira, H. (2013). Time course of brain activation elicited by basic emotions. Neuroreport, 24, 898–902. https://doi.org/10.1097/WNR.0000000000000016

- Jahshan, C., Wynn, J., Mathis, K., & Green, M. (2015). The neurophysiology of biological motion perception in schizophrenia. Brain and Behavior, 5, 75–84. https://doi.org/10.1002/brb3.303

- Jensen, K. M., & MacDonald, J. A. (2023). Towards thoughtful planning of ERP studies: How participants, trials, and effect magnitude interact to influence statistical power across seven ERP components. Psychophysiology, 60, e14245. https://doi.org/10.1111/psyp.14245

- Johnson, R., Jr. (1986). A triarchic model of P300 amplitude. Psychophysiology, 23(4), 367–384. https://doi.org/10.1111/j.1469-8986.1986.tb00649.x

- Kolesar, T. A., Kornelsen, J., & Smith, S. D. (2017). Separating neural activity associated with emotion and implied motion: An fMRI study. Emotion, 17, 131–140. https://doi.org/10.1037/emo0000209

- Lang, P. J., Greenwald, M. K., Bradley, M. M., & Hamm, A. O. (1993). Looking at pictures: Affective, facial, visceral, and behavioral reactions. Psychophysiology, 30, 261–273. https://doi.org/10.1111/j.1469-8986.1993.tb03352.x

- LeDoux, J., & Brown, R. (2017). A higher-order theory of emotional consciousness. Proceedings of the National Academy of Sciences, 114(10), E2016–E2025. https://doi.org/10.1073/pnas.1619316114

- Libero, L. E., Stevens, C. E., Jr., & Kana, R. K. (2014). Attribution of emotions to body postures: An independent component analysis study of functional connectivity in autism. Human Brain Mapping, 35, 5204–5218. https://doi.org/10.1002/hbm.22544

- Lorteije, J. A., Kenemans, J. L., Jellema, T., Van der Lubbe, R. H., Lommers, M. W., & van Wezel, R. J. (2007). Adaptation to real motion reveals direction-selective interactions between real and implied motion processing. Journal of Cognitive Neuroscience, 19, 1231–1240. https://doi.org/10.1162/jocn.2007.19.8.1231

- Luck, S. J. (2014). An introduction to the event-related potential technique (2nd ed.). MIT Press.

- Magliero, A., Bashore, T. R., Coles, M. G., & Donchin, E. (1984). On the dependence of P300 latency on stimulus evaluation processes. Psychophysiology, 21, 171–186. https://doi.org/10.1111/j.1469-8986.1984.tb00201.x

- Marrazzo, G., Vaessen, M. J., & de Gelder, B. (2021). Decoding the difference between explicit and implicit body expression representation in high level visual, prefrontal and inferior parietal cortex. NeuroImage, 243, 118545. https://doi.org/10.1016/j.neuroimage.2021.118545

- Martinez-Trujillo, J., Cheyne, D., Gaetz, W., Simine, E., & Tsotsos, J. (2007). Activation of area MT/V5 and the right inferior parietal cortex during the discrimination of transient direction changes in translational motion. Cerebral Cortex, 17, 1733–1739. https://doi.org/10.1093/cercor/bhl084

- McIver, T. A., Kornelsen, J., & Smith, S. D. (2013). Limb-specific emotional modulation of cervical spinal cord neurons. Cognitive, Affective & Behavioral Neuroscience, 13, 464–472. https://doi.org/10.3758/s13415-013-0154-x

- Mehmood, R. M., & Lee, H. J. (2015). ERP analysis of emotional stimuli from brain EEG signal. In F. Secca, J. Schier, A. Fred, H. Gamboa, & D. Elias (Eds.), Proceedings of the International Conference on Biomedical Engineering and Science, Setubal (pp. 44–48). Science and Technology Publications.

- Mehmood, R. M., & Lee, H. J. (2016). A novel feature extraction method based on late positive potential for emotion recognition in human brain signal patterns. Computers & Electrical Engineering, 53, 444–457. https://doi.org/10.1016/j.compeleceng.2016.04.009

- Mini, A., Palomba, D., Angrilli, A., & Bravi, S. (1996). Emotional information processing and visual evoked brain potentials. Perceptual and Motor Skills, 83, 143–152. https://doi.org/10.2466/pms.1996.83.1.143

- Oliveri, M., Babiloni, C., Filippi, M. M., Caltagirone, C., Babiloni, F., Cicinelli, P., Traversa, R., Palmieri, M. G., & Rossini, P. M. (2003). Influence of the supplementary motor area on primary motor cortex excitability during movements triggered by neutral or emotionally unpleasant visual cues. Experimental Brain Research, 149(2), 214–221. https://doi.org/10.1007/s00221-002-1346-8

- Oliveira, L., Mocaiber, I., David, I. A., Erthal, F., Volchan, E., & Pereira, M. G. (2013). Emotion and attention interaction: A trade-off between stimuli relevance, motivation and individual differences. Frontiers in Human Neuroscience, 7, 364. https://doi.org/10.3389/fnhum.2013.00364

- Palomba, D., Angrilli, A., & Mini, A. (1997). Visual evoked potentials, heart rate responses and memory to emotional pictorial stimuli. International Journal of Psychophysiology, 27, 55–67. https://doi.org/10.1016/s0167-8760(97)00751-4

- Pereira, M. G., de Oliveira, L., Erthal, F. S., Joffily, M., Mocaiber, I. F., Volchan, E., & Pessoa, L. (2010). Emotion affects action: Midcingulate cortex as a pivotal node of interaction between negative emotion and motor signals. Cognitive, Affective & Behavioral Neuroscience, 10(1), 94–106. https://doi.org/10.3758/CABN.10.1.94

- Pessoa, L., & Adolphs, R. (2010). Emotion processing and the amygdala: From a ‘low road’ to ‘many roads’ of evaluating biological significance. Nature Reviews Neuroscience, 11(11), 773–782. https://doi.org/10.1038/nrn2920

- Portugal, L. C., Alves, R. C. S., Junior, O. F., Sanchez, T. A., Mocaiber, I., Volchan, E., Smith Erthal, F., David, I. A., Kim, J., Oliveira, L., Padmala, S., Chen, G., Pessoa, L., & Pereira, M. G. (2020). Interactions between emotion and action in the brain. NeuroImage, 214, 116728. https://doi.org/10.1016/j.neuroimage.2020.116728

- Proverbio, A. M. (2023). Sex differences in the social brain and in social cognition. Journal of Neuroscience Research, 101, 730–738. https://doi.org/10.1002/jnr.24787

- Schupp, H., Ohman, A., Junghofer, M., Weike, A., Stockburger, J., & Hamm, A. (2004). The facilitated processing of threatening faces: An ERP analysis. Emotion, 4, 189–200. https://doi.org/10.1037/1528-3542.4.2.189

- Schutter, D. J., Hofman, D., & van Honk, H. J. (2008). Fearful faces selectively increase corticospinal motor tract excitability: A transcranial magnetic stimulation study. Psychophysiology, 45, 345–348. https://doi.org/10.1111/j.1469-8986.2007.00635.x

- Sutton, S., Braren, M., Zubin, J., & John, E. R. (1965). Evoked-potential correlates of stimulus uncertainty. Science, 150, 1187–1188. https://doi.org/10.1126/science.150.3700.1187

- van Loon, A. M., van den Wildenberg, W. P., van Stegeren, A. H., Hajcak, G., & Ridderinkhof, K. R. (2010). Emotional stimuli modulate readiness for action: A transcranial magnetic stimulation study. Cognitive, Affective & Behavioral Neuroscience, 10(2), 174–181. https://doi.org/10.3758/CABN.10.2.174

- White, N., Fawcett, J., & Newman, A. (2014). Electrophysiological markers of biological motion and human form recognition. NeuroImage, 84, 854–867. https://doi.org/10.1016/j.neuroimage.2013.09.026

- Whitlock, J. (2017). Posterior parietal cortex. Current Biology, 27(14), R691–R695. https://doi.org/10.1016/j.cub.2017.06.007

- Whittle, S., Yücel, M., Yap, M. B., & Allen, N. B. (2011). Sex differences in the neural correlates of emotion: Evidence from neuroimaging. Biological Psychology, 87, 319–333. https://doi.org/10.1016/j.biopsycho.2011.05.003