ABSTRACT

Introduction: Many internal medicine residents struggle to prepare for both the In-Training Examination (ITE) and the board examination. Most existing resources are simply question banks that are not linked to supporting literature from which residents can study. Additionally, program directors are unable to track how much time residents spend on test preparation or how they perform. We sought to evaluate the benefit of using the NEJM Knowledge+ online platform to augment our pulmonary didactics and to track time and performance on the pulmonary module and the ITE pulmonary section.

Method: During the month-long live didactic sessions, residents had free access to the NEJM Knowledge+ pulmonology module. Following a platform-generated pre-test, a platform-generated post-test was administered with new questions covering the same key elements, including the level-of-confidence metacognitive metric. An anonymous feedback survey was collected to assess the residents’ views on using the NEJM Knowledge+ platform as compared to other prep resources.

Results: 44 of 52 residents completed the pre-test. 51/52 completed the month-long didactic sessions and the post-test. Residents’ scores improved from pre-test % correct (M = 46.90, SD = 15.31) to post-test % correct (M = 76.29, SD = 18.49) (t = 9.60, df = 41, p < .001), and the improvement correlated with levels of mastery. The % passing improved from 1/44 (2.3%) pre-test to 35/51 (68.6%) post-test, also correlating with levels of mastery. Accurate confidence correlated with improvement from pre-test to post-test score (r = −.51, p = .001). Survey feedback was favorable.

1. Introduction

A team of four internal medicine residents (from all three PGY levels) selected an educational intervention for their performance improvement project. Suboptimal scores on the Pulmonary section of the September 2019 Internal Medicine In-Training Exam (IM-ITE) across all internal medicine residents prompted this team to make an online, self-paced question bank tool freely available to all residents to supplement their upcoming traditional pulmonary didactic lectures scheduled for February 2020.

Self-paced, online question banks are used predominantly for American Board of Internal Medicine Certification Exam (ABIM-CE) preparation. Once the Accreditation Council for Graduate Medical Education’s Next Accreditation System (ACGME-NAS) was implemented in July 2014, a new continuing accreditation metric requiring a program’s graduates to achieve and maintain a rolling 3-year first-attempt pass rate of >80% on the ABIM-CE [Citation1] likely led to a proliferation and increased use of such online tools. IM-ITE performance has been correlated with passing the ABIM-CE, and the supplemental use of such tools for remediation has been reported to be successful [Citation2].

We report our experiential descriptive and exploratory analysis, with resident survey feedback, on the use of a single online module to supplement our scheduled month-long pulmonary didactics for all residents. In March 2020, following our experience, the COVID-19 pandemic affected our and many other GME programs, necessitating remote, live or on-demand, didactic sessions and online, self-paced training. We felt it important to share our experience with readers who may consider incorporating similar strategies and conducting educational performance improvement projects for internal medicine GME during these challenging times.

2. Methods

2.1. Participants

Internal medicine residents in an ACGME-accredited program at a community teaching hospital participated in a resident-led educational performance improvement project during February 2020, the month of the traditional didactic pulmonary lectures.

2.2. Selection of online platform

The team reviewed commercially available online, self-paced question bank platforms and evaluated the published literature to select their project’s platform. No article directly addressed the use of such a platform to supplement a single module, but one platform had demonstrated success both when used for remediation [Citation3] and in ABIM-CE prep satisfaction surveys [Citation4]. The NEJM Knowledge+ pulmonary module was thus selected. This platform captures individual medical knowledge improvement, degree of mastery of the key points, and confidence (metacognitive metric) for each answer (Supplement 1) and permits monitoring of individual attention and performance in real time.

2.3. Procedure

The residents had 1 hour to complete a 30-question pre-test. The platform generated questions that covered 10 key points from the pulmonology module deemed important by faculty. During the month between the pre-test and post-test, residents had 24/7 free online access to the NEJM Knowledge+ pulmonology module and were encouraged to complete enough questions that the system showed they had mastered 100% of all 167 key learning points. A post-test followed the month-long series of 6 didactic sessions. This post-test, like the pre-test, was platform-generated, with 30 different questions that covered the same key learning points. The percent of correct answers and the metaknowledge metrics (where residents selected their level of confidence in each answer) were captured for both the pre-test and the post-test. Each answer had to be rated as follows: I ‘know it,’ ‘think so,’ ‘unsure,’ or ‘no idea.’ The ‘current unaware’ metric is calculated as the percent of questions answered incorrectly that were rated I ‘know it.’
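As an illustration of the ‘current unaware’ calculation described above, the following minimal Python sketch computes the metric for one resident's test. The `Answer` record, its field names, and the sample responses are hypothetical and are not taken from the NEJM Knowledge+ platform; only the four confidence labels and the wording of the metric come from the text. The sketch assumes the denominator is the number of incorrect answers rather than the total number of questions, which the description leaves open.

```python
from dataclasses import dataclass

# The four confidence ratings named in the text.
CONFIDENCE_LEVELS = ("know it", "think so", "unsure", "no idea")


@dataclass
class Answer:
    """One answered question (hypothetical record layout)."""
    correct: bool
    confidence: str  # one of CONFIDENCE_LEVELS


def current_unaware_percent(answers):
    """Percent of incorrectly answered questions that were rated 'know it'.

    Assumption: the denominator is the count of incorrect answers,
    not the total number of questions on the test.
    """
    incorrect = [a for a in answers if not a.correct]
    if not incorrect:
        return 0.0  # nothing answered incorrectly, so nothing to be unaware of
    overconfident = sum(1 for a in incorrect if a.confidence == "know it")
    return 100.0 * overconfident / len(incorrect)


# Made-up responses for illustration only.
sample = [
    Answer(True, "know it"),
    Answer(False, "know it"),
    Answer(False, "unsure"),
    Answer(True, "think so"),
    Answer(False, "no idea"),
    Answer(False, "know it"),
]
print(current_unaware_percent(sample))
```

If the platform instead divides by all questions on the test, only the denominator in `current_unaware_percent` would change.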

An anonymous resident feedback survey of 7 questions, designed by the team (Supplement 2), was sent using Microsoft Forms to all 52 available residents via their work email. The Chief Academic Officer and Chief Medical Officer determined that this QI educational project did not meet the definition of human subjects research (HSR) under the Federal Common Rule (45 CFR 46) and did not require IRB submission.

3. Results

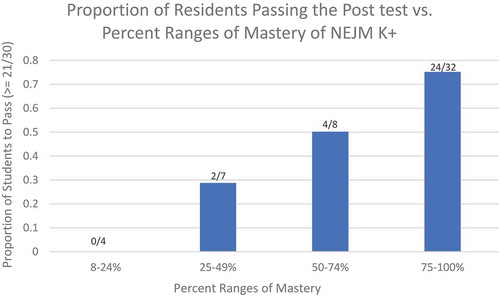

Fifty-two of the 55 internal medicine residents were available during the February 2020 traditional pulmonary didactic sessions. The 52 residents included 18 PGY1s, 19 PGY2s, and 15 PGY3s. Of these, 44/52 took the pre-test and 51/52 completed the post-test. All 51 residents who took the post-test made varying use of the supplemental online NEJM Knowledge+ tool, as captured by the percent mastery of the module (see Note 1). Table 1 is a descriptive analysis that presents means and standard deviations of the captured NEJM Knowledge+ data. Forty-two of the 52 residents took both the pre-test and the post-test. Only 1/44 (2.3%) passed the pre-test with 21 of 30 correct answers. We used a t-test to determine whether the improvement from pre-test to post-test was statistically significant. The residents (n = 42) demonstrated improvement (t = 9.97, df = 41, p < .001) from their pre-test (% correct) score (M = 46.90, SD = 15.31) to their post-test score (M = 76.29, SD = 18.43). Among the 51 residents who took the post-test (M = 74.88, SD = 17.99), the passing rate was 35/51 (68.6%). The levels of mastery achieved with the tool correlated with both the improvement from pre-test to post-test and the improvement in the passing rate for the post-test (Figure 1). The residents’ improvement in their confidence (metaknowledge metric) in their answers from pre-test to post-test correlated with their change in score from pre-test to post-test (r = −.51, p = .001) and with passing rate.
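To make the comparison above concrete, the following Python sketch shows how a paired t statistic and a passing rate could be computed from per-resident percent-correct scores. It is an illustration under stated assumptions, not the analysis actually run: the text says only that "a t-test" was used, and treating it as a paired test is an inference from df = 41 with n = 42. The function names and the five pairs of scores are hypothetical; the 70% passing mark corresponds to the 21/30 threshold given in the text.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t, df) for a paired t-test on matched pre/post scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pre/post pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


def pass_rate_percent(scores, pass_mark=70.0):
    """Percent of scores at or above the passing mark (21/30 = 70%)."""
    passed = sum(1 for s in scores if s >= pass_mark)
    return 100.0 * passed / len(scores)


# Hypothetical percent-correct scores for five residents (not study data).
pre_scores = [40, 50, 60, 30, 55]
post_scores = [70, 80, 75, 65, 90]

t_stat, df = paired_t(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {df}")
print(f"post-test pass rate = {pass_rate_percent(post_scores):.1f}%")

# The reported passing rates follow directly from the counts in the text.
print(round(100 * 1 / 44, 1), round(100 * 35 / 51, 1))
```

The p-value is omitted here because it requires the t distribution, which the Python standard library does not provide; a statistics package would normally supply it.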

Table 1. Means and standard deviations of NEJM Knowledge+ data

Figure 1. Bar graph describing the post-test performance (proportion of residents who passed the post-test with ≥21/30 questions correct) vs. percent range of NEJM Knowledge+ module mastery

3.1. Survey results

3/52 did not respond. 49/52 (94.2%) residents completed the anonymous survey with the following results: 22/49 (44.9%) would be either ‘somewhat likely’ or ‘very likely’ to recommend NEJM Knowledge+ to a colleague or friend; 23/49 (46.9%) felt that the quality of the content was either ‘good’ or ‘excellent’; 24/49 (49.0%) rated the relevance of the content of the NEJM Knowledge+ pulmonary module as ‘good’ or ‘excellent’; 36/49 (73.5%) rated the effectiveness of the learning in NEJM Knowledge+ as at least ‘somewhat effective’; and 21/49 (42.9%) rated it at least as helpful as the best of the other resources they had used. 7/46 (15.2%) did not like the question format and chose not to continue with mastery. Because the survey was anonymous, it is impossible to say whether these residents had low levels of mastery or post-test performance.

4. Discussion

The use of the NEJM Knowledge+ platform correlates with improvements in both residents’ Medical Knowledge (MK) domain and metaknowledge metrics. The MK, assessed by comparison of the pre-test and post-test performance and passing rate, correlates with the individual resident’s mastery of the 167 key points during the month of traditional didactic sessions. Since all residents participated in the traditional didactic sessions to the same degree, as assessed by attendance, this correlation implies that the absolute and relative improvement in post-test performance and meta-metric measures is likely attributable to the degree of mastery achieved.

Resident feedback revealed that many felt the platform’s functionality and content were good and would recommend it to colleagues. Many also felt that it was at least as good as any test prep program they had previously used. Whether such a tool will prove useful to them as a lifelong supplement to the domain of Practice Based Learning (PBL) and how it affects the Patient Care (PC) domain remain to be explored.

A limitation of this project is that we could not adequately demonstrate that use of the NEJM Knowledge+ platform improved ITE scores, which many would view as the most important metric. We did not find a relationship between use of the NEJM Knowledge+ platform or mastery of the pulmonology key points and the ITE pulmonology sub-scores (Supplement 1). It is difficult to assess the benefit of using NEJM Knowledge+ on the ITE because of multiple factors: (1) not all residents participated and used the platform fully; (2) the COVID-19 pandemic peaked in our hospital the week after this project finished in March 2020 and persisted up until the ITE 6 months later, which could have had a non-random effect on the residents’ mental and physical well-being; and (3) the use of the NEJM Knowledge+ platform was too distant from the date the residents completed the ITE.

Importantly, this project took place the month prior to the COVID-19 pandemic spreading into our GME training hospital. Our experiential descriptive and exploratory analysis demonstrates this platform was effective and residents provided favorable survey ratings. Since that time, affected residency programs have switched to remote didactics and many program directors have expressed interest in additional self-paced, remote platforms that may be used as a supplemental resource to help residents learn medical knowledge for patient care training and ongoing board preparation.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Supplementary material

Supplemental data for this article can be accessed here.

Notes

1 Mastery is the percentage of key learning points that the participant has correctly answered for the domain of medicine, in this case pulmonology, that they are reviewing. To show mastery of a particular key learning point, the participant needs to correctly answer one question about that key learning point and also rate their confidence in their answer as ‘I know it.’
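The mastery definition in Note 1 can be sketched in Python as follows. This is a hedged illustration only: the tuple layout, the function name `mastery_percent`, and the sample responses are hypothetical, and the sketch assumes a key learning point stays mastered once it has been answered correctly with ‘know it’ confidence. How the platform treats repeated or later incorrect answers is not described in the text.

```python
def mastery_percent(responses, total_key_points):
    """Percent of key learning points mastered.

    responses: iterable of (key_point_id, correct, confidence) tuples
               (hypothetical layout).
    A key point counts as mastered when at least one question about it
    was answered correctly AND rated 'know it' (per Note 1).
    """
    if total_key_points <= 0:
        raise ValueError("total_key_points must be positive")
    mastered = {
        key_point
        for key_point, correct, confidence in responses
        if correct and confidence == "know it"
    }
    return 100.0 * len(mastered) / total_key_points


# Made-up responses over a module with four key learning points.
responses = [
    (1, True, "know it"),    # mastered
    (2, True, "think so"),   # correct, but confidence too low
    (3, False, "know it"),   # confident, but incorrect
    (1, True, "know it"),    # repeat of an already mastered key point
    (4, True, "know it"),    # mastered
]
print(mastery_percent(responses, total_key_points=4))
```

For the pulmonology module described in the text, `total_key_points` would be 167.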

References

- American Board of Internal Medicine (ABIM). First-time taker pass rates initial certification. 2019. Available from: https://www.abim.org/~/media/ABIM%20Public/Files/pdf/statistics-data/certification-pass-rates.

- McDonald FS, Jurich D, Duhigg LM, et al. Correlations between the USMLE Step examinations, American College of Physicians In-Training Examination, and ABIM Internal Medicine Certification Examination. Acad Med. 2020;95(9):1388–1395.

- Dokmak A, Radwan A, Halpin M, et al. Design and implementation of an academic enrichment program to improve performance on the internal medicine in-training exam. Med Educ Online. 2020;25(1):1686950.

- Healy M, Petrusa E, Axelsson C, et al. An exploratory study of a novel adaptive e-learning board review product helping candidates prepare for certification examinations. MedEdPublish. 2018;7(3). DOI:10.15694/mep.2018.0000162.1

Appendix: Survey

Survey regarding NEJM Knowledge+

We want to get your feedback on how you felt about using the NEJM Knowledge+ program.

1. How likely are you to recommend NEJM Knowledge+ to a friend or colleague?

Very unlikely

Somewhat unlikely

Neither likely nor unlikely

Somewhat likely

Very likely

2. Please rate the quality of the content

poor

fair

average

good

excellent

3. Please rate the quality of the relevance of the content

poor

fair

average

good

excellent

4. How would you rate the effectiveness of the learning in NEJM Knowledge+?

extremely effective

very effective

effective

somewhat effective

not effective

5. How did NEJM Knowledge+ compare with the best of the other resources you have used? NEJM Knowledge+ was …

substantially less helpful in preparing me for the exam

a little less helpful in preparing me for the exam

about as helpful in preparing me for the exam

a little more helpful in preparing me for the exam

substantially more helpful in preparing me for the exam

6. How many of the key learning points were you able to complete for pulmonology?

0–25%

26–50%

51–75%

76–99%

100%

7. If you didn’t complete 100%, what were the reasons?

had no time due to other tasks

did not understand how the NEJM Knowledge+ tool worked

put the task on hold until later

did not like question format

I completed 100%