Credit: Monkey Business Images/Shutterstock.com.

YOU WERE PROBABLY EXPECTING to read ‘statistics’ in the title, and you are probably thinking ‘Oh no! Not that old chestnut again!’. But if you are still reading this, you might also be thinking ‘Why GCSE results? What’s going on?’.

Well, the answer is that, on average across all subjects, about 25% of GCSE grades, as originally awarded each August, are wrong. The same applies to AS and A level grades. To put that in perspective: of the (about) 6,000,000 GCSE, AS and A level grades announced for the summer 2019 exams, about 1,500,000 were wrong. So about 1 grade in 4 was, in the words of the title, ‘a lie’; and to a student who needed a particular grade to win a coveted university place, or an apprenticeship, or just for personal self-esteem, and didn’t get that grade, it was indeed ‘a damned lie’. All the more so because no one knows which specific grades are wrong, or which specific candidates are the victims of having been ‘awarded’ the wrong grade. And now that the school exam regulator Ofqual has changed the rules for appeals, deliberately to make it harder to query a result, any candidate who thinks that a wrong grade might have been awarded has a very high mountain to climb to get the script re-marked.

WHAT’S THE EVIDENCE?

‘1 grade in 4 is wrong’ is quite a claim. What’s the evidence?

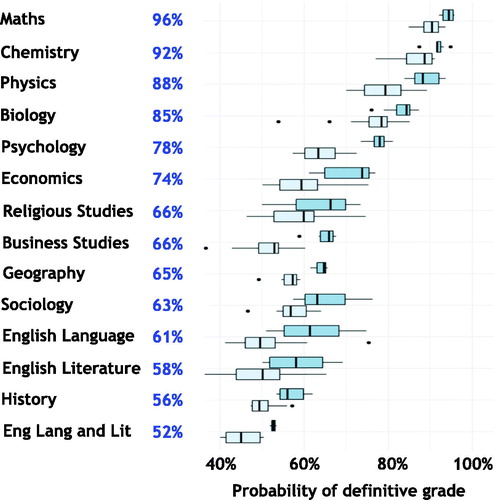

The evidence comes from Figure 12 on page 21 of a report, Marking Consistency Metrics – an update (see bit.ly/ConsistencyMetrics), published by Ofqual in November 2018 and reproduced here (with some additional narrative) as Figure 1.

This chart shows the results of an extensive study in which very large numbers of scripts for 14 subjects were each marked by an ‘ordinary’ examiner, and also by a ‘senior’ examiner, whose mark was designated ‘definitive’.

We all know, especially for essay-based subjects, that different examiners can legitimately give the same script different marks. So, suppose that an ordinary examiner gives a particular script 54 marks, and a senior examiner, 56. If grade C is all marks from 53 to 59, then both marks result in grade C. But if grade C is 50 to 54, and grade B is 55 to 59, then the ordinary examiner’s mark results in grade C, and the senior examiner’s mark results in grade B. These grades are different, and if the senior examiner’s mark (and therefore grade) is ‘definitive’ – or in every-day language, ‘right’ – then the ordinary examiner’s mark (and therefore grade) must be ‘non-definitive’, which to me means ‘wrong’.
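
The boundary arithmetic in that example can be sketched in a few lines of Python. The grade bands are the hypothetical ones from the text (grade C as 53 to 59 in the first scheme; C as 50 to 54 and B as 55 to 59 in the second). The function name `grade_for` and the choice to return `None` for marks outside every listed band are illustrative assumptions, not anything Ofqual specifies.

```python
def grade_for(mark, boundaries):
    """Return the grade whose inclusive mark band contains `mark`.

    `boundaries` maps each grade to a (low, high) pair of marks.
    Marks outside every listed band return None (an illustrative choice).
    """
    for grade, (low, high) in boundaries.items():
        if low <= mark <= high:
            return grade
    return None


# Hypothetical boundaries taken from the worked example in the text.
wide_c = {"C": (53, 59)}
split_bc = {"C": (50, 54), "B": (55, 59)}

ordinary, senior = 54, 56  # ordinary examiner's mark, senior (definitive) mark

for name, scheme in [("wide C band", wide_c), ("C/B split at 55", split_bc)]:
    g_ord = grade_for(ordinary, scheme)
    g_sen = grade_for(senior, scheme)
    verdict = "same grade" if g_ord == g_sen else "grades differ"
    print(f"{name}: ordinary {g_ord}, senior {g_sen} -> {verdict}")
```

Running it reports the same grade under the wide C band, and different grades once the C/B boundary falls at 55: the two marks have not changed, only where the boundary sits relative to them.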

That’s the explanation of Figure 1. For each of the subjects shown, the heavy vertical line within the darker-blue box marks the percentage of scripts for which the grade awarded by the ordinary examiner was the same as that awarded by the senior examiner. This percentage is therefore a measure of the average reliability of the grades awarded for that subject. So, for example, about 65% of Geography scripts were awarded the right grade and, by inference, 35% the wrong grade. And if the subject percentages shown are weighted by the corresponding numbers of candidates, the average reliability over the 14 subjects is 75% right, 25% wrong.
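
A candidate-weighted average simply counts each subject in proportion to how many candidates sat it. The sketch below shows the calculation; the two input pairs are made-up illustrative numbers, not the Ofqual subject figures, and `weighted_reliability` is a hypothetical helper name.

```python
def weighted_reliability(subjects):
    """Candidate-weighted average of per-subject grade reliability.

    `subjects` is a list of (percent_right, number_of_candidates) pairs.
    """
    total_candidates = sum(n for _, n in subjects)
    weighted_sum = sum(pct * n for pct, n in subjects)
    return weighted_sum / total_candidates


# Made-up illustrative inputs, NOT Ofqual's figures: a large subject
# graded 80% right and a smaller one graded 65% right.
example = [(80.0, 300_000), (65.0, 100_000)]
print(weighted_reliability(example))  # 76.25
```

An unweighted mean of the same two percentages would be 72.5; weighting pulls the average towards the subject taken by more candidates, which is why weighting matters when turning per-subject reliabilities into a single headline figure.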

The headline ‘1 grade in 4 is wrong’ applies to the average across only the 14 subjects studied by Ofqual, as shown in Figure 1. Some modelling, however, suggests that the reliability averaged across all examined subjects is likely to lie somewhere between 80/20 and 70/30, so 75/25 is a sensible estimate.

But whether the truth is 85/15 or 65/35 just doesn’t matter. What other process do you know with such a high failure rate? Has the examination industry not heard of Six Sigma? How many young people’s futures are irrevocably damaged when 1,500,000 wrong grades were ‘awarded’ last summer alone? And yes, grades are wrong both ways, so ‘only’ about 750,000 grades are ‘too low’, potentially damaging those candidates’ life chances. By the same token, about 750,000 grades are ‘too high’, and the corresponding candidates might be regarded as ‘lucky’. But is it ‘lucky’ to be under-qualified for the next educational programme, to struggle, perhaps to drop out, and maybe lose all self-confidence? Unreliable grades are indeed a social evil.
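
The headline numbers are simple arithmetic on round figures, and a short sketch makes it easy to see how they move across the 85/15 to 65/35 range mentioned above. It uses the article's own totals and, as the article does, assumes wrong grades split evenly between ‘too low’ and ‘too high’; `wrong_grades` is a hypothetical helper name.

```python
TOTAL_GRADES = 6_000_000  # approx. GCSE, AS and A level grades, summer 2019


def wrong_grades(total, share_wrong):
    """Expected wrong grades, split evenly into too low / too high (an assumption)."""
    wrong = total * share_wrong
    return wrong, wrong / 2, wrong / 2


for label, share in [("85/15", 0.15), ("75/25", 0.25), ("65/35", 0.35)]:
    wrong, low, high = wrong_grades(TOTAL_GRADES, share)
    print(f"{label}: {wrong:,.0f} wrong ({low:,.0f} too low, {high:,.0f} too high)")
```

At 75/25 this gives 1,500,000 wrong grades, 750,000 each way; even the most optimistic end of the range still leaves hundreds of thousands of wrong grades.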

Importantly, this unreliability is not attributable to sloppy marking. Yes, in a population of over 6 million scripts, there are bound to be some marking errors. But they are very few. Rather, grades are unreliable because of the inherent ‘fuzziness’ of marking. A physics script, for example, does not have ‘a’ mark of, say, 78; rather, that script’s mark is better represented as a range such as 78 ± 3; similarly, a history script marked 63 by a single examiner is more realistically represented as, say, 63 ± 10, where the range ± 10 for history is wider than the range ± 3 for physics since history marking is intrinsically fuzzier.
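
To make the ‘mark ± range’ idea concrete, the sketch below lists every grade that a whole mark inside the range could receive. The grade boundaries (A from 80, B from 70, C from 60, D from 50, E from 40, otherwise U) are invented for illustration and do not correspond to any real specification; the helper names are likewise hypothetical, and marks are not capped at 0 or 100.

```python
# Hypothetical grade boundaries: minimum mark for each grade, highest first.
BOUNDARIES = [(80, "A"), (70, "B"), (60, "C"), (50, "D"), (40, "E")]


def grade_from_mark(mark):
    """Grade for a single mark under the hypothetical boundaries above."""
    for minimum, grade in BOUNDARIES:
        if mark >= minimum:
            return grade
    return "U"


def grades_in_range(mark, fuzz):
    """Distinct grades reachable by any whole mark in [mark - fuzz, mark + fuzz]."""
    grades = []
    for m in range(mark - fuzz, mark + fuzz + 1):
        g = grade_from_mark(m)
        if g not in grades:
            grades.append(g)
    return grades


print("physics 78 ± 3:", grades_in_range(78, 3))    # ['B', 'A']
print("history 63 ± 10:", grades_in_range(63, 10))  # ['D', 'C', 'B']
```

Under these made-up boundaries the physics script's range straddles one boundary, while the history script's wider range straddles two, so three different grades are all consistent with its marking.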

In practice, the vast majority of scripts are marked just once, and the grade is determined directly from that single mark: the physics script receives a grade determined by the mark of 78, and the history script by the mark of 63. Fuzziness is totally ignored, and that is why grades are unreliable: the greater the subject’s fuzziness, the greater the probability that the grades resulting from the marks given by an ordinary examiner and a senior examiner will differ, and so the more unreliable that subject’s grades. Hence the sequence of subjects in Figure 1.
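
The link between fuzziness and unreliability can be illustrated with a toy simulation. This is a sketch under loudly simplified assumptions, not Ofqual's methodology: every grade is assumed to span 10 marks, the senior (definitive) mark is drawn uniformly from 20 to 79, and the ordinary examiner's mark differs from it by a whole number drawn uniformly from −fuzz to +fuzz. The function names are hypothetical.

```python
import random

BAND_WIDTH = 10  # hypothetical: every grade spans 10 marks


def grade_band(mark):
    """Index of the 10-mark grade band containing `mark` (hypothetical scheme)."""
    return mark // BAND_WIDTH


def agreement_rate(fuzz, trials=100_000, seed=1):
    """Share of simulated scripts where ordinary and senior grades agree.

    Senior (definitive) marks are uniform on 20..79, i.e. six whole bands,
    and the ordinary mark is senior + a whole number uniform on -fuzz..+fuzz.
    Both distributions are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    same = 0
    for _ in range(trials):
        senior = rng.randint(20, 79)
        ordinary = senior + rng.randint(-fuzz, fuzz)
        if grade_band(ordinary) == grade_band(senior):
            same += 1
    return same / trials


for fuzz in (0, 3, 10):
    print(f"fuzz ±{fuzz}: {agreement_rate(fuzz):.0%} of grades agree")
```

With no fuzziness every grade agrees. Under these assumptions the exact agreement rates are 58/70 (about 83%) for ±3 and 100/210 (about 48%) for ±10, so the simulated values should land close to those. The specific numbers depend entirely on the invented band width and noise model and are not meant to reproduce Figure 1; the point is only the direction of the effect.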

WHAT’S THE REACTION?

You might think that Ofqual would be working flat out to fix this, especially since the evidence, as illustrated in Figure 1, comes from their own research. Unfortunately, this is not the case. If anything, Ofqual are in ‘defensive mode’, if not outright denial.

A few days before the summer 2019 A level results were announced, The Sunday Times ran a front-page article (bit.ly/WrongResults) with the headline ‘Revealed – A level results are 48% wrong’. That headline is journalistic drama (as Figure 1 shows, only the combined A level in English Language and Literature is 52% right, 48% wrong), but the text of the article is substantially correct.

That same Sunday morning, Ofqual posted a two-paragraph announcement (see bit.ly/ResponseToGrades) under the title ‘Response to Sunday Times story about A level grades: Statement in relation to misleading story ahead of A level results’. Here is the final sentence:

‘Universities, employers and others who rely on these qualifications can be confident that this week’s results will provide a fair assessment of a student’s knowledge and skills.’

Phew! What a relief! That grade, as declared on the certificate, must be right after all! Those interpretations of the statistics must be lies, if not damned lies!

But, in the first paragraph, we read this: ‘… more than one grade could well be a legitimate reflection of a student’s performance…’

You might like to read that again. Yes. It does say ‘more than one grade could well be a legitimate reflection of a student’s performance’.

More than one grade? Really? But why is only one grade awarded? Why does only one grade appear on the candidate’s certificate? And if ‘more than one grade could well be a legitimate reflection…’, how can that other statement that ‘Universities ... can be confident that this week’s results will provide a fair assessment of a student’s knowledge and skills’ be simultaneously true?

Please read that ‘Universities, employers…’ sentence once more, very carefully. Did you notice that oh-so-innocuous indefinite article, ‘a’?

Yes, the grade on the certificate is indeed ‘a’ fair assessment of a student’s knowledge and skills. BUT NOT THE ONLY FAIR ASSESSMENT. There are others. Others that Ofqual know exist, but that are not shown on the candidate’s certificate. Others that might be higher.

Both statements in Ofqual’s announcement are therefore simultaneously true. But, to my mind, that last sentence is very misleading. And I fear deliberately so. Whoever drafted it is clearly very ‘clever’. So perhaps statisticians, or those who use (or misuse!) statistics, are not the only community to whom that cliché ‘Lies…’ might apply.

I think that this is an outrage. Do you?

Additional information

Notes on contributors

Dennis Sherwood

Dennis Sherwood, Managing Director, The Silver Bullet Machine Manufacturing Company Limited, is an independent consultant who, in 2013, was commissioned by Ofqual to compile causal loop diagrams of the systems within which Ofqual operates. Dennis has also worked closely with several stakeholder communities, particularly schools, to try to persuade Ofqual to publish relevant data (as happened in November 2018), and continues to campaign for the problem described in this article to be resolved. See Dennis’s website: https://www.silverbulletmachine.com/. Dennis featured in an interview on BBC Radio 4’s More or Less, broadcast on Friday 24th August 2019 (bit.ly/ExamClip).