Abstract

Evaluation of method performance involves the consideration of numerous factors that can contribute to error. A variety of measures of performance can be borrowed from the signal detection literature and others are drawn from statistical science. This article demonstrates the principles of performance evaluation by applying multiple measures to osteometric sorting models for paired elements run against data from known individuals. Results indicate that false positive rates are close, on average, to expected values. As assemblage size grows, the false positive rate becomes unimportant and the false negative rate becomes significant. Size disparity among the commingled individuals plays a significant role in method performance, showing that case-specific circumstances (e.g. assemblage size and size disparity) will determine method power.

Introduction

Fundamental to forensic anthropology is the notion of method performance and error rates. While all scientific endeavours seek to produce replicable results based on rigorous and reliable procedures, forensic applications of science must be transparent and explicit about sources of error and how to mitigate it. This article is an examination of the nature of error in osteometric sorting of commingled remains. We will use statistical models for sorting paired elements as examples of how method performance can be conceptualized, measured, and to some degree controlled. The proximate purpose of the article is to provide fundamental measures of method performance for select osteometric models. The ultimate purpose is to demonstrate how method performance and error should be viewed in forensic anthropology.

We wish to be explicit about the terms we are using and the purposes of the various measures of error and performance adopted. A method is any procedure, technique or planned way of performing work. A test method or test is an analytical method defined by a specific protocol which is intended to produce a specific range of responses, including guidance on how the responses are to be interpreted. The test protocol includes details such as measurements to be taken, the level of precision required and the statistical models used to evaluate data. Test methods involving statistical analysis typically have recommended cutoff values (e.g. P < 0.10) or bifurcating guidelines (e.g. male if value >0).

Osteometric sorting, as we have promoted it, uses significance tests. Other approaches such as use of likelihood ratios can be valid as well. In practice, we have tended to use a cutoff value of P < 0.10, though have been adamant that practitioners should use whatever cutoff they find appropriate given the case they are working. Byrd and Adams [Citation1] originally proposed 0.10 as a convenient cutoff for their work based on intuition and experience. An important point to bear in mind is that when using significance testing as a statistical approach, each P value obtained is to be interpreted in the context of the test method being used, including the circumstances of the case. (Note: significance tests are generally meant to support an inference informed by data, not serve as the singular finding of an experiment). How one views a P value of 0.07 in a case with two individuals commingled should not necessarily be the same as in a case with over 300 individuals commingled.

The above points notwithstanding, we need to cast osteometric sorting into a more draconian framework for the purposes of exploring error and performance rates. This means that for purposes of this study we will collapse the significance test results into a binary rule whereby any P value below the stated cutoff value is a “positive” result (rejecting the null hypothesis) and any P value above the cutoff value is a “negative” result (failing to reject the null hypothesis). For osteometric sorting, we will view the rejection of an association as positive (segregate them because they are disparate sizes and should not be from the same individual) and the failure to reject as negative (cannot segregate, but this does not preclude that they are from separate individuals). This exercise makes possible the employment of a battery of measures of performance, most of which are borrowed from the signal-detection world. One should bear in mind that collapsing significance tests into this format is a heuristic device meant to explore performance issues.

The following signal-detection related measures (as in [Citation2]) will be used in this article to evaluate osteometric sorting tests for paired elements:

True positive rate (TPR) – Rate of obtaining a positive test result when the bones are known to be from separate individuals.

False positive rate (FPR) – Rate of obtaining a positive test result when the bones are known to be from the same individual.

True negative rate (TNR) – Rate of obtaining a negative test result when the bones are known to be from the same individual.

False negative rate (FNR) – Rate of obtaining a negative result when the bones are known to be from different individuals. (Note: osteometric sorting is not by design able to segregate bones from individuals of approximately the same size since the models focus generically on bone size).

Prevalence (P) – The proportion of comparisons of bones known to be from different individuals.

Level of test (Q) – The proportion of comparisons that produce a positive result (Q = TP + FP).

Sensitivity (SE) – The proportion of comparisons that are found to be TP out of all comparisons of bones known to come from different individuals (TP/P).

Specificity (SP) – The proportion of comparisons that are found to be TN out of all the comparisons of bones known to come from the same individual (TN/(1-P)).

Positive predictive value (PPV) – The proportion of comparisons that are found to be TP out of all comparisons that produced a positive test result (TP/Q).

Negative predictive value (NPV) – The proportion of comparisons that are found to be TN out of all comparisons that produced a negative test result (TN/(1-Q)).

Efficiency (EFF) – The overall correct classification rate, as in the number of TP and TN results divided by the overall number of comparisons. The overall error rate is 1 – Efficiency.

Sensitivity Quality Index (κ(1,0)) – A measure of the sensitivity in the context of the level of the test ((SE-Q)/(1-Q). This statistic takes into account the fact that if the level of the test is 99%, and the SE is 99%, that is not a very useful test since by calling every result positive, you guarantee high sensitivity and ignore a great many FPs.

Specificity Quality Index (κ(0,1)) – A measure of the specificity in the context of the level of the test ((SP-(1-Q))/Q). Like the κ(1,0), this statistic evaluates specificity against the level of test to guard against inflated specificity driven only by the design of the test (as in a level of test being 0.01 and nearly all comparisons are necessarily found to be negative, producing an inflated SP value).

In addition, we will explore the concept of the false discovery rate (FDR) in the context of osteometric sorting as described by Sorić [Citation3], defined as below:

FDR (Qactual) – The observed proportion of the comparisons with a positive result that are not TP (in other words, 1-PPV). This proportion is derived in validation studies where the correct answer is known through independent means.

Maximum FDR (Qmax) – The projected maximum number of positive results that are FP. This is an estimate relying upon model assumptions combined with observed results.

Methods

The results from four different studies, each using different reference databases, are examined in light of the various measures of error and performance described above. The central study examined is that reported in Byrd and LeGarde [Citation4], focusing on the models for paired elements. This study utilized the reference data described in the paper which was compiled at the Defense POW/MIA Accounting Agency (DPAA) Laboratory for the purpose of building general models for osteometric sorting. The second study was performed by LeGarde as part of her Master of Arts thesis and combined an independent sample from the Bass Collection at the University of Tennessee, Knoxville with the original DPAA Laboratory data [Citation5]. The third study was performed by LeGarde and included an independent collection of data from Chiba University in Japan. These data were used as an independent check on the original Byrd and LeGarde models in this article. Finally, a fourth study was reported by Vickers et al. [Citation6] and utilized data from the Forensic Databank of the University of Tennessee, Knoxville. (Note: we declined to consider Vickers et al. [Citation6] treatment of archaeological data since it did not involve known individuals.) The results from all four studies permit the assessment of the FPRs when testing the Byrd and LeGarde models against the various reference data. FPRs from the four studies are provided in . The results for Byrd and LeGarde studies were calculated from the respective databases in Microsoft Excel. The Vickers et al. [Citation6] results were extracted from their original publication and pertain only to the Forensic Databank. Along with the FPR for each model is given the significance level for a binomial test comparing the FPR to the expected result of 0.10 (since the cutoff value used was P = 0.10, we test the null hypothesis that the FPR = 0.10). Finally, the mean FPR for the group of results is provided along with the probability that at least one of the P values should be significant at the 0.05 level (calculated as given in the table footnote).

Table 1. False positive results from the study of Vickers et al. [Citation6] and application of performance metrics of Byrd models.

Table 2. False positive results from the study of Byrd and LeGarde [Citation4] and application of performance metrics of Byrd models.

Table 3. False positive results from the study of LeGarde [Citation5] and application of performance metrics of Byrd models.

Table 4. False positive results from the study of LeGarde [Citation5] and application of performance metrics of Byrd models.

None of these studies permit examination of the more interesting question as to the overall performance of the models. This assessment requires one to measure the test accuracy when bones known not to be associated (i.e. from the same individual) are compared along with the accuracy for those that are associated. To facilitate this fuller assessment, bone data from the DPAA Laboratory database were randomly commingled and compared to one another. The procedure for random pairing was 1) assign a number to each individual, 2) pair right and left bones for comparison by assigning random numbers for the left and right bones to be compared 1 000 times, 3) delete all instances of two bones from the same individual since results concerning performance against known matches already existed. For easy reference in this article, this exercise will be referred to as the “Byrd study”. The t-tests using the Byrd and LeGarde models were performed on the random pairings. All of these steps were performed in Microsoft Excel. The simulated comparisons of bones from different individuals were combined with the comparisons from the same individuals to produce a more complete suite of performance metrics in . The metrics in were calculated in Microsoft Excel according to the definitions provided above.

While it is clear that performance metrics for correctly segregating bones depend greatly upon the size disparity of the individuals to be compared, it is nonetheless interesting to project performance in future applications under the assumption that size disparity will be effectively the same as seen in the DPAA Laboratory data. This is not a “safe assumption”. One issue to consider is that DPAA data include a sizable representation of healthy, young adult males. However, this exercise provides the reader a baseline against which to base expectations in casework. To the extent that the data used here reflect a variety of body sizes and the simulation described above utilized random pairings, the FPRs and FNR’s can be considered “average”. provides expected PPVs and NPVs for commingled assemblages as small as 2 and as large as 400 individuals for the Byrd and LeGarde models that include the total lengths. All calculations rely on the FPR and NPR from the Byrd and LeGarde data resulting from the simulation described above. Calculations were performed in Microsoft Excel.

Table 5. Projections for future performance given performance metrics reported above for humerus, radius, and ulna (including TL).

Results

show the FPRs for the various studies. While the FPRs for individual tests vary between 0.02 and 0.20, the mean FPRs are all 0.10 – the expected value – except for the LeGarde Chiba study. LeGarde conducted this study using the Byrd and LeGarde models and summary statistics and then also ran the tests using the mean and standard deviations from that skeletal group (all Japanese). (Recall that Byrd and LeGarde provided a standard deviation for each model that tests the null hypothesis as deviation from “0”). When she substituted the new summary statistics into the models she saw a dramatic drop in error rates, ranging from 0.02 to 0.09. On the whole, the results follow what one expects when statistical models are developed from one sample and then applied to others: variation in detailed outcomes but show a mean error close to the expected value. Even the results reported by Vickers et al. [Citation6] show a mean error of 0.10. It is comforting to see the mean FPR close to or equal to 0.10 in the studies, but troubling to see that radius and ulna models that include the total length measurements perform consistently worse than expected (more on this issue later in the article).

go beyond the FPR and looks at the larger suite of performance metrics. Specificity can be provided for all of the studies and the values range from 0.80 to 0.96. The values vary for the different models in each study and there appear to be no striking differences between studies. Owing to the simulation through random pairings, the Byrd study shows all of the performance metrics. These are discussed further below.

provides what might be considered projections of future performance under conditions where the size disparities of the commingled individuals are similar to the DPAA reference data. These projections are estimated using the FPRs and NPRs captured in the results shown in . Only the models for complete bones (models include total lengths) were used in this exercise. This exercise is intended to be illustrative of key concepts, not a reliable predictor of future error rates in any particular case (see below). The obvious pattern to observe in the results is the diminishing values of NPV as the assemblage size grows beyond five commingled individuals. At more than six commingled individuals it is more likely than not that the bones are from different persons even when the test result is to accept the null hypothesis. We expect this pattern to always characterize osteometric sorting, even if the specific values of the NPV will vary from case to case due to varying size disparities of the commingled individuals. This is why all of our papers state emphatically that more confidence is to be placed in exclusions (results that indicate rejection) than the reverse. In larger assemblages, the failure to reject an association does not imply the bones must originate in the same person. Rather, it only indicates the bones originated from one person OR two persons of a similar size. Osteometric sorting is a method of exclusion when considered in isolation from other evidence.

Discussion

The performance of osteometric pair-matching is influenced deeply by two factors: the size of the commingled assemblage and the size disparity among the commingled individuals. These two factors will vary from case to case. Thus, the power of the method will always be case-specific. Other factors, such as choice of statistical model (e.g. significance testing versus likelihood ratio approach) pale next to these two factors in determining overall performance. illustrates the effect of size disparity by showing the FNR from comparisons of bones from separate individuals who were 4, 6 and 8 inches different in stature, respectively (using P = 0.10 as cutoff). This shows that individuals four or more inches apart correctly sorted apart >94% of the time. When these levels of size disparity are found in small (<6 individuals) assemblages, we should expect to achieve a high degree of accurate sorting using size alone. When assemblages are large (N> 6) and the size distribution of the commingled individuals is similar to that seen in the reference data used to calculate the statistical models, then we expect the error rates to fall on average very close to the expected error rates projected by the statistical model (e.g. observed error rate of 10% when using a cutoff value of P = 0.10). To the extent that the reference data we have used to calculate models is “average” in terms of size distribution, then the error rates projected by the statistical models are expected to be observed in many cases.

Table 6. Showing the effects of difference in body size (as represented by stature) on test performance.

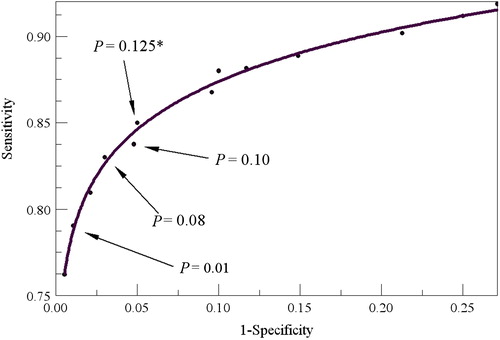

It is clear that understanding the performance of a test method is far more complicated than the simplistic view espoused in Vickers et al. [Citation6]. The most grievous shortcoming of that study is the limitation of error consideration to only the FP rates. Application of osteometric sorting in casework necessitates concern for the fuller suite of performance metrics presented in the tables above. What we see, for example, in is that the PPV quickly rises to 1.0 as the size of the commingled assemblage gets larger. We also understand that case size disparity greater than that seen in the reference data used to calculate the statistical models will lead to better than projected performance. Thus, there is no good reason to optimize the test method to minimize the FP rate while ignoring other factors. Indeed, application of the cutoff values recommended by Vickers et al. [Citation6] is expected to yield an overall poor performance as shown in . One way to view the optimal cutoff value is through receiver operating characteristic (ROC) curves [Citation2]. shows a ROC curve for the humerus model (including total length). Given that the “ideal” or “optimal” cutoff value is the one whose performance levels plot closest to the upper left corner, it appears that P = 0.125 would be the best choice for cutoff. The solution recommended by Vickers et al. [Citation6] is off the chart on the lower left corner and is clearly not optimal by this standard. While it is true that the risk of an FP is fully mitigated by the Vickers et al. [Citation6] approach, it is done at unreasonable cost to overall method performance. We do not recommend this approach. As a final note on their study, we observed high error rates with the radius and ulna that are consistent with what Vickers et al. [Citation6] reported for the Forensic Databank. It appears that there is systematic error for these elements that relates to varying degrees of curvature of the bones of the forearm. This topic is worthy of further study.

Figure 1. Receiver operating characteristic (ROC) curve for the humerus paired element model (with total length) with various P value cutoffs identified. The optimal value is P= 0.125.

Table 7. Performance metrics for Vickers et al. [Citation6] recommended approach applied to Byrd data.

Another interesting study by Lynch et al. [Citation7] demonstrates the effects of assemblage size on the FPR. Lynch developed computer simulations of comparisons by, for example, conducting t-tests of all possible pairwise associations of paired elements in the Forensic Databank sample. Using the Byrd and LeGarde [Citation4] models, he made approximately 100 000 tests for each element. The FPRs, calculated as a proportion of all comparisons (not just comparisons where the bones were from the same individual), was less than 0.1% in every instance.

Conclusion

The evaluation of method performance does not involve a single measure. Rather, one must consider a variety of factors that can be measured and/or assessed using results from validation studies. Application of the methods to known subjects where the correctness of the conclusions is independently determined provides a basis for understanding the various types of errors that can be encountered and how they might be mitigated. Osteometric sorting lends itself to such evaluation because the models can be turned back onto reference data comprised of known individuals and applied to independent samples. What is apparent in the results examined here is that the greatest determinant of the method’s performance will be the nature of the case it is applied to. The method(s) is at its best when the assemblage size is small and the size disparity of the commingled individuals is high. We expect that the performance over many cases to approximate what the statistical models project, combined with the assemblage size factors discussed above. We leave it to each person applying the methods to choose their optimal cutoff given the circumstances of the case.

Compliance with ethical standards

This article does not contain any studies with human participants or animals performed by any of the authors.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Byrd JE, Adams BJ. Osteometric sorting of commingled human remains. J Forensic Sci. 2003;48:717–724.

- Kraemer HC. Evaluating medical tests: objective and quantitative guidelines. Newbury Park (CA): Sage Publications; 1992.

- Sorić B. Statistical “discoveries” and effect-size estimation. J Am Stat Assoc. 1989;84:608–610.

- Byrd JE, LeGarde CB. Osteometric sorting. In: Adams BJ, Byrd JE, editors. Commingled human remains: methods in recovery, analysis, and identification. San Diego (CA): Academic Press; 2014. p.167–191.

- LeGarde CB. Asymmetry of the humerus: the influence of handedness on the deltoid tuberosity and possible implications for osteometric sorting [master thesis]. Missoula (MT): The University of Montana; 2012.

- Vickers S, Lubinski PM, DeLeon LH, et al. Proposed method for predicting pair matching of skeletal elements allows too many false rejections. J Forensic Sci. 2015;60:102–106.

- Lynch JJ, Byrd J, LeGarde CB. The power of exclusion using automated osteometric sorting: pair-matching. J Forensic Sci. 2018;63:371–380.