ABSTRACT

One challenge in medical education is the inability to compare and aggregate outcomes data across continuing educational activities due to variations in evaluation tools, data collection approaches and reporting. To address this challenge, Gilead collaborated with CE Outcomes to develop, pilot, and implement a standardized outcomes evaluation across Gilead-directed medical education activities around the world. Development of the standardized tool occurred during late 2018, with Gilead stakeholders invited to provide input on the questions and structure of the evaluation form. Once input was captured, a draft evaluation tool was developed and circulated for feedback. Questions were created to collect 1) participant demographic characteristics, 2) data on planned changes to practice, key learnings and anticipated barriers, and 3) learner satisfaction with content and perceived achievement of learning objectives. The evaluation tool was piloted in H1 2019 across 7 medical education activities. Revisions based on pilot feedback were incorporated. The evaluation tool was broadly released during H2 2019 and data were collected from over 30 educational activities. By the end of 2019, it was possible to compare outcomes results from individual activities and aggregate data to demonstrate overall educational reach and impact. Continuing education activities provide valuable up-to-date information to clinicians with the goal of improving patient care. Although highlighting the impact of education is often challenging due to variations in outcomes, this standardized approach establishes a method to collect meaningful outcomes data that demonstrates the collective impact of continuing education and allows for comparison across individual activities.

Introduction

Overview

One of the foremost challenges supporters of continuing medical education (CME) and continuing professional development (CPD) face is the inability to compare and aggregate outcomes across supported activities. From divergent learning objectives to activities assessed with unique questions and individualised measurement scales, there are numerous factors contributing to the challenge of comparing and aggregating outcomes data. As outcomes data associated with CME/CPD activities have continued to develop and expand over the past two decades, there have been few attempts to standardise outcomes evaluation items. One of the recent attempts at outcomes standardisation has faced numerous challenges as outlined in the article by McGowan et al. [Citation1].

To address the issue of standardisation, Gilead Sciences collaborated with CE Outcomes on the development of a standardised outcomes evaluation tool, with the goal of implementing the tool across Gilead Sciences-supported educational activities around the world. The following criteria for the standardised outcomes evaluation tool were established at the initiation of the project:

The tool must be appropriate for different educational formats and therapeutic topics.

The tool must not create a burden on the learner.

The tool must collect meaningful educational outcomes data that allows for comparison between individual educational activities and aggregation of data across educational efforts.

The tool must meet the expectations of Gilead stakeholders around the world to provide meaningful outcomes data that is presented consistently across programmes and is easy to create and to understand.

Main Text

Developing a Standardised Assessment Tool

One of the issues with standardisation of outcomes is agreement on the types of questions that should be included. To gain consensus and aid the adoption of a standardised tool, it was critical to gather input from stakeholders within Gilead. To accomplish this, a team of project champions within Gilead's Global Medical Affairs group was identified to lead the effort.

With the intention of gaining input from as many Gilead stakeholders as possible around the world, CE Outcomes implemented a multi-phase requirements-gathering approach to define the elements of the standardised evaluation tool. Initial communication regarding the overall goals of the initiative was conducted via email, and stakeholders were invited to participate in one of a series of introductory informational conference calls. Stakeholders were briefed on project objectives and were invited to provide opinions and ideas and to contribute to defining the elements of the standard evaluation tool.

Invitations to provide input were sent to all identified stakeholders, approximately 200 individuals engaged in medical education and scientific information globally. Stakeholders were located across time zones around the world, which made synchronous communication, such as a focus group, impractical. To overcome the geographical challenge, CE Outcomes implemented a 2-step asynchronous feedback session on an online platform, using a modified Delphi technique [Citation2,Citation3] to gain consensus. The structured format of these sessions helped promote equal involvement of participants and controlled for the extraneous and evaluative discussion that can occur during focus group sessions.

Stakeholders were invited to take part in the first phase, which asked participants to respond to the following open-ended questions, made available through an online survey platform accessible via computer and smartphone:

Please list the items that you consider important for the standard tool to gather.

Are there any elements that you have seen in other evaluation tools that you do NOT want this tool to gather?

What is the maximum length of time (in minutes) it should take the learner to complete the evaluation?

What technical considerations or limitations for the tool should be considered (example: limitations of open-ended question due to differing audience response or survey platforms)?

What barriers or challenges can you foresee with implementing a collaborative medical education evaluation tool?

What can help make implementation of the collaborative medical education evaluation tool successful?

The questions were fielded in December 2018 and responses were gathered over the course of a 1-week period. A total of 128 individual responses were received for this first phase of the requirements gathering. These were compiled, aggregated, and organised into themes or categories representing the collected responses.

In the second phase of requirements gathering, all stakeholders were asked to prioritise the themed responses to the questions from the first phase. Stakeholders were asked to select their top three responses from the coded list of themed responses for each question and then asked to rank their top three selections from most important to least important. Respondents were given one week during January 2019 to respond and a total of 156 responses were received.
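The article does not state how the ranked selections were combined into an overall priority order. As a minimal sketch only, the following assumes a simple weighted tally (3 points for a first-place rank, 2 for second, 1 for third); the weights, the function name `prioritise_themes` and the example ballots are hypothetical and are not the study's method or data.

```python
from collections import defaultdict

# Assumed weights (not from the article): 3 points for the top-ranked
# theme on a ballot, 2 for the second, 1 for the third.
RANK_WEIGHTS = (3, 2, 1)


def prioritise_themes(ballots):
    """Return (theme, score) pairs sorted by weighted score, highest first.

    Each ballot is a list of up to three theme labels, ordered from most
    to least important by one respondent.
    """
    scores = defaultdict(int)
    for ballot in ballots:
        for weight, theme in zip(RANK_WEIGHTS, ballot):
            scores[theme] += weight
    # Sort by descending score, then alphabetically so ties are deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Illustrative ballots only; these are not responses from the study.
example_ballots = [
    ["planned practice change", "satisfaction", "barriers"],
    ["satisfaction", "planned practice change", "demographics"],
    ["planned practice change", "barriers", "satisfaction"],
]

ranked = prioritise_themes(example_ballots)
for theme, score in ranked:
    print(f"{theme}: {score}")
```

A weighted tally of this kind rewards themes that many respondents place near the top; other schemes, such as counting only first-place selections, would be equally compatible with the process described.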

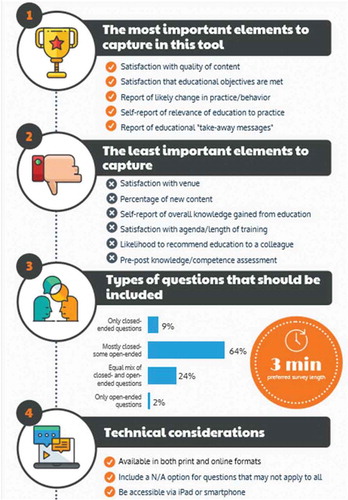

The prioritised data requirements results were consolidated and presented back to the stakeholders. Communicating the results back to the stakeholders provided context for them to understand where their own ideas aligned with or diverged from those of other stakeholders. Figure 1 demonstrates the key results from the data requirements gathering session.

Following the requirements gathering phase, CE Outcomes developed a draft of the standard evaluation tool. The draft was provided to stakeholders for comment during a 2-week period in February 2019. Feedback was collated and revisions were made where appropriate to accommodate suggested edits. The two-page standardised evaluation and its questions were designed to accommodate the suggested timeframe of no more than 3 minutes for educational participants to complete and included a mixture of open- and closed-ended questions. The questions were designed to capture learner satisfaction with the content and achievement of learning objectives, anticipated barriers to implementing the educational objectives, and impact of education on practice, including key learnings and planned improvements to practice.

From Pilot to Adoption

To pilot test the standard evaluation tool, six continuing education activities occurring between March and May 2019 were identified. All stakeholders taking part in the pilot were asked to participate in a web-based training session to review the evaluation form and to address any questions or concerns about implementing the tool within their educational activities. The pilot provided the opportunity to collect evaluation data across the activities and to identify any technical and data challenges that might be encountered in broad dissemination of the standardised tool. Upon completion of the pilot, a data analysis plan and summary reporting template were created and provided with the evaluation tool to ensure that data from the form were consistently collected, analysed and reported. Additional refinements were made to the questions in the standard evaluation tool following the pilot, and by July 2019 the tool was ready to be disseminated across the company for use in educational activities supported by Gilead outside of the US.

To help facilitate wide adoption, it was essential to ensure that all parties involved recognised the value of collecting outcomes data in a format different from what was previously used. A network of 20 country-level and regional medical education champions within Gilead was identified to help promote adoption of the standardised evaluation tool. The champions participated in training and were equipped with resources and templates to support implementation. In addition, calls were regularly scheduled to review any concerns or feedback related to the standard evaluation form and to the process of implementation.

Results

Standardised Outcomes Data

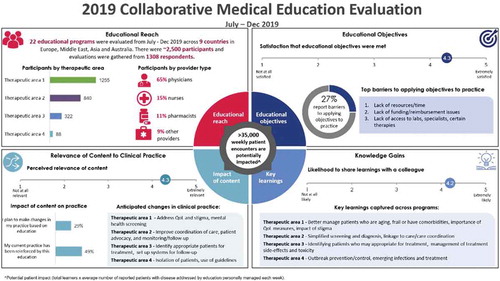

A total of 32 educational activities utilised the standard evaluation tool between July and December 2019. To ensure that the data reporting for each activity was consistent, a standard reporting template was developed. Each educational activity that utilised the standard tool received a templated report of outcomes data for the activity. In addition to the individual activity reports, a database was created to store evaluation responses from each activity. The database allows aggregated reporting across educational activities by therapeutic area, region, or even country. A deidentified example of a standard report is shown in Figure 2.

It is anticipated that the standard evaluation tool will be utilised widely across Gilead educational activities occurring during 2020 and 2021. The data collected will provide Gilead the ability to demonstrate how continuing educational efforts are meeting clinician needs. Further, the data will allow Gilead to assess whether adjustments to educational planning are needed to ensure future educational activities are most effective and efficient. An abridged questionnaire that was adapted from the original survey form was also developed to facilitate outcomes evaluation for digital/online education programmes.
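The aggregated reporting by therapeutic area, region or country described above can be illustrated with a minimal sketch. Because every activity collects the same items, responses can be pooled and grouped by any shared attribute. The field names, the 1 to 5 satisfaction scale, the function name `aggregate` and all values below are hypothetical assumptions for illustration; the article does not describe the structure of the actual database.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records, one per completed evaluation. Field names, the
# assumed 1-5 satisfaction scale and all values are illustrative only.
responses = [
    {"activity": "A1", "region": "Region 1", "therapeutic_area": "Area X",
     "satisfaction": 5, "plans_change": True},
    {"activity": "A1", "region": "Region 1", "therapeutic_area": "Area X",
     "satisfaction": 4, "plans_change": False},
    {"activity": "A2", "region": "Region 2", "therapeutic_area": "Area X",
     "satisfaction": 3, "plans_change": True},
    {"activity": "A3", "region": "Region 2", "therapeutic_area": "Area Y",
     "satisfaction": 4, "plans_change": True},
]


def aggregate(rows, key):
    """Summarise standardised responses grouped by the given field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)

    summary = {}
    for value, members in groups.items():
        planning = sum(1 for r in members if r["plans_change"])
        summary[value] = {
            "responses": len(members),
            "mean_satisfaction": round(mean(r["satisfaction"] for r in members), 2),
            "pct_planning_change": round(100 * planning / len(members), 1),
        }
    return summary


by_region = aggregate(responses, "region")
by_area = aggregate(responses, "therapeutic_area")
by_activity = aggregate(responses, "activity")
```

The same function serves both uses named in the article: grouping by `activity` supports comparison between individual activities, while grouping by a broader field pools responses across them.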

Discussion

Implications/Conclusion

Continuing education activities provide valuable up-to-date information to clinicians with the goal of improving patient care. Historically, comparing outcomes from activity to activity or aggregating outcomes data across activities has been a sizeable challenge. This study demonstrates an approach to developing and implementing a standardised outcomes evaluation with the goal of comparing and aggregating outcomes data. Key factors to the success of the implementation of the standardised outcomes evaluation included ensuring opportunities for all stakeholders to provide input and identifying internal champions to help facilitate the adoption of the standardised tool. As data from the standardised tool continue to be collected from educational activities occurring around the world, the data will provide further value in demonstrating the impact of education and examining trends over time.

Disclosure Statement

No potential conflict of interest was reported by the authors.

References

- McGowan BS, Mandarakas A, McGuinness S, et al. Outcomes Standardisation Project (OSP) for Continuing Medical Education (CE/CME) professionals: background, methods, and initial terms and definitions. J Eur CME. 2020;9:1.

- Delbecq AL, Van de Ven AH, Gustafson DH. Group techniques for program planning: a guide to nominal group and delphi processes. Glenview, IL: Scott Foresman; 1975.

- Shewchuk R, Schmidt HJ, Benarous A, et al. A standardized approach to assessing physician expectations and perceptions of continuing medical education. J Contin Educ Health Prof. 2007;27(3):1–5.