Abstract

Given the centrality of news media to democracy, it is concerning that public trust in media has declined in many countries. One potential mechanism for reversing this trend is independent fact-checking to adjudicate competing claims in news stories. We undertake a survey experiment on a sample of 1608 Australians to test the effects of fact-checking on media trust, using a real-life case study known as the “sports rorts” affair. We construct duplicate news articles from two national media outlets (ABC.net.au and news.com.au) containing a senior government minister’s real-life false claim that public funds were not used for political advantage immediately before an election. Half of the participants are exposed to a third-party fact-check, which confirms that the Minister’s claim is verifiably false; the other half are not. All respondents are asked to evaluate the trustworthiness of the story and the news outlet. Contrary to our expectations, we find a backfire effect whereby independent fact-checking decreases readers’ trust in the original news story and outlet. This negative relationship is not conditional on partisanship or the media source. Our study provides a cautionary tale for those expecting third-party fact-checks to increase media trust, and we outline several avenues by which fact-checkers might overcome this.

Introduction

News media plays an essential role in holding politicians and those with power to account. Such critical efforts inform public discourse so that citizens can meaningfully participate in democratic processes (Schudson 2008). Yet studies show that public trust in the media has fallen in some democracies (Edelman 2020; Newman and Fletcher 2017). This is concerning given the media’s normative role in providing accurate information to citizens. Existing research points to a number of reasons for this decline, including rising political polarization (Hetherington and Rudolph 2015) and corrosive discourse from political leaders who seek to delegitimize the mainstream press by attacking potentially damaging coverage as “fake news” (Van Duyn and Collier 2019; Farhall et al. 2019). Against this backdrop of falling media trust, legacy media is also under financial pressure: reduced revenue has prompted cost-cutting, leaving fewer professional journalists and fewer editorial resources for sub-editing and internal fact-checking (Carson 2020, 46).

The quality of public discourse is further complicated by the prevalence of online misinformation and disinformation. While misinformation is not new, the globalization of the Internet and the rise of digital technologies have accelerated the speed, scope and spread of falsehoods online (Giusti and Piras 2021, 2). Australian news consumers, like news audiences elsewhere, increasingly get their news online, where credible information exists alongside misinformation (Fisher 2019). Repeated surveys show that most Australians are worried about encountering fake news on the Internet and about their capacity to distinguish fact from fiction, particularly in relation to political content (Murphy 2020; Fisher 2019). In this environment of widespread misinformation, declining media trust and reader uncertainty about what is true, it is essential to explore mechanisms that improve trust in credible news sources.

One response that scholars have explored is the use of active journalistic adjudication to improve public perceptions of news quality. Adjudication is an active style of reporting in which reporters check factual claims, weigh evidence, judge the accuracy of competing accounts and, ultimately, share their findings with the reader (Pingree, Brossard, and McLeod 2014). Taking this approach, journalists serve a critical function in democratic accountability. On the other hand, lingering professional norms of objectivity can discourage journalists from actively adjudicating verifiable claims (Jamieson and Waldman 2003, 22). Fact-checking can also have an adverse “backfire effect” by making the journalist appear partial, which can undermine trust in the accuracy of information or, in some cases, increase belief in the falsehood under review (Nyhan and Reifler 2010; Nyhan, Reifler, and Ubel 2013). Thus, existing scholarship suggests that under certain conditions fact-checking may undermine readers’ trust in news reporting.

Third-party fact-checking is another global response to dubious claims in news stories, and may be a means to increase media trust and use (Pingree et al. 2018, 4). The number of fact-checking units around the world has almost quadrupled since 2014 (Stencel and Luther 2019), with nearly 300 now operating worldwide (Fischer 2020). Despite this rise, a surprisingly limited number of studies have examined the links between third-party fact-checks and media trust, and those that do report mixed findings (Amazeen et al. 2018; Graves 2016; 2018; Graves and Anderson 2020; Wintersieck 2017). A better understanding of the role that conditioning factors such as media source and partisanship play in trust in news may help explain these divergent findings. It is this gap in the literature that this study addresses.

Australia provides a powerful case study for this inquiry, as mainstream Australian journalists often employ “he said/she said” reporting in general news coverage rather than actively adjudicating opposing parties’ claims to expose false statements. This is not to say that all news reports fit this model, but rather that it is a common form of Australian political reporting. In such instances, third-party fact-checking has emerged to arbitrate specific political statements within a news story. Conceivably, third-party fact-checking may increase media trust because fact-checked claims are one step removed from the media source itself, thus avoiding the documented backfire effect. This scenario provides a clear experimental design through which to test whether third-party fact-checking of a politician’s claims has broader associations with media trust.

We address this aim by using a realistic survey experiment on a broadly representative sample of over 1100 Australians (detailed below) to test whether third-party fact-checking of political claims can increase citizens’ trust in news, and whether such an effect is conditional on partisanship and media source.

This article proceeds as follows: we discuss key literature on media trust, journalistic adjudication, fact-checking and backfire effects that inform this study and its research design. We outline the method and the “sports rorts” case study used in our experiment and detail our findings. Then, we discuss the implications of our findings for journalistic adjudication, and conclude with recommendations for future practice of third-party fact-checking.

Conceptualizing Media Trust

From a democratic perspective, citizens require some level of trust that the news they read is fair and accurate if they are to take media reports of political wrongdoing seriously. As Strömbäck and colleagues (2020, 139) observe, “even a perfectly informative news media environment is of little democratic use if citizens … do not trust the news.” Yet various studies find that media trust has fallen in many established democracies (Ardèvol-Abreu and Gil de Zúñiga 2017; Robinson 2019; Tsfati and Ariely 2014), including comparable Anglo-American democracies such as the US (Mourão et al. 2018, 1946), the UK (Fletcher and Park 2017), Canada (Hanitzsch 2012; Hanitzsch, van Dalen, and Steindl 2018) and Australia (Fisher 2019).

Traditional mainstream media outlets typically enjoy higher levels of public trust than social media (Edelman 2020, 59). As a general concept, media trust is also related to trust in other public institutions such as government, non-government organizations and business (van Dalen 2019; Edelman 2020). All four institutions have generally recorded lower levels of public trust in many democratic countries since 2000 (Edelman 2020, 5). van Dalen (2019, 358) observes that trust in the press involves not only trusting the media to provide reliable information but also trusting it to fulfill a broader societal role and hold other public institutions to account.

“Trust” and “credibility” are often used interchangeably in media studies along with other terms such as “trustworthiness”, “believability”, “accuracy”, “fairness”, and “reliability” (Fisher 2016). The wide-ranging field of media credibility scholarship demonstrates that public distinctions between trust and credibility can blur (Fisher 2016; Engelke, Hase, and Wintterlin 2019, 70). Consequently, seemingly simple questions about whether news audiences “trust” the media can be complicated by conceptual differences (Strömbäck et al. 2020). Thus, the operationalization of trust can vary from survey to survey.

Strömbäck and colleagues (2020) have proposed a framework for examining media trust at different levels of analysis. They argue for a focus on “trust in the information coming from news media rather than on media as institutions or organizations” (Strömbäck et al. 2020, 152). We heed the multidimensionality of media trust in our experimental design by directing respondents to evaluate the trustworthiness, fairness and credibility of the provided news story. In doing so, we account for the divergent operationalizations of trust noted above by narrowing participants’ assessment of trust to the specific news story they read. We directly test the consequences for trust in a news story in which a political claim is not adjudicated by the journalist within the story but is instead subject to third-party fact-checking.

Types of Journalistic Adjudication

Journalistic adjudication can take different forms, including passive adjudication. In this form, the journalist provides competing accounts from key actors in the story, also known as “he said/she said” reporting (Pingree, Brossard, and McLeod 2014). In some cases, the news story may contain signposts to the reader, using words such as “claims” and “alleges”, but the report ultimately leaves readers to judge for themselves where the truth lies. Conversely, active journalistic adjudication is overt about the veracity of claims in the story and thus positions the journalist “as judge in the jury trial of public opinion, as opposed to a mere stenographer” (Pingree, Hill, and McLeod 2013, 196). A third form is external adjudication, in which claims in a news story are independently checked and rated for their degree of truthfulness by a third-party fact-checker, as discussed below.

However, in some places journalism remains reluctant to actively adjudicate disputes in stories, particularly political ones (Lawrence and Schafer 2012; Pingree, Brossard, and McLeod 2014). One reason is that some disagreements are considered inherently subjective, with no clear truth to adjudicate. Even where claims rest on verifiable facts, journalists may shy away from seeking additional sources and doing their own analysis to bring readers new evidence that contradicts a political claim, because they may lack the time, resources or specialist knowledge to undertake this work (Pingree, Brossard, and McLeod 2014, 617). As such, “he said/she said” reporting can be cheaper to produce and better suited to the digital age’s insatiable news cycle, which privileges speed over accuracy. Journalists and their editors may also be risk averse, not wanting to expose their organizations to accusations of political bias, with repercussions such as potentially losing audience share, audience trust and access to elite information sources (Pingree, Brossard, and McLeod 2014; Flew et al. 2020). At an institutional level, studies find that perceived political bias contributes to audience distrust and that, under some circumstances, audiences expect neutrality and impartiality from journalists (Ojala 2021).

On the other hand, scholarship also finds benefits in journalists’ direct adjudication of claims in their stories. When journalists actively adjudicate false claims in their own stories, it can benefit both the audience and the news source. Studies find that direct journalistic adjudication can enhance audience perceptions of news quality and increase the likelihood that news consumers will return to that media source (Pingree, Brossard, and McLeod 2014, 631). In some cases, readers also become more confident in their own ability to grasp the truth behind political issues, a confidence known as epistemic political efficacy (EPE) (Pingree 2011; Pingree, Hill, and McLeod 2013; Pingree, Brossard, and McLeod 2014, 631). Pingree and colleagues (2014) conclude that this is because journalistic adjudication enables readers to practice political truth detection and build confidence in determining the truth about politics in future news consumption.

The Rise of Third-Party Fact-Checking

An alternative to direct journalistic adjudication is the use of third-party fact-checkers to help audiences identify untrustworthy information. The first independent fact-checker was the US nonprofit FactCheck.org, set up in 2003 as a nonpartisan project to verify political claims. While such operations are separate from the internal fact-checks traditionally conducted by news organizations, these independent outlets reflect quality journalism’s “broad goal of informing the public and promoting fact-based public discourse” (Amazeen et al. 2018, 30). In this way, they have an implicit aim of increasing citizens’ trust in news stories by identifying misinformation and so better informing public discourse.

In recent years, third-party fact-checkers have expanded in number and scope, adjudicating public claims beyond politics in areas including advertising, consumer news and health information. The growth of the sector is partly a response to the financial duress of traditional media, which has led to shrinking newsrooms and fewer in-house resources to seek additional evidence for evaluating claims in news stories. In Australia and elsewhere, the sub-editing work of many newspapers has been cut or outsourced due to revenue shortfalls (Martin and Dwyer 2012). Over this period, two prominent taxpayer-subsidized third-party fact-checkers have emerged: Australian Associated Press (AAP)¹ and RMIT ABC Fact-check (a collaboration between RMIT University and the public broadcaster). An explicit goal of these fact-checkers is to adjudicate claims presented as fact by political and public figures in the news media in order to “minimise misinformation, serving both the news media and the general public” (Australian Associated Press (AAP) 2021). One way these fact-checkers serve the news media industry is to strengthen public trust in news by correcting misperceptions and by creating reputational costs for politicians who make false claims (Pingree et al. 2018, 1).

The global proliferation of independent fact-checkers is also a response to the rise of online misinformation and disinformation in the digital age (Amazeen et al. 2018, 29). COVID-19 misinformation has drawn acute attention to the need for third-party fact-checkers, and to their role in reducing misinformation and increasing public trust in health news and public information. The World Health Organization has joined established fact-checkers, including Snopes, PolitiFact and FactCheck.org, in establishing dedicated COVID-19 fact-check sites to combat coronavirus mis- and disinformation.

As third-party fact-checking has grown as an industry, scholars have taken different approaches to studying it. One stream of research examines the efficacy of fact-checking. This includes its capacity to correct public misconceptions about political and non-political issues (Nieminen and Rapeli 2019; Nyhan et al. 2020; Owen et al. 2019) and to change audiences’ pre-existing positions (Walter et al. 2020). Scholars also examine how fact-checking affects trust in media and readers’ confidence in their ability to discern truth in reporting (Pingree et al. 2018). Others investigate the efficacy of fact-checking political debates on social media during election campaigns (Coddington, Molyneux, and Lawrence 2014). Within this “efficacy” stream are also studies that reveal the limits of fact-checking, including potential negative consequences such as the factual and familiarity “backfire” effects, discussed in more detail below. Other studies look at conditioning factors, such as how audience bias toward a media source or political partisanship affects the effectiveness of fact-checking (Lyons 2018; Walter et al. 2020).

Fact-Checking, Media Trust and Backfire Effects

Given these studies, and the frequent praise of fact-checking for its corrective potential to inform news audiences and enhance audience perceptions of news quality (see Nieminen and Rapeli 2019; Pingree, Brossard, and McLeod 2014), we might reasonably expect audience trust in news to increase after exposure to a fact-check. However, other studies reveal that fact-checking’s capacity to improve overall trust in news depends on certain conditions. For example, Pingree and colleagues’ (2018) online field experiment showed that fact-checking articles could improve trust in news, but only when the checks were presented alongside opinion articles and editorials that defended the relevance and integrity of journalism. Without this specific condition, fact-checking had no measurable influence on participants’ trust.

Whereas Pingree et al. (2018) found no effect in the absence of these conditions, others find that fact-checking may actually be detrimental both to correcting falsehoods and to trust in the media. For example, repeating a falsehood in a fact-check in order to correct it may further propagate the existing misconception, a phenomenon described as the familiarity backfire effect. While research on the familiarity backfire effect casts some doubt on the phenomenon (Swire-Thompson, DeGutis, and Lazer 2020), the authors advise caution about repeating political framings of issues that benefit political actors. Others find that fact-checking may correct biases in factual knowledge while still risking a backfire effect by increasing the salience of a politician’s “alternative” facts (Barrera et al. 2020).

Conditional Factors

Audience receptiveness to adjudication and fact-checking is likely to be moderated by conditional factors. Studies have shown that when individuals with strong ideological beliefs receive corrective information that runs contrary to their pre-existing views, they may respond by holding their original view more strongly, producing an unintended “backfire effect” (Nyhan and Reifler 2010, 307). Some may become more distrustful of the news media (Newman and Fletcher 2017, 12). Just as adjudication may increase trust for some, it may decrease trust for others, making the effect conditional on political partisanship.

Yet there are reasons to be sceptical of this argument. A large US experimental study, which embedded 52 real instances of political leaders’ misinformation in fictitious news articles shown to 10,000 participants, did not support the backfire effect (Wood and Porter 2019). The authors “did not observe a single instance of factual backfire” despite testing polarized issues on which a factual backfire was expected (Wood and Porter 2019, 142), and this held across all ideological and political orientations. They conclude that for many participants accepting facts is considerably easier than the more “costly” and “effortful” activity of engaging in counterargument (Wood and Porter 2019, 158). Other studies of journalistic adjudication have found that its effects on factual beliefs were not conditional on ideological or partisan cues (Lyons 2018).

Thus, faced with competing evidence in the journalistic adjudication literature and few studies on the relationship between third-party fact-checking and media trust, our study addresses this research gap by testing the effects of third-party fact-checking on participants’ overall trust in a news story, using a political case study. To pursue this aim, we address two research questions. The first is:

RQ1. What effect does a third-party fact check have on audience trust in a political news story?

We also test whether audience trust in the news story is conditional on partisanship and news source. Beginning with partisanship, we test whether a correction that runs contrary to the reader’s party identification decreases their trust in the news story. There is also good reason to believe that media source may moderate trust in media. For example, Fisher and colleagues (2021, 14) found that the reputation of the news brand potentially determines trust in news. Thus, we also test whether the news source (commercial or publicly owned) moderates the effects of fact-checking on media trust. This brings us to our second research question:

RQ2. Do other factors such as a) partisanship and b) media source moderate fact check effects?

Based on the past studies described above, we would expect individuals with a positive bias towards the news source to maintain or increase their trust in the source after receiving the fact-check, assuming they notice the news source when reading the story. Conversely, we expect respondents with a negative bias toward the news source to maintain or decrease their trust in the story after receiving the fact-check.
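RQ2 asks whether the fact-check effect differs across groups. One common way to express moderation of this kind is as the difference between conditional (subgroup) treatment effects. The Python sketch below is illustrative only. It assumes a binary moderator, such as the attributed outlet, and uses hypothetical field names (`treated`, `trust`, `source`) and toy data. It is not the authors' estimation procedure.

```python
from statistics import mean


def subgroup_effects(rows, moderator):
    """Return the fact-check effect within each level of a binary moderator,
    plus the interaction: the difference between the two conditional effects.

    Each row is a dict with hypothetical keys 'treated' (bool),
    'trust' (1-4 Likert score) and the moderator key.
    """
    rows = list(rows)  # allow generators; rows are scanned more than once
    levels = sorted({r[moderator] for r in rows})
    if len(levels) != 2:
        raise ValueError("this sketch handles a binary moderator only")

    effects = {}
    for level in levels:
        group = [r for r in rows if r[moderator] == level]
        treated = [r["trust"] for r in group if r["treated"]]
        control = [r["trust"] for r in group if not r["treated"]]
        # Conditional effect: mean trust with the fact-check minus without it.
        effects[level] = mean(treated) - mean(control)

    # Moderation: how much the fact-check effect differs between the two levels.
    interaction = effects[levels[1]] - effects[levels[0]]
    return effects, interaction


# Toy, hypothetical rows (not study data).
rows = [
    {"treated": True, "trust": 3, "source": "abc"},
    {"treated": False, "trust": 4, "source": "abc"},
    {"treated": True, "trust": 2, "source": "news.com.au"},
    {"treated": False, "trust": 3, "source": "news.com.au"},
]
effects, interaction = subgroup_effects(rows, "source")
print(effects, interaction)
```

In practice, the same contrast is usually estimated as the coefficient on a treatment × moderator interaction term in a regression model, which also supplies a standard error for testing whether the moderation is distinguishable from zero.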

Method

Our study adopted a realistic survey experiment to answer these research questions. A quota-sampled, non-probability survey experiment was administered through Qualtrics (an online survey provider) in October 2020, yielding a sample of 1,608 respondents. Qualtrics maintains a large panel of Australian respondents who are broadly representative of the population and are compensated for their participation. The benefit of using an established survey company in Australia like Qualtrics is that the sample was matched to the population on key demographics such as age, gender and location. Given the partisan nature of the story, the sample also needed to represent people of different political persuasions. This was achieved by matching party identification as captured in the 2019 Australian Election Study of the most recent federal election.² We did not match the sample on any further characteristics because we believe doing so would affect sample composition and undermine the power of randomisation, an important feature of our study design. There is also growing evidence that there are few meaningful differences between the inferences obtained from online non-probability panels and probability-based samples (Ansolabehere and Schaffner 2014; Breton et al. 2017). While we went to considerable effort to obtain a representative Australian sample on the key demographics described above, we also note that random assignment within this study design aids the robustness of its findings. Our research design, a single-country case study using an experiment, has strong precedent in similar studies published in quality journals, including some of the studies reviewed above (Pingree, Brossard, and McLeod 2014; Pingree et al. 2018; Nyhan, Reifler, and Ubel 2013).
We also have previous experience applying a single-country experimental survey design with random assignment in published work (Carson, Ruppanner, and Lewis 2019). Writing about the generalizability of population-based survey experiments like ours, Mutz (2011, 157) argues that “population-based experiments come closest to what has been termed the ‘ideal experiment,’ that is, the kind of study that combines random selection of subjects from the population of interest with random assignment to experimental treatments.”

The survey experiment had three components. First, we asked all respondents a series of pre-treatment demographic and institutional trust questions (see online supplement for details). Second, we administered the experiment by randomly dividing participants into two groups. One group received a news story plus a third-party fact-check verdict on the central political claim in the story (the treatment group). The second group received the story without the fact-check (the reference group). In addition, we randomly assigned each of the 1608 participants one of two possible publication sources for the identically worded news story. Third, respondents were asked post-treatment questions about their levels of trust in the news story and, for those who received it, their trust in the fact-check.
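The 2 × 2 assignment described above crosses two factors: fact-check versus no fact-check, and ABC versus news.com.au attribution. The Python sketch below is a minimal illustration that assumes simple, independent randomisation on each factor with a fixed seed. The condition labels and seed are hypothetical, and the survey platform's actual randomisation procedure may differ.

```python
import random
from collections import Counter

# Hypothetical labels for the two experimental factors.
FACT_CHECK = ("fact_check", "no_fact_check")
SOURCES = ("abc.net.au", "news.com.au")


def assign_conditions(respondent_ids, seed=2020):
    """Independently randomise each respondent on both factors.

    Returns a dict mapping respondent id -> (fact-check arm, attributed source).
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    return {
        rid: (rng.choice(FACT_CHECK), rng.choice(SOURCES))
        for rid in respondent_ids
    }


if __name__ == "__main__":
    assignments = assign_conditions(range(1608))
    # Count how many respondents landed in each of the four cells.
    print(Counter(assignments.values()))
```

Under simple randomisation, cell sizes are only approximately equal. A balanced alternative would shuffle a list containing equal numbers of each condition before handing them out.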

As part of the experimental design, the authors (one of whom was a professional journalist) created two identically worded articles based on real-world facts and quotes. The articles were identical in content but differed in format to match the style of each news source. Each article was formatted with careful attention to headlines, fonts and pictures so that it appeared exactly as it would if written for the attributed online news platform. One article was presented as being from the publicly funded Australian Broadcasting Corporation (ABC) website, and the other from Rupert Murdoch’s commercial news outlet, news.com.au.

The two outlets were selected because both are large national mainstream news outlets, but they differ in ownership, public trust and audience partisanship. General population surveys consistently find the ABC to be Australia’s most trusted media source overall (Ipsos 2019). However, supporters of the right-of-centre Liberal-National Coalition are more likely to trust commercial mainstream media (print and television) than the ABC. In contrast, supporters of the centre-left Labor Party and the Greens are more likely to trust the ABC over other news sources (Jackman and Ratcliff 2018).

Notwithstanding partisan preferences, both outlets generally strive to report stories in their “general news” sections in a “balanced” way. That is, competing viewpoints, where they exist, are represented in the story without the outlet taking an overt political stand, described earlier as “he said/she said” reporting. Brian McNair argued that, because of the very different media markets in which they compete, Murdoch’s Australian outlets take this more neutral approach (although ideological perspectives can be found on comment and editorial pages), in contrast to Murdoch’s US Fox News coverage, which takes an “unashamedly partisan approach to news” (McNair 2017, 1322). Meanwhile, the ABC is government funded and has a statutory duty to ensure factual accuracy “according to the recognised standards of objective journalism” (ABC 2013, 4), although some conservative politicians and commentators criticize it for a perceived left-wing bias.

The news story presented to participants concerned the real use of public money to benefit sports clubs in electorates that the right-of-centre Liberal-National Coalition was attempting to win (or hold) just before the May 2019 federal election. The article included real evidence from an independent national auditor’s report into the controversy, which found that the public money was not directed to the sporting clubs deemed most in need by Sports Australia (the independent advisor). The article also informed readers that the minister responsible for the scheme, senior Nationals Senator Bridget McKenzie, had since resigned from Cabinet amid legal proceedings launched by the unfunded sporting clubs. To limit priming effects, we chose a political story that we thought respondents would engage with but whose key facts they would be unlikely to recall, given that 10 months had passed since the controversy and that subsequent news coverage had been dominated by COVID-19 stories.

The story was headlined “Sports grants ‘process above board,’ claims Dutton” and was written in a “he said/she said” fashion, including quotes from both sides of the controversy without actively adjudicating the political statements. Thus, our news story included a real but factually incorrect statement from a senior Minister (Peter Dutton), who claimed the funding had been awarded properly by his colleague, Senator McKenzie. Dutton’s real-life quote read: “Bridget McKenzie made recommendations, as I understand it, on advice from the sporting body that these programs that have been funded were recommended.”

The story then noted that Minister Dutton repeated this claim on Nine’s Today program (a mainstream free-to-air morning television show in Australia) on 23 January 2020, stating that “There was no funding provided to a project that wasn’t recommended.” To reiterate, the false claims were contradicted in the story through quotes from other sources. The constructed story contained real facts throughout, including the ministerial resignation and the independent auditor’s finding that the public money was misused. However, Minister Dutton’s central claim was not explicitly adjudicated by the journalist within the story (see Appendix A for the full story).

After reading the story, half of the participants were asked to read a genuine fact-check published by Australian Associated Press (AAP) when the story broke, on 24 January 2020. The fact-check presented substantial evidence before concluding that the deputy Nationals leader, Senator Bridget McKenzie, improperly funded the sports programs, bypassing the recommendations of Sports Australia, the division of the statutory authority the Australian Sports Commission responsible for growing the sport sector. Critically, the fact-check specifically investigated Peter Dutton’s claim quoted above. The fact-checker’s verdict definitively found that Dutton’s claim was false.

Post-treatment questions then asked all respondents about their trust in the news story, their perceptions of its credibility and its perceived fairness, each on a four-point Likert scale: 1) Do not trust at all; 2) Do not trust much; 3) Trust a little; 4) Trust a lot. Subjects in the treatment group also answered questions on how trustworthy and credible the fact-check was, again using a four-point Likert scale. Question wording is contained in . Thus, our experiment tests the effects of third-party adjudication (an independent, real fact-check) on trust in the original news story.

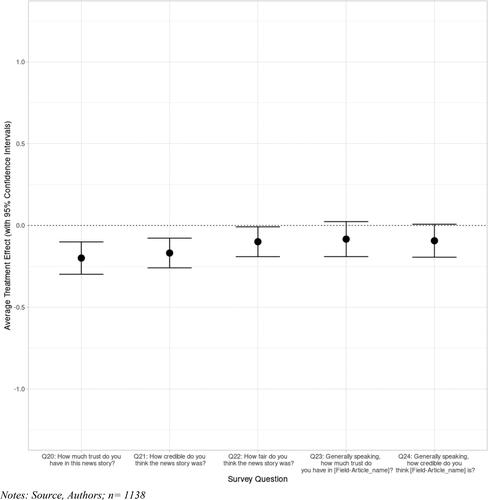

Figure 1. The effect of being exposed to a fact-check on trust, credibility and fairness (average treatment effects).

Notes: Source, Authors; n= 1138.

Respondents were debriefed after the experiment. They were informed that the news article was constructed by the authors (but based on real events) and were provided with the opportunity to withdraw their data as per University human ethics protocols.

To confirm the attention of our participants and improve the quality of responses, we instituted a number of screening procedures. We required respondents to pass a single attention check question at the start of the survey (i.e. select option “4” to confirm they were paying attention). We also included a series of manipulation checks in which respondents were asked to identify, from four choices each, the general topic of the story and the publication it appeared in. We excluded 349 respondents who failed these checks, the majority of whom failed to identify the news source. This may speak to larger questions about the normative expectations we have of readers paying attention to masthead titles when consuming news online, and is a fertile area for future research. Respondents in the treatment group were also asked to identify the verdict of the fact-check; a further 143 respondents failed this test and were also excluded. Given the attention and reading required by the study, we also removed participants who took less than 200 seconds to complete the entire survey. These exclusions left us with a sample of 1138 subjects.
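The exclusion rules above can be expressed as a simple filter. The following Python sketch is illustrative only: the `Respondent` record and its field names are hypothetical (the survey's actual variable names are not reported), and it simply applies the four rules in the order described: the attention check, the topic and outlet manipulation checks, the fact-check verdict check for the treatment group, and the 200-second minimum completion time.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

MIN_DURATION_SECONDS = 200  # respondents faster than this were removed


@dataclass
class Respondent:
    # Hypothetical field names; the survey's real variable names are not given.
    attention_answer: int            # option chosen on the "select 4" attention check
    topic_correct: bool              # manipulation check: identified the story topic
    outlet_correct: bool             # manipulation check: identified the publication
    treated: bool                    # True if shown the AAP fact-check
    verdict_correct: Optional[bool]  # fact-check verdict check (treatment group only)
    duration_seconds: float          # total time taken to complete the survey


def passes_screening(r: Respondent) -> bool:
    """Apply the exclusion rules described in the text, in order."""
    if r.attention_answer != 4:
        return False
    if not (r.topic_correct and r.outlet_correct):
        return False
    # Only the treatment group saw the fact-check, so only it answers the verdict item;
    # a missing or wrong answer from a treated respondent counts as a failure.
    if r.treated and not r.verdict_correct:
        return False
    return r.duration_seconds >= MIN_DURATION_SECONDS


def screen(respondents: Iterable[Respondent]) -> List[Respondent]:
    """Keep only respondents who pass every screening rule."""
    return [r for r in respondents if passes_screening(r)]
```

Because the verdict question was only put to the treatment group, the sketch skips that rule for control respondents rather than treating their missing answer as a failure.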

Results

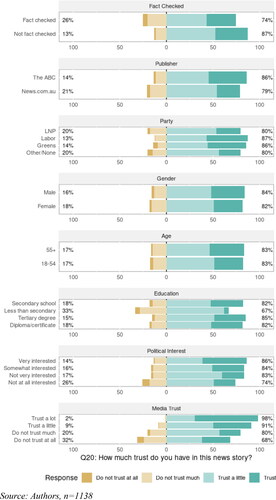

Despite widespread public concern regarding declining trust in news, our results show quite high levels of trust in both the news story and the fact-check. Subjects showed a great deal of trust in the news story provided (i.e. “how much trust do you have in this story?”; 1) Do not trust at all; 2) Do not trust much; 3) Trust a little; 4) Trust a lot; M = 3.13, SD = 0.75). In fact, only three per cent of respondents expressed no trust at all in the news story, consistent with the relatively small standard deviation (SD). Post-treatment trust in the story (and the fact-check) was also quite high, even among those deeply suspicious of media as an institution. Those who claimed not to trust the media “at all” (in the pre-treatment question) exhibited a similarly high level of trust in the news story (M = 2.91, SD = 0.91) and in the fact-check (M = 3.04, SD = 0.91). This suggests (following our discussion of the conceptualization of trust earlier) that respondents think about the general (i.e. the “media”) and the specific (i.e. the “news story”) in very different ways. These initial results further justify our more refined approach to measuring trust in the news story. A comprehensive visual outline of the demographic distribution of trust in our experiment and detailed description of the variables can be found in and .

Figure 2. Trust in the fact checked and non-fact checked news story according to partisanship, outlet and other factors.

Notes: Source, Authors; n = 1138. For example, to interpret the figure on general trust in the media: 98 per cent of those who trust the media “a lot” have trust in the news story.

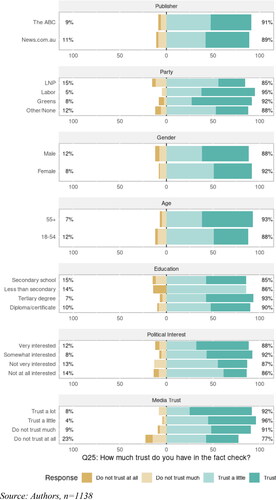

Figure 3. Trust in the third-party fact-checks according to partisanship, outlet and other factors.

Source: Authors, n = 1138.

While participants were likely to trust the news story in our experiment, the treatment condition (exposure to the fact-check containing independent adjudication of the political claim in the story) significantly decreased this level of news trust. Figure 1 outlines the Average Treatment Effects (ATE). The ATE is a measure used to compare treatments (or interventions) in randomized experiments; here, it compares perceptions of trust, fairness and credibility in the news story, and also in the fact-check, with 95% confidence intervals. It is calculated as the difference in mean (M) responses to each survey question between the treatment and reference groups. As Figure 1 shows, the fact-check significantly lowers participants’ trust in the news story (ATE = −0.19, p < 0.001) and its perceived credibility (ATE = −0.17, p < 0.001), and also lowers perceived fairness, although this effect is only marginal (ATE = −0.10, p < 0.08). Put simply, trust in the news story decreased after participants received a fact-check adjudicating the political claim in the story. When we asked about trust and credibility with direct reference to the article’s publisher, neither effect reaches statistical significance, though the direction of the effect is unchanged (i.e. a decrease in trust).
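As a minimal sketch of the difference-in-means calculation described above, the Python function below computes an ATE and a 95% confidence interval from two lists of Likert responses. It assumes an unpooled (Welch-style) standard error and a normal approximation (z = 1.96); the text does not state which variance estimator the authors used, so this is not necessarily their procedure, and the example responses are invented toy values rather than study data.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def average_treatment_effect(
    treated: Sequence[float], control: Sequence[float], z: float = 1.96
) -> Tuple[float, float, float]:
    """Return (ATE, lower, upper): difference in means (treated - control)
    with a normal-approximation confidence interval.

    Uses the unpooled (Welch-style) standard error and assumes that
    assignment to treatment was randomized.
    """
    if len(treated) < 2 or len(control) < 2:
        raise ValueError("each group needs at least two responses")
    ate = mean(treated) - mean(control)
    # Sample variances (n - 1 denominator) for each group.
    se = math.sqrt(variance(treated) / len(treated) + variance(control) / len(control))
    return ate, ate - z * se, ate + z * se
```

For instance, toy treatment responses `[3, 3, 2, 4]` and control responses `[4, 3, 4, 3]` give an ATE of −0.5, i.e. the treated group's mean trust is half a scale point lower; with only four responses per group the interval is wide and spans zero.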

In addition to Figure 1, Table 1, which reports the ordinal logistic regressions, offers a more detailed investigation of variation in trust in our news story among subjects. Here, the dependent variables are ordered from 1 (i.e. do not trust at all) to 4 (i.e. trust a lot), so a significant positive coefficient indicates greater levels of trust (and a significant negative coefficient, lower trust). For example, the results in Models 1 (−0.589, p < 0.01) and 2 (−1.217, p < 0.05) show that subjects in the treatment group (i.e. those who received the fact-check) were significantly less likely to trust the story than those in the reference group (i.e. those who did not see the AAP fact-check). These results, along with the findings from Figure 1, answer our first research question. Far from increasing trust, as some literature suggests, the third-party fact-check in our study actually lowered the likelihood of trusting the original news article.
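The text does not spell out the model specification. Assuming the standard proportional-odds (cumulative logit) form, with $Y_i$ the four-point trust response, $\tau_k$ the category thresholds, $\mathbf{x}_i$ the covariates (including the treatment indicator) and $\boldsymbol\beta$ their coefficients, the sign convention described above corresponds to:

```latex
\Pr(Y_i \le k \mid \mathbf{x}_i)
  = \frac{1}{1 + \exp\!\big(-(\tau_k - \mathbf{x}_i^{\top}\boldsymbol{\beta})\big)},
  \qquad k = 1, 2, 3,
\qquad\Longrightarrow\qquad
\frac{\Pr(Y_i > k \mid \mathbf{x}_i)}{\Pr(Y_i \le k \mid \mathbf{x}_i)}
  = \exp\!\big(\mathbf{x}_i^{\top}\boldsymbol{\beta} - \tau_k\big).
```

Under this form, a one-unit increase in $x_j$ multiplies the odds of a higher trust category by $e^{\beta_j}$. Applied to Model 1's treatment coefficient, $e^{-0.589} \approx 0.55$: the fact-checked group's odds of reporting a higher level of trust would be roughly 0.55 times those of the reference group, holding the other covariates constant.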

Table 1. Fact check experiment ordinal regression models – Trust.

Returning to Table 1, as the additive model (1) shows, publisher, party identification, age, education level, political interest, and media trust all significantly influence the probability of a subject expressing trust in the story. For example, participants who viewed the ABC version of the story were significantly more likely to trust it than those who viewed the News.com.au article. The ABC therefore appears to be the more trustworthy news source overall, as expected from the past survey findings discussed earlier. Coalition (right-leaning) supporters were also less likely to trust the story, which reflected negatively on the Coalition government; this too is unsurprising and consistent with past studies.

We then tested whether political party and publisher type moderate the effect of fact-checking on levels of trust in news. Table 1’s model (2) rules out both possibilities. As the interactions demonstrate, the effect of the fact-check is not conditional on the publisher (0.202, p > 0.1), nor is there a conditional relationship between fact-check, publisher, and party support. During the variable selection process, we ran several LASSO regressions to test whether such a conditional relationship might exist. LASSO (least absolute shrinkage and selection operator) is a penalized regression technique that shrinks the coefficients of weak predictors toward zero, setting some exactly to zero, which makes it useful for variable selection. All of these interaction estimates were driven to zero, indicating that ordinal regression models accounting for a two-way relationship between fact-checking and party or publisher do not deviate meaningfully from the additive model (1). In short, we see no conditional relationship in the effect of a fact-check when moderated by party and/or publisher.
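To illustrate how an L1 penalty can drive an interaction estimate exactly to zero, the Python sketch below implements LASSO by cyclic coordinate descent with soft-thresholding. It is a simplification: it uses a linear (squared-error) loss rather than the ordinal models reported here, and `toy_two_by_two` builds a hypothetical, noise-free balanced design (treatment × publisher) with a deliberately tiny interaction, not the study's data. It requires NumPy.

```python
import numpy as np


def soft_threshold(z: float, gamma: float) -> float:
    """Shrink z toward zero by gamma; values within [-gamma, gamma] become exactly 0."""
    return float(np.sign(z) * max(abs(z) - gamma, 0.0))


def lasso_coordinate_descent(X, y, lam: float, n_iter: int = 500) -> np.ndarray:
    """Minimise (1/2n)||y - Xb||^2 + lam * ||b||_1 by cyclic coordinate descent.

    Assumes the columns of X and the outcome y are centred, so no intercept is fitted.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual: leave predictor j's current contribution in.
            r_j = y - X @ b + X[:, j] * b[j]
            rho = X[:, j] @ r_j / n
            b[j] = soft_threshold(rho, lam) / col_sq[j]
    return b


def toy_two_by_two(reps: int = 10, interaction: float = 0.01):
    """Hypothetical balanced 2x2 design (treatment x publisher) with centred coding.

    Columns: treatment, publisher, treatment*publisher; the outcome has no noise.
    """
    t = np.tile([0.0, 0.0, 1.0, 1.0], reps) - 0.5
    a = np.tile([0.0, 1.0, 0.0, 1.0], reps) - 0.5
    X = np.column_stack([t, a, t * a])
    y = -0.2 * t + 0.3 * a + interaction * t * a
    return X, y


if __name__ == "__main__":
    X, y = toy_two_by_two()
    print("penalized:  ", lasso_coordinate_descent(X, y, lam=0.005))
    print("unpenalized:", lasso_coordinate_descent(X, y, lam=0.0))
```

With `lam=0.005` the small interaction term is set exactly to 0.0 while the main effects are only shrunk (to about −0.18 and 0.28), whereas `lam=0.0` recovers the unpenalized least-squares value of 0.01. The penalty weight is the key design choice; in practice it is usually chosen by cross-validation.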

There is, however, a clear moderating relationship between publisher and party in their effect on trust in the story, indicating that the (conditional) effect of one is moderated by the other. This is consistent with past studies and intuitively makes sense: Coalition supporters may be less trusting of an ABC story (−1.810, p < 0.01) than of an article published by an outlet they deem to be politically sympathetic (i.e. News.com.au; −0.036, p > 0.1).
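A moderating relationship of this kind is typically captured with an interaction term. As a generic sketch, assuming binary indicators $\text{ABC}_i$ (1 if respondent $i$ saw the ABC version, 0 for News.com.au) and $\text{Coal}_i$ (1 for a Coalition supporter), which are illustrative labels rather than the authors' variable names, the relevant part of the linear predictor and the resulting conditional effects of Coalition support are:

```latex
\begin{aligned}
\eta_i &= \cdots + \beta_C\,\text{Coal}_i + \beta_A\,\text{ABC}_i
          + \beta_{CA}\,(\text{Coal}_i \times \text{ABC}_i) + \cdots \\
\Delta_{\text{Coal}}\big|_{\text{ABC}_i = 0} &= \beta_C,
\qquad
\Delta_{\text{Coal}}\big|_{\text{ABC}_i = 1} = \beta_C + \beta_{CA}
\end{aligned}
```

Under this coding, a strongly negative $\beta_{CA}$ is what would make Coalition supporters markedly less trusting of the ABC version than of the News.com.au version, which is the pattern described above.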

Models (3) and (4) in Table 1 show that trust in the fact-check itself depends on party support, as we might expect given that the fact-check corrects the claim of an elected member of the (right-leaning) Coalition. We see this in the coefficients for Coalition supporters, which are statistically significant in both Models (3) and (4). However, the effect of publisher in these models, both as a main effect (Model 3) and as a conditional effect (Model 4), fails to reach statistical significance. This indicates that trust in the fact-check does not depend on the type of publisher, a particularly important finding which suggests that the relationship between publisher and fact-checking is somewhat more complex than that between party and fact-checking. For example, some party supporters (e.g. Coalition supporters) seem entirely resistant to trusting both our story and the fact-check itself, whereas publisher type appears to influence trust only in the story, not in the fact-check. This may reflect the history of the fact-checker, AAP, which began operating as an independent newswire 85 years ago. A result like this suggests that party identification is the main challenge for fact-checkers looking to build public trust in their work.

As Model 5 in Table 1 shows, there are potential limits to the influence of party support. All else being equal, trust in the fact-check is one of the major predictors of trust in our news story. When this variable is accounted for, party identification no longer significantly influences trust. The consistent effect of media trust across all five models hints at a similar conclusion: fostering greater trust, whether in media as an institution or in fact-checks themselves, appears to be the most viable path towards increasing trust in news stories.

Finally, it is worth returning to our earlier discussion about conceptualizing trust. We noted that the terms “trust” and “credibility” have often been used interchangeably in previous studies, drawing some methodological and theoretical criticism. To avoid similar concerns, we ran the exact same analysis on the “how credible do you think the news story was” questions. These tests produced highly similar results (see Table 2 in online supplementary materials), suggesting that respondents think about trust and credibility in very similar ways in our study.

Table 2. Fact check experiment ordinal regression models - Credibility.

Discussion and Conclusion

In this experiment, we set out to explore the effect of third-party fact-checking on trust in a news story. In answer to our first research question, we found that fact-checking had a clear negative influence on readers’ trust in the original news story. In answer to our second research question, we found that this effect was not conditional on partisanship or publisher. One of the purposes of third-party fact-checking is to help inform the public more accurately and to strengthen media trust (AAP 2021). These findings, however, point to a clear “backfire” effect: external fact-checking weakened, rather than strengthened, trust in the news story. According to these results for this case study, third-party fact-checking appears to hurt the very institution it hopes to serve: the news media.

This is a particularly concerning finding. In many ways, the fact-check explanation that preceded the verdict reinforced key facts offered in the story, so one might reasonably expect the fact-check to strengthen participants’ (in this case, the treatment group’s) trust in the original story once they had read it in full. For it to do the opposite points to a concerning conclusion: news audiences may not distinguish a politician’s false claims within a news story from the news reporting itself. From the audience’s perspective, the political falsehood potentially influences their trust in the whole story, rather than being confined to the reputation of the politician making the false claim. This finding is particularly important given our use of a “he said/she said” reporting style in the news story, whereby readers are presented with divergent statements, one of which is false. In this case, leaving the adjudication to third-party fact-checkers may be a deeply unwise, although popular, long-term strategy for journalism. Given this, and earlier findings (Pingree, Hill, and McLeod 2013; Pingree, Brossard, and McLeod 2014), we see reason to recommend that journalists, where possible, more stringently adjudicate false claims in their own political reporting, although we note that this is somewhat problematic for an organization like the ABC, which has a duty of impartiality under the ABC Act 1983. However, the ABC’s 2019 revised Code of Practice does specify that “impartiality” does not mean that every perspective receives equal attention (Australian Broadcasting Corporation (ABC) 2019, 3). We identify this as a clear direction for future research: testing whether the same patterns emerge for a fact-checked news story in which the journalist also adjudicates the claim. This would inform whether journalists should apply this approach to mitigate misinformation.

There may also be a lesson in these data for independent fact-checkers. Fact-checkers might help increase trust in news by stating more clearly that they are fact-checking a politician’s claim specifically, and not the media coverage that contains it. Some fact-checkers make this distinction on their websites, but not in every fact-check explanation. This specificity may help avoid any audience conflation between the independent fact-check of a political falsehood and readers’ perceptions of the trustworthiness of the overall news story and media outlet. Both of these recommendations require further research, given the limited scope of our study, which focused on political reporting in the Australian context.

Our findings still offer reasons for optimism. Respondents initially exhibited a great deal of overall trust in the story, and in the fact-check itself, surprisingly even among those who distrust the media as an institution. For this reason, we believe journalists and fact-checkers can build greater trust with some strategic changes. The lack of a conditional relationship between trust, fact-checking, partisanship and publisher is also reason for some optimism. Fact-checkers looking to build trust in the news stories that they scrutinize need not necessarily regard partisanship or news source as obstacles to overcoming the detrimental outcome we observe in this study.

Trust in media as an institution is another important factor worth discussing here. Across all five statistical models, greater trust in media as a starting point is associated with greater trust in the story and in the fact-check. This result indicates that the overall goal of increasing trust in media is a worthy one, in that it will likely help facilitate greater trust in news reports and fact-checks. The challenge, of course, is to foster that greater level of institutional trust in the first place, or to help stop its ongoing erosion. While our research gives good reason for optimism, these data indicate that journalists’ and fact-checkers’ current presentation of facts should be reviewed, as it is likely to be contributing to the “trust” problem.

Our experiment provides important evidence on the effects of third-party fact-checking on trust in news stories in the Australian context. However, several unanswered questions should be on the agenda for future research. The first is how these findings generalize across different countries and media systems. Media systems, and the country contexts in which they are embedded, might also have important conditioning effects. This is an area for future, preferably cross-national, studies. Further, social media or non-mainstream media platforms might engender lower levels of trust under similar experimental conditions, and understanding how this affects the results is also important. This may even occur when a reader encounters the same story from the same news outlet on a mobile app rather than on a desktop. Further research is also needed to understand what happens when a journalist explicitly adjudicates a claim within a news story but a third-party fact-check disagrees. The effects we have found here may also be moderated by other factors. Story genre (e.g. whether the story is about political or non-political issues) may be one such factor and is another area for future studies. This would be particularly fruitful for inherently polarizing news stories, to identify the value of fact-checking in “high-stakes” and highly contentious coverage (e.g. COVID-19 vaccination misinformation). Finally, another research stream arising from our findings is how news consumers’ familiarity with, and use of, third-party fact-checkers might moderate their trust in news stories. These are important lines of enquiry that require greater examination.

Despite these limitations, our survey experiment addressed an underexplored area of fact-checking scholarship by examining the effects of third-party adjudication on media trust. The findings suggest that, in its present form, third-party fact-checking is not a panacea for increasing media trust. External fact-checking can, at least among Australian audiences, reduce trust in political news stories unless there is a rethinking of how fact-checks about political claims are presented and communicated.

Supplemental Material

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes

1 In September 2020, the Australian federal government provided the newswire service AAP $5 million to keep it solvent after its owners Nine Entertainment and News Corp Australia sold it for $1 to a not-for-profit consortium.

2 The AES is Australia’s longest running representative electoral study and is akin in function to the American National Electoral Study.

References

- Amazeen, Michelle A., Emily Thorson, Ashley Muddiman, and Lucas Graves. 2018. “Correcting Political and Consumer Misperceptions: The Effectiveness and Effects of Rating Scale versus Contextual Correction Formats.” Journalism & Mass Communication Quarterly 95 (1): 28–48. https://doi.org/10.1177/1077699016678186

- Ansolabehere, Stephen, and Brian F. Schaffner. 2014. “Does Survey Mode Still Matter? Findings from a 2010 Multi-Mode Comparison.” Political Analysis 22 (3): 285–303.

- Ardèvol-Abreu, Alberto, and Homero Gil de Zúñiga. 2017. “Effects of Editorial Media Bias Perception and Media Trust on the Use of Traditional, Citizen, and Social Media News.” Journalism & Mass Communication Quarterly 94 (3): 703–724.

- Australian Associated Press (AAP). 2021. “About AAP FactCheck.” https://www.aap.com.au/about-factcheck/#item-1.

- Australian Broadcasting Corporation (ABC). 2019. “Code of Practice & Associated Standards.” https://about.abc.net.au/wp-content/uploads/2016/05/CODE-final-15-01-2019.pdf.

- Barrera, Oscar, Sergei Guriev, Emeric Henry, and Ekaterina Zhuravskaya. 2020. “Facts, Alternative Facts, and Fact Checking in Times of Post-Truth Politics.” Journal of Public Economics 182: 104123. https://doi.org/10.1016/j.jpubeco.2019.104123.

- Breton, Charles, Fred Cutler, Sarah Lachance, and Alex Mierke-Zatwarnicki. 2017. “Telephone versus Online Survey Modes for Election Studies: Comparing Canadian Public Opinion and Vote Choice in the 2015 Federal Election.” Canadian Journal of Political Science 50 (4): 1005–1036.

- Carson, Andrea. 2020. Investigative Journalism, Democracy and the Digital Age. New York: Routledge.

- Carson, Andrea, Leah Ruppanner, and Jenny M. Lewis. 2019. “Race to the Top: Using Experiments to Understand Gender Bias towards Female Politicians.” Australian Journal of Political Science 54 (4): 439–455. https://doi.org/10.1080/10361146.2019.1618438.

- Coddington, Mark, Logan Molyneux, and Regina G. Lawrence. 2014. “Fact-Checking the Campaign: How Political Reporters Use Twitter to Set the Record Straight (or Not).” The International Journal of Press/Politics 19 (4): 391–409.

- Edelman. 2020. “Edelman Trust Barometer 2020.” https://www.edelman.com/trust/2020-trust-barometer.

- Engelke, Katherine M., Valerie Hase, and Florian Wintterlin. 2019. “On Measuring Trust and Distrust in Journalism: Reflection of the Status Quo and Suggestions for the Road Ahead.” Journal of Trust Research 9 (1): 66–86.

- Farhall, Kate, Andrea Carson, Scott Wright, Andrew Gibbons, and William Lukamto. 2019. “Political Elites’ Use of Fake News Discourse across Communications Platforms.” International Journal of Communication 13: 4353–4375.

- Fischer, Sara. 2020. “Fact-Checking Goes Mainstream in Trump Era.” Axios, October. https://www.axios.com/fact-checking-trump-media-baad50cc-a13f-4b73-a52a-4cd9e63bd2fc.html.

- Fisher, Caroline. 2016. “The Trouble with ‘Trust’ in News Media.” Communication Research and Practice 2 (4): 451–465.

- Fisher, Caroline. 2019. “Australia.” In Reuters Institute Digital News Report 2019, edited by N. Newman, R. Fletcher, A. Kalogeropoulos, and R. K. Nielsen, 132–133. Oxford: Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/inline-files/DNR_2019_FINAL.pdf.

- Fisher, Caroline, Terry Flew, Sora Park, Jee Young Lee, and Uwe Dulleck. 2021. “Improving Trust in News: Audience Solutions.” Journalism Practice 15 (10): 1497–1515.

- Fletcher, Richard, and Sora Park. 2017. “The Impact of Trust in the News Media on Online News Consumption and Participation.” Digital Journalism 5 (10): 1281–1299. https://doi.org/10.1080/21670811.2017.1279979

- Flew, Terry, U. Dulleck, Caroline Fisher, and O. Isler. 2020. Trust and Mistrust in Australian News Media. Brisbane: Queensland University of Technology. https://research.qut.edu.au/best/wp-content/uploads/sites/244/2020/03/Trust-and-Mistrust-in-News-Media.pdf.

- Giusti, Serena, and Elisa Piras. 2021. Democracy and Fake News: Information Manipulation and Post-Truth Politics. London: Routledge.

- Graves, Lucas. 2016. Deciding What’s True: The Rise of Political Fact-Checking in American Journalism. New York: Columbia University Press.

- Graves, Lucas. 2018. “Boundaries Not Drawn: Mapping the Institutional Roots of the Global Fact-Checking Movement.” Journalism Studies 19 (5): 613–631. https://doi.org/10.1080/1461670X.2016.1196602

- Graves, Lucas, and Chris W. Anderson. 2020. “Discipline and Promote: Building Infrastructure and Managing Algorithms in a ‘Structured Journalism’ Project by Professional Fact-Checking Groups.” New Media & Society 22 (2): 342–360. https://doi.org/10.1177/1461444819856916

- Hanitzsch, Thomas. 2012. “Journalism, Participative Media and Trust in Comparative Context.” In Rethinking Journalism: Trust and Participation in a Transformed Media Landscape, edited by C. Peters and M. Broersma. London; New York: Routledge.

- Hanitzsch, Thomas, Arjen van Dalen, and Nina Steindl. 2018. “Caught in the Nexus: A Comparative and Longitudinal Analysis of Public Trust in the Press.” The International Journal of Press/Politics 23 (1): 3–23. https://doi.org/10.1177/1940161217740695

- Hetherington, Marc J., and Thomas J. Rudolph. 2015. Why Washington Won't Work: Polarization, Political Trust, and the Governing Crisis. Chicago: University of Chicago Press.

- Ipsos. 2019. “Australians Trust the Media Less: Ipsos ‘Trust in the Media’ Study.” Ipsos, June 25. https://www.ipsos.com/en-au/australians-trust-media-less-ipsos-trust-media-study.

- Jackman, Simon, and Shaun Ratcliff. 2018. “America’s Trust Deficit.” United States Studies Centre, University of Sydney, February 18. https://www.ussc.edu.au/analysis/americas-trust-deficit.

- Jamieson, Kathleen Hall, and Paul Waldman. 2003. The Press Effect: Politicians, Journalists, and the Stories That Shape the Political World. New York: Oxford University Press.

- Lawrence, Regina G., and Matthew L. Schafer. 2012. “Debunking Sarah Palin: Mainstream News Coverage of ‘Death Panels’.” Journalism 13 (6): 766–782. https://doi.org/10.1177/1464884911431389.

- Lyons, Benjamin A. 2018. “When Readers Believe Journalists: Effects of Adjudication in Varied Dispute Contexts.” International Journal of Public Opinion Research 30 (4): 583–606.

- Martin, Fiona R., and Tim Dwyer. 2012. “Churnalism on the Rise as News Sites Fill Up with Shared Content and Wire Copy.” The Conversation, 25 June. https://theconversation.com/churnalism-on-the-rise-as-news-sites-fill-up-with-shared-content-and-wire-copy-7859.

- McNair, Brian. 2017. “After Objectivity?” Journalism Studies 18 (10): 1318–1333.

- Murphy, Katharine. 2020. “Most Australians Say Social Media Platforms Should Block Misleading Political Ads.” The Guardian, 16 June. https://www.theguardian.com/media/2020/jun/16/digital-media-report-survey-majority-australians-say-social-media-platforms-facebook-twitter-should-block-misleading-political-ads.

- Mutz, Diana. 2011. Population-Based Survey Experiments. Princeton: Princeton University Press.

- Newman, Nic, and Richard Fletcher. 2017. Bias, Bullshit and Lies: Audience Perspectives on Low Trust in the Media. Oxford, UK: The Reuters Institute for the Study of Journalism. https://www.ssrn.com/abstract=3173579.

- Nieminen, Sakari, and Lauri Rapeli. 2019. “Fighting Misperceptions and Doubting Journalists’ Objectivity: A Review of Fact-Checking Literature.” Political Studies Review 17 (3): 296–309.

- Nyhan, Brendan, Ethan Porter, Jason Reifler, and Thomas J. Wood. 2020. “Taking Fact-Checks Literally but Not Seriously? The Effects of Journalistic Fact-Checking on Factual Beliefs and Candidate Favorability.” Political Behavior 42 (3): 939–960. https://doi.org/10.1007/s11109-019-09528-x

- Nyhan, Brendan, and Jason Reifler. 2010. “When Corrections Fail: The Persistence of Political Misperceptions.” Political Behavior 32 (2): 303–330. https://doi.org/10.1007/s11109-010-9112-2

- Nyhan, Brendan, Jason Reifler, and Peter A. Ubel. 2013. “The Hazards of Correcting Myths about Health Care Reform.” Medical Care 51 (2): 127–132.

- Ojala, Markus. 2021. “Is the Age of Impartial Journalism over? The Neutrality Principle and Audience (Dis)Trust in Mainstream News.” Journalism Studies 22 (15): 2042–2060.

- Owen, Taylor, Peter Loewen, Derek Ruths, Aengus Bridgman, Robert Gorwa, Stephanie MacLellan, Eric Merkley, Andrew Potter, Beata Skazinetsky, and Oleg Zhilin. 2019. “Digital Democracy Project, Research Memo #5: Fact-Checking, Blackface and the Media.” Public Policy Forum, Max Bell School of Public Policy. ppforum.ca.

- Pingree, Raymond J. 2011. “Effects of Unresolved Factual Disputes in the News on Epistemic Political Efficacy.” Journal of Communication 61 (1): 22–47. https://doi.org/10.1111/j.1460-2466.2010.01525.x.

- Pingree, Raymond J., Dominique Brossard, and Douglas M. McLeod. 2014. “Effects of Journalistic Adjudication on Factual Beliefs, News Evaluations, Information Seeking, and Epistemic Political Efficacy.” Mass Communication and Society 17 (5): 615–638.

- Pingree, Raymond J., Megan Hill, and Douglas M. McLeod. 2013. “Distinguishing Effects of Game Framing and Journalistic Adjudication on Cynicism and Epistemic Political Efficacy.” Communication Research 40 (2): 193–214. https://doi.org/10.1177/0093650212439205

- Pingree, Raymond J., Brian Watson, Mingxiao Sui, Kathleen Searles, Nathan P. Kalmoe, Joshua P. Darr, Martina Santia, and Kirill Bryanov. 2018. “Checking Facts and Fighting Back: Why Journalists Should Defend Their Profession.” PLoS One 13 (12): e0208600. https://doi.org/10.1371/journal.pone.0208600

- Robinson, Sue. 2019. “Crisis of Shared Public Discourses: Journalism and How It All Begins and Ends with Trust.” Journalism 20 (1): 56–59. https://doi.org/10.1177/1464884918808958

- Schudson, Michael. 2008. Why Democracies Need an Unlovable Press. Cambridge: Polity Press.

- Stencel, Mark, and Joel Luther. 2019. “Reporters’ Lab Fact-Checking Tally Tops 200.” Duke Reporters’ Lab. https://reporterslab.org/reporters-lab-fact-checking-tally-tops-200/.

- Strömbäck, Jesper, Yariv Tsfati, Hajo Boomgaarden, Alyt Damstra, Elina Lindgren, Rens Vliegenthart, and Torun Lindholm. 2020. “News Media Trust and Its Impact on Media Use: Toward a Framework for Future Research.” Annals of the International Communication Association 44 (2): 139–156.

- Swire-Thompson, Briony, Joseph DeGutis, and David Lazer. 2020. “Searching for the Backfire Effect: Measurement and Design Considerations.” Journal of Applied Research in Memory and Cognition 9 (3): 286–299. https://doi.org/10.1016/j.jarmac.2020.06.006

- Tsfati, Yariv, and Gal Ariely. 2014. “Individual and Contextual Correlates of Trust in Media across 44 Countries.” Communication Research 41 (6): 760–782. https://doi.org/10.1177/0093650213485972

- van Dalen, Arjen. 2019. “Journalism, Trust, and Credibility.” In Handbook of Journalism Studies, edited by K. Wahl-Jorgensen and T. Hanitzsch, 356–371. London: Routledge.

- Van Duyn, Emily, and Jessica Collier. 2019. “Priming and Fake News: The Effects of Elite Discourse on Evaluations of News Media.” Mass Communication and Society 22 (1): 29–48. https://doi.org/10.1080/15205436.2018.1511807

- Walter, Nathan, Jonathan Cohen, R. Lance Holbert, and Yasmin Morag. 2020. “Fact-Checking: A Meta-Analysis of What Works and for Whom.” Political Communication 37 (3): 350–375.

- Wintersieck, Amanda L. 2017. “Debating the Truth: The Impact of Fact-Checking during Electoral Debates.” American Politics Research 45 (2): 304–331. https://doi.org/10.1177/1532673X16686555

- Wood, Thomas, and Ethan Porter. 2019. “The Elusive Backfire Effect: Mass Attitudes’ Steadfast Factual Adherence.” Political Behavior 41 (1): 135–163.

Appendix A

The two identically worded news articles used for the survey experiment are below. One is attributed to the commercial news outlet news.com.au, and the other to the public broadcaster, abc.net.au. Both are formatted to mimic the real-life websites.