Abstract

Platform companies play an important role in the production and distribution of news. This article analyses this role and questions of control, dependence and autonomy in the light of the ‘AI goldrush’ in the news. I argue that the introduction of AI in the news risks shifting even more control to platform companies and increasing the news industry’s dependence on them. While platform companies’ power over news organisations has to date mainly flowed from their control over the channels of distribution, AI potentially allows them to extend this control to the means of production as the technology increasingly permeates all stages of the news-making process. As a result, news organisations risk becoming even more tethered to platform companies in the long run, potentially limiting their autonomy and, by extension, contributing to a restructuring of the public arena as news organisations are re-shaped according to the logics of platform businesses. I conclude by mapping a research agenda that highlights potential implications and spells out areas in need of further exploration.

Introduction

Platform companies such as Google, Amazon, and Microsoft have become powerful actors in the news. They also lead the development and application of artificial intelligence, a field many news organisations look to in hopes of buttressing journalistic work, increasing efficiency, and improving profitability. Platform companies play an important role in news organisations’ processes: as providers of AI services, tools, and infrastructures, or as partners in research and development. Yet, while platform companies dominate in the field of AI, a majority of news organisations lack almost all of the resources that give platform companies their advantage in this area. The result is that news organisations often have to rely on platform companies’ AI solutions, services, and infrastructures. This poses an interesting problem for the news industry: already dependent on platform companies in many ways, will its push to adopt AI increase its dependency on these companies? This emerging challenge is, to date, poorly understood, yet it has implications for the future of news.

This article analyses the constitutive role platform companies play in journalism’s adoption and use of AI and re-examines questions of control, dependence and autonomy in the light of the ‘AI goldrush’ in the news. Focusing on news organisations in the US and Europe, I argue that the introduction of AI in the news increases the potential for so-called ‘infrastructure capture’ and the risk of shifting even more control to platform companies, thus increasing the news industry’s dependence on the same, with the potential to undermine the news’ autonomy and reshape the public arena. While the power exercised by platform companies over the news has to date mainly flowed from their control over the channels of communication and distribution, AI allows them to extend this control as the technology increasingly permeates all stages of the gatekeeping process, from news gathering, to production, to distribution. As such, platform companies increasingly control both the means of production and connection in the news. With the complexity and resource-intensiveness of AI creating lock-in effects, news organisations will likely become even more tethered to platform companies in the long run, thus potentially limiting their autonomy and, by extension, leading to a restructuring of the public arena, as news organisations, the main gatekeepers to the same, are re-shaped according to the logics of platform businesses.

After clarifying key terms, this article provides an overview of AI’s rise in the news, the role of major technology companies in this development, and how it potentially increases their control over the news. Next, I elaborate on potential issues as well as consequences of the same for the autonomy of the news. I conclude by mapping a research agenda that thinks through potential implications and spells out areas in need of exploration to allow us to make sense of the larger environment and dynamics that shape how AI is being adopted in the news.

AI, Platform Companies, and the News

Defining Platform Companies and Artificial Intelligence

As Gorwa notes, ‘platform’ as of late has been adopted as a ‘shorthand both for the services provided by many technology companies, as well as the companies themselves’ (Gorwa 2019, 856). Here, I use ‘platform-based businesses’ (Srnicek 2016) or ‘platform companies’ to refer to the dominant US technology giants, because these terms emphasise the companies, thus allowing us to consider more than just the platforms and services they provide, namely other innate features of any firm, for example human capital and financial resources. While these terms also cover smaller platform companies such as eBay or Uber, this article focuses on the dominant US-based platform companies Alphabet/Google, Facebook (now Meta), Microsoft, Apple, and Amazon (more commonly known as GAFAM).

Defining artificial intelligence is equally thorny. For this article I will rely on a broad definition of AI as the activity of computationally simulating human activities and skills in narrowly defined domains, most commonly through the application of machine learning approaches, a subfield of AI, in which machines learn from data or their own performance (Mitchell 2019, 8). This ‘learning’ is an important feature: outcomes are ‘learned’ and iteratively optimised from (new) data or past performance, thus (ideally) improving the system’s quality and efficiency at certain tasks over time. Specific ethical concerns and debates about artificial intelligence are beyond the scope of this article, but good overviews are provided by Mitchell (2019) and Broussard (2018).

What is AI in the News?

In a news context, the most commonly used types of AI are Machine Learning and forms of Natural Language Processing, although it should be noted that these are not the only variants of AI in use (for an overview see e.g., Beckett 2019; Marconi 2020). Widely seen as promising greater efficiency, profits, and the solution of hitherto unsolved problems, AI is increasingly deployed in news as part of a wider historical trend of ‘computational journalism’ (Thurman 2019) and in response to an algorithmic, data-driven logic increasingly governing the media landscape and society at large (Mau 2019). In this context, AI forms one of the frontiers of longer-standing trends of increasing rationalisation and quantification in the news industry (Napoli 2010) and moves towards more automation as well as innovation (Creech and Nadler 2018), all of which increasingly come, among other reasons, in response to a rapidly changing media landscape (Schroeder 2018) and growing business pressures (Newman 2019; 2021). These dynamics can be compounded by extensive promotion of AI from the technology sector and uncertainty around the future and the potential of such new technologies, which often tends to reinforce tendencies whereby managers follow trends or industry hype (Lowrey 2011), which ironically in the case of AI is often also fanned by exaggerated news coverage (Brennen, Howard, and Nielsen 2019; 2020). Nevertheless, it should not be forgotten that AI is beneficial in many ways and has already had a positive impact on the news, as multiple case studies and industry reports suggest. As such, the news industry’s adoption of AI is not just a result of hype or excessive promotion.

Looking at examples, AI has been or can be employed at various points of the gatekeeping process in the news, that is, the socio-technical process in news organisations that determines what and how information is gathered, evaluated, edited, and shared as news (Shoemaker and Reese 2013). Table 1 provides a simplified overview of where AI is used by news organisations at the time of writing.

Table 1. AI use cases along the gatekeeping process, drawing on Beckett (2019) and Marconi (2020).

Intersections: The Role of Platform Companies in AI in the News

Platform businesses have long been central actors in the news (see e.g., Nielsen 2018, 21–23) and a plethora of scholars have studied their effect on news and journalism and their relationship with the news industry (for an overview see e.g., Steensen and Westlund 2020). In a seminal study, Nielsen and Ganter (2018) showed how news organisations’ relationships with platform companies are often marked by a tension between a willingness to cooperate and an uneasiness about the same for fear of becoming too dependent on the latter, a finding that has since been confirmed in various other studies (see e.g., Chua and Westlund 2021; Chua and Duffy 2019). A particular focus of researchers has been the effects of news media’s dependency on platform companies (e.g., Lindén 2020; Rashidian et al. 2019; Ananny 2018) and the role of platforms in re-shaping the public arena and news organisations’ role as gatekeepers of the same (Jungherr and Schroeder 2021). Today platforms are important gateways to news for audiences (Newman et al. 2020, 23), with search engines and social media increasing news media’s reach and traffic and shaping the flow of attention online (Diakopoulos 2019, 179; Nielsen 2018). They have also become service providers to the news industry (Fanta and Dachwitz 2020; Nechushtai 2018) and fund journalism projects and research. A simplified overview of the services offered by platform companies to the news industry is provided in Table 2.

Table 2. Typology of the overlap between platform companies’ services and news organisations’ needs and uses of the same. Please note that there is often no clear delineation between e.g., hardware and software services.

While AI is involved at the back-end in many platform services (e.g., in the algorithms and models creating and presenting news feeds or search results relied upon by journalists) and implicitly affects the work of news organisations, growing evidence shows how platform companies are involved more directly in the development and use of artificial intelligence in the news industry at all stages of the gatekeeping process through several other channels outlined in Table 2. These include the provision of essential infrastructure (e.g., cloud computing and storage), access to AI models (e.g., Google BERT) or stand-alone software, but also funding for AI-related work and innovation projects. A non-exhaustive list of examples can be found in the appendix.

Theorising Dependency, Control, and News Autonomy in an Age of AI and Technology Behemoths

Platform companies play an increasingly important role in the development and use of AI in the news industry through a variety of channels. This raises the question of the consequences of this development for the news. A likely outcome is a shift of control to platform companies and an increase in the news industry’s dependence on the same through, among other things, ‘infrastructure capture’, which in turn holds the potential to constrain journalism’s autonomy. The second part of this article will spell out how and why such a shift in control and dependency might arise, how it differs from existing forms of dependency of the news on platform businesses, and why it matters with a view to the autonomy of journalism. I will begin by showing how the structural advantages platform companies have built up have translated into their dominance in AI, before explaining how these advantages, combined with news organisations’ structural weaknesses, afford platform companies control over parts of how AI is used in the news. In a third step, I tackle the question of what this control does to the autonomy of the news, before thinking through potential implications as part of a proposal for a wider research agenda.

Structural Advantages, Strategies of Dominance, and Big Tech’s AI Superiority

Following their successful disruption of and rise to dominance in their respective core markets (for a detailed discussion see e.g., Barwise and Watkins (2018) and Srnicek (2016)), platform companies have made sustained high profits which have been re-invested into a) strengthening and protecting their respective core business and b) entering new markets which either promise to help with the former, or where these companies can use their existing assets (technology, infrastructure, brand, etc.) to their advantage at a minimum extra cost (Barwise and Watkins 2018, 24). Over time, this has resulted in the build-up of large infrastructures (defined here as the physical, digital, human, and organisational structures and facilities needed for the operation of a service or enterprise) which allow them to run their digital businesses. In addition, it has given them a leading position in the collection and processing of vast amounts of (among other things) user data. These infrastructures include physical components (e.g., data warehouses, fibreoptic cables, and server and computing facilities), digital components (e.g., software, operating systems, algorithms), humans (in the form of skilled labour) and organisational structures which help to maintain and develop these infrastructures (e.g., Research & Development departments). The interlocking expansion of hardware, network infrastructure, and software has allowed platform companies to become more efficient in the process of scaling up (Hindman 2018, 22), in what economists call increasing returns to scale. This in turn is directly linked to further cementing the dominant position of these firms, as over time, continuous improvement of the access to and processing of data can give these companies a ‘strategic advantage in service quality, customization, message targeting, and cost reduction […with] quantity driv[ing] quality’ (Barwise and Watkins 2018, 29), while also acting as a significant entry barrier for others.

In recent years, these companies have, through research and strategic acquisitions, also steadily expanded their capabilities in the field of artificial intelligence. Not only does the technology complement core businesses, it also offers further cost and revenue economies of scale, scope, and learning (Barwise and Watkins 2018, 28). The structural advantages described above have proven to be pivotal in this process, allowing the ‘Big Five’ to become leaders in these fields in the Western world. The existing infrastructure with access to vast amounts of storage, computing power and the ability to extract diverse data at scale has given them a first-mover advantage in AI and gives them a leading edge in the ongoing research and application of AI, as these factors are key prerequisites. At the same time, the business success deriving from their dominant positions in their respective markets has allowed the ‘Big Five’ to build up huge financial resources. These have been used to invest in high-risk internal and external AI research, to acquire and integrate promising AI companies and products, and to attract and retain skilled workers and leading talents in the field (J. P. Simon 2019; Metz 2017), with the strong incentives for skilled labour to join these firms leading to intense competition for and a shortage of talent elsewhere, with potentially negative effects on the capacity of other actors in the AI space to innovate.

These reinforcing structural advantages have not only allowed platform companies to become dominant in AI research and provision; on the flipside they make it difficult for many news organisations to develop AI without having to rely on tools and infrastructure provided and maintained by these companies. A first issue for news organisations is financial costs. The financial outlook for most of the news industry internationally remains difficult. This makes it risky to divert resources to innovation around AI inasmuch as many organisations face other pressing concerns that require their resources and attention. Innovation also requires sunk cost investments, that is, costs incurred which cannot be recovered. Actors’ decisions are shaped by this knowledge and a preference for loss avoidance which usually increases as uncertainty rises. Both set incentives to rely on existing products rather than developing them independently, as these can be faster and easier to implement, can have lower maintenance requirements thus lowering costs, or can be the only option where structural disadvantages make it impossible to independently achieve similar results (or to achieve results at all). A second problem related to the ‘cost’ of AI is that competing for skilled talent is difficult, given the attractive conditions platform companies can offer, a problem the news industry is well aware of. As Newman (2020) reports, only 24% of the surveyed news executives were confident that they could attract or retain staff in ‘Technology’ and ‘Data analysis or data science’ roles, with the key reasons given being that major technology companies can offer higher salaries and more job security to top talent. A third limitation for news organisations is the availability of computing power, access to AI models, and sufficient high-quality data, all of which can act as barriers to adoption (Diakopoulos 2019). While many news organisations themselves collect user data (among other things) which can be used for specific AI solutions, data availability remains a key barrier. Relatedly, the infrastructure (in terms of computing resources and server capabilities) and processing routines are complex and costly to build and maintain independently. To sum up: the building and deployment of successful AI applications is, to quote Hindman, an ‘iterative and accretive process’ which benefits from diverse and large teams, computing power, and data access (Hindman 2018, 49). Developing AI is easier for very large, well-funded, well-staffed, and well-infrastructured technology companies, something that many news organisations, arguably, are not.

From Structural Advantages to Control over the News: Control, Media-, and Infrastructure Capture

What is the mechanism by which these conditions lead to a shift in control and increasing dependence of the news? A fruitful conceptual lens to arrive at first answers and hypotheses for further research is provided by the concept of ‘media capture’ and, more specifically, Efrat Nechushtai’s (2018) specification of the same, ‘infrastructure capture’. Both offer ways to understand how actors external to the news industry, such as platform companies, might be able to control it, and what implications this control might have.

What I understand control to be in this context requires some further explanation. Beniger (1986) states that ‘control is purposive influence towards a predetermined goal’, where one agent A (in practice e.g., an individual, state, or organisation) has influence or power over another agent B (here e.g., a news organisation), meaning that the former can cause changes in the actions or condition of the latter according to some prior goal.

Building on this, in my reading control can be broadly defined as the ability of an agent A to effect actions (A causes B to do something) and/or modulate actions (A shapes B’s actions) and shape the possibility to act (B can/cannot act without A) of another agent B. This is complemented by A’s ability to change the conditions or the environment within which B acts (B can act without A, but only within constraints defined by A). To put it differently: exerting control means effecting actions, and/or ordering, shaping, or limiting another agent’s capability to act and defining the framework of their possible actions. The channel or mechanism of control can be either direct (A has direct control of B’s actions) or indirect (A has control over the environment within which B’s actions happen, thus having some control over B’s actions).

I use control here as the term encompasses the entire range from absolute and direct effects (B changes/acts directly in response to A, and exactly as intended by A) to weaker and more probabilistic forms, that is, any purposive influence, however slight (B might change in response to A, and not necessarily as intended). With a view to the following sections we can take this further and already point out how control is linked to the concept of autonomy: true (hypothetical) autonomy is marked by the absence of external control. In other words, autonomous agents are free from the influence of other agents in both their actions and conditions and able to act and make decisions according to their own logic (see also Karppinen and Moe 2016; Lazzarato 2004).

A situation where news organisations are under control by external actors and have lost some (or all) autonomy is often described as media capture. While definitions for media capture vary (for an overview see e.g., Schiffrin 2021; Nielsen 2017a) we can broadly define it here as a news organisation being ‘captured’ by another agent (e.g., a government or a business) and subjected to their interests, thus losing some or all of its autonomy, especially in relation to the capturing agent. In practice, media capture limits the news organisation’s ability to scrutinise or critique the capturing agent (either because it is prevented outright or because the news organisation has lost its capacity to do the same effectively). Capture here can be said to equal control: the capturing agent has influence over the condition and/or actions of the news organisation and can use this control to their advantage. This control can be exerted through various channels or mechanisms and can be either implicit or explicit. Various scholars have documented different forms and channels of media capture: capture by states through funding or regulatory means (Dragomir and Söderström 2021), capture through sources who influence the selection and framing of coverage (Gans 1979; Tuchman 1978), or cognitive capture when journalists’ views become aligned with the logic of the area they cover (Schiffrin 2015).

Most relevant in the context of this article, however, is Nechushtai’s (2018) concept of ‘infrastructure capture’: the creation of ‘circumstances in which a scrutinizing body [the news media] is incapable of operating sustainably without the physical or digital resources and services provided by the businesses it oversees and is therefore dependent on them [the platform companies]’ (Nechushtai 2018). Nechushtai’s specification is a conceptual melange of infrastructural approaches, which consider how material elements (e.g., tools, services, hardware, software) constrain, enable, and shape the socio-technical conditions of news work (see also Plantin and Punathambekar 2019), with the concept of media capture. She argues that infrastructure capture can happen simultaneously at different stages in the gatekeeping process and ultimately impact coverage or news production and distribution more broadly, for instance by driving it to ‘comply with the logics, norms, or business strategies of external platforms’ (2018, 1052). While Nechushtai does not explicitly specify it, infrastructure capture is best understood as happening on a spectrum, with various levels of capture or control over different parts of the news enterprise or the gatekeeping process possible.

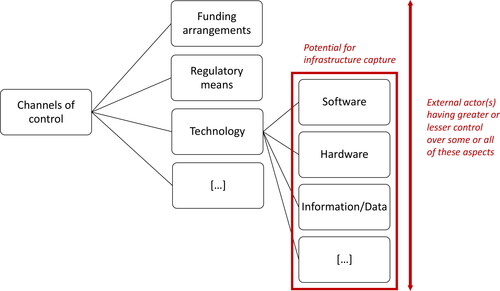

The advantage of this infrastructural lens is that it also allows us to grasp more implicit and often less apparent forms of capture and control (see Figure 1), such as control exerted through technological arrangements, instead of only focusing on very obvious forms of control (e.g., through funding arrangements or regulatory means) which are more prominent in parts of the literature (see e.g., Dragomir and Söderström 2021). At the same time, it allows us to ask questions about the locus and nature of control: in other words, who gets to control the infrastructure in which ways and thus has a (stronger or weaker) influence on the production and distribution of news. The disadvantage is that, as a concept, it remains relatively vague and does not go into greater detail when it comes to channels of control or capture (where and how control can happen), as well as potential outcomes (what control through infrastructure might be able to achieve).

Infrastructure Capture through AI in the News and Its Implications for News Autonomy

Do we see signs of infrastructure capture and thus greater dependence on and potential for control by platform companies in the case of AI? And what are other channels through which platform companies can exert control when it comes to AI in the news? Starting with the latter, we can use the typology of the overlap between platform businesses and the news industry (see Table 2) and briefly say that in these areas we mainly see a continuation or extension of existing channels of control. Funding events, research or collaborations are well-established activities on the part of platform companies with respect to the news. Likewise, platform companies have long been active in the regulatory space, with concerted lobbying efforts attempting to affect regulations in their favour, most recently in the US, the European Union, or Australia. These all now include a focus on artificial intelligence, and news organisations will be affected directly or indirectly by many of these activities, as other research has demonstrated at length.

Where platforms’ AI activities, however, constitute a meaningful difference to existing forms of dependency between the two and potentially increase the control platform companies can exert over news organisations is in the realm of infrastructure (see Table 3). As AI increasingly permeates all stages of the gatekeeping process, from news gathering, to production, to distribution (see Table 1 and appendix), platform companies no longer have only ‘relational power’ in that they mainly ‘control the means of connection’ (especially to audiences) as Nielsen has argued (2020). On the contrary, by providing both infrastructure (services) and tools for AI across all stages and sides of the news enterprise (see Table 2 and earlier examples), they increasingly control both the means of production and connection. Importantly, they do so through a technology for which various structural factors have both created high barriers to entry and afforded them market dominance, making it difficult for news organisations to avoid or compete with them in this space, let alone operate artificial intelligence applications without relying on their hardware, software, or data. From this perspective, a core aspect of Nechushtai’s definition of ‘infrastructure capture’ is fulfilled: news organisations are strongly dependent on the physical and digital resources and services of platform companies in the case of AI, potentially yielding them greater control over the conditions under which news work takes place. The complexity behind AI creates lock-in effects which risk keeping news organisations tethered to the platform companies and their products.

Table 3. Vectors for infrastructure capture of news organisations around AI.

What could be the implications of increasing control of the news through infrastructure capture in AI? While an exhaustive analysis is beyond the scope of this article and needs to be addressed through further research, we can gesture to what is potentially at stake (see Table 3).

First, infrastructure capture could lead to vendor lock-in where news organisations become structurally dependent on AI infrastructure provided by platform companies and are unable to use another vendor without substantial switching costs. AI poses a high risk of vendor lock-in effects (Lins et al. 2021), as there are, for example, no standards for exporting the results of machine ‘learning’, that is, the improvements made by training a model with one’s data. This increases switching costs. While it might be argued that news organisations use tools and ‘off the shelf’ solutions by major technology companies all the time (for example Microsoft’s Office Solutions) and that any AI provided by the same constitutes just ‘another tool’ in the box, such an argument fails to consider that while it might be easy to swap individual applications for which alternatives exist or can easily be developed, it is much harder to do the same for AI, given the complexity of the technology and the resources required to create and maintain it. This could result in a situation where news organisations are forced to accept the pricing power of the platforms and to go along with any price increases (or, in a hypothetical scenario, are vulnerable to an abuse of pricing power).

Second, platforms possess artefactual and contractual control over their infrastructure and services. The infrastructure and software structure actions, enabling certain activities and restricting others. At the same time, users contract with the platform business by agreeing to their terms and conditions (Kenney, Bearson, and Zysman 2021). In practice, this could not only mean that platforms could control which AI applications news organisations can or cannot build, it would also give them control over the conditions of use, the level of customisability, and the interoperability of any solutions.

Third, artefactual control also means that platforms can change the infrastructure at will, without the customers having the ability to intervene or resist. This could expose news organisations to the risk that AI solutions, once implemented at various stages, are subject to unforeseen changes, stop functioning, or can no longer be accessed (or, again, in a hypothetical scenario, are vulnerable to abuse).

Fourth, as an often inscrutable technology (Wachter, Mittelstadt, and Russell 2018), AI provided by platform companies could make it even more difficult for news organisations to understand why certain decisions or predictions are being made, forcing them to rely on platform companies themselves around questions of accuracy or fairness in any results.

Fifth, by controlling a technology that is increasingly embedded at various points in news organisations, platform companies could gain a deeper understanding of their workings (which they could use to their advantage). They could also potentially get access to sensitive data, thus limiting, for example, news organisations’ ability to protect sources or proprietary business information.

Taken together, the overarching and most critical implication is that these incremental shifts in the locus of control potentially matter cumulatively for the autonomy of the news. Autonomy is a normative ideal that is seen as constitutive to journalism. Journalism and the news as a societal institution as well as individual organisations and journalists should be free from any undue influence from other societal institutions and actors (Örnebring and Karlsson 2019). Without sufficient autonomy, the news cannot provide a public arena for information exchange, debate and deliberation, and cannot fulfil ‘its duty of informing the citizenry, free from partisan bias and other corrupting influences’ (McDevitt 2003, 158), nor can it fulfil a watchdog function.

Many news media in the US and parts of Europe had developed into largely independent, autonomous institutions over the course of the 20th century, deriving much of their power to shape political, cultural, and economic spheres from their control over channels of communication (Schroeder 2018; Thompson 2001), although their autonomy has always been hemmed in by various economic and political forces (Nielsen 2017a). This picture changed further with the rise of platform companies, who started to dominate the market for scarce attention (Taylor 2014) and emerged as a main gateway to audiences, which has afforded them growing control over news organisations. This control has been used to drive particular content formats such as video (Kalogeropoulos and Nielsen 2018), or to make certain tools and data indispensable to publishers seeking to extend or optimise their audiences. Unannounced changes to their algorithms or products have had dramatic consequences for some publishers (Diakopoulos 2019). Nielsen and Ganter demonstrate how this has made them ‘uneasy bedfellows’ (my term) for news organisations who see ‘their collaboration with platforms […] as accompanied by significant strategic risk of losing control’ (2018, 1614).

From a theoretical point of view, the increasing dependence on platform companies brought about by AI—as applied to various points of the news infrastructure—then is a continuation of these developments and, at least in theory, means a further constraint on the news’ autonomy. While it is questionable if the news at large or individual organisations will reach a point where they would be ‘incapable of operating sustainably’—as Nechushtai’s model would have it—without the physical or digital resources and services of AI provided by platform businesses, it cannot be denied that both on an institutional and individual level the control of these systems largely lies outside of news organisations, thus removing a part of their autonomy.

On a macro or institutional level, this increasing takeover of critical infrastructure and reliance on outside services and tools not only creates a stronger dependency on providers who have their own motives, but also produces asymmetrical power relations. These providers could potentially use such asymmetries to assert their interests, which, in a worst-case scenario, might reduce critical coverage of these actors (although this is a hypothesis that needs to be tested).

It could also lead to a loss of autonomy inasmuch as it pushes the news as a whole even more strongly towards the values and logics of platform companies encoded into these systems, such as greater quantification or commercialisation, which might be in conflict with values that have traditionally motivated them (Diakopoulos 2019; Mau 2019; Jungherr and Schroeder 2021). Examples of this are plentiful at the distribution stage of the gatekeeping process. The aforementioned push to video ultimately created financial difficulties for some publishers when Facebook changed its priorities. Likewise, following platforms’ push to adopt social media for distribution and audience engagement has strained some news organisations’ relationships with their core audiences or has tied up resources that could have been invested in, for example, deeper coverage (Posetti, Simon, and Shabbir 2019). Platform companies have already wrested control and autonomy from the news as they have taken over the dominant revenue model (advertising) and as news organisations have adopted and submitted to the logics of their platforms, their distribution systems, and ways of measuring and targeting audiences. As the news industry is embracing (or being forced to embrace) technologically more powerful ways of quantifying audiences through AI and embedding a data-driven logic in news work (Christin 2020), both of which were not part of the news industry before, we might be witnessing a further loss of control and autonomy as the news’ modus operandi shifts.

Questions of autonomy, however, also play out at the first two stages of the gatekeeping process, information selection and production, and on an individual level, with journalists placing considerable value on their autonomy as part of their professional identity. While the discussion here often focuses on their autonomy from owners, commercial goals or dominant discourses and values, technological angles can be considered, too. In this context, again, AI provided by platform businesses potentially removes autonomy by limiting the discretionary decision-making ability of journalists and their ability to scrutinise the workings of these systems, for instance when it comes to the identification of topics and trends, the production and composition of stories, or the placement and ranking of content within various news products (Örnebring and Karlsson 2019). Examples here are journalists’ use of search and translation or transcription tools provided by platforms which are powered by AI and may end up shaping their work and practices in unintended ways. Likewise, consider a hypothetical scenario where a system such as Google’s ‘Pinpoint’ is unable to detect certain names in large document sets because it was not trained to do so, thus impeding investigative reporting. And again, as in the previous examples for the distribution stage, there is a potential loss of autonomy when certain values or ways of seeing the world encoded into these systems conflict with those of journalists, regardless of whether this is obvious to journalists right away or not. While beyond the scope of this article, more research will be needed to establish where exactly along the gatekeeping process infrastructure capture through AI comes to bear most.

Yet, despite these claims that the reliance of the news on AI as provided by platform businesses leads to a loss of autonomy, the overall argument is not without problems. For one, it remains a matter of debate what should be considered undue influence and whether this condition is met in this context, both normatively and empirically. The second issue is that, from an empirical point of view, it has, of course, long been clear that journalism is never fully autonomous, with media having more or less independence in the context of different political and economic systems and depending on their position and role within a given (media) system itself (Schroeder 2018, 11). Every analysis of the political economy of news has to ask what the effects of any development such as the one described herein are on the content (which is after all the ‘product’ of the news) and on news media’s position as watchdogs and providers of ‘relatively accurate, accessible, diverse, relevant, and timely independently produced information about public affairs’ (Nielsen 2017b). One could make the argument that it is far too early to arrive at a definitive conclusion as to whether the loss of control and increasing dependence of the news industry on platform companies in the space of AI constitutes an actual or merely a perceived loss of autonomy as identified in the hypothetical cases above. To put this differently: Even if the news is (or already has been) captured through AI, it is currently hard to say whether this has impeded or will impede its ability to inform the citizenry free from bias or limit its ability to scrutinise these companies.

Towards a Research Agenda for the Role of Platform Companies in AI in the News

This article argues that platform businesses play an increasingly central role in AI in the news, with various structural factors enabling a growing and new form of control over the news through the control of critical AI infrastructure. Yet, a central weakness of this article is that it remains theoretical in nature and is founded on evidence that is often cursory and patchy, a problem that plagues the broader debate around the interrelationship of platform companies and the news industry, an area that is often difficult to research and where different factions have predispositions towards certain analytical outcomes. Many open questions remain, and only careful empirical examination will ultimately allow us to see the value of the argument presented herein. To aid this process, I suggest that six issues require more careful scrutiny.

First, we need a better understanding of our blind spots which includes a systematic overview of the involved actors and their respective activities. For one, the exact scale and depth of platform companies’ role are unclear. Questions such as which tools and services have been developed (or are in development) by whom, and which news organisations have purchased these or use them (and where) are all in search of answers. It should also be noted here that the focus of this article has been uniquely EU/US-centric and concentrated on large organisations, leaving aside important developments in other parts of the world and smaller regional and local outlets. Relatedly, we should pay greater attention to activities in the AI space that eschew the categorical buckets of ‘product’, ‘service’, or ‘infrastructure’ such as training sessions organised by platform companies around AI for news organisations, funding provided for research or industry events which deal with these topics, as well as collaborations between news organisations and platform companies in this space. Infrastructure capture is a piece, not the whole puzzle.

Second, we should aim for a better understanding of the actual (or intended) use of the AI tools, services, and infrastructures offered by major technology companies and how they come to matter within news organisations, as well as within which news organisations. A first question is which organisations will be more affected than others, and for which reasons. One hypothesis is that the size and type of company will mean different levels of exposure and thus different levels of risk of infrastructure capture. While, for example, large and financially stronger news organisations such as The New York Times or the BBC can afford to develop their own AI-driven tools, smaller national, regional, or local media companies will likely be more reliant on off-the-shelf tools and solutions provided by platform companies, a tendency that will likely also be exacerbated in contexts outside of the Global North. A second question is in which parts of the gatekeeping process (Domingo 2008) and in which departments within news organisations AI as provided by platform companies will come to matter most. Cursory evidence points to strong use in and around fact-checking, information assessment, content moderation, and distribution, but the overall picture remains patchy. Similarly, do these offerings predominantly act in complementary or standalone fashion? And how are they integrated into existing and new working routines and to what effect, both within and beyond the organisation?

Third, we should pay greater attention to the conditions of use as well as the issues and problems arising around it. For one, we need to ask about newsworkers’ experiences with these tools and services, and the benefits, issues, and affordances of the technology in use (Lewis, Guzman, and Schmidt 2019; Nagy and Neff 2015), not least with a view to the potential effect on journalists’ individual autonomy. At the same time, we have to consider the ‘Terms of Service’. One example is provided here by the aforementioned use of Google Jigsaw’s Perspective API by The New York Times. As the newspaper states publicly, the deal entailed that Jigsaw would receive ‘The Times’s anonymized comments data’ in return (Etim 2017), with a similar deal struck with Spanish news outlet EL PAÍS which, arguably, held even greater importance for Google given that it marked the launch of Perspective in Spanish. The announcement implicitly acknowledges this: ‘The process for training Perspective to work in new languages […] requires substantial data sets—in this case, lots of public online comments in Spanish’ (Pellat and Georgiou 2021). In both cases, Google not only received access to these outlets’ data but did so in a way that likely benefitted its own services in other domains, too, thus reinforcing its advantage in this space. Finally, questions in this context also entail issues of transparency, bias, and ‘black boxes’, that is, whether journalists and news organisations can actively scrutinise and understand why certain outcomes are produced rather than others. Likewise, the question of privacy and data protection cannot be ignored, with a dependence on these systems and their infrastructure potentially exposing news organisations and journalists to surveillance of their work, which could pose risks for them and their sources (Nechushtai 2018, 1054).

Fourth is the question of motives. While select surveys and interviews (e.g., Beckett 2019; Newman 2019, 2021) indicate why news organisations are showing a general interest in AI, it is so far much less clear when and why the products and services of platform companies are relied upon in this space. At the same time, the intentions of platform companies around AI in the news call for greater attention. An obvious explanation is that we are simply witnessing the extension of existing activities: Platform companies want access to content, the ability to collect data, and control over user experiences and products (Nielsen 2020), all of which can be achieved by becoming more deeply enmeshed in the news through AI. At the same time, platform businesses are interested in protecting their dominant positions, for which controlling the future of AI will be a key factor. It may well be that news organisations are merely an afterthought in these considerations, but for now the evidence is insufficient to make a strong claim either way.

Fifth, we should ask what kind of regulatory or policy interventions might be necessary to address the possible dependencies I have described or to prevent them from arising in the first place. Recent policy initiatives such as Australia’s News Media Bargaining Code aim to level information asymmetries between news organisations and platforms and to redistribute resources, regulation that could have implications in this context, too (although the Code has been widely criticised for a variety of reasons, foremost that it primarily benefitted larger Australian news outlets and neglected smaller ones with less bargaining power) (Xu 2021). Meanwhile, the European Union’s Digital Services Act (DSA) and the Digital Markets Act (DMA) have received much attention in this regard, with the DSA introducing transparency obligations around issues such as terms of use (Nosák 2021), as well as various provisions on auditing and data access for public interest research which could be useful for news professionals in scrutinising the AI they are working with. Towards this end, however, news professionals would have to be included in the scope as public interest researchers. Campaigners have also called for the introduction of interoperability requirements for core platform services in acts like the DSA and DMA, which could help in enabling and strengthening competition in this space (E. Simon 2021). Yet, as things stand, the DSA primarily addresses distribution power and online intermediary services’ responsibility for user-generated content, not regulating AI or platform companies as such. The DMA focuses largely on the relatively narrow category of ‘core platform services’ and seems, again, focused on issues to do with distribution power. Hence, it is questionable how effective these instruments can be in addressing the potential for infrastructure capture described in this article, particularly around non-distributional forms of control.
It remains to be seen what the EU’s proposed AI Act—which attempts to comprehensively regulate AI systems and their application—can achieve in this regard.

Sixth, the question of possible wider outcomes arises. For the news industry, the central frame here will likely be around various levels of dependency and autonomy as already argued in the previous section. Relatedly, there is also the risk of a skills trap as the ability to build in-house expertise might be reduced if AI is externalised to ‘big tech’, with a loss of skills further widening the gap and cementing an already asymmetrical relationship.

Scholars also need to consider the wider implications for the public arena, the common but contested mediated space vital for democracy, where different actors exchange information and in which public deliberation can and does happen, access to which is still largely controlled by the media (Jungherr, Rivero, and Gayo-Avello 2020; Schroeder 2018). As Jungherr, Rivero, and Gayo-Avello (2020) have argued, a major drawback of the increasingly central position of platform companies in the information ecosystem is the fact that news organisations risk losing influence and knowledge over central elements of the same, which potentially weakens their position as important gatekeepers to the public arena. If platform companies are able to extend their control through AI from the distribution of information and news into the production of the same, this may end up calcifying such tendencies. We should also consider a potential restructuring of the public arena, not just through the use of AI in the news in general, but as various outlets will potentially be strengthened or weakened through their reliance on AI provided by ‘big tech’. If the history of technological innovation is any guide, AI will not topple the existing socio-technical organisation of the news, nor will it be applied evenly within and among news organisations. Historical precedent shows that platform-based businesses do not cooperate on equal terms with all news organisations. The control or shaping power that platform companies can (and potentially will) exercise through AI will not be equally distributed. Some news organisations will be more affected than others, which raises questions about who will be more or less affected, as well as potential distortions of existing structures across media systems.

Finally, scholars should focus on the second-order effects of platform companies’ push to introduce AI across the news. Outsized claims regarding AI’s potential, together with heavy promotion by the companies developing these systems, may incite news organisations to invest in them. If these investments turn out to be a waste of money, fewer resources will be available for the production of quality news, which would indirectly harm news organisations and, by extension, the quality of information circulating in the public arena. Platform companies already shape much of the information and news online by distributing, filtering, ranking, and curating it, often with the help of AI. How this influence changes and expands through the penetration of ever more elements of the news industry remains an unanswered question.

Conclusion

This article has explained the rise of and reasons for news organisations’ adoption of AI and analysed platform companies’ roles in this development. Looking at the structural advantages of platform companies in the space of AI, I argued that this asymmetrical relationship and the increasing adoption of AI in the news enables platform companies to extend their control over the news, making news organisations even more dependent on them. This development potentially leads to a loss of autonomy, although it is as of yet unclear how exactly such a loss would play out and how strong its effects might be. I have closed by sketching out a research agenda that highlighted six interrelated areas of inquiry and implications.

It is worth briefly dwelling here on three final points. First, it is important that my argument is not misconstrued in the sense that I think platform companies are the only ones holding the means of production or distribution for news in the space of AI. Any such claim is belied by emerging and established companies such as Narrativa and Retresco (natural language generation), Adobe (AI-supported CMS and production tools), DeepL (automatic translation), or Logically (AI-assisted fact-checking) who are proof of a growing market catering to the news industry’s demands in this space. However, none of these has reached (or is likely to reach) the status of a critical player in AI who simultaneously has relationships with almost all actors in the news industry, nor do they together form an oligopoly which affords them more control over the news industry through a technology than any single company on its own. While the core business of platform companies is not in news and the news industry likely only plays a minor role in their business considerations (albeit a more important one in other strategic areas, such as PR), platform companies’ role as oligopolists affords them the ability to push and control this technology at scale across many news organisations.

A second point pertains to the strength of the effects flowing from the capture of infrastructure. It may well be that ultimately AI does not end up affording platforms as much control over the news industry as suggested in this article. One could also argue that technological infrastructure hardly matters for the news’ autonomy. As an example, one could point to electric utilities and Internet Service Providers (ISPs), both of which provide key infrastructure and services to the news, with neither having any obvious control over journalistic processes or posing an obvious threat to the news’ autonomy. Yet, this argument only holds under conditions where the providers ‘play by the rules’. Internet shutdowns or the abuse of ISPs to censor and control the press are frequent in countries such as Iran, Belarus or Ethiopia (and while this has not happened for electric utilities, it is also not unimaginable). These forms of control might not be fine-grained and subtle, but they are real. It may well be that platform companies do not end up using any of their advantages in the space of AI or that the effects achieved will be minimal, but for now this question remains open pending further investigation.

A final point is to acknowledge that there is for now likely no escaping from the development outlined herein, even though we could imagine counterfactual scenarios. Platform companies have succeeded in expanding their dominant positions in other areas into an oligopoly in AI by skilfully exploiting exogenous and endogenous market conditions (Belleflamme and Peitz 2015). These circumstances alone mean that it is difficult for news organisations to circumvent them, as strong pull factors are at work. To use an analogy from gravitational theory: As the objects with the bigger mass, platform companies exert a stronger gravitational force than smaller objects, thus drawing the latter into their orbit. Platform companies and news organisations will remain uneasy and unequal bedfellows as AI is gradually rolled out. This raises the question of how news organisations and regulators should react. For both, the answer is difficult. While Nechushtai has argued that a threat of infrastructure capture ‘could serve as an argument for supporting news organisations in building their own tools and formats’ (2018, 1054) and others have suggested using regulatory tools to rein in the power of platform companies, the key problem remains that the nature of AI likely limits the usefulness of these avenues in the former case, while effective policies for the latter have yet to be found and enforced. That some action will be needed, however, is beyond doubt. Platform companies have already taken over some of the mechanisms of control and influence over media and media infrastructures, and some observers (see e.g., Picard 2014) have argued that this has already reduced the ability of the public to regulate them through democratically determined policy. With AI potentially both accelerating and calcifying this shift, a mix of different and creative approaches will be needed to prevent the risks for the news that could potentially flow from such a development.
Despite its flaws, AI potentially holds great promise for the news. The question is whether this promise can materialise if access to and control of this technology is concentrated in the hands of a few powerful companies whose interests are not necessarily aligned with those of news organisations.

Acknowledgements

I am greatly indebted to Michelle Disser, Ralph Schroeder, and Gina Neff for helping to shape the article from the onset, taking the time to read various drafts and providing excellent comments. My thanks further extend to Marlene Straub, Sarah K. Wiley, Seth C. Lewis, Greg Taylor, Joanne Kuai, Max van Drunen, Matthias Kemmerer, Alfred Hermida, and Rasmus Kleis Nielsen who variously provided helpful comments or literature recommendations which informed this article. I am also grateful for support from colleagues at the OII, the Reuters Institute, and Tow Center as well as various journalists and experts with whom I had conversations about this topic. Many thanks to special issue editors Natali Helberger, Judith Moeller, and Sarah Eskens and the participants of the special issue workshop session on ‘Journalism, platforms and governance power’ for their comments and critique that helped to improve this article. Finally, many thanks to the anonymous reviewers for their very valuable feedback and without whom this article would be in much poorer shape.

Disclosure Statement

In the past, the author was employed at the Reuters Institute for the Study of Journalism on projects unrelated to this research which received funding from Google and Facebook. I have no conflicts of interest to disclose.

Notes

1 Following Haveman and Gualtieri, I use logic here in the sense of institutional logics: ‘systems of cultural elements (values, beliefs, and normative expectations) by which people, groups, and organizations make sense of and evaluate their everyday activities, and organize those activities in time and space’ (Haveman and Gualtieri 2017).

References

- Ananny, Mike. 2018. ‘The Partnership Press: Lessons for Platform-Publisher Collaborations as Facebook and News Outlets Team to Fight Misinformation’. Tow Center for Digital Journalism Publications. New York: Tow Center for Digital Journalism, Columbia University. https://www.cjr.org/tow_center_reports/partnership-press-facebook-news-outlets-team-fight-misinformation.php/.

- Barwise, Patrick, and Leo Watkins. 2018. “The Evolution of Digital Dominance: How and Why We Got to GAFA.” In Digital Dominance: The Power of Google, Amazon, Facebook, and Apple, 21–49. New York: Oxford University Press.

- Beckett, Charlie. 2019. New Powers, New Responsibilities. A Global Survey of Journalism and Artificial Intelligence. London: Polis, London School of Economics. https://blogs.lse.ac.uk/polis/2019/11/18/new-powers-new-responsibilities/.

- Belleflamme, Paul, and Martin Peitz. 2015. Industrial Organization: Markets and Strategies. 2nd ed. Cambridge: Cambridge University Press.

- Beniger, James R. 1986. The Control Revolution: Technological and Economic Origins of the Information Society. Cambridge, Massachusetts: Harvard University Press.

- Brennen, J. Scott, Philip N. Howard, and Rasmus Kleis Nielsen. 2019. ‘An Industry-Led Debate: How UK Media Cover Artificial Intelligence’. “Reuters Institute Report.” Oxford: Reuters Institute for the Study of Journalism.

- Brennen, J. Scott, Philip N. Howard, and Rasmus Kleis Nielsen. 2020. “What to Expect When You’re Expecting Robots: Futures, Expectations, and Pseudo-Artificial General Intelligence in UK News.” Journalism 0 (0): 1–17.

- Broussard, Meredith. 2018. Artificial Unintelligence. How Computers Misunderstand the World. 1st ed. Cambridge, Massachusetts: MIT Press.

- Christin, Angèle. 2020. Metrics at Work: Journalism and the Contested Meaning of Algorithms. Princeton: Princeton University Press.

- Chua, Sherwin, and Andrew Duffy. 2019. “Friend, Foe or Frenemy? Traditional Journalism Actors’ Changing Attitudes towards Peripheral Players and Their Innovations.” Media and Communication 7 (4): 112–122.

- Chua, Sherwin, and Oscar Westlund. 2021. ‘Advancing Platform Counterbalancing: Examining a Legacy News Publisher’s Practices of Innovation over Time amid an Age of Platforms’. In. https://gup.ub.gu.se/publication/310802.

- Creech, Brian, and Anthony M. Nadler. 2018. “Post-Industrial Fog: Reconsidering Innovation in Visions of Journalism’s Future.” Journalism 19 (2): 182–199.

- Diakopoulos, Nicholas. 2019. Automating the News: How Algorithms Are Rewriting the Media. Cambridge, Massachusetts: Harvard University Press.

- Domingo, David. 2008. “Interactivity in the Daily Routines of Online Newsrooms: Dealing with an Uncomfortable Myth.” Journal of Computer-Mediated Communication 13 (3): 680–704.

- Dragomir, Marius, and Astrid Söderström. 2021. ‘State of State Media. A Global Analysis of the Editorial Independence of State Media and an Introduction of a New State Media Typology’. Budapest: Center for Media, Data and Society, Central European University. https://cmds.ceu.edu/article/2021-09-20/sorry-sorry-state-state-media-four-fifths-worlds-state-media-lack-editorial.

- Etim, Bassey. 2017. ‘The Times Sharply Increases Articles Open for Comments, Using Google’s Technology’. The New York Times, 13 June 2017, sec. Times Insider. https://www.nytimes.com/2017/06/13/insider/have-a-comment-leave-a-comment.html.

- Fanta, Alexander, and Ingo Dachwitz. 2020. Google, the Media Patron. How the Digital Giant Ensnares Journalism. 103. OBS-Arbeitsheft. Frankfurt: Otto Brenner Stiftung.

- Gans, Herbert J. 1979. Deciding What’s News: A Study of CBS Evening News, NBC Nightly News, Newsweek, and Time. New York: Random House.

- Gorwa, Robert. 2019. “What is Platform Governance?” Information, Communication & Society 22 (6): 854–871.

- Haveman, Heather A., and Gillian Gualtieri. 2017. Institutional Logics. Oxford: Oxford University Press.

- Hindman, Matthew. 2018. The Internet Trap: How the Digital Economy Builds Monopolies and Undermines Democracy. Princeton: Princeton University Press.

- Jungherr, Andreas, and Ralph Schroeder. 2021. Digital Transformations of the Public Arena. Cambridge Elements. Politics and Communication. Cambridge: Cambridge University Press. https://www.cambridge.org/core/elements/digital-transformations-of-the-public-arena/6E4169B5E1C87B0687190F688AB3866E.

- Jungherr, Andreas, Gonzalo Rivero, and Daniel Gayo-Avello. 2020. Retooling Politics: How Digital Media Are Shaping Democracy. Cambridge: Cambridge University Press.

- Kalogeropoulos, Antonis, and Rasmus Kleis Nielsen. 2018. “Investing in Online Video News.” Journalism Studies 19 (15): 2207–2224.

- Karppinen, Kari, and Hallvard Moe. 2016. “What We Talk about When Talk about ‘Media Independence’.” Javnost - The Public 23 (2): 105–119.

- Kenney, Martin, Dafna Bearson, and John Zysman. 2021. “The Platform Economy Matures: Measuring Pervasiveness and Exploring Power.” Socio-Economic Review 19 (4): 1451–1483.

- Lazzarato, Maurizio. 2004. Les Révolutions Du Capitalisme. Paris: Les Empêcheurs de penser en rond. https://www.librairie-sciencespo.fr/livre/9782846711043-les-revolutions-du-capitalisme-maurizio-lazzarato/.

- Lewis, Seth C., Andrea L. Guzman, and Thomas R. Schmidt. 2019. “Automation, Journalism, and Human–Machine Communication: Rethinking Roles and Relationships of Humans and Machines in News.” Digital Journalism 7 (4): 409–427.

- Lindén, Carl-Gustav. 2020. Silicon Valley. Och Makten Över Medierna. Göteborg: Nordicom.

- Lins, Sebastian, Konstantin D. Pandl, Heiner Teigeler, Scott Thiebes, Calvin Bayer, and Ali Sunyaev. 2021. “Artificial Intelligence as a Service.” Business & Information Systems Engineering 63 (4): 441–456.

- Lowrey, Wilson. 2011. “Institutionalism, News Organizations and Innovation.” Journalism Studies 12 (1): 64–79.

- Marconi, Francesco. 2020. Newsmakers: Artificial Intelligence and the Future of Journalism. New York: Columbia University Press.

- Mau, Steffen. 2019. The Metric Society. On the Quantification of the Social. Cambridge, UK: Polity Press.

- McDevitt, Michael. 2003. “In Defense of Autonomy: A Critique of the Public Journalism Critique.” Journal of Communication 53 (1): 155–164.

- Metz, Cade. 2017. ‘Tech Giants Are Paying Huge Salaries for Scarce A.I. Talent’. The New York Times, 22 October 2017, sec. Technology. https://www.nytimes.com/2017/10/22/technology/artificial-intelligence-experts-salaries.html.

- Mitchell, Melanie. 2019. Artificial Intelligence: A Guide for Thinking Humans. London: Pelican.

- Nagy, Peter, and Gina Neff. 2015. “Imagined Affordance: Reconstructing a Keyword for Communication Theory.” Social Media + Society 1 (2): 205630511560338.

- Napoli, Philip M. 2010. Audience Evolution: New Technologies and the Transformation of Media Audiences. New York: Columbia University Press.

- Nechushtai, Efrat. 2018. “Could Digital Platforms Capture the Media through Infrastructure?” Journalism 19 (8): 1043–1058.

- Newman, Nic. 2019. ‘Journalism, Media, and Technology Trends and Predictions 2019’. Reuters Institute Report. Oxford: Reuters Institute for the Study of Journalism.

- Newman, Nic. 2020. ‘Journalism, Media and Technology Trends and Predictions 2020’. Reuters Institute Report. Oxford: Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2020-01/Newman_Journalism_and_Media_Predictions_2020_Final.pdf.

- Newman, Nic. 2021. ‘Journalism, Media and Technology Trends and Predictions 2021’. Reuters Institute Report. Oxford: Reuters Institute for the Study of Journalism.

- Newman, Nic, Richard Fletcher, Anne Schulz, Simge Andi, and Rasmus Kleis Nielsen. 2020. ‘Digital News Report 2020’. Digital News Report. Oxford: Reuters Institute for the Study of Journalism. http://www.digitalnewsreport.org/.

- Nielsen, Rasmus Kleis. 2017a. “Media Capture in the Digital Age.” In In the Service of Power: Media Capture and the Threat to Democracy, edited by Anya Schiffrin. Washington, DC: Center for International Media Assistance.

- Nielsen, Rasmus Kleis. 2017b. “The One Thing Journalism Just Might Do for Democracy.” Journalism Studies 18 (10): 1251–1262.

- Nielsen, Rasmus Kleis. 2018. “The Changing Economic Contexts of Journalism.” In The Handbook of Journalism Studies, edited by Karin Wahl-Jorgensen and Thomas Hanitzsch, 2nd ed. London: Routledge. https://rasmuskleisnielsen.files.wordpress.com/2018/05/nielsen-the-changing-economic-contexts-of-journalism-v2.pdf.

- Nielsen, Rasmus Kleis. 2020. ‘The Power of Platforms and How Publishers Adapt’. Berlin: HIIG, January 20. https://www.youtube.com/watch?v=SfBnSOhL6iA&t=755s.

- Nielsen, Rasmus Kleis, and Sarah Anne Ganter. 2018. “Dealing with Digital Intermediaries: A Case Study of the Relations between Publishers and Platforms.” New Media & Society 20 (4): 1600–1617.

- Nosák, David. 2021. ‘The DSA Introduces Important Transparency Obligations for Digital Services, but Key Questions Remain’. Center for Democracy and Technology (blog). 18 July 2021. https://cdt.org/insights/the-dsa-introduces-important-transparency-obligations-for-digital-services-but-key-questions-remain/.

- Örnebring, Henrik, and Michael Karlsson. 2019. “Journalistic Autonomy.” In Oxford Research Encyclopedia of Communication, edited by Jon F. Nussbaum. Oxford: Oxford University Press.

- Pellat, Marie, and Patricia Georgiou. 2021. ‘Perspective Launches In Spanish With El País’. Medium–Jigsaw. 9 February 2021. https://medium.com/jigsaw/perspective-launches-in-spanish-with-el-pa%C3%ADs-dc2385d734b2.

- Picard, Robert G. 2014. “Panel I: The Future of the Political Economy of Press Freedom.” Communication Law and Policy 19 (1): 97–107.

- Plantin, Jean-Christophe, and Aswin Punathambekar. 2019. “Digital Media Infrastructures: Pipes, Platforms, and Politics.” Media, Culture & Society 41 (2): 163–174.

- Posetti, Julie, Felix Marvin Simon, and Nabeelah Shabbir. 2019. ‘What If Scale Breaks Community? Rebooting Audience Engagement When Journalism Is Under Fire’. Reuters Institute Report. Oxford: Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/our-research/what-if-scale-breaks-community-rebooting-audience-engagement-when-journalism-under.

- Rashidian, Nushin, George Tsiveriotis, Pete Brown, Emily Bell, and Abigail Hartstone. 2019. ‘Platforms and Publishers. The End of an Era’. Tow Center for Digital Journalism Publications. New York: Tow Center for Digital Journalism, Columbia University. https://academiccommons.columbia.edu/doi/10.7916/d8-sc1s-2j58.

- Schiffrin, Anya. 2015. “The Press and the Financial Crisis: A Review of the Literature.” Sociology Compass 9 (8): 639–653.

- Schiffrin, Anya. 2021. “Media Capture.” In Oxford Research Encyclopedia of Communication. Oxford: Oxford University Press. https://www.oxfordbibliographies.com/view/document/obo-9780199756841/obo-9780199756841-0265.xml.

- Schroeder, Ralph. 2018. Social Theory after the Internet: Media, Technology, and Globalization. London: UCL Press.

- Shoemaker, Pamela J., and Stephen D. Reese. 2013. Mediating the Message in the 21st Century: A Media Sociology Perspective. New York: Routledge. https://www.taylorfrancis.com/books/mediating-message-21st-century-pamela-shoemaker-stephen-reese/10.4324/9780203930434.

- Simon, Eva. 2021. ‘Rights Groups: MEPs Should Ensure Interoperability in Digital Services Act’. Liberties.Eu. 13 July 2021. https://www.liberties.eu/en/stories/open-letter-libe-digital-services-act/43686.

- Simon, Jean Paul. 2019. “Artificial Intelligence: Scope, Players, Markets and Geography.” Digital Policy, Regulation and Governance 21 (3): 208–237.

- Srnicek, Nick. 2016. Platform Capitalism. Cambridge, UK: Polity Press.

- Steensen, Steen, and Oscar Westlund. 2020. “The Platforms: Distributions and Devices in Digital Journalism.” In What is Digital Journalism Studies?, 1st ed., 40–54. London: Routledge.

- Taylor, Greg. 2014. “Scarcity of Attention for a Medium of Abundance: An Economic Perspective.” In Society & the Internet, edited by Mark Graham and William H. Dutton, 257–271. Oxford; New York: Oxford University Press.

- Thompson, John B. 2001. The Media and Modernity: A Social Theory of the Media. Cambridge: Polity Press.

- Thurman, Neil. 2019. “Computational Journalism.” In The Handbook of Journalism Studies, edited by Karin Wahl-Jorgensen and Thomas Hanitzsch, 2nd ed. New York: Routledge.

- Tuchman, Gaye. 1978. Making News: A Study in the Construction of Reality. New York: Free Press.

- Wachter, Sandra, Brent Mittelstadt, and Chris Russell. 2018. “Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR.” Harvard Journal of Law & Technology 31 (2): 841–887. https://papers.ssrn.com/abstract=3063289.

- Xu, Craig. 2021. ‘Australia: The News Media Bargaining Code, Recent Responses’. Inforrm’s Blog. 3 October 2021. https://inforrm.org/2021/10/03/australia-the-news-media-bargaining-code-recent-responses-craig-xu/.