ABSTRACT

The Engineering Education Research (EER) Peer Review Training (PERT) project aimed to develop EER scholars’ peer review skills through mentored experiences reviewing journal manuscripts. Concurrently, the project explored how EER scholars develop capabilities for evaluating and conducting EER scholarship through peer reviewing. PERT used a mentoring structure in which two researchers with little reviewing experience were paired with an experienced mentor to complete three manuscript reviews collaboratively. Using a variety of techniques including think aloud protocols, structured peer reviews, and exit surveys, the PERT research team addressed the following research questions: (1) To what extent are the ways in which reviewers evaluate manuscripts influenced by reviewers’ varied levels of expertise? and (2) To what extent does participation in a mentored peer reviewer programme influence reviewers’ EER manuscript evaluations? Data were collected from three cohorts of the mentored review programme over 18 months. Findings indicate that experience influenced reviewers’ evaluation of EER manuscripts at the start of the programme, and that participation can improve reviewers’ understanding of EER disciplinary conventions and their connection to the EER community. Deeper understanding of the epistemological basis for manuscript reviews may reveal ways to strengthen professional preparation in engineering education as well as other disciplines.

1. Introduction

Questions about how we know what we know and how knowledge is related to action have been posed for centuries, beginning with philosophers such as Plato (‘Epistemology: The history of epistemology’, Britannica n.d.). Historically, researchers conceived of knowledge from a positivist perspective, with knowledge thought of as fixed and activated as needed to inform or guide problem-solving. Since the early 21st century, this view has been increasingly challenged, with theorists and practitioners both arguing that disciplinary knowledge is transactional, socially constructed, and essentially functional, continually adapting and updating through experience. These views have infiltrated professional education, challenging conventional practices in higher education about how to prepare students to be teachers, architects, medical doctors, and engineers (Coles 2002; Schön 2017).

Yet research on the epistemology of researchers is limited. How knowledge develops for engineering education research (EER) professionals is particularly interesting because, like many of the social sciences, EER is an interdisciplinary field shaped by the norms of the disciplinary origins of its members (Beddoes, Xia, and Cutler 2022). Some EER professionals were prepared in engineering education programmes. Others were trained as engineers with no previous expertise in education research, but their professional practice and intellectual interests motivated them to explore the teaching and learning of engineering. Others migrated into EER from social science disciplines, having no previous training in engineering (Benson et al. 2010).

Regardless of their backgrounds, the paths of all professionals who study EER converge in manuscript review. Peer review of scholarship is critical to the advancement of knowledge in a scholarly discipline such as EER. Academia relies heavily on peer review, with nearly every facet of academic work evaluated, at least in part, by the peer review process. Indeed, publishing manuscripts, promotion and hiring, grant funding, awards, and in some cases, teaching evaluations rely on peer review (Hojat, Gonnella, and Caelleigh 2003).

For manuscript review in EER, peer reviewers apply their various perspectives and professional knowledge in assessing the quality and potential of a study to advance academic discourse and the practice of engineering education. Manuscript review is a discussion (sometimes a negotiation) between professional peers in the roles of reviewers, editors, and authors about effective and robust research practices (Lee 2012). At the same time, manuscript review has a weighty, gate-keeping function (Hojat, Gonnella, and Caelleigh 2003). The decisions made about manuscripts can have lasting effects on individuals, journals, and the profession itself. It is generally recognised that peer review strongly influences these decisions, but the basis by which manuscripts are evaluated by peer reviewers is little known or understood (Tennant and Ross-Hellauer 2020). Peer review has wide-ranging implications for research and academic communities. In research, peer review determines what is shared with the larger community and even what projects are conducted in the first place through distribution of grant funding (Langfeldt 2001). In academic communities, peer review inevitably wields power over who holds academic positions and whose voices are heard (Newton 2010; Lipworth and Kerridge 2011), and thus determines the level of inclusivity of a field as new scholars, ideas, and methods emerge. Collectively, peer review shapes academic disciplines and defines community values (Tennant and Ross-Hellauer 2020). Despite the enormity of these implications, scholars receive little or no training in effective and constructive peer review.

This study aims to explore the ways peer reviewers evaluate the quality and overall value of EER manuscripts, particularly with respect to their background and level of reviewing expertise. We anticipate that by examining the peer review process within the context of a mentored peer reviewer programme, we can advance knowledge about how mentors and mentees build shared understanding of the review process and perceptions of quality in EER research. This in turn will advance our ability to bring new scholars into the EER field and expand capacity as we develop vibrant, reflective networks of EER scholars.

2. Literature review

Peer review clearly constitutes a social epistemic feature of the production and dissemination of scientific knowledge. It relies on members of knowledge communities to serve as gatekeepers in the funding and propagation of research. It calls on shared norms cultivated by the community. And it relies on institutions such as journal editorial boards, conference organizers, and grant agencies to articulate and enforce such norms. (Lee 2012, p. 868)

Not surprisingly, perhaps, researchers who have studied peer review typically focus on issues of reliability or convergence in the assessment of reviewers’ ratings. The premise underlying these studies is that a manuscript has an inherent quality that can be assessed against the standards and conventions of an intellectual discipline, as long as reviewers are not corrupted by biases and/or inattentiveness during the review process (Merton 1979; Tyler 2006). Several studies have explored bias in the peer review process (Ceci and Peters 1982; Cole and Cole 1981). Typical findings include low correlations between reviewers, bias in single-anonymous reviews (in which reviewers know the author’s identity) that favour eminent researchers, and biases that favour prestigious institutions.

There is scant research on the bases by which reviewers formulate their recommendations. In a study of what reviewers focused on for 153 manuscripts submitted to American Psychological Association journals, researchers examined the proportion of reviewer comments related to the conceptualisation of the study, design, method, analysis, interpretations and conclusions, and presentation (quality of expression) (Fiske and Fogg 1990). Two-thirds of comments overall were related to the planning and execution of the study, and one-third related to the presentation. Conceptualisation (20%), analyses and results (22%), and interpretations/conclusions (16%) were also frequent weaknesses commented on by reviewers. The researchers found minimal consensus on publication recommendations across reviewers, although they found very few disagreements across reviewers about specific issues in the paper. Variability in the reviewers’ recommendations may have resulted from individuals weighting specific strengths and weaknesses differently, as other researchers have found (Newton 2010). A mixed-methods study of perceptions of reviewers and editors of the review process in EER specifically provided similar insights into what reviewers tended to focus on: relevance of the topic, data collection or analysis, and theoretical frameworks (Beddoes, Xia, and Cutler 2022). Although these authors draw distinctions between EER and other disciplines based on their analysis of reviews of articles that were rejected or accepted for publication in an EER journal, in reality reviewers weigh similarly narrow sets of factors in other fields and reviews are similarly seen as potentially biased (Newton 2010; Fiske and Fogg 1990).
Reviewers’ comments to editors to justify or explain their recommendations vary widely and typically do not include the tacit criteria reviewers use to evaluate a manuscript; some journals do not even require justification of recommendations by reviewers (Tennant and Ross-Hellauer 2020). In many reviews, only the most prominent features of a manuscript – negative and positive – are likely to be mentioned in reviewer comments. This raises the question, what factors influence how reviewers weigh various factors that result in their recommendation to editors on whether to publish a manuscript?

In deciding which of these factors bear the most and least weight in review criteria, reviewers will likely rely on previously formed schema to guide their decision-making process (Newton 2010). Schemata (plural of schema) are general representations of knowledge which are typically abstract and used to fit into a given context (Anderson, Spiro, and Anderson 1978). All schemata comprise variables (tangible objects or actions) that help to build a foundation for this larger, abstract conceptualisation based on connections between variables (Anderson, Spiro, and Anderson 1978; Rumelhart and Ortony 1977). However, when an event is encountered that does not fit a previously built schema, the schema must either be tuned to account for this dissonance, or completely rebuilt into an entirely new schema (Rumelhart 1980). In the case of schema for peer review, variables can consist of manuscript elements such as formatting, theoretical backing, and writing clarity. The assessments of these variables are formed based on personal, prior experiences and ultimately lead to inferences about an outcome (Rumelhart 1980), such as a recommendation to an editor.

Because schemata are based on individual experiences (including those encountered in professional situations), individuals with similar backgrounds are likely to have similar schemata. For example, in a study exploring how schemata develop in teams of individuals from different backgrounds with diverse schemata, the schemata became co-oriented as some individuals adjusted (Rentsch et al. 1998). This allowed teams to better communicate and reach consensus decisions. When schemata were not co-oriented, discord occurred and teams were unable to communicate effectively, leading to task failure. Teams that developed similar schemata were ultimately more likely to accurately identify a problem and deeply explain the logic behind their thoughts or conclusions to build on the team’s similarly formed schemata.

Working to ‘tune’ a schema can be a slow and arduous process, but guidance from a mentor with well-developed schemata eases this burden (Rumelhart and Ortony 1977). Much like team settings, apprenticeships allow for the co-orientation of schemata; however, apprenticeships use a scaffolded, stepwise approach under the direction of a coach. Austin (2009) detailed these steps through a theoretical model of apprenticeship for doctoral students in a seminar. Five specific steps were outlined in order from lowest to highest amounts of scaffolding: 1) Modelling – mentors model expectations for a working procedure with detail, 2) Coaching – students engage in the task with coaches providing formative feedback as needed, 3) Scaffolding – difficulty of the task increases with less direction from the coach, 4) Articulation and Reflection – students ask questions and articulate the underlying process (schema) they have learned, 5) Promote Transfer of Learning – coach encourages the student to think about and apply the built schema elsewhere.

Much like cohesive teams with similarly built schemata, peer reviewers who have similar levels of peer review experience likely evaluate manuscript elements similarly. However, discrepancies are likely to arise when reviews are conducted by early-career faculty or graduate students who may have little or no EER or peer reviewing experience and poorly formed schemata.

In this study, we explore the relationship, if any, between peer reviewing experience and the way in which reviewers evaluate manuscripts. Aspects of manuscript evaluation include the tacit criteria for determining quality or value of EER manuscripts and the weighting of various elements of a manuscript. We examined these relationships within the context of a mentoring programme, guided by the following research questions:

To what extent are the ways in which reviewers evaluate manuscripts influenced by reviewers’ varied levels of expertise?

To what extent does participation in a mentored peer reviewer programme influence reviewers’ EER manuscript evaluations?

3. Methods

3.1. Peer reviewer training (PERT) program

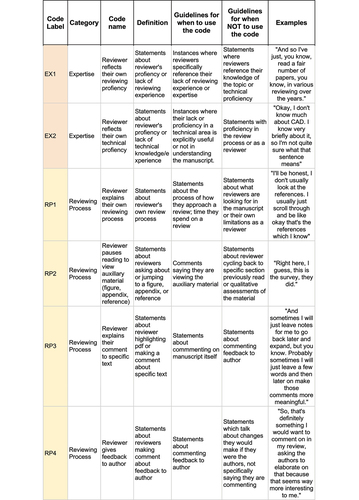

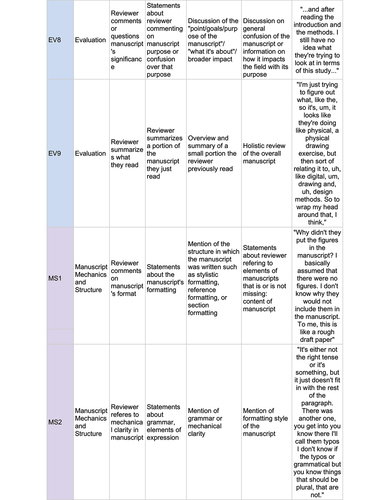

The PERT programme was developed to provide peer review training to emerging EER scholars from different disciplinary backgrounds, framing the peer review process around mentoring and building up the EER community (Benson et al. 2021; Jensen et al. 2021; Watts et al. 2022; Jensen et al. 2022). The goal of PERT is for participants to build capacity in EER through a peer reviewer training programme that grows their professional network and fosters schema development for reviewing EER manuscripts. The PERT programme paired less experienced mentees with more experienced mentors in triads (one mentor and two mentees). After virtual training and orientation sessions together, in which mentors and mentees could network with each other and the programme team (coordinators, researchers, and evaluators), triads collaboratively wrote reviews of three manuscripts submitted to an EER journal (Figure 1). Research and evaluation data sources included five Structured Peer Review (SPR) forms, Think Aloud Protocols (TAPs), and exit surveys (Figure 1). Cohorts of up to twelve triads participated in the six-month programme. We report here the findings from three cohorts of the PERT programme conducted from January 2021 through May 2022. The first two cohorts completed all research and training activities in the mentored manuscript review programme; the third cohort completed only the pre-programme activities and went on to complete a mentored proposal review programme.

Figure 1. Activities completed as part of the peer reviewer training program. Each triad completed three journal manuscript reviews, going through the review cycle collaboratively for each manuscript. Each participant was asked to individually complete a Structured Peer Review (SPR) at the beginning of the program (Pre-SPR), for each of the three manuscripts they reviewed as a triad (SPR-1, −2 and −3), and at the end of the program (Post-SPR). Participants also completed Think-Aloud Protocols (TAPs) at the beginning of the program.

3.2. Participant recruitment and selection

Mentees were selected through a competitive, online application process that collected demographic information, professional background (Ph.D. discipline and year of degree), current position, relevant EER experience (e.g. publications, presentations, and reviewing history), confidence reviewing EER manuscripts, and the number of EER colleagues with whom they regularly interact. Participants were chosen based on a baseline level of experience (some EER training or education and at least in their last year of graduate study) and their desire to help advance EER through peer review. Special consideration was given to individuals deemed ‘lone wolves’ who were not well-connected to an EER network (Riley et al. 2017), diverse participants who may not have been previously connected to the EER community, and postdoctoral researchers. This process resulted in an overall acceptance rate of 38%. Mentors were invited to participate based on their experience in EER, recommendations from journal editors and colleagues, and their desire to help advance EER through peer review. Invited mentors were senior researchers and faculty members who had reviewed multiple journal papers or were previous members of an editorial board of an education research journal. In total, mentors and mentees represented 23 universities in six countries. Across all cohorts, mentees’ professional levels included graduate students, postdoctoral researchers, and early-career faculty, averaging around five years of experience in research outside of their Ph.D. Mentors averaged over five years of experience beyond their Ph.D. Participants’ disciplinary backgrounds were in social sciences, engineering, science, technology and engineering education. Triads (one mentor and two mentees) were formed based on participants’ time zones and areas of expertise.

3.3. Data collection

Think Aloud Protocols (TAPs) are designed to explore individuals’ thoughts and reasoning processes as they work through problems or engage in self-regulated learning activities (Greene, Robertson, and Costa 2011). After orientation and prior to beginning manuscript reviews, all mentees and mentors were invited to complete TAPs; interviews were conducted with twelve mentees and five mentors from cohorts 1–3. During these individual virtual interviews, participants verbalised their review of a brief (~1500 word) pre-published manuscript (previously submitted to an EER journal with all identifying information redacted). Two such manuscripts were used for data collection by the research team; the manuscripts used for all of the TAP interviews are referred to as Manuscript A or B throughout this paper. Researchers conducting the TAPs asked additional probing questions at the end of each manuscript section to ensure that participants verbalised all thoughts. Sessions were recorded and transcribed with all identifying information redacted.

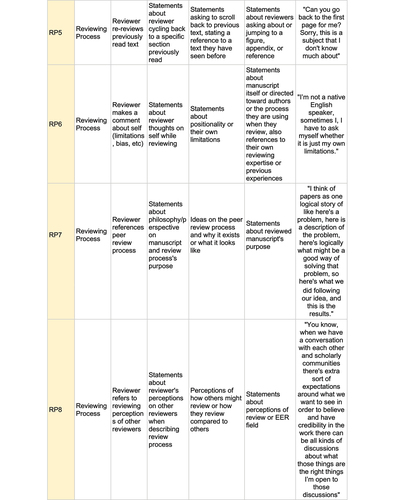

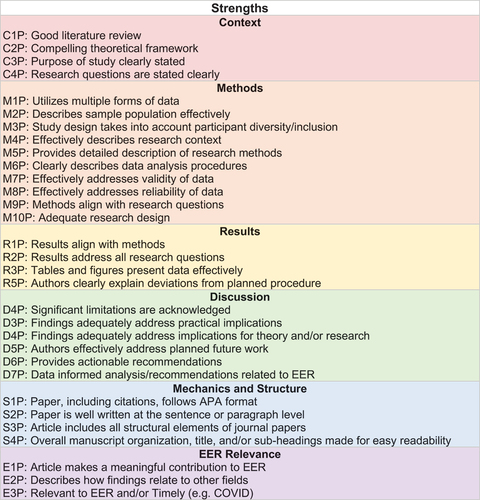

Structured Peer Review (SPR) forms were designed to evaluate the criteria on which reviewers based their evaluations of manuscripts. The SPR is an online questionnaire that prompts participants to describe five notable strengths and weaknesses of a manuscript, recommend a decision to the editor (accept, minor revision, major revision, or reject), and provide a 200-word justification of their recommendation (Figure 2). Participants were instructed to complete their SPRs individually and then use them as a starting point for discussions within their triads (Benson et al. 2021).

Figure 2. The open-ended Structured Peer Review (SPR) form distributed to participants prior to their first triad meeting was used to determine what criteria participants used to evaluate manuscripts when conducting their reviews and making a recommendation to the editor.

Mentees and mentors were asked to complete an SPR prior to the triad’s first meeting (Pre-SPR; Manuscript A for cohort 1 and Manuscript B for cohort 2), for each manuscript they reviewed as a triad (SPRs 1, 2, and 3), and after their final triad review was submitted (Post-SPR; Manuscript B for cohort 1 and Manuscript A for cohort 2). Manuscripts A and B were both ~1500-word manuscripts that had been submitted to a special edition of a peer-reviewed EER journal and were used with permission from the authors for our research purposes. For both the Pre- and the Post-SPR articles, the associate editor recommended ‘major revision’ after receiving recommendations of both ‘major revision’ and ‘reject’ from the actual manuscript reviewers. In cohorts 1 and 2, 62 out of the 63 PERT participants consented to participate in the research study and submit SPRs. Only results from the Pre- and Post-SPRs are discussed in this paper.

After the conclusion of the programme (i.e. their triad completed three manuscript reviews), exit surveys were distributed to cohort 1 and 2 participants. The exit survey included closed- and open-ended questions about programme expectations, impact on professional development and community, and recommendations for improvement of the programme (Benson et al. 2021). Survey response rates for mentees and mentors were 88% and 75%, respectively.

The data collected from TAPs and SPRs were used to establish baseline similarities and differences between mentors and mentees at the start of the programme in how they conducted reviews (TAPs) and the content of these reviews (SPRs). These data were used to address our first research question, which explored the extent to which reviewers’ varied levels of expertise influence the ways they evaluate manuscripts. The SPRs and exit surveys collected after participants completed the PERT programme were used to answer our second research question, which explored the influence of participation in a mentored peer reviewer programme on reviewers’ EER manuscript evaluations. The SPRs were primarily used to document changes in content in manuscript reviews after participating in the programme; exit surveys provided insight into why or how these changes may have occurred.

3.4. Data analysis

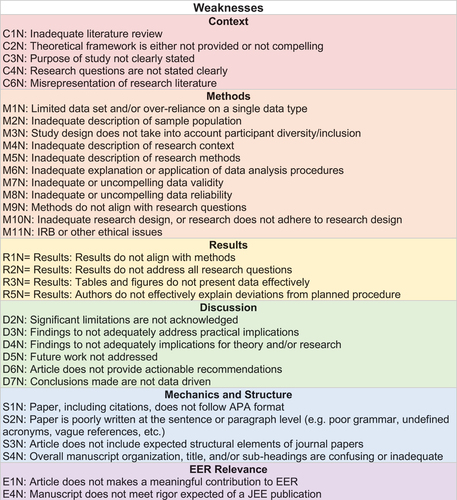

TAP transcripts were analysed using open coding methods by two members of the research team. They identified coding events (meaningful passages to which codes should be assigned) within transcripts collaboratively for two transcripts, then independently identified coding events for the remaining transcripts. Through open coding, one researcher initially developed a set of 27 potential codes, and tested and refined the codes with the second researcher, resulting in 25 codes (Appendix A). They established consistency of coding through inter-rater reliability (IRR) by dividing the total number of agreed-upon codes by the total number of codes assigned within four transcripts. IRR for the two coders was calculated to be 73%. Although no standards exist for IRR for qualitative data, a reliability rating of 70% for open coding of phenomenological data can be considered an acceptable cut-off point (Marques and McCall 2005; Miles, Huberman, and Saldaña 2014). The researchers then independently and iteratively coded the remaining transcripts (n = 14), developing axial codes and categories. Coded sections of transcripts were extracted and analysed for relevant themes using thematic analysis (Braun and Clarke 2006).
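The percent-agreement form of IRR described above can be illustrated with a minimal Python sketch. It assumes that both coders assigned exactly one code to each of the same coding events; the function name `percent_agreement` and the code labels are hypothetical and are not drawn from the study's codebook or data.

```python
def percent_agreement(codes_a, codes_b):
    """Share of coding events on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both coders must rate the same coding events")
    if not codes_a:
        raise ValueError("no coding events to compare")
    # Count the events where the two coders' labels match.
    agreed = sum(a == b for a, b in zip(codes_a, codes_b))
    return agreed / len(codes_a)


# Invented labels for five coding events (illustration only).
coder_1 = ["formatting", "methods", "relevance", "methods", "clarity"]
coder_2 = ["formatting", "methods", "relevance", "results", "clarity"]

agreement = percent_agreement(coder_1, coder_2)
print(f"{agreement:.0%}")  # 4 of 5 events agree, so this prints 80%
```

Percent agreement of this kind does not correct for chance agreement; it is shown here only because it matches the calculation the text describes.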

SPR codes were developed after collection of Pre-SPRs from cohort 1’s open-ended survey responses pertaining to strengths, weaknesses, and recommendations for Manuscript A. Using thematic analysis (Braun and Clarke 2006), the same two members of the research team used open coding to identify responses that described similar features within the manuscript. These were reviewed and revised iteratively and then further refined using a process similar to that described for TAPs data analysis. To ensure that the SPR codes were comprehensive enough to capture strengths and weaknesses across a broad range of manuscripts, this process was repeated for cohort 2 using Manuscript B. All iterations required respondents to provide open-ended comments to justify their recommendations to the editor. In this subsequent analysis, only two new codes emerged.

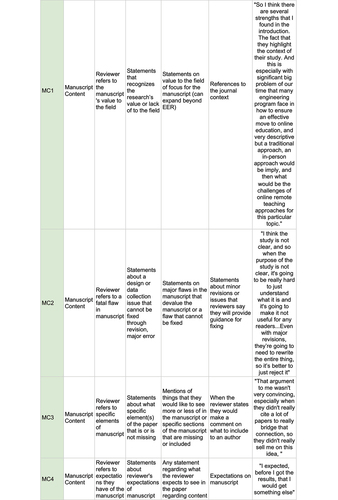

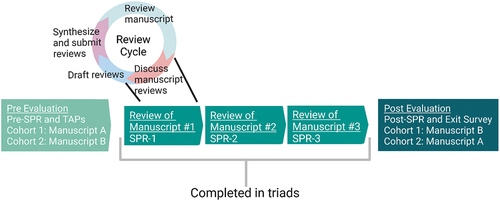

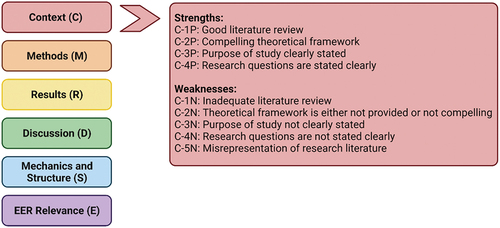

Coding resulted in identifying six themes: Context, Methods, Results, Discussion, Mechanics and Structure, and EER Relevance. Within each theme, codes were organised as strengths (positive attributes) and weaknesses (negative attributes) (Figure 3). Once codes were finalised, they were inserted into the SPR form as checkbox lists that future respondents could select from within strengths and weaknesses (Appendix B). After each participant completed all three triad manuscript reviews, they were sent the Post-SPR manuscript to review. Participants identified strengths and weaknesses from the checkbox lists, then wrote 200-word, open-ended justifications to the editor.

Figure 3. The six themes used for characterizing responses on the Pre- and Post-Structured Peer Review (SPR) forms: Context, Methods, Results, Discussion, Mechanics and Structure, and EER Relevance. Each of these themes had multiple codes organized as strengths (positive attributes) and weaknesses (negative attributes). For example, Context had four strengths (P for positives) and five weaknesses (N for negatives).

Segments of the recommendation justifications were coded independently by two researchers using the SPR codes. IRR was calculated as the number of segments that reflected agreement between the two raters divided by total segments. Although some 200-word responses included the same code multiple times, any one code was only counted once per response. After IRR was determined to be greater than 70% for SPR analyses, analyses were conducted on segments that both coders identified as coding events. For analysis of these data, we report results for codes used by at least 50% of mentors or mentees, which we define as ‘convergence’, in response to the three SPR questions (strengths, weaknesses, and justification of recommendation to the editor). To account for potential differences in codes simply due to variability in manuscript content and quality, analysis results were compared across manuscripts. As illustrated in , the manuscript used as the Pre-SPR for cohort 1 (Manuscript A) was used as the manuscript for the Post-SPR for cohort 2 and vice versa.
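The 50% ‘convergence’ rule described above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the analysis procedure actually used: the function name `converged_codes` is hypothetical, the responses are invented, and the label `M-1N` is a made-up code that merely follows the naming pattern of codes such as E-3P and C-2N.

```python
from collections import Counter


def converged_codes(responses, threshold=0.5):
    """Return the codes used in at least `threshold` of a group's responses.

    Each response is a list of code labels. A code repeated within one
    response is counted once, as described in the text.
    """
    if not responses:
        return set()
    counts = Counter()
    for response in responses:
        counts.update(set(response))  # de-duplicate within a response
    return {code for code, n in counts.items()
            if n / len(responses) >= threshold}


# Invented responses from four hypothetical mentees (illustration only).
mentee_responses = [
    ["E-3P", "C-2N", "C-2N"],  # C-2N repeated, counted once
    ["E-3P", "M-1N"],
    ["C-2N", "C-4N"],
    ["E-3P"],
]

print(sorted(converged_codes(mentee_responses)))  # ['C-2N', 'E-3P']
```

Running the same function separately on mentor and mentee responses, and intersecting the two resulting sets, would give the codes on which both groups converge.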

Exit survey data, which informed programme evaluation, were analysed by averaging closed-ended responses and thematically grouping open-ended responses into categories such as ‘expanded EER network’ and ‘increased reviewing confidence’. These results provided insight into perceptions of reviewing skill development and EER community connections as an outcome of participating in the PERT programme.

4. Results

4.1. Think aloud protocols (TAPs) revealed schema differences between mentors and mentees prior to participating in the mentored review program

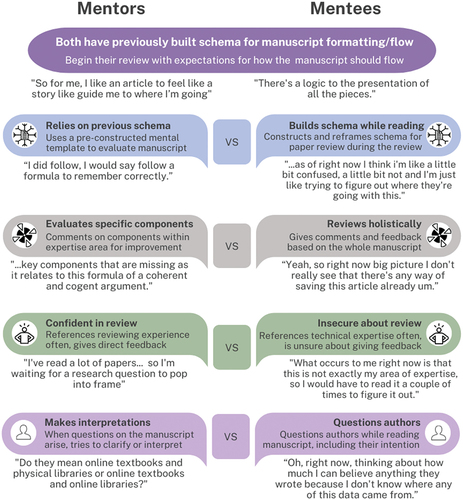

Analysis of TAPs allowed us to identify similarities and differences in schema development between mentees and the more experienced mentors. All similarities, differences, and supporting quotes based on TAPs are presented in Figure 4. The primary similarity in schema between mentees and mentors is that they focused on formatting and grammar of the manuscript. Both began reviewing the manuscript with expectations of how it should be formatted, including what information should be in each section.

Figure 4. Summary of mentor and mentee similarities and differences in manuscript review based on analysis of Think-Aloud Protocols (TAPs).

Beyond formatting, mentees had few expectations on manuscript quality or purpose at the start of their review. As mentees moved through the paper, their schema was fluid; they began to build their expectations on what contributed to the quality of the paper and its relevance to EER. As shown in Figure 4 under both ‘builds schema while reading’ and ‘questions authors’, mentees would often ask themselves questions about an author’s intention or how a statement fit into the overall argument at the beginning of the manuscript. However, by the end of the article, mentees would forge ahead in developing their own interpretations of unclear components. Through building this schema, mentees maintained a holistic view of the paper, working to assess the entire manuscript’s quality rather than specific sections. Mentees were more likely than mentors to want to read the entire manuscript before making judgements on specifics of the manuscript. Once the schema for manuscript quality and purpose were developed, if the manuscript deviated from their expectations, mentees tended to be more reactive to the manuscript and would question the author’s intentions. When mentees experienced deviations from their constructed schema for quality, this often led them to somewhat discredit the validity of the manuscript. A clear example of a mentee’s reaction to a schema deviation is shown in Figure 4 under ‘questions authors’. Throughout their reviews, mentees often referenced their lack of reviewing experience and would question if their judgements were ‘right’.

Like mentees, mentors approached the manuscript with expectations of formatting and grammar. Unlike mentees, mentors approached their review with clear expectations of the manuscript’s research quality and relevance to EER. Mentors would often review each section individually, comparing the manuscript to their pre-formed schema. When the manuscript deviated from these expectations, mentors would ask clarifying questions about the manuscript to understand the authors’ intentions. While mentees also often questioned the authors’ intentions, their questions were more rhetorical (i.e. ‘Why did they do this?’), whereas mentors would ask and then provide possible explanations pulled from prior experience. One specific example of an interpretive question asked by a mentor is listed under ‘makes interpretations’ in Figure 4. They would also often include guiding comments and suggestions to support the authors in revising their manuscripts in ways that aligned with their expectations of an EER manuscript.

Mentors primarily made comments within their areas of expertise and focused on the logical or research elements of the manuscript rather than the manuscript as a whole. When they made these comments, mentors were very confident and would often reference their prior experience as a reviewer.

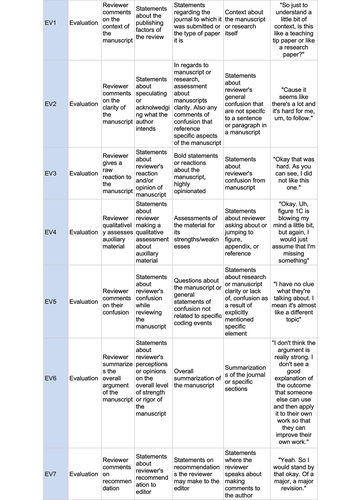

4.2. Structured peer reviews (SPRs) provide evidence of schema development in mentees after completion of the mentored review program

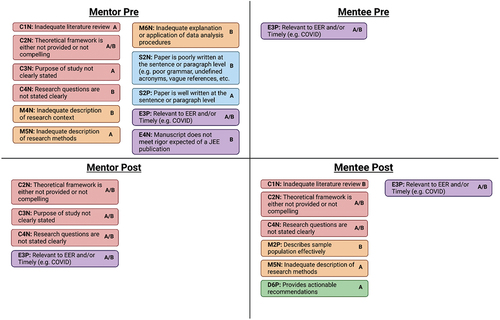

The SPRs reinforced our findings from the TAPs that mentors came into the programme with more of a shared schema than the mentees. For both cohorts, at the start of the programme mentors were more likely than mentees to identify the same criteria when reviewing the same manuscript, based on their Pre-SPRs (Figure 5). While mentees only aligned on one code (E-3P) at least 50% of the time in their Pre-SPRs for both cohorts 1 and 2, mentors aligned on six (cohort 1 mentors) to seven (cohort 2 mentors) different codes. This indicates that mentors came into the programme with more of a shared schema in terms of the criteria that they apply when conducting a peer review.

Figure 5. Comparison of aspects of a manuscript that at least 50% of mentors and 50% of mentees commented on in their reviews of Manuscripts A and B before (Pre-) and after (Post-) participating in the PERT program based on Structured Peer Review (SPR) data.

In contrast, upon completion of the programme, the Post-SPRs show that mentees identify shared criteria and are more aligned with mentors. In their responses to the Post-SPR for both manuscripts, mentees aligned at least 50% of the time on five codes for both cohorts 1 and 2. Similarly, mentors aligned at least 50% of the time on four codes for both cohorts 1 and 2 in their responses to the Post-SPR. Of these four aligned codes for the mentors, mentees aligned with three of them (E-3P, C-2N, and C-4N). This alignment in codes after participation in the programme was consistent for the two different manuscripts used for training and evaluation purposes in this study. This provides evidence that through participation in a mentored review programme, mentees were able to enhance their schema development and become more closely aligned with mentors.

Codes relating to Context (C) showed the most convergence across mentors and mentees, and across different manuscripts. Codes C-2N (Theoretical framework is either not provided or uncompelling) and C-4N (Research questions are not stated clearly) were identified as major weaknesses of the manuscripts by mentors and mentees when reviewing both manuscripts A and B in their Post-SPRs. Code E-3P (Relevant to EER and/or Timely (e.g. COVID)) was identified as a major strength by mentors and mentees of both manuscripts in their Pre-SPRs and Post-SPRs. This could indicate that these criteria are some of the most important to reviewers in EER.

4.3. Exit surveys highlight the building of community of practice and increased confidence in peer review and research

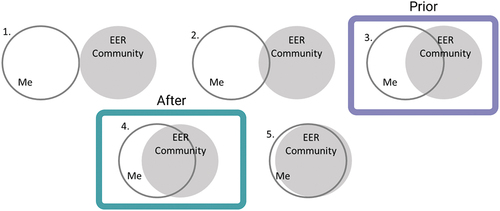

In the exit surveys, mentees were asked to rate their perceived connection to the EER community before (‘PRIOR’) and after (‘AFTER’) participating in the programme, using a Venn diagram format (McDonald et al. 2019). The averages of the responses are shown in Figure 6. There was a clear positive shift in mentees’ connection to the EER community through participation in the mentored reviewer programme. When asked to explain the extent of this shift, mentees who reported a closer connection with the EER community mentioned an increased level of confidence and belonging as a result of the programme. One mentee reported:

Figure 6. Response options to the following questions on the exit survey ‘Which of the images below best characterises your connection to the EER community PRIOR to participating in the PERT Program?’ and ‘Which of the images below best characterizes your connection to the EER community AFTER participating in the PERT Program?’.

I saw the care for researchers and community that is embodied in the PERT program, and that made me feel much more safe to be part of the community. It has also been great to have so many opportunities to interact.

Similar sentiments were also expressed by the mentors, for example:

My research and work looks at STEM from an interdisciplinary perspective. This has lead me to engage with EER community in a variety of different ways. By being intentional with my involvement pushed me further into engineering education than before.

The exit survey also provided evidence that the mentoring increased participants’ confidence in executing various facets of peer review (Table 1). Participants rated the peer review programme as having increased their reviewing and research skills and confidence either moderately or to a great extent.

Table 1. Mentees were asked to rate the PERT programme’s effect on the following facets related to manuscript review according to 1=Not at all, 2=Minimally, 3=Moderately, and 4=To great extent.

In response to the exit survey question about connections between peer review skills and research skills such as identifying EER topics to research, framing research questions, designing studies, and preparing manuscripts, both mentors and mentees overwhelmingly agreed that there was a strong connection between peer review and research skills, and that the mentored reviewer programme helped improve those skills. Typical responses include:

Yes, there is a good connection. The mentoring process has made me think about the main components of an article, also the different types of research that exist. I can look at my own paper to ensure that these components are included (Mentee).

Yes, my research skills have improved significantly due to my participation in the program. One of the main benefits has been understanding alignment in study design. As a reviewer, I always look for congruence between the problem/focus, theory, methodology, presentation of findings, and discussion of conclusions/implications. This perspective has made me more intentional in how I design and describe my own research. Having a keen eye for research quality as a mentor and being able to articulate its importance to the mentees has helped develop my ability to do the same as an author (Mentor).

5. Discussion

This study sought to understand the relationship between reviewing expertise and manuscript evaluation and the influence of peer review mentoring on this relationship. Our research was guided by the following research questions:

(1) To what extent are the ways in which reviewers evaluate manuscripts influenced by reviewers’ varied levels of expertise?

(2) To what extent does participation in a mentored peer reviewer programme influence reviewers’ EER manuscript evaluations?

5.1. Reviewing expertise, mentoring, and schema development

Mentors and mentees entered the PERT programme with varied levels of experience in peer review, resulting in clear differences in the schemata they drew from as they evaluated manuscripts, as illustrated in both the TAP and SPR results. Previous literature has identified that not only lack of experience but also differences in disciplinary expertise can lead to divergence in the definition of research or writing quality in manuscripts (Tennant and Ross-Hellauer 2020; Brezis and Birukou 2020). These differences are often compounded by a poor understanding of what defines ‘quality’ research in an emerging, interdisciplinary field such as EER (Tennant and Ross-Hellauer 2020). For these reasons, divergences such as those seen in our Pre-SPR and TAP data are not unexpected. However, by the end of the programme, mentors and mentees were more aligned in the criteria that they identified as important in their evaluations, a strong indication that their schemata had also become more aligned. Notably, codes relating to Context had the most convergence for both manuscripts by mentors and mentees. This could indicate that, for EER researchers, criteria related to problem framing are the primary consideration in manuscript evaluation. The peer review study conducted by Fiske and Fogg (1990) reported a similar finding.

The convergence of quality evaluations for manuscripts could possibly be explained by the increase in EER community integration among mentees. Although schemata are developed through individual experiences and understanding (such as those developed in a previous discipline), integration within a team or community can lead to convergences in group schema (Rentsch et al. 1998). While previous research has identified how similar team experiences can eventually lead to deeper understanding of others’ schemata and, subsequently, co-orientation of schemata, such results have not been reported for peer review training (Rentsch et al. 1998). In the PERT programme, co-orientation of schemata related to manuscript review also appears to have developed as less experienced mentees converged with more experienced mentors in their SPRs. Beginning with the mentor, triad members alternated leading reviews of three manuscripts so each gained experience with the full process of writing, refining and submitting reviews. This experience likely contributed to the alignment of schemata, due in part to the opportunity for mentees to be trained in peer review by their mentors. Future studies should further investigate factors contributing to this convergence and influencing co-orientation between mentees and mentors, specifically for reviews of manuscripts that are more varied in quality and content.

5.2. Mentored peer review has implications for the field of EER

The arrangement of mentors and mentees into triads was designed based on cognitive apprenticeship, in which learners acquire knowledge through carefully sequenced authentic learning activities that allow them to develop expertise within a community of practice (Maher et al. 2013; Holum, Allan, and Brown 1991). Communities of practice often begin with exploring connectedness between a group and negotiations of what the practice may be (coalescing stage), eventually leading into active practice and adaptations to divergent schema (active stage) (Wenger 2008). Exit survey results showed that after participating in the PERT programme, mentees felt more connected to the EER community than when they started. These findings, along with the co-orientation of schemata, suggest that mentees involved in the programme shifted from involvement in the coalescing stage of the EER community to the active stage. When members of the community make this type of shift, they become active practitioners (Wenger 2008). This active status has implications for the EER community, potentially allowing a wider diversity of young practitioners to engage in EER research and leading to a more inclusive, innovative community overall. The social construction of knowledge and shared ideas about what aspects of a manuscript to focus on during peer review can also lead to a shift in existing normativities for reviewing EER scholarship (Beddoes, Xia, and Cutler 2022). Future research should continue to explore changes to the larger EER community, including alterations to how quality research is defined and integrated into the field.

5.3. Broad impacts

Based on positive outcomes for the PERT participants, the triad mentoring structure (one mentor with two mentees who rotate through reviewer responsibilities) could be replicated with other journals in EER and beyond. Any replication would need to account for the unique characteristics of each journal, since reviewers and editorial boards draw on different schemata when conducting reviews depending on a journal’s aims and scope. The SPR codes we provide in Appendix B and other training materials on our website (EER Peer Reviewer Training Program n.d.) provide guidelines for assessing the quality of EER manuscripts that could be useful both to reviewers in their evaluations and to authors in developing and revising manuscripts. Other interdisciplinary fields may also benefit from the community integration fostered by peer review training, as suggested by our community alignment results. Through co-orientation of schemata and stronger connections with the scientific community, novice researchers and reviewers can become better situated within their field while bringing in their own experiences and innovations.

6. Limitations and future research

A limitation of this study is that it included peer review of manuscripts from only one journal; the participant selection process and the manuscript assignment and review process, which are key aspects of the PERT participants’ experience, would be different for other journals. Although the application process for mentees was open to the entire engineering education community, mentors for the first cohort of the programme were invited by the project team based on their experience as reviewers. Having mentors from within our own networks could have biased our findings. This was mitigated in subsequent cohorts by inviting mentees to be mentors based on recommendations from their own mentors. Another limitation of this study is that the manuscripts used for the Pre- and Post-SPRs were each only ~1,500 words. While the use of these abbreviated samples enabled the research team to collect a large number of reviews of the same manuscript, it raises the possibility that the content of these manuscripts does not reflect that of full research articles, which are typically ~8,000 to 10,000 words. Data collection also includes the three SPRs of full-length EER journal manuscripts for each triad, and future analyses of these SPRs will help address the limitations of using shorter manuscripts for training purposes. Additionally, we only conducted TAPs with participants prior to their participation in the programme. In subsequent cohorts we will also conduct Post-TAPs to more fully investigate shifts in the manuscript evaluations of mentees resulting from their participation. Future research will involve participants who have taken part in multiple cohorts of the PERT programme. We will continue to collect and analyse data from subsequent cohorts, which will provide a larger sample for our analyses.

7. Conclusion

This paper explored the aspects of EER manuscripts that peer reviewers notice and comment on in their reviews and recommendations to editors within the context of a mentored peer reviewer programme. Our data are unique in including reviewers’ recommendations as well as justifications for those recommendations, and the strengths and weaknesses of manuscripts they identified. These preliminary findings suggest that the ways in which reviewers evaluate manuscripts are influenced by their level of expertise. We also provide evidence that peer review professional development in the form of mentored training can influence not only reviewers’ EER manuscript evaluations but also how reviewers understand EER research quality. Evidence of the effects of mentored reviewing can help build capacity in engineering education research by establishing this type of training as a form of professional development for novice peer reviewers such as senior graduate students, postdocs or those making the transition into EER from other fields. This evidence also demonstrates that, because assessing research quality is informed by one’s professional knowledge and experience, reviewers can learn from each other through the varied aspects of manuscripts that they each focus on.

We are in the early stages of our study, yet we find implications from the data in terms of expanding expertise and building community. Most researchers receive little or no training in peer review. However, as increasing numbers of EER scholars are involved in peer review of journal and conference manuscripts, it is essential to consider the extent to which understanding of quality in EER research is shared. Notably, there was greater convergence between mentors and mentees in how they evaluated EER manuscripts by the end of their participation. This suggests that there are epistemological foundations upon which EER professionals evaluate manuscripts and that these conventions can become shared through peer mentoring. In a field as new and interdisciplinary as EER, discussions about the criteria by which we evaluate manuscripts can promote enhanced understanding of the research questions we pose and the methods we use to explore them. Deeper understanding of the epistemological basis for peer reviews of manuscripts can continue to reveal ways to strengthen professional preparation in EER as well as in other disciplines.

Acknowledgments

The authors thank the program participants for sharing their experiences and supporting the program. The authors also thank the project advisory board members for their guidance on the project. Additional thanks to the reviewers who took the time to provide us with thorough feedback that helped shape the manuscript. All figures in the manuscript were generated using BioRender.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes on contributors

Kelsey Watts

Kelsey Watts (she/her) is a postdoctoral researcher in the Department of Biomedical Engineering and the Center for Public Health Genomics at the University of Virginia. She holds a Ph.D. in bioengineering from Clemson University.

Randi Sims

Randi Sims (she/her) is a doctoral student in the Department of Engineering and Science Education at Clemson University. She holds a Masters degree in biology from Clemson University.

Evan Ko

Evan Ko (he/they) is a research associate in the Department of Biomedical Engineering at the University of Michigan. He holds a bachelor of science degree in bioengineering from the University of Illinois Urbana-Champaign.

Karin Jensen

Karin Jensen Ph.D. (she/her) is an assistant professor in the Department of Biomedical Engineering and Engineering Education Research program at the University of Michigan. She holds a Ph.D. in bioengineering from the University of Virginia.

Rebecca Bates

Rebecca Bates (she/her) is Professor and Department Chair of Integrated Engineering at Minnesota State Mankato. She holds a Ph.D. in electrical engineering from the University of Washington.

Gary Lichtenstein

Gary Lichtenstein (he/they), Ed.D., is Affiliate Associate Professor of Experiential Engineering Education at Rowan University and Principal of Quality Evaluation Designs.

Lisa Benson

Lisa Benson (she/her) is a Professor of Engineering and Science Education at Clemson University. She holds a Ph.D. in bioengineering from Clemson University.

References

- Anderson, R. C., R. J. Spiro, and M. C. Anderson. 1978. “Schemata as Scaffolding for the Representation of Information in Connected Discourse.” American Educational Research Journal 15 (3): 433–440. doi:10.3102/00028312015003433.

- Austin, A. E. 2009. “Cognitive Apprenticeship Theory and Its Implications for Doctoral Education: A Case Example from a Doctoral Program in Higher and Adult Education.” International Journal for Academic Development 14 (3): 173–183. doi:10.1080/13601440903106494.

- Beddoes, K., Y. Xia, and S. Cutler. 2022. “The Influence of Disciplinary Origins on Peer Review Normativities in a New Discipline.” Social Epistemology 37 (3): 390–404. doi:10.1080/02691728.2022.2111669.

- Benson, L., R. A. Bates, K. Jensen, G. Lichtenstein, K. Watts, E. Ko, and A. Balsam. 2021, July. “Building Research Skills Through Being a Peer Reviewer.” Paper presented at the 2021 ASEE Virtual Annual Conference Content Access, Virtual Conference. doi:10.18260/1-2-36769.

- Benson, L. C., K. Becker, M. M. Cooper, O. H. Griffin, and K. A. Smith. 2010. “Engineering Education: Departments, Degrees and Directions.” International Journal of Engineering Education 26 (5): 1042–1048.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. doi:10.1191/1478088706qp063oa.

- Brezis, E. S., and A. Birukou. 2020. “Arbitrariness in the Peer Review Process.” Scientometrics 123 (1): 393–411. doi:10.1007/s11192-020-03348-1.

- Ceci, S. J., and D. P. Peters. 1982. “Peer Review: A Study of Reliability.” Change: The Magazine of Higher Learning 14 (6): 44–48. doi:10.1080/00091383.1982.10569910.

- Cole, J. R., and S. Cole. 1981. Peer Review in the National Science Foundation: Phase II. Washington, DC: National Academy Press.

- Coles, C. 2002. “Developing Professional Judgment.” The Journal of Continuing Education in the Health Professions 22 (1): 3–10. doi:10.1002/chp.1340220102.

- EER Peer Reviewer Training Program. n.d. https://sites.google.com/view/jee-mentored-reviewers/mentored-reviewer-program

- Epistemology - The history of epistemology | Britannica. n.d. In Britannica, Accessed Feb 8 2022. https://www.britannica.com/topic/epistemology/The-history-of-epistemology

- Fiske, D. W., and L. F. Fogg. 1990. “But the Reviewers are Making Different Criticisms of My Paper! Diversity and Uniqueness in Reviewer Comments.” The American Psychologist 45 (5): 591–598. doi:10.1037/0003-066X.45.5.591.

- Greene, J. A., J. Robertson, and L. -J.C. Costa. 2011. “Assessing Self-Regulated Learning Using Think-Aloud Methods.” In Handbook of Self-Regulation of Learning and Performance, edited by B. J. Zimmerman and D. H. Schunk, 313–328, New York, NY, USA: Routledge/Taylor & Francis Group.

- Hojat, M., J. S. Gonnella, and A. S. Caelleigh. 2003. “Impartial Judgment by the “Gatekeepers” of Science: Fallibility and Accountability in the Peer Review Process.” Advances in Health Sciences Education 8 (1): 75–96. doi:10.1023/A:1022670432373.

- Holum, A., C. Allan, and J. S. Brown. 1991. “Cognitive Apprenticeship: Making Thinking Visible.” American Educator 15: 1–18.

- Jensen, K., I. Direito, M. Polmear, T. Hattingh, and M. Klassen. 2021. “Peer Review as Developmental: Exploring the Ripple Effects of the JEE Mentored Reviewer Program.” Journal of Engineering Education 110: 15–18. doi:10.1002/jee.20376.

- Jensen, K., E. Ko, K. Watts, G. Lichtenstein, R. A. Bates, and L. Benson. 2022, June. “Building a Community of Mentors in Engineering Education Research Through Peer Review Training.” ASEE Annual Conference and Exposition Proceedings, Minneapolis, MN, USA.

- Langfeldt, L. 2001. “The Decision-Making Constraints and Processes of Grant Peer Review, and Their Effects on the Review Outcome.” Social Studies of Science 31 (6): 820–841. doi:10.1177/030631201031006002.

- Lee, C. J. 2012. “A Kuhnian Critique of Psychometric Research on Peer Review.” Philosophy of Science 79 (5): 859–870. doi:10.1086/667841.

- Lipworth, W., and I. Kerridge. 2011. “Where to Now for Health-Related Journal Peer Review?” Journal of Law and Medicine 18 (4): 724–727.

- Maher, M. A., J. A. Gilmore, D. F. Feldon, and T. E. Davis. 2013. “Cognitive Apprenticeship and the Supervision of Science and Engineering Research Assistants.” Journal of Research Practice 9 (2).

- Marques, J., and C. McCall. 2005. “The Application of Interrater Reliability as a Solidification Instrument in a Phenomenological Study.” The Qualitative Report 10 (3): 439–462. doi:10.46743/2160-3715/2005.1837.

- McDonald, M. M., V. Zeigler-Hill, J. K. Vrabel, and M. Escobar. 2019. “A Single-Item Measure for Assessing STEM Identity.” Frontiers in Education 4 (78): 1–15. doi:10.3389/feduc.2019.00078.

- Merton, R. K. 1979. The Sociology of Science: Theoretical and Empirical Investigations. Chicago, IL: University of Chicago Press. Accessed Feb 8 2022. [Online]. https://press.uchicago.edu/ucp/books/book/chicago/S/bo28451565.html

- Miles, M. B., A. M. Huberman, and J. Saldaña. 2014. Qualitative Data Analysis. Thousand Oaks, CA, USA: SAGE.

- Newton, D. P. 2010. “Quality and Peer Review of Research: An Adjudicating Role for Editors.” Accountability in Research 17 (3): 130–145. doi:10.1080/08989621003791945.

- Rentsch, J. R., M. D. McNeese, L. J. Pape, D. D. Burnett, D. M. Menard, and M. N. Anesgart. 1998. Testing the Effects of Team Processes on Team Member Schema Similarity and Team Performance: Examination of the Team Member Schema Similarity Model. Prepared for the United States Air Force (Issued July 1998).

- Riley, D., J. Karlin, J. Pratt, and S. Quiles-Ramos. 2017, June. “Building Social Infrastructure for Achieving Change at Scale.” ASEE Annual Conference & Exposition Proceedings. doi:10.18260/1-2-27722.

- Rumelhart, D. E. 1980. “Schemata: The Building Blocks of Cognition.” In Handbook of Educational Psychology (Second), edited by P. A. Alexander and P. H. Winne, 33–58. Mahwah, NJ, USA: Routledge.

- Rumelhart, D. E., and A. Ortony. 1977. “The Representation of Knowledge in Memory.” In Schooling and the Acquisition of Knowledge, edited by R. C. Anderson, R. J. Spiro, and W. E. Montague, 99–135. Lawrence Erlbaum Associates. doi:10.4324/9781315271644-10.

- Schön, D. A. 2017. The Reflective Practitioner: How Professionals Think in Action. London: Routledge. doi:10.4324/9781315237473.

- Tennant, J. P., and T. Ross-Hellauer. 2020. “The Limitations to Our Understanding of Peer Review.” Research Integrity and Peer Review 5 (1): 1–14. doi:10.1186/s41073-020-00092-1.

- Tyler, T. R. 2006. “Psychological Perspectives on Legitimacy and Legitimation.” Annual Review of Psychology 57 (1): 375–400. doi:10.1146/annurev.psych.57.102904.190038.

- Watts, K., G. Lichtenstein, K. Jensen, E. Ko, R. A. Bates, and L. Benson. 2022, June. “The Influence of Disciplinary Background on Peer Reviewers’ Evaluations of Engineering Education Journal Manuscripts.” ASEE Annual Conference and Exposition Proceedings, Minneapolis, MN, USA.

- Wenger, E. 2008. “Communities of Practice: Learning as a Social System.” Organization 7 (2): 1–10. doi:10.1177/135050840072002.