ABSTRACT

This study focuses on the development of neural discrimination of pitch changes in speech and music by English-language adults and 4-, 8- and 12-month-old infants. Speech stimuli were Mandarin Chinese rising and dipping lexical tones, and the musical stimuli were three-note melodies with pitch levels based on those of the lexical tones. Mismatch responses were elicited using a non-attentive oddball paradigm. Adults showed mismatch negativity (MMN) responses in both the lexical tone and music conditions. For infants, a positive mismatch response (p-MMR) to the lexical tones was observed at 4, 8, and 12 months, whereas for the musical tones, a p-MMR was found for the 4-month-olds, an MMN for the 12-month-olds, and no mismatch response, either positive or negative, for the 8-month-olds. No evidence of cross-domain correlation of the mismatch responses was found. These results suggest domain-specific development of mismatch responses to pitch change in the first year of life.

Introduction

Pitch variation abounds in human auditory input, and it plays a fundamental role in two uniquely human, complex cognitive functions: speech and music. Of particular interest here is how pitch information is used in both domains, the relative perceptual salience of pitch contours in speech and music, and how the processing of these contours develops, and possibly diverges, in early infancy (McMullen & Saffran, 2004; Trehub & Hannon, 2006).

In all languages of the world, pitch variation plays a major role in prosody (Gussenhoven, 2004; Xu, 2011). Over and above such variation, 70% of the world's languages are tone languages, in which lexical tone, based predominantly on pitch, is used to distinguish lexical meaning (Yip, 2002). Young infants are sensitive to speech prosody (Fernald et al., 1989), and heightened pitch variation facilitates language learning (Thiessen et al., 2005). Pitch contour is also an essential element in music. A musical melody is defined by pitch relations, and listeners recognise a melody by its pitch contour irrespective of variations in pitch height or timbre (Trehub & Hannon, 2006). Similarly, in speech, listeners make use of contour cues to identify and discriminate between intonations and lexical tones irrespective of the specific pitch height and pitch range of particular speakers (Gandour & Harshman, 1978; Patel et al., 2005).

There is good evidence for an association between pitch processing in music and in speech, in that expertise in one domain transfers to the other. Pitch processing ability transfers positively from tone language experience to music (although cultural differences may also be at play [Peretz et al., 2013]) and from musical experience to speech processing (Bidelman et al., 2011, 2013). Moreover, the link between music and lexical tone processing can have both positive consequences (musicianship enhances lexical tone processing; Burnham et al., 2015) and negative ones (people with congenital amusia are impaired in lexical tone processing; Tillmann et al., 2011). However, little is known about whether pitch processing in music and language follows unified or distinct developmental trajectories in early infancy. Although many studies suggest that the same learning mechanisms might be at play in both domains, experimental evidence is scarce (McMullen & Saffran, 2004; Trehub & Hannon, 2006). Chen et al. (2017) directly compared the early development of pitch processing in music and speech and found that, from 4 to 12 months, Dutch infants showed comparable improvement in their discrimination of Chinese lexical tones and of three-note musical melodies with similar pitch contours. As the lexical tones were non-native for the Dutch infants, their improvement in lexical tone discrimination cannot be the result of speech-specific learning; rather, it suggests a domain-general enhancement in pitch discrimination resulting from maturation or some domain-general learning. In a functional near-infrared spectroscopy (fNIRS) study, Sato et al. (2010) showed that for Japanese infants, the processing of native lexical pitch was bilateral at 4 months and became left-lateralised at 10 months, while the processing of pure tones remained bilateral.
Another fNIRS study found that 4.5-month-old infants showed comparable processing of a change in lyrics and a change in prosody, whereas by 12 months the infants processed the two differently (Yamane et al., 2021). These fNIRS studies suggest separate developmental courses for the processing of lexical and musical pitch: infants acquiring a native pitch accent language appear to gradually process lexical pitch differently from non-linguistic pitch, which is consistent with the split hypothesis proposed in Chen et al. (2016).

Auditory event-related potential (ERP) studies allow us to examine the neural processing and discrimination of sound. One of the most extensively studied ERP components is the mismatch negativity (MMN). The MMN can be elicited using a passive oddball paradigm, in which listeners are presented with a stream of “standard” sounds conforming to a certain regularity, punctuated occasionally by “deviant” sounds that differ from the standards in some relevant dimension. In adults, if the change from standard to deviant is detected, the MMN (the response to the deviant minus the response to the standard) is visible as a negative peak between 100 and 300 ms after deviant onset (Bishop, 2007; Näätänen et al., 2007). MMNs to non-linguistic pitch (note) changes have been elicited in many studies with adults (Hari et al., 1984; Jacobsen & Schröger, 2001; Näätänen et al., 2007), and MMN amplitude has been found to correlate with behavioural measures of frequency discrimination accuracy (Kujala et al., 2007; Lang et al., 1990). Besides single tones, pitch contour differences can also elicit an MMN (Tervaniemi et al., 1994). With regard to pitch in speech, the most frequently used stimuli are Chinese lexical tones. Lexical tones and their non-speech analogues elicit MMNs in both tone and non-tone language listeners (Chandrasekaran et al., 2007, 2009; Kaan et al., 2008; Yu et al., 2017).

Recently, to understand possible neural differences underlying speech and musical pitch processing, Chen et al. (2018) tested Dutch and Chinese adults on their discrimination of Chinese lexical tones and ecologically valid three-note musical phrases. The musical melodies and lexical tones were constructed to have comparable overall pitch contours (i.e. comparable pitch at the onset and offset of the stimuli as well as comparable pitch contour shape), while retaining the intrinsic between-domain differences: the musical phrases comprised discrete piano notes, whereas the lexical tones had a continuous pitch contour produced by a human voice. Both conditions elicited MMNs, but the lexical tone condition (one standard versus one deviant lexical tone) elicited a single MMN, whereas the musical condition (one standard three-note melody versus one deviant three-note melody) elicited separate MMNs at the transitions between notes 1 and 2 and between notes 2 and 3. For both the Chinese and the Dutch listeners, the morphology of the responses differed substantially between the two conditions, suggesting stimulus-specific encoding. However, while the Dutch listeners showed significant positive correlations between lexical tone and music MMN amplitudes, there was no cross-condition correlation for the Chinese listeners, suggesting unified processing of pitch variation across the domains for the Dutch but not the Chinese listeners (Chen et al., 2016). In summary, MMNs are elicited by both speech and musical pitch changes (both single-note changes and more complex contour changes) in adults, and, at least for non-tone language listeners, there is a positive relationship between neural responses to pitch change in the speech and music domains.

Turning to MMN studies with infants, interpretation is hampered by the fact that under certain conditions and at certain ages, infants’ and children's mismatch responses tend to exhibit a late positivity (positive mismatch response, p-MMR) rather than the adult negativity (MMN) (Alho et al., 1993; He et al., 2007, 2009; Morr et al., 2002). As infants develop, the polarity of the mismatch response shifts to negative and gradually approximates the adult MMN (Bishop et al., 2011; Morr et al., 2002). Children's p-MMRs have been observed at latencies earlier than, equal to, or later than that of the adult MMN (Leppänen & Lyytinen, 1997; Maurer et al., 2003; Morr et al., 2002; Shafer et al., 2000), and while there is consensus that the p-MMR is a less mature response than the MMN, no consistent results across stimuli have been found with regard to the age at which the infant/child p-MMR shifts to an MMN (He et al., 2007, 2009; Kushnerenko et al., 2002; Morr et al., 2002). In addition, MMNs and p-MMRs can be observed in infants at the same time (Cheng & Lee, 2018; Jing & Benasich, 2006; Lee et al., 2012; Maurer et al., 2003; Shafer et al., 2010), suggesting that the MMN and the p-MMR in infants and children might be separate mismatch responses that index different neural functions.

With specific reference to neural discrimination of pitch in music, the infant brain responds not only to single notes but also to relational pitch differences. Newborns show mismatch responses to contour differences between melodies composed of discrete notes (Carral et al., 2005; Stefanics et al., 2009), and six-month-old infants show a p-MMR when presented with four-note melody standards interspersed with deviant melodies that incorporate an occasional pitch change (Tew et al., 2009). With respect to developmental change, Jing and Benasich (2006) presented two-note sequences that differed in the second note and found robust late p-MMRs in 3- to 24-month-old infants, whereas the MMN was absent at 3 months and became robust at 6 months and beyond.

With specific reference to neural discrimination of pitch in speech, studies of Chinese infants’ mismatch responses to lexical tones show a similar progression from p-MMR to MMN. Cheng and colleagues (Cheng et al., 2013; Cheng & Lee, 2018) compared responses to an acoustically salient lexical tone contrast, the Mandarin Chinese high-level versus low-dipping tone (T1-T3), which differ in both pitch level and contour, and to a less salient contrast, the Mandarin low-rising versus low-dipping tone (T2-T3), which have similar onsets and contours. For the salient T1-T3 contrast, there is a p-MMR at birth and an MMN from 6 months through 12, 18, and 24 months. For the less salient T2-T3 contrast, there is no p-MMR at birth, a p-MMR from 6 months through 12 and 18 months, and none at 24 months, perhaps suggesting the imminent emergence of an MMN beyond 24 months. It should be noted that these lexical tone studies tested infants’ mismatch responses to their native tones; hence the developmental pattern they found could be the result of maturation, learning, or both. To understand the commonalities and specificities of the neural mechanisms that may underlie pitch perception development in speech and music, it is crucial that the stimuli be equally familiar to the infants, so that any observed difference cannot be due to learning particular pitch patterns in one domain but not the other. In particular, given the change in lateralisation for speech but not for pure tone stimuli observed in the first year of life in the fNIRS studies (Sato et al., 2010), it is plausible that the maturation of neural responses follows different timelines for different types of stimuli (e.g. lexical tones versus musical pitch in the current study).

In summary, although there is both neural and behavioural evidence for common processing mechanisms underlying speech and music perception in adults (Bidelman et al., 2011, 2013; Chen et al., 2018; Krishnan et al., 2010), research on whether pitch processing in speech and music is linked early in life is scarce. Studies on neural discrimination of pitch have focused on either music or language, and for both it remains uncertain how the mismatch response develops early in life. Both speech and music are uniquely human and are used universally across human cultures to serve fundamental communicative purposes (Patel, 2008). How young infants respond to pitch in speech and music will shed light on whether common brain mechanisms subserve these high-level cognitive abilities and on the extent to which neural responses develop in a domain-specific or domain-general manner.

In this study, we tested English-learning 4-, 8-, and 12-month-old infants as well as native English-speaking adults, using the same stimuli and procedure as in the study of Chinese and Dutch adults by Chen et al. (2018). Chinese lexical tones and three-note musical melodies with comparable pitch contours were used as stimuli in the speech and music conditions, respectively. These stimuli were chosen because they entail contour variation, which plays a central role in both domains. We expected the English-language adults to show brain responses similar to those of the Dutch participants in Chen et al. (2018), namely a single MMN elicited by the lexical tones, two separate MMNs elicited by the musical melodies (one for each note change), and a positive correlation between the MMNs in the two conditions. For the English-learning infants, if the detection of pitch change in speech and in music develops in parallel, we would expect (i) the p-MMR to emerge at the same age for speech and music, and (ii) the shift from p-MMR to MMN to occur at the same age for speech and music. More specifically, based on the resource sharing framework (Patel, 2012), we predict that the shift from p-MMR to MMN will occur at the same time for speech and music, and, based on previous research on pitch MMN for speech, that this shift will occur between 4 months (p-MMR) and 8 months (MMN). Alternatively, based on the perceptual attunement literature, we would expect a p-MMR in all three age groups (i.e. 4-, 8-, and 12-month-olds), as pitch changes are not lexically contrastive in English.

Materials and methods

The ethics committee for human research at Western Sydney University approved all the experimental methods used in the study (Approval number: H9660). Informed consent was obtained from all adult participants and parents of the infant participants.

Participants

Fifteen adult native speakers of Australian English (mean age 26.3 years, SD = 6.7 years, 10 females) were tested, along with 54 healthy infants: 18 4-month-olds (range: 4 months 4 days to 5 months 5 days, 10 girls), 18 8-month-olds (range: 7 months 22 days to 9 months 4 days, 10 girls), and 18 12-month-olds (range: 11 months 10 days to 13 months 3 days, 11 girls). All infant participants were recruited through the BabyLab Register at the MARCS Institute for Brain, Behaviour and Development, Western Sydney University, Australia. The adult participants were completing or had recently completed bachelor's or graduate programmes. None of the adult participants had learned a tone language; five of them had had music lessons (mean duration = 3.4 years, SD = 1.8 years). All adult participants were right-handed according to self-report and reported normal hearing. All the infants were raised in a monolingual Australian English environment. None of the infants’ parents reported any hearing impairment or any ear infection in the two weeks prior to the experiment. One additional 12-month-old girl was tested but excluded from the analysis because she removed the EEG cap shortly after the experiment started, and one additional 8-month-old boy was excluded due to fussiness.

Stimuli

The lexical tone and musical stimuli were the same as in Chen et al. (2018). The Mandarin Chinese rising tone (tone 2, T2) and dipping tone (tone 3, T3) were used as stimuli, each on the syllable /ma/. The speaker was a female native speaker of Mandarin Chinese, who was recorded producing /ma2/ and /ma3/ in isolation in a sound-proof phonetics laboratory equipped with a Tascam DA-40 DAT recorder and a Sennheiser ME-64 microphone. The durations of the two lexical tone tokens (one token for each tone) were time-normalised to 667 ms. The pitch contours of these naturally produced /ma2/ and /ma3/ tokens were extracted using Praat (Boersma & Weenink, 2011), and the pitch contour of T2 was resynthesised onto the original T3 syllable using the overlap-add method. In this way, the two lexical tones differed only in pitch and had identical segments and duration. The pitch of the initial 30 ms of both syllables was adjusted manually in Praat so that their onsets were at the same F0 level. It should be noted that lexical tones are produced with variable durations in real-life speech; nonetheless, the duration normalisation resulted in natural-sounding syllables: multiple native Mandarin speakers listened to the stimuli and all agreed that they sounded natural.

The music stimuli were generated to match the pitch contours of the lexical tones. Piano tones F3 (174.61 Hz), F#3 (185.00 Hz), C#3 (138.59 Hz), G3 (196.00 Hz) and A#3 (233.08 Hz) were synthesised in the Nyquist software (http://www.cs.cmu.edu/~music/music.software.html), which uses equal-temperament tuning with A4 (the A above middle C) at the standard 440 Hz. The notes were generated with the default Nyquist duration of a sixteenth note (250 ms). F3, G3 and A#3 were then concatenated to form a rising melody, and F3, C#3 and F#3 to form a dipping melody. To ensure continuity and naturalness, each melody was then adjusted in Praat to a duration of 667 ms, which resulted in slightly different durations for each note: 220 ms for the first, 226 ms for the second, and 221 ms for the third. The melodies were then resynthesised with the overlap-add method so that the lexical tones and the musical melodies were synthesised in the same way. As can be seen in Figure 1, the lexical tones and the musical melodies shared the same pitch onset and offset and exhibited comparable pitch movement over the duration of the stimuli, but were clearly lexical tones (a continuous pitch contour realised by a human voice) and musical melodies (discrete piano notes), respectively.
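The note frequencies above follow from twelve-tone equal temperament with A4 = 440 Hz, in which each semitone multiplies frequency by 2^(1/12). The short Python sketch below recomputes them as a check; the MIDI-style note numbering (A4 = 69) is an assumption used only as a convenient index and is not part of the original stimulus pipeline, which used Nyquist.

```python
# Equal-temperament note frequencies with A4 = 440 Hz.
# MIDI-style numbering (A4 = 69) is only a convenient, assumed index.
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}

def note_to_hz(name: str, octave: int, a4: float = 440.0) -> float:
    """Return the 12-TET frequency (Hz) of a note such as ('F', 3)."""
    midi = 12 * (octave + 1) + NOTE_OFFSETS[name]
    # Each semitone away from A4 scales frequency by 2 ** (1 / 12)
    return a4 * 2 ** ((midi - 69) / 12)

rising = [("F", 3), ("G", 3), ("A#", 3)]
dipping = [("F", 3), ("C#", 3), ("F#", 3)]
for label, melody in (("rising", rising), ("dipping", dipping)):
    print(label, [round(note_to_hz(n, o), 2) for n, o in melody])
```

The rising melody climbs by 2 and then 3 semitones, while the dipping melody falls by 4 semitones and then rises by 5, which is how the discrete notes approximate the continuous T2 and T3 contours.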

Paradigm

The paradigm was the same as in Chen et al. (2018). Mismatch responses were recorded in two blocks, a lexical tone block and a music block, with block order counterbalanced across participants. In the lexical tone condition, the standard was the T2 syllable and the deviant was the T3 syllable; correspondingly, in the music condition, the standard was the rising melody and the deviant was the dipping melody.

Each block comprised 600 stimuli, of which 480 (80%) were standards and 120 (20%) deviants. Each block began with 10 repetitions of the standard, after which standards and deviants were presented in a pseudo-random order with the constraint that deviants were separated by at least two standards. The inter-stimulus interval (ISI) was randomly varied between 450 and 550 ms to prevent possible anticipatory ERP effects that might overlap with the mismatch responses.
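One way to generate a block that satisfies these constraints is sketched below in Python. It is an illustrative assumption, not the stimulus-presentation script used in the study: it counts the 10 lead-in standards among the 480, scatters the spare standards at random across the gaps between deviants, and assumes the ISI jitter is uniformly distributed, which the text does not specify.

```python
import random

def oddball_sequence(n_total=600, n_deviant=120, n_lead=10, min_gap=2, seed=None):
    """Build a standard ('S') / deviant ('D') sequence that starts with n_lead
    standards and keeps at least min_gap standards between deviants."""
    rng = random.Random(seed)
    n_standard = n_total - n_deviant
    # Standards left over after the lead-in and the mandatory gaps
    spare = n_standard - n_lead - n_deviant * min_gap
    if spare < 0:
        raise ValueError("too few standards for the spacing constraint")
    # Scatter the spare standards at random over the n_deviant + 1 gaps
    extra = [0] * (n_deviant + 1)
    for _ in range(spare):
        extra[rng.randrange(n_deviant + 1)] += 1
    seq = ["S"] * n_lead
    for i in range(n_deviant):
        seq += ["S"] * (min_gap + extra[i]) + ["D"]
    seq += ["S"] * extra[n_deviant]
    return seq

def jittered_isis(n, lo_ms=450.0, hi_ms=550.0, seed=None):
    """Draw n inter-stimulus intervals uniformly between lo_ms and hi_ms."""
    rng = random.Random(seed)
    return [rng.uniform(lo_ms, hi_ms) for _ in range(n)]

seq = oddball_sequence(seed=1)
isis = jittered_isis(len(seq), seed=2)
print(seq.count("S"), seq.count("D"))
```

Building each deviant together with its two mandatory preceding standards guarantees the spacing rule by construction, so no rejection sampling is needed.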

Procedure

The experiment was conducted in a sound-attenuated room in the XXXX lab at XXXX University. For the adult participants, a participant-selected silent movie with subtitles was played on a computer screen during testing. The infant participants sat on their caregivers’ laps while infant-friendly silent animated videos were played on the computer screen, and parents were instructed not to talk during the experiment. Toys were placed on the table in front of the infant for them to play with if they wanted to. The distance between the participant's eyes and the screen was ∼1 m, and the experimental stimuli were presented at 70 dB SPL through two loudspeakers, one on each side of the screen. EEG was recorded from a 128-channel EGI system using a HydroCel Geodesic Sensor Net, and all electrodes had impedances lower than 50 kΩ at the beginning of the experiment. EEG was recorded at a sampling rate of 1 kHz with an online band-pass filter of 0.1–100 Hz, and the online recording was referenced to the vertex.

EEG analysis

Adults

The EEG data were analysed offline using NetStation 4.5.7 software and the EEGLAB toolbox (version 13.1.1b in Matlab 2015b; Delorme & Makeig, 2004). The raw recordings were band-pass filtered in NetStation 4.5.7 between 0.1 and 30 Hz and then down-sampled to 250 Hz before further analysis. The continuous recordings were re-referenced to the averaged mastoids and segmented into 1000 ms epochs, from 100 ms before stimulus onset (baseline) to 900 ms after stimulus onset (in the music condition, the onset of the first note). Artefact rejection was conducted on all 128 channels with a threshold of ±100 µV. The remaining artefact-free trials were averaged to obtain the ERPs of each individual participant, and the individual ERPs were averaged to obtain grand average waveforms. The mean numbers of accepted deviants in the lexical tone and music conditions were 85 (SD = 16) and 88 (SD = 23), respectively.
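The epoching, baseline and artefact-rejection steps can be sketched with NumPy as follows. This is a hypothetical re-implementation, not the NetStation/EEGLAB pipeline used in the study; it assumes data that are already filtered, down-sampled to 250 Hz and re-referenced, in microvolts, with onsets given as sample indices, and it interprets the ±100 µV criterion as an absolute-amplitude threshold applied after baseline correction.

```python
import numpy as np

FS = 250              # sampling rate after down-sampling (Hz)
PRE_MS, POST_MS = 100, 900

def epoch(continuous, onsets, fs=FS, pre_ms=PRE_MS, post_ms=POST_MS):
    """Cut epochs around stimulus onsets (sample indices) from a
    (n_channels, n_samples) array and subtract the pre-stimulus mean."""
    pre = int(round(pre_ms * fs / 1000))
    post = int(round(post_ms * fs / 1000))
    epochs = np.stack([continuous[:, o - pre:o + post] for o in onsets])
    baseline = epochs[:, :, :pre].mean(axis=2, keepdims=True)
    return epochs - baseline      # (n_trials, n_channels, pre + post)

def reject_artefacts(epochs, threshold_uv=100.0):
    """Keep trials whose absolute amplitude stays within the threshold on every channel."""
    keep = np.all(np.abs(epochs) <= threshold_uv, axis=(1, 2))
    return epochs[keep], keep

def average_erp(epochs):
    """Average the accepted trials into one ERP per channel."""
    return epochs.mean(axis=0)
```

Standard and deviant trials would be epoched and averaged separately, and the difference wave obtained by subtracting the standard ERP from the deviant ERP.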

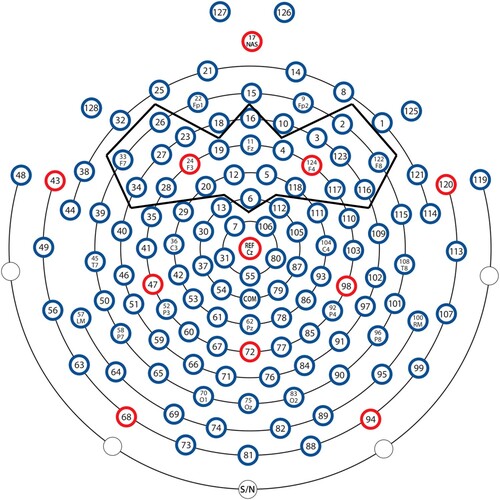

Since the MMN is mainly fronto-centrally distributed, since it was impossible for the infants to stay still during the experiment, and to allow comparison of the adult and infant ERPs, channels were averaged into three regions on the HydroCel Geodesic Sensor Net (HCGSN) for all participants: E5, E11 and E12 for the frontal-central ERPs (FC hereafter), E24, E27 and E28 for the frontal-left ERPs (FL hereafter), and E117, E123 and E124 for the frontal-right ERPs (FR hereafter). Such electrode averaging helps to reduce noise (He et al., 2007).
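A minimal sketch of this electrode averaging is given below, assuming the ERP is stored as a channels × samples NumPy array whose row order is given by a list of HCGSN channel labels; the function and variable names are illustrative.

```python
import numpy as np

# Electrode groups from the text, using HCGSN labels E1..E128
ROIS = {
    "FL": ["E24", "E27", "E28"],
    "FC": ["E5", "E11", "E12"],
    "FR": ["E117", "E123", "E124"],
}

def roi_average(erp, channel_names, rois=ROIS):
    """Average a (n_channels, n_samples) ERP over each electrode group."""
    index = {name: i for i, name in enumerate(channel_names)}
    return {roi: erp[[index[c] for c in chans]].mean(axis=0)
            for roi, chans in rois.items()}
```

Looking channels up by label rather than by position avoids off-by-one errors between the 1-based HCGSN labels and 0-based array rows.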

MMN peaks on the grand average difference wave were first identified visually. To determine whether these peaks were significant, for each condition, point-by-point t-tests were conducted in a 21-point window around each grand average peak (10 points before and 10 points after), and a peak was considered significant if at least six consecutive points (24 ms at the 250 Hz sampling rate) showed a significant difference (Chen et al., 2018; Rinker et al., 2007). Individual MMN peak latencies were identified as the latency of the most negative peak at FC within a 100 ms window around the grand average peak (50 ms before and after). Individual MMN amplitudes were calculated as the mean amplitude in a 40 ms window around the grand average peak (20 ms before and after) at FL, FC, and FR (Chen et al., 2018; Peltola et al., 2003). For each condition, MMN amplitudes were analysed with a repeated-measures ANOVA with site (FL, FC, FR) as the within-subject factor.
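The peak-testing and measurement steps can be sketched as below, assuming per-participant ERPs at one site stored as participants × samples arrays at 250 Hz and SciPy's paired t-test (`scipy.stats.ttest_rel`). The run-length rule is implemented as at least six consecutive significant points (`min_run=6`); the function names and the two-tailed α = .05 are assumptions, and this is not the authors' analysis code.

```python
import numpy as np
from scipy import stats

FS = 250  # Hz, so one sample = 4 ms

def longest_run(mask):
    """Length of the longest run of consecutive True values."""
    best = run = 0
    for m in mask:
        run = run + 1 if m else 0
        best = max(best, run)
    return best

def peak_is_significant(dev, std, peak_idx, half_width=10, min_run=6, alpha=0.05):
    """dev, std: (n_subjects, n_samples) ERPs at one site.
    Paired t-tests at each of the 2 * half_width + 1 points around the
    grand-average peak; significant if >= min_run consecutive p < alpha."""
    lo, hi = peak_idx - half_width, peak_idx + half_width + 1
    _, p = stats.ttest_rel(dev[:, lo:hi], std[:, lo:hi], axis=0)
    return longest_run(p < alpha) >= min_run

def individual_peak_latency(diff, peak_idx, half_ms=50, fs=FS):
    """Sample index of each subject's most negative point within
    +/- half_ms of the grand-average peak (diff = deviant - standard)."""
    h = int(half_ms * fs // 1000)  # whole samples in half_ms
    seg = diff[:, peak_idx - h:peak_idx + h + 1]
    return peak_idx - h + np.argmin(seg, axis=1)

def mean_amplitude(diff, peak_idx, half_ms=20, fs=FS):
    """Each subject's mean amplitude within +/- half_ms of the grand-average peak."""
    h = int(half_ms * fs // 1000)
    return diff[:, peak_idx - h:peak_idx + h + 1].mean(axis=1)
```

Requiring a run of consecutive significant points, rather than a single significant point, guards against isolated false positives across the 21 uncorrected tests.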

Infants

The infant EEG data were analysed in the same way as the adult data, except that a 0.3–20 Hz filter was applied. As it was impossible for the infants to remain stationary during the entire experiment, and as auditory ERPs are mainly fronto-centrally distributed, conducting artefact rejection on all channels would have discarded clean signals from the fronto-central sites. To remove artefacts while retaining sufficient data from each infant, we conducted artefact rejection on the 23 frontal channels (Figure 2), removing trials with an amplitude larger than 150 µV. The remaining artefact-free trials were averaged to obtain the ERPs for each infant. Infants with more than 20 artefact-free deviant trials were included in the final analysis, and all infants met this criterion. The mean numbers of accepted trials and their standard deviations are listed in Table 1. In addition, as both MMN and p-MMR responses were expected for the infants, and as the MMN is seen 100–250 ms after change onset whereas the p-MMR is a broad response that can extend from 100 ms to 500 ms, a wider time window was included in the infant analysis.
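The infant-specific rejection and inclusion rules differ from the adult ones only in the channel subset, the threshold and the trial count, as in this hedged sketch. The `frontal_idx` argument is a hypothetical parameter, since the 23 frontal channels are identified only in a figure, and the threshold is again treated as an absolute amplitude.

```python
import numpy as np

def infant_clean_trials(epochs, is_deviant, frontal_idx, threshold_uv=150.0, min_deviants=20):
    """epochs: (n_trials, n_channels, n_samples) in microvolts; is_deviant: bool per trial.
    Reject trials using the frontal subset only, then apply the inclusion rule
    (more than min_deviants accepted deviant trials)."""
    frontal = epochs[:, frontal_idx, :]
    keep = np.all(np.abs(frontal) <= threshold_uv, axis=(1, 2))
    n_deviants = int(np.sum(keep & is_deviant))
    return epochs[keep], keep, n_deviants > min_deviants
```

Restricting the check to the frontal subset means that movement artefacts on posterior channels, which are not analysed, no longer cost whole trials.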

Table 1. Mean, standard deviation (SD), median and range of number of accepted trials in the lexical tone and the music conditions.

Analyses for the lexical tone and music conditions were conducted separately. As previous studies provide no consensus on the latency and polarity of the infant mismatch response, we first identified, for each age group, the time windows in which the standard and deviant ERPs differed significantly, using point-by-point t-tests from 300 to 900 ms after stimulus onset. Next, to examine how the ERPs and mismatch responses differed across age groups and conditions, a mixed-design ANOVA was conducted on the mean amplitudes of standards and deviants in each consecutive 50 ms window from 300 to 900 ms after stimulus onset (i.e. twelve ANOVAs per condition), with site (FL, FC, FR) and type (standard or deviant) as within-subject variables and age group (4-, 8-, 12-month-olds) as the between-subject variable (Cheng et al., 2013, 2015).
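The twelve 50 ms analysis windows and their mean amplitudes can be computed as sketched below, assuming epochs that start 100 ms before stimulus onset and are sampled at 250 Hz. Windows are treated as half-open intervals (lo, hi] to match labels such as 301–350 ms; this boundary convention is an assumption. The resulting window means would then enter the type × site × age ANOVA in a statistics package.

```python
import numpy as np

FS = 250               # Hz
EPOCH_START_MS = -100  # epoch starts 100 ms before stimulus onset

def window_means(erp, start_ms=300, stop_ms=900, width_ms=50, fs=FS, t0_ms=EPOCH_START_MS):
    """Mean amplitude of erp (..., n_samples) in consecutive (lo, hi] ms windows,
    keyed by (lo + 1, hi) labels such as (301, 350)."""
    times = t0_ms + np.arange(erp.shape[-1]) * 1000 / fs
    out = {}
    for lo in range(start_ms, stop_ms, width_ms):
        hi = lo + width_ms
        mask = (times > lo) & (times <= hi)
        out[(lo + 1, hi)] = erp[..., mask].mean(axis=-1)
    return out
```

With 4 ms sampling, each window contains 12 samples, and the last window (851–900 ms) ends at the final sample of the 1000 ms epoch.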

Results

Results are reported separately, first for the adults and then for the infants.

Adult participants

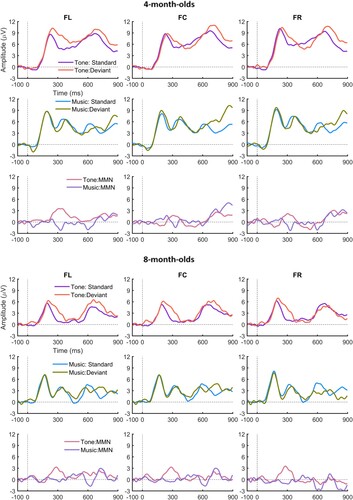

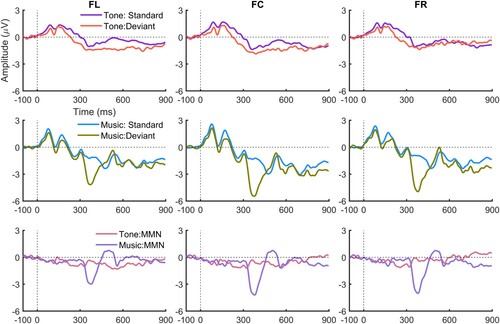

For the adults, the standard, deviant and difference waves in the lexical tone and music conditions are plotted in Figure 3, and the mean peak latencies (ms) and mean amplitudes (µV) of the MMNs in the two conditions are set out in Table 2.

Figure 3. Standard and deviant ERPs and difference waves of the adult participants in the lexical tone and music conditions.

Table 2. For the adults, mean (SD) peak latencies (ms) and mean (SD) amplitudes (µV) of the MMNs and LDN in the music condition and of the MMN in the lexical tone condition.

Lexical tones

For the lexical tone condition, a grand average MMN peak was identified at FC in the 200–400 ms window with a peak latency of 324 ms (time range of significant difference between the standard and deviant ERPs: 204–344 ms), which was significant based on the 21-point t-tests. The effect of site (FL, FC, FR) was not significant, F(2, 28) = 0.27, p = .76, indicating comparable MMN amplitude at FL, FC, and FR. Therefore, in the lexical tone condition, the adults showed a significant MMN with a symmetrical scalp distribution, indicating successful neural discrimination of the lexical tones.

Musical melodies

For the music condition, based on the findings of Chen et al. (2018), two negative peaks were visually identified on the difference waves, between 200 and 400 ms and between 400 and 600 ms, for the note2 and note3 MMNs, respectively. The grand average peak latencies of the note2 and note3 MMNs were 380 ms and 560 ms, respectively. For note2, the standard and deviant ERPs differed significantly from 332 to 444 ms after deviant onset, whereas for note3 they differed significantly only at 560 and 564 ms (two consecutive points). Therefore, only the note2 MMN, and not the note3 MMN, was significant under the six-point criterion.

For the note2 MMN, site (FL, FC, FR) had a significant effect, F(2, 28) = 5.07, p < .05, partial η2 = 0.27. Bonferroni-corrected post hoc analyses indicated that the amplitude at FC was significantly more negative than at FL, but no other comparison was significant. As Figure 3 also shows, a prominent MMN was elicited by the note2 change, whereas the MMN to note3 was much more attenuated, possibly because the note3 change was the second of two consecutive changes, so that the MMN to it may have been masked by the preceding response to the note2 change.

Cross-condition comparison

As only the note2 MMN was significant, we tested the correlation between the lexical tone MMN and the note2 MMN at FL, FC, and FR. Pearson's r (one-tailed) reached significance (p < .05) for the tone MMN at FR and the note2 MMN at FR (r = 0.50, p = .029). However, after correction for multiple comparisons using the false discovery rate (FDR; Benjamini & Hochberg, 1995), this correlation was no longer significant. This finding suggests at most a weak association between the discriminative responses to lexical tones and to musical pitch.
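For reference, the Benjamini-Hochberg procedure ranks the m p-values in ascending order and rejects every hypothesis up to the largest rank k for which p(k) ≤ (k/m)·q. A short sketch follows. The p-values for the FL and FC correlations are not reported, so any values passed to this function here are hypothetical.

```python
def benjamini_hochberg(pvals, q=0.05):
    """Return one boolean per p-value: True where H0 is rejected under BH FDR control at level q."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # Largest rank whose p-value falls under its step-up threshold
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        rejected[i] = rank <= k_max
    return rejected
```

With m = 3 correlations, the smallest p-value survives only if it is at most .05/3 ≈ .017 or if the other p-values are themselves small enough to pass their higher thresholds (2/3 × .05 or .05).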

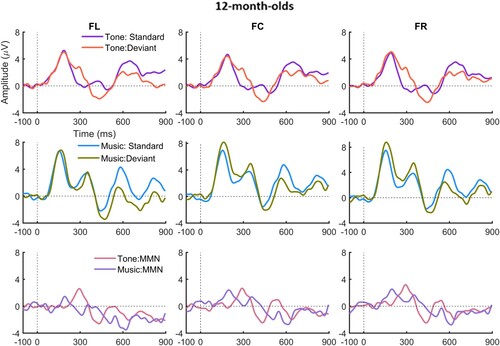

Infant participants

For the infants, Figure 4 plots the ERPs of the 4-, 8- and 12-month-olds to the standard and deviant, together with the difference waves, for the lexical tone condition (upper panel) and the music condition (lower panel); Table 3 presents the latencies (consecutive points) at which the standard and deviant differed significantly at each site in the two conditions. As can be seen, in the lexical tone condition all three age groups showed a significant p-MMR, while in the music condition the 4-month-olds showed a significant late p-MMR (800 ms after deviant onset), the 12-month-olds an MMN at around 600 ms, and the 8-month-olds no mismatch response, either positive or negative.

Figure 4. ERPs to the standard and deviant and the difference waves in the lexical tone and music condition of the infants.

Table 3. For the infants, time windows (ms) in which the standard and deviant differed significantly from the stimulus onset at FL, FC and FR.

Lexical tones

For the lexical tone condition, type (standard/deviant) × site (FL, FC, FR) × age (4-, 8-, 12-month-olds) mixed ANOVAs were conducted for each consecutive 50 ms window between 300 ms and 900 ms after stimulus onset (i.e. twelve ANOVAs in total). The Bonferroni-corrected alpha level was adjusted to .004 to control for Type I error. For all time windows, there was a significant main effect of age, all F(2, 49) > 7.45, p < .001, partial η2 > 0.23, with the ERPs of the 4-month-olds being more positive than those of the 8- and 12-month-olds. Type showed a significant main effect in the 301–350 ms time window, F(1, 49) = 11.90, p < .001, partial η2 > 0.20, with ERPs to the deviant more positive than those to the standard; the interaction between type and site was not significant in any time window.
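The corrected alpha level follows from dividing the nominal α = .05 by the number of window-wise ANOVAs per condition (k = 12). This assumes, as the text implies, that the correction was applied across the twelve windows:

```latex
\alpha_{\mathrm{Bonf}} = \frac{\alpha}{k} = \frac{0.05}{12} \approx 0.0042
```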

As the p-MMR was significant for all three age groups at around 300 ms, we further analysed the mean p-MMR peak latencies, which were 307 ms (SD = 31 ms), 286 ms (SD = 31 ms), and 298 ms (SD = 18 ms) for the 4-, 8-, and 12-month-olds, respectively. A one-way ANOVA on individual peak latencies with age group (4-, 8-, 12-month-olds) as the independent variable found no significant effect, F(2, 49) = 2.57, p = .09.

Musical melodies

For the music condition, there were significant main effects of age in the 401–450 ms, 451–500 ms, and 851–900 ms time windows, F(2, 49) > 10.38, all ps < .001, all partial η2s > .30, with the 4-month-olds showing more positive ERPs than the 8- and 12-month-olds and no significant difference between the two older groups. There was a significant main effect of type in the 601–650 ms time window, F(1, 49) = 5.23, p = .027, partial η2 = .10, with the deviant ERP more negative than the standard ERP. There were significant main effects of site in the 701–750 ms, 751–800 ms, 801–850 ms, and 851–900 ms windows, F(2, 98) > 8.33, all ps < .001, all partial η2s > .12. Bonferroni-corrected post hoc pair-wise comparisons revealed that in each of these windows FC showed a more positive response than FL but a more negative response than FR (all ps < .05). The three-way interaction between type, site and age was significant in the 801–850 ms and 851–900 ms windows, F(4, 98) > 3.30, all ps < .02, all partial η2s > .12, and Bonferroni-corrected pair-wise comparisons showed that only for the 4-month-olds were the deviant ERPs significantly more positive than the standard ERPs (p-MMR) at FC (p < .05), whereas no significant difference between standard and deviant ERPs was found for the 8- or 12-month-olds.

Together with the corresponding figures, these results show that the 4-month-olds had a significant late p-MMR between 800 and 900 ms after stimulus onset. All three age groups showed a negative deflection at around 600 ms after stimulus onset, yet this deflection was significant only for the 12-month-olds in the point-by-point t-tests, and it occurred at a later latency than the adult MMN. Comparing the infants' waveforms to those of the adults, it is likely that the infants' negative peak at around 600 ms was the discriminative response to note2. No reliable mismatch response to note3, either positive or negative, was observed for the infants.
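A point-by-point analysis of this kind tests, at every sample of the difference wave, whether the group mean departs from zero. The sketch below computes one-sample t statistics across infants. The critical t value is supplied by the caller (for example, from a t table for n − 1 degrees of freedom). Any additional criterion the authors may have applied, such as a minimum run of consecutive significant points, is not stated here and is not implemented.

```python
from math import sqrt
from statistics import fmean, stdev

def pointwise_t(diff_waves):
    """One-sample t statistic (against 0) at every time point.

    diff_waves: one deviant-minus-standard wave per infant, all the same length.
    """
    n = len(diff_waves)
    t_values = []
    for samples in zip(*diff_waves):
        sd = stdev(samples)
        # t is undefined when every infant has the same value at this point
        t_values.append(fmean(samples) / (sd / sqrt(n)) if sd > 0 else float("nan"))
    return t_values

def significant_points(t_values, t_crit):
    """Indices where |t| exceeds a caller-supplied two-tailed critical value."""
    return [i for i, t in enumerate(t_values) if abs(t) > t_crit]

waves = [[1.0, 0.0], [2.0, 1.0], [3.0, -1.0]]  # three hypothetical infants, two time points
t = pointwise_t(waves)
print(significant_points(t, t_crit=4.303))  # [] (critical t for df = 2 is about 4.303)
```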

A significant developmental change in the response to the musical melodies was observed at 800–900 ms after stimulus onset: a p-MMR was present for the 4-month-olds but absent for the two older groups. As the point-by-point t-tests failed to find an MMN in the 601–650 ms window for the 4-month-olds, their p-MMR in the 800–900 ms window likely reflects neural discrimination of note2. In summary, the infants' mismatch response to the musical notes appears to change from a late p-MMR to a more adult-like MMN between 4 and 12 months.

Cross-condition comparison

To determine whether neural discriminative responses to the lexical tones and to the musical melodies were correlated, Pearson's r was calculated for the mismatch response amplitudes at FL, FC, and FR in the significant windows, namely the 301–350 ms window in the lexical tone condition and the 601–650 ms window in the music condition. As only the 4-month-olds exhibited a p-MMR in the 800–900 ms window in the music condition, we also tested, for the 4-month-olds only, the correlation between the mismatch response in the 301–350 ms lexical tone window and those in the 801–850 ms and 851–900 ms music windows. No significant cross-condition correlation was found at any site, suggesting that neural discriminative responses in the two domains may be independent.
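A sketch of the per-site cross-condition correlation follows, with a correction across the three sites. This section does not say which correction was used. The Benjamini–Hochberg false discovery rate procedure, which appears in the reference list, is used here only as one plausible choice, and the p-values in the usage line are hypothetical. P-values for r would come from the t distribution with n − 2 degrees of freedom, and that step is not computed here.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def r_to_t(r, n):
    """t statistic (df = n - 2) for testing r against zero; requires |r| < 1."""
    return r * sqrt((n - 2) / (1 - r * r))

def benjamini_hochberg(p_values, q=0.05):
    """True where a test is rejected while controlling the false discovery rate at q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0  # largest rank k with p_(k) <= k/m * q
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        rejected[i] = rank <= k_max
    return rejected

# Hypothetical p-values for the FL, FC and FR cross-condition correlations
print(benjamini_hochberg([0.04, 0.30, 0.02]))  # [False, False, False]
```

In this hypothetical case, two uncorrected p-values fall below .05, yet neither survives the correction across three sites.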

Discussion

Adults

Lexical tones

Lexical tones elicited MMNs, despite being non-native for these Australian listeners. As non-tone language speakers, the adults here must have responded to the acoustic properties rather than to the phonemic function of the lexical tones. These results are consistent with the MMN results of Chen et al. (Citation2018) and with behavioural studies (Burnham & Brooker, Citation2002; Chen et al., Citation2016). So, while non-tone language listeners show attenuated perceptual discrimination of lexical tones, it appears that such difficulties are due to linguistic factors, which come into play after acoustic-level discrimination. This hypothesis is consistent with non-tone language listeners' better discrimination of lexical tones at short than at longer interstimulus intervals (ISIs), both at the MMN level with 600 ms versus 2600 ms ISIs (Yu et al., Citation2017) and at the behavioural level with 500 ms versus 1500 ms ISIs (Burnham et al., Citation1996). Shorter ISIs allow acoustic processing, but longer ISIs preclude simple template matching and necessitate longer-term storage, which, in the case of linguistic (speech) stimuli, involves phonological processing (Werker & Logan, Citation1985). The ISI in the current study was randomised between 450 and 550 ms, which possibly allowed the adults to process the lexical tones on an acoustic, or at least phonetic (Werker & Logan, Citation1985), basis, leading to successful neural discrimination.

Musical melodies

The musical melodies also elicited MMNs. An MMN was observed for note2, whereas for note3 it was much more attenuated, possibly due to a masking effect of the note2 change. Thus it seems that the neural responses to musical melodies were note-based, which may provide the basis for the processing of melodic contour. As can be seen in the corresponding figure, the MMN elicited by note2 had a larger amplitude than the MMN elicited by the lexical tones, and the same is true for the N1-P2 complex. Compared to the Dutch and Chinese listeners tested by Chen et al. (Citation2018), the Australian English listeners in the current study showed smaller amplitudes of the standard and deviant ERPs as well as of the MMNs. For now, it is difficult to ascertain whether such differences in amplitude truly reflect processing differences or whether they result from different testing instruments (i.e. a Biosemi system for the Dutch and Chinese participants in Chen et al. (Citation2018), and an EGI system for the Australian participants).

Cross-condition comparison

It is clear that the lexical tones and musical melodies elicited stimulus-specific neural responses: compared to the continuous pitch change in the lexical tones, the abrupt onset of the individual musical notes appears to enhance obligatory auditory responses as well as the MMN. The MMN peaked 62 ms earlier in the lexical tone condition (314 ms) than in the music condition (376 ms), and the pitch contours changed gradually rather than discretely in the lexical tone condition. It is therefore likely that the adult brain detected the pitch change earlier in the lexical tone condition than in the music condition. Assuming a similar delay between pitch change and MMN peak in both conditions, and given that note2 started 220 ms after stimulus onset, the change in the lexical tones would presumably have been registered at around 158 ms (220 ms minus 62 ms).
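The latency inference above can be written out explicitly. It rests on two assumptions. First, the MMN follows the point at which standard and deviant diverge by the same delay, written here as Δ, in both conditions. Second, in the music condition that divergence coincides with the onset of note2.

```latex
\begin{aligned}
\Delta &= t^{\mathrm{MMN}}_{\mathrm{music}} - t_{\mathrm{note2}} = 376 - 220 = 156\ \text{ms},\\
\hat{t}_{\mathrm{change,\,tone}} &= t^{\mathrm{MMN}}_{\mathrm{tone}} - \Delta = 314 - 156 = 158\ \text{ms}\\
&= t_{\mathrm{note2}} - \bigl(t^{\mathrm{MMN}}_{\mathrm{music}} - t^{\mathrm{MMN}}_{\mathrm{tone}}\bigr) = 220 - 62 = 158\ \text{ms}.
\end{aligned}
```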

Unlike the Dutch participants in Chen et al. (Citation2018), who showed positive correlations between the MMNs elicited in the two conditions, the adults here showed a cross-condition correlation that disappeared after correction for multiple comparisons. In other words, this study found only limited evidence for cross-domain correlation in neural responses to pitch change. Because the participants in Chen et al. (Citation2018) were tested with different instruments in different labs, it is difficult to ascertain whether the attenuated MMNs and correlations here were due to apparatus differences or reflect true processing differences between the Dutch and English listeners.

Infants

Lexical tones

For the lexical tones, the ERPs at the three infant ages showed comparable morphology (see the corresponding figure). Consistent with previous studies (Cheng et al., Citation2013; Dehaene-Lambertz, Citation2000), both the standard and the deviant elicited two positive peaks, one around 250 ms and the other around 700 ms, with a negative deflection in between, around 450 ms. All three age groups showed a significant p-MMR at around 300 ms. As there was no significant interaction between trial type and age, the p-MMR to the lexical tones appears to be stable over the first year of life. On the difference wave, a negative deflection followed the p-MMR, and it became more negative from 4 to 12 months. Such a negative deflection might be an immature MMN, yet even at 12 months its magnitude was not significant. Hence, there was no reliable adult-like MMN for lexical tones by 12 months, so the polarity shift of the infant p-MMR to lexical tones presumably occurs at some point after 12 months. These findings are consistent with previous studies in which, in response to acoustically similar vowels, infants and young children showed p-MMRs and 5-year-olds showed an emerging MMN (Shafer et al., Citation2010; Yu et al., Citation2019).

Musical melodies

For the music condition, as in the lexical tone condition, the standard and deviant waveforms showed similar morphology across the three ages. Overall, the difference wave showed a significant negative peak in the 601–650 ms window. Yet separate point-by-point t-tests for each age group revealed that the 4-month-olds showed a significant p-MMR, but only after 700 ms; the 8-month-olds showed no significant mismatch response; and the 12-month-olds showed a significant MMN at around 600 ms. Therefore, the significant MMN in the 601–650 ms window was driven mainly by the 12-month-olds. Unlike the mismatch responses to the lexical tones, which remained positive from 4 to 12 months, the mismatch response to the musical melodies shifted from positive to negative polarity with age. Given the separate responses to the three notes in the adult data, the three positive peaks on the infants' standard ERP at around 200, 400, and 600 ms would appear to reflect responses to the individual notes. It seems that although the infants' standard ERP was time-locked to individual notes, the MMN was not. Given the shift in mismatch response polarity between 4 and 12 months, it is likely that the 8-month-olds were at a transitional stage, in which some infants had already become negative responders while others were still positive responders. Consequently, the positive and negative mismatch responses may have cancelled each other out, leading to no significant mismatch response for the group as a whole.
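The cancellation argument can be illustrated numerically. The amplitudes below are purely hypothetical and are not data from this study. In a transitional group that mixes positive and negative responders, the group mean can sit near zero even though each infant's response is sizeable.

```python
from statistics import fmean

# Hypothetical mismatch amplitudes (µV) in a transitional group: two infants are
# still positive responders and two are already negative responders.
positive_responders = [1.0, 0.8]
negative_responders = [-0.9, -1.1]
group = positive_responders + negative_responders

print(round(fmean(group), 2))                     # -0.05: group mean near zero
print(round(fmean([abs(a) for a in group]), 2))   # 0.95: individual responses are not small
```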

Cross-condition comparison

With regard to cross-domain correlations, within the time windows showing significant mismatch responses (i.e. 301–350 ms for the lexical tone condition and 601–650 ms for the music condition), the standard and deviant ERPs were significantly correlated within as well as across domains, yet the mismatch response showed no cross-domain correlation. Thus, the magnitude of brain activity in response to auditory stimulation seemed to be consistent within a participant, i.e. infants who exhibited more positive responses to one type of auditory stimulus also exhibited more positive responses to the other. Yet individual infants did not seem to respond to pitch changes in the two domains in a consistent way. It should be noted that the infant music MMN occurred at a later latency than the adult music MMN (around 600 ms for the 12-month-olds versus around 380 ms for the adults), whereas the infant lexical tone p-MMR occurred at a latency comparable to that of the adult lexical tone MMN (around 300 ms). Therefore, it seems that the mismatch response, as well as its maturation, is stimulus specific, as we did not find convincing evidence of parallel development of mismatch responses across the two conditions.
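One way to see how raw ERPs can correlate across domains while mismatch responses do not is to give each infant an overall response level that is shared by both conditions. Subtracting the standard from the deviant removes that shared level. The numbers below are hypothetical and chosen only to illustrate this logic; they are not the study's data.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical data for five infants: each has an overall ERP level (offset) shared
# by both conditions, plus a condition-specific deviant-minus-standard mismatch.
offset = [1.0, 2.0, 3.0, 4.0, 5.0]
mmr_tone = [0.2, -0.2, 0.1, -0.1, 0.0]
mmr_music = [0.1, 0.1, -0.2, 0.2, -0.2]

deviant_tone = [o + d for o, d in zip(offset, mmr_tone)]
deviant_music = [o + d for o, d in zip(offset, mmr_music)]

print(round(pearson_r(deviant_tone, deviant_music), 2))  # 0.98: raw ERPs correlate strongly
print(round(pearson_r(mmr_tone, mmr_music), 2))          # -0.34: mismatch responses only weakly
```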

General discussion

In this study, we tested native English-speaking adults' and 4-, 8-, and 12-month-old English-language infants' neural responses to pitch changes realised on lexical tones in human speech and on three-note musical melodies. Table 4 summarises the mismatch responses found for each condition and each age. For the adults, similar to Chen et al. (Citation2018), both the lexical tones and the musical melodies elicited MMNs, and positive correlations between the lexical tone and music MMNs were observed, although they did not survive correction for multiple comparisons. For the infants, p-MMRs to the lexical tones were observed in all three age groups, whereas for the musical melodies, the 4-month-olds showed a p-MMR, the 8-month-olds showed no significant mismatch response, either positive or negative, and the 12-month-olds showed an MMN. Individual infants' ERPs to lexical tones and to musical notes were correlated, but their lexical tone and music mismatch responses were not.

Table 4. Mismatch responses in 4-, 8-, and 12-month-old infants and adults.

Development of mismatch response in early infancy

It is known that the infant brain is sensitive to frequency change and that mismatch responses can be elicited by various pitch patterns (He et al., Citation2007, Citation2009; Stefanics et al., Citation2009). Beyond that, debate centres on how to characterise such mismatch responses. It should be noted that the infants in the current study showed standard and deviant ERP waveforms (an M-shaped waveform when plotted positive up) comparable to those obtained from 6-month-olds in Cheng et al. (Citation2013). In addition, the overall ERP waveforms obtained in the current study were comparable with the ERPs elicited by vowels (Shafer et al., Citation2015; Yu et al., Citation2019), and the cortical auditory evoked potentials (e.g. P1) had similar latencies across these studies. These findings suggest a common, language-universal neural mechanism underlying obligatory auditory responses to lexical tones and possibly to speech sounds in general.

Turning to the discriminative brain responses, previous studies of neural discrimination of lexical tones found p-MMRs to the Mandarin T2–T3 contrast at 6, 12, and 18 months, but no significant mismatch response, either positive or negative, in 24-month-olds (Cheng & Lee, Citation2018; Cheng et al., Citation2013). Here, a p-MMR to lexical tones was found in all three infant age groups, and this positivity occurred at a latency comparable to that of the adult MMN (i.e. around 300 ms). Besides the positivity at around 300 ms, the 4-month-olds also showed a late positivity at around 800 ms. The p-MMR at 300 ms had a shorter latency than that reported by Cheng and Lee (Citation2018) and Cheng et al. (Citation2013), which may be attributable to differences between the stimuli: a 250 ms vowel-only /i/ in those studies versus a 667 ms consonant–vowel /ma/ in the current study. Comparing the native Mandarin Chinese infants tested in Cheng and Lee (Citation2018) and Cheng et al. (Citation2013) with the native English infants tested here, the two groups show different developmental patterns: (i) the English-language infants in the current study showed a general decrease in ERP magnitude over age, but their overall waveforms were similar in morphology, whereas (ii) the Chinese infants (Cheng & Lee, Citation2018; Cheng et al., Citation2013) exhibited qualitatively different waveforms at different ages. These different developmental trajectories might well be related to language input. Lexical tones are phonologically contrastive in Mandarin Chinese, and consequently Chinese infants must learn to use lexical tones to distinguish word meaning. Thus the age-related change in waveforms in the Chinese infants would appear to reflect such learning. By contrast, in English, lexical tones are not phonemic (i.e. not lexically contrastive), and this lack of lexical contrast may have led to the stable waveforms. Alternatively, the non-tone language infants (in particular the 12-month-olds) may have (mis)perceived the lexical tones as a result of learning native intonation patterns, and they may have relied on different acoustic cues than native infants when processing the lexical tones (Gandour & Harshman, Citation1978). In other words, any top-down developmental influence of the native language on the processing of lexical tones may serve, for tone language infants, to maintain perceptual processing of pitch variation at the lexical level, whereas for non-tone language infants it may lead them to disregard pitch variation at the lexical level. It would be interesting for future studies to test Chinese infants' responses to the same musical pitch and lexical tones as those tested here.

In this study, the standard–deviant difference was matched in magnitude across the lexical tone and musical stimuli, with the same pitch levels at onset and offset and the same overall pitch contour. Nevertheless, the developmental trajectories of the mismatch response differed across conditions. While the mismatch response to lexical tones remained positive and occurred at a latency comparable to that of the adult MMN, the mismatch response to the musical tones shifted from a p-MMR to an MMN, both peaking later than the adult MMN. It is well documented that, at a given age, infants can show a p-MMR to some stimuli but an adult-like MMN to others (Cheng & Lee, Citation2018; Lee et al., Citation2012), and it has been hypothesised that the shift from p-MMR to MMN occurs earlier for acoustically salient than for non-salient stimuli (Cheng et al., Citation2013; Lee et al., Citation2012). Our results suggest that the infant brain is sensitive to the acoustic properties of the stimuli, and that equivalent fundamental frequency differences can elicit either a p-MMR or an MMN depending on the nature of the carrier stimulus.

Alternatively, the different developmental courses for the lexical tone and musical stimuli might be due to different degrees of continuity within the stimuli. Many studies have found larger obligatory evoked potentials to stimulus onsets (Wunderlich et al., Citation2006). Consistent with this, the discrete onsets of the three musical notes might have assisted the registration of individual notes in memory and facilitated comparison between the standard and deviant, whereas the continuous pitch contour of the lexical tones might have blurred the temporal locus within the contour at which the standard and deviant tones diverged. In the music condition, for the adults, note2 elicited a clear MMN, while the MMN to note3 was attenuated. It is likely that the discriminative response to note2 masked the response to note3. It should be acknowledged that the change in note3 always followed the change in note2 (i.e. if note2 differed between the standard and the deviant, so did note3), and such predictability may have attenuated the MMN to note3 (Sussman et al., Citation1998; Winkler et al., Citation1996). For the infants, separate responses to each note of the standard could be observed at all three ages; yet although the ERPs appear to be time-locked to individual notes in the standard, the mismatch response is not. Increasing neuronal myelination over development reduces the latency of evoked potentials (Eggermont & Salamy, Citation1988; Moore & Guan, Citation2001), and MMN latency decreases as infants mature (Morr et al., Citation2002). Hence, the longer response latencies of infants, due to maturational factors, may have made any mismatch response to individual notes difficult to observe.

Mismatch response and language and music development

Speech and music exist in all human cultures, and pitch contour is essential to both. However, the way pitch is employed differs across the domains. In speech, pitch is realised by the human voice in smooth and continuous contours. Music, on the other hand, is often organised in discrete pitches (although slides between pitches occur on string instruments and in singing). In addition, pitch is employed with higher precision in music than in speech: a single semitone may be critical for musical structure and perception, while distortion of lexical tone fundamental frequencies can be tolerated to some extent (Patel, Citation2011). As a practical example, compare two singers performing the same song, one of whom sings slightly flat, with two tone-language speakers, one male and one female, producing the same tone: the speakers' pitch height and contour may differ widely, yet the lexical tone identity remains the same.
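To make the precision contrast concrete, the standard equal-tempered definition of a semitone can be applied to pitch intervals. In that definition, the interval from f1 to f2 is 12·log2(f2/f1) semitones, so one semitone corresponds to a frequency ratio of about 1.06, roughly a 6% change in fundamental frequency. The helper below is an illustrative sketch. The example frequencies are arbitrary and are not the stimulus values used in this study.

```python
from math import log2

def semitones(f1_hz, f2_hz):
    """Signed pitch interval from f1 to f2 in equal-tempered semitones."""
    return 12 * log2(f2_hz / f1_hz)

print(semitones(220.0, 440.0))   # 12.0 (one octave)
print(round(2 ** (1 / 12), 4))   # 1.0595: frequency ratio of one semitone
```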

Our results resonate with this domain-specific use of pitch. In the current study, 12-month-olds showed an MMN in the music condition but a p-MMR in the lexical tone condition. As this polarity shift has been hypothesised to occur earlier for acoustically salient than for non-salient contrasts, it seems that pitch differences of the same magnitude are more salient when realised in music than in speech, resulting in a developmentally earlier appearance of the MMN in the music condition. In other words, the infant brain appears to respond to musical pitch differences with higher precision, despite the same actual pitch in the music and speech stimuli.

Numerous studies have found an association between musical and speech pitch processing, both behaviourally and neurally (Bidelman et al., Citation2013; Delogu et al., Citation2006; Wong et al., Citation2007; Wong & Perrachione, Citation2007). The current study, however, found only limited evidence for correlations between the MMNs elicited by the lexical tones and by the musical melodies among the adults, and both the MMNs and the correlations were attenuated compared to those obtained by Chen et al. (Citation2018). For the infants, no correlation was observed between the mismatch responses in the two conditions. In adults, brain networks that process acoustic features present in both music and speech (e.g. amplitude and frequency) overlap anatomically and functionally, and future research should seek to understand how such networks are established early in life.

In sum, both the adult and the infant brain capture specific physical properties of speech and musical pitch. In the first year of life, there tends to be a shift from an infant p-MMR to an adult-like MMN for musical pitch, but a sustained p-MMR for lexical tones in non-tone language infants. No parallel maturation of neural change detection is apparent over this period, suggesting domain-specific development of pitch processing early in life.

Acknowledgements

We would like to thank two anonymous reviewers for their helpful comments on an earlier version of the manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Alho, K., Kujala, T., Paavilainen, P., Summala, H., & Näätänen, R. (1993). Auditory processing in visual brain areas of the early blind: Evidence from event-related potentials. Electroencephalography and Clinical Neurophysiology, 86(6), 418–427. https://doi.org/10.1016/0013-4694(93)90137-K

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300. https://doi.org/10.1111/j.2517-6161.1995.tb02031.x

- Bidelman, G., Gandour, J., & Krishnan, A. (2011). Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem. Journal of Cognitive Neuroscience, 23(2), 425–434. https://doi.org/10.1162/jocn.2009.21362

- Bidelman, G., Hutka, S., & Moreno, S. (2013). Tone language speakers and musicians share enhanced perceptual and cognitive abilities for musical pitch: Evidence for bidirectionality between the domains of language and music. PLoS ONE, 8(4), e60676. https://doi.org/10.1371/journal.pone.0060676

- Bishop, D., Hardiman, M. J., & Barry, J. G. (2011). Is auditory discrimination mature by middle childhood? A study using time-frequency analysis of mismatch responses from 7 years to adulthood. Developmental Science, 14(2), 402–416. https://doi.org/10.1111/j.1467-7687.2010.00990.x

- Bishop, D. V. M. (2007). Using mismatch negativity to study central auditory processing in developmental language and literacy impairments: Where are we, and where should we be going? Psychological Bulletin, 133(4), 651–672. https://doi.org/10.1037/0033-2909.133.4.651

- Boersma, P., & Weenink, D. (2011). PRAAT: doing phonetics by computer (Version 5.3.25).

- Burnham, D., & Brooker, R. (2002). Absolute pitch and lexical tones: Tone perception by non-musician, musician, and absolute pitch non-tonal language speakers. The 7th international conference on spoken language processing, 257–260.

- Burnham, D., Brooker, R., & Reid, A. (2015). The effects of absolute pitch ability and musical training on lexical tone perception. Psychology of Music, 43(6), 881–897. https://doi.org/10.1177/0305735614546359

- Burnham, D., Francis, E., Webster, D., Luksaneeyanawin, S., Attapaiboon, C., Lacerda, F., & Keller, P. (1996). Perception of lexical tone across languages: Evidence for a linguistic mode of processing. In Proceedings of the Fourth International Conference on Spoken Language Processing (ICSLP 96) (Vol. 4, pp. 2514–2517).

- Carral, V., Corral, M.-J., & Escera, C. (2005). Auditory event-related potentials as a function of abstract change magnitude. Neuroreport, 16(3), 301–305. https://doi.org/10.1097/00001756-200502280-00020

- Chandrasekaran, B., Krishnan, A., & Gandour, J. T. (2007). Experience-dependent neural plasticity is sensitive to shape of pitch contours. Neuroreport, 18(18), 1963–1967. https://doi.org/10.1097/WNR.0b013e3282f213c5

- Chandrasekaran, B., Krishnan, A., & Gandour, J. T. (2009). Relative influence of musical and linguistic experience on early cortical processing of pitch contours. Brain and Language, 108(1), 1–9. https://doi.org/10.1016/j.bandl.2008.02.001

- Chen, A., Liu, L., & Kager, R. (2016). Cross-domain correlation in pitch perception, the influence of native language. Language, Cognition and Neuroscience, 31(6), 751–760. https://doi.org/10.1080/23273798.2016.1156715

- Chen, A., Peter, V., Wijnen, F., Schnack, H., & Burnham, D. (2018). Are lexical tones musical? Native language’s influence on neural response to pitch in different domains. Brain and Language, 180–182, 31–41. https://doi.org/10.1016/j.bandl.2018.04.006

- Chen, A., Stevens, C. J., & Kager, R. (2017). Pitch perception in the first year of life, a cross-domain study. Frontiers in Psychology, 8, 297. https://doi.org/10.3389/fpsyg.2017.00297

- Cheng, Y.-Y., & Lee, C.-Y. (2018). The development of mismatch responses to Mandarin lexical tone in 12- to 24-month-old infants. Frontiers in Psychology, 9, 448. https://doi.org/10.3389/fpsyg.2018.00448

- Cheng, Y.-Y., Wu, H.-C., Tzeng, Y.-L., Yang, M.-T., Zhao, L.-L., & Lee, C.-Y. (2013). The development of mismatch responses to Mandarin lexical tones in early infancy. Developmental Neuropsychology, 38(5), 281–300. https://doi.org/10.1080/87565641.2013.799672

- Cheng, Y.-Y., Wu, H.-C., Tzeng, Y.-L., Yang, M.-T., Zhao, L.-L., & Lee, C.-Y. (2015). Feature-specific transition from positive mismatch response to mismatch negativity in early infancy: Mismatch responses to vowels and initial consonants. International Journal of Psychophysiology, 96(2), 84–94. https://doi.org/10.1016/j.ijpsycho.2015.03.007

- Dehaene-Lambertz, G. (2000). Cerebral specialization for speech and non-speech stimuli in infants. Journal of Cognitive Neuroscience, 12(3), 449–460. https://doi.org/10.1162/089892900562264

- Delogu, F., Lampis, G., & Belardinelli, M. O. (2006). Music-to-language transfer effect: May melodic ability improve learning of tonal languages by native nontonal speakers? Cognitive Processing, 7(3), 203–207. https://doi.org/10.1007/s10339-006-0146-7

- Delorme, A., & Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21. https://doi.org/10.1016/j.jneumeth.2003.10.009

- Eggermont, J. J., & Salamy, A. (1988). Maturational time course for the ABR in preterm and full term infants. Hearing Research, 33(1), 35–47. https://doi.org/10.1016/0378-5955(88)90019-6

- Fernald, A., Taeschner, T., Dunn, J., Papousek, M., de Boysson-Bardies, B., & Fukui, I. (1989). A cross-language study of prosodic modifications in mothers’ and fathers’ speech to preverbal infants. Journal of Child Language, 16(3), 477–501. https://doi.org/10.1017/S0305000900010679

- Gandour, J., & Harshman, R. (1978). Crosslanguage differences in tone perception: A multidimensional scaling investigation. Language and Speech, 21(1), 1–33. https://doi.org/10.1177/002383097802100101

- Gussenhoven, C. (2004). The phonology of tone and intonation. Cambridge University Press.

- Hari, R., Hämäläinen, M., Ilmoniemi, R., Kaukoranta, E., Reinikainen, K., Salminen, J., Alho, K., Näätänen, R., & Sams, M. (1984). Responses of the primary auditory cortex to pitch changes in a sequence of tone pips: Neuromagnetic recordings in man. Neuroscience Letters, 50(1–3), 127–132. https://doi.org/10.1016/0304-3940(84)90474-9

- He, C., Hotson, L., & Trainor, L. J. (2007). Mismatch responses to pitch changes in early infancy. Journal of Cognitive Neuroscience, 19(5), 878–892. https://doi.org/10.1162/jocn.2007.19.5.878

- He, C., Hotson, L., & Trainor, L. J. (2009). Maturation of cortical mismatch responses to occasional pitch change in early infancy: Effects of presentation rate and magnitude of change. Neuropsychologia, 47(1), 218–229. https://doi.org/10.1016/j.neuropsychologia.2008.07.019

- Jacobsen, T., & Schröger, E. (2001). Is there pre-attentive memory-based comparison of pitch? Psychophysiology, 38(4), 723–727. https://doi.org/10.1111/1469-8986.3840723

- Jing, H., & Benasich, A. A. (2006). Brain responses to tonal changes in the first two years of life. Brain and Development, 28(4), 247–256. https://doi.org/10.1016/j.braindev.2005.09.002

- Kaan, E., Barkley, C. M., Bao, M., & Wayland, R. (2008). Thai lexical tone perception in native speakers of Thai, English and Mandarin Chinese: An event-related potentials training study. BMC Neuroscience, 9, 53. https://doi.org/10.1186/1471-2202-9-53

- Krishnan, A., Gandour, J. T., & Bidelman, G. M. (2010). The effects of tone language experience on pitch processing in the brainstem. Journal of Neurolinguistics, 23(1), 81–95. https://doi.org/10.1016/j.jneuroling.2009.09.001

- Kujala, T., Tervaniemi, M., & Schröger, E. (2007). The mismatch negativity in cognitive and clinical neuroscience: Theoretical and methodological considerations. Biological Psychology, 74(1), 1–19. https://doi.org/10.1016/j.biopsycho.2006.06.001

- Kushnerenko, E., Ceponiene, R., Balan, P., Fellman, V., & Näätänen, R. (2002). Maturation of the auditory change detection response in infants: A longitudinal ERP study. Neuroreport, 13(15), 1843–1848. https://doi.org/10.1097/00001756-200210280-00002

- Lang, H. A., Nyrke, T., M, E. K., Aaltonen, O., Raimo, I., & Näätänen, R. (1990). Pitch discrimination performance and auditory event-related potentials. In C. H. M. Brunia, A. W. K. Gaillard, A. Kok, G. Mulder, & M. N. Verbaten (Eds.), Psychophysiological brain research (pp. 294–298). Tilburg University Press.

- Lee, C.-Y., Yen, H., Yeh, P., Lin, W.-H., Cheng, Y.-Y., Tzeng, Y.-L., & Wu, H.-C. (2012). Mismatch responses to lexical tone, initial consonant, and vowel in Mandarin-speaking preschoolers. Neuropsychologia, 50(14), 3228–3239. https://doi.org/10.1016/j.neuropsychologia.2012.08.025

- Leppänen, P. H., & Lyytinen, H. (1997). Auditory event-related potentials in the study of developmental language-related disorders. Audiology and Neurotology, 2(5), 308–340. https://doi.org/10.1159/000259254

- Maurer, U., Bucher, K., Brem, S., & Brandeis, D. (2003). Development of the automatic mismatch response: From frontal positivity in kindergarten children to the mismatch negativity. Clinical Neurophysiology, 114(5), 808–817. https://doi.org/10.1016/S1388-2457(03)00032-4

- McMullen, E., & Saffran, J. R. (2004). Music and language: A developmental comparison. Music Perception, 21(3), 289–311. https://doi.org/10.1525/mp.2004.21.3.289

- Moore, J. K., & Guan, Y.-L. (2001). Cytoarchitectural and axonal maturation in human auditory cortex. JARO – Journal of the Association for Research in Otolaryngology, 2(4), 297–311. https://doi.org/10.1007/s101620010052

- Morr, M. L., Shafer, V. L., Kreuzer, J. A., & Kurtzberg, D. (2002). Maturation of mismatch negativity in typically developing infants and preschool children. Ear and Hearing, 23(2), 118–136. https://doi.org/10.1097/00003446-200204000-00005

- Näätänen, R., Paavilainen, P., Rinne, T., & Alho, K. (2007). The mismatch negativity (MMN) in basic research of central auditory processing: A review. Clinical Neurophysiology, 118(12), 2544–2590. https://doi.org/10.1016/j.clinph.2007.04.026

- Patel, A. D. (2008). Science & music: Talk of the tone. Nature, 453(7196), 726–727. https://doi.org/10.1038/453726a

- Patel, A. D. (2011). Why would musical training benefit the neural encoding of speech? The OPERA hypothesis. Frontiers in Psychology, 2, 142. https://doi.org/10.3389/fpsyg.2011.00142

- Patel, A. D. (2012). Language, music, and the brain: A resource-sharing framework. In P. Rebuschat, M. Rohrmeier, J. A. Hawkins, & I. Cross (Eds.), Language and music as cognitive systems (pp. 204–223). Oxford University Press.

- Patel, A. D., Foxton, J. M., & Griffiths, T. D. (2005). Musically tone-deaf individuals have difficulty discriminating intonation contours extracted from speech. Brain and Cognition, 59(3), 310–313. https://doi.org/10.1016/j.bandc.2004.10.003

- Peltola, M. S., Kujala, T., Tuomainen, J., Ek, M., Aaltonen, O., & Näätänen, R. (2003). Native and foreign vowel discrimination as indexed by the mismatch negativity (MMN) response. Neuroscience Letters, 352(1), 25–28. https://doi.org/10.1016/j.neulet.2003.08.013

- Peretz, I., Gosselin, N., Nan, Y., Caron-Caplette, E., Trehub, S. E., & Béland, R. (2013). A novel tool for evaluating children’s musical abilities across age and culture. Frontiers in Systems Neuroscience, 7, 30. https://doi.org/10.3389/fnsys.2013.00030

- Rinker, T., Kohls, G., Richter, C., Maas, V., Schulz, E., & Schecker, M. (2007). Abnormal frequency discrimination in children with SLI as indexed by mismatch negativity (MMN). Neuroscience Letters, 413(2), 99–104. https://doi.org/10.1016/j.neulet.2006.11.033

- Sato, Y., Sogabe, Y., & Mazuka, R. (2010). Development of hemispheric specialization for lexical pitch-accent in Japanese infants. Journal of Cognitive Neuroscience, 22(11), 2503–2513. https://doi.org/10.1162/jocn.2009.21377

- Shafer, V. L., Morr, M. L., Kreuzer, J. A., & Kurtzberg, D. (2000). Maturation of mismatch negativity in school-age children. Ear and Hearing, 21(3), 242–251. https://doi.org/10.1097/00003446-200006000-00008

- Shafer, V. L., Yu, Y. H., & Datta, H. (2010). Maturation of speech discrimination in 4- to 7-yr-old children as indexed by event-related potential mismatch responses. Ear and Hearing, 31(6), 735–745. https://doi.org/10.1097/AUD.0b013e3181e5d1a7

- Shafer, V. L., Yu, Y. H., & Wagner, M. (2015). Maturation of cortical auditory evoked potentials (CAEPs) to speech recorded from frontocentral and temporal sites: Three months to eight years of age. International Journal of Psychophysiology, 95(2), 77–93. https://doi.org/10.1016/j.ijpsycho.2014.08.1390

- Stefanics, G., Háden, G. P., Sziller, I., Balázs, L., Beke, A., & Winkler, I. (2009). Newborn infants process pitch intervals. Clinical Neurophysiology, 120(2), 304–308. https://doi.org/10.1016/j.clinph.2008.11.020

- Sussman, E., Ritter, W., & Vaughan Jr, H. G. (1998). Predictability of stimulus deviance and the mismatch negativity. NeuroReport, 9(18), 4167–4170. https://doi.org/10.1097/00001756-199812210-00031

- Tervaniemi, M., Maury, S., & Näätänen, R. (1994). Neural representations of abstract stimulus features in the human brain as reflected by the mismatch negativity. NeuroReport, 5(7), 844–846. https://doi.org/10.1097/00001756-199403000-00027

- Tew, S., Fujioka, T., He, C., & Trainor, L. (2009). Neural representation of transposed melody in infants at 6 months of age. Annals of the New York Academy of Sciences, 1169(1), 287–290. https://doi.org/10.1111/j.1749-6632.2009.04845.x

- Thiessen, E. D., Hill, E. A., & Saffran, J. R. (2005). Infant-directed speech facilitates word segmentation. Infancy, 7(1), 53–71. https://doi.org/10.1207/s15327078in0701_5

- Tillmann, B., Burnham, D., Nguyen, S., Grimault, N., Gosselin, N., & Peretz, I. (2011). Congenital amusia (or tone-deafness) interferes with pitch processing in tone languages. Frontiers in Psychology, 2, 120. https://doi.org/10.3389/fpsyg.2011.00120

- Trehub, S. E., & Hannon, E. E. (2006). Infant music perception: Domain-general or domain-specific mechanisms? Cognition, 100(1), 73–99. https://doi.org/10.1016/j.cognition.2005.11.006

- Werker, J. F., & Logan, J. S. (1985). Cross-language evidence for three factors in speech perception. Perception & Psychophysics, 37(1), 35–44. https://doi.org/10.3758/BF03207136

- Winkler, I., Karmos, G., & Näätänen, R. (1996). Adaptive modeling of the unattended acoustic environment reflected in the mismatch negativity event-related potential. Brain Research, 742(1), 239–252. https://doi.org/10.1016/S0006-8993(96)01008-6

- Wong, P. C. M., Skoe, E., Russo, N. M., Dees, T., & Kraus, N. (2007). Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nature Neuroscience, 10(4), 420–422. https://doi.org/10.1038/nn1872

- Wong, P. C. M., & Perrachione, T. K. (2007). Learning pitch patterns in lexical identification by native English-speaking adults. Applied Psycholinguistics, 28(4), 565–585. https://doi.org/10.1017/S0142716407070312

- Wunderlich, J. L., Cone-Wesson, B. K., & Shepherd, R. (2006). Maturation of the cortical auditory evoked potential in infants and young children. Hearing Research, 212(1–2), 185–202. https://doi.org/10.1016/j.heares.2005.11.010

- Xu, Y. (2011). Post-focus compression: Cross-linguistic distribution and historical origin. Proceedings of ICPhS XVII, 152–155.

- Yamane, N., Sato, Y., Shimura, Y., & Mazuka, R. (2021). Developmental differences in the hemodynamic response to changes in lyrics and melodies by 4- and 12-month-old infants. Cognition, 213, 104711. https://doi.org/10.1016/j.cognition.2021.104711

- Yip, M. (2002). Tone. Cambridge University Press.

- Yu, Y. H., Shafer, V. L., & Sussman, E. S. (2017). Neurophysiological and behavioral responses of Mandarin lexical tone processing. Frontiers in Neuroscience, 11, 95. https://doi.org/10.3389/fnins.2017.00095

- Yu, Y. H., Tessel, C., Han, H., Campanelli, L., Vidal, N., Gerometta, J., Garrido-Nag, K., Datta, H., & Shafer, V. L. (2019). Neural indices of vowel discrimination in monolingual and bilingual infants and children. Ear and Hearing, 40(6), 1376–1390. https://doi.org/10.1097/AUD.0000000000000726