Abstract:

This pre-registered systematic review and meta-analysis aimed to answer whether K–2 students at risk of reading impairment (Population) benefited from tier 2 reading intervention within response to intervention (Intervention) compared to teaching as usual (Comparator) on word decoding outcomes (Outcome), based on randomized controlled trials (Study type). Eligibility criteria were adequately sized (N ≥ 30 per group) randomized controlled trials of tier 2 reading interventions within response to intervention targeting K–2 at-risk students (at or below the 40th percentile) compared with teaching as usual (TAU). Reading interventions had to comprise at least 20 sessions, be conducted in a school setting with at least 30 students in each group, and contain reading activities. The comparator could not be another intervention. Only decoding tests from the Woodcock Reading Mastery Test-Revised (WRMT) and the Test of Word Reading Efficiency (TOWRE) were included. Information sources: Database searches were conducted on 2019-05-20 in ERIC, PsycINFO, LLBA, WOS, and additionally in Google Scholar, together with a hand search of previous reviews and meta-analyses. The searches were updated on 2021-03-21. Risk of bias: Studies were assessed with Cochrane’s Risk of Bias 2, R-index and funnel plots. A random-effects model was used to analyze the effect sizes (Hedges’ g). Seven studies met the eligibility criteria but only four had sufficient data to extract for the meta-analysis. The weighted mean effect size across the four included studies was Hedges’ g = 0.31, 95% CI [0.12, 0.50], which means that the intervention group improved their decoding ability more than students receiving TAU. A leave-one-out analysis showed that the weighted effect did not depend on a single study. Students at risk of reading difficulties benefit from tier 2 reading intervention conducted within response to intervention, with a small effect on the students’ decoding ability. Only four studies met inclusion criteria and all studies had at least some risk of bias.
Tier 2 reading interventions, conducted in small groups within RtI, can to some extent support decoding development as a part of reading factors.

PUBLIC INTEREST STATEMENT

Learning to decode words is a basic skill needed for students in becoming competent readers. Many students struggle to learn how to decode. In this systematic review, we are investigating the effects of small-group reading interventions aimed at students who are at-risk of not developing their decoding skills. These interventions are conducted in a prevention model, Response to Intervention (RtI). Mostly, RtI has been used in the United States for the last two decades, but recently the model has spread globally. We included high-quality studies that focused on the small-group reading interventions (called tier 2), where students were randomly assigned to either train their reading skills in the intervention program or to the school’s ordinary practice. Students who took part in the interventions developed their decoding skills somewhat better than students who were part of the school’s ordinary practice. This means that there is evidence for using small-group reading intervention, within RtI, to improve at-risk students’ decoding skills in becoming better readers.

1. Introduction

One of the most important responsibilities of educators in primary schools is to ensure that all students become competent readers. High-quality reading instruction in primary grades is essential (National Reading Panel (US)). Ehri (2005) suggests that early reading instruction should focus on phonic decoding. Such a synthetic phonics approach builds upon the theory of the “simple view of reading,” where reading comprehension is dependent on a sufficient word decoding ability (Gough & Tunmer, 1986; Hoover & Gough, 1990). Students who do not develop robust reading skills in the primary grades will most likely continue to struggle with their reading throughout school years (Francis et al., 1996; Stanovich, 1986). However, it has been stated that various reading difficulties can be prevented if students are offered early reading interventions (Fuchs et al., 2008; Partanen & Siegel, 2014). Numerous efficient reading intervention programs have been examined in the last decades (Catts et al., 2015; Lovett et al., 2017; Torgesen et al., 1999). In the United States, reading interventions are often conducted as part of a multi-tiered system of support (MTSS). Indeed, MTSS has become routine in many elementary schools (Gersten et al., 2020). One common approach is the Response to Intervention (RtI) framework, which is the focus of the present review.

2. Response to intervention (RtI)

Response to intervention (RtI) is an educational approach designed to provide effective interventions for struggling students in reading and mathematics (Fuchs & Fuchs, 2006). It originated in the US through the “No Child Left Behind” Act, which was introduced in the early 2000s (No Child Left Behind [NCLB], 2002). Many other countries have followed and implemented models inspired by RtI, for example, the Netherlands (Scheltinga et al., 2010) and the UK. A similar model, “Assess, Plan, Do, Review” (APDR), was introduced in England in 2014 by the “Special Educational Needs and Disability Code of Practice” (Greenwood & Kelly, 2017). Theoretical comparisons have been made between the structure of special education in Finland since 2010 and RtI (Björn et al., 2018). Recently, there has also been an increased interest in Response to intervention models in other northern European countries, such as Sweden (Andersson et al., 2019; Nilvius, 2020; Nilvius & Svensson). As RtI or RtI-inspired models are widely implemented across the US and Europe, scientific evaluation of their effectiveness is important.

The basic premise of RtI is prevention mirrored from a medical model in the field of education. The aim is to prevent academic failure and mis-identification for special education delivery services as a resource allocation framework (Denton, 2012). Struggling students are identified early and support can be offered before failure can occur, which is in contrast with education where students fail before measures and support are put in place (a “wait-to-fail” approach). RtI is often referred to as a three-tiered model of support. It is characterized by systematic, recurring assessment and monitoring data that determine students’ response to interventions in tiers (Stecker et al., 2017).

Tier 1 consists of evidence-based teaching for all pupils in classroom-based activities. Students receive the core curriculum and differentiated instruction. Universal screenings are used to identify students at-risk. The students who do not develop adequate skills receive more intensive and individualized support through teaching in smaller groups. This corresponds to tier 2 in the model. Tier 2 entails supplemental support and is often delivered to small groups of students for a limited duration (Denton, 2012; Gilbert et al., 2012). The teacher can be a reading specialist, general educator, or paraprofessional who delivers a small-group lesson within or outside the regular classroom setting (Denton, 2012). The intention of tier 2 is to close the gap between current and age-expected performance (Denton, 2012). The third tier consists of even more individualized and intensive efforts. Intervention is provided in even smaller groups or through one-to-one tutoring, and intervention time is increased (45–60 minutes daily). Tier 3 teaching is provided by even more specialized teachers and progress is monitored weekly or biweekly (Fletcher & Vaughn, 2009). Throughout the model, instruction is intensified by manipulating certain variables (i.e., group size, dosage, content, training of interventionist, and use of data) (Vaughn et al., 2012).

Several areas within RtI have been criticized, such as the lack of specificity in assessment, the quality and implementation of interventions, the selection of research-based practices, and fidelity (Berkeley et al., 2009). In addition, RtI interventions have been criticized for lack of validity and for comprising students who do not respond to interventions (Kavale, 2005). The many challenges of implementing the RtI-model have been discussed by Reynolds and Shaywitz (2009). Although RtI is implemented as a preventative model for students “at-risk,” in contrast to “wait-to-fail,” Reynolds and Shaywitz (2009) criticize the RtI-model for being implemented in practice without adequate research support. They also discuss the neglect of possible negative long-term impact on students with disabilities. RtI has historically also been used as a diagnostic method for learning disabilities (Batsche et al., 2006), a highly controversial practice that is not discussed in this paper.

3. Objectives

The aim of the present systematic review was to investigate the evidence for tier 2 reading interventions, conducted within the RtI-framework, concerning word decoding skills for at-risk students in primary school (Year K–2). Tier 2 interventions deliver supplementary instruction for students who fall behind their peers in tier 1 core instruction. In primary school years these less intensive interventions might be preventative, with early identification and intervention of students at-risk for reading failure. Implementation of efficient tier 2 interventions could allow students to get back on track with their reading improvement. This is an argument for continuous evaluation of the efficiency of reading interventions within tier 2. Specifically, we asked:

What are the effects of tier 2 interventions on at-risk students’ word decoding skills compared to teaching as usual (TaU)?

“At-risk” refers to risk for developing reading impairments and was defined as word decoding skills at or below the 40th percentile. We focused only on studies with a randomized control trial design (RCT) with children in kindergarten to Grade 2 (K–2) using tier 2 reading interventions for struggling readers at or below the 40th percentile on decoding tests.

4. Previous reviews of the efficacy of RtI

Syntheses, reviews, and meta-analyses have examined the efficiency of interventions within the RtI-model since the beginning of the twenty-first century. Burns et al. (2005) conducted a quantitative synthesis of RtI studies and concluded that interventions within the RtI-model improved student outcomes regarding reading skills as well as demonstrating systemic improvement. Wanzek et al. (2016) investigated tier 2 interventions by examining the effects of less extensive reading interventions (less than 100 sessions) from 72 studies for students with, or at-risk for, reading difficulties in Grades K–3. They examined the overall effects of the interventions on students’ foundational skills, language, and comprehension. Wanzek et al. (2016) also examined whether the overall effects were moderated by intervention type, instructional group size, grade level, intervention implementer, or the number of intervention hours. Adding further to these findings, Wanzek et al. (2018) provided an updated review of this literature. Hall and Burns (2018) investigated small-group reading interventions, equivalent to typical tier 2 interventions, and intervention components (e.g., training of specific skills vs. multiple skills, dose, and group size) and concluded that small-group reading interventions are effective. Taken together, these exploratory meta-analyses provide an overview of the effect of RtI interventions. However, as they did not distinguish between different designs or assess the quality or risk of bias for the included studies, their effects cannot directly be used to answer our research question.

Of highest relevance for the present research is a recent meta-analysis by Gersten et al. (2020), who reviewed the effectiveness of reading interventions on measures of word and pseudoword reading, reading comprehension, and passage fluency. Results from a total of 33 experimental and quasi-experimental studies conducted between 2002 and 2017 revealed a significant positive effect for reading interventions on reading outcomes. They assessed the quality of the included studies through the “What Works Clearinghouse” standard. Moderator analyses demonstrated that mean effects varied across outcome domains and areas of instruction. Gersten et al. found an effect size of 0.41 (Hedges’ g) for word decoding (word and pseudoword reading).

5. The present study: A gold standard systematic review

It may seem that our research question could have already been answered in the previous reviews and meta-analyses outlined. Indeed, they had quite broad scopes. Another way of investigating the literature is through a systematic review. According to the “Cochrane handbook for systematic reviews of interventions” (Higgins et al., 2020), a systematic review is characterized by clearly pre-defined objectives and eligibility criteria for including studies that are pre-registered in a review protocol. The search for studies should also be systematic to identify all studies that meet the eligibility criteria. Further, the eligibility criteria should be set to the appropriate quality, or quality should be assessed among the included studies. Studies should be checked for risk of bias both individually (e.g., limitations in randomization) and across studies (e.g., publication bias). The entire work-flow from searches to synthesis should be reproducible. Reporting of systematic reviews should be conducted in a standardized way, with the current gold standard being Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) (Page et al., 2021).

Systematic reviews have gained prominence in medicine (e.g., Cochrane) and health (e.g., PROSPERO), and are cornerstones of evidence-based medicine and health interventions. In educational research, systematic reviews remain more novel. We believe that the approach is an important development for the field. Indeed, the traditional meta-analysis approach has been criticized for combining low-quality with high-quality studies that yield effect sizes that lack inherent meaning and have little connection to real life when results are averaged (Snook et al., 2009). In contrast, systematic reviews can directly inform practical work. The only previous review that can be considered a systematic review is the recent one by Gersten et al. (2020). We applaud their novel and rigorous effort. Still, we are convinced that there are some limitations in Gersten et al. (2020) that the present study will address or complement, and thus further advance the field.

This systematic review focuses specifically on evaluating only RtI tier 2 interventions and not interventions that are, or could have been, used in an RtI framework. From our perspective of examining RtI, this distinction is important because non-RtI studies may lack the inherent logic behind deciding and selecting students to different tiers of interventions. We rely on another type of quality assessment that is more rigorous and restrictive than what has been used in previous reviews. Although it could be argued that our criteria were too strict, they serve as a complement to Gersten et al. (2020), and interested readers and policymakers can decide for themselves what level of evidence standard they prefer. For example, whereas the inclusion criteria of Gersten et al. (2020) allowed for varying levels of quality and evidence (e.g., quasi-experimental studies), we only consider full-scale (i.e., not pilot) randomized controlled trials with outcomes that have previously established validity and reliability. Furthermore, we undertook extensive investigation of risk of bias not only across studies but also for individual studies (Cochrane’s Risk of Bias 2; Sterne et al., 2019). Importantly, we took advantage of new tools developed in the wake of the replication crisis (see Renkewitz & Keiner, 2019 for an overview).

An additional benefit of the present research is that we pre-registered our protocol. This ensures transparency throughout the process and reduces the risk of researcher bias when estimating the size of effects. We have ensured that our systematic review can be reproduced by a third party through sharing the pre-registration, the coding of the articles (i.e., our data) and the R code for all analyses. This means that anyone can build on and update our work. A final benefit of the present review is that the literature search is updated. Gersten et al. (2020) included studies from 2002–2017, whereas we included studies published from 2001 to 2021-03-21.

6. Method

6.1. Protocol and registration

Pre-registration of the study and its protocol was published in March 2019 and can be found at the Open Science Framework: https://osf.io/6y4wr. All the materials, including search strings, screened items, coding of articles, extracted data, risk of bias assessments, and the data analysis can be accessed at https://osf.io/dpgu4/.

This method section presents our protocol (copied verbatim as far as possible), followed by deviations from the protocol that were made during the process.

6.2 Eligibility criteria

To examine the effectiveness of tier 2 reading interventions within the RtI framework regarding students “at-risk” in K-2, the following eligibility criteria were set.

6.2.1 Participants

Year K–2 students in regular school settings with assessed word decoding skills at or below the 40th percentile. Year K–2 was chosen because we wanted to focus on early intervention, rather than on RtI being evaluated relatively late in the learning-to-read process. We think year two is a reasonable upper limit for that goal. The 40th percentile can be perceived as high but was set in order to find all studies examining students “at-risk”, which possibly could have a cut-off set at or just above the 30th percentile.

6.2.2 Interventions

The intervention within the RtI framework had to be a reading intervention, as defined by the authors. It needed to consist of at least 20 sessions during a limited period with duration and frequency reported. It has been stated in previous research that longer-term and more intensive literacy interventions are preferable in order to achieve more substantial differences among young students (Malmgren & Leone, 2000). The intervention needed to be conducted in a regular school setting (i.e., not homeschooling or other alternative school settings). Other than that, there were no limitations on the type of interventions. For example, the reading interventions could explicitly teach phonological awareness and letter-sound skills along with decoding and sight word instruction. The interventions could also include fluency training, meaning-focused instruction, dialogic reading techniques, and reading comprehension activities; however, instructional activities needed to be reported.

Tier 2 interventions in other similar frameworks that are not RtI, such as Multi-Tiered System of Support (MTSS) or other forms of tier/data-driven teaching, are not included.

6.2.3 Comparator

The control groups had to contain students from the same (at-risk) population, randomly allocated to the schools’ regular remedial procedures, denoted either as teaching as usual (TaU) or as an active control group. The control condition could not, however, be another research intervention.

6.2.4 Outcome

Descriptive statistics (M, SD) and/or reported effect sizes at pre- and post-test for decoding, measured with the Test of Word Reading Efficiency (TOWRE; Torgesen et al., 1999) and/or the Woodcock Reading Mastery Test-Revised (WRMT; Woodcock, 1998), had to be reported as the key outcome in the studies (i.e., studies must have included WRMT and/or TOWRE). We argue that data obtained from these instruments have evidence of reliability and validity for the construct of decoding.

6.2.5 Study type

Only RCTs were included. The sample size had to be at least 30 participants in each group. We chose this cut-off to avoid including pilots and severely underpowered trials, as n < 30 per group means less than 50% power for a realistic moderate effect size (d = 0.5). Such small studies have a higher risk of publication bias and will mainly add heterogeneity to the overall estimate. Besides the eligibility criteria above, the study had to be published in a peer-reviewed journal and written in English.
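The power argument behind the 30-per-group cut-off can be illustrated with a short calculation. The sketch below is not part of the review's own R code; it assumes a two-sided test at alpha = .05 and uses a normal approximation to the two-sample t-test, so the values are approximate. The function name is chosen here for illustration.

```python
from math import sqrt
from statistics import NormalDist


def approx_power_two_groups(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-group comparison.

    Uses the normal approximation: the expected test statistic under the
    alternative is d * sqrt(n / 2) for two equally sized groups.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    expected_z = d * sqrt(n_per_group / 2)
    # Probability of exceeding the critical value in either tail.
    return z.cdf(expected_z - z_crit) + z.cdf(-expected_z - z_crit)


if __name__ == "__main__":
    for n in (20, 30, 64):
        power = approx_power_two_groups(0.5, n)
        print(f"n per group = {n}: approximate power = {power:.2f}")
```

Under these assumptions, 30 participants per group gives a power just below 50% for d = 0.5, and smaller groups give less, which is in line with the reasoning above.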

8. Information sources

A systematic search was conducted using the online databases of ERIC, PsycINFO, LLBA, Web of Science, and Google Scholar. We complemented the main search process with a hand search of references in previously conducted reviews, meta-analyses, and syntheses regarding reading intervention within the RtI-model, as well as checking the reference lists of the included studies.

9. Search

Word decoding skills are one of several potential outcomes in typical RtI research. We aimed to include all possible search terms to identify relevant articles that include decoding skills, including the population of interest and type of study (i.e., at-risk students and RCT). Combinations of keywords, such as “reading,” “decoding,” “K–2” OR “Grade K” OR “Grade 1” OR “Grade 2,” “RtI OR Response to intervention,” and “randomized OR RCT” were used to identify studies (key phrases for the different searches are available at OSF). The search was restricted to a specified date range from 2001–2019, up to the search day, the 20th of May 2019. In 2001 RtI was recognized in legislation and then rolled out in school settings. Before 2001, intervention studies were conducted but not integrated into schools’ prevention models.

The searches were conducted as planned, with the one exception that a parenthesis in a search string in the protocol had to be corrected because of a typo. On completion of our systematic review, we repeated the searches with the same criteria on the 9th of September 2020 to check the search strings. On 2021-03-21 we updated the searches once more, and this time we also added the search terms “Multi-tiered system of support” and “MTSS”, because it was possible that some RtI studies were framed as such instead. The new date range was thus 2001-01-01 to 2021-03-21. There were only minor differences compared to the previous search and no additional studies met the inclusion criteria. The final search strings can be found at OSF and should be used rather than the ones given in the protocol.

10. Study selection

Articles were downloaded from the databases, combined with those identified by the hand searches, and checked for duplicates. Next, two of the authors independently screened the abstracts of all these articles against the eligibility criteria using the Zotero reference tool. The reliability of the screening procedure was not calculated. Based on the review of abstracts, we excluded dissertations, book chapters, and documents that did not involve children in K–2 or that were not relevant to our topic. Case studies or microanalyses, multiple studies as reviews, or policy reports were also excluded. The remaining articles moved forward into the full-text reading phase. In this phase, we also extracted information about the studies that related to our eligibility criteria. Only articles that met all these criteria were then analyzed further for extraction of statistical data.

11. Data collection process and data items

In the full-text screening phase, the following categories were coded by two reviewers (CN) and (LF): (A) author, (B) design, (C) participants, (D) grade, (E) inclusion criteria: at-risk/on or below 40th percentile, (F) intervention, (G) time in intervention and (H) outcome. Any occurring discrepancies or difficulties were solved by discussions with the third reviewer (RC) who was not part of the reading process at that point. We deviated from protocol in that the coders stopped their coding when it was apparent that a study would not meet eligibility criteria. When studies did not meet a code’s criteria no further coding was conducted, e.g., when the design (code B) was other than RCT, categories C–H were not coded as the studies were excluded. In other words, this full-text coding phase also involved some full-text screening.

Statistical data for the analysis were extracted independently by two members of the research team (TN) and (RC), and disagreements were resolved by discussion. Data extracted were Mean and SD at pre- and post-intervention for each group. For each study where Mean and SD were extracted, we also extracted the main inferential test used by the authors to draw a conclusion (i.e., a focal test), for example, the t-test for the difference between the control and intervention group, or the F-test for the interaction effect in an ANOVA. The logic behind extracting the focal test is that these are the ones that authors may have tried to get significance for. The focal test was extracted by two study authors (TN) and (RC), and disagreement was resolved by discussion.

The only deviation regarding the statistical extraction was that we could not extract pre-test information for one of the articles (*Case et al., 2014). We had also not specified that we should contact authors in case of missing data for the outcomes, but we decided to do so.

12. Risk of bias in individual studies

We decided to deviate from the protocol and use the new Cochrane risk of bias resource, RoB 2 (Sterne et al., 2019), instead of the stated SBU protocol because RoB 2 had been updated and SBU was planning to revise their tool. Studies were assessed using the “individually randomized parallel-group trial” template. The assessments followed the RoB 2 manual and were undertaken by two independent reviewers. Assessments were compared using the Excel program provided by Cochrane. Disagreements and problems were solved through discussions.

We also assessed the statistical risk of bias using R-index (Schimmack, 2016). R-index is a method that estimates the statistical replicability of a study by taking the observed power of the test statistics and penalizing it relative to the observed inflation of statistically significant findings. For example, if 10 studies have 50% average power, only 50% of them should be significant (on average). If 100% of studies are significant, R-index adjusts for this inflation that is plausibly due to publication bias, selective reporting, etc. It should be interpreted as a rough estimate of the chance of a result replicating if repeated exactly and ranges from 0 to 100%. Importantly, the adjustment can be used on a study-by-study basis to assess the risk of bias. Of course, it does not mean that the specific study is biased, but a study with a just-significant value (e.g., p = 0.04 with alpha = .05) would be flagged as having a high risk of bias, whereas a study that passed the significance threshold with a large margin (e.g., p = 0.001) would not be flagged. Studies that present non-significant findings have no risk of bias on this test.

For each study where Mean and SD were extracted, we calculated the R-index based on the focal test (i.e., main inference) reported in the article. We used a tool called p-checker (Schönbrodt, 2018) for this. The focal test was extracted by two members of the research team and disagreement was resolved by discussion. An R-index < .50 for a statistically significant focal test was considered as high risk for statistical reporting bias.
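To make the logic of the R-index concrete, the following sketch computes it from two-sided p-values. It is an illustration only and not the p-checker implementation: it assumes the commonly described definition (median observed power minus inflation, where inflation is the share of significant results minus median observed power) and derives observed power from the p-value through a normal approximation. The function names are chosen here for illustration.

```python
from statistics import NormalDist, median

_STD_NORMAL = NormalDist()


def observed_power(p_value, alpha=0.05):
    """Observed power implied by a two-sided p-value (normal approximation)."""
    z_observed = _STD_NORMAL.inv_cdf(1 - p_value / 2)
    z_critical = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    # The negligible contribution of the opposite tail is ignored.
    return _STD_NORMAL.cdf(z_observed - z_critical)


def r_index(p_values, alpha=0.05):
    """R-index = median observed power - (success rate - median observed power)."""
    powers = [observed_power(p, alpha) for p in p_values]
    success_rate = sum(p < alpha for p in p_values) / len(p_values)
    median_power = median(powers)
    inflation = success_rate - median_power
    return median_power - inflation


if __name__ == "__main__":
    for p in (0.04, 0.001):
        print(f"p = {p}: R-index = {r_index([p]):.2f}")
```

Applied to a single focal test, a just-significant p = 0.04 yields an R-index far below .50, whereas p = 0.001 yields a value well above it, which matches the flagging rule described above.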

We deviated from our protocol by adding the level “some concerns” for studies that would be above or below the threshold depending on how their focal test was interpreted. This additional level made this statistical bias check more in line with the RoB 2 terminology.

13. Summary measures

The outcome of interest was the standardized mean difference between the intervention group and the TAU group. We calculated the standardized mean differences in the form of Hedges’ g. Hedges’ g is the bias-corrected version of the more commonly used Cohen’s d. It can be interpreted in the same way but is more suitable for a meta-analysis that combines studies of different sizes. We used the R package metafor (Viechtbauer, 2010) to calculate effect sizes and their standard errors.
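As an illustration of the summary measure, the sketch below computes Hedges’ g and an approximate standard error from post-test means, SDs, and group sizes. It is not the review's analysis code (which used metafor in R); it assumes the widely used approximate small-sample correction J = 1 − 3/(4·df − 1) and a large-sample variance formula, so results may differ slightly from software that uses the exact correction. The input values are hypothetical.

```python
from math import sqrt


def hedges_g(mean_int, sd_int, n_int, mean_ctrl, sd_ctrl, n_ctrl):
    """Return (g, standard error) for intervention vs. control at post-test."""
    df = n_int + n_ctrl - 2
    # Pooled standard deviation across the two groups.
    sd_pooled = sqrt(((n_int - 1) * sd_int**2 + (n_ctrl - 1) * sd_ctrl**2) / df)
    cohens_d = (mean_int - mean_ctrl) / sd_pooled
    # Approximate small-sample bias correction.
    correction = 1 - 3 / (4 * df - 1)
    g = correction * cohens_d
    # Large-sample approximation of the sampling variance of g.
    variance = 1 / n_int + 1 / n_ctrl + g**2 / (2 * (n_int + n_ctrl))
    return g, sqrt(variance)


if __name__ == "__main__":
    # Hypothetical post-test scores, not data from the included studies.
    g, se = hedges_g(105.0, 15.0, 40, 100.0, 15.0, 40)
    print(f"g = {g:.3f}, SE = {se:.3f}")
```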

We deviated from the protocol in the following ways: First, although not entirely clear in our protocol, the plan was to calculate the effect size based on the pre-post data for the two groups. Both were initial requirements for inclusion. For this to work, the articles also needed to report the correlation between pre and post. We realized that this would mean exclusion of articles that met all other criteria. Accordingly, we switched to post-test comparisons only for the effect size. This does not change the interpretation, since the designs were always pre-post randomized trials, but simply means that when studies did not report the pre-test in sufficient detail for extraction, they could still be included. It has recently been argued that this practice is preferred for pre-post designs (Cuijpers et al., 2017).

We had not specified how to handle nested data in our protocol. Data collected from schools are naturally multi-level: students are nested within classes that are nested within schools. For the designs of the present review, there were two more types of clustering to consider. First, the trial might randomly assign clusters of students (schools or classes) instead of individuals to TAU or RtI (Hedges, 2007). Second, even if students are individually randomized, they may form clusters within the treatment group because tier 2 intervention is done in small groups. Another way to think of this is that there are interventionist-specific effects (e.g., effects of individual special teachers) (Hedges, 2007; Walwyn & Roberts, 2015). These could arise not only because of individual differences among the special teachers but also because the designs do not always call for random assignment to teachers but may depend on various things such as scheduling, preference, and needs.

When data are clustered, the effective sample size is reduced, and this will inflate standard errors (Hedges, 2007). The effect of this can range from negligible to substantial, depending on the correlation within clusters (intraclass correlation, ICC) and the size and number of clusters. Proper modeling of this would require the full datasets from all trials, as well as information that is often not even collected. The difficulty of adjusting for clustering is likely the reason why it is often partially or completely ignored (Hedges, 2007; Walwyn & Roberts, 2015).

We decided to adjust for clustering of random assignment using the design effect adjustment for sample size described in the Cochrane manual (Higgins et al., 2020). For studies with individual assignment, we adjusted for clustering in the treatment group using the approach described in Hedges and Citkowicz (2015) if sufficient details were reported for it to be possible. We did not adjust for the multiple levels of clustering (i.e., everything is nested within schools) but focused on the most substantial clustering.
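The design effect adjustment for cluster-randomized trials can be sketched as follows. This is a generic illustration of the standard formula, design effect = 1 + (m − 1) × ICC with m the average cluster size, and not the review's own code; it does not cover the Hedges and Citkowicz (2015) adjustment for clustering in the treatment group only. The cluster size and ICC in the example are hypothetical.

```python
def design_effect(mean_cluster_size, icc):
    """Variance inflation caused by clustering: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n, mean_cluster_size, icc):
    """Sample size after dividing by the design effect."""
    return n / design_effect(mean_cluster_size, icc)


if __name__ == "__main__":
    # Hypothetical trial arm: 60 students in groups of 4, ICC = 0.10.
    n_eff = effective_sample_size(60, mean_cluster_size=4, icc=0.10)
    print(f"design effect = {design_effect(4, 0.10):.2f}")
    print(f"effective n = {n_eff:.1f}")
```

With an ICC of zero or a cluster size of one, the design effect equals 1 and the sample size is unchanged, which is why the impact of clustering can range from negligible to substantial.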

14. Synthesis of results

A random-effects model was used to analyze the effect sizes (Hedges’ g) and compute estimates of mean effects and standard errors, as well as estimates of heterogeneity (tau, τ). We used the R package metafor (Viechtbauer, 2010) for this purpose, and the R-script and extracted data are available on OSF.
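The pooling step of a random-effects model can be sketched in a few lines. The example below is an assumption-laden illustration, not the review's analysis: it uses the DerSimonian-Laird estimator of the between-study variance because it has a closed form, whereas metafor defaults to REML, so estimates can differ slightly. The effect sizes and standard errors are hypothetical.

```python
from math import sqrt


def random_effects_dl(effects, std_errors):
    """Pool effect sizes with a DerSimonian-Laird random-effects model.

    Returns (pooled effect, standard error, tau squared, 95% CI).
    """
    variances = [se**2 for se in std_errors]
    w_fixed = [1 / v for v in variances]
    fixed = sum(w * y for w, y in zip(w_fixed, effects)) / sum(w_fixed)
    # Cochran's Q measures dispersion around the fixed-effect estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(w_fixed, effects))
    k = len(effects)
    c = sum(w_fixed) - sum(w**2 for w in w_fixed) / sum(w_fixed)
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 and c > 0 else 0.0
    # Random-effects weights add tau squared to each sampling variance.
    w_random = [1 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(w_random, effects)) / sum(w_random)
    se_pooled = sqrt(1 / sum(w_random))
    ci = (pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled)
    return pooled, se_pooled, tau2, ci


if __name__ == "__main__":
    # Hypothetical Hedges' g values and standard errors for four studies.
    g_values = [0.20, 0.40, 0.30, 0.35]
    se_values = [0.15, 0.20, 0.18, 0.22]
    pooled, se, tau2, ci = random_effects_dl(g_values, se_values)
    print(f"pooled g = {pooled:.2f}, SE = {se:.2f}, tau^2 = {tau2:.3f}")
    print(f"95% CI = [{ci[0]:.2f}, {ci[1]:.2f}]")
```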

15. Risk of bias across studies

Because we expected few studies (< 20), publication bias was mainly assessed through visual inspection of funnel plots. We also undertook sensitivity analyses for the random-effects meta-analysis using leave-one-out analysis and by excluding studies with a high risk of bias. When the tau (τ) estimate and inspection of funnel plots suggested low heterogeneity, we used p-curve to examine the evidential value (Simonsohn et al., 2014) and p-uniform to provide a bias-adjusted estimate (Van Assen et al., 2015). We would use z-curve (Brunner & Schimmack, 2020) to estimate overall power, and PET-PEESE (Stanley & Doucouliagos, 2014) to examine publication bias, only if we obtained a sufficient number of studies (> 20).

We deviated from our protocol in that we decided to conduct p-uniform or p-curve only if we had a large share of statistically significant studies. These two methods remove the non-significant studies and base their estimates on the significant studies alone. This rests on the assumption that there are more significant than non-significant studies because of publication bias. This assumption was not met for the current literature.

Additional analysis

As an additional exploratory analysis, we planned to examine effect sizes as a function of time, to investigate if effect sizes have decreased since 2001. However, having extracted the final dataset, we realized that we did not have enough data to examine the effect of time.

16. Results

16.1 Study selection

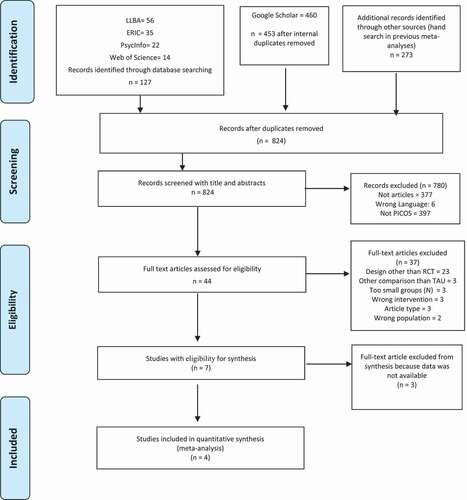

The flow chart () details the search and screening process. In the full-text reading phase, three articles were assessed differently by the two reviewers; the differences were then resolved by the third reviewer.

Seven studies met the qualitative inclusion criteria. However, only four of them were included because the other three did not report necessary statistics such as means and standard deviations for tests conducted after the interventions (*Cho et al., 2014; *Gilbert et al., 2013; *Linan-Thompson et al., 2006). The authors were contacted through two consecutive emails, and they either did not respond or could not provide the data. We decided to continue with the review of the four articles that contained the descriptive statistics, which can be found in . In the database search, 127 items were identified. To prevent any study from being missed, a Google Scholar search and a hand search were conducted. However, all four articles in the final meta-analysis were identified in the database search.

Table 1. Features of intervention studies included in the systematic review

16.2 Reasons for exclusion

Studies were excluded in the abstract-reading phase mainly because they did not meet PICOS (e.g., wrong population, design, or comparison) or were not articles. In the full-text reading phase, the main reason for exclusion was that the studies did not have an RCT design. Other reasons for excluding articles were rare (see ). No studies were excluded for using tests other than TOWRE or WRMT, for intervention features (e.g., number and frequency of sessions), or for cut-off boundaries for defining at-risk students. This means that the chosen inclusion criteria, other than those for design (i.e., adequately sized RCT with teaching as usual as comparison), did not affect the final study selection.

17. Study characteristics

Four studies were included in the review (*Case et al., 2014; *Denton et al., 2014; *Fien et al., 2015; *Simmons et al., 2011). All four were conducted in the US, where RtI is implemented to a greater extent than in other countries. provides an overview of the key features of the four included studies. A total of 339 students were represented in intervention groups and 341 in control groups. The samples consisted of children identified as at-risk, with cut-offs no higher than the 40th percentile and most commonly below the 30th percentile. None of the studies included samples of students identified with learning disabilities. The interventions lasted between 11 and 26 weeks. Intervention content was similar across the studies, focusing on phonemic awareness, sound-letter relationships, decoding, fluency training, and comprehension. No findings from the studies are reported in this section because we analyzed the effects in accordance with our analysis plan. Both TOWRE and WRMT were in the inclusion criteria. However, among the finally included studies, WRMT was the common denominator across all studies, so there was no reason to conduct a separate analysis for TOWRE. The focal tests for each study were analyzed for the risk of statistical bias, and the results can be found under the heading “Risk of bias within individual studies”.

*Case et al. (2014) investigated the immediate and long-term effects of a tier 2 intervention for beginning readers. Students were identified as having a high probability of reading failure. First-grade participants (n = 123) were randomly assigned either to a 25-session intervention targeting key reading components, including decoding, spelling, word recognition, fluency, and comprehension, or to a no-treatment control condition.

*Denton et al. (2014) evaluated two different approaches in the context of supplemental intervention for at-risk readers at the end of Grade 1. Students (n = 218) were randomly assigned to receive Guided reading intervention, Explicit intervention, or Typical school instruction. The Explicit instruction group received daily sessions of instructional activities on word study, fluency, and comprehension.

*Fien et al. (2015) examined the effect of a multi-tiered instruction and intervention model on first-grade at-risk students’ reading outcomes. Schools (N = 16) were randomly assigned to control or treatment conditions. Grade 1 students identified as at-risk (10th to 30th percentile) were referred to tier 2 instruction (n = 267). Students performing at the 31st percentile or above received tier 1 instruction. In the treatment condition, teachers were trained to enhance core reading instruction by making instruction more explicit and increasing practice opportunities for students in tier 1.

*Simmons et al. (2011) investigated the effects of two supplemental interventions on the reading performance of at-risk kindergarteners. Students (n = 206) were randomly assigned to either an explicit and systematic commercial program or the school’s own designed practice intervention. Interventions took place for 30 min per day in small groups, for approximately 100 sessions. Characteristics of the interventions are summarized in .

18. Risk of bias within individual studies

Using RoB 2, three studies were assessed as having some concerns regarding risk of bias (*Case et al., 2014; *Denton et al., 2014; *Simmons et al., 2011) because no pre-registered trial protocol or statistical analysis plan existed (although *Denton et al., 2014, mentioned one, we could not find it) and assessors were not blinded to which group participants belonged. *Fien et al. (2015) was assessed as being at high risk of bias because of the combination of no pre-registered trial protocol and a composite outcome score that was only marginally significant. However, this analysis is not the focus here, as we calculated the effect in accordance with our research plan. The R-index risk of bias check resulted in two studies with no risk of bias and two studies about which there was some concern (see ). Overall, no alarming levels of risk were found.

19. Results of individual studies

Figure shows the forest plots for the studies based on a random-effects meta-analysis. It shows the effect sizes and 95% CI of the individual studies and the random-effects weighted total. Because the forest plot is based on data adjusted for clustering, the width of the 95% CI does not directly match the sample sizes. shows the Mean and SD extracted for the WRMT test. Note that one of the studies used a composite score and thus these scores are not based on exactly the same metric.
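For readers who want to see how a standardized effect size is obtained from extracted means and SDs, the sketch below computes Hedges’ g and its approximate sampling variance for two independent groups. It is an illustration in Python, not the authors’ extraction script; it omits the clustering adjustments described earlier, and the function name and input values are hypothetical rather than data from the included studies.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Return (g, variance of g) for a treatment group versus a control group."""
    df = n_t + n_c - 2
    # Pooled standard deviation across the two groups.
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd
    # Small-sample correction factor that turns Cohen's d into Hedges' g.
    j = 1.0 - 3.0 / (4.0 * df - 1.0)
    g = j * d
    # Common large-sample approximation of the variance of d, scaled by J^2.
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2.0 * (n_t + n_c))
    return g, j ** 2 * var_d


# Hypothetical post-test scores: treatment M = 105, control M = 100, SD = 15, n = 50 each.
g, var_g = hedges_g(105, 100, 15, 15, 50, 50)
print(f"g = {g:.3f}, SE = {math.sqrt(var_g):.3f}")
```

The correction factor is close to 1 for samples of this size, so g differs from Cohen’s d only in the third decimal here.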

Table 2. Descriptive data of the included studies

20. Synthesis of results—the meta-analytic findings

The random-effects model was estimated with the default restricted maximum-likelihood estimator. Tau (τ) was estimated to be zero (0). Although this is consistent with low heterogeneity, it should not be interpreted as exactly zero since small values of tau are hard to estimate with a low number of studies. With a τ of zero, the results from a random-effects model are numerically identical to a fixed effects model.

The random-effects model on the four studies found an overall effect (Hedges’ g) of 0.31, 95% CI [0.12, 0.50], which was significantly different from zero, z = 3.18, p = .0015. Relying on Cohen’s convention for interpreting effect sizes, this interval ranges from trivial to just below moderate. Taken together, we reject that the effect is negative, zero, moderate, or large, and interpret it as a statistically trivial to small effect.
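As a consistency check on the reported numbers, the standard error, z statistic, and two-sided p value can be approximately recovered from the rounded point estimate and confidence interval, assuming a normal-theory 95% interval. The sketch below does this in Python; the function name is hypothetical, and because the inputs are rounded to two decimals, the recovered values will be close to, but not exactly equal to, the reported z = 3.18 and p = .0015.

```python
import math


def z_and_p_from_ci(estimate, lower, upper, crit=1.96):
    """Back out SE, z, and two-sided p from an estimate and its 95% CI."""
    se = (upper - lower) / (2.0 * crit)
    z = estimate / se
    # Two-sided p value under the standard normal: 2 * (1 - Phi(|z|)).
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return se, z, p


se, z, p = z_and_p_from_ci(0.31, 0.12, 0.50)
print(f"SE = {se:.3f}, z = {z:.2f}, p = {p:.4f}")
```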

To summarize, interventions including decoding in tier 2 within RtI give a point estimate of a small positive effect of 0.31, with a 95% CI that ranges from 0.12 (a trivial effect) to 0.50 (just below moderate). Although we can exclude zero, the intervention is not much more effective than the teaching as usual received by the comparison groups. This information is relevant to researchers and practitioners and is discussed in the implications below.

21. Risk of bias across studies

Looking at the funnel plot with enhanced contours (indicating the 90%, 95%, and 99% CIs), we see no signs of publication bias (). A conventional funnel plot suggests the same (). However, with so few data points, it is very hard to tell.

A leave-one-out analysis showed that the effect was robust: the point estimate ranged from 0.27 to 0.34, and the lower bound of the CI ranged from 0.04 to 0.17. Because little bias was found in the individual studies, this leave-one-out analysis is sufficient to examine the influence of risk of bias as well. It is worth noting that the results were robust to the exclusion of *Fien et al. (2015), the only study with high risk.
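The logic of a leave-one-out analysis can be sketched as follows: the pooled estimate is recomputed k times, each time omitting one study. This Python illustration uses plain inverse-variance pooling, which is an assumption made for brevity; it matches a random-effects model only when the heterogeneity estimate is zero. The study labels, effect sizes, and variances are hypothetical.

```python
import math


def pool_inverse_variance(effects, variances):
    """Inverse-variance pooled estimate with a normal-theory 95% CI."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    se = math.sqrt(1.0 / total)
    return estimate, estimate - 1.96 * se, estimate + 1.96 * se


def leave_one_out(labels, effects, variances):
    """Re-pool the data k times, omitting one study each time."""
    results = {}
    for i, label in enumerate(labels):
        rest_effects = effects[:i] + effects[i + 1:]
        rest_variances = variances[:i] + variances[i + 1:]
        results[label] = pool_inverse_variance(rest_effects, rest_variances)
    return results


# Hypothetical studies; these are not the values extracted for the review.
labels = ["Study A", "Study B", "Study C", "Study D"]
effects = [0.20, 0.35, 0.30, 0.40]
variances = [0.04, 0.03, 0.05, 0.02]

for omitted, (est, lo, hi) in leave_one_out(labels, effects, variances).items():
    print(f"without {omitted}: g = {est:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

If every re-pooled interval excludes zero, no single study is driving the overall conclusion.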

It was not possible to use p-curve or p-uniform because only one study was significant for the extracted outcome. It would have been possible to conduct these on the extracted focal tests, but we had already performed R-index on that, based on our analysis plan. Furthermore, as we had detailed in our protocol, we would not conduct Z-curve or PET-PEESE across studies if there were fewer than 20 studies.

22. Discussion and summary of evidence

This PRISMA-compliant systematic review examining RtI tier 2 reading interventions shows positive effects on at-risk students’ decoding outcomes. The significant weighted mean effect size across the four included studies was Hedges’ g = 0.31, 95% CI [0.12, 0.50]. Students at risk for reading difficulties benefit from the tier 2 interventions provided in the included studies, although the CI indicates the effect can be anything from trivial to just below moderate. Gersten et al.’s (2020) high-quality meta-analysis of reading interventions found effects of Hedges’ g = 0.41 in the area of word or pseudoword reading. Our present study, with both stricter criteria and only including studies conducted within the RtI framework, suggests that the findings from Gersten et al. (2020) are robust. As the US national evaluation of RtI (Balu et al., 2015) found negative effects on reading outcomes for tier 2 reading interventions, our result must be regarded as important to the field. Gersten et al. (2020) point out that intervention programs with specific encoding components might reinforce phonics rules and increase decoding ability. This is consistent with research on interventions targeting reading acquisition and reading development, which shows that systematic and explicit training in phonemic awareness, phonics instruction, and sight word reading implemented in small groups can help students who are likely to fall behind when they receive more traditional teaching. The studies in this review included such intervention programs that also involved explicit instruction. The efficiency of explicit instruction is aligned with earlier extensive reading research highlighting explicit instruction as efficient for students at risk of reading difficulties (Lovett et al., 2017). These findings are of importance for practitioners teaching children to read.

However, the effect found in this review is not large compared to TAU, which was the comparator used. One way to interpret the relatively low effect of tier 2 intervention vs. TAU is that it could not be expected to be large. By definition, RtI is proactive instead of reactive (like “wait-to-fail”). Indeed, the students who are given treatment are at risk. With a cut-off at the 40th percentile, some students would not need this kind of focused intervention, and thus the effect vs. TAU in such cases is expected to be low or close to zero. Using TAU as the comparator in studies that investigate the relative effect of RtI interventions also points to the need to carefully examine the TAU components and the quality of classroom reading instruction. However, TAU is rarely described in the same detail as the experimental intervention, which makes it difficult to draw conclusions about the effectiveness of RtI. We would expect a larger contrasting effect in schools with lower overall classroom quality and, conversely, a smaller contrasting effect in schools of higher quality. As these details are not available to review, the generalization becomes more restricted. We believe that generalizing beyond the US school system would not be appropriate. More high-quality studies of RtI are needed outside of the American school setting to determine the effectiveness in a more global sense.

Some of the previous reviews and meta-analyses (Burns et al., 2005; Wanzek et al., 2018; Wanzek & Vaughn, 2007; Wanzek et al., 2016) have examined RtI reading interventions with several general questions, studying overall effects on reading outcome measures. This approach has benefits and weaknesses. The benefit is that overall effects in reading are examined, but at the same time, the results can be too broad and unspecified. Compared to previous studies, this study narrowed both the research question and the eligibility criteria (i.e., PICOS) for inclusion of studies. This was done to obtain studies of high quality that are also comparable in terms of design and tests used. Previous reviews included not only RCTs but also quasi-experimental studies. Including quasi-experimental studies makes it harder to establish the effect of the intervention because individual differences at baseline can confound intervention effects. It is also a common but unfortunate practice in meta-analyses to merge within-group and between-group designs, with within-group studies artificially inflating the overall effect size.

In this review, we assessed the studies for risk of bias in addition to only including high-quality studies. These assessments did not reveal any alarming levels of risk of bias. The reported effect size in the present study can therefore be regarded as a more trustworthy meta-analytic finding compared to earlier findings, as it only includes quality-checked RCTs that met our rigorous standards.

23. Limitations

Outcome data were missing in three studies that were eligible for inclusion in the review. This reduces the value of the evidence somewhat compared to if all studies had been included, because it is not clear whether the weighted effect obtained would be somewhat lower, the same, or higher. Another limitation, although outside our research question, is that the included studies did not allow for specifying how the tier 2 intervention affected the students’ long-term functional reading level or how much the gap in decoding level between at-risk students and normally developing students was reduced. This is due to the design of the included studies, which did not measure the longitudinal impact of the intervention, either in comparison with normally developing students or with relevant norms.

24. Future research

For future research, we have several recommendations. First, we recommend pre-registered studies with sufficient power (several hundred schools), as the hitherto reported effects are small-to-medium and RtI should be studied at the organizational level. A large-scale longitudinal study of fully implemented RtI models has not been conducted yet. Such a study would be expensive and difficult to accomplish, but this must be weighed against the cost of continued failure to teach a large percentage of school children to read, as has already been argued by Denton (2012). Second, we suggest that RtI intervention studies use randomization at the school level or another appropriate cluster level. This is important as RtI interventions are not pure reading interventions but occur in school contexts. To this end, we recommend using a cluster-randomized approach with proper modeling of nested effects that can occur at the school level as well as in intervention groups. Third, reading interventions published as scientific articles must report and provide the data necessary for others to analyze, for example, via a repository such as the OSF. The disadvantage of not doing so became apparent in this review and can seriously harm any field. Three more studies could have been examined in this meta-analysis if the authors had reported descriptive data or made them accessible in other ways.

Fourth, we recommend that studies included in future systematic reviews be assessed for evidential value (e.g., with GRADE) and risk of bias (e.g., using RoB 2). When applying RoB 2, risk of bias was indicated because few studies had blinded assessors and none had pre-registered protocols. We also found no systematic review that had been pre-registered or had a statistical analysis plan provided or made accessible for others to scrutinize. To increase quality and ensure the highest standards, pre-registrations, trial protocols, and transparency should be the standard, especially as RtI is widespread and impacts the academic studies of many young students. Given that there are high-quality single RCTs already, in line with the concept “Clinical readiness level” (Shaw & Pecsi, 2020), the next step is to conduct multi-site pre-registered replications that address generalization, boundary effects, and the core replicability of the overall findings. Gersten et al. (2020) also ask for high-quality studies in the field of reading interventions that provide a robust basis for further examinations. They suggest it will take some time for the field to reach that goal. We hope for quicker progress.

25. Conclusions

The findings of this systematic review support previous findings (Gersten et al., 2020) that tier 2 reading interventions, conducted in small groups within RtI, to some extent support decoding as a part of reading development. Seven studies met the inclusion criteria, of which four were included in the analyses. The overall effect of tier 2 interventions on at-risk students’ decoding ability is Hedges’ g = 0.31, 95% CI [0.12, 0.50], which is estimated as a small-to-medium effect size. The conclusion is applicable to students at or below the 40th percentile in reading performance. Thus, teachers may continue to deliver tier 2 reading interventions that target basic reading skills such as decoding.

About the authors’ statement

Camilla Nilvius’s themes of research include reading development, reading interventions, and frameworks in education such as response to intervention (RtI) to prevent reading difficulties. Rickard Carlsson’s themes of research include method development, meta-psychology, and systematic reviews of evidence-based methods and interventions in education. Linda Fälth’s themes of research include reading development, reading difficulties, dyslexia, and interventions in education, particularly in reading research. Thomas Nordström’s current themes of research are evidence-based methods and interventions in education, particularly in reading research.

Registration

Available at the Open Science Framework: https://osf.io/6y4wr

Acknowledgements

Thomas Nordström and Rickard Carlsson were supported by the Swedish Research Council under Grant no. 2020-03430. Camilla Nilvius and the present study were part of the Swedish National Research School Special Education for Teacher Educators (SET), funded by the Swedish Research Council Grant no. 2017-06039, for which we are grateful. We thank Michelle Pascoe, PhD, from Edanz Group (https://en-author-services.edanzgroup.com/ac) for editing a draft of this manuscript. We also thank Lucija Batinovic, Linnaeus University, for a final proof-reading.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Camilla Nilvius

Order of the authors is Nilvius, Camilla as lead author. In alphabetical order Carlsson, Rickard, Fälth, Linda and Nordström, Thomas as senior author.

The authors contributed as follows:

Camilla Nilvius: Conceptualization, Investigation, Writing original draft, Writing, reviewing and editing, Visualization, Funding acquisition.

Rickard Carlsson: Conceptualization, Methodology, Validation, Data curation, Resources, Formal Analysis, Writing, reviewing and editing, Funding acquisition.

Linda Fälth: Conceptualization, Investigation, Writing, reviewing and editing.

Thomas Nordström: Conceptualization, Methodology, Validation, Investigation, Data curation, Writing, reviewing and editing, Project administration, Funding acquisition.

References

- Andersson, U., Löfgren, H., & Gustafson, S. (2019). Forward-looking assessments that support students’ learning: A comparative analysis of two approaches. Studies in Educational Evaluation, 60, 109–20. https://doi.org/10.1016/j.stueduc.2018.12.003

- Balu, R., Zhu, P., Doolittle, F., Schiller, E., Jenkins, J., & Gersten, R. (2015). Evaluation of response to intervention practices for elementary school reading. NCEE 2016-4000. National Center for Education Evaluation and Regional Assistance.

- Batsche, G. M., Kavale, K. A., & Kovaleski, J. F. (2006). Competing Views: A dialogue on Response to Intervention. Assessment for Effective Intervention, 32(1), 6–19. https://doi.org/10.1177/15345084060320010301

- Berkeley, S., Bender, W. N., Peaster, L. G., & Saunders, L. (2009). Implementation of Response to Intervention: A snapshot of progress. Journal of Learning Disabilities, 42(1), 85–95. https://doi.org/10.1177/0022219408326214

- Björn, P. M., Aro, M., Koponen, T., Fuchs, L. S., & Fuchs, D. (2018). Response-To-Intervention in Finland and the United States: Mathematics learning support as an example. Frontiers in Psychology, 9(800), 1–10. https://doi.org/10.3389/fpsyg.2018.00800

- Brunner, J., & Schimmack, U. (2020). Estimating population mean power under conditions of heterogeneity and selection for significance. Meta-Psychology, 4(4), 1–22. https://doi.org/10.15626/MP.2018.874

- Burns, M. K., Appleton, J. J., & Stehouwer, J. D. (2005). Meta-analytic review of responsiveness-to-intervention research: Examining field-based and research-implemented models. Journal of Psychoeducational Assessment, 23(4), 381–394. https://doi.org/10.1177/073428290502300406

- *Case, L., Speece, D., Silverman, R., Schatschneider, C., Montanaro, E., & Ritchey, K. (2014). Immediate and long-term effects of Tier 2 reading instruction for first-grade students with a high probability of reading failure. Journal of Research on Educational Effectiveness, 7(1), 28–53. https://doi.org/10.1080/19345747.2013.786771

- Catts, H. W., Nielsen, D. C., Bridges, M. S., Liu, Y. S., & Bontempo, D. E. (2015). Early identification of reading disabilities within an RTI framework. Journal of Learning Disabilities, 48(3), 281–297. https://doi.org/10.1177/0022219413498115

- *Cho, E., Compton, D. L., Fuchs, D., Fuchs, L. S., & Bouton, B. (2014). Examining the predictive validity of a dynamic assessment of decoding to forecast Response to Tier 2 Intervention. Journal of Learning Disabilities, 47(5), 409–423. https://doi.org/10.1177/0022219412466703

- Cuijpers, P., Weitz, E., Cristea, I. A., & Twisk, J. (2017). Pre-post effect sizes should be avoided in meta-analyses. Epidemiology and Psychiatric Sciences, 26(4), 364–368. https://doi.org/10.1017/S2045796016000809

- Denton, C. A. (2012). Response to intervention for reading difficulties in the primary grades: Some answers and lingering questions. Journal of Learning Disabilities, 45(3), 232–243. https://doi.org/10.1177/0022219412442155

- *Denton, C. A., Fletcher, J. M., Taylor, W. P., Barth, A. E., & Vaughn, S. (2014). An experimental evaluation of guided reading and explicit interventions for primary-grade students at-risk for reading difficulties. Journal of Research on Educational Effectiveness, 7(3), 268–293. https://doi.org/10.1080/19345747.2014.906010

- Ehri, L. C. (2005). Learning to read words: Theory, findings, and issues. Scientific Studies of Reading, 9(2), 167–188. https://doi.org/10.1207/s1532799xssr0902_4

- *Fien, H., Smith, J. L. M., Smolkowski, K., Baker, S. K., Nelson, N. J., & Chaparro, E. (2015). An examination of the efficacy of a multitiered intervention on early reading outcomes for first grade students at-risk for reading difficulties. Journal of Learning Disabilities, 48(6), 602–621. https://doi.org/10.1177/0022219414521664

- Fletcher, J. M., & Vaughn, S. (2009). Response to Intervention: Preventing and remediating academic difficulties. Child Development Perspectives, 3(1), 30–37. https://doi.org/10.1111/j.1750-8606.2008.00072.x

- Francis, D. J., Shaywitz, S. E., Stuebing, K. K., Shaywitz, B. A., & Fletcher, J. M. (1996). Developmental lag versus deficit models of reading disability: A longitudinal, individual growth curves analysis. Journal of Educational Psychology, 88(1), 3. https://doi.org/10.1037/0022-0663.88.1.3

- Fuchs, D., Compton, D., Fuchs, L., Bryant, J., & Davis, G. (2008). Making “secondary intervention” work in a three-tier responsiveness-to-intervention model: Findings from the first-grade longitudinal reading study of the National Research Center on Learning Disabilities. Reading and Writing, 21(4), 413–436. https://doi.org/10.1007/s11145-007-9083-9

- Fuchs, D., & Fuchs, L. S. (2006). Introduction to response to intervention: What, why, and how valid is it? Reading Research Quarterly, 41(1), 93–99. https://doi.org/10.1598/rrq.41.1.4

- Gersten, R., Haymond, K., Newman-Gonchar, R., Dimino, J., & Jayanthi, M. (2020). Meta-analysis of the impact of reading interventions for students in the primary grades. Journal of Research on Educational Effectiveness, 13(2), 401–427. https://doi.org/10.1080/19345747.2019.1689591

- Gilbert, J. K., Compton, D. L., Fuchs, D., & Fuchs, L. S. (2012). Early screening for risk of reading disabilities: Recommendations for a four-step screening system. Assessment for Effective Intervention, 38(1), 6–14. https://doi.org/10.1177/1534508412451491

- *Gilbert, J. K., Compton, D. L., Fuchs, D., Fuchs, L. S., Bouton, B., Barquero, L. A., & Cho, E. (2013). Efficacy of a first-grade responsiveness-to-intervention prevention model for struggling readers. Reading Research Quarterly, 48(2), 135–154. https://doi.org/10.1002/rrq.45

- Gough, P. B., & Tunmer, W. E. (1986). Decoding, reading, and reading disability. Remedial and Special Education (RASE), 7(1), 6–10. https://doi.org/10.1177/074193258600700104

- Greenwood, J., & Kelly, C. (2017). Implementing cycles of assess, plan, do, review: A literature review of practitioner perspectives. British Journal of Special Education, 44(4), 394–410. https://doi.org/10.1111/1467-8578.12184

- Hall, M. S., & Burns, M. K. (2018). Meta-analysis of targeted small-group reading interventions. Journal of School Psychology, 66, 54–66.

- Hedges, L. V. (2007). Correcting a significance test for clustering. Journal of Educational and Behavioral Statistics, 32(2), 151–179. https://doi.org/10.3102/1076998606298040

- Hedges, L. V., & Citkowicz, M. (2015). Estimating effect size when there is clustering in one treatment group. Behavior Research Methods, 47(4), 1295–1308. https://doi.org/10.3758/s13428-014-0538-z

- Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M. J., & Welch, V. A. (Eds.). (2020). Cochrane handbook for systematic reviews of interventions. John Wiley & Sons. https://training.cochrane.org/handbook/current

- Hoover, W., & Gough, P. (1990). The simple view of reading. Reading & Writing: An Interdisciplinary Journal, 2(2), 127–160. https://doi.org/10.1007/BF00401799

- Kavale, K. A. (2005). Identifying specific learning disability: Is responsiveness to intervention the answer? Journal of Learning Disabilities, 38(6), 553–562. https://doi.org/10.1177/00222194050380061201

- *Linan-Thompson, S., Vaughn, S., Prater, K., & Cirino, P. T. (2006). The response to intervention of English language learners at risk for reading problems. Journal of Learning Disabilities, 39(5), 390–398. https://doi.org/10.1177/00222194060390050201

- Lovett, M. W., Frijters, J. C., Wolf, M., Steinbach, K. A., Sevcik, R. A., & Morris, R. D. (2017). Early intervention for children at risk for reading disabilities: The impact of grade at intervention and individual differences outcomes. Journal of Educational Psychology, 109(7), 889. https://doi.org/10.1037/edu0000181

- Malmgren, K. W., & Leone, P. E. (2000). Effects of a short-term auxiliary reading program on the reading skills of incarcerated youth. Education & Treatment of Children, 23(3), 239–247. https://www.jstor.org/stable/42899617?seq=1#metadata_info_tab_contents

- National Reading Panel (US), national institute of child health, human development (US), national reading excellence initiative, national institute for literacy (US), united states. public health service, & united states department of health. (2000). Report of the National Reading Panel: Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction: Reports of the subgroups. National Institute of Child Health and Human Development, National Institutes of Health. https://www.nichd.nih.gov/publications/pubs/nrp/smallbook

- Nilvius, C. (2020). Merging lesson study and response to intervention. International Journal for Lesson and Learning Studies, 9(3), 277–288. https://doi.org/https://doi.org/10.1108/IJLLS-02-2020-0005

- No Child Left Behind (NCLB) Act of 2001, Pub. L. No. 107-110, § 101, Stat. 1425 (2002).

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ (Online), 372, N71. https://doi.org/https://doi.org/10.1136/bmj.n71

- Partanen, M., & Siegel, L. S. (2014). Long-term outcome of the early identification and intervention of reading disabilities. Reading and Writing, 27(4), 665–684. https://doi.org/http://dx.doi.org/10.1007/s11145-013-9472-1

- Renkewitz, F., & Keiner, M. (2019). How to detect publication bias in psychological research. Zeitschrift für Psychologie, 227(4), 261–279. https://doi.org/10.1027/2151-2604/a000386

- Reynolds, C. R., & Shaywitz, S. E. (2009). Response to Intervention: Ready or not? Or, from wait-to-fail to watch-them-fail. School Psychology Quarterly: The Official Journal of the Division of School Psychology, American Psychological Association, 24(2), 130. https://doi.org/10.1037/a0016158

- Scheltinga, F., Van Der Leij, A., & Struiksma, C. (2010). Predictors of response to intervention of word reading fluency in Dutch. Journal of Learning Disabilities, 43(3), 212–228. https://doi.org/10.1177/0022219409345015

- Schimmack, U. (2016). A Revised Introduction to the R-Index. Replicability-Index: Improving the Replicability of Empirical Research. Online: 2016.01. 31. https://replicationindex.com/2016/01/31/a-revised-introduction-to-the-r-index/

- Schönbrodt, F. D. (2018). p-checker: One-for-all p-value analyzer. http://shinyapps.org/apps/p-checker/

- Shaw, S. R., & Pecsi, S. (2020). When is the evidence sufficiently supportive of real‐world application? Evidence‐based practices, open science, clinical readiness level. Psychology in the Schools, 58(10), 1891–1901. https://doi.org/10.1002/pits.22537

- *Simmons, D. C., Coyne, M. D., Hagan-Burke, S., Kwok, O.-M., Simmons, L., Johnson, C., … Crevecoeur, Y. C. (2011). Effects of supplemental reading interventions in authentic contexts: A comparison of kindergarteners’ response. Exceptional Children, 77(2), 207–228. https://doi.org/10.1177/001440291107700204

- Simonsohn, U., Nelson, L. D., & Simmons, J. P. (2014). p-Curve and effect size: Correcting for publication bias using only significant results. Perspectives on Psychological Science, 9(6), 666–681. https://doi.org/10.1177/1745691614553988

- Snook, I., O’Neill, J., Clark, J., O’Neill, A.-M., & Openshaw, R. (2009). Invisible learnings?: A commentary on John Hattie’s book – ‘Visible learning: A synthesis of over 800 meta-analyses relating to achievement’. New Zealand Journal of Educational Studies, 44(1), 93–106.

- Stanley, T. D., & Doucouliagos, H. (2014). Meta-regression approximations to reduce publication selection bias. Research Synthesis Methods, 5(1), 60–78. https://doi.org/10.1002/jrsm.1095

- Stanovich, K. E. (1986). Matthew effects in reading: Some consequences of individual differences in the acquisition of literacy. Reading Research Quarterly, 21(4), 360–407. https://doi.org/10.1598/rrq.21.4.1

- Stecker, P. M., Fuchs, D., & Fuchs, L. S. (2017). Progress monitoring as essential practice within Response to Intervention. Rural Special Education Quarterly, 27(4), 10–17. https://doi.org/10.1177/875687050802700403

- Sterne, J. A. C., Savović, J., Page, M. J., Elbers, R. G., Blencowe, N. S., Boutron, I., … Higgins, J. P. T. (2019). RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ, 366, l4898. https://doi.org/10.1136/bmj.l4898

- Torgesen, J. K., Rashotte, C. A., & Wagner, R. K. (1999). TOWRE: Test of word reading efficiency. Pro-

- van Assen, M. A., Van Aert, R. C., & Wicherts, J. M. (2015). Meta-analysis using effect size distributions of only statistically significant studies. Psychological Methods, 20(3), 293–309. https://doi.org/10.1037/met0000025

- Vaughn, S., Wanzek, J., Murray, C. S., & Roberts, G. (2012). Intensive interventions for students struggling in reading and mathematics: A practice guide. RMC Research Corporation, Center on Instruction.

- Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

- Walwyn, R., & Roberts, C. (2015). Meta-analysis of absolute mean differences from randomised trials with treatment-related clustering associated with care providers. Statistics in Medicine, 34(6), 966–983. https://doi.org/10.1002/sim.6379

- Wanzek, J., Stevens, E. A., Williams, K. J., Scammacca, N., Vaughn, S., & Sargent, K. (2018). Current evidence on the effects of intensive early reading interventions. Journal of Learning Disabilities, 51(6), 612–624. https://doi.org/10.1177/0022219418775110

- Wanzek, J., & Vaughn, S. (2007). Research-based implications from extensive early reading interventions. School Psychology Review, 36(4), 541–561. https://doi.org/10.1080/02796015.2007.12087917

- Wanzek, J., Vaughn, S., Scammacca, N., Gatlin, B., Walker, M. A., & Capin, P. (2016). Meta-analyses of the effects of Tier 2 type reading interventions in Grades K–3. Educational Psychology Review, 28(3), 551–576. https://doi.org/10.1007/s10648-015-9321-7

- Woodcock, R. (1998). Woodcock reading mastery tests–revised, normative update (WRMT-Rnu). American Guidance Service.