Abstract

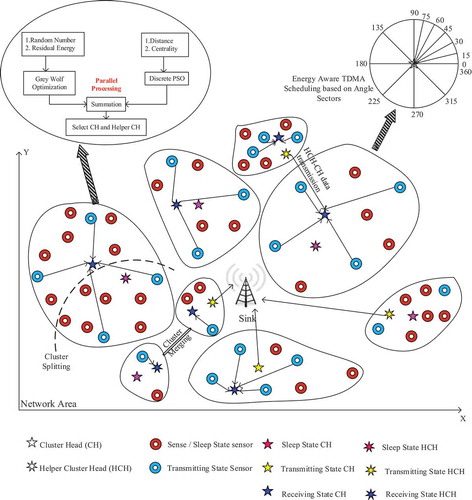

Network lifetime is a critical requirement in Wireless Sensor Networks (WSNs), where prolonged operation depends on how efficiently the limited energy of low-power sensor nodes is used. Clustering and sleep scheduling are the two major processes for improving network lifetime. However, abrupt, energy-unaware selection of the cluster head (CH) is non-optimal and causes a drop in energy among sensor nodes. This paper addresses two goals, prolonging node energy and prolonging network lifetime, through LEACH-based cluster formation and Time Division Multiple Access (TDMA) scheduling. Clusters are constructed by an Enhanced Low-Energy Adaptive Clustering Hierarchy protocol (E-LEACH) that runs two optimization algorithms in parallel, Grey Wolf Optimization (GWO) and Discrete Particle Swarm Optimization (D-PSO), to select an optimal CH and a helper CH. The fitness values estimated by GWO and D-PSO are combined to choose the best CH. E-LEACH also manages cluster size, which is one of the conventional disadvantages of LEACH. CHs perform energy-aware TDMA scheduling, which divides the coverage area into 24 sectors; alternate sectors operate in one of three states: sense, transmit and sleep. Lastly, to mitigate packet loss, the channel between the CH and the sink node is chosen using a dynamic fuzzy algorithm. Performance is evaluated extensively in terms of energy consumption, throughput, delay, packet loss and network lifetime.

PUBLIC INTEREST STATEMENT

Wireless sensor networks are widely used in applications including IoT, environmental monitoring and fire detection. Wireless sensor networks (WSNs) consume much more energy during data transmission than during sensing and computation. In recent years, many studies have focused on reducing energy consumption and thereby extending network lifetime. The Low-Energy Adaptive Clustering Hierarchy (LEACH) protocol is a popular WSN protocol that increases network lifetime by clustering the network for data aggregation. However, conventional LEACH has certain problems, which many previous works have tried to solve. In this work, we design a wireless sensor network deployed with sensor nodes and a sink node. Our main objective is to minimize the energy consumption of sensor nodes and prolong the network lifetime. This objective is achieved through enhanced clustering using the LEACH protocol, energy-aware TDMA scheduling and efficient channel-based data transmission.

1. Introduction

A Wireless Sensor Network (WSN) is composed of small, power-constrained sensor devices that sense data and report it to the sink node. Clustering makes better use of the sensors' limited energy and also affects throughput and delay (Rostami et al., Citation2018). WSNs are subject to several limitations: battery power, computation, sensor mobility and communication capability. The major challenges in clustering are CH selection, cluster formation and keeping the number of cluster members (CMs) balanced. Among the many clustering protocols proposed, LEACH is the conventional one in WSN. Clustering takes into account parameters such as energy efficiency, location, mobility and complexity.

The LEACH protocol has two phases: a setup phase and a steady phase. In the setup phase, sensors are organized into groups, i.e. clusters; in the steady phase, data are aggregated and the sensed data are transmitted. Because of the limitations of LEACH, many extended versions have been proposed (Zhao et al., Citation2018). In one improvement, the CH is selected by considering energy and network address, and the CH threshold value is varied dynamically using the network address. LEACH has also been enhanced with two-level CH selection (TLCH) (Amodu & Mahmood, Citation2016). This energy-minimization approach selects two CHs: one near the Base Station (BS) and the other based on an energy constraint. Selecting two CHs limits the drop of energy in the network. Energy saving has also been achieved by creating unequal clusters based on Particle Swarm Optimization (PSO) (Kaur & Kumar, Citation2018), where a master CH is elected using PSO based on location and residual energy. PSO is a stochastic technique inspired by the social foraging behavior of bird flocks.

LEACH, as a hierarchical protocol, has both advantages and drawbacks (Al-Shalabi et al., Citation2018). TDMA is used with LEACH to assign sleep mode to sensor nodes and thereby lower energy consumption. In the conventional LEACH procedure, the CH is chosen using random numbers without considering any sensor constraint. A weakness of LEACH is that the same sensor has a high probability of being selected as CH repeatedly, which drains a large amount of its energy. LEACH-eXtended Message-Passing (LEACH-XMP) is a distributed algorithm that gathers data from sensor nodes (Kang et al., Citation2018). The CH is selected through message exchanges between nodes, so clusters can be constructed by individual nodes. The LEACH-Mobile Energy Efficient and Connected (LEACH-MEEC) routing protocol increases the number of alive sensors in the network (Ahmad et al., Citation2018). Connectivity between nodes is determined from the distances between them, and the density of sensor nodes is then calculated to select the CH. Selecting the CH appropriately from node characteristics helps use energy effectively across nodes.

Energy consumption is also reduced by incorporating sleep/wakeup schedules among sensor nodes. A connectivity-aware, energy-efficient sleep-scheduling algorithm for WSN is presented in (Wang et al., Citation2019). Sleep and wakeup slots are assigned to sensor nodes based on a determined value K, and the connectivity of each node is calculated from the closeness of the sensor nodes participating in the WSN. In (Harizan & Kuila, Citation2018), two constraints, coverage and connectivity, are applied in an improved genetic algorithm (GA). In addition to coverage and connectivity, two further objectives are considered: the number of sensors and the energy level. The target points in the network area are completely covered while connectivity with the BS is maintained, and sensors with more energy are preferred for scheduling. The improved GA performs three major operations: selection, crossover and mutation. A new population is generated by mutation, and its fitness values are then determined.

Clustering-based scheduling has also been designed using an optimization algorithm (Guru Prakash et al., Citation2018), in which Artificial Bee Colony (ABC) performs clustering with distributed scheduling. The fitness function for CH election is estimated from residual energy and transmission power, and a TDMA schedule is proposed whose order is based on contiguous links. In WSN, several sensors may report similar data to head nodes because of how they are deployed. Hence, similarity-measure-based sleep scheduling has been modeled to ensure energy efficiency (Wan et al., Citation2018). A fuzzy matrix measures the degree of similarity between nodes, and redundant nodes are identified before scheduling. A node identified as redundant is put into the sleep state, and scheduling for the following round is carried out by updating flag information. Sleep scheduling has also been combined with Q-learning, a self-learning method that allots time slots adaptively (Yao et al., Citation2018). A Q-table maintains the slot information from which the sensor state is defined, and a Markov chain model is also used in selecting time slots. Thus, the challenge of energy consumption is handled intelligently by clustering and scheduling the deployed sensor devices. However, when sleep scheduling is applied either randomly or from a few measured constraints, considerable energy is still consumed.

To extend network lifetime, this paper presents an enhanced LEACH and an angle-sector-based, energy-aware TDMA scheduling scheme. E-LEACH reduces energy consumption in clustering by selecting the CH and managing cluster size, and energy-aware TDMA scheduling reduces energy further by splitting the CH's coverage into 24 sectors and assigning time slots to them. Unnecessary packet loss also consumes energy because of repeated sensing and retransmission, so a dynamic fuzzy-based channel selection method is proposed to choose the best channel for data transmission.

1.1. Contribution of this paper

The major contributions of this paper are summarized as follows:

Network lifetime in WSN is improved by combining E-LEACH, energy-aware TDMA scheduling and best-channel selection, which together reduce energy consumption in sensors. The proposed E-LEACH enhances conventional LEACH by selecting the best CH and a helper CH using optimization algorithms that run in parallel. The fitness values from both algorithms are combined to select the best CH and helper CH.

The optimization algorithms GWO and D-PSO run in parallel to reduce processing time. In addition, selecting an optimal CH and helper CH lowers energy consumption because it avoids frequent re-selection. Four constraints are used for selection: random number, residual energy, distance and centrality. Each optimization algorithm handles two of these node constraints, and their results are combined into one.

The probability of link addition and the criticality index are used in a decision-making method that manages cluster size, one of the demerits of LEACH. Based on these metrics, a cluster is either split into two or merged with another.

Assigning equal timeslots is the major demerit of TDMA, and it is resolved by the designed energy-aware TDMA scheduling. The CH divides its coverage into 24 angular sectors and allots timeslots to members alternately, so that no node has to wait to transmit and every node spends an equal amount of time in the sleep state.

A dynamic fuzzy-based channel selection method selects the best channel for transmitting the aggregated data to the sink without packet loss. Received Signal Strength (RSS), channel capacity and packet error rate are the three parameters evaluated by the fuzzy system to select a channel.

The complete WSN network-lifetime improvement is simulated in Network Simulator 3, and the proposed work shows better performance in terms of delay, energy consumption, throughput, number of alive nodes and packet loss.

1.2. Paper layout

This paper is organized as follows. Section 2 discusses previous work on clustering and scheduling. Section 3 defines the major problems in existing WSN approaches. Section 4 presents the proposed work, which improves network lifetime using E-LEACH, energy-aware TDMA and channel-based data transmission. Section 5 reports the simulation results of the proposed work with a comparative analysis, and Section 6 concludes the paper and outlines possible future directions.

2. Related work

This section discusses previous work on improving network lifetime in WSN, together with its disadvantages and limitations. An energy-efficient protocol based on LEACH was proposed to overcome the conventional issues of LEACH (Huang et al., Citation2018). LEACH is a cluster-based routing protocol that constructs clusters and gathers data for transmission. In this work, Fuzzy C-means (FCM) is combined with the new routing algorithm. Cluster centers are estimated by FCM; if a cluster center is satisfactory, nodes join clusters based on distance, otherwise the cluster center is recalculated. The clusters are further improved using the node membership degree, an energy weighting factor and a distance weighting factor, and a CH is selected at the determined center using these factors. Because selection is tied to the center, only nodes in that region can become CH, even if their factors are not good enough. An enhanced three-layer hybrid clustering mechanism (ETLHCM) was proposed to limit control packets and balance energy (Ullah et al., Citation2019). In this work, sensors are divided into levels according to their energy. A grid head is selected to gather the information collected by CHs; it is chosen by an FCM approach that estimates distance and residual energy and selects the highest-priority node. The CH is then selected using the conventional LEACH procedure with residual energy added. TDMA-based scheduling allots equal time slots for data transmission, which forces nodes to wait when a neighboring node is in the sleep state.

Residual energy-based distributed clustering and routing (REDCR) was designed by combining the Cuckoo Search (CS) algorithm with the LEACH protocol (Ghosh & Chakraborty, Citation2019). The CS algorithm determines the CH positions and the total number of CHs. Super CHs near the BS gather data from the CHs and deliver it to the BS; each super CH is selected through coordination among all elected CHs. Only the transmitting nodes are active, and all other CMs stay in the sleep state. The CH and super CH must be active at all times because any CM may be transmitting, so energy consumption at CHs is higher and head selection has to be repeated. Optimal CH selection using multi-criteria decision-making algorithms has been studied extensively for balancing energy in WSN. In (Rajpoot & Dwivedi, Citation2019), optimal cluster head selection and ranking was designed using TOPSIS, a well-known multi-attribute decision-making (MADM) approach that ranks alternatives. Eleven attributes were considered, comprising beneficial and non-beneficial attributes: sensor coverage, sink connectivity, remaining power, three distances (maximum distance from the BS, average distance from the BS and average distance from CHs), required power, average lifetime and others. Using many attributes may give a better head selection, but it costs both computation time and energy. Clustering and routing were combined with distributed fuzzy logic to improve energy efficiency (Mazumdar & Om, Citation2018), and the routing algorithm supports multihop data transmission. Two fitness values are determined by separate fuzzy systems: one uses energy level and distance, and the other uses neighbor density and neighbor cost. From these two fitness values, mapping rules are generated to predict the cluster radius. Each unequal cluster elects a CH that is close to the BS and has high residual energy. After the cluster radius is estimated, unequal clusters are created; however, the unequal cluster sizes cause CHs to lose energy faster.

Optimization algorithms have been applied in WSN to address energy efficiency through efficient data gathering. Scalable, optimal CH selection was achieved using an improved Gravitational Search Algorithm (GSA) integrated with PSO, which performs hierarchical clustering with a new cost function (Morsy et al., Citation2018). One-hop clusters are created and optimal CHs are elected in two phases: a neighbor discovery phase and a flooding phase. Residual energy and the distances between nodes and the BS play a major role in electing an optimal CH, and traditional TDMA scheduling is used to maximize network lifetime. Integrating two algorithms makes the process lengthy, i.e. electing the CH based on the cost function takes longer. Data aggregation was the main focus of (Mosavvar & Ghaffari, Citation2019), aimed at reducing the network overhead and traffic that consume large amounts of energy. Clustered nodes are categorized as active or inactive according to certain criteria, and a clustering-based firefly algorithm aggregates data while accounting for energy consumption and distance. Periodic activation of sensor nodes reduces duplicate data. The aggregated data are transmitted from the CH to the sink either directly or via another CH, and the distance is estimated before transmission to avoid packet loss. A honeycomb architecture was presented that integrates geographical and hierarchical routing to deliver gathered data to the sink (Rais et al., Citation2019). CHs are selected from the sensor locations; because the sensors are static, the selection does not vary from round to round. Repeatedly selecting the same node as CH drains more energy, and in some cases poor sensors are chosen as CH only because of their location.

A hierarchical clustering-task scheduling policy (HCSP) was proposed with cluster preserving, cluster splitting, cluster merging and mix-reclustering processes (Neamatollahi et al., Citation2018). The distributed multi-criteria clustering (DMCC) protocol is applied within HCSP to perform clustering and task scheduling. CHs are selected from the nodes' remaining energy and a score value predicted from degree and a centrality factor. Mix-reclustering increases the CH workload and therefore consumes more energy. A Distributed Energy-efficient Adaptive Clustering Protocol (DEACP) was proposed to balance the energy dissipation of sensor nodes (Gherbi et al., Citation2019), using sleeping control rules to reduce energy consumption. Under this scheduling the CHs are always active because they gather data, so they drain energy faster than ordinary nodes. These challenges and limitations of maximizing network lifetime in WSN motivate the proposed work, which aims to overcome them.

3. Problem definition

An energy-balanced routing protocol (EBRP) was developed that uses the K-means++ algorithm to divide nodes into clusters by position and fuzzy logic to select CHs (Li Lin & Donghui, Citation2018). The fuzzy rules are generated by a GA that performs selection, crossover and mutation. Head selection uses the distance between the sensor and the sink, the distance between the sensor and the cluster center, and the remaining energy. Executing the 45 predefined rules to select a CH is tedious, and the GA adds computation time. Data transmission then follows TDMA scheduling. An inappropriate choice of k in K-means++ leads to poor clustering. In (Sert et al., Citation2018), a Two-Tier Distributed Fuzzy Logic-Based Protocol (TTDFP) with a set of 27 rules was applied for clustering and routing. Distance, remaining energy and relative node connectivity are considered in the clustering phase, and the residual energy is determined using relative distance and average link. The cluster head is selected by node connectivity, which ignores energy in the sensor network. The fuzzy rules need to be altered whenever the relative node connectivity changes, because that change also affects the competition radius.

A differentiated data aggregation routing (DDAR) scheme was presented based on satisfying Quality of Service (QoS) requirements (Xujing et al., Citation2018). The network consists of a sink node, sensor nodes and aggregator nodes. Aggregators receive data and forward it to the sink, while sensors sleep when the aggregators are transmitting to the sink. The network was deployed with 57 aggregators and 70 sensors; because each aggregator can gather data from multiple nodes, the aggregators cover nearly 85% of the sensors, many of which are therefore unnecessary. Each sensor selects an aggregator based on its service level; if no aggregator satisfies the required level, it has no option but to accept a lower service level from the list. Data aggregation with scheduling has also been studied in WSN. A fast, energy-efficient and adaptive data collection protocol was proposed for multi-channel, multi-path channel assignment scheduling and packet forwarding (Liew et al., Citation2018). Each cycle consists of a scheduling sub-cycle and a packet-forwarding sub-cycle. The scheme also detects traffic load: under high traffic load there are many transmitting pairs, and under low traffic the nodes can be put in sleep mode. It works well under high traffic load, but its higher energy consumption shortens network lifetime. Based on the problems identified in these previous works, an energy-efficient WSN architecture is proposed in this work.

4. Proposed lifetime improved WSN system

4.1. Improved WSN model

The proposed WSN is designed to prolong network lifetime through clustering, scheduling and data transmission, carried out by E-LEACH, energy-aware TDMA scheduling and channel-based data transmission, respectively. The system model consists of sensor nodes and a sink. Let the network contain $n$ sensor nodes, $\{S_1, S_2, \ldots, S_n\}$, and a single sink node. In this work, energy consumption in nodes is minimized by handling three key processes: (i) clustering, i.e. best CH selection and cluster size management in E-LEACH; (ii) TDMA scheduling, i.e. angle-based timeslot assignment; and (iii) dynamic fuzzy-based transmission, i.e. choosing the best-quality channel to avoid packet loss, which in turn reduces energy consumption.

The major assumptions of the proposed WSN system model are as follows:

Sensor nodes deployed in the network are stationary and randomly distributed over the network area following a uniform distribution.

Deployed sensors are homogeneous, with the same functionality for detecting events.

The sink node is static, located at the center of the network, and aggregates data from the CHs.

Each sensor node consists of a sensing element, a transmitter and a receiver.

All sensor nodes have the same communication range, 400 m, within which they sense and communicate.

All sensor nodes have the same initial energy at deployment.

CMs communicate directly (single-hop) with the CH.

This work overcomes the conventional limitations of LEACH and proposes a novel TDMA scheduling scheme to reduce energy consumption. Lastly, fuzzy logic determines a dynamic threshold value for channel selection, which ensures better data transmission from the CH to the sink.

The sensor nodes in this system model follow the first-order radio model, which defines the energy consumed by each sensor (Deyu Lin & Wang, Citation2019). The energy consumed by a sensor node to transmit an $l$-bit packet over a distance $d$ is formulated as

$$E_{Tx}(l, d) = l\,E_{elec} + l\,\varepsilon_{amp}\,d^{\alpha}$$

where $E_{elec}$ is the energy consumed per bit by the transmitter electronics, $\varepsilon_{amp}$ is the transmitter amplifier coefficient, $d$ is the distance between the two nodes and $\alpha$ is the propagation loss exponent, which lies between 2 and 4: $\alpha = 2$ corresponds to the free-space model and it increases to $\alpha = 4$ for the multipath model.

The energy dissipated at the receiver to receive an $l$-bit message is

$$E_{Rx}(l) = l\,E_{elec}$$
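The radio model can be sketched in a few lines of Python. This is a minimal illustration, not the authors' simulation code: the defaults `E_ELEC = 50 nJ/bit` and `EPS_AMP = 100 pJ/bit/m²` are common textbook values assumed here, and `eps_amp` has to be matched to the chosen exponent `alpha` (a multipath coefficient is far smaller than a free-space one).

```python
# First-order radio model (sketch).
# ASSUMPTION: the numeric defaults below are common textbook values,
# not parameters reported in this paper.
E_ELEC = 50e-9     # J/bit, transmitter/receiver electronics
EPS_AMP = 100e-12  # J/bit/m^alpha, amplifier coefficient suited to alpha = 2


def tx_energy(l_bits: int, d: float, alpha: float = 2.0,
              e_elec: float = E_ELEC, eps_amp: float = EPS_AMP) -> float:
    """E_Tx(l, d) = l * E_elec + l * eps_amp * d**alpha."""
    if not 2.0 <= alpha <= 4.0:
        raise ValueError("alpha should lie between 2 (free space) and 4 (multipath)")
    return l_bits * e_elec + l_bits * eps_amp * d ** alpha


def rx_energy(l_bits: int, e_elec: float = E_ELEC) -> float:
    """E_Rx(l) = l * E_elec."""
    return l_bits * e_elec
```

With these assumed values, `tx_energy(4000, 100.0)` is about 4.2 mJ while `rx_energy(4000)` is about 0.2 mJ, so the amplifier term dominates as the distance grows.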

This energy model is used in the proposed work, and in our simulation, to estimate the energy consumption of each sensor node. The proposed system model is depicted in the accompanying figure, which shows a set of clusters, each with a CH and a helper CH (HCH), in different scheduled states. In this work, scheduling slots are also allotted to the CH and HCH to balance energy dissipation. This limits the energy drop at CHs and so reduces how often a new head must be selected. The proposed algorithms are applied according to this system model.

4.2. Enhanced-LEACH

The conventional challenges of LEACH, namely distributed CH selection, variable cluster size and inefficient CH selection, tend to reduce network lifetime. An optimal CH is one that can sustain its role as CH for longer, which reduces the energy repeatedly spent on clustering. For this reason, the best node is selected as CH to prolong network lifetime, and using two optimization algorithms increases the accuracy of choosing that node. E-LEACH is developed to resolve these key challenges. It operates in three sequential phases: a setup phase, a maintenance phase and a steady phase, detailed below.

(i) Setup Phase—

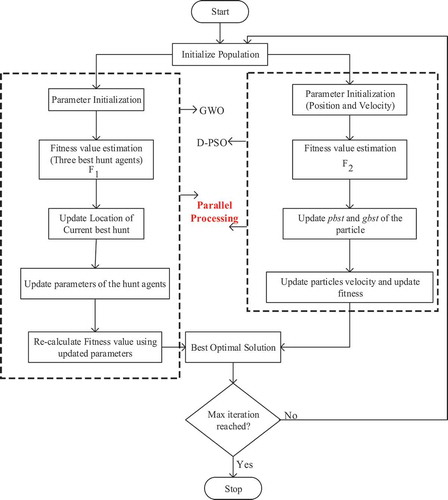

This is the initial phase of E-LEACH, in which the CH and HCH are selected. Two optimization algorithms run in parallel to select the optimal CH and HCH: GWO takes a random number and residual energy as input, whereas D-PSO takes distance and centrality as input.

Grey Wolf Optimization: This swarm-intelligence algorithm is inspired by the group hunting behavior of grey wolves. First, the parameters are initialized: the number of search agents, the variable size, the coefficient vectors and the maximum number of iterations. A random population of wolves is then generated and the fitness value of each is computed from a random number and the residual energy. Each sensor node draws a random number in $[0, 1]$ and determines the threshold $T(n)$ for the current round $r$ using the desired CH probability $P$:

$$T(n) = \begin{cases} \dfrac{P}{1 - P\left(r \bmod \frac{1}{P}\right)}, & n \in G \\ 0, & \text{otherwise} \end{cases}$$

where $G$ denotes the set of sensor nodes that have not been CH in the prior rounds. A sensor node whose random number is lower relative to the threshold has a higher priority to become head in the present round. Let the residual energy $E_{res}$ be the amount of energy remaining at the current time. The residual energy of node $i$ is the difference between its total energy and the energy it has dissipated so far:

$$E_{res}(i) = E_{total}(i) - E_{dis}(i)$$

where $E_{total}(i)$ and $E_{dis}(i)$ are, respectively, the total energy of the node at initial deployment and the energy it has dissipated up to the current time. These two metrics are used to compute the GWO fitness value that identifies the best hunting agent, i.e. the best sensor to act as CH.
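The two GWO inputs, the LEACH threshold and the residual energy, can be computed as follows. This is a sketch of the classic LEACH threshold and the residual-energy difference; the function names are hypothetical, and the exact GWO fitness expression that combines the two inputs is not modeled here.

```python
import random


def leach_threshold(p: float, r: int, in_g: bool) -> float:
    """Classic LEACH threshold T(n) for round r and desired CH fraction p."""
    if not in_g:               # node already served as CH in this epoch
        return 0.0
    epoch = round(1 / p)       # 1/P rounds per epoch
    return p / (1 - p * (r % epoch))


def residual_energy(e_total: float, e_dissipated: float) -> float:
    """E_res(i) = E_total(i) - E_dis(i), never below zero."""
    return max(e_total - e_dissipated, 0.0)


def is_ch_candidate(p: float, r: int, in_g: bool, rng: random.Random) -> bool:
    """A node becomes a CH candidate when its random draw is below T(n)."""
    return rng.random() < leach_threshold(p, r, in_g)
```

With `p = 0.1` the threshold rises from 0.1 in the first round of an epoch to 1 in the tenth, so every node still in the eligible set is eventually chosen once per epoch.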

GWO determines the three best hunting agents (the alpha, beta and delta wolves). The location of the current best CH is then estimated, and the parameters of each node are updated.
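For readers unfamiliar with GWO, the generic update that moves each wolf towards the three best agents can be sketched as below. This is the standard continuous GWO scheme for minimisation, with a best-so-far record added; how wolf positions map to candidate sensor nodes in E-LEACH is not specified in the text, so `gwo_minimize` and its parameters are hypothetical.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def gwo_minimize(f: Callable[[Vector], float], dim: int, bounds: Tuple[float, float],
                 n_wolves: int = 10, n_iter: int = 50, seed: int = 0) -> Vector:
    """Generic Grey Wolf Optimizer (minimisation) over a box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds
    wolves = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_wolves)]
    best = min(wolves, key=f)
    for t in range(n_iter):
        leaders = sorted(wolves, key=f)[:3]    # alpha, beta and delta wolves
        a = 2.0 * (1 - t / n_iter)             # decreases linearly from 2 towards 0
        updated = []
        for x in wolves:
            new_x = []
            for k in range(dim):
                pulls = []
                for leader in leaders:
                    A = 2 * a * rng.random() - a   # exploration/exploitation coefficient
                    C = 2 * rng.random()
                    D = abs(C * leader[k] - x[k])  # distance to this leader
                    pulls.append(leader[k] - A * D)
                # Average the pulls and keep the coordinate inside the bounds.
                new_x.append(min(max(sum(pulls) / len(pulls), lo), hi))
            updated.append(new_x)
        wolves = updated
        best = min(wolves + [best], key=f)     # keep the best solution seen so far
    return best
```

On a 2-D sphere function, `gwo_minimize(lambda v: sum(x * x for x in v), 2, (-10.0, 10.0))` converges close to the origin; for CH selection, a maximised fitness would be negated before being passed in.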

D-PSO, running in parallel, starts by initializing a population of particles. Two best values are tracked: the local best $pbest$ and the global best $gbest$. The $pbest$ values are estimated from a fitness value that uses two parameters, distance and centrality, whereas $gbest$ is the best value attained across the population. Once the two best values are determined, each particle's velocity and position are updated as

$$v[\,] = v[\,] + c_1 \cdot rand() \cdot (pbest[\,] - prs[\,]) + c_2 \cdot rand() \cdot (gbest[\,] - prs[\,])$$

$$prs[\,] = prs[\,] + v[\,]$$

where $v[\,]$ is the particle velocity, $prs[\,]$ is the particle's current solution, $rand()$ is a random number in $[0, 1]$, and $c_1$ and $c_2$ are the learning factors. The fitness value in D-PSO is computed from the Euclidean distance between a node and the sink.
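The velocity and position updates above can be sketched as a small discrete PSO that searches over node indices. The discretisation (rounding positions to the nearest node index), the evenly spread initialisation, the velocity limit `v_max` and the name `dpso_select` are assumptions made for this illustration; the per-node fitness values are taken as given inputs, and higher is better.

```python
import random
from typing import List


def dpso_select(fitness: List[float], n_particles: int = 8, n_iter: int = 30,
                c1: float = 2.0, c2: float = 2.0, seed: int = 1) -> int:
    """Return the index of the best node found by a discretised PSO (maximisation)."""
    rng = random.Random(seed)
    n = len(fitness)
    v_max = max(1.0, n / 4)                         # ASSUMED velocity clamp
    # ASSUMED initialisation: spread solutions evenly over the node indices.
    prs = [round(k * (n - 1) / max(n_particles - 1, 1)) for k in range(n_particles)]
    v = [0.0] * n_particles
    pbest = prs[:]                                  # local (personal) best per particle
    gbest = max(pbest, key=lambda i: fitness[i])    # global best over the swarm
    for _ in range(n_iter):
        for p in range(n_particles):
            v[p] += (c1 * rng.random() * (pbest[p] - prs[p])
                     + c2 * rng.random() * (gbest - prs[p]))
            v[p] = max(-v_max, min(v_max, v[p]))
            # prs = prs + v, rounded back onto a valid node index.
            prs[p] = min(max(round(prs[p] + v[p]), 0), n - 1)
            if fitness[prs[p]] > fitness[pbest[p]]:
                pbest[p] = prs[p]
                if fitness[prs[p]] > fitness[gbest]:
                    gbest = prs[p]
    return gbest
```

Rounding is only one way to make PSO discrete; binary PSO variants that pass the velocity through a sigmoid are also common, and the text does not say which form D-PSO uses.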

Let $S_i$ and the sink be the two nodes between which the distance is determined from their $(x, y)$ coordinates. The position of $S_i$ is $(x_i, y_i)$ and the position of the sink is $(x_s, y_s)$, so

$$d(S_i, \mathit{Sink}) = \sqrt{(x_i - x_s)^2 + (y_i - y_s)^2}$$

The second metric, the centrality of a node, is determined from node degrees. The centrality $C(S_i)$ of node $S_i$ is computed from the degree of $S_i$, i.e. the number of nodes connected to it, together with the degrees of its neighbors $S_j$; here the neighbors' contribution is 0 because nodes are connected by direct one-hop links. A larger centrality value means that a node is connected to more neighbors and is therefore closer to the center, where it can communicate with many other nodes. The D-PSO fitness value is determined from these two metrics.
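The two D-PSO inputs can be computed as below. `euclidean` implements the distance formula above (node to sink, or node to node); for centrality, the sketch assumes normalised degree centrality, i.e. the number of nodes within the communication range divided by $n - 1$, which is consistent with the one-hop setting where neighbors' degrees contribute nothing. Both function names are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def euclidean(p: Point, q: Point) -> float:
    """sqrt((x_p - x_q)^2 + (y_p - y_q)^2)."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def degree_centrality(positions: Dict[str, Point], comm_range: float) -> Dict[str, float]:
    """ASSUMED metric: fraction of the other nodes within one hop (comm_range)."""
    ids = list(positions)
    n = len(ids)
    centrality = {}
    for i in ids:
        degree = sum(1 for j in ids
                     if j != i and euclidean(positions[i], positions[j]) <= comm_range)
        centrality[i] = degree / (n - 1) if n > 1 else 0.0
    return centrality
```

Under the system model's assumptions, `comm_range` would be 400.0 (metres), and `euclidean(node, sink)` gives the distance input.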

The setup phase ends by combining the fitness values from the two parallel optimization algorithms. The CH and HCH are selected according to the best combined fitness value once the final iteration is reached.

The parallel processing of GWO and D-PSO for optimal CH and HCH selection is depicted in the accompanying figure. Running the two optimization algorithms in parallel reduces the execution time of selecting the optimal head, and network lifetime increases because an optimally selected head can remain in that role for longer.
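One way to evaluate the two fitness functions concurrently and combine them is sketched below. The combination rule (min-max normalisation followed by an equal-weight sum), the convention that higher fitness is better, and taking the top-ranked node as CH and the runner-up as HCH are assumptions; the text does not give the exact rule. `select_ch_and_hch` is a hypothetical name.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Scores = Dict[str, float]


def normalise(scores: Scores) -> Scores:
    """Min-max normalise scores to [0, 1] so the two fitness scales are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 1.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def select_ch_and_hch(nodes: List[str],
                      fitness_gwo: Callable[[str], float],
                      fitness_dpso: Callable[[str], float],
                      weight: float = 0.5) -> Tuple[str, str]:
    """Evaluate both fitness functions concurrently, combine them, return (CH, HCH)."""
    if len(nodes) < 2:
        raise ValueError("need at least two nodes to pick a CH and an HCH")
    with ThreadPoolExecutor(max_workers=2) as pool:
        gwo_future = pool.submit(lambda: {n: fitness_gwo(n) for n in nodes})
        dpso_future = pool.submit(lambda: {n: fitness_dpso(n) for n in nodes})
        gwo_scores = normalise(gwo_future.result())
        dpso_scores = normalise(dpso_future.result())
    # ASSUMED combination: weighted sum of the normalised scores.
    combined = {n: weight * gwo_scores[n] + (1 - weight) * dpso_scores[n] for n in nodes}
    ranked = sorted(nodes, key=combined.__getitem__, reverse=True)
    return ranked[0], ranked[1]   # best node as CH, runner-up as HCH
```

Threads keep the sketch simple, but CPU-bound pure-Python fitness functions will not run truly in parallel under CPython's GIL; a `ProcessPoolExecutor` with picklable, module-level functions would be needed for a real speed-up.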

(ii) Maintenance Phase—

This phase maintains cluster size, since unbalanced cluster size is one of the challenging issues in LEACH. In this work, the probability of link addition and the criticality index are estimated to decide whether to merge or split a cluster. The probability of link addition $P_{la}$, given in Equation (11), is computed from $L_a$, $L_p$ and $L_e$, which denote the number of links added, the number of possible links and the number of existing links, respectively. The criticality index of node $i$ is then computed from its neighboring set $N(i)$, defined as

$$N(i) = \{\, j : d(i, j) \le R \,\}$$

where $d(i, j)$ is the distance between nodes $i$ and $j$ and $R$ is the range within which transmission is possible, and from the dissimilarity ratio $\delta_{ij}$, which is determined from the neighboring sets. The value of $\delta_{ij}$ lies in $[0, 1]$ and measures the difference between the neighboring sets of nodes $i$ and $j$. If $\delta_{ij}$ is small, nodes $i$ and $j$ have many common neighbors, whereas a large ratio means they have few. Larger values of the link-addition probability and the criticality index lead to clusters being merged, whereas smaller values lead to a cluster being split into two.
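The maintenance-phase quantities can be sketched as follows. Only the neighboring set (all nodes within the transmission range) follows the text directly; the Jaccard-style form of the dissimilarity ratio, the form "links added / (possible links - existing links)" for the link-addition probability, and the merge/split thresholds (including a "keep" outcome between them) are assumptions chosen to match the described behaviour. The criticality index is passed in as a precomputed value.

```python
import math
from typing import Dict, Set, Tuple

Point = Tuple[float, float]


def neighbour_set(i: str, positions: Dict[str, Point], comm_range: float) -> Set[str]:
    """All nodes j != i whose distance to i is within the transmission range."""
    xi, yi = positions[i]
    return {j for j, (xj, yj) in positions.items()
            if j != i and math.hypot(xi - xj, yi - yj) <= comm_range}


def dissimilarity(n_i: Set[str], n_j: Set[str]) -> float:
    """ASSUMED Jaccard-style ratio in [0, 1]: 0 means identical neighbour sets."""
    union = n_i | n_j
    if not union:
        return 0.0
    return 1.0 - len(n_i & n_j) / len(union)


def link_addition_probability(added: int, possible: int, existing: int) -> float:
    """ASSUMED form: links added / links that could still be added."""
    free = possible - existing
    return added / free if free > 0 else 0.0


def merge_or_split(p_link: float, criticality: float,
                   high: float = 0.6, low: float = 0.3) -> str:
    """HYPOTHETICAL thresholds: large values merge, small values split."""
    if p_link >= high and criticality >= high:
        return "merge"
    if p_link <= low and criticality <= low:
        return "split"
    return "keep"
```

For three collinear nodes spaced 1 m apart and a 1.5 m range, the two end nodes share the middle node as their only neighbour, so their dissimilarity is 0.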

(iii) Steady Phase—

The steady phase is the last phase of E-LEACH. In this phase, the data sensed by CMs are transmitted to the head. Both the CH and the HCH can aggregate data and transmit it to the sink. Sensors are allotted time slots, and one of the two head nodes is awake in each round. CMs transmit their data directly to the head node according to their scheduling slots.

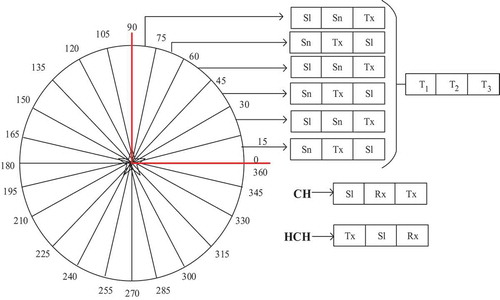

4.3. Energy-aware TDMA scheduling

TDMA scheduling allots sleep and wake-up periods to sensors. Once the heads (CH and HCH) have been selected, time slots are assigned using the energy-aware TDMA scheduling procedure. Scheduling is handled by the CH, which knows the positions of its CMs and other related information. In the proposed energy-aware TDMA scheduling, the CM and CH/HCH sensor nodes switch among four states:

Sense State: In this state, the sensor's radio is turned ON and the CM measures changes in the environment during its sensing time.

Transmit State: In this state, the CMs transmit the sensed measurements to the CH or HCH; only one of the two heads receives data at a time. A head node in the transmit state forwards the aggregated data to the sink.

Receive State: In this state, the head node collects the sensed information from its CMs for delivery to the sink node.

Sleep State: In this state, the node's radio is turned OFF, and the node neither senses nor transmits.

In energy-aware TDMA scheduling, the CMs move among three states (sense, transmit and sleep), and the CH/HCH similarly moves among three states (transmit, receive and sleep). The head nodes do not sense, since they are responsible for aggregating the data and forwarding it to the sink node. However, if a head node becomes a CM in a subsequent round, it must sense the environment and report its measurements. Figure shows the three states of the CM and CH/HCH over three equal time slots.

The CH in each cluster is in charge of assigning time slots based on energy-aware TDMA scheduling. First, the elected CH treats its entire communication range as 360° and splits it into 24 sectors, so each sector spans $360^\circ / 24 = 15^\circ$. Since the nodes are distributed over the network area, an individual sector may contain more than one sensor node.

After partitioning, the CH itself allots timeslots to the CMs, the HCH and itself. The 24 sectors created by the CH and the timeslots for 6 divisions are shown in Figure . Timeslots are assigned in the same way to all sensors within the cluster. In the first timeslot, the CMs in the first division (0°–15°) are in the sense state, whereas the CMs in the second division (15°–30°) are in the sleep state. Meanwhile, the CH is in the sleep state and the HCH is in the transmit state. The transmit state means that the HCH delivers the aggregated data to the sink; consequently, the HCH moves to the sleep state in the next timeslot. In the second timeslot, the CMs in the first division (0°–15°) are in the transmit state, whereas the CMs in the second division (15°–30°) are in the sense state. Thus, in the second timeslot some CMs are transmitting, and their data are received by the CH, which is in the receive state. In this way, the timeslots are arranged alternately for the sensor nodes in the communication range. This scheduling lets the CH and HCH balance energy consumption, which sustains the head nodes for a longer period. Under these scheduled slots, only one of the head nodes is active in each time period, and each head also has sleep time, which prolongs its lifetime. Extending the lifetime of the head nodes also reduces how often heads must be reselected. The scheduling further increases the number of alive nodes, since every CM is periodically assigned a sleep state.

Energy-aware TDMA scheduling with equal timeslots for sleeping and the other states thus enhances network lifetime as well as the lifetime of the CHs and HCHs.
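The sector assignment and the alternating CM states can be sketched as follows. This is a minimal sketch, not the authors' implementation: it assumes that each CM cycles through sense, then transmit, then sleep, with one state per equal timeslot, and that even-numbered sectors start in the sense state while odd-numbered (alternate) sectors start in the sleep state, which matches the first timeslot described above (first division sensing, second division sleeping). The function names are hypothetical, and head-node states are not modeled.

```python
import math
from typing import Tuple

NUM_SECTORS = 24
SECTOR_WIDTH_DEG = 360.0 / NUM_SECTORS  # 15 degrees per sector

# Assumed CM state cycle, one state per equal timeslot.
CM_CYCLE = ("sense", "transmit", "sleep")


def sector_of(ch: Tuple[float, float], node: Tuple[float, float]) -> int:
    """Index 0..23 of the CH-centered 15-degree sector that contains the node."""
    angle = math.degrees(math.atan2(node[1] - ch[1], node[0] - ch[0])) % 360.0
    # The final modulo guards against the angle rounding up to exactly 360.0.
    return int(angle // SECTOR_WIDTH_DEG) % NUM_SECTORS


def cm_state(sector: int, slot: int) -> str:
    """State of a CM in `sector` during timeslot `slot` (0-based).

    Even sectors start the cycle in "sense"; odd (alternate) sectors start in
    "sleep", so neighboring sectors never transmit in the same slot.
    """
    offset = 0 if sector % 2 == 0 else CM_CYCLE.index("sleep")
    return CM_CYCLE[(slot + offset) % len(CM_CYCLE)]


if __name__ == "__main__":
    ch = (0.0, 0.0)
    for node in [(10.0, 1.0), (10.0, 5.0), (-10.0, -1.0)]:
        s = sector_of(ch, node)
        print(node, "sector", s, [cm_state(s, t) for t in range(3)])
```

Because 24 is even, the first and last sectors (0 and 23) also alternate, so the even/odd offset keeps every pair of adjacent sectors out of phase around the full circle.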

4.4. Channel-aware data transmission

The CH/HCH aggregates the data sensed by its CMs during the receive state and then transmits the data to the sink during the transmit state. In the transmit state, the head runs a dynamic fuzzy algorithm to select the best channel so that packet loss is minimized. The best channel is selected using three significant channel parameters: RSS, channel capacity and packet error rate. All three parameters are estimated and used as fuzzy inputs. Once a channel has been selected for transmission, feedback on that channel is loaded into the inference engine and used to adjust the threshold dynamically. Because channel conditions vary over time, this feedback-driven threshold adapts the selection of the best channel to the current transmission.

The RSS indicator measures the power present in a received signal. Estimating the RSS before transmitting data is important because it strongly affects the success of the transmission. In logarithmic units, the RSS is estimated from the link budget

$$RSS = P_t + G - PL,$$

where $P_t$ is the transmit power, $G$ is the antenna gain and $PL$ is the path loss. The RSS differs from channel to channel and is not static, so it is used in predicting the best channel. The second metric, channel capacity, is measured using the Shannon-Hartley theorem, which gives the maximum information rate a channel can support:

$$C = B \log_2\left(1 + \frac{S}{N}\right),$$

where $C$ is the channel capacity in bits/second, $B$ is the channel bandwidth in hertz, and $S/N$ is the signal-to-noise ratio (SNR), formed from the signal level $S$ and the noise level $N$ as a linear power ratio. A higher SNR allows data to be transmitted with fewer errors. The third metric is the packet error rate, which is determined from the number of readable slots in the aggregated data, where readable data are data without any error in the aggregated packets. These three metrics serve as inputs to the fuzzy logic system, whose rules select the best channel.
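The three channel metrics can be computed as in the following sketch. It assumes that the RSS is evaluated as a log-domain link budget (transmit power plus antenna gain minus path loss, in dBm/dB), that the Shannon-Hartley capacity uses linear signal and noise powers, and that the packet error rate is the fraction of unreadable slots in the aggregated data; the function names are hypothetical.

```python
import math


def rss_dbm(tx_power_dbm: float, antenna_gain_db: float, path_loss_db: float) -> float:
    """Log-domain link budget: RSS = P_t + G - PL (dBm)."""
    return tx_power_dbm + antenna_gain_db - path_loss_db


def shannon_capacity_bps(bandwidth_hz: float, signal_w: float, noise_w: float) -> float:
    """Shannon-Hartley capacity C = B * log2(1 + S/N); S and N are linear powers."""
    if noise_w <= 0:
        raise ValueError("noise power must be positive")
    return bandwidth_hz * math.log2(1.0 + signal_w / noise_w)


def db_to_linear(db: float) -> float:
    """Convert a power ratio in dB to a linear ratio."""
    return 10.0 ** (db / 10.0)


def packet_error_rate(readable_slots: int, total_slots: int) -> float:
    """Assumed definition: fraction of aggregated-data slots that are not readable."""
    if total_slots <= 0:
        raise ValueError("total_slots must be positive")
    return 1.0 - readable_slots / total_slots


if __name__ == "__main__":
    # Illustrative values only, not the paper's simulation parameters.
    print(rss_dbm(0.0, 2.0, 80.0))  # -78.0
    print(shannon_capacity_bps(2e6, db_to_linear(10.0), 1.0))
    print(packet_error_rate(47, 50))
```

Note that an SNR quoted in dB must be converted to a linear ratio (here with the hypothetical `db_to_linear` helper) before it is used in the capacity formula.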

Fuzzy logic computes results based on degrees of truth. Using the three metrics, the best channel is estimated from the rules defined in Table 1. A fuzzy logic system consists of three components: fuzzification, the inference engine and defuzzification. Fuzzification converts the crisp input values into fuzzy sets. The inference engine then calculates the degree to which the given inputs match the previously built rules. Finally, defuzzification converts the resulting fuzzy sets back into a crisp value that yields the decision.

Table 1. Fuzzy rules for channel selection

The fuzzy membership functions take values in the range [0, 1], so each channel parameter is graded rather than simply accepted or rejected. The working procedure of the dynamic fuzzy algorithm is illustrated in Figure . The result is derived from the defined rules. Since the channel values are dynamic, the threshold values for the parameters are also changed dynamically. After selecting the best channel, the CH/HCH transmits the aggregated data to the sink node.
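The dynamic fuzzy channel selection can be illustrated with a small zero-order Sugeno-style inference. The rule consequents of Table 1 are not reproduced in this section, so the rule base below is a hypothetical stand-in: each of the three normalized inputs (RSS, capacity, packet error rate) has a "low" and a "high" linear membership function, giving 2^3 = 8 rules, consistent with the eight rules mentioned in the delay analysis, and a rule's output is the fraction of its antecedents that favor the channel. The feedback rule that nudges the acceptance threshold toward a target delivery ratio is likewise an assumption, not the authors' exact update.

```python
from itertools import product
from typing import Dict, Optional, Tuple

Metrics = Tuple[float, float, float]  # (rss, capacity, per), each normalized to [0, 1]


def _clip(x: float) -> float:
    return max(0.0, min(1.0, x))


def memberships(x: float) -> Dict[str, float]:
    """Fuzzification: two linear membership functions on a normalized input."""
    x = _clip(x)
    return {"low": 1.0 - x, "high": x}


def channel_score(rss: float, capacity: float, per: float) -> float:
    """Zero-order Sugeno inference over 8 hypothetical rules (2 terms x 3 inputs).

    A rule's crisp output is the fraction of its antecedents that favor the
    channel: high RSS, high capacity, low packet error rate.
    """
    m_rss, m_cap, m_per = memberships(rss), memberships(capacity), memberships(per)
    num = den = 0.0
    for t_rss, t_cap, t_per in product(("low", "high"), repeat=3):
        strength = min(m_rss[t_rss], m_cap[t_cap], m_per[t_per])  # fuzzy AND
        favorable = (t_rss == "high") + (t_cap == "high") + (t_per == "low")
        num += strength * favorable / 3.0
        den += strength
    # Defuzzification: weighted average of the rule outputs.
    return num / den if den > 0.0 else 0.0


class DynamicFuzzySelector:
    """Chooses the best-scoring channel; feedback adapts the acceptance threshold."""

    def __init__(self, threshold: float = 0.5, target_delivery: float = 0.95,
                 step: float = 0.1) -> None:
        self.threshold = threshold
        self.target_delivery = target_delivery
        self.step = step

    def select(self, channels: Dict[str, Metrics]) -> Optional[str]:
        """Return the best channel, or None if even the best scores below threshold."""
        scores = {name: channel_score(*m) for name, m in channels.items()}
        best = max(scores, key=scores.__getitem__)
        return best if scores[best] >= self.threshold else None

    def feedback(self, delivery_ratio: float) -> None:
        """Losses above target raise the threshold; good delivery relaxes it."""
        self.threshold = _clip(
            self.threshold + self.step * (self.target_delivery - delivery_ratio))


if __name__ == "__main__":
    selector = DynamicFuzzySelector()
    channels = {"ch1": (0.9, 0.8, 0.1), "ch2": (0.3, 0.4, 0.6)}
    print(selector.select(channels))  # ch1
    selector.feedback(delivery_ratio=0.75)  # poor delivery -> stricter threshold
    print(round(selector.threshold, 2))  # 0.52
```

A Sugeno-style weighted average is used here only because it keeps defuzzification to one line; a Mamdani system with centroid defuzzification would follow the same fuzzify, infer, defuzzify pipeline.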

5. Experimental evaluation

This section evaluates the proposed lifetime-improved WSN. The simulation environment is described first, followed by a comparative analysis.

5.1. Simulation environment

The proposed WSN system is implemented in network simulator 3 (ns-3), a discrete-event network simulator that supports the sensor-node functionality required here. ns-3 is written in C++ and also provides Python bindings. Each sensor senses the environment and transmits its measurements as packets. Each packet consists of a single byte buffer to which headers carrying the information are added.

The main system model specifications are listed in Table 2; the setup is not limited to these values. The ns-3 output is visualized with PyViz, a live simulation visualizer written in Python. The proposed lifetime-improved WSN environment implements all of the procedures discussed above for clustering, head selection, scheduling and channel selection, and the results are evaluated in this simulation setup.

Table 2. Simulation specifications

5.2. Comparative analysis

This section evaluates the performance of the proposed E-LEACH with energy-aware TDMA scheduling by comparing it with conventional LEACH and TTDFP. LEACH is a clustering-based protocol and TTDFP is a clustering-based routing method; both were developed to prolong network lifetime, which is also the key goal of E-LEACH, and E-LEACH likewise performs routing based on the constructed clusters. The disadvantages of LEACH and TTDFP are discussed below. In general, conventional LEACH has the following demerits:

CH election is performed without the consideration of energy constraint.

CHs are not uniformly distributed over the network area.

Repeated re-clustering consumes a larger amount of energy among nodes.

TTDFP is a clustering and routing protocol that uses fuzzy logic for decision-making, applying three parameters for clustering and two parameters for routing. The major issues in TTDFP are:

The CH selection was based on the connectivity of the particular node, which ignored the major constraint of energy in the sensor network.

Each sensor node deployed in the network has to check every rule frequently to determine its competition radius.

Feeding the fuzzy rules into the fuzzy system only once is not appropriate, since a change in relative node connectivity also affects the competition radius.

The designed lifetime-improved E-LEACH with energy-aware TDMA is therefore compared with the conventional LEACH and TTDFP protocols in terms of throughput, delay, packet loss, energy consumption and network lifetime. These parameters play a major role in evaluating the efficiency of the proposed work. In the following, effectiveness refers to the performance of each work relative to the previous research works.

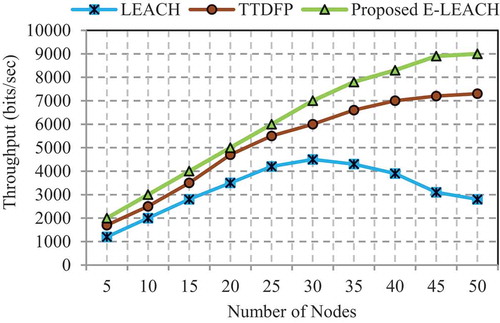

(a) Effectiveness of Throughput

Throughput is an important parameter that characterizes data transmission performance. Higher throughput indicates good connectivity, which allows transmissions to succeed; if less data is delivered, the network connectivity is likely poor. Throughput is degraded mainly by two factors: network congestion and packet loss. Network congestion occurs when multiple nodes communicate at the same time. In the proposed WSN system model, energy-aware TDMA scheduling gives each node a dedicated timeslot, so only the nodes in the transmit state send data to the CH/HCH and congestion within the cluster is avoided.

Throughput is measured as the number of successfully transmitted packets in a given time. Figure demonstrates the throughput results for the proposed work, LEACH and TTDFP. The proposed E-LEACH achieves higher throughput than both LEACH and TTDFP. The increase is due to the selection of the best channel for transmission, which greatly reduces packet loss and thereby avoids unnecessary, energy-consuming retransmissions. The throughput of E-LEACH is approximately 60% higher than that of LEACH and 20% higher than that of TTDFP. Although TTDFP also transmits successfully, it relies on a set of rules that takes longer for decision-making.

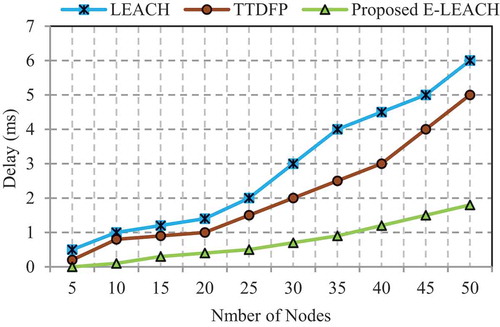

(b) Effectiveness of Delay

Delay is a key parameter for characterizing data transmission and is measured as the time taken to deliver data. Longer processing times increase the time needed to transmit the data, which typically reflects a poor network design.

Figure compares LEACH, TTDFP and E-LEACH in terms of delay. Faster transmission of data yields lower delay, and selecting the best channel enables faster transmission of data packets, whereas the other two works consider node characteristics only. In this comparison, LEACH has a higher delay than TTDFP and E-LEACH, and the delay of TTDFP is close to that of LEACH, differing by only 1–2 ms. The lower delay of E-LEACH therefore reflects the efficiency of the proposed data transmission. Although E-LEACH performs additional computations, its delay is not larger than that of the previous works, because not all nodes perform computations: only the head selects a channel and delivers the data. The use of eight rules in channel selection keeps the computation fast, which reduces transmission delay. In addition, the sleep slots for head nodes balance energy consumption.

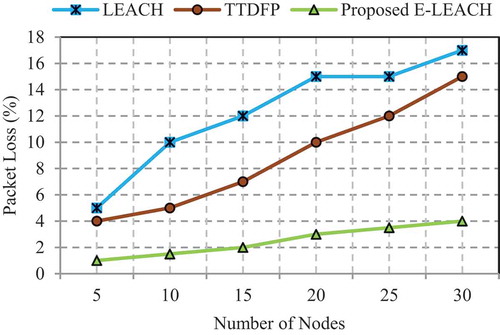

(c) Effectiveness of Packet Loss

Packet loss is a parameter that reflects the efficiency of data transmission. Packet losses occur under poor wireless channel conditions. Packets from sensors travel through the CH to the sink node, and any loss of packets degrades network performance. Packet loss is evaluated for LEACH, TTDFP and E-LEACH.

Figure illustrates the comparison of packet loss for LEACH, TTDFP and E-LEACH. The packet loss of LEACH becomes extremely high as the number of participating sensor nodes increases, because more sensors communicate at the same time as the number of nodes grows. TTDFP performs better than LEACH but not as well as the proposed E-LEACH. The dynamic fuzzy-based channel estimation selects the best channel and thereby minimizes packet loss, which is consistent with the throughput results. The proposed E-LEACH still incurs some packet loss, but it is much lower than that of LEACH and TTDFP.

The average packet loss of each work is shown in Table 3, where the proposed E-LEACH is much lower than the other two works. Assuming 200 packets are transmitted in total, nearly 6 to 7 packets are dropped, and the proposed work has an average packet loss of 4%. Compared with LEACH, E-LEACH reduces packet loss by approximately 12%, and compared with TTDFP by nearly 9%. Because increased packet loss degrades performance, this reduction also improves other network parameters and helps the proposed system sustain nodes with prompt data delivery, showing the effectiveness of the data transmission method.

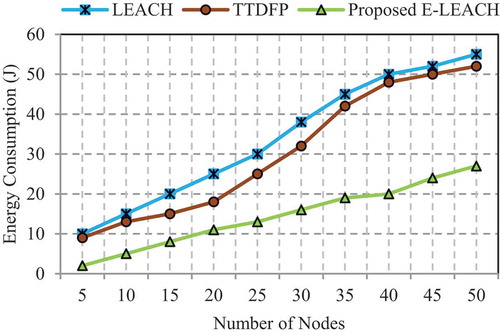

Table 3. Comparison of average packet loss

(d) Effectiveness of Energy Consumption

Energy consumption plays a key role in WSNs because sensor nodes are battery-powered. Sensor nodes consume energy during sensing and data transmission, and this consumption can be reduced by designing the node operations effectively.

Efficient use of each node's energy is essential to prolong the sensor network lifetime. Figure compares the energy consumption of the nodes. Conventional LEACH consumes more energy than TTDFP and the proposed E-LEACH because of the limitations discussed above. In TTDFP, each node must evaluate individual rules, which takes time and energy. In the proposed work, E-LEACH selects an optimal CH/HCH, which reduces how often heads are reselected, and energy-aware TDMA scheduling saves energy during sleep states. Because scheduling is balanced, the CH/HCH does not deplete its energy in a short period. As a result, energy consumption is lower than in the previous works. The initial energy of each sensor node is 100 J; averaged over processing with 5 nodes, only 2 J of energy is drained, compared with 9 J in TTDFP and 10 J in LEACH. Moreover, energy drain in the previous works grows as the number of nodes increases, whereas the nodes in this work use only a few joules.

Table 4 shows the energy drained when processing different numbers of nodes. The energy drain in E-LEACH is lower because of the best head selection, the scheduling and packet transmission over the selected channel. Together, these processes reduce energy drain and thereby prolong network lifetime.

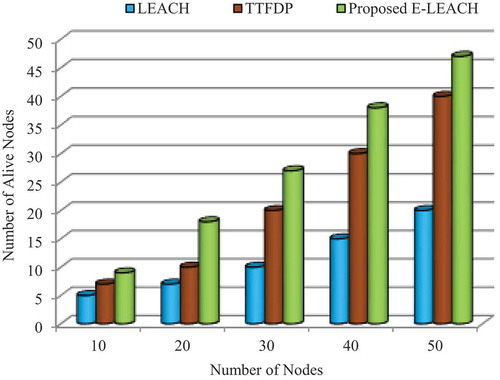

Table 4. Energy drain

(e) Effectiveness of Network Lifetime

Network lifetime is the most significant metric in a WSN and is also the goal of this paper. It is measured as the number of sensor nodes that remain alive in the designed network. A longer network lifetime indicates that the system model with the proposed algorithms functions well. In WSNs, network lifetime is mainly improved through clustering; however, a poor cluster formation mechanism increases energy consumption and therefore shortens network lifetime. Network lifetime thus depends on the amount of energy used.

This metric is evaluated by counting the number of alive nodes after the simulation time has elapsed. Figure compares the number of alive nodes against the number of deployed sensor nodes. Because LEACH and TTDFP consume more energy, they have fewer alive nodes than the proposed E-LEACH. Hence, the designed E-LEACH with energy-aware TDMA scheduling achieves the intended lifetime improvement of the WSN.
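The evaluation metrics used in this section can be computed from simulation counters as sketched below. The inputs (delivered packet count, packet size, residual energies) and the zero-energy death threshold are assumptions for illustration; the ns-3 trace format behind the reported results is not described here, and the function names are hypothetical.

```python
from typing import Sequence


def throughput_bps(delivered_packets: int, packet_size_bits: int, duration_s: float) -> float:
    """Successfully delivered bits per second over the measurement window."""
    return delivered_packets * packet_size_bits / duration_s


def packet_loss_percent(sent: int, received: int) -> float:
    """Percentage of transmitted packets that did not reach the sink."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return 100.0 * (sent - received) / sent


def energy_drained_j(initial_j: float, residual_j: float) -> float:
    """Energy a node has used since deployment."""
    return initial_j - residual_j


def alive_nodes(residual_energy_j: Sequence[float], death_threshold_j: float = 0.0) -> int:
    """Nodes whose residual energy is still above the (assumed) death threshold."""
    return sum(1 for e in residual_energy_j if e > death_threshold_j)


if __name__ == "__main__":
    # Illustrative counters, not results from the paper.
    print(throughput_bps(100, 1024, 10.0))  # 10240.0
    print(packet_loss_percent(1000, 950))  # 5.0
    print(energy_drained_j(100.0, 97.5))  # 2.5
    print(alive_nodes([98.0, 0.0, 2.5, 0.0]))  # 2
```

Counting alive nodes against a positive threshold (for example, the minimum energy needed for one transmission) instead of zero would give a more conservative lifetime estimate.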

6. Conclusion

In this paper, a lifetime-improved WSN system model is constructed that incorporates E-LEACH, energy-aware TDMA scheduling and dynamic fuzzy-based best channel selection. E-LEACH first creates clusters with optimal head selection. Two optimization methods, GWO and D-PSO, are operated in parallel to minimize the time needed to select the head. Using these algorithms, a CH and an HCH are selected to balance energy consumption and to gather data concurrently. E-LEACH also manages cluster size by splitting or merging clusters, which avoids unnecessary energy consumption in large clusters. The head then assigns timeslots to its members using energy-aware TDMA scheduling by splitting its communication range into 24 sectors. According to these timeslots, members sense and transmit the sensed data, while the cluster head receives the data and transmits it to the sink. During head transmission, the dynamic fuzzy algorithm selects the best channel. Channel selection and timeslot scheduling improve throughput, packet loss and delay. Overall, the goal of minimizing energy consumption is attained, which enhances the network lifetime of the designed WSN. In future work, we plan to test the simulation in a large-scale environment for a specific application.

Additional information

Funding

Notes on contributors

Ramadhani Sinde

Ramadhani Sinde works as an assistant lecturer in the School of Computational and Communication Sciences and Engineering at the Nelson Mandela African Institution of Science and Technology (NM-AIST). He is currently undertaking PhD studies in Information and Communication Science and Engineering under the Doctoral Environmental Management Information System project. His research interests include Internet of Things (IoT) networks, embedded systems, mobile computing, modelling and performance evaluation of wireless sensor networks, and wireless and mobile communication. Mr. Sinde has co-authored more than 10 papers in internationally refereed journals and conferences. He is an alumnus of the Queen Elizabeth Scholarship – Advanced Scholars Program.

References

- Ahmad, M., Tianrui, L., Khan, Z., Khurshid, F., & Ahmad, M. (2018). A novel connectivity-based LEACH-MEEC routing protocol for mobile wireless sensor network. Sensors, 18(12), 4278. https://doi.org/10.3390/s18124278

- Al-Shalabi, M., Anbar, M., Wan, T. C., & Khasawneh, A. (2018, August). Variants of the low-energy adaptive clustering hierarchy protocol: Survey, issues and challenges. Electronics, 7(8). https://doi.org/10.3390/electronics7080136

- Amodu, O. A., & Mahmood, R. A. R. (2016, November). Impact of the energy-based and location-based LEACH secondary cluster aggregation on WSN lifetime. Wireless Networks, 24(4), 1379–21. https://doi.org/10.1007/s11276-016-1414-9

- Gherbi, C., Aliouat, Z., & Benmohammed, M. (2019, April). A novel load balancing scheduling algorithm for wireless sensor networks. Journal of Network and Systems Management, 27(2), 430–462. https://doi.org/10.1007/s10922-018-9473-0

- Ghosh, A., & Chakraborty, N. (2019, February). A novel residual energy-based distributed clustering and routing approach for performance study of wireless sensor network. International Journal of Communication Systems, 32(7), e3921. https://doi.org/10.1002/dac.3921

- Guru Prakash, B., Sukumar, R., & Balasubramanian, C. (2018, December). A swarm intelligence based clustering technique with scheduling for the amelioration of lifetime in sensor networks. Wireless Personal Communications, 103(4), 3189–3207. https://doi.org/10.1007/s11277-018-6002-0

- Harizan, S., & Kuila, P. (2018, July). Coverage and connectivity aware energy efficient scheduling in target based wireless sensor networks: An improved genetic algorithm based approach. Wireless Networks, 25(4), 1995–2011. https://doi.org/10.1007/s11276-018-1792-2

- Huang, W., Ling, Y., & Zhou, W. (2018, June). An improved LEACH routing algorithm for wireless sensor network. International Journal of Wireless Information Networks, 25(3), 323–331. https://doi.org/10.1007/s10776-018-0405-4

- Kang, J., Sohn, I., & Lee, S. H. (2018, December). Enhanced message-passing based LEACH protocol for wireless sensor networks. Sensors, 19(1). https://doi.org/10.3390/s19010075

- Kaur, T., & Kumar, D. (2018, April). Particle swarm optimization-based unequal and fault tolerant clustering protocol for wireless sensor networks. IEEE Sensors Journal, 18(11), 4614–4622. https://doi.org/10.1109/JSEN.2018.2828099

- Liew, S.-Y., Tan, C.-K., Gan, M.-L., & Goh, H. G. (2018, February). A fast, adaptive, and energy-efficient data collection protocol in multi-channel-multi-path wireless sensor networks. IEEE Computational Intelligence Magazine, 13(1), 30–40. https://doi.org/10.1109/MCI.2017.2773800

- Lin, D., & Wang, Q. (2019, April). An energy-efficient clustering algorithm combined game theory and dual-cluster-head mechanism for WSNs. IEEE Access, 7(4), 49894–49905. https://doi.org/10.1109/ACCESS.2019.2911190

- Lin, L., & Donghui, L. (2018, May). An energy-balanced routing protocol for wireless sensor network. Journal of Sensors, 1–12. https://doi.org/10.1155/2018/8505616

- Mazumdar, N., & Om, H. (2018, May). Distributed fuzzy approach to unequal clustering and routing algorithm for wireless sensor networks. International Journal of Communication Systems, 31(12), e3709. https://doi.org/10.1002/dac.3709

- Morsy, N. A., AbdelHay, E. H., & Kishk, S. S. (2018, September). Proposed energy efficient algorithm for clustering and routing in WSN. Wireless Personal Communications, 103(3), 2575–2598. https://doi.org/10.1007/s11277-018-5948-2

- Mosavvar, I., & Ghaffari, A. (2019, January). Data aggregation in wireless sensor networks using firefly algorithm. Wireless Personal Communications, 104(1), 307–324. https://doi.org/10.1007/s11277-018-6021-x

- Neamatollahi, P., Abrishami, S., Naghibzadeh, M., Moghddam, M. H. Y., & Younis, O. (2018, May). Hierarchical clustering-task scheduling policy in cluster-based wireless sensor networks. IEEE Transactions on Industrial Informatics, 14(5), 1876–7886. https://doi.org/10.1109/TII.2017.2757606

- Rais, A., Bouragba, K., & Ouzzif, M. (2019, March). Routing and clustering of sensor nodes in the honeycomb architecture. Journal of Computer Networks and Communications, 2019, 1–12. https://doi.org/10.1155/2019/4861294

- Rajpoot, P., & Dwivedi, P. (2019, March). Multiple parameter based energy balanced and optimized clustering for WSN to enhance the lifetime using MADM approaches. Wireless Personal Communications, 106(2), 1–49. https://doi.org/10.1007/s11277-019-06192-6

- Rostami, A. S., Badkoobe, M., Mohanna, F., keshavarz, H., Hosseinabadi, A. A. R., & Sangaiah, A. K. (2018, January). Survey on clustering in heterogeneous and homogeneous wireless sensor networks. The Journal of Supercomputing, 75(1), 277–323. https://doi.org/10.1007/s11227-017-2128-1

- Sert, S. A., Alchihabi, A., & Yazici, A. (2018, December). A two-tier distributed fuzzy logic based protocol for efficient data aggregation in multihop wireless sensor networks. IEEE Transactions on Fuzzy Systems, 26(6), 3615–3629. https://doi.org/10.1109/TFUZZ.2018.2841369

- Ullah, M. F., Imtiaz, J., & Maqbool, K. Q. (2019, February). Enhanced three layer hybrid clustering mechanism for energy efficient routing in IoT. Sensors, 19(4). https://doi.org/10.3390/s19040829

- Wan, R., Xiong, N., & Loc, N. T. (2018, December). An energy-efficient sleep scheduling mechanism with similarity measure for wireless sensor networks. Human-centric Computing and Information Sciences, 8(1), 1–22. https://doi.org/10.1186/s13673-018-0141-x

- Wang, L., Yan, J., Han, T., & Deng, D. (2019, May). On connectivity and energy efficiency for sleeping-schedule-based wireless sensor networks. Sensors, 19(9), 2126. https://doi.org/10.3390/s19092126

- Xujing, L., Liu, A., Xie, M., Xiong, N. N., Zeng, Z., & Cai, Z. (2018, July). Differentiated data aggregation routing scheme for energy conserving and delay sensitive wireless sensor networks. Sensors, 18(7). https://doi.org/10.3390/s18072349

- Yao, L., Zhang, T., Erbao, H., & Comsa, I.-S. (2018, May). Self-learning-based data aggregation scheduling policy in wireless sensor networks. Journal of Sensors, 1–12. https://doi.org/10.1155/2018/9647593

- Zhao, L., Shaocheng, Q., & Yufan, Y. (2018, December). A modified cluster-head selection algorithm in wireless sensor networks based on LEACH. EURASIP Journal on Wireless Communications and Networking, (1). https://doi.org/10.1186/s13638-018-1299-7