Abstract

The Secant equation has long been the foundation of quasi-Newton methods, as the updated Hessian approximations satisfy it at each iteration. Several publications have recently focused on modified versions of the Secant relation, with promising results. This study builds on that idea by deriving a Secant-like modification that uses nonlinear quantities to construct updates of the Hessian (or inverse Hessian) approximation. The method uses data from the three most recent iterates (that is, the two most recent steps) to provide an alternative to the Secant equation, with the goal of producing improved Hessian approximations that induce faster convergence to the optimal solution of the objective function. The reported results provide strong evidence that the proposed method is promising and warrants further investigation.

Public interest statement

Unconstrained optimization problems are common in industrial and engineering applications, and much research has been devoted to devising efficient methods to solve them. Secant quasi-Newton methods form the basis of many such methods because of their attractive properties. This paper derives a new nonlinear variant of the classical Secant methods that efficiently computes solutions to unconstrained optimization problems.

1. Introduction

The techniques examined in this paper are those employed in the solution of problems of the form

$$\min_{x \in \mathbb{R}^n} f(x), \qquad f:\mathbb{R}^n \to \mathbb{R}.$$

Such problems are common in all types of engineering and manufacturing projects, and quasi-Newton methods solve them iteratively. At the current iteration $i$, the gradient of $f$ at $x_i$ is denoted by $g_i = \nabla f(x_i)$, and the matrix $B_i$ approximates $G(x_i) = \nabla^2 f(x_i)$, the actual Hessian of $f$. Traditionally, the Hessian approximation for the next iteration must satisfy the so-called Secant equation

$$B_{i+1} s_i = y_i, \qquad (1)$$

for $s_i = x_{i+1} - x_i$ and $y_i = g_{i+1} - g_i$.

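The new solution approximation is defined as

$$x_{i+1} = x_i + \alpha_i d_i, \qquad (2)$$

where the direction vector $d_i$ is obtained by solving

$$B_i d_i = -g_i \quad (\text{equivalently } d_i = -H_i g_i,\ H_i = B_i^{-1}). \qquad (3)$$

The step length $\alpha_i$ in (2) is computed as an approximate solution of

$$\min_{\alpha > 0} f(x_i + \alpha d_i)$$

by means of a line search that enforces the following Wolfe-Powell conditions (Fletcher, 1987):

$$f(x_i + \alpha_i d_i) \le f(x_i) + \rho\, \alpha_i\, g_i^T d_i, \qquad (4)$$

and

$$g(x_i + \alpha_i d_i)^T d_i \ge \sigma\, g_i^T d_i, \qquad (5)$$

where $0 < \rho < \sigma < 1$. Conditions of the type (4) and (5) are the standard requirements used to guarantee convergence.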
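Assuming (4) and (5) take their usual form, $f(x_i + \alpha d_i) \le f(x_i) + \rho\,\alpha\, g_i^T d_i$ and $g(x_i + \alpha d_i)^T d_i \ge \sigma\, g_i^T d_i$ with $0 < \rho < \sigma < 1$, a trial step can be tested as in the following minimal Python sketch. The function name `satisfies_wolfe` and the defaults $\rho = 10^{-4}$, $\sigma = 0.9$ are illustrative choices, not values taken from this paper.

```python
def satisfies_wolfe(f, grad, x, d, alpha, rho=1e-4, sigma=0.9):
    """Return True if the step alpha along d satisfies conditions (4) and (5).

    f and grad take a list of floats; x and d are lists of floats.
    Requires 0 < rho < sigma < 1 (the defaults are illustrative).
    """
    dot = lambda u, v: sum(a * b for a, b in zip(u, v))
    x_new = [xi + alpha * di for xi, di in zip(x, d)]
    slope0 = dot(grad(x), d)  # g_i^T d_i, negative for a downhill direction
    sufficient_decrease = f(x_new) <= f(x) + rho * alpha * slope0  # condition (4)
    curvature = dot(grad(x_new), d) >= sigma * slope0             # condition (5)
    return sufficient_decrease and curvature
```

A step that is too long fails (4) and a step that is too short fails (5): for $f(x) = x^2$ started at $x = 1$ along $d = -1$, $\alpha = 0.5$ passes both tests, while $\alpha = 10^{-6}$ and $\alpha = 2.5$ are rejected.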

The derivation and coding of the actual Hessian matrix is susceptible to human error and demands considerable storage for large problems. The identity matrix (or a weighted version of it) is usually chosen as the initial approximation to the Hessian, and it is updated step by step using the most recently computed data. It has been demonstrated that such methods exhibit superlinear convergence under reasonable assumptions about the objective function (Fletcher, 1970, 1994; Dennis & Schnabel, 1979). When the line searches are exact, the methods converge in at most n iterations on quadratic functions (Broyden, 1970).

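The BFGS update, widely regarded as the most numerically successful formula for approximating the Hessian on a step-wise basis, is given by

$$B_{i+1} = B_i - \frac{B_i s_i s_i^T B_i}{s_i^T B_i s_i} + \frac{y_i y_i^T}{y_i^T s_i},$$

or, equivalently, for the inverse approximation $H_{i+1} = B_{i+1}^{-1}$,

$$H_{i+1} = \left(I - \frac{s_i y_i^T}{y_i^T s_i}\right) H_i \left(I - \frac{y_i s_i^T}{y_i^T s_i}\right) + \frac{s_i s_i^T}{y_i^T s_i}.$$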

The global convergence of the BFGS method for convex objective functions has been established by several authors (see Dai et al., 2002; Dennis & Schnabel, 1979; Fletcher, 1987; Shanno, 1978; Shanno & Phua, 1978; Xiao et al., 2006; Yuan et al., 2018, 2017). Dai et al. (2002) demonstrate that, when the line searches are inexact, the standard BFGS method may fail to converge on non-convex functions. Wei et al. (2004, 2006) show that the classical BFGS method can fail to converge even with accurate line searches. Li and Fukushima (2001) modified the classical BFGS formula to create a variant of the method that is globally convergent even when the objective function f is not convex.
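Both forms of the BFGS update, and the n-step termination property on quadratics mentioned earlier, can be checked numerically. The following pure-Python sketch assumes the usual Hessian form $B_{i+1} = B_i - B_i s_i s_i^T B_i/(s_i^T B_i s_i) + y_i y_i^T/(y_i^T s_i)$ and inverse form $H_{i+1} = (I - \rho_i s_i y_i^T) H_i (I - \rho_i y_i s_i^T) + \rho_i s_i s_i^T$ with $\rho_i = 1/(y_i^T s_i)$; all function names are hypothetical. The last function runs BFGS with $H_0 = I$ and exact line searches on a convex quadratic $f(x) = \tfrac12 x^T G x - b^T x$, for which the exact step has the closed form $\alpha = -g_i^T d_i / (d_i^T G d_i)$.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def matmul(A, C):
    cols = list(zip(*C))
    return [[dot(row, col) for col in cols] for row in A]

def bfgs_hessian_update(B, s, y):
    """Hessian form: B - (B s s^T B)/(s^T B s) + (y y^T)/(y^T s), B symmetric."""
    Bs = matvec(B, s)
    sBs, ys = dot(s, Bs), dot(y, s)
    n = len(s)
    return [[B[r][c] - Bs[r] * Bs[c] / sBs + y[r] * y[c] / ys
             for c in range(n)] for r in range(n)]

def bfgs_inverse_update(H, s, y):
    """Inverse form (I - rho s y^T) H (I - rho y s^T) + rho s s^T, rho = 1/(y^T s),
    expanded entrywise using the symmetry of H."""
    rho = 1.0 / dot(y, s)
    Hy = matvec(H, y)
    yHy = dot(y, Hy)
    n = len(s)
    return [[H[r][c] + (1.0 + rho * yHy) * rho * s[r] * s[c]
             - rho * (Hy[r] * s[c] + s[r] * Hy[c]) for c in range(n)]
            for r in range(n)]

def bfgs_exact_quadratic(G, b, x0, tol=1e-10):
    """BFGS with H_0 = I and exact line searches on 0.5 x^T G x - b^T x.
    Returns the final iterate and the number of iterations taken."""
    n = len(x0)
    x = list(x0)
    H = [[float(r == c) for c in range(n)] for r in range(n)]
    g = [a - c for a, c in zip(matvec(G, x), b)]      # gradient G x - b
    it = 0
    while dot(g, g) ** 0.5 > tol and it < 10 * n:
        d = [-v for v in matvec(H, g)]                 # d_i = -H_i g_i
        alpha = -dot(g, d) / dot(d, matvec(G, d))      # exact step on a quadratic
        s = [alpha * v for v in d]
        x = [a + c for a, c in zip(x, s)]
        g_new = [a - c for a, c in zip(matvec(G, x), b)]
        y = [a - c for a, c in zip(g_new, g)]
        H = bfgs_inverse_update(H, s, y)
        g, it = g_new, it + 1
    return x, it
```

Starting from $B_i = H_i^{-1}$ (here both equal to $I$), the two updated matrices remain mutual inverses and both satisfy the Secant equation; on a $3 \times 3$ positive definite quadratic the loop stops after at most three iterations, in line with the n-step termination result cited above.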

2. Variants of the Secant equation

Much research has been conducted to develop methods that outperform the classical BFGS update numerically and are globally convergent under reasonable assumptions. In this section, we provide a brief overview of some of the successful methods that have outperformed the classical BFGS. Their development is primarily based on modifications to the Secant equation (1). One particularly successful class of approaches documented in the literature is motivated by the idea of using, in the update of the Hessian approximation, more of the data available at each iteration that would otherwise be disregarded. These data include, but are not limited to, the readily computed step vectors $s$ and gradient-difference vectors $y$ in (1) from the $m$ latest iterations ($m > 1$), whereas the standard quasi-Newton methods use just $s_i$ and $y_i$ from the most recent iteration. We next present a particularly successful class of such methods, known as the multi-step methods (Ford & Moghrabi, 1993, 1994, 1996).

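Applying the Chain Rule to $g(x(\tau))$, for a differentiable path $\{x(\tau)\}$ in $\mathbb{R}^n$ (for $\tau \in \mathbb{R}$), and differentiating with respect to $\tau$, gives

$$G(x(\tau))\,\frac{dx}{d\tau} = \frac{d}{d\tau}\,g(x(\tau)).$$

In particular, if the path is chosen to pass through the most recent point (so that $x(\tau_m) = x_{i+1}$, say), then

$$G(x_{i+1})\,\frac{dx}{d\tau}\bigg|_{\tau=\tau_m} = \frac{dg}{d\tau}\bigg|_{\tau=\tau_m}. \qquad (6)$$

Equation (6), known as the “Newton Equation” (see Al-Baali, 1985; Broyden, 1970; Dennis & Schnabel, 1979), provides in general an alternative relation that the Hessian matrix $G(x_{i+1})$ satisfies. Equation (6) may be regarded as a basis for the Secant equation: when $m = 1$ and the path is the straight line through $x_i$ and $x_{i+1}$, approximating the two derivatives in (6) by $s_i$ and $y_i$ yields (1) (Broyden, 1970). In Ford and Moghrabi (1994), the path $x(\tau)$ is constructed as the polynomial interpolating the $m+1$ most recent points $\{x_{i-m+j+1}\}_{j=0}^{m}$. This polynomial ($X(\tau)$, say) is differentiated to approximate the vector $x'(\tau_m)$, and $g'(\tau_m)$ is approximated by differentiating the corresponding polynomial that interpolates the available gradient points $\{g_{i-m+j+1}\}_{j=0}^{m}$.

The scalar values $\tau_j$ correspond to the points on the curve $X(\tau)$ as follows:

$$X(\tau_j) = x_{i-m+j+1}, \qquad j = 0, 1, \ldots, m.$$

If $B_{i+1}$ is a given approximation to $G(x_{i+1})$ in (6), and we make the definitions

$$r_i \equiv X'(\tau_m) = \sum_{j=0}^{m-1} s_{i-j} \sum_{k=m-j}^{m} L_k'(\tau_m), \qquad (7)$$

$$w_i \equiv \sum_{j=0}^{m-1} y_{i-j} \sum_{k=m-j}^{m} L_k'(\tau_m), \qquad (8)$$

where $L_0, \ldots, L_m$ are the Lagrange basis polynomials for the nodes $\tau_0, \ldots, \tau_m$, the vectors $s$ and $y$ are as defined in (1), and $w_i$ represents an approximation to $g'(\tau_m)$, then it is not unreasonable (by (6)) to require the matrix $B_{i+1}$ to satisfy a relation similar to (6). We thus have

$$B_{i+1} r_i = w_i. \qquad (9)$$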

There remains the issue of choosing values for the τ parameters. One possible choice is (Ford & Moghrabi, 1993)

This choice has the merit of reflecting the distances among the iterates in the space of the variables, and it is updated for each new point.
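To make (7) and (8) concrete, the sketch below computes $r_i$ and $w_i$ as weighted sums of the stored step and gradient-difference vectors, with weights $\sum_{k=l+1}^{m} L_k'(\tau_m)$ obtained by differentiating the Lagrange interpolants at $\tau_m$. The τ rule it uses is an assumption: an "accumulative" choice in the spirit of the description above ($\tau_m = 0$, with consecutive τ values separated by the Euclidean length of the step joining the corresponding iterates); the exact formula of Ford and Moghrabi (1993) may differ in detail, for example in the norm used. All function names are hypothetical.

```python
import math

def lagrange_derivs_at_last(tau):
    """Return [L_k'(tau_m) for k = 0..m] for the Lagrange basis on the nodes tau."""
    m = len(tau) - 1
    t = tau[m]
    derivs = []
    for k in range(m + 1):
        denom = 1.0
        for j in range(m + 1):
            if j != k:
                denom *= tau[k] - tau[j]
        # derivative of prod_{j != k} (t - tau_j): sum over the dropped factor
        num = 0.0
        for drop in range(m + 1):
            if drop == k:
                continue
            prod = 1.0
            for j in range(m + 1):
                if j != k and j != drop:
                    prod *= t - tau[j]
            num += prod
        derivs.append(num / denom)
    return derivs

def accumulative_taus(steps):
    """ASSUMED tau rule: tau_m = 0 and tau_k = tau_{k+1} - ||steps[k]||,
    where steps[k] joins the iterates at tau_k and tau_{k+1} (oldest first)."""
    m = len(steps)
    tau = [0.0] * (m + 1)
    for k in range(m - 1, -1, -1):
        tau[k] = tau[k + 1] - math.sqrt(sum(v * v for v in steps[k]))
    return tau

def multistep_vectors(steps, grad_diffs, tau):
    """r_i and w_i of (7)-(8) from the last m vectors s and y (oldest first)."""
    dL = lagrange_derivs_at_last(tau)
    n = len(steps[0])
    r, w = [0.0] * n, [0.0] * n
    for pos in range(len(steps)):
        coeff = sum(dL[pos + 1:])  # sum_{k=pos+1}^{m} L_k'(tau_m)
        for c in range(n):
            r[c] += coeff * steps[pos][c]
            w[c] += coeff * grad_diffs[pos][c]
    return r, w
```

For $m = 2$ the result is a multiple of $s_i - \delta s_{i-1}$ with $\delta = (\tau_2 - \tau_1)^2 / \big((\tau_1 - \tau_0)(2\tau_2 - \tau_1 - \tau_0)\big)$. On a quadratic, where $y = Gs$ exactly, the computed $w_i$ equals $G r_i$, as the Newton equation (6) suggests.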

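The multi-step BFGS Hessian update is given by

$$B_{i+1} = B_i - \frac{B_i r_i r_i^T B_i}{r_i^T B_i r_i} + \frac{w_i w_i^T}{w_i^T r_i}, \qquad (10)$$

which is the BFGS formula with $s_i$ and $y_i$ replaced by $r_i$ and $w_i$, so that $B_{i+1} r_i = w_i$; its inverse form follows exactly as for the standard BFGS update. The main advantage of (10) is that it exploits several recent step vectors and the available gradient vectors, in contrast to the standard BFGS method, which uses only the latest step and the corresponding gradient-difference vector.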

Other non-secant methods are motivated by the fact that the classical BFGS formula is confined to a single gradient-difference vector and a single step vector in the construction of the Hessian approximation, while neglecting other readily computed and potentially useful data. Function values, for example, may prove valuable in the updating process. With the aim of building better-quality updates, variants of the classical Secant relation (1) have therefore been explored (see Ford & Moghrabi, 1996; Wei et al., 2004; Ortiz et al., 2004; G. Yuan & Wei, 2010; Yuan et al., 2010, 2017, 2018; Zhang et al., 1999). Wei et al. (2006), using Taylor's series, derived the following modification to (1):

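$$B_{i+1} s_i = y_i^{*}, \qquad y_i^{*} = y_i + A_i s_i,$$

where $f_i = f(x_i)$, and

$$A_i = \frac{2\,(f_i - f_{i+1}) + (g_{i+1} + g_i)^T s_i}{\|s_i\|^2}\, I.$$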
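Assuming the Wei et al. (2006) modification takes the commonly cited form $y_i^{*} = y_i + (\vartheta_i/\|s_i\|^2)\, s_i$ with $\vartheta_i = 2(f_i - f_{i+1}) + (g_{i+1} + g_i)^T s_i$, the modified vector costs only one extra inner product beyond the standard update. A small Python sketch (the function name `wei_modified_y` is hypothetical):

```python
def wei_modified_y(f_old, f_new, g_old, g_new, s):
    """Return (y_star, theta) with y_star = y + (theta / ||s||^2) s and
    theta = 2 (f_i - f_{i+1}) + (g_{i+1} + g_i)^T s  (assumed form)."""
    y = [a - b for a, b in zip(g_new, g_old)]
    theta = 2.0 * (f_old - f_new) + sum((a + b) * c for a, b, c in zip(g_new, g_old, s))
    ss = sum(c * c for c in s)
    y_star = [yc + theta / ss * sc for yc, sc in zip(y, s)]
    return y_star, theta
```

On a quadratic, $\vartheta_i$ vanishes identically, so $y_i^{*} = y_i$. For $f(x) = x^3$ with a step from $x = 1$ to $x = 2$, $s_i^T y_i = 9$ and $s_i^T y_i^{*} = 10$, while $s_i^T G(x_{i+1}) s_i = 12$: the function-value term moves the curvature estimate toward the Hessian at the new point.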

G. Yuan and Wei (2010) used another alternative, given as:

Some recent and well-known Secant-like methods, motivated by the need for faster-converging variants of the classical BFGS, are summarized in Table 1.

Table 1. Some modifications of Secant equation

The methods listed in Table 1 represent some of the well-known published Secant-variant formulae that, in addition to gradient and step vectors, exploit function values obtained from the latest iterate in the update of the Hessian approximation. By contrast, the methods derived in Moghrabi (2017) and Ford and Moghrabi (1993) use nonlinear interpolating polynomials and introduce a completely different Secant-like equation that uses data from the two or three most recent steps. The methods of Moghrabi (2017) perform multiple updates on each iteration, so that several Secant and Secant-variant conditions are observed: the first update obeys (1), while subsequent updates satisfy

where and

, for r and w as in (7) and (8).

A sample of the work done on the derivation of modified Secant equations is summarized in Table 1. For interested readers, many other similar methods are available in the literature (Wei et al., 2006; Xiao et al., 2006; Yuan et al., 2018; G. Yuan & Wei, 2010; Yuan et al., 2017, 2010; Zhang et al., 1999). The convergence properties of several of the approaches mentioned here have been investigated; Yuan et al. (2018), for example, demonstrate global convergence using a less stringent variant of the Powell-Wolfe line search conditions (4) and (5).

Such methods may prove useful in various domains in which the classical BFGS method has been a serious contender. One possible domain is sustainable transportation planning, which deals with minimizing air pollution, congestion and noise (Sayyadi & Awasthi, 2018). Another possible application is the bi-objective inventory model of Gharaei et al. (2020), whose goal is to optimize the lot-sizing of the replenishments while meeting stochastic constraints, by calculating the optimal number of lots and the volume of each lot. Gharaei et al. (2015) optimize incentives in single-machine scheduling in reward-driven systems so that the total reward is maximized while constraints such as the total reward limitation for earliness and learning, and independence from earliness and learning, are met. The goal there is to maximize the overall reward by using quadratic rewards for both learning and earliness, a setting in which non-secant methods may prove useful. Gharaei et al. (2019) present a mathematical model that combines the buyers' and vendors' total costs (TC) in a supply chain (SC) under penalty, green and quality control (QC) policies, as well as a VMI-CS agreement. The goal is to find the optimal batch-sizing policy in the integrated SC with the lowest TC, that is, both the number of the vendor's batches for each of the shipped product lines and the size of the batches shipped to the buyers, so as to minimize the integrated SC's TC while satisfying the stochastic constraints. Given the complexity of this optimization model, the methods proposed in this paper could be considered for solving the integrated supply chain problem. Another attractive application area is the design of bi-objective, multi-product, limited and integrated economic production quantity models that take quality control and green production policies into account.
Such models contain stochastic constraints, and the aim is to find the best total inventory cost and total profit while respecting those stochastic restrictions (see Gharaei et al., 2021). Awasthi and Omrani (2019) propose a goal-oriented strategy for prioritizing sustainable mobility projects based on fuzzy axiomatic design. The affinity diagram technique is used to establish the sustainability evaluation attributes, the criteria and alternatives are rated using the fuzzy Delphi technique, and fuzzy axiomatic design provides the final rankings of urban transportation initiatives, from which the best project(s) are picked for implementation. Sensitivity analysis is used to examine the impact of changes in the input parameters on the model's stability, and this is a possible avenue in which the method proposed in this paper could prove useful.

Next, a new method is developed that is motivated by the same concept of incorporating data available from several of the latest iterations in order to derive a new variant of the Secant equation (1).

3. A New Non-Secant equation

As with the nonlinear multi-step quasi-Newton methods (equations (6)–(10)) discussed earlier, we use data from the three most recent iterates to derive the new Secant-like relation that the updated Hessian (or its inverse) must satisfy, thus choosing $m = 2$ in (6). In particular, the points $x_{i-1}$, $x_i$ and $x_{i+1}$ are interpolated by a differentiable curve $X(\tau)$ in $\mathbb{R}^n$, $\tau \in \mathbb{R}$, such that $X(\tau_0) = x_{i-1}$, $X(\tau_1) = x_i$ and $X(\tau_2) = x_{i+1}$.

The corresponding objective function is modelled using a Taylor expansion around the point $x_{i+1}$ (which corresponds to $\tau_2$) as follows

for the τ-values chosen in (10).

Using (12), we have

where and

Choosing the Lagrange representation for the interpolating curve (obtained by setting m = 2 in (7) and (8)), we obtain

and

for

,

and, for all τ, the following quantities hold

For the τ-values selected in (10), we have

It is then not unreasonable to introduce a generalization of

obtained by introducing a scaling constant

(see Moghrabi, 2017). Such scaling provides a convenient mechanism for switching to the standard one-step Secant update by setting

. Therefore,

If this choice is used in (12) and the true Hessian at $x_{i+1}$ is replaced by its approximation $B_{i+1}$, as is done in implementations of the standard quasi-Newton methods, it is reasonable to require that

or, alternatively,

for the quantities defined in (14) and (15), respectively. It follows that

Now, from (13) and (19), we obtain

where the two quantities involved are as defined in (14) and (15), respectively.

Upon defining the quantities

and

,

from (19) we have

Equation (21) can be rewritten as

for

,

and

The equation obtained in (22) suggests a new Secant-like equation of the form

for as in (21) and

.

The computed search direction is downhill if the updated approximation is positive definite. By analogy with the standard Secant equation (1),

is positive definite if and only if

is already so and

As this cannot be guaranteed in this new formulation, (23) is replaced with

where

for some positive constant. Relation (24) is an appropriate replacement for (23), since

ensures the positive definiteness of the updated matrix.
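The necessity part of the positive-definiteness statement above can be seen in one line for the standard pair, using the inverse form of the Secant equation, $H_{i+1} y_i = s_i$:

```latex
H_{i+1} y_i = s_i
\quad\Longrightarrow\quad
y_i^T s_i \;=\; y_i^T H_{i+1}\, y_i \;>\; 0
\qquad \text{whenever } H_{i+1} \text{ is positive definite and } y_i \neq 0 .
```

Conversely, if $H_i$ is positive definite and $y_i^T s_i > 0$, the BFGS update is positive definite (Broyden, 1970). Replacing $(s_i, y_i)$ by another pair leaves the argument unchanged, so the pair used in (23) must have a positive inner product, which is the role of the safeguard (24).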

The outline of the new method is as follows.

Algorithm NMBFGS

Input: an initial point $x_0$ and a convergence threshold; set $H_0 = I$ and the iteration count $i = 0$.

Output: a (near-)optimal solution.

1. Stop if the size of the gradient, $\|g_i\|$, falls below the convergence threshold.
2. Compute $d_i = -H_i g_i$.
3. Minimize $f(x_i + \alpha d_i)$ with respect to $\alpha$ to determine $\alpha_i$, using cubic interpolation such that conditions (4) and (5) are satisfied (an O(n) operation).
4. Compute the new iterate $x_{i+1} = x_i + \alpha_i d_i$. If (24) is satisfied, update $H_i$ using (10), with $r_i$ and $w_i$ replaced by the corresponding vectors of (23); otherwise, reset the quantities in (23) so that the update satisfies the Secant condition (1) (an O(n²) operation).
5. Set $i = i + 1$ and go to step 1.

Asymptotically, the overall cost of the above method is the same as that of the standard quasi-Newton algorithm (O(n²) operations per iteration); in practice, however, the savings recorded in iteration counts and function/gradient evaluation counts distinguish the new method from others in the same category.
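The control flow of Algorithm NMBFGS can be sketched as follows. This is emphatically not the author's implementation: the new vector pair of (23) and the exact form of condition (24) cannot be recovered from this text, so the sketch substitutes, as a clearly labeled placeholder, the two-step multi-step pair $r_i = s_i - \delta s_{i-1}$, $w_i = y_i - \delta y_{i-1}$ with $\delta = \|s_i\|^2 / \big(\|s_{i-1}\|(2\|s_i\| + \|s_{i-1}\|)\big)$ (an assumed accumulative τ choice), and it assumes that (24) reads $r_i^T w_i \ge \varepsilon \|r_i\|^2$. It also replaces the safeguarded cubic interpolation of step 3 by simple step doubling and bisection. As written it therefore behaves like an MSBFGS method with a BFGS fallback; the names `nmbfgs_sketch`, `wolfe_step` and `inverse_update` are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def axpy(a, x, y):
    """Return the list a*x + y."""
    return [a * xi + yi for xi, yi in zip(x, y)]

def inverse_update(H, p, q):
    """BFGS-type inverse update with H_new q = p (requires p^T q > 0)."""
    n = len(p)
    rho = 1.0 / dot(p, q)
    Hq = [dot(row, q) for row in H]
    qHq = dot(q, Hq)
    return [[H[r][c] + (1.0 + rho * qHq) * rho * p[r] * p[c]
             - rho * (Hq[r] * p[c] + p[r] * Hq[c]) for c in range(n)]
            for r in range(n)]

def wolfe_step(f, grad, x, d, rho=1e-4, sigma=0.9, max_trials=60):
    """Step doubling plus bisection until (4) and (5) hold.
    A simple stand-in for safeguarded cubic interpolation."""
    f0, slope0 = f(x), dot(grad(x), d)
    lo, hi, alpha = 0.0, math.inf, 1.0
    for _ in range(max_trials):
        x_new = axpy(alpha, d, x)
        if f(x_new) > f0 + rho * alpha * slope0:
            hi = alpha                     # (4) fails: step too long
        elif dot(grad(x_new), d) < sigma * slope0:
            lo = alpha                     # (5) fails: step too short
        else:
            return alpha
        alpha = 2.0 * lo if math.isinf(hi) else 0.5 * (lo + hi)
    return alpha

def nmbfgs_sketch(f, grad, x0, tol=1e-6, eps=1e-6, max_iter=500):
    n = len(x0)
    x, g = list(x0), grad(x0)
    H = [[float(r == c) for c in range(n)] for r in range(n)]  # H_0 = I
    s_prev = y_prev = None
    for it in range(max_iter):
        if math.sqrt(dot(g, g)) < tol:              # step 1: convergence test
            return x, it
        d = [-dot(row, g) for row in H]             # step 2: d_i = -H_i g_i
        alpha = wolfe_step(f, grad, x, d)           # step 3: line search
        x_new = axpy(alpha, d, x)                   # step 4: new iterate
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]
        y = [a - b for a, b in zip(g_new, g)]
        p, q = s, y                                 # fallback pair: Secant equation (1)
        if s_prev is not None:
            # HYPOTHETICAL stand-in for the pair of (23): two-step vectors
            ns, nsp = math.sqrt(dot(s, s)), math.sqrt(dot(s_prev, s_prev))
            if ns > 0.0 and nsp > 0.0:
                delta = ns ** 2 / (nsp * (2.0 * ns + nsp))
                r, w = axpy(-delta, s_prev, s), axpy(-delta, y_prev, y)
                if dot(r, w) >= eps * dot(r, r):    # ASSUMED form of safeguard (24)
                    p, q = r, w
        if dot(p, q) > 0.0:                         # keep H positive definite
            H = inverse_update(H, p, q)
        x, g, s_prev, y_prev = x_new, g_new, s, y   # step 5: i = i + 1
    return x, max_iter
```

For instance, on the separable convex function $f(x) = \sum_k \big(e^{x_k} - (k+1)x_k\big)$ started from the origin, the sketch returns a point close to the minimizer $x_k = \ln(k+1)$. Swapping in the vectors of (23) and the true condition (24) is the only change the skeleton would need.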

4. Convergence properties

The convergence analysis presented next rests on the following assumptions:

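A1. The level set $D = \{x \in \mathbb{R}^n : f(x) \le f(x_0)\}$ is bounded, for a starting point $x_0$.

A2. The objective function $f$ is twice continuously differentiable on $D$, and in an open set $M$ containing $D$ there exists a constant $L > 0$ such that $\|g(x) - g(y)\| \le L\|x - y\|$, for all $x, y \in M$.

Since the sequence $\{f(x_i)\}$ is decreasing, the sequence $\{x_i\}$ generated by the new method lies in $D$, and there exists a constant $\gamma > 0$ such that:

$$\|g(x)\| \le \gamma, \quad \text{for all } x \in D.$$

A3. The objective function $f$ is uniformly convex, in that there are positive constants $l_1$ and $l_2$ such that

$$l_1\|z\|^2 \le z^T G(x)\, z \le l_2\|z\|^2$$

holds for all $x \in D$ and $z \in \mathbb{R}^n$.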

Theorem 1. Let f satisfy Assumptions A1 and A2, let $\{x_i\}$ be generated by Algorithm NMBFGS, and suppose that there exist constants

and

such that

Then the following holds

Proof: Since ,

, it is straightforward to show that (27) holds with

replacing

. Using (27) and

, we get

Consider the set of indices i for which (27) holds. By the Powell-Wolfe condition (5) and Assumption A2, we obtain

,

Using (26), one gets

By the Powell-Wolfe condition (4), it follows that

Hence,

We now prove that Algorithm NMBFGS is globally convergent.

Theorem 2. Let f satisfy Assumptions A1 and A2, and let $\{x_i\}$ be generated by Algorithm NMBFGS. Then the following holds

Proof. By Theorem 1, it suffices to prove that (29) holds for all i. Suppose, by contradiction, that this is not the case. Then there exists a positive constant ƺ such that, for all i,

It is then easy to show that

From (25) and the fact that

it follows that

Thus, from (30) and (31), we obtain

for

By Theorem 1, there exist constants

and

such that (29) holds for all i. The proof is completed on the basis of the following result (see Theorem 2.1 in Dai et al. (2002)).

If there are two positive constants and

such that for all i

and

,

then, for any positive integer j, (28) holds for no fewer than  iterations of

.

4.1. Non-asymptotic Superlinear Convergence of the New Method

The analysis presented here closely follows that of Jin and Mokhtari (2021), whose convergence results apply primarily to the standard BFGS method. We follow a similar approach to prove the superlinear convergence of NMBFGS. To do so, the following assumptions are needed:

A4. The objective function f is twice continuously differentiable. It is also strongly convex with parameter $\mu > 0$, and its gradient $g(x)$ is Lipschitz continuous with parameter $L > 0$. Thus, we have

$$\mu I \preceq G(x) \preceq L I, \quad \text{for all } x \in \mathbb{R}^n.$$

A5. The Hessian matrix G(x) of f satisfies the condition

for some constant

Theorem 3. Consider the method described in Algorithm NMBFGS and suppose the objective function f satisfies Assumptions A4 and A5. For an arbitrary value in (0, 1), choose two real parameters

> 0 and

> 0 such that

where and

If the starting point

and the initial inverse Hessian approximation

satisfy the relations

Proof. Similar to the proof in Jin and Mokhtari (2021).

Theorem 3 suggests that the sequence of points generated by NMBFGS approaches the optimal solution at a superlinear rate in a local neighbourhood of the optimal solution. The constants

and

depend on the parameters of the problem being solved. For sufficiently large

, the convergence rate is

There is a trade-off between the speed of superlinear convergence and the size of the local neighbourhood: increasing the two parameters chosen in Theorem 3 enlarges the region in which the method converges superlinearly, but it lowers the speed of convergence, since (32) increases. Therefore, selecting different values for these parameters changes both the speed and the region of superlinear convergence.

5. Numerical test results and discussions

Summaries of the numerical results are tabulated in this section, and Table 2 lists the test problems. The test set covers problems of varying dimensions, which allows performance to be examined across problem sizes, and the reported results are grouped by size. The problem sizes fall into four categories: low (2 ≤ n ≤ 20), medium (21 ≤ n ≤ 40), moderately high (41 ≤ n ≤ 1000) and high (n > 1000). The findings result from experiments on 17 different test problems with dimensions ranging from 2 to 100,000. Each of the problems in the list has been tested from four different perspectives, giving a total of 950 test items. The test problems are taken from Moré et al. (1981), Fletcher (1987), Tajadod et al. (2016) and Xiao et al. (2006).

Table 2. Test problems and dimensions

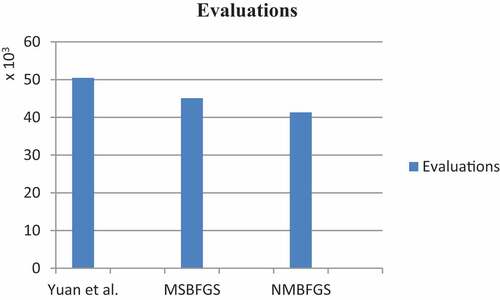

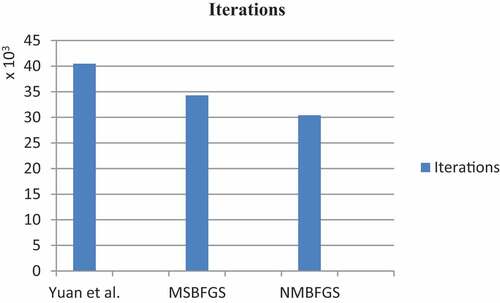

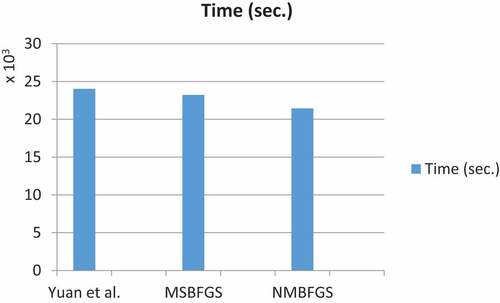

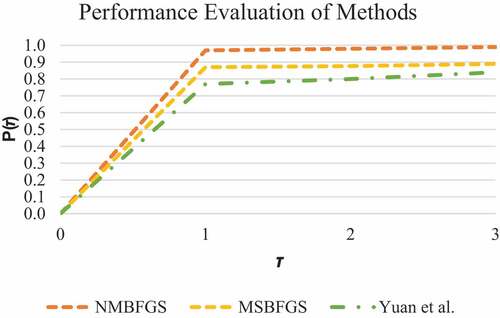

The new algorithm NMBFGS is compared with the method of Yuan et al. (2017) and with the multi-step BFGS method (MSBFGS; see Ford & Moghrabi, 1993, 1994, 1996), with Yuan's technique serving as the benchmark. Table 3 presents a summary of the overall findings, and Tables 7, 6, 5 and 4 show the results for the small, medium, moderately large and large problems, respectively. Because there is not enough room to report each dimension separately, the findings are summarized by dimension category. The scores include iteration counts, function/gradient evaluations and total execution times, and the average optimality error is also reported for each dimension category. Separate figures provide a diagrammatic summary of function/gradient evaluations, iteration counts and timing. The methods were implemented in C++ and run on a 64-bit machine with an Intel Core i7-3770 3.4 GHz CPU.

Table 3. Overall iteration, function evaluations count and timing

Table 4. Iteration, function evaluation count and timing-large problems

Table 5. Iteration, function evaluation count and timing-moderately large problems

Table 6. Iteration, function evaluations count and timing-medium problems

Table 7. Iteration, function evaluation count and timing-small problems

For all the methods tested here, the next point $x_{i+1} = x_i + \alpha_i d_i$ is obtained by employing a line search algorithm that applies safeguarded cubic interpolation with step doubling, such that conditions (4) and (5) are satisfied.
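The paper does not list its line-search code. The sketch below shows only the interpolation step at its core: given two trial steps $a_0, a_1$ with $\varphi(a) = f(x_i + a d_i)$ and $\varphi'(a)$ known at both, it returns the minimizer of the interpolating cubic, using the standard formula found, for example, in Nocedal and Wright's Numerical Optimization. Safeguarding (clamping the result into the current bracket) and the step-doubling phase are left to the caller; the function name is hypothetical.

```python
import math

def cubic_interpolation_step(a0, phi0, dphi0, a1, phi1, dphi1):
    """Minimizer of the cubic interpolating phi and phi' at trial steps a0 and a1.

    phi(a) = f(x_i + a d_i); phi0, phi1 are function values and dphi0, dphi1
    are directional derivatives. Returns None if the cubic has no local minimizer.
    """
    d1 = dphi0 + dphi1 - 3.0 * (phi0 - phi1) / (a0 - a1)
    disc = d1 * d1 - dphi0 * dphi1
    if disc < 0.0:
        return None  # caller should fall back to bisection or extrapolation
    d2 = math.copysign(math.sqrt(disc), a1 - a0)
    return a1 - (a1 - a0) * (dphi1 + d2 - d1) / (dphi1 - dphi0 + 2.0 * d2)
```

When $\varphi$ is itself a quadratic or a cubic, the formula is exact: for $\varphi(a) = (a - 2)^2$ with trial steps 0 and 3 it returns 2, and for $\varphi(a) = a^3 - 3a$ with trial steps 0 and 2 it returns the local minimizer 1.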

For the standard BFGS method, it is well known that, given a positive definite current approximation, a necessary and sufficient condition for the updated matrix to be positive definite, and hence for the computed search direction to be downhill, is that $s_i^T y_i > 0$ (Broyden, 1970). By analogy, for the new method NMBFGS, condition (24) is imposed to guarantee that the analogue of $s_i^T y_i$ for the vectors in (23) is “sufficiently” positive, which avoids potential numerical instability in the construction of the updated matrix. Should condition (24) fail to hold, the algorithm reverts to an MSBFGS iteration on that particular instance. An initial scaling is applied to the starting inverse Hessian approximation using the methods introduced in Shanno and Phua (1978).


The graphical results for the test problems are given in terms of the most expensive component of the minimization process, the overall number of function/gradient evaluations. That is, we depict the proportion of problems for which each method is within a factor τ of the best number of evaluations among all methods. The top curve corresponds to the method that solves the largest proportion of problems within a given factor of the best number of function evaluations. The results show that NMBFGS outperforms the other two methods in terms of iterations and evaluations, making it the best performer in this comparison.
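The curves described here match the performance profiles of Dolan and Moré (2002). A sketch under that assumption: for each method $s$ and factor $\tau$, $\rho_s(\tau)$ is the fraction of problems on which the cost of $s$ is at most $\tau$ times the best cost among all methods on that problem, with failures given infinite cost. The function name is hypothetical, and the numbers in the usage note are made up for illustration; they are not results from Tables 3–7.

```python
def performance_profile(costs, taus):
    """Dolan-More style performance profile.

    costs[s][p] is the cost (e.g. function/gradient evaluations) of method s on
    problem p, with float('inf') marking a failure. Returns profile[s][k], the
    fraction of problems on which method s is within a factor taus[k] of the best.
    """
    n_methods, n_problems = len(costs), len(costs[0])
    best = [min(costs[s][p] for s in range(n_methods)) for p in range(n_problems)]
    profile = []
    for s in range(n_methods):
        ratios = [costs[s][p] / best[p] for p in range(n_problems)]
        profile.append([sum(r <= t for r in ratios) / n_problems for t in taus])
    return profile
```

With made-up costs `[[10, 20, 30], [20, 20, float("inf")]]` and factors `[1.0, 2.0, 4.0]`, the function returns `[[1.0, 1.0, 1.0], [1/3, 2/3, 2/3]]`: the first method is best on every problem, while the second ties once, is within a factor 2 once more, and fails on the third problem.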

6. Conclusions

In this study, Secant-like approaches based on modifications to the classical Secant relation (1) are investigated, and a new non-Secant method is devised. To improve the quality of the computed Hessian approximations and, as a result, expedite convergence to a solution of a given problem, the approach employs nonlinear quantities that integrate data readily available from the three most recent iterates. The algorithm has been justified both theoretically and numerically. The primary motivation for exploring alternatives to the standard Secant equation has been to obtain better Hessian approximations that expedite the convergence of quasi-Newton methods. The convergence behaviour of the proposed algorithm has been investigated, and the numerical results reported above are promising, drawing attention to the method's potential practical significance. The findings demonstrate that data from past iterations, such as function values, gradient points and step vectors, yield practical performance gains, and hence provide an incentive to explore them further.

7. Future work

Future research ought to concentrate on modified Secant methods for various τ-parameter choices, in order to investigate how sensitive the numerical behaviour of these methods is to such choices. It would also be interesting to study whether these methods offer comparable improvements when used to solve systems of nonlinear equations, as well as their convergence characteristics in that setting.

Similar approaches are also being investigated in which the computed search direction is adjusted before the line search is performed; this strategy appears promising. A variant of the technique is also being considered in which the update of the Hessian approximation is skipped every other iteration (or more frequently). The sensitivity of the algorithmic behaviour to the accuracy of the line search also deserves investigation, as does the numerical performance of such methods when the line search is replaced by a trust-region strategy.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Issam A.R. Moghrabi

Dr. Issam Moghrabi is a Professor of MIS/CS. He laid the foundations of the MBA Program at GUST. He is a Fulbright Scholar and did postdoctoral research in Geographic Information Systems. His main research interests are mathematical optimization, management science, information retrieval and database systems. He serves as a referee for many well-known journals in his areas of interest.

References

- Al-Baali, M. (1985). New property and global convergence of the Fletcher–Reeves method with inexact line searches. IMA Journal of Numerical Analysis, 5(1), 121–124. https://doi.org/10.1093/imanum/5.1.121

- Awasthi, A., & Omrani, H. (2019). A goal-oriented approach based on fuzzy axiomatic design for sustainable mobility project selection. International Journal of Systems Science: Operations & Logistics, 6(1), 86–98. https://doi.org/10.1080/23302674.2018.1435834

- Broyden, C. G. (1970). The convergence of a class of double-rank minimization algorithms - Part 2: The new algorithm. IMA Journal of Applied Mathematics, 6(3), 222–231. https://doi.org/10.1093/imamat/6.3.222

- Dai, Y., Yuan, J., & Yuan, Y. (2002). Modified two-point step size Gradient methods for unconstrained optimization. Computational Optimization and Applications, 22(1), 103–109. https://doi.org/10.1023/A:1014838419611

- Dennis, J. E., & Schnabel, R. B. (1979). Least change Secant updates for quasi-Newton methods. SIAM Review, 21(4), 443–459. https://doi.org/10.1137/1021091

- Fletcher, R. (1970). A new approach to variable metric algorithms. The Computer Journal, 13(3), 317–322. https://doi.org/10.1093/comjnl/13.3.317

- Fletcher, R. (1987). Practical Methods of Optimization (second ed.). Wiley.

- Fletcher, R. (1994). An Overview of Unconstrained Optimization. In E. Spedicato (Ed.), Algorithms for Continuous Optimization. NATO ASI Series (Series C: Mathematical and Physical Sciences) (Vol. 434, pp. 109–143). Springer.

- Ford, J. A., & Moghrabi, I. A. R. (1993). Alternative parameter choices for multi-step quasi-Newton methods. Optimization Methods & Software, 2(3–4), 357–370. https://doi.org/10.1080/10556789308805550

- Ford, J. A., & Moghrabi, I. A. R. (1994). Multi-step quasi-Newton methods for optimization. Journal of Computational and Applied Mathematics, 50(1–3), 305–323. https://doi.org/10.1016/0377-0427(94)90309-3

- Ford, J. A., & Moghrabi, I. A. R. (1996). Using function-values in multi-step quasi-Newton methods. Journal of Computational and Applied Mathematics, 66(1–2), 201–211. https://doi.org/10.1016/0377-0427(95)00178-6

- Gharaei, A., Hoseini, S. S., & Karimi, M. (2020). Modelling and optimal lot-sizing of the replenishments in constrained, multi-product and bi-objective EPQ models with defective products: Generalised Cross Decomposition. International Journal of Systems Science: Operations & Logistics, 7(3), 262–274. https://doi.org/10.1080/23302674.2019.1574364

- Gharaei, A., Naderi, B., & Mohammadi, M. (2015). Optimization of rewards in single machine scheduling in the rewards-driven systems. Management Science Letters, 5(6), 629–638.

- Gharaei, A., Naderi, B., & Mohammadi, M. (2019). An integrated multi-product, multi-buyer supply chain under penalty, green, and quality control polices and a vendor managed inventory with consignment stock agreement: The outer approximation with equality relaxation and augmented penalty algorithm. Applied Mathematical Modelling, 69, 223–254. https://doi.org/10.1016/j.apm.2018.11.035

- Gharaei, A., Shekarabi, S. A., Karimi, M., Pourjavad, E., & Amjadian, A. (2021). An integrated stochastic EPQ model under quality and green policies: Generalised cross decomposition under the separability approach. International Journal of Systems Science: Operations & Logistics, 8(2), 119–131. https://doi.org/10.1080/23302674.2019.1656296

- Hassan, B., & Ghada, M. (2020). A new quasi-newton equation on the gradient methods for optimization minimization problem. Indonesian Journal of Electrical Engineering and Computer Science, 19(2), 737–744. https://doi.org/10.11591/ijeecs.v19.i2.pp737-744

- Hassan, B., & Mohammed, W. T. (2020). A new variants of quasi-newton equation based on the quadratic function for unconstrained optimization. Indonesian Journal of Electrical Engineering and Computer Science, 19(2), 701–708. https://doi.org/10.11591/ijeecs.v19.i2.pp701-708

- Hassan, B. (2019). A new Quasi-Newton Updating formula based on the new quasi-Newton equation. Numerical Algebra Control and Optimization, 4(1), 63–72.

- Hassan, B. (2020). A new type of quasi-Newton updating formulas based on the new quasi-Newton equation. Numerical Algebra, Control and Optimization, 10(2), 227–235. https://doi.org/10.3934/naco.2019049

- Jin, Q., & Mokhtari, A. (2021). Non-asymptotic superlinear convergence of standard quasi-Newton methods. arXiv preprint arXiv:2003.13607. https://arxiv.org/pdf/2003.13607v1.pdf

- Li, D., & Fukushima, M. (2001). A modified BFGS method and its global convergence in nonconvex minimization. Journal of Computational and Applied Mathematics, 129(1–2), 15–35. https://doi.org/10.1016/S0377-0427(00)00540-9

- Moghrabi, I. A. R. (2017). Implicit extra-update multi-step quasi-newton methods. International Journal of Operational Research, 28(2), 69–81. https://doi.org/10.1504/IJOR.2017.081472

- Moré, J. J., Garbow, B. S., & Hillstrom, K. E. (1981). Testing unconstrained optimization software. ACM Transactions on Mathematical Software, 7(1), 17–41. https://doi.org/10.1145/355934.355936

- Ortiz, F., Simpson, J. R., Pignatiello, J. J., & Heredia-Langner, A. (2004). A genetic algorithm approach to multiple-response optimization. Journal of Quality Technology, 36(4), 432–450. https://doi.org/10.1080/00224065.2004.11980289

- Sayyadi, R., & Awasthi, A. (2018). A simulation-based optimisation approach for identifying key determinants for sustainable transportation planning. International Journal of Systems Science: Operations & Logistics, 5(2), 161–174. https://doi.org/10.1080/23302674.2016.1244301

- Shanno, D. F., & Phua, K. H. (1978). Matrix conditioning and nonlinear optimization. Mathematical Programming, 14(1), 149–160. https://doi.org/10.1007/BF01588962

- Shanno, D. F. (1978). On the convergence of a new conjugate gradient algorithm. SIAM Journal on Numerical Analysis, 15(6), 1247–1257. https://doi.org/10.1137/0715085

- Tajadod, M., Abedini, M., Rategari, A., & Mobin, M. (2016). A comparison of multi-criteria decision making approaches for maintenance strategy selection (a case study). International Journal of Strategic Decision Sciences, 7(3), 51–56. https://doi.org/10.4018/IJSDS.2016070103

- Wei, Z., Li, G., & Qi, L. (2004). The superlinear convergence of a modified BFGS-type method for unconstrained optimization. Computational Optimization and Applications, 29(3), 315–332. https://doi.org/10.1023/B:COAP.0000044184.25410.39

- Wei, Z., Li, G., & Qi, L. (2006). New quasi-Newton methods for unconstrained optimization problems. Applied Mathematics and Computation, 175(2), 1156–1188. https://doi.org/10.1016/j.amc.2005.08.027

- Xiao, Y. H., Wei, Z. X., & Zhang, L. (2006). A modified BFGS method without line searches for nonconvex unconstrained optimization. Advances in Theoretical and Applied Mathematics, 1(2), 149–162. https://www.ripublication.com/Volume/atamv1n2.htm

- Yuan, G., Sheng, Z., Wang, B., Hu, W., & Li, C. (2018). The global convergence of a modified BFGS method for nonconvex functions. Journal of Computational and Applied Mathematics, 327(1), 274–294. https://doi.org/10.1016/j.cam.2017.05.030

- Yuan, G., Wei, Z., & Lu, X. (2017). Global convergence of BFGS and PRP methods under a modified weak Wolfe–Powell line search. Applied Mathematical Modelling, 47(2), 811–825. https://doi.org/10.1016/j.apm.2017.02.008

- Yuan, G., Wei, Z., & Wu, Y. (2010). Modified limited memory BFGS method with non-monotone line search for unconstrained optimization. Journal of the Korean Mathematical Society, 47(4), 767–788. https://doi.org/10.4134/JKMS.2010.47.4.767

- Yuan, G., & Wei, Z. (2010). Convex minimizations. Computational Optimization and Applications, 47(1), 237–255. https://doi.org/10.1007/s10589-008-9219-0

- Zhang, J. Z., Deng, N. Y., & Chen, L. H. (1999). Quasi-Newton equation and related methods for unconstrained optimization. Journal of Optimization Theory and Applications, 102(1), 147–167. https://doi.org/10.1023/A:1021898630001