Abstract

Vision-Guided Robotic (VGR) systems are an essential aspect of modern intelligent robotics. VGR is rapidly transforming manufacturing processes by making robots highly adaptable and intelligent while reducing cost and complexity. For any sensor-based intelligent robot, vision-based planning is one of the most prominent steps, followed by controlled actions using visual feedback. To develop robust vision-based autonomous systems for robotic applications, path planning and localization can be implemented along with Visual Servoing (VS) for robust feedback control. In the available literature, most reviews focus on a particular module of autonomous systems, such as path planning, motion planning strategies, or Visual Servoing techniques. This paper presents an overall review of the different modules in vision-guided robotic systems, giving researchers broader and more in-depth knowledge of the modules that make up a vision-guided autonomous system. The review also covers the vision sensors commonly used in industry, including their characteristics and applications. In total, 227 research papers on path planning and vision-based control algorithms are reviewed, together with recent intelligent techniques based on optimization and learning-based approaches. A graphical analysis illustrating the advancement of research in vision-based robotics using Artificial Intelligence (AI) is also discussed. Lastly, the paper concludes by discussing some of the research gaps, challenges, and future directions in vision-based planning and control.

PUBLIC INTEREST STATEMENT

Nowadays, robots are considered an important element of society because they can replace humans in basic and dangerous activities. Among them, Vision-Guided Robots (VGR) are an essential aspect of modern intelligent robotics: they can navigate in any environment using visual feedback, thereby increasing productivity and efficiency. The authors highlight the important layers that exist in any vision-guided robot and focus on state-of-the-art techniques and developments from the last 5 years. The goal of this review paper is to help researchers understand the basic concepts of vision-guided robots and the gaps that currently exist in this field. The evolution of research in vision-guided robots is illustrated through graphical analysis. Finally, the future scope of this study is also highlighted. VGR can help make manufacturing processes highly adaptable while reducing cost and complexity. The research activities described are currently being applied in real-time industrial applications.

1. Introduction

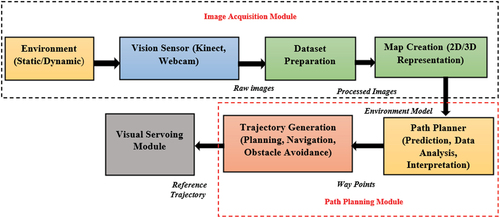

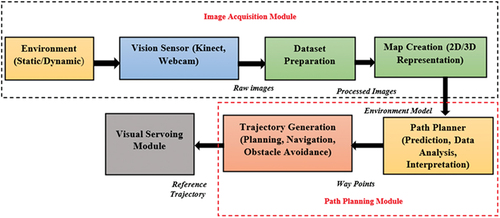

Nowadays, robots are considered an important element of society because they can replace humans in basic and dangerous activities. Among them, vision-guided robots are commonly used in industry, where they navigate using the feedback obtained from a vision sensor. This feedback increases productivity and makes the robots highly adaptable and robust. A vision-guided system is built from several modules, namely perception, localization, path planning, and control. As far as robot planning is concerned, it is difficult for a robot to react to sudden environmental changes or to avoid obstacles, whereas humans achieve these tasks easily (Pandey et al., 2017). In robot planning, a sequence of actions from the start point to the goal point is planned using planning algorithms while simultaneously avoiding obstacles (Garrido et al., 2011; Haralick & Shapiro, 1993). Designing an efficient navigation strategy is therefore the most important issue in creating intelligent robots, and robot planning using vision sensors is one of the most interesting and widest research areas, where the ultimate goal is to achieve a safe and optimal route for robot navigation. In general, vision-based robotic systems can be applied to several industrial applications, such as spray painting (Ferreira et al., 2014), pick and place, assembly tasks in the optical industry, the automotive industry, robotic welding such as pipe and spot welding (Rout et al., 2019), payload identification, and many more where efficient planning and control are of prime importance. The modules involved in vision-based autonomous robotic systems are illustrated in Figure .

1.1. Perception

In robotics, the perception stage involves sensing and reacting to changes in the surrounding environment based on features obtained from the vision sensor, which are used for decision making, planning, and operation in real-world environments. The key modules of a robot perception system are sensor data acquisition and data pre-processing using image processing, so that an environment model can be generated. Some of the applications of robotic perception are obstacle detection (Rout et al., 2019), object recognition (Bore et al., 2018), semantic segmentation (Sunderhauf et al., 2016), 3D environment representation (Saarinen et al., 2012), gesture and voice recognition (Fong et al., 2003), activity classification (Faria et al., 2015), terrain classification (Manduchi et al., 2005), road detection (Fernandes et al., 2014), and vehicle detection (Asvadi et al., 2017).

1.2. Localization

Localization refers to finding the position and orientation of the robot in space relative to the camera (Paya et al., 2017). In other words, the robot maps out the world while keeping track of where it is in that world, using bounding boxes or markers around the region of interest in an image or video sequence. The applications of localization include robot vehicle tracking, pedestrian detection (Premebida & Nunes, 2013), human detection (Sarabu & Santra, 2021), and environment change detection (Guo et al., 2020).

1.3. Path planning

The main idea behind vision-based robot planning (Mezouar & Chaumette, 2002) is to generate optimal waypoints subject to visual constraints. Once the waypoints are obtained, time-parameterized trajectories can be generated, and the robot is made to move along the planned trajectories so that the error between the reference and actual trajectories converges to zero. Path planning is commonly used in autonomous vehicles, mobile robot navigation, tracing weld lines in the welding industry, video games, and industrial automation in logistics (Dolgov et al., 2008). Path planning algorithms can be designed for both static and dynamic environments, where the optimal path is obtained from knowledge of the object positions in the environment map; handling dynamic environments matters because most obstacles in real-life situations are dynamic. Path planning is categorized based on robot workspace, obstacle, and environment, which is discussed in detail in Section 2.3.

1.4. Robot control

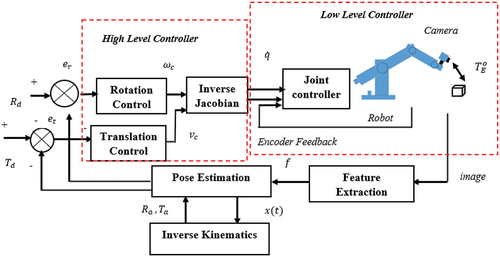

Once the path is planned, the joints of the robot are controlled to achieve the desired goals, which is also termed trajectory tracking. For the design of control techniques, the mathematical model of the robot is obtained using kinematics, inverse kinematics, or robot dynamics. There are many control algorithms for tracking the desired trajectory, namely conventional controllers such as the Proportional Integral Derivative (PID) controller, Adaptive Control schemes (Dan Z et al., 2017), Optimal Control, and Robust Control (Mittal et al., 2003; Nagrath et al., 2003), followed by intelligent controllers such as Neural Networks, Fuzzy Logic, and Genetic Algorithms (Arhin et al., 2020). A detailed survey of state-of-the-art optimization and learning-based approaches for designing the controllers is one of the contributions of this paper. Figure illustrates the different layers of any vision-based autonomous robotic system. Three layers constitute an autonomous robotic system: a high-level layer, an interface layer, and a low-level layer. This paper mainly concentrates on vision sensor-based robotic systems.
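To make the trajectory-tracking idea concrete, the following minimal Python sketch shows a discrete PID controller driving a single joint toward a reference angle. It is an illustration only: the class name `PID`, the gains, and the toy plant (joint velocity equal to the controller command) are assumptions introduced here and are not taken from any of the surveyed works.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (hypothetical gains, for illustration)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, reference: float, measured: float, dt: float) -> float:
        """Return the control command for one sampling period."""
        error = reference - measured
        self.integral += error * dt                    # accumulated error (I term)
        derivative = (error - self.prev_error) / dt    # error rate (D term)
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(steps: int = 2000, dt: float = 0.01) -> float:
    """Drive a toy joint whose velocity equals the command toward 1.0 rad."""
    pid = PID(kp=2.0, ki=0.5, kd=0.05)
    angle = 0.0
    for _ in range(steps):
        command = pid.step(1.0, angle, dt)
        angle += command * dt                          # assumed plant: d(angle)/dt = command
    return angle


final_angle = simulate()
```

In this toy setup the joint angle settles close to the 1.0 rad reference. A real manipulator would replace the assumed plant with its kinematic or dynamic model.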

Communication in any robotic system flows from the high-level layer to the low-level layer: planned tasks or human-machine commands are sent to the interface layer, which acts as middleware between the robot hardware and the environment. Based on the information received from the environment, the interface layer decides the path and identifies obstacles. The planned path is sent to the sensors installed on the robot, which in turn control the wheel velocities so that the robot moves accordingly. Though robots have become intelligent with the advancement of technology, some flaws still exist in vision-based systems, particularly when the camera is moving and in highly illuminated environments. These factors hinder the planning stage and thereby mislead the robot. In recent times, many reviews and research works have been reported on vision-based robotic systems. Pandey et al. (2017) presented an in-depth survey of intelligent techniques for the navigation of mobile robots, focussing on evolutionary, deterministic, and non-deterministic algorithms, whereas Rout et al. (2019) explained different sensors and techniques for tracking the weld seam in robotic welding applications. The authors also explained different techniques for determining weld seam position and extracting geometric features, along with their advantages and disadvantages. Mouli et al. (2013) presented a review of embedded systems for object sorting using a vision-guided robotic arm. The authors addressed past and current design methodologies, image processing techniques, modeling of the arm, and finally wireless control of robotic arms for sorting applications. Halme et al. (2018) discussed recent methods in vision-based technologies for human-robot interaction. The focus of that paper was vision-based safety and alarm systems, assessed in terms of flexibility, system speed, and collaboration level. The authors also addressed the challenges existing in human-robot collaboration along with future directions. Fauadi et al. (2018) discussed recent intelligent techniques for mobile robot navigation, focussing on three vision systems, namely monocular, stereo, and trinocular systems. Chopde et al. (2017) discussed recent developments in vision-based systems for agricultural inspection along with image processing techniques. Zafar and Mohanta (2018) presented commonly used techniques, focussing on conventional and heuristic approaches for path planning of mobile robots, whereas Campbell et al. (2020) discussed various global and local path planning algorithms for both static and dynamic environments. Gul et al. (2021) presented a consolidated review of path planning and optimization techniques for ground, aerial, and underwater vehicles along with communication strategies. Wang, Fang, et al. (2020) focussed on different Visual Servoing strategies along with trajectory planning methods based on potential fields and optimization algorithms. Dewi et al. (2018) presented a review of Visual Servoing design and control strategies for agricultural robots, whereas Huang et al. (2019) discussed Visual Servoing techniques for non-holonomic robots for two different robot-camera configurations, namely independent and coupled types. The authors proposed an active disturbance rejection control (ADRC) technique for implementing Visual Servoing with an uncalibrated camera.

From the above discussion, it can be noticed that most of the present literature is related to path planning, motion planning, calibration strategies, and Visual Servoing (VS) techniques. Consolidated reviews of vision-based intelligent robots with current state-of-the-art techniques are limited. Hence, this review attempts to present a detailed survey of the different modules, focussing on layers such as perception, localization, path planning, and control strategies in vision-based robotic systems. It elaborates on recent techniques discussed in the literature for vision-based robotic systems and provides an in-depth review of sensors commonly used for real-time applications, different calibration procedures, image processing techniques for feature extraction, and Visual Servoing algorithms for robot control. Apart from this, high-level controller design based on recent developments is addressed along with future directions and challenges. The objectives of the current review are the following:

To discuss the different modules of the perception stage, covering the sensors used in real-time applications, camera calibration techniques, and image processing techniques, and to address their advantages and disadvantages.

To elaborate on different localization methods and the advancements made in recent years.

To present some of the state-of-the-art intelligent techniques for vision-based path planning, which can be used to produce the waypoints with which the robot plans its task.

To discuss different Visual Servoing strategies along with limitations of each approach.

To discuss different high-level optimization and learning-based controllers for effective visual feedback control.

The paper is divided into six sections. The different modules of vision-based robot planning and the types of Visual Servoing are described in Section 2. This is followed by an explanation of high-level controller design for Visual Servoing in Section 3. The graphical analysis of the literature reviewed for this paper is discussed in Section 4. In Section 5, the research gaps, challenges, and future scope of vision-based planning are discussed, followed by a conclusion in Section 6.

2. Modules of vision-based autonomous robot

This paper concentrates on path planning and control using vision sensors, since they are widely used in industrial applications. Vision-based robot planning starts from the images pre-processed in the perception module, which are then needed for planning the robot path so that sequential actions can be performed in a controlled manner, as illustrated in Figure . Each module of vision-based robot planning and control is explained in detail in the following sections.

2.1. Perception module for vision-based robot planning

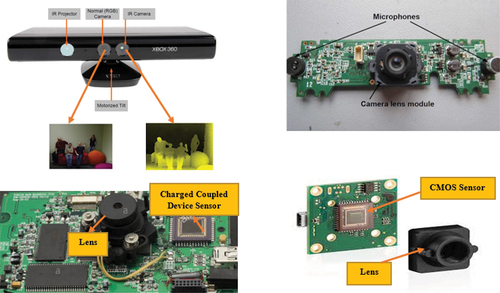

In this section, the different elements of the perception stage are discussed, covering vision sensors for acquiring data, camera calibration methods, and finally image processing for feature extraction. The main purpose of this module is to pre-process the visual information so that the robot can understand the environment. Based on this information, an environmental representation or map is created, which helps the robot decide on its motion. The vision sensors used in a robotic perception system comprise a lighting source, lenses, image sensors, controllers, vision tools, and communication protocols (D-M. Lee et al., 2004). The internal hardware structure of the vision sensor is shown in Figure .

The basic working of the vision sensor is as follows. Initially, the lens performs object inspection by looking for specific features to determine position, orientation, and accuracy. Once the field of view (FOV) is set, the image sensor converts light into an image signal, which is sent to the Analog-to-Digital Converter (ADC) for processing and optimizing the images. In general, sensors are classified as exteroceptive or proprioceptive: exteroceptive sensors measure external parameters such as the distance from the robot, and proprioceptive sensors measure internal parameters of the robot such as speed and wheel rotation. Robots are always fitted with exteroceptive sensors to correct errors. Since the camera is an exteroceptive sensor, its detailed classification based on camera layout, semiconductor technology, camera technology, and the light source used is illustrated in Figure . Environment, lighting, and modularity are some of the most important factors to be considered while selecting a vision sensor (Malamas et al., 2003) for a specific application. Apart from these, other factors such as the application, sensor type, and communication interface should be considered when choosing the right camera. The most common communication interfaces are USB 2.0, USB 3.0, GigE, Camera Link, PoE, and VGA. Vision sensors have numerous applications, such as food inspection, welding, object tracking, automatic waste sorting, assistive robots, assembly applications, and many more (Nandini et al., 2016). In recent years, many companies have begun manufacturing smart vision sensors with advanced technologies for various industrial applications. These sensors have built-in high-end processors that allow more frames to be processed per second. Table 1 lists the main categories of vision sensors with detailed specifications such as manufacturer and Frames Per Second (FPS). It also gives readers a better idea of how to choose a particular sensor for a given application.

Table 1. List of vision sensors used for robot planning

2.1.1. Camera calibration methodology

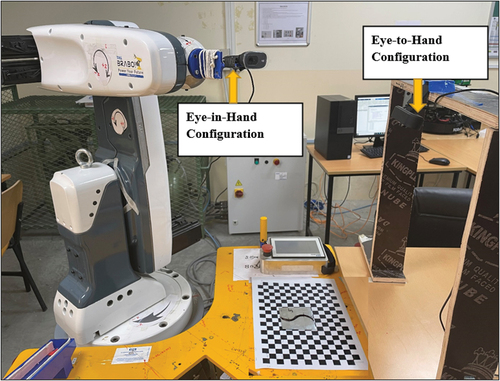

Any sensor installed on a robot needs to be calibrated to avoid measurement errors. The most commonly used sensor in robots is the camera module, which has a lens in the form of a pinhole or fisheye. The raw image obtained from the camera is not the real view, hence a transformation has to be applied so that it looks the way an ideal camera would capture it. This transformation is obtained by computing the intrinsic parameters and the distortion parameters (D’ & Gasbarro, 2017). The first and foremost step is to calibrate the camera to remove lens distortion and calculate the camera's internal and external parameters. The camera calibration parameters include the intrinsic parameters, namely focal length, lens distortion, and principal point, and the extrinsic parameters, namely rotation and translation of the camera. Based on the lens model, calibration is performed for two main models, namely the pinhole model (Rigoberto et al., 2020; Wang, Zhang et al., 2019) and the fish-eye model (Lee, 2020). Since general explanations have already been covered in the literature, this section compares calibration methods for different lens types. The camera to be calibrated is commonly placed in the eye-in-hand or eye-to-hand configuration, as shown in Figure . If the camera is placed in an eye-to-hand setup, a checkerboard can either be placed in the robot workspace or be attached to the robot end-effector. If the camera is placed in an eye-in-hand setup, a calibration board can be placed in the robot workspace or somewhere outside the workspace where it can still be seen by the camera. The transformation calculations vary with the camera placement.

Camera calibration methods are generally classified into conventional visual calibration, camera self-calibration, and active vision-based calibration (Long & Dongri, 2019). In traditional visual calibration, intrinsic and extrinsic parameters are computed. The intrinsic parameters, such as the focal length, skew, and principal point of the lens, are constant, whereas the external parameters, such as the translational and rotational components, are dynamic. Traditional calibration methods include the DLT algorithm, the Tsai calibration method (Tsai, 1987), the linear transformation method, the RAC-based calibration method, and the Zhang Zhengyou calibration method based on the chessboard (Zhang, 2000). The most widely used calibration technique is Zhang's method. In this technique, checkerboard pattern images are taken at different angles using a pinhole camera. Next, the 4 × 3 camera matrix is obtained, which combines the intrinsic and extrinsic matrices. Using these matrices, a camera-to-world transformation can be found by mapping the checkerboard corner points to the world corner points. The world coordinates $(X, Y, Z)$ are transformed to camera coordinates $(X_c, Y_c, Z_c)$ using the extrinsic matrix, whereas the camera coordinates are transformed to pixel coordinates $(x, y)$ using the intrinsic matrix. The intrinsic matrix $I$ and extrinsic matrix $E$ of the pinhole model are given by

$$I = \begin{bmatrix} f_x & 0 & 0 \\ S & f_y & 0 \\ c_x & c_y & 1 \end{bmatrix}, \qquad E = \begin{bmatrix} R \\ t \end{bmatrix}$$

where $(f_x, f_y)$ = focal length in pixels

$(c_x, c_y)$ = principal point of the lens in pixels

$S$ = skew coefficient

$R$ = 3 × 3 rotation matrix and $t$ = 1 × 3 translation vector of the camera

The camera matrix is given by

$$P = E\,I = \begin{bmatrix} R \\ t \end{bmatrix} I$$

Finally, using the camera matrix, the image points $(x, y)$ are related to the corresponding world coordinates $(X, Y, Z)$ by

$$w \begin{bmatrix} x & y & 1 \end{bmatrix} = \begin{bmatrix} X & Y & Z & 1 \end{bmatrix} P$$

where $w$ is the scaling factor. The above matrices do not account for distortion, so to represent a real camera, radial and tangential distortion have to be accounted for. Detailed explanations and derivations can be found in Zhang (2000). Camera self-calibration involves finding the unknown camera intrinsic parameters from a sequence of images and does not require any special calibration object. The commonly used self-calibration techniques are Absolute Conic (Richard Hartley & Chang, 1992) and Absolute Quadric (Trigg, 1997). In active vision-based calibration, image data is obtained from a controlled camera whose motion is known during the calibration process. Active vision calibration methods are further classified into Makonde's three orthogonal translational motion method (Trigg, 1997) and the Hartley camera pure rotation correction method (Hartley, 1997). Related research works on the different methodologies and recent developments in calibration technologies are summarized in Table 2.

Table 2. Summary of recent works collected in camera calibration methodology

The advantages and disadvantages of the different camera calibration methods are listed in Table 3.

Table 3. Comparative analysis of different camera calibration methods
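As a worked illustration of the pinhole projection described in this section, the following minimal Python sketch composes a 4 × 3 camera matrix from an extrinsic matrix (rotation stacked over translation) and an intrinsic matrix, then projects a world point to pixel coordinates. It assumes the row-vector convention implied by the 4 × 3 shape and ignores lens distortion. The function names, the focal length of 800 pixels, and the principal point (320, 240) are hypothetical values chosen only for this example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def camera_matrix(intrinsic, rotation, translation):
    """Build the 4x3 camera matrix P = E * I, with E = [R; t] (row-vector convention)."""
    extrinsic = rotation + [translation]   # 3x3 rotation stacked over 1x3 translation
    return matmul(extrinsic, intrinsic)


def project(world_point, p):
    """Map a world point (X, Y, Z) to pixel coordinates (x, y) without distortion."""
    X, Y, Z = world_point
    wx, wy, w = matmul([[X, Y, Z, 1.0]], p)[0]   # w * [x, y, 1] = [X, Y, Z, 1] * P
    return wx / w, wy / w                        # divide out the scaling factor w


# Assumed example values: fx = fy = 800 px, principal point (320, 240), zero skew.
intrinsic = [
    [800.0, 0.0, 0.0],
    [0.0, 800.0, 0.0],
    [320.0, 240.0, 1.0],
]
rotation = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # camera aligned with world
translation = [0.0, 0.0, 0.0]                                    # camera at world origin

P = camera_matrix(intrinsic, rotation, translation)
pixel = project((0.1, -0.05, 2.0), P)
```

With these assumed values the point 2 m in front of the camera lands at roughly pixel (360, 220). In practice the intrinsic and extrinsic entries would come from a calibration procedure such as the chessboard-based method discussed above.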

2.1.2. Dataset preparation for vision-based robot planning

In a robotic vision-based task, the next important step after calibration is the preparation of the dataset captured from the vision sensors. Once the images are obtained from the sensors, the raw images have to be processed using image processing algorithms to extract useful information. In vision-based path planning, the dataset is simply the collection of 2D or 3D images from which the robot has to understand the scene and move accordingly. In general, a 2D image can be defined by a two-dimensional function $f(x, y)$, where $x$ and $y$ are the spatial coordinates along the image height and width, and $f$ is the intensity of the image at that point, which ranges from 0 to 255 for an 8-bit image (Bovik, 2009d). A 3D image has an additional depth dimension, which makes it easier to find the distance to an object. Hence many upcoming research works are focussing on 3D vision tasks, which produce realistic effects more easily.

The common classification of image storage formats is given in Figure .

A binary image has two pixel values, 0 and 1, where 0 refers to black and 1 refers to white. An image in 8-bit format, also called a grayscale image, has 256 levels, where 0 stands for black, 255 stands for white, and 127 stands for gray. Finally, the 16-bit color format, also called RGB format, has 65,536 different colors, divided into three channels, namely Red, Green, and Blue (Jackson, 2016). Based on these image formats, different image processing techniques are used to extract useful features and prepare datasets suited to the algorithm, so that the robot is not misled. The common process flow for extracting features from an image acquired from the vision sensor is shown in Figure . The process involves image acquisition from a vision sensor followed by an image preprocessing step in which the images are scaled, rotated, binarized, and segmented to extract the useful features (Patel & Bhavsar, 2021; Premebida et al., 2018). Once the preprocessing is done, the datasets can be used for applications such as object tracking, motion estimation, and object recognition (Garibotto et al., 2013; Song et al., 2018). Though the process sounds simple, many factors affect the processing stage. The most common are high illumination and noise in the images. In addition, with high-resolution cameras, large datasets need a lot of memory and powerful processing devices. Working with large videos poses serious problems, for which better encryption and compression techniques are needed. Many research works are still being conducted to address these issues.
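The grayscale conversion and binarization steps mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: images are nested lists of pixels, the grayscale weights are the common ITU-R BT.601 luma coefficients, and the fixed threshold of 127 is an arbitrary choice. Practical pipelines would normally use an image processing library and an adaptive threshold.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) pixels to 8-bit gray levels (BT.601 luma weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def binarize(gray_image, threshold=127):
    """Map each gray level to 1 (white) if above the threshold, else 0 (black)."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in gray_image]


# Tiny 2x2 example image: black, white, pure red, pure green.
rgb = [
    [(0, 0, 0), (255, 255, 255)],
    [(255, 0, 0), (0, 255, 0)],
]
gray = to_grayscale(rgb)
binary = binarize(gray)
```

Here pure red maps to a dark gray level and pure green to a brighter one, so after thresholding red becomes 0 and green becomes 1. This shows how the choice of threshold decides which features survive binarization.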

In recent times, many improvements have been made over conventional image processing techniques thanks to advances in artificial intelligence algorithms. Byambasuren et al. (2020) applied image processing techniques to the quality assurance of products using an ABB IRB 120 industrial arm. The authors captured 3D data of normal and abnormal objects and used an HSV transformation with a histogram normalization technique to distinguish the objects. The main gap in that paper is that it was not tested in real time under illumination effects and other noise. Cruz et al. (2020) used a structured light camera for welding inspection of LPG vessels. The weld lines were extracted using image processing techniques such as grayscale conversion and binarization. The authors achieved a quality index of 95%-99% in practical validation. S. Xu et al. (2020) presented a review of computer vision and image processing techniques for construction sites, where monitoring the process is difficult, whereas Vijay Kakani et al. (2020) reviewed different computer vision methods and artificial intelligence techniques for the food industry. The authors also discussed the possibilities and future directions of Industry 4.0 for sustainable food production. Rodriguez Martinez et al. (2020) presented a vision-based navigation system using a photometric A* algorithm that compares previous states (images) with the current one to create an efficient visual path.

In recent years, there has been a massive increase in the adoption of computer vision tools and software in applications such as camera calibration (Hua & Zeng, 2021), feature detection/tracking (Wang, Zhang, et al., 2020), image positioning for sorting (Man Li et al., 2020), speech classification (Mustafa et al., 2020), segmentation (L. C. Santos et al., 2020), thresholding (Angani et al., 2020), quality assurance/monitoring (Byambasuren et al., 2020), and 3D image processing (Cui et al., 2020). Numerous toolkits, frameworks, and software libraries have been developed that make such systems intelligent. Some of the best open-source computer vision tools for creating an effective computer vision application are listed in Table 4, along with the languages they support (Bebis et al., 2003; Parikh, 2020). This helps readers pick the right tools based on software compatibility and the specific application.

Table 4. Common computer vision tools for robotic perception system

Finally, once the datasets are prepared and the features are extracted, the sensor measurements are used to create a map that represents the environment. The map data is then sent to the localization module to find the robot's position in the real world. From the above discussion, it can be inferred that the perception module is the key element in vision-based robotic systems, because without it the robot cannot understand its surroundings. The different elements of the perception module, namely sensors, calibration techniques, and image processing techniques, have been discussed in depth along with state-of-the-art developments made by researchers. In the future, perception can be used in Industry 4.0 to make industrial processes more fluid and flexible. Since industries are looking for reduced energy consumption and better recycling capabilities, artificial intelligence techniques and image processing can help improve the quality of production and enhance automation control.

2.2. Localization module for vision-based robot planning

Localization is the process of estimating the position and orientation of the robot using sensor information. There are two main types of localization, namely indoor localization and outdoor localization. Indoor localization is used for position estimation when no GPS information is available, whereas outdoor localization is based on a satellite navigation system. Based on the position information available, localization can be further classified into position tracking, global localization, and the kidnapped robot problem (Aguiar et al., 2020). In position tracking, the robot's initial position is known, and the objective is to track the robot at each instant using the previous position obtained from odometry, an IMU sensor, and a vision sensor. Position tracking does not perform well under large position uncertainty. In that case global localization is chosen, where the robot does not have any knowledge of its initial position. Furthermore, in some cases the robot is moved to an unidentified place, which is called the kidnapped robot problem. Hence an autonomous robot should be able to monitor its location and relocalize itself when needed by applying a relocation method. To address this issue, there are many localization algorithms: probabilistic localization strategies, automatic map-based localization, RFID-based approaches, and evolutionary techniques (Liu et al., 2007). This section explains recent works based on indoor localization methods using vision sensors, in which images of visual markers such as QR codes, AprilTags, and ARTags are used as the input (Morar et al., 2020). The works implemented in localization modules are summarized in Table .

Table 5. Common computer vision tools for robotic perception system

The above table describes various works in the field of robot localization in both indoor and outdoor environments. Many sensors are reported in the literature, most commonly IMU sensors, LiDAR, and cameras, and their measurements can also be fused using different filtering techniques. The filters commonly used for sensor fusion are the Kalman filter, the 1D complementary filter, and the Extended Kalman Filter (EKF). Other hybrid approaches using LSTM and machine learning techniques can be used to obtain efficient and accurate position estimates of the robot. The advantages and disadvantages of the different localization methods, along with their applications, are elaborated and compared in Table 6.

Table 6. Comparison of common robot localization techniques
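To illustrate the sensor fusion idea mentioned in this section, the following minimal Python sketch implements a 1D complementary filter that blends an integrated gyroscope rate with an absolute heading measurement. The scenario is an assumption made for illustration: the absolute heading is imagined to come from a camera observing a visual marker, the gyroscope carries a small constant bias, and the blending weight `alpha = 0.98` is an arbitrary but typical choice.

```python
def complementary_filter(gyro_rates, absolute_headings, dt, alpha=0.98, initial_heading=0.0):
    """Fuse gyro rates (rad/s) with absolute headings (rad) into one heading estimate.

    The gyro term follows fast motion but drifts; the absolute term is noisy or
    slow but drift-free. alpha sets how much the integrated gyro is trusted.
    """
    heading = initial_heading
    estimates = []
    for rate, absolute in zip(gyro_rates, absolute_headings):
        predicted = heading + rate * dt                      # integrate the gyro rate
        heading = alpha * predicted + (1.0 - alpha) * absolute  # pull toward absolute heading
        estimates.append(heading)
    return estimates


# Assumed scenario: robot is stationary at 0.5 rad, gyro reports a 0.01 rad/s bias.
steps = 1000
gyro = [0.01] * steps
camera_heading = [0.5] * steps
estimates = complementary_filter(gyro, camera_heading, dt=0.01)
final_heading = estimates[-1]
```

Integrating the biased gyroscope alone would drift without bound, whereas the fused estimate stays close to the 0.5 rad heading reported by the assumed camera measurement. A Kalman filter or EKF plays the same role but chooses the blending weight from the noise statistics rather than fixing it in advance.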

This section discussed in detail the recent techniques used for efficient localization, which is the most important step in robot planning. Without proper localization, the robot's position cannot be estimated, which might lead to the kidnapped robot problem. Many works have been reported on how to recover a robot that is lost in an unknown environment (Hu et al., 2020; Le et al., 2021). For such scenarios, reinforcement learning-based localization is being researched.

2.3. Vision-based path planning

In general, robot planning is the problem of deciding what the robot should try to do under constraints and uncertainties arising from mapping, localization estimates, and the outcome of actions. Planning is generally classified into task planning, path planning, and motion planning. Path planning builds on localization and is the problem of finding a safe and optimal route in Cartesian space or joint space. Figuring out how to move along the planned path by computing velocity and acceleration is termed motion planning (Patle et al., Citation2019; Tzafestas Spyros, Citation2018). In this paper, a detailed survey is presented for path planning using vision sensors, followed by motion planning in terms of Visual Servoing. Task planning is outside the scope of this paper. The information obtained from the perception section is used by the robot to plan the path for moving in its world coordinates. Path planning is generally classified into local and global based on obstacle type. Local path planning uses data acquired from vision sensors while the robot is moving, so that it can respond to changes in the environment. In global path planning, paths are generated only if the environment is known along with the start and end points (Jiexin et al., Citation2019). Path planning can be implemented for both mobile robots and manipulators to perform a specific task, but mobile robots have more degrees of freedom because of the moving base, so their path planning is more challenging than that of serial manipulators (Gasparetto et al., Citation2015). Once the path for a robot is described, the next step is to generate the trajectory, which serves as the input to the motion control system that ensures the robot follows it. Techniques such as cubic polynomials, quintic polynomials, and Bézier curves are commonly used for trajectory generation. Factors considered when finding the optimal route for robotic manipulators and mobile robot navigation include optimization of acceleration, velocity, and jerk (Lin, Citation2014; Tang et al., Citation2012). Once the trajectory is generated, the robot must follow it efficiently by controlling its pose, which is handled by the Visual Servoing module. The overall process of vision-based planning is illustrated in Figure .
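The polynomial trajectory generation mentioned above can be sketched for the simplest case of a rest-to-rest move between two waypoints. The function names and the boundary conditions (zero velocity at both ends, and additionally zero acceleration for the quintic) are assumptions made for illustration; practical planners usually chain many such segments with non-zero boundary values.

```python
def cubic_profile(q0, qf, T, t):
    """Rest-to-rest cubic: position and velocity at time t for a move q0 -> qf in T seconds."""
    tau = min(max(t / T, 0.0), 1.0)          # normalised time in [0, 1]
    s = 3 * tau**2 - 2 * tau**3              # zero velocity at both ends
    ds = (6 * tau - 6 * tau**2) / T
    return q0 + (qf - q0) * s, (qf - q0) * ds


def quintic_profile(q0, qf, T, t):
    """Rest-to-rest quintic: position, velocity, acceleration at time t."""
    tau = min(max(t / T, 0.0), 1.0)
    dq = qf - q0
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5          # zero velocity and acceleration at ends
    ds = (30 * tau**2 - 60 * tau**3 + 30 * tau**4) / T
    dds = (60 * tau - 180 * tau**2 + 120 * tau**3) / T**2
    return q0 + dq * s, dq * ds, dq * dds


if __name__ == "__main__":
    # Hypothetical joint move from 0 rad to 2 rad in 4 s, sampled every second.
    for k in range(5):
        print(k, quintic_profile(0.0, 2.0, 4.0, float(k)))
```

The quintic also constrains the acceleration at both ends, which gives a continuous acceleration profile and therefore bounded jerk, at the cost of a higher peak velocity than the cubic.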

The current methods of robot planning specified in the literature are categorized into conventional methods, which include Roadmap, Cell Decomposition, and Potential Fields, and intelligent approaches, which include the genetic algorithm, Neural Network (NN), fuzzy logic, and artificial intelligence techniques (Chaumette et al., Citation2008). Detailed accounts of path planning algorithms, challenges, and types can be found in several works (Campbell et al., Citation2020; Jiang & Ma, Citation2020; Nadhir Ab Wahab et al., Citation2020; LC Santos et al., Citation2020; H. Y. Zhang et al., Citation2018). Path planning methodologies vary with the workspace of the robot, which may involve 2D or 3D data. Earlier research mostly involved planning in a 2D environment, since it was easier for the robot to interpret and the data were easier to process. To make the analysis more representative of real environments, 3D data can be used for path planning, which is termed 3D path planning. With the advancement in vision and artificial intelligence, processing and handling 3D data has become much easier, so recent research concentrates more on 3D data analysis. Another classification is based on whether the environment model is known or unknown. In most cases, the environment details are known when path planning is performed, but in the case of an unknown environment, a reinforcement learning approach can be implemented. A further classification is based on obstacles. The detailed classification of different path planning algorithms used in the research is illustrated in
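Among the conventional methods listed above, the potential field idea is compact enough to sketch: the goal attracts the robot and nearby obstacles repel it, and the robot follows the resulting force. The quadratic attractive potential, the inverse-distance repulsive potential, and all gains (`k_att`, `k_rep`, influence distance `d0`, step size) are assumptions chosen for illustration.

```python
import math


def potential_field_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, d0=2.0):
    """Net 2D force: attraction to the goal plus repulsion from obstacles closer than d0."""
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < d0:
            # Gradient of 0.5 * k_rep * (1/d - 1/d0)^2, pointing away from the obstacle.
            mag = k_rep * (1.0 / d - 1.0 / d0) / d**2
            fx += mag * dx / d
            fy += mag * dy / d
    return fx, fy


def descend(start, goal, obstacles, step=0.1, tol=1e-2, max_iters=1000):
    """Follow the force field with fixed-gain steps until the goal is within tol."""
    path = [start]
    pos = start
    for _ in range(max_iters):
        if math.hypot(pos[0] - goal[0], pos[1] - goal[1]) < tol:
            break
        fx, fy = potential_field_force(pos, goal, obstacles)
        pos = (pos[0] + step * fx, pos[1] + step * fy)
        path.append(pos)
    return path


if __name__ == "__main__":
    route = descend((0.0, 0.0), (5.0, 5.0), obstacles=[(5.0, 0.0)])
    print(len(route), route[-1])
```

This plain gradient scheme can stall in local minima where attraction and repulsion cancel, which is one reason such methods are often combined with a global planner.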

In recent years, metaheuristic algorithms such as the genetic algorithm (GA) have been commonly used for path planning, as they help optimize several factors such as path length, path time, and path smoothness (Goldberg, Citation1989). Another intelligent technique used for path planning is the neural network, where the generated paths are fed as inputs to the neurons. As data moves from the input layer to the output layer via the hidden layers, the weights and biases are updated. To improve the training of the neural network, the number of hidden layers can be increased, and a momentum coefficient can be specified for faster convergence. Artificial Intelligence (AI) algorithms can be applied when process knowledge is unavailable, when the process is complex, or when huge datasets must be processed. Machine learning and deep learning are subsets of AI with widespread use in applications such as image classification and object recognition, grasp location prediction, bin-picking, trajectory planning, motion estimation, pose estimation, camera relocalization, and remote sensing using Convolutional Neural Networks (CNN) (Alom et al., Citation2019; Bhatt et al., 2021). A newer direction in path planning is Reinforcement Learning (RL), in which the algorithm learns by itself and defines the path based on the actions taken and the rewards obtained (Wang Liu et al., Citation2020). In RL, a path is generated by learning from mistakes, and learning improves as training is repeated. Combining deep learning and RL is also a popular area of recent research. The current research trends in vision-based path planning algorithms, based on the papers surveyed, are listed in Table 7.
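The reward-driven idea behind RL-based path planning can be sketched with tabular Q-learning on a small occupancy grid. Everything specific here is an assumption for illustration: the grid, the reward values, the purely random exploration policy, and the learning rate of 1.0 (reasonable only because this toy environment is deterministic). The surveyed works typically use deep networks rather than a table.

```python
import random

ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action, grid, goal):
    """Deterministic grid transition: returns (next_state, reward, done)."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
    if not inside or grid[nr][nc] == 1:
        return state, -5.0, False            # hit a wall or obstacle: stay in place
    if (nr, nc) == goal:
        return (nr, nc), 10.0, True
    return (nr, nc), -1.0, False             # each move costs one unit


def train_q_table(grid, goal, episodes=2000, max_steps=50, alpha=1.0, gamma=0.9, seed=0):
    """Off-policy Q-learning with random starts and a uniformly random behaviour policy."""
    rng = random.Random(seed)
    cells = [(r, c) for r in range(len(grid)) for c in range(len(grid[0])) if grid[r][c] == 0]
    starts = [s for s in cells if s != goal]
    q = {(s, a): 0.0 for s in cells for a in range(4)}
    for _ in range(episodes):
        state = rng.choice(starts)
        for _ in range(max_steps):
            action = rng.randrange(4)
            nxt, reward, done = step(state, action, grid, goal)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in range(4))
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            if done:
                break
            state = nxt
    return q


def greedy_path(q, start, grid, goal, max_len=50):
    """Roll out the learned policy by always taking the highest-valued action."""
    path, state = [start], start
    while state != goal and len(path) < max_len:
        action = max(range(4), key=lambda a: q[(state, a)])
        state, _, _ = step(state, action, grid, goal)
        path.append(state)
    return path


if __name__ == "__main__":
    grid = [[0, 0, 0, 0],
            [0, 1, 1, 0],
            [0, 0, 0, 0],
            [0, 1, 0, 0]]   # 1 marks an obstacle cell
    q = train_q_table(grid, goal=(3, 3))
    print(greedy_path(q, (0, 0), grid, (3, 3)))
```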

Table 7. Literature review of vision-based planning tasks applied to manipulators and mobile robots

The table above summarizes recent developments in vision-based path planning. Many works have been reported in deep learning and reinforcement learning. The main advantage of such methods is that they can be used even when the robot has no information about the environment, so there is no need for complex mathematical modeling. Conventional vision-based path planning needed additional feature extraction algorithms and was susceptible to odometry noise. With deep learning networks, feature extraction is performed automatically, and larger datasets can be handled without additional processing. 3D path planning is now being implemented where the environment is uncertain, such as underwater, forest, and urban areas (Yang et al., Citation2016). Unlike 2D path planning, the complexity of 3D path planning is high and grows as the kinematic constraints increase. Hence several solutions are being investigated to address this issue.

2.4. Visual servoing techniques for robot navigation

As per the process flow explained in Section 2.3, the generated waypoints and trajectories are fed as inputs to the Visual Servoing module, where robots are made to move along the planned trajectories in a controlled manner. Visual Servoing controls the robot motion by using visual information as feedback (Ke et al., Citation2017). In general, visual feedback control is achieved by combining image processing (Wang Fang et al., Citation2020), computer vision (Kanellakis et al., Citation2017), robotics (Zake et al., Citation2020), and control theory (Sarapura et al., Citation2018). The path planner module can be combined with Visual Servoing for robust feedback control because path planning algorithms take into account critical constraints and uncertainties in the system (Kazemi et al., Citation2010). The two main types of Visual Servoing are Position-Based Visual Servoing (PBVS) and Image-Based Visual Servoing (IBVS) (Palmieri et al., Citation2012). PBVS is a model-based technique in which features from the image are transformed into the corresponding robot position and orientation in Cartesian space, whereas in IBVS the features in the image space are directly compared with reference features, omitting the pose estimation module (Lippiello et al., Citation2007). PBVS can be used when only the target object needs to be tracked, provided that the target stays in the camera's field of view, whereas image-based techniques are more robust than PBVS even in the presence of camera and hand-eye calibration errors (Ghasemi et al., 2019). The manipulated variables for robot navigation control are the contours, features, and corners of the images captured by the robot sensors, whereas the control variable is the position, velocity, or angular velocity of the robot relative to the target object (Senapati et al., Citation2014).
To perform the Visual Servoing task, two main transformations are required. The first is the camera-to-world transformation, which is obtained from camera calibration using a pinhole or fisheye camera model, depending on the sensor. The second is the camera-to-base or camera-to-end-effector transformation, which is the homogeneous transformation matrix obtained from hand-eye calibration. This matrix combines the rotation and translation of the camera with respect to the world coordinates. Since the Visual Servoing task involves the movement of joints in world coordinates, the Jacobian matrix J is used to compute the relationship between end-effector velocities and joint velocities. The detailed concepts and derivation of the Visual Servoing equations can be found in (Corke., Citation1993). Various estimation techniques, such as the particle filter (Wang Sun et al., Citation2019) and Least Square estimation (Shademan et al, Citation2010), are used to compute a robust and efficient Jacobian matrix for Visual Servoing applications.
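The role of the Jacobian matrix J described above can be illustrated on a planar two-link arm, for which J is a 2x2 matrix that can be inverted in closed form. The arm geometry, link lengths, and function names are assumptions made for this sketch; a real manipulator would use its full kinematic model and usually a pseudo-inverse.

```python
import math


def planar_2r_jacobian(q1, q2, l1=1.0, l2=1.0):
    """Jacobian of a planar two-link arm: maps joint rates to end-effector (vx, vy)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def joint_velocities(jac, vx, vy, eps=1e-9):
    """Solve J * dq = v for the joint rates by inverting the 2x2 Jacobian."""
    (a, b), (c, d) = jac
    det = a * d - b * c
    if abs(det) < eps:
        raise ValueError("Jacobian is singular at this configuration")
    return ((d * vx - b * vy) / det, (-c * vx + a * vy) / det)


if __name__ == "__main__":
    J = planar_2r_jacobian(0.0, math.pi / 2)
    print(joint_velocities(J, 0.1, 0.0))   # joint rates for a 0.1 m/s move along x
```

The singularity check reflects the practical difficulty noted in the text: near singular configurations the inverse becomes ill-conditioned, which is one motivation for estimating a robust Jacobian.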

2.4.1. Position-based visual servoing (PBVS)

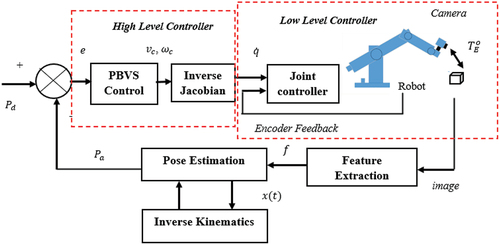

There are three basic modules in a PBVS system, namely the Pose Estimation module, the Feature Extraction module involving image processing techniques, and the Control module in which conventional or intelligent controllers are designed (Banlue et al., Citation2014), as shown in the figure above. From the calibrated vision sensor, using the camera intrinsic parameters and the geometric 3D model of the object, the pose of the robot end effector is estimated from the transformation matrix. The features obtained from the transformation are used to estimate the pose, which is a function of the end-effector position x(t) and orientation θ. The orientation is calculated from inverse kinematics or from heuristic algorithms that calculate the position of the target object x(t) with respect to the camera frame. The joint controller is designed such that the error e between the reference pose and the actual pose becomes zero. Finally, the PBVS control law calculates the linear and angular velocities, which are mapped through the inverse Jacobian matrix to obtain the joint velocities needed for the robot to move. The advantage of PBVS is that the desired tasks can be formulated in Cartesian space, where the error is straightforward to compute (Patil et al., 2017). Peng et al. (Citation2020) carried out a comparative analysis of PBVS and IBVS for industrial assembly in terms of accuracy and speed. The methods were implemented on an ABB robot, where PBVS executed faster than IBVS, and were also tested with multiple cameras. Similarly, Sharma et al. (Citation2020) implemented PBVS for a mobile robot to estimate the camera-to-robot-base transformation using the gradient descent method. Shi Chen et al (Citation2019) used PBVS for robot pose estimation to avoid obstacles. The authors used the repulsive torque method to solve the inverse kinematics of redundant manipulators and found it to be fast, flexible, and accurate.
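For orientation, one widely used textbook form of the PBVS control law is given below. It is not necessarily the formulation used in the works cited above, and all symbols are assumptions introduced here: ${}^{c^*}\mathbf{t}_c$ and $\mathbf{R}$ are the translation and rotation of the current camera frame relative to the desired one, $\theta\mathbf{u}$ is the axis-angle form of that rotation, $\lambda > 0$ is a gain, and $\mathbf{J}^{+}$ is the pseudo-inverse of the robot Jacobian expressed in the camera frame.

$$
\mathbf{e} = \begin{bmatrix} {}^{c^*}\mathbf{t}_c \\ \theta\mathbf{u} \end{bmatrix}, \qquad
\mathbf{v}_c = -\lambda\, \mathbf{R}^{\top}\, {}^{c^*}\mathbf{t}_c, \qquad
\boldsymbol{\omega}_c = -\lambda\, \theta\mathbf{u}, \qquad
\dot{\mathbf{q}} = \mathbf{J}^{+} \begin{bmatrix} \mathbf{v}_c \\ \boldsymbol{\omega}_c \end{bmatrix}
$$

With this choice the translational and rotational errors each decay exponentially at rate $\lambda$, which is why the error is described as straightforward to handle in Cartesian space.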

2.4.2. Image-Based visual servoing (IBVS)

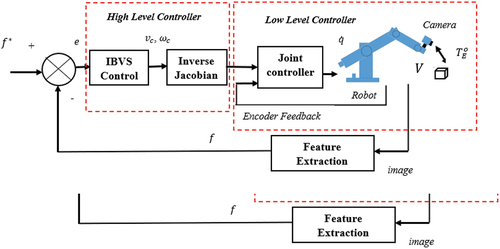

The IBVS approach, as illustrated in the figure above, extracts the features from the transformation matrix without the need for pose estimation (Keshmiri et al., Citation2016). In both PBVS and IBVS, the camera can be either fixed in a particular position (eye-to-hand configuration) or mounted on the robot end effector (eye-in-hand configuration). The Image Jacobian matrix, or interaction matrix, is calculated to provide the mapping between the camera velocity and the image feature velocity (Keshmiri et al., Citation2014). The error between the extracted features and the reference features is calculated and driven to zero. In IBVS, there is no need for a pose estimation module, and the control law computes the velocities of the robot from the linear and angular velocities of the camera by computing the Image Jacobian matrix (Cervera et al., Citation2003). Though IBVS is robust against errors, difficulties arise if the camera is disturbed or is not in a desirable position. If the joints have large rotational errors, IBVS tends to fail. Image singularities, camera retreat, and local minima are other causes of IBVS failure (Nematollahi et al., 2009; Chaumette et al., 1998; Chaumette et al., 2008). Lee et al (2021) proposed dynamic IBVS of wheeled mobile robots using a fuzzy integral sliding mode controller to track the feature points in the image plane. The transient response was improved with the help of Lyapunov stability analysis. Haviland et al (Citation2020) used IBVS for grasping, since other techniques fail when the sensor is close to the object. Using this technique, the authors were able to guide the robot to the object-relative grasp pose using camera RGB information.
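The mapping from camera velocity to image feature velocity can be made concrete with the standard interaction matrix of a single normalized image point. The sketch below assumes the point coordinates `(x, y)` are in normalized image units and that the depth `Z` is known or estimated; function names are illustrative. In the classical control law the camera velocity is then obtained as the negative gain times the pseudo-inverse of this matrix applied to the feature error.

```python
def point_interaction_matrix(x, y, Z):
    """2x6 interaction matrix of a normalized image point (x, y) at depth Z.

    Columns correspond to the camera twist (vx, vy, vz, wx, wy, wz).
    """
    return [
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ]


def feature_velocity(L, camera_twist):
    """Image-plane velocity of the feature produced by a given camera twist."""
    return [sum(l * v for l, v in zip(row, camera_twist)) for row in L]


if __name__ == "__main__":
    L = point_interaction_matrix(0.1, 0.2, 2.0)
    # Moving the camera forward along its optical axis pushes the point outward.
    print(feature_velocity(L, [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]))
    # Rotating about the optical axis moves the point tangentially.
    print(feature_velocity(L, [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]))
```

Stacking the matrices of several points gives the full Image Jacobian; its rank deficiency for particular point configurations corresponds to the image singularities mentioned above.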

2.4.3. 2.5D visual servoing

Both IBVS and PBVS, discussed in the sections above, have certain drawbacks, so researchers combined them into a hybrid approach called 2.5D Visual Servoing (VS), which potentially outperforms these basic schemes (Z. He et al., Citation2018; Malis et al., Citation1999) and which is shown in . One of the most interesting aspects of this control law is that it decouples the rotation and translation control. In this type of VS, the controller is designed such that the rotation error between Ra and Rd, as well as the corresponding translation error, becomes zero. The decoupled linear and angular velocities are fed as inputs to the interaction matrix, which gives the joint velocity as the output. This is then sent to the robot joints to move the end effector to a particular pose. 2.5D Servoing also has better stability than IBVS and PBVS, and major disadvantages such as the local minima of IBVS and the large steady-state errors of PBVS are removed (Gans et al., Citation2003). W. He et al. (Citation2019) proposed 2.5D Visual Servoing to eliminate higher-order image moments and implemented this technique for grasping textureless planar parts. The authors used cross-correlation analysis to estimate the position of the planar parts and found that the method gives stable motion control with a 3D trajectory. Q. Li et al. (Citation2020) proposed hybrid VS for harvesting robots to track the unknown fruit motion, which is taken as the feature in this work. The authors used an adaptive tracking controller since the motion was dynamic and performed a stability analysis using Lyapunov theory.

The general idea behind Visual Servoing, its types, and related works have been discussed in depth. The main differences between the various methods, consolidated from the literature survey, are described in Table 8.

Table 8. Comparison of different visual servoing techniques

2.4.4. Visual servoing based on multiple view geometry

To improve the robustness of Visual Servoing and to generate an optimal trajectory, multiple view geometry techniques can be used, in which feedback signals are processed without prior knowledge of the coordinates of the target. These techniques can also be used effectively in robot motion control to avoid classical Visual Servoing failures caused by strong illumination, calibration errors, and measurement errors. The geometric methods include the homography matrix-based approach, epipolar geometry, the trifocal tensor, and the essential matrix-based approach (Li Li et al, Citation2021).

2.4.4.1. Visual servoing based on homography matrix

The purpose of the homography matrix is to generate an ideal trajectory in image space using a projective transformation in which 3D coordinates are converted into the corresponding 2D coordinates. The scaled Euclidean reconstruction approach is used to calculate the rotation and translation of the camera path. Z. Gong et al. (Citation2020) proposed uncalibrated VS that calculates the shortest path using projective homography, which is used to remove large errors. It was tested on a Universal Robot 5 where the camera intrinsic and extrinsic parameters are unknown. Wang Zhang et al (Citation2019) used homography to improve robot grasping tasks; the authors used grasp images to train a Grasp Quality Network (GQN), and the success rate was 78% in real-world testing. Y. Qiu et al. (Citation2017) implemented homography-based VS for tracking control of mobile robots, where a video-recorded trajectory is first taken as the input features and the projective geometry of those feature points is then calculated to perform visual feedback control. To reduce the time cost of image processing and to avoid non-unique solutions, Lopez-Nicolas et al. (2010) used the homography matrix directly in the control law instead of using SVD for pose estimation. The main advantages of using homography in visual servoing are that it is the simplest algorithm and saves computational time. One limitation of this approach is that the out-of-plane coordinate is assumed to be zero, so it works only for applications involving planar objects. Another is that the method cannot be used if the target leaves the camera's field of view, so the camera must be placed where it always sees the target. This is not always practical, especially with mobile robots where the field of view is dynamic (Dey et al, Citation2012).
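The planar assumption behind homography-based servoing can be checked numerically: for a point on a plane, the Euclidean homography built from the camera rotation, translation, and plane parameters transfers its image from one view to the other. The relation H = R + t nᵀ / d used below is the standard plane-induced homography in normalized coordinates; the specific rotation, translation, and point are made-up values for illustration.

```python
import math


def mat_vec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def rot_y(angle):
    """Rotation matrix about the camera y axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def plane_homography(R, t, n, d):
    """Euclidean homography H = R + t n^T / d for the plane n . X = d in view 1."""
    return [[R[i][j] + t[i] * n[j] / d for j in range(3)] for i in range(3)]


def project(X):
    """Normalized pinhole projection of a 3D point."""
    return (X[0] / X[2], X[1] / X[2])


def transfer(H, x):
    """Map a normalized image point from view 1 to view 2 through H."""
    return project(mat_vec(H, [x[0], x[1], 1.0]))


if __name__ == "__main__":
    R, t = rot_y(0.1), [0.5, 0.0, 0.1]          # hypothetical camera motion
    n, d = [0.0, 0.0, 1.0], 2.0                 # fronto-parallel plane at depth 2
    X1 = [0.3, -0.2, 2.0]                       # a point on that plane, view-1 frame
    X2 = [a + b for a, b in zip(mat_vec(R, X1), t)]
    print(transfer(plane_homography(R, t, n, d), project(X1)), project(X2))
```

For points off the plane the two printed results no longer agree, which is the planar-object limitation noted above.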

2.4.4.2. Visual servoing based on epipolar geometry

This technique is useful when two monocular cameras or a stereo camera capture the same scene; epipolar geometry is then used to calculate the relation between the two views. When the optical center of each camera is projected onto the other camera's image plane, the epipolar points are formed (Prasad et al, Citation2018). Becerra et al (2011) proposed epipolar geometry for dynamic pose estimation of a differential drive mobile robot. The pose was further predicted using a discrete Extended Kalman Filter (EKF), and it showed better performance than the essential matrix-based approach. Chesi et al. (Citation2006) used epipolar geometry to estimate the position of apparent contours in unstructured 3D space so that the robot can navigate efficiently using VS. Mutib et al (2014) used ANN-based VS to learn the mapping between mobile drifts and visual kinematics and used multi-view epipolar geometry to compensate for the rotational errors. The main advantage of epipolar geometry is that it is easy to implement and provides the relationship between image planes without knowledge of the 3D structure. The main drawback is that it is more susceptible to noise.
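The relation between two views that epipolar geometry provides can be written as the constraint x2ᵀ E x1 = 0, where E = [t]× R is the essential matrix for calibrated cameras. The sketch below builds E from an assumed relative pose and checks the constraint for a matching and a non-matching point pair; the pose, the 3D point, and the function names are illustrative only.

```python
import math


def skew(t):
    """Cross-product matrix [t]x so that skew(t) @ v equals t x v."""
    return [[0.0, -t[2], t[1]], [t[2], 0.0, -t[0]], [-t[1], t[0], 0.0]]


def mat_mul(a, b):
    """3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rot_z(angle):
    """Rotation matrix about the camera z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def essential_matrix(R, t):
    """E = [t]x R for a second camera with X2 = R X1 + t."""
    return mat_mul(skew(t), R)


def epipolar_residual(E, x1, x2):
    """x2^T E x1 for normalized homogeneous image points; zero for a true match."""
    Ex1 = [sum(E[i][j] * x1[j] for j in range(3)) for i in range(3)]
    return sum(x2[i] * Ex1[i] for i in range(3))


if __name__ == "__main__":
    R, t = rot_z(0.2), [1.0, 0.0, 0.2]           # hypothetical relative pose
    X1 = [0.4, 0.1, 3.0]                         # 3D point in the first camera frame
    X2 = [sum(R[i][j] * X1[j] for j in range(3)) + t[i] for i in range(3)]
    x1 = [X1[0] / X1[2], X1[1] / X1[2], 1.0]
    x2 = [X2[0] / X2[2], X2[1] / X2[2], 1.0]
    E = essential_matrix(R, t)
    print(epipolar_residual(E, x1, x2))                          # close to zero
    print(epipolar_residual(E, x1, [x2[0], x2[1] + 0.05, 1.0]))  # clearly non-zero
```

Because the residual depends on small differences in image coordinates, pixel noise directly perturbs it, which is consistent with the noise sensitivity noted above.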

2.4.4.3. Visual servoing based on trifocal tensors

In general, the trifocal tensor is a 3×3×3 array that encodes the projective geometric relationship among three views; it depends only on the pose and not on the scene structure. It is also more robust to outliers. Zhang et al (Citation2019) proposed trifocal tensor-based Visual Servoing for 6 DOF manipulators. The authors built a trifocal tensor between the current and reference views to establish a geometric relationship. A visual controller with an adaptive law was then developed to compensate for the unknown distance, and stability was analysed using Lyapunov-based techniques. Aranda et al. (Citation2017) developed a 1D tensor model to estimate the angle between omnidirectional images captured at different locations. This technique provided a wide field of view with precise angles. K. K. Lee et al. (Citation2017) used trifocal tensors to estimate the 3D motion of the camera and used two Bayesian filters for point and line measurements. B. Li et al. (Citation2013) developed a 2D trifocal tensor-based visual stabilization controller, whereas Chen Jia et al. (Citation2017) designed a trifocal tensor-based adaptive controller to track the desired trajectory with key video frames as input. The main advantage is that the method is computationally less intensive; the disadvantage is that no closed-form solution is possible with nonlinear constraints.

Visual Servoing and its different approaches were discussed in depth together with their advantages and limitations. The main implication of this section is that the execution of robot tasks becomes simpler and more efficient when visual information is combined with control theory. Visual Servoing can be optimized using multiple view geometry techniques such as the homography matrix and epipolar geometry. With the help of machine learning and deep learning techniques, it also becomes easier to handle large image datasets and to achieve planning and control in complex environments.

3. High-Level intelligent controllers for visual servoing

In Visual Servoing, the controller is used to drive the error e between the reference pose or image feature and the actual pose or feature to zero, and the visual feedback loop is very effective in increasing the dexterity and flexibility of the robot. Over the past few decades, many improvements have been made in control systems applied to robotics, because path planning with only a low-level controller is not sufficient. To handle external disturbances such as motor vibration, jerking, and other environmental factors, optimization and learning-based approaches are very effective in set-point tracking and stabilization control. Earlier literature discussed different conventional approaches, but in recent years many visual control schemes have been based on optimization for better tracking and motion control of robots. Conventional controllers such as PID are commonly used due to their robustness and simplicity, but robot models are non-linear, and the model must be linearized before conventional controllers can be used. This becomes time-consuming for complex models. Hence there is a need for optimization techniques that can be combined with conventional controllers for better performance. Many hybrid schemes using learning-based approaches are also being explored. This section reviews works that use optimization and learning-based approaches to design high-level controllers for vision-based control.

3.1. Optimization approaches for visual servoing

The optimization of robot parameters is needed to perform accurate navigation while avoiding obstacles. Classical VS techniques cannot handle constraints effectively and perform unsatisfactorily. Hence VS is combined with optimization algorithms so that large displacements or rotations are handled safely. By taking into account various constraints in the robot dynamics and the environment, the robot must be able to make its own decisions, thereby increasing efficiency, reliability, and stability and reducing power consumption and vibrations. Common objectives include minimizing velocity, acceleration, and jerk, minimizing the distance between the goal and the robot, and minimizing time. The optimization problem can be single-objective or multi-objective, linear or non-linear. In a multi-objective optimization (MOO) problem, the objectives are treated independently of one another, giving a set of Pareto optimal solutions, which is termed the Pareto set. The common steps in optimization are defining the objective function; selecting the set of variables to maximize or minimize; specifying a set of constraints; and choosing an optimization algorithm such as the Genetic Algorithm, Least-Squares, Linear Quadratic Regulator, or Newton-Raphson, which is run iteratively until the stopping criterion is met. There are a few practical constraints in Visual Servoing, namely the field of view (FOV) constraint, the dynamic saturation constraint, and the kinematic saturation constraint (Adrian et al, Citation2017). For Visual Servoing, enough features must be extracted with respect to the reference image to reduce the error, but this is hindered by the limited FOV of the camera or by camera motion. To solve this issue, hybrid controllers (Gans et al, 2007) and path planning approaches (Chesi, Citation2009) can be used. Fang et al. (Citation2012) and Freda et al (2007) installed a camera on a pan-tilt platform to keep the target as close as possible to the center of the image. This configuration captures enough features of the target image so that the error signals of the high-level controller become zero. Jia and Liu (Citation2015) removed the redundant FOV by applying homography to the system states and then designed a switching controller to track the shortest path. Jing Xin et al. (Citation2021) proposed an improved tracking learning detection (TLD) algorithm that extracts the features when the target is within the FOV and predicts them when it is outside the FOV. It was experimentally tested on a MOTOMAN 6 DOF manipulator, and the authors found that it effectively solved the servoing failure due to the FOV.
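The Pareto set mentioned above for multi-objective optimization can be sketched as a simple dominance filter. The sketch assumes every objective is to be minimized and uses made-up candidate trajectories scored by (path length, travel time); it illustrates only the definition, not any particular optimizer from the surveyed works.

```python
def dominates(a, b):
    """True if a is at least as good as b in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_set(candidates):
    """Keep the candidates that no other candidate dominates (all objectives minimized)."""
    return [c for c in candidates if not any(dominates(other, c) for other in candidates)]


if __name__ == "__main__":
    # Hypothetical candidates scored as (path length in m, travel time in s).
    scores = [(10, 5), (8, 7), (12, 4), (9, 9), (8, 6)]
    print(pareto_set(scores))
```

Choosing one solution from the Pareto set still requires a preference between objectives, for example a weighting between path length and time.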

Saturation constraints commonly occur in servo motors when the input signals exceed the maximum limit, causing the controller to saturate and degrading the performance of Visual Servoing. To resolve this issue, time-varying state feedback control (Jiang et al., Citation2001), vector field orientation feedback (Michalek et al, 2010), and filter-based control (X. Chen et al., Citation2014) can be used. Y. Chen et al. (Citation2019) implemented Model Predictive Control (MPC) as a tracking controller with constraints and unknown dynamics to calculate the optimal value of the input signal. Further, a virtual control torque based on the error state of the dynamic model is regulated to eliminate the effect of saturation and nonlinearity. The servo controllers designed by Wang et al. (Citation2017, Citation2021) considered the saturation constraint in the design of MPC-based VS. Panah et al (2021) proposed optimized hybrid VS for 7 DOF robot arms to calculate a shorter camera path by eliminating convergence issues, non-optimized trajectories, and singularities. The authors used a neuro-fuzzy approach to calculate the pseudoinverse Jacobian matrix and found that it removes singularities and is robust against image noise. Ke et al (2018) implemented a stabilization control strategy for differential drive mobile robots using robust MPC along with an ancillary state feedback system. The authors used a linear variable inequality-based primal-dual neural network (LVI-PDNN) for gain scheduling and MPC to generate an optimized trajectory. J Li et al. (Citation2019) used structured light-based VS to optimize the welding pose. The method uses IBVS to extract the weld seam center as features, and a control law was formulated to optimize the orientation and position of the welding torch. The authors found that the features were matched and the camera velocities converged to zero in the presence of noise, making the method highly robust. Y Zhang et al. (Citation2017) used a Recurrent Neural Network (RNN) for kinematic control of a PUMA 560 robotic manipulator. The authors formulated a constrained optimization problem with joint and velocity limits and found it to be efficient. Z Qiu et al. (Citation2019) designed MPC for both eye-in-hand and eye-to-hand configurations considering visibility and torque constraints. The authors also designed a sliding mode observer to estimate joint velocity and found that IBVS with MPC gave satisfactory performance with minimum pixel error. The major drawback of any optimization algorithm is that as the number of control variables grows, the time taken to find the optimal values increases, and with it the computational effort. The solutions may also converge to a local optimum and fail to attain the global optimum.

3.2. Learning based approaches for visual servoing

Using artificial intelligence, sets of rules and models have been created that allow robot systems to sense and act like humans in the real world (Peters et al., Citation2011). Learning approaches for Visual Servoing increase the intelligence of robots, so that high-level and common tasks such as human-robot interaction and adapting to sudden environmental changes can be performed efficiently. The learning approaches are classified into supervised, unsupervised, and reinforcement learning. In supervised learning, both the inputs and the targets are known, whereas in unsupervised learning only the inputs are known and the targets are unknown. Reinforcement learning (RL) is used to make decisions sequentially based on rewards obtained from acting on the environment. It can be used for tasks such as trajectory optimization, motion planning, dynamic path planning, and Visual Servoing. Effective learning algorithms for Visual Servoing include deep learning, RL, and deep RL (DRL). Pandya et al. (Citation2019) proposed a Convolutional Neural Network (CNN) based Visual Servoing strategy for vehicle inspection. Shi et al. (2020) implemented decoupled Visual Servoing with a fuzzy Q-learning module designed to adjust the mixture parameters for efficient control of wheeled mobile robots. Sampedro et al. (Citation2018) used deep reinforcement learning-based Visual Servoing in object following tasks. Pedersen et al. (Citation2020) proposed DRL for grasping tasks, using CycleGAN to transfer the raw images into simulated ones and DRL for gripper pose estimation, and achieved a success rate of 83%. Bateux et al. (Citation2017) proposed deep learning-based VS for a 6 DOF robot to estimate the relative pose between two images of the same scene. This method was highly robust even under strong illumination and occlusions and achieved an error of less than 1 mm. Castelli et al. (Citation2017) proposed machine learning-based VS that uses a Gaussian Mixture Model (GMM) for industrial path following tasks. Saxena et al. (Citation2017) used a CNN for end-to-end VS in which the camera parameters and the scene are unknown. The CNN was trained on synchronized camera poses and tested on a UAV, and the authors claim that with the CNN there is no need for feature extraction or tracking. MengKang et al. (Citation2020) proposed IBVS based on an extreme learning machine (ELM) and Q-learning to solve the problem of complex modeling. The ELM is used to calculate the pseudo-inverse interaction matrix to avoid singularities, and Q-learning adaptively adjusts the servo gains. It was tested on 6 DOF robots with an eye-in-hand configuration. Ribeiro et al. (Citation2021) used a CNN for grasping tasks. The authors used the Cornell grasping dataset to train the CNN to predict the position, orientation, and opening of the robot gripper. Jin et al. (Citation2021) proposed policy-based DRL for IBVS of mobile robots to resolve the problem of feature loss and improve servo efficiency. The flowcharts of optimization-based controllers and learning-based controllers have been developed by referring to the literature (Calli B et al., Citation2018; Bateux et al., Citation2018; J Li et al., Citation2019; L Shi et al., Citation2021) and are illustrated in Figure . Though learning-based approaches can solve complex problems easily, they have a few limitations. The foremost drawback is that they require large amounts of training data to provide good results. They also require powerful processing hardware such as GPUs, which is not cost-effective. Finally, they do not learn interactively and incrementally in real time.

4. Literature review analysis in vision-based planning and control

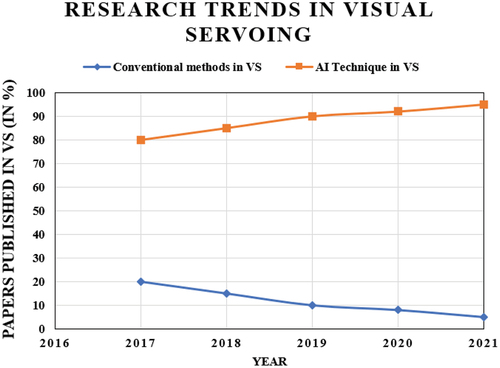

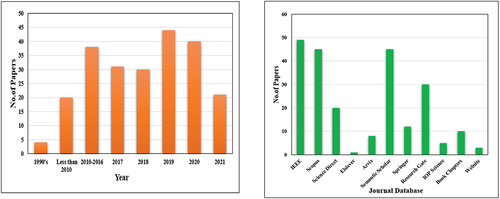

Over the past decade, many research papers have been published on vision-based path planning tasks using both conventional and intelligent techniques. This paper has reviewed in detail the steps involved in vision-based autonomous systems as a whole, covering sensors, calibration methodology, path planning, and Visual Servoing. Many papers were reviewed in the area of vision-based path planning and visual feedback controllers using optimization and learning-based approaches. Among the papers reviewed, there is huge scope for learning-based approaches, particularly deep learning and deep reinforcement learning, in both path planning and Visual Servoing. Most of the papers taken for this review are from well-reputed journals indexed in databases such as Scopus, Web of Science, and arXiv, and most are recent, published between 2017 and 2021. The number of papers investigated is illustrated graphically by year and by the journal database referred to. From Figure 15, it can be inferred that most of the papers reviewed were published between 2017–2021. It can also be noted that most of the papers chosen for the review were from the IEEE and Scopus databases. These papers were chosen because they gave better insights into the Visual Servoing concept and its various methodologies. They also gave in-depth information about the different state-of-the-art approaches followed in vision-based path planning, which helped to formulate an overall review of vision-based autonomous navigation and control. The Scopus database alone indexes over 200,000 AI-based papers published every year, and the quality of these papers is high. The need for AI in the field of robotics is therefore increasing, which motivated this overall review to help readers understand the concepts of path planning and Visual Servoing. Based on the papers reviewed, an analysis has been made of the number of papers that used conventional and AI approaches in path planning as well as in Visual Servoing. The detailed analysis of research in the field of VS is plotted year-wise in the corresponding figure.

Figure 15. (a) Year-wise graphical analysis of Visual Servoing papers published; (b) journal databases of the papers reviewed in this work.

From the above graph, it can be seen that 80% of the research work in 2017 was based on AI techniques, which rose to 98% in 2021. At present, the need for robotics in Robot Process Automation is increasing, and research based on conventional approaches is decreasing, because AI can make the robot think and behave like humans while increasing productivity and quality. Also, with developments in processing technology like GPUs, AI algorithm training and large database handling have become simpler. Furthermore, many simpler tools to perform Visual Servoing in various programming languages have been developed (Ribeiro et al., 2021). Future research is mainly concentrating on building robots for social interaction with humans and on improving the intelligence of robots, so the usage of artificial intelligence algorithms is expected to increase, diminishing the usage of conventional algorithms. Furthermore, recent research is focusing mainly on unsupervised learning strategies like Dynamic Programming, intelligent Markov Decision Processes, and Deep Reinforcement Learning. These algorithms tend to make the robot more robust under uncertain conditions, making learning better than with supervised learning algorithms. Also, three-dimensional Visual Servoing involving point cloud analysis is being researched, making computer vision tasks more realistic.

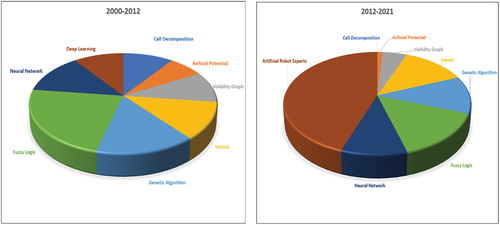

Figures 17a and 17b illustrate the trend of conventional and heuristic path planning algorithms for Visual Servoing in the periods 2000–2012 and 2012–2021, respectively. During 2000–2012, most robot planning was done using conventional algorithms. Deep learning was the least explored approach between 1980–2012, and its share increased drastically from 10% to 40% after 2012. Among the conventional algorithms, the Voronoi graph-based algorithm was very popular because it was efficient for irregular obstacle shapes. Deep learning was researched more after 2012, following the introduction of transfer learning. From 2012–2021, researchers made a paradigm shift from conventional algorithms to AI-based techniques for path planning, because these allowed the robot to navigate autonomously without hitting obstacles, thereby increasing the intelligence of the robot. AI-based techniques also circumvent the need to model the robot kinematics and dynamics using complex mathematical equations, which becomes time-consuming as the number of DOF increases. The total contribution of conventional approaches is just 19%, whereas intelligent approaches dominate with about 81%, focusing mainly on Artificial Robot Experts.

Figure 17. (a) Comparison of conventional vs AI approaches between 2000–2012 (b) comparison of conventional vs AI approaches between 2012–2021.

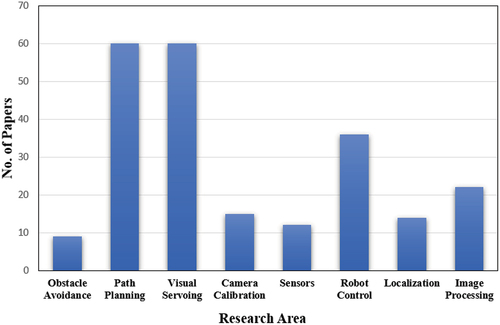

Recent research shows that artificial intelligence algorithms are dominating in almost all industries, since they perform better on larger datasets. In total, 227 papers have been reviewed to give an overall idea of how research shifted from conventional approaches to AI approaches. This section gives the reader the basic idea behind the techniques used over the past 20 years in the field of Visual Servoing and vision-based path planning. It can be noticed that before 2012, conventional approaches were mostly used for path planning and motion planning. This was the period in which robots were operated in manual mode with 100% human intervention. Researchers opted for the artificial potential field and graph-based approaches for path planning, and PID was commonly used for controller design. Although these techniques gave accurate results, they were not able to achieve autonomous behaviour. These algorithms also cannot handle complex, dynamic environments accurately or mimic realistic situations. This led researchers to shift to learning-based approaches, which could increase the intelligence of robots. Hence, after 2012, with the development of low-cost cameras and processing capabilities, AI-based approaches became popular. To handle dynamic, realistic situations, fuzzy logic, ANN, and deep learning were used to perform path planning for Visual Servoing based applications. Using these algorithms, robots are also able to plan a path even in an unknown environment. In recent times, learning-based algorithms combined with optimization and estimation techniques like MPC, GA, and the Kalman filter have been used to predict the future optimal trajectory of the robot efficiently. In the coming years, more research is expected in Reinforcement Learning combined with deep learning, which makes the robot capable of learning from mistakes without human intervention. The number of papers reviewed for each section discussed in this paper is also illustrated in the corresponding figure.
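As an illustration of the conventional planners mentioned above, the following is a minimal sketch of a 2D artificial potential field planner: the goal exerts an attractive force, each point obstacle exerts a repulsive force inside an influence radius, and the robot takes fixed-length steps along the resulting force. The function names (`apf_force`, `plan_path`), the gains, the influence radius, and the example scene are hypothetical choices for this sketch and are not taken from any of the reviewed papers.

```python
import numpy as np


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=0.5, influence=1.0):
    """Net force: attraction to the goal plus repulsion from nearby obstacles."""
    # Attractive term: negative gradient of 0.5 * k_att * ||pos - goal||^2.
    force = k_att * (goal - pos)
    for obs in obstacles:
        diff = pos - obs
        d = np.linalg.norm(diff)
        if 0.0 < d < influence:
            # Repulsive term: negative gradient of
            # 0.5 * k_rep * (1/d - 1/influence)^2.
            force += k_rep * (1.0 / d - 1.0 / influence) * diff / d ** 3
    return force


def plan_path(start, goal, obstacles, step=0.05, tol=0.1, max_iters=1000):
    """Follow the force direction in fixed-length steps until near the goal."""
    pos = np.asarray(start, dtype=float)
    goal = np.asarray(goal, dtype=float)
    obstacles = [np.asarray(o, dtype=float) for o in obstacles]
    path = [pos.copy()]
    for _ in range(max_iters):
        if np.linalg.norm(goal - pos) < tol:
            break  # close enough to the goal
        force = apf_force(pos, goal, obstacles)
        norm = np.linalg.norm(force)
        if norm < 1e-9:
            break  # zero net force: stuck in a local minimum
        pos = pos + step * force / norm
        path.append(pos.copy())
    return np.array(path)


if __name__ == "__main__":
    # Hypothetical scene: one point obstacle slightly off the straight line.
    path = plan_path((0.0, 0.0), (5.0, 0.0), [(2.5, 0.5)])
    print(len(path), path[-1])
```

The early exit on a vanishing net force reflects a well-known weakness of this family of planners: the robot can become trapped in a local minimum where attraction and repulsion cancel, which is one reason such methods struggle in complex or dynamic environments.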

5. Research gaps, challenges and future scope of vision-based tasks

The usage of robots in both homes and industry has been accelerating over the past few decades. Based on the statistics released by the International Federation of Robotics, the ratio of robots for every 10,000 human employees is increasing drastically, at a rate of 5% to 9% (Jung et al., 2020). However, many challenges remain and improvements are still needed for both industrial and personal robots, which researchers are constantly working on to change the future of manufacturing and home automation. Within robotics, the emergence of low-cost 2D and 3D cameras has made computer vision popular for tasks such as image classification, object detection, image segmentation, path planning, trajectory generation, Visual Servoing, environment mapping, and so on (Yue et al., 2021; Dulari et al., 2021; Iuliia et al., 2021; Vuuren et al., 2020). This section explains the existing challenges in various industrial sectors as well as in computer vision-based tasks, based on the survey done, and a few challenges are listed in Table 9.

Table 9. Challenges existing in vision-guided robots

The future direction of robotics in Visual Servoing is combining deep learning and reinforcement learning for better feedback control. Also, to make scene representation more realistic, analysis is shifting from two-dimensional to three-dimensional using 3D cameras. Another direction is combining robots with the Internet of Things (IoT), where robots can benefit from cloud services and global connectivity. The main challenges here are interoperability, safety, and self-adaptability, and further details can be found in (Ray et al., 2016; Razafimandimby et al., 2018). There is a need to improve coordination between humans and robots, because human intervention can reduce measurement errors and many complex tasks still require humans for coordination. Care must therefore be taken to develop a proper architecture for safe and efficient communication between them (Talebpour et al., 2019). Furthermore, effective performance evaluations for Visual Servoing need to be developed so that robots can be controlled in a better way. For such cases, robustness analysis can be done for sensor failure and sensor communication by performing prediction analysis using MPC, or by using a Markov Decision Process to restrict the movement of robots under failure conditions and take decisions accordingly (Verma et al., 2021). Optimization algorithms make the system computationally efficient with lower power consumption, and can be used for actions like task allocation, motion planning, and decision making. Other factors that need to be addressed while developing autonomous robots are collision, congestion, and deadlock. Finally, optimal techniques can be combined with 3D image processing and image reconstruction for better real-world understanding.

6. Conclusion

In recent years, there has been a huge gap between technology and industrial demands, in which the need for fully automated robots is increasing. To close this gap, industries are shifting towards the implementation of robots in intelligent manufacturing and other social activities. To satisfy industrial needs, vision-guided robots are built that are capable of moving accurately and precisely based on the visual feedback received. This in turn enables greater flexibility and higher productivity in a diverse set of manufacturing tasks. The key to successful and efficient vision-based robots is better system design, which in turn reduces cost and time. Though better technologies have emerged, there are still many issues in vision-based tasks due to illumination and occlusion, and researchers have been proposing many solutions to these issues. Many works and review papers on different methodologies and their improvements were discussed. However, very few works concentrate on understanding the basic concepts of vision-based robots. Hence, this paper aims to provide a detailed survey of the different modules of vision-based robots, which will be an eye-opener for readers. The stages covered in the discussion were perception, localization, path planning, and Visual Servoing. The various stages have been analyzed logically and technically to give clear insights into the techniques and basics involved. The paper also covers the design of high-level controllers needed for Visual Servoing using optimization and learning-based approaches, emphasizing recent approaches because many works on conventional controllers have already been published. In each stage, various state-of-the-art approaches followed in recent years were discussed. The comparison of different camera calibration methodologies, which are responsible for better data acquisition, has been elaborated. Different path planning algorithms and conventional VS schemes were discussed, and optimization of VS based on multi-view geometry was also covered. A year-wise graphical analysis has also been made, making it convenient for readers to understand the improvements in the area of Visual Servoing and path planning. Also, the journal databases referred to for this paper are illustrated using a graph to show the quality of the papers referred to in this work. Finally, the challenges that still exist in vision-guided robots and the future direction of Visual Servoing have been discussed. It can be concluded that vision sensors are a good, cost-effective solution for vision-based navigation of any robot, whether an industrial manipulator or a mobile robot. Overall vision-based robot planning can be used for obstacle detection for safe human-robot interaction, 3D environmental mapping, and SLAM-based path planning. From the review, it can be inferred that as industries shift towards AI and smarter technologies, vision-based robots have more scope, and more robust algorithms will be built that can do multiple tasks like human beings and also safely interact with humans. Many technologies involving reinforcement learning are emerging, which make the robot behave and take decisions like a human. The future scope of review works includes a detailed review of behavioral cloning of robots, 3D Visual Servoing, deep reinforcement learning, and SLAM-based techniques.

Acknowledgements

The authors are immensely grateful to the authorities of Birla Institute of Technology and Science (BITS) Pilani, Dubai Campus, for their support throughout this research work.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Abhilasha Singh

Abhilasha Singh has been pursuing a full-time Ph.D. degree in Electrical and Electronics Engineering at BITS Pilani, Dubai Campus, since 2018. Her current research focuses on Robotics, Computer Vision, and Intelligent Control Techniques.

References

- Adrian, O. D., Serban, O. A., & Niculae, M. (2017). Some proper methods for optimization in robotics. International Journal of Modeling and Optimization, 7(4), 188–44. https://doi.org/10.7763/IJMO.2017.V7.582

- Aguiar, A. S., Dos Santos, F. N., Cunha, J. B., Sobreira, H., & Sousa, A. J. (2020). Localization and mapping for robots in agriculture and forestry: A survey. Robotics, 9(4), 1–23. https://doi.org/10.3390/robotics9040097

- Al Mutib, K., Mattar, E., Alsulaiman, M., Ramdane, H., & ALDean, M. (2014). Neural network vision-based visual servoing and navigation for KSU-IMR mobile robot using epipolar geometry. The International Journal of Soft Computing and Software Engineering, 3(3), 1–8. https://doi.org/10.7321/jscse.v3.n3.136