Abstract

Architectural visualization draws on diverse content built from virtual structures and environments, like those in games and films, and on fundamental values conveyed through visual design communication. Because its information is delivered through tangible media configured independently in real time, such content demands an extremely high level of presence. This study aims to improve presence in real-time architectural visualization content and proposes detailed guidelines for the production process. To this end, the factors necessary for improving presence were first analyzed. The findings were then used as a basis for proposing principles of realistic expression suited to a real-time environment.

PUBLIC INTEREST STATEMENT

Virtual reality has been actively studied for applications in the military, medicine, education, and advertising, and graphics-related computer technology has advanced accordingly. Artists can efficiently produce virtual reality content using advanced authoring tools; however, research on how objects are visually perceived and expressed is lacking. This study aims to improve the quality of the virtual reality content produced in various fields by providing specific guidelines for its visual-art aspects. To this end, it investigates the factors that determine presence in virtual reality and how they can be improved, and it analyzes new technologies used to create virtual environments. This research aims to meet users' growing expectations and contribute to the advancement of virtual reality.

1. Introduction

Architectural visualization is the realistic visualization of structural designs before construction, and it requires far higher presence than other fields that produce content for tangible media. Recent advances in 3D production software and real-time game-engine rendering have made it easier for artists to configure virtual environments (VEs). However, repetitive mechanical tasks and tight schedules have diverted attention from virtual reality (VR) as a medium in its own right, resulting in a large volume of mass-produced content.

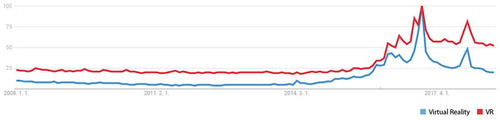

VR is a tangible medium that enables deep and efficient delivery of information. In the hype cycle, VR technology has passed through the trough of disillusionment and entered the slope of enlightenment. Several business models are being developed as the now-proven technology becomes widespread, and a growing number of researchers are exploring its applications. This trend is supported by the steady increase in VR-related searches over the past decade (Figure 1).

As VR gains market popularity and is increasingly applied in entertainment and other fields, presence, a central component of the VR experience, merits closer study. VR technology overcomes temporal and spatial limitations: it can create digital archives of historical ruins or cultural heritage, preserve the present, or imagine and create the future. Human-created content built on a virtual world can, through imagination, make the impossible possible by surpassing human limits and temporal and spatial restrictions. Efforts to improve presence and narrow the gap between imagination and reality in this process are ongoing. Recent technological advances have achieved an unprecedented level of realism, with applications in films, games, exhibitions, and education (Badni, 2011; Mosaker, 2001; Butt, 2018; Bittner, 2018).

McLuhan (1964) described media as "extensions of man"; in this view, the development of various media-derived digital tools contributes to human evolution. In architectural design, digital tools have grown from 2D drafting on an orthogonal coordinate system into 3D design tools. Moreover, media based on digital tools have advanced beyond simple simulation of reality into the realm of "hyperreality".

The American psychologist Abraham Maslow (1968) proposed a hierarchy of needs to explain human potential and termed the motivation toward continuous growth beyond basic needs "metamotivation". Hyperreality can be regarded as a product of metamotivation.

This paper treats VR as a tangible medium, examines the factors of presence that improve information delivery, and constructs a methodology applicable to real-time VEs. Guidelines for effective realistic expression are then explored through a technical understanding of current computer graphics, from which principles of realistic expression are established.

2. Presence

2.1. Definition of presence

Discussions of immersion and of the distance between the virtual world and reality have continued since the emergence of media. Since the birth of VR, presence has been defined from various perspectives. These definitions are not uniform and include telepresence, virtual presence, and mediated presence. Lee (2004a) reviewed the origins of these terms, focusing on their differences, and argued that "presence" is the most suitable term for expanding the research community.

Research on presence began with studies of telepresence aimed at improving the control precision of teleoperation systems (Nam et al., 2017). The term "presence" was later applied to the psychological phenomenon that occurs while experiencing mediated content (Nam et al., 2017).

Steuer (1992) defined presence as the strength of an individual's conviction that they exist in a mediated environment rather than in their actual physical environment. Similarly, Slater and Usoh (1993) defined presence as the degree to which a user experiencing stimuli or effects generated by a VE is convinced that they are in an environment different from their actual location. Heeter (1992) divided presence into three categories: personal presence, in which a person recognizes their own existence in VR; social presence, felt through interaction with artificial intelligence or other people in VR; and environmental presence, experienced when the VE responds to the user as the real world would. Witmer and Singer (1998) defined presence as a subjective experience in which the user feels present in an environment other than their actual physical space.

Overall, presence may be defined as a psychological state in which a user is so deeply immersed in a VE that they no longer recognize the mediation. This state may be experienced as being inside the virtual medium itself or as a sense of inhabiting an avatar.

2.2. Factors of presence

To improve presence, its factors must first be analyzed. These factors, along with the validity of methods for measuring presence, have been investigated in earlier studies, which mainly categorize them as technical (the objective quality of the technology), user-related (individual differences), and social (the social characteristics of the technology) (Lee, 2004). Because the present study targets presence improvement in a real-time VE, it concerns the technical factors of objective quality, and the relevant studies are discussed here.

The technical factors of presence have been analyzed by Sheridan (1992), Steuer (1992), Lombard and Ditton (1997), and Witmer and Singer (1998). Sheridan (1992) divided the factors affecting presence into three: the extent of sensory information, i.e., the total amount of valid information delivered to the user; control of the relation of sensors to the environment, i.e., the user's ability to control the position and orientation of their sensors (such as their viewpoint) within the environment; and the ability to modify the physical environment, i.e., the user's ability to change their relationship with objects or other people in the virtual space. Sheridan argued that the user experiences complete presence when these three factors are in balance.

Steuer (1992) argued that the factors determining presence within the relationship between experience and technology are vividness and interactivity. Vividness comprises breadth (the number of sensory channels activated simultaneously) and depth (the resolution within each sensory channel). Interactivity comprises speed, range, and mapping: speed is the response time to user input; range is the number of environmental attributes that can be manipulated and the amount of variation available for each attribute; and mapping is how closely the controls reproduce natural real-world movements in VR.

Lombard and Ditton (1997) divided the factors determining presence into media type, media content, and media users. Media type includes the number of sensory outputs; visual display characteristics (image quality, image size, viewing distance, motion and color, dimensionality, camera techniques); audio fidelity; stimuli for senses other than sight and hearing; interactivity; obtrusiveness of the medium; whether the event is live or recorded; and the number of people. Media content includes social realism, use of media conventions, and the nature of the task or activity. Media users covers willingness to suspend disbelief, knowledge of and prior experience with the medium, and other individual variables (personality, preference, cognitive style, sensation-seeking level, solitude, mood, age, gender).

Witmer and Singer (1998) divided the factors determining presence into control, sensory, distraction, and realism factors. Control factors concern the level of user control over the VE or media program and are determined by the degree of control, immediacy of control, anticipation of events, mode of control, and the ability to modify the physical environment. Among sensory factors, sensory modality, richness of information, multimodal presentation, and consistency of information affect presence. Distraction factors include isolation, selective attention, and the user's awareness of the interface. Realism factors include scene realism, consistency of information with the objective world, and disorientation upon separation from VR. Thus, previous studies of the technical factors of presence have focused on raising the level of information delivered to the user's sensory organs or on improving interaction within the virtual space.

3. Presence improvement method for real-time architectural visualization content

3.1. Research method

As the first step toward the ultimate objective of this research, improving the presence of VR content, we analyzed the factors that influence presence. Previous studies identified "fidelity of sensory information" and "interactivity" as technical factors that can be controlled during content production, and established their significance. Among the various kinds of VR content, this study focused on real-time architectural visualization, which requires relatively high presence, so as to concentrate on the fidelity of sensory information without interference from other engaging elements such as a game's narrative or controls. Among the five senses, the focus was placed on vision.

Having analyzed the factors influencing presence, the research turned to improving the fidelity of sensory information, applying principles of realistic expression as the solution. Several researchers have previously defined such principles for making computer-generated scenes more realistic. However, owing to technical advances, those principles are ill-suited to the current production environment, so a more suitable set is needed. To derive it, we analyzed previous research on principles of realistic expression and examined how those principles relate to one another. By then analyzing advanced computer-graphics technology, we deduced the factors required to generate scenes with higher fidelity of sensory information and classified them according to the principles.

3.2. Architectural visualization

Architectural visualization is the visual configuration of architectural design information to inform concerned parties about structures that are under construction or already built. It generally delivers technical information such as spatial structure, form, and numerical data. Through the medium, however, consumers want emotion-based information such as convenience, coziness, and warmth. This mismatch in information creates conflict between supplier and consumer.

Before computer-based design became advanced, such information was delivered to consumers through artwork depicting building exteriors and interiors, in which structures expressed through the dynamics of line and color conveyed emotion effectively. Today, anyone can easily configure computer-generated structural graphics, and artists' expertise in conveying emotion tends to be neglected.

3.3. Perceptual realism

According to the technical factors of presence identified in previous studies, VR content with improved presence requires higher fidelity of sensory information. Factors that raise this fidelity include vividness (the technical ability to produce a sensorially rich mediated environment) and interaction (the degree to which the user can influence the form or content of the environment). Vividness is similar to the concept of "perceptual realism".

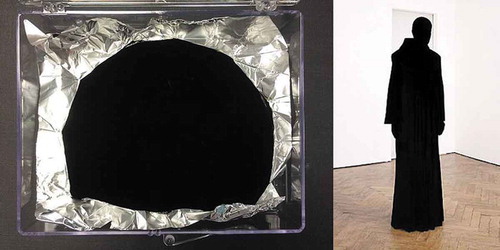

Realism is achieved when sensory information is consistent with the general range of information accumulated through human experience. Figure 2 shows what happens when visual information contradicts experience. Vantablack is a material developed using nanotechnology. Because it absorbs almost all incident light, objects coated with it lose their apparent three-dimensionality, producing the optical illusion of a hole in space.

Prince (1996) argued that perceptual realism is achieved when an image structurally corresponds to the viewer's multidimensional audiovisual experience. Thus, creating a sensorially rich mediated environment, i.e., improving vividness, amounts to achieving perceptual realism.

The senses (sight, hearing, smell, taste, and touch) receive stimuli through physical processes. Humans depend most on sight, with the eyes providing approximately 70% of sensory signals. Sight may therefore be regarded as the most important perceptual channel and should be prioritized when configuring media for improved presence.

3.4. Real-time rendering

Rendering is the process by which a computer program generates realistic or non-photorealistic 2D images from 2D or 3D models placed in a 3D space by calculating their positions, light sources, and materials. Real-time rendering refers to rendering fast enough to produce at least 30 frames per second (fps). Because of these high hardware demands, real-time rendering has long been criticized for its low quality compared with images or animations rendered in advance. Many researchers have tried to overcome these shortcomings by imitating phenomena with various methods, such as approximating values. With recent rapid advances in graphics processing units (GPUs), solutions that had stagnated because of hardware limitations have changed fundamentally. As a result, high-quality rendering with high computational cost, such as ray tracing or radiosity, which previously could only be achieved through pre-rendering, has become feasible in real time.
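The 30 fps threshold translates directly into a time budget per frame. The short Python sketch below makes that arithmetic explicit; the 60 and 90 fps values are included only as common display targets for comparison (90 Hz is typical of many VR headsets) and are not figures from this study.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render one frame at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Compare the 30 fps minimum with higher, commonly used targets.
for fps in (30, 60, 90):
    print(f"{fps:>2} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# 30 fps -> 33.3 ms per frame
# 60 fps -> 16.7 ms per frame
# 90 fps -> 11.1 ms per frame
```

All lighting, shading, and post-processing for a frame must fit inside this budget, which is why techniques that take seconds or minutes per pre-rendered frame cannot be used unchanged in real time.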

The main differences between real-time rendering and pre-rendering concern interaction and continuity. Interaction in a VE is meaningful because users can participate in the content, and it broadens the range of uses of computer graphics. Continuity connects objects and phenomena by configuring time and space rather than single frames. Because interaction and continuity are the very advantages of real-time rendering, the principles from previous studies, which were developed for pre-rendered images, have limited applicability to real-time environments. Delivering real-time rendering with improved perceptual realism therefore requires expression methods that evolve with technological advances.

3.5. Principles of real-time expression

Modern computer-graphics technology has developed to the point of blurring the boundary between the virtual and real worlds. When cameras capable of precise image reproduction emerged, painting evolved into new forms. Today, computer graphics is creating a new juncture in art history through imitation approaching photorealism. Computer-generated images with photograph-like texture are produced through rendering processes involving lighting, camera settings, and material management, much as in real-world filming. In a computer-graphics production environment, the basic elements of real images are controlled to achieve realistic expression. For example, when a surface material pattern is used, its location, size, and direction on the object must be set appropriately through texture mapping to replicate reality.

Fleming (1999) proposed 10 basic principles for realistic 3D images: 1. clutter and chaos; 2. personality and expectations; 3. believability; 4. surface texture; 5. specularity; 6. aging: dirt, dust, and rot; 7. flaws, tears, and cracks; 8. rounded edges; 9. object material depth; and 10. radiosity. According to Fleming, an image that satisfies at least 8 of the 10 principles resembles a real image. These principles can serve as guidelines for improving realism in pre-rendered images or animations. However, although they are significant to perceptual realism and thus to presence, they remain a general theory that does not address technical matters.
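Read as a checklist, Fleming's 8-of-10 criterion reduces to a simple count. The sketch below is a hypothetical review aid, not a tool from Fleming or this study: the short principle labels are our own shorthand, and deciding whether an image actually satisfies each principle remains a human judgment.

```python
from typing import Iterable, Tuple

# Shorthand labels for Fleming's (1999) ten principles.
FLEMING_PRINCIPLES = (
    "clutter and chaos",
    "personality and expectations",
    "believability",
    "surface texture",
    "specularity",
    "aging",
    "flaws, tears, and cracks",
    "rounded edges",
    "object material depth",
    "radiosity",
)


def fleming_check(satisfied: Iterable[str], threshold: int = 8) -> Tuple[int, bool]:
    """Count satisfied principles and test them against the 8-of-10 rule."""
    chosen = set(satisfied)
    unknown = chosen - set(FLEMING_PRINCIPLES)
    if unknown:
        raise ValueError(f"not a Fleming principle: {sorted(unknown)}")
    count = len(chosen)
    return count, count >= threshold


# A reviewer marks which principles a rendered image meets.
print(fleming_check(FLEMING_PRINCIPLES[:8]))  # (8, True)
```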

Brenton (2007) redefined Fleming's principles, grouping them into four main categories. Specularity and radiosity, which both relate to light, were combined into the principle of global illumination. The principles related to material and form, i.e., surface texture, rounded edges, and object material depth, were grouped as accurate object representation. Clutter and chaos were reduced to a single chaos principle, which was further divided into clutter, arising from human interaction with the environment; randomness, where natural phenomena occur by chance; and non-uniformity, i.e., no object has a perfectly uniform appearance and placement. Finally, dirt, dust, rust, flaws, scratches, and dings were grouped under the imperfection principle. Brenton excluded the personality and expectations principle on the grounds that it varies with individual perspective, and excluded the believability principle because it is already inherent in a realistic image.

Jong Kouk Kim (2018) theoretically analyzed the previous studies and their correlations and, taking into account the computer-graphics technology that had evolved since then, deduced four principles for creating photorealistic architectural rendering images: physically accurate light calculation, accurate reproduction of object form, realistic expression of materials and textures, and reproduction of the camera characteristics seen in photographs. These principles were organized according to a digital artist's workflow. The principles from previous studies are presented in Table 1.

Table 1. Existing principles of realistic expression

The Brenton and Kim principles are principles of realistic architectural rendering that could apply to real-time architectural visualization content. The authors therefore expected to apply them directly after extracting the aspects of each principle relevant to the target content; however, several problems emerged during testing. First, the previous studies did not consider the real-time environment. Second, Brenton (2007) included specularity under global illumination and excluded believability, so some of its categories were unsuitable for the present study. Further, Jong Kouk Kim (2018) did not consider the virtual camera. These issues reflect differences in perspective rather than errors; nevertheless, the existing principles had to be revised to resolve them.

4. Proposed guidelines and technical understanding

For improved perceptual realism in real-time architectural visualization content, the revised realistic expression principles proposed herein are divided into five categories: real-time global illumination, physically based rendering (PBR), trace expression, expressions with verisimilitude, and visual phenomenon reproduction.

4.1. Real-time global illumination

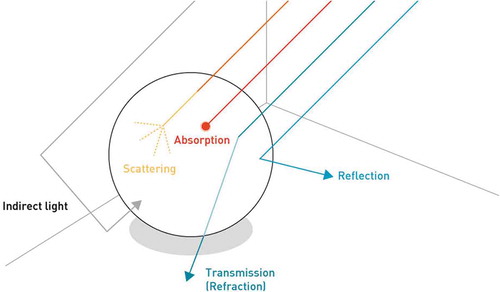

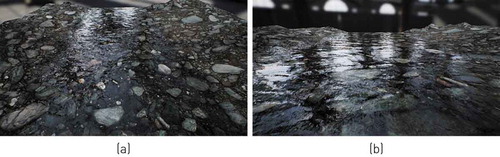

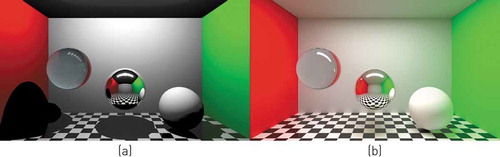

Light reaches the human eye along many different paths. Figure 3 illustrates various paths by which light arrives at an object. For a realistic portrayal, the absorption, reflection, refraction, transmission, and scattering that occur when light reaches an object's surface must be expressed. The global illumination principle refers to calculating the light reflected or absorbed by scene materials and exchanged between objects, taking into account both direct and indirect light. Among computer-graphics techniques, global illumination contributes most to realism because it dramatically improves the realism of lighting. Figure 4 shows the differences in a scene rendered with and without global illumination in a non-real-time environment. However, applying global illumination to real-time media that render 30 or more frames per second requires more computation than current computers can handle. Typical real-time rendering environments therefore use lightmaps or image-based lighting (IBL).

Figure 4. Scene differences observed using global illumination in a non-real-time environment: (a) without and (b) with global illumination.
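The difference between direct-only lighting and global illumination can be illustrated with the classic radiosity equation, B = E + ρFB, where B is the radiosity of each surface patch, E its emitted light, ρ its reflectance, and F the matrix of form factors between patches. The Python sketch below solves it by simple iteration, each pass adding one more bounce of light. The three-patch scene and its form factors are made-up values for illustration, not data behind Figure 4.

```python
import numpy as np


def solve_radiosity(emission, reflectance, form_factors, bounces=50):
    """Jacobi iteration of B = E + rho * (F @ B).

    bounces=1 yields direct lighting only; each further iteration adds
    one more bounce of indirect light.
    """
    emission = np.asarray(emission, dtype=float)
    reflectance = np.asarray(reflectance, dtype=float)
    form_factors = np.asarray(form_factors, dtype=float)
    radiosity = emission.copy()
    for _ in range(bounces):
        radiosity = emission + reflectance * (form_factors @ radiosity)
    return radiosity


# Patch 0 emits light, patch 1 faces it, patch 2 sees only patch 1.
E = [1.0, 0.0, 0.0]
rho = [0.5, 0.5, 0.5]
F = [[0.0, 0.2, 0.0],
     [0.2, 0.0, 0.3],
     [0.0, 0.3, 0.0]]

print(solve_radiosity(E, rho, F, bounces=1))  # direct light only: patch 2 stays at 0
print(solve_radiosity(E, rho, F))             # with bounces: patch 2 is lit indirectly
```

Patch 2 never sees the emitter, so it receives light only once indirect bounces are included, which is the effect global illumination adds to a scene.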

Light and shadow simulations for a given object are calculated in advance and stored in an image called a lightmap. When the content runs, the lightmap is retrieved and applied to the existing material surface, which reduces lighting calculations and delivers high quality relative to the user's system specifications. However, if a lightmap is applied to a moving object, the precomputed indirect lighting no longer matches the object's position. This method can therefore be applied only to static objects.
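The bake-then-look-up idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, assuming a single point light and only direct diffuse (Lambertian) lighting with inverse-square falloff; production lightmap bakers, including UE4's, also compute shadows and indirect bounces. The function names are hypothetical.

```python
import numpy as np


def bake_lightmap(texel_positions, surface_normal, light_pos, light_intensity):
    """Offline step: store diffuse irradiance for every texel of a static surface."""
    to_light = np.asarray(light_pos, float) - np.asarray(texel_positions, float)
    dist_sq = np.sum(to_light ** 2, axis=1)
    directions = to_light / np.sqrt(dist_sq)[:, None]
    cos_theta = np.clip(directions @ np.asarray(surface_normal, float), 0.0, None)
    return light_intensity * cos_theta / dist_sq  # inverse-square falloff


def shade_from_lightmap(albedo, lightmap, texel_index):
    """Run-time step: one lookup replaces the lighting calculation."""
    return albedo * lightmap[texel_index]


# A floor with three texels, lit by a point light 2 units above the first texel.
texels = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
lightmap = bake_lightmap(texels, [0.0, 0.0, 1.0], [0.0, 0.0, 2.0], 4.0)
print(shade_from_lightmap(0.5, lightmap, 0))  # 0.5
```

The stored values depend on where each texel was when the lighting was baked; if the object moved, its lightmap would still describe the old position, which is why the technique is limited to static objects.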

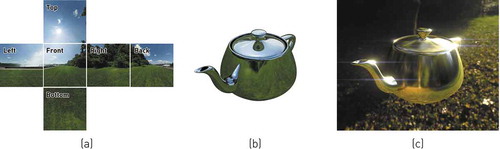

IBL converts real image information into a light source and illuminates an object according to the user's line of sight and the direction of the object's surface (Debevec, 2006). Because its light sources cannot be placed as freely as in non-real-time global illumination, IBL is used either where configuring real-time global illumination is difficult or in combination with real-time global illumination. Figure 5 shows environment mapping using a cube map, a technique originally developed to depict specular reflection on metallic objects. As hardware performance has advanced, cube-map images can now be used as light sources, and environment mapping has evolved into IBL.
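Environment mapping and IBL both rest on the same lookup: reflect the view direction about the surface normal and use the result to fetch a texel from a cube map. The Python sketch below shows that lookup. It follows the OpenGL cube-map face-selection convention; other APIs or engines (UE4, for instance, uses a Z-up world) may orient the faces differently, and a full IBL pipeline would additionally pre-filter the cube map for diffuse and rough-specular lighting.

```python
import numpy as np


def reflect(view_dir, normal):
    """Mirror an incoming view direction about a unit surface normal."""
    v = np.asarray(view_dir, float)
    n = np.asarray(normal, float)
    return v - 2.0 * np.dot(v, n) * n


def cube_map_lookup(direction):
    """Return (face, s, t) for a direction, following the OpenGL cube-map convention."""
    x, y, z = (float(c) for c in direction)
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax == ay == az == 0.0:
        raise ValueError("direction must be non-zero")
    if ax >= ay and ax >= az:            # X is the major axis
        face, sc, tc, ma = ("+X", -z, -y, ax) if x > 0 else ("-X", z, -y, ax)
    elif ay >= az:                        # Y is the major axis
        face, sc, tc, ma = ("+Y", x, z, ay) if y > 0 else ("-Y", x, -z, ay)
    else:                                 # Z is the major axis
        face, sc, tc, ma = ("+Z", x, -y, az) if z > 0 else ("-Z", -x, -y, az)
    # Map the two minor coordinates from [-1, 1] to texture space [0, 1].
    return face, 0.5 * (sc / ma + 1.0), 0.5 * (tc / ma + 1.0)


# A viewer looking straight down at a floor whose normal points up (+Z).
r = reflect([0.0, 0.0, -1.0], [0.0, 0.0, 1.0])
print(cube_map_lookup(r))  # ('+Z', 0.5, 0.5)
```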

Lightmaps and IBL provide relatively low-cost global illumination but cannot resolve visual interaction, i.e., lighting that responds to changes in the scene, which is the fundamental problem of global illumination. Real-time global illumination algorithms such as Light Propagation Volumes (LPV) and Voxel Global Illumination (VXGI) have therefore been developed to achieve the desired level of perceptual realism.

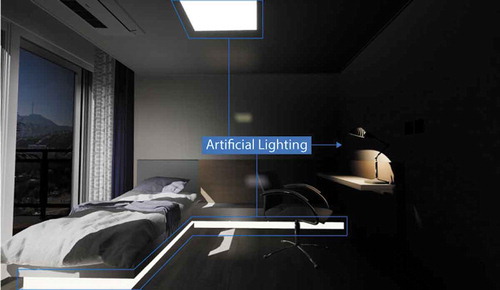

LPV is a real-time global illumination algorithm developed by Crytek that stores light information in a 3D grid, propagates it through the grid, and applies the result to the scene (Kaplanyan & Dachsbacher, 2010). In Unreal Engine 4 (UE4), the production environment used in this study, the LPV feature (still under development) can be enabled through console variable settings in the engine. When LPV is activated, direct light from outside can produce indirect lighting indoors (Figure 6). The indirect light also changes with the direction of the direct light (Figure ).

Figure 6. Changes observed upon (a) deactivation of global illumination and (b) LPV activation.

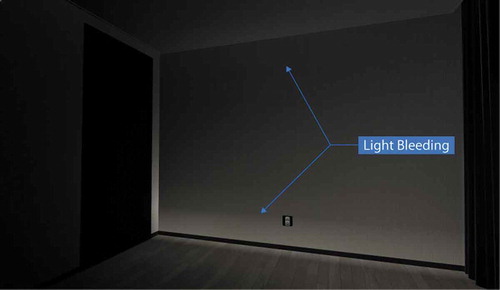

However, LPV does not generate global illumination from artificial lights and applies only to direct light (Figure ). This is problematic when configuring enclosed spaces, which are common in architectural visualization content. Further, light bleeding, which occurs in certain situations (Figure ), can be reduced to some extent through the settings (although this feature is also under development), but the fundamental issue remains. An improved real-time global illumination method is therefore required to overcome the limitations of LPV.
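The core of LPV is iterative propagation of light through a coarse grid. The Python sketch below is a heavily simplified, scalar 2D analogue written for illustration only: real LPV (Kaplanyan & Dachsbacher, 2010) stores directional light as spherical-harmonic coefficients in a 3D grid and propagates it directionally. The sketch does show why light bleeding arises: occlusion is known only per cell, so geometry thinner than a cell may not be marked as blocking and cannot stop the light. The function name, grid size, and `share` parameter are hypothetical.

```python
import numpy as np


def propagate_light(injected, blocked, iterations=8, share=0.2):
    """Spread injected light to the four neighbouring cells on each iteration.

    injected: 2D array of light injected into each grid cell.
    blocked:  boolean 2D array; True marks cells occupied by opaque geometry.
    share:    fraction of a cell's light sent to each neighbour per step
              (4 * share < 1, so energy decays as it spreads).
    """
    current = np.where(blocked, 0.0, np.asarray(injected, dtype=float))
    accumulated = current.copy()
    for _ in range(iterations):
        nxt = np.zeros_like(current)
        nxt[1:, :] += share * current[:-1, :]   # flow down
        nxt[:-1, :] += share * current[1:, :]   # flow up
        nxt[:, 1:] += share * current[:, :-1]   # flow right
        nxt[:, :-1] += share * current[:, 1:]   # flow left
        nxt[blocked] = 0.0                      # opaque cells absorb light
        current = nxt
        accumulated += current
    return accumulated


# A 5 x 7 grid: light enters at the left edge, and column 3 is a solid wall.
light = np.zeros((5, 7))
light[2, 0] = 1.0
wall = np.zeros((5, 7), dtype=bool)
wall[:, 3] = True
result = propagate_light(light, wall)
print(result[:, 4:].max())  # the room behind the wall receives no light
```

If the wall were not represented in the grid (for example, because it is thinner than a cell), the same call with an all-False `blocked` array would let light reach the cells behind it; finer grids reduce this leakage at higher cost.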

VXGI, developed by NVIDIA, obtains reflected light information by applying voxel cone tracing to light information stored in a voxel grid. VXGI can apply global illumination to any object or material used in the engine and supports dynamic effects including ambient occlusion, specular reflection, and multi-bounce lighting. Figures and 1 show VXGI applied to the same scene as in Figure and the voxels created to express global illumination, respectively.

Running VXGI-based content requires relatively high hardware performance, but hardware is expected to improve rapidly. Applying VXGI to real-time architectural visualization will likely enhance interactivity and sensory depth. Interactivity expressed through real-time global illumination appears as subtle visual changes, such as changes in the light reflected from surrounding objects as the user moves. Such changes affect emotion and generate realism through the user's experience.

4.2. Physically based rendering

American installation artist Alexa Meade demonstrated that realistic lighting cannot guarantee a realistic scene. Figure is a 3D artwork designed to have a 2D appearance through an optical illusion, and it shows the contribution of the surface material to the perceptual realism phenomenon.

In UE4, PBR is a shading and rendering method that accurately expresses the interaction of light with surface materials; it was developed to fit the UE system based on Burley (2012) (Karis & Games, 2013). Although grounded in the physics of light reflection, it still simplifies many aspects of real light behavior, as did pre-PBR shading methods that relied on separate diffuse and specular reflection textures. PBR is not a perfect simulation of real light, but it can simulate how light behaves on different surface materials. It therefore approaches light-related physical phenomena more scientifically than legacy rendering and combines physically corrected shading models.

Including PBR in the principles of realistic expression consolidates the material-related principles scattered across the existing principles and yields more accurate and natural results. Moreover, it reduces dependence on programmers or technical artists when producing interactive content for real-time architectural visualization, games, and the like, so images can be expressed as the graphic designer intended.

In UE4, PBR controls four main properties (base color, roughness, metallic, and specular), along with several additional functions, to define material characteristics. These properties combine various computer-graphics theories. Russell (2015) categorized the theories underlying PBR as diffusion and reflection, translucency and transparency, energy conservation, metallicity, the Fresnel effect, and microfacets.

4.2.1. Diffusion and reflection

Diffusion and reflection describe how light interacts with objects. When light reaches an object's surface, part of it is absorbed and turned into heat and part is reflected, giving the object its color. Legacy rendering configures this phenomenon separately through diffuse lighting (light is absorbed by the material surface and only the wavelengths of the surface color are reflected) and specular lighting (the light itself is reflected according to its position and angle), but these methods tend to rely on the artist's judgment. Whereas the legacy diffuse texture uses the visible image itself, PBR uses a base color texture representing the pure color wavelengths reflected by the material. And whereas legacy methods separate reflected light into dedicated textures such as grey environment maps or mask maps, PBR sets reflected light according to the material's metallicity, since a more metallic material reflects the light itself.

4.2.2. Translucency and transparency

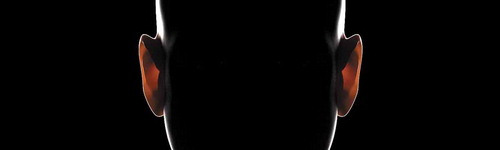

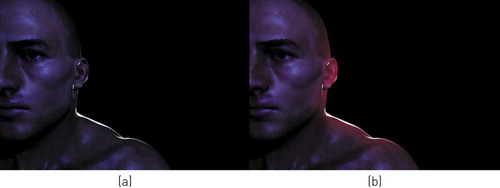

Translucency and transparency describe the degree to which light passes through an object rather than being absorbed or reflected. Subsurface scattering, in which light enters a translucent object and scatters inside it, is extremely important for portraying real scenes. Figure shows subsurface scattering in a non-real-time environment: light from behind the subject scatters through thin skin and emerges. Many other substances are also translucent, such as milk, fabric, candles, and leaves. Expressing these materials in a VE requires a bidirectional scattering-surface reflectance distribution function (BSSRDF) model. However, its calculation cost is high, so this method is inappropriate for a real-time environment.

Rapid and accurate real-time expression of subsurface scattering is attracting considerable research attention (Ki, 2007; Wang, 2006; Hao, 2004; Jimenez et al., 2009). Current graphics hardware cannot simulate subsurface scattering perfectly, but it can be approximated. In UE4, the Subsurface and Subsurface Profile shading models are used to express subsurface scattering. The two models have similar properties, but the latter is more focused on expressing human skin. Figure compares a basic material with Subsurface Profile shading in a real-time environment. With the latter, the ear and the curves struck by light appear more realistic and natural, demonstrating the importance of expressing translucency and transparency.
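UE4's Subsurface Profile model is a screen-space technique and too involved for a short example. As a far simpler stand-in, the Python sketch below shows wrap lighting, a well-known cheap approximation of subsurface scattering in which diffuse light is allowed to "wrap" past the shadow terminator, softening the falloff the way translucent materials such as skin do. It is not UE4's model, and the wrap value of 0.5 is an arbitrary illustrative choice.

```python
def lambert(n_dot_l: float) -> float:
    """Standard diffuse term: light stops abruptly at the terminator (N.L = 0)."""
    return max(0.0, n_dot_l)


def wrap_diffuse(n_dot_l: float, wrap: float = 0.5) -> float:
    """Wrap lighting: shift and rescale N.L so light continues past the terminator."""
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))


for n_dot_l in (1.0, 0.5, 0.0, -0.25, -0.5):
    print(f"N.L={n_dot_l:+.2f}  lambert={lambert(n_dot_l):.3f}  "
          f"wrap={wrap_diffuse(n_dot_l):.3f}")
```

Fully lit points are unchanged, but points just beyond the terminator still receive some light, which reads as light bleeding softly through a translucent surface.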

4.2.3. Energy conservation

Russell (2015) explained that, under the law of energy conservation, diffusion and reflection are mutually exclusive: light that is reflected from the surface is no longer available to be diffused. In PBR, energy conservation means that, assuming accurate physical laws, the light reflected from a surface cannot be stronger than the light that reached it. Figure shows shading of materials with energy conservation. On a rough surface, reflected light scatters and therefore appears over a wider area and more vaguely, whereas reflection from a smooth surface appears in a distinct, narrow area.
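The trade-off between a wide, dim highlight and a narrow, bright one follows from normalizing the microfacet distribution so that it never creates energy. The Python sketch below uses the GGX (Trowbridge-Reitz) distribution with α = roughness², the parameterization described for UE4 by Karis (2013), and numerically checks the normalization condition ∫ D(h)(n·h) dω = 1 over the hemisphere. The peak value 1/(πα²) rises sharply as roughness falls, while the integral stays at 1.

```python
import math


def ggx_ndf(n_dot_h: float, roughness: float) -> float:
    """GGX normal distribution D(h), with alpha = roughness ** 2."""
    alpha_sq = (roughness * roughness) ** 2
    denom = n_dot_h * n_dot_h * (alpha_sq - 1.0) + 1.0
    return alpha_sq / (math.pi * denom * denom)


def projected_area(roughness: float, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of the hemisphere integral of D(h) * cos(theta)."""
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        cos_t = math.cos(theta)
        # Solid-angle element on the hemisphere: 2 * pi * sin(theta) * d(theta)
        total += ggx_ndf(cos_t, roughness) * cos_t * 2.0 * math.pi * math.sin(theta) * d_theta
    return total


for roughness in (0.2, 0.5, 0.9):
    print(f"roughness={roughness}: peak D={ggx_ndf(1.0, roughness):8.2f}, "
          f"integral={projected_area(roughness):.4f}")
```

A smooth surface concentrates the same total energy into a small, bright highlight; a rough one spreads it thinly over a wide area, as described above.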

4.2.4. Metallicity

Implementing metallic properties is the main difference between existing rendering methods and PBR (Walker, 2014). Metallicity is the characteristic that distinguishes metallic objects visually from non-metals. When light strikes a metal surface, the visible light that enters is absorbed, converted to a low-energy state, and re-emitted at the same wavelength. Because of this behavior, scattered light does not penetrate the interior of a metal object. Depending on surface polish, a metal's reflectivity reaches 90–95%. When white light illuminates metals such as iron, aluminum, or silver, the reflected light has nearly the same wavelengths as the incident light. For copper, gold, or bronze, only certain wavelengths are reflected, yielding a distinctive color. PBR therefore expresses the type of metal through the surface's metallic value and by controlling the color of the reflected light.
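The metallic workflow can be sketched as a function that derives the diffuse color and the specular reflectance at normal incidence (F0) from the base color. This Python sketch mirrors, to our understanding, the convention in UE4's shaders: non-metals receive an uncolored F0 of 0.08 × Specular (0.04 at the default Specular of 0.5), while metals lose their diffuse term and take their reflected color from the base color. The example colors are illustrative, not measured values.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def split_base_color(base_color: RGB, metallic: float,
                     specular: float = 0.5) -> Tuple[RGB, RGB]:
    """Return (diffuse_color, f0) for a metallic/roughness-style material."""
    # Metals have no diffuse reflection, so the diffuse color fades out with metallic.
    diffuse = tuple(c * (1.0 - metallic) for c in base_color)
    # Non-metals reflect a small, uncolored amount of light at normal incidence.
    dielectric_f0 = 0.08 * specular
    # Metals tint their reflections with the base color.
    f0 = tuple(lerp(dielectric_f0, c, metallic) for c in base_color)
    return diffuse, f0


red_plastic = split_base_color((0.8, 0.1, 0.1), metallic=0.0)
gold_like = split_base_color((1.0, 0.78, 0.34), metallic=1.0)
print(red_plastic)  # colored diffuse, grey 4% reflectance
print(gold_like)    # no diffuse, yellow-tinted reflectance
```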

4.2.5. Fresnel effect

The Fresnel effect is the phenomenon whereby the proportions of reflected and transmitted (refracted) light vary with the observer’s position and the angle of incidence. For example, when light strikes water nearly perpendicularly (an incidence angle close to 0°, measured from the surface normal), only about 2% of it is reflected, whereas at grazing angles (close to 90°) the reflectivity approaches 1, i.e., 100% (Figure ).

Reflection and transmission can generally be explained by the law of reflection and Snell’s law. However, these laws give only the direction of the light; they give neither the proportion of light reflected or transmitted nor the refractive indices of the countless materials involved. Schlick’s approximation (Eq. (1)) is therefore used for more efficient expression in most real-time content production tools (Karis, 2013), and is given as follows:

$$F(\mathbf{v},\mathbf{h}) = F_0 + (1 - F_0)\,\bigl(1 - (\mathbf{v}\cdot\mathbf{h})\bigr)^5 \tag{1}$$

where F is the Fresnel term, F0 is the base reflectance (the reflectance at normal incidence), v is the view direction, and h is the microfacet normal (the half vector between the light and view directions).

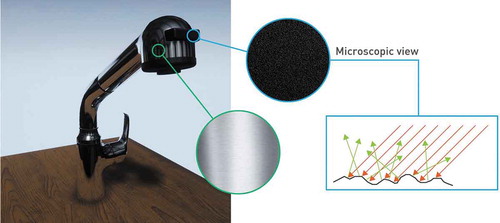
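Eq. (1) can be evaluated directly. The sketch below assumes a single scalar (grayscale) F0 and derives it for water from the standard normal-incidence formula F0 = ((n − 1)/(n + 1))², taking n ≈ 1.33 for water. This gives about 0.02, consistent with the roughly 2% figure above.

```python
import math


def f0_from_ior(n: float, n_outside: float = 1.0) -> float:
    """Reflectance at normal incidence for an interface between two media."""
    return ((n - n_outside) / (n + n_outside)) ** 2


def fresnel_schlick(f0: float, v_dot_h: float) -> float:
    """Schlick's approximation, Eq. (1): F = F0 + (1 - F0) * (1 - v.h)^5."""
    v_dot_h = min(max(v_dot_h, 0.0), 1.0)  # clamp the cosine to [0, 1]
    return f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5


water_f0 = f0_from_ior(1.33)  # roughly 0.02, i.e. about 2 %
for angle_deg in (0, 45, 75, 89):
    cos_theta = math.cos(math.radians(angle_deg))
    print(f"{angle_deg:2d} deg: F = {fresnel_schlick(water_f0, cos_theta):.3f}")
```

The fifth-power term stays small until the angle becomes fairly steep and then rises quickly toward 1, which is why reflections on water or floors become prominent mainly at glancing views.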

4.2.6. Microfacets

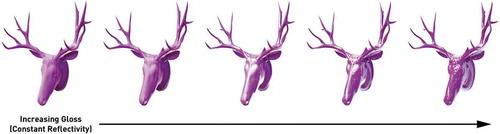

All surfaces have some roughness, which influences how they reflect light. Depending on the tool, roughness is also expressed inversely, as gloss or smoothness. A very smooth surface reflects light like a mirror. On a rougher surface, the reflected light scatters in different directions and the reflection loses clarity (Figure ). The methods used to portray surface unevenness in legacy rendering cannot express such subtle, microscopic unevenness.

In legacy rendering, the total surface reflectivity is applied or omitted at the artist’s discretion, regardless of surface roughness. To reflect the surrounding environment, a cube map, generated in real time or pre-made from images of each section of the environment, can be used; this creates the optical illusion of a reflection. An improved method renders the reflected scene one more time to produce real-time reflections.

PBR also employs cube maps or real-time reflections, but the reflection is controlled by surface roughness: as roughness increases, the reflection becomes blurrier and its intensity lower. Figure shows surface-roughness-based variations.
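To make the roughness dependence concrete, the sketch below evaluates the GGX (Trowbridge–Reitz) microfacet distribution, which Karis (2013) describes for UE4, with the α = roughness² remapping proposed by Burley (2012). It illustrates the trend rather than reproducing engine code: as roughness rises, the peak of the distribution falls and its tails widen, so reflections become dimmer and blurrier.

```python
import math


def ggx_ndf(n_dot_h: float, roughness: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution function D(h).

    roughness is the artist-facing value in (0, 1]; it is remapped to
    alpha = roughness**2 before use.
    """
    if not 0.0 < roughness <= 1.0:
        raise ValueError("roughness must be in (0, 1]")
    alpha = roughness * roughness
    a2 = alpha * alpha
    n_dot_h = min(max(n_dot_h, 0.0), 1.0)  # clamp the cosine
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


cos_20 = math.cos(math.radians(20.0))
for r in (0.2, 0.5, 0.8):
    # D at the mirror direction (n.h = 1) versus 20 degrees away from it
    print(f"roughness={r}: D(0 deg)={ggx_ndf(1.0, r):8.2f}  "
          f"D(20 deg)={ggx_ndf(cos_20, r):.3f}")
```

Integrating D(h)(n·h) over the hemisphere gives 1 for any roughness, so a lower peak always comes with a wider spread; this is the link back to energy conservation.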

4.3. Trace expression

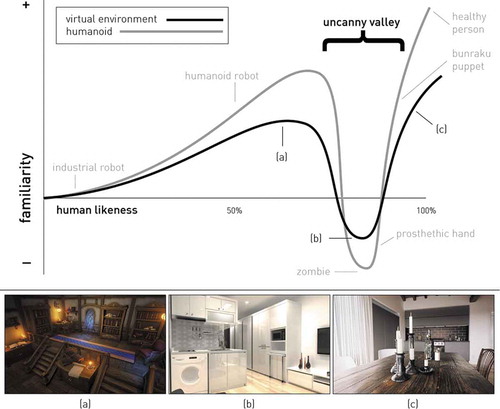

Although they have become less common as graphics software has advanced and artists have grown more skilled, overly perfect computer-graphics environments still often appear in virtual environments. A trace is defined as “a mark or stain left behind after a certain phenomenon or entity disappears or passes by”. Examples include marks left by rust when metal oxidizes and marks caused by friction. Such traces, which humans subconsciously overlook, accumulate after an object has been produced, through exposure to air and through use. When they are omitted, users feel a sense of awkwardness. This is connected to the uncanny valley phenomenon, i.e., the hypothesis that human familiarity with a robot increases as the robot’s appearance and behavior become more similar to those of humans (Figure ). When the degree of similarity exceeds a certain level, however, familiarity rapidly decreases, and when the robot becomes difficult to distinguish from a human, familiarity increases again (Mori, 1970). This theory extends beyond robotics and applies to computer-graphics-based video media and game characters. This study argues that the uncanny valley phenomenon can also be applied to environments.

From the first application of 3D computer-graphics technology to VR, the main objective was the real-time creation of realistic results. However, because of the limited performance of early hardware, technological development focused on non-photorealistic rendering (NPR) for real-time rendering (Jung & Kim, 2008). Depending on the style of expression and the artist’s expertise, NPR can produce renderings that surpass reality, as a compromise between performance and expressiveness (Figure ). The problem lies with the output (Figure ). Hardware performance has since improved enough to allow realistic real-time rendering. Although a production environment similar to the output shown in Figure is now possible, user reviews are sharply divided. We attribute this problem to insufficiently detailed expression. When the level of VE expression reaches the uncanny valley region, the sense of unfamiliarity is milder than it is for characters, but the phenomenon is similar.

Figure 19. Mori’s uncanny valley phenomenon (Mori, 1970).

Ultimately, the solution to the uncanny valley problem in VR is the same as that for characters: to express details or traces. The principles of trace expression not only improve scene realism but also strongly affect emotional response. The trace expression principle employed in this study includes principles 1 and 6–8 of Fleming (1999), and it incorporates the principle of error and randomness, which arises in the creation and placement of structures and in the traces that objects acquire through use.
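The error-and-randomness principle can be applied procedurally. The hypothetical helper below takes an idealized layout and adds small, bounded random offsets, rotations, and scale variations. All defaults (5 cm, 3°, ±3% scale) are assumed values to be tuned per scene, and a fixed seed keeps the result reproducible between builds.

```python
import random
from dataclasses import dataclass


@dataclass
class Placement:
    x: float        # metres
    y: float        # metres
    yaw_deg: float  # rotation about the vertical axis
    scale: float    # uniform scale factor


def jitter_placements(grid_points, max_offset=0.05, max_yaw_deg=3.0,
                      scale_range=(0.97, 1.03), seed=0):
    """Add small, bounded random errors to an otherwise perfect layout.

    The defaults are hypothetical; a fixed seed makes the output repeatable.
    """
    rng = random.Random(seed)
    result = []
    for x, y in grid_points:
        result.append(Placement(
            x=x + rng.uniform(-max_offset, max_offset),
            y=y + rng.uniform(-max_offset, max_offset),
            yaw_deg=rng.uniform(-max_yaw_deg, max_yaw_deg),
            scale=rng.uniform(*scale_range),
        ))
    return result


# e.g. a row of chairs that would otherwise be spaced exactly 0.6 m apart
chairs = jitter_placements([(i * 0.6, 0.0) for i in range(5)], seed=42)
for chair in chairs:
    print(chair)
```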

4.4. Expressions with verisimilitude

Fleming (1999) presented the believability principle: a reliable object (an “anchor”) improves the perceived realism of the other objects around it. As noted above, Brenton (2007) excluded the believability principle. However, this study argues for an “expressions with verisimilitude” principle, which encompasses the believability principle in a broad sense.

Verisimilitude can be divided into subject verisimilitude and the verisimilitude rule. The verisimilitude rule is extensive and internal and applies to all types of digital games, whereas subject verisimilitude takes real-world time, locations, people, and objects as its subjects; these are reconfigured when real-time architectural visualization is incorporated into some categories of functional (serious) games. The principle is to actively achieve subject verisimilitude (Han, 2011). In “expressions with verisimilitude”, verisimilitude refers to cognitive probability, that is, plausibility from the viewer’s perspective, in narrative creation and message delivery through video media, and it includes the time, space, and object factors of subject verisimilitude.

High-verisimilitude expressions must match the mental imagery that individuals have formed through social customs and experience, and the cause-and-effect relationship of each narrative factor must be clear. With respect to the period depicted, if an object appears that could not plausibly exist in the context of the scene, or if an object’s size differs markedly from a person’s experience of it, cognitive probability is insufficient and verisimilitude is reduced. Cases lacking verisimilitude in VE configuration mainly result from such insufficient cognitive probability, owing to inadequate historical research or inaccurate measurements. In advertisements and other image media, a surreal world can easily be created by intentionally removing verisimilitude or by emphasizing the delivered message. In real-time architectural visualization, however, presence must be prioritized, and verisimilitude takes precedence over physical expression of the environment. Table presents the detailed principles of expression with verisimilitude that are essential for architectural visualization content production, with case examples.

Table 2. Cognitive aspect probabilities for improving verisimilitude
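Errors caused by inaccurate measurements can be caught in part by checking modeled dimensions against expected ranges. The sketch below is a hypothetical pipeline check; the categories and reference ranges are placeholder values for illustration, not standards, and should be replaced with measured or period-appropriate data.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    category: str
    height_m: float


def implausible_sizes(assets, reference_ranges):
    """Return (name, height, expected range) for assets outside their range.

    Categories without a reference range are skipped rather than flagged.
    """
    flagged = []
    for asset in assets:
        expected = reference_ranges.get(asset.category)
        if expected is None:
            continue
        low, high = expected
        if not low <= asset.height_m <= high:
            flagged.append((asset.name, asset.height_m, expected))
    return flagged


# Hypothetical placeholder ranges in metres; not taken from any standard.
REFERENCE_RANGES = {"interior_door": (1.9, 2.4), "dining_chair": (0.75, 1.1)}

scene = [
    Asset("door_01", "interior_door", 2.1),
    Asset("door_02", "interior_door", 2.9),   # modeled at the wrong scale
    Asset("chair_01", "dining_chair", 0.85),
]
print(implausible_sizes(scene, REFERENCE_RANGES))
```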

4.5. Visual phenomenon reproduction

In digital architectural rendering images, faithful reproduction of photographic factors that arise from physical camera characteristics and the tools of typical architectural photography (depth of field, vignetting, motion blur, lens flares) contributes to improved realism (J. K. Kim, 2018). Because the human eye and a camera have similar structures, some of the phenomena produced by camera characteristics can also be observed with the naked eye. However, real-time architectural visualization content is a moving-image rather than a still-image medium; thus, the requirements differ. Moving-image media are based on a visual aftereffect: when a person views a series of still images at slightly different phases, their visual perception system creates the illusion of movement. Therefore, this study investigated improving realism through reproduction of the visual aftereffect phenomenon. Three factors reproducible by production tools were deduced: motion blur, eye adaptation, and light spread.

4.5.1. Motion Blur

In video media, the frame rate creates the visual aftereffect, and it must be at least 15 fps for moving images to be perceived (D. Kim, 2017). However, this is only the minimum for recognition; the frame rate actually required for a natural effect depends on the scene dynamics. Most modern movies are filmed at a relatively low frame rate of 24 fps (Song, 2013), which feels natural to the audience because of the motion blur recorded within each frame during filming (Figure ). Motion blur occurs when a rapidly moving object or scene is filmed at a relatively slow shutter speed, or when the trajectory of an object seen with the naked eye is blurred; in either case, it suppresses the strobe effect.
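The relationship between frame rate, shutter speed, and blur can be quantified. Assuming a film-style rotary shutter (the conventional 180° shutter exposes each frame for half of the frame interval), the sketch below computes the exposure time and the length of the smear that a moving object leaves within one frame. The object speed is a hypothetical example.

```python
def exposure_time(fps: float, shutter_angle_deg: float = 180.0) -> float:
    """Exposure time per frame (s) for a rotary-shutter style camera."""
    return (shutter_angle_deg / 360.0) / fps


def blur_length_px(speed_px_per_s: float, fps: float,
                   shutter_angle_deg: float = 180.0) -> float:
    """Length of the smear an object leaves within a single frame."""
    return speed_px_per_s * exposure_time(fps, shutter_angle_deg)


# A hypothetical object crossing a 1920-px-wide frame in one second.
for fps in (24, 60, 120):
    print(f"{fps:3d} fps: exposure {exposure_time(fps) * 1000:.1f} ms, "
          f"blur {blur_length_px(1920, fps):.1f} px")
```

At 24 fps the object smears across tens of pixels per frame, which hides the gaps between frames; at higher frame rates both the gaps and the smear shrink.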

The use of motion blur in realistic media content is controversial. Unlike video media, in which the viewer passively watches a predetermined sequence, realistic media let the viewer interact with the content through various input devices. As a result, unpredictable scenes must be created in real time. Motion blur modeled on the characteristics of conventional film media often causes cybersickness through sensory conflict. Therefore, to create natural scenes in realistic media without motion blur, the frame rate must be high enough that the mismatch between input and displayed motion goes unnoticed. Maintaining such a frame rate requires a high-performance GPU and a display device with a refresh rate high enough to show the GPU-generated images, and the content must also be optimized.

This study focuses on a typical rather than an ideal environment. Although there are individual differences, the human critical flicker fusion frequency is about 60 Hz, similar to the refresh rate of commonly used display devices (Amato, 2014). Performance varies with factors such as content optimization and resolution, but typical hardware should be able to render a stable 60 fps or higher. Accordingly, with a GPU that can render 200 fps or higher and a 240-Hz display device, motion blur may be unnecessary. However, with a GPU that renders a stable 60 fps and a 60-Hz display device (a popular specification), motion blur will help improve realism.
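The reasoning above can be expressed as a simple rule. The sketch below assumes that the delivered frame rate is capped by the display refresh rate, and it uses a hypothetical threshold of 120 fps, chosen only to separate the two cases discussed; the study itself gives no threshold.

```python
def effective_frame_rate(gpu_fps: float, refresh_hz: float) -> float:
    """A display cannot show more distinct frames than its refresh rate."""
    return min(gpu_fps, refresh_hz)


def recommend_motion_blur(gpu_fps: float, refresh_hz: float,
                          threshold_fps: float = 120.0) -> bool:
    """Hypothetical rule: enable blur when the delivered rate is below threshold_fps.

    threshold_fps is an assumed tuning value, not a figure from the study.
    """
    return effective_frame_rate(gpu_fps, refresh_hz) < threshold_fps


for gpu, hz in ((200, 240), (60, 60), (200, 60)):
    rate = effective_frame_rate(gpu, hz)
    print(f"GPU {gpu} fps on {hz} Hz: {rate} fps shown, "
          f"{1000 / rate:.1f} ms/frame, motion blur: {recommend_motion_blur(gpu, hz)}")
```

The third case shows why both components matter: a fast GPU paired with a 60-Hz display still delivers only 60 distinct frames per second.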

4.5.2. Eye adaptation

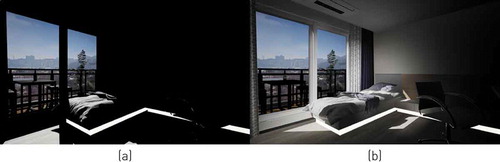

When the illumination of an environment changes suddenly, the ability to recognize objects decreases temporarily, because the human eye cannot adapt quickly to the change in luminance. Eyes accustomed to a bright environment are temporarily unable to see in a dark room; the gradual return of vision is called dark adaptation (the opposite process is called light adaptation). Unlike an actual environment, a VE shown on a display does not make the viewer’s eyes undergo this adaptation naturally, because the display cannot reproduce real-world luminance levels; hence, an artificial eye adaptation effect must be reproduced.
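Real-time engines commonly reproduce eye adaptation as automatic exposure: they measure the average scene luminance and move the exposure toward it gradually rather than instantly. The sketch below assumes a log-average (geometric mean) luminance, exponential smoothing, a slower rate for darkening than for brightening to imitate slow dark adaptation, and a mid-gray key of 0.18. All rates and luminance values are hypothetical.

```python
import math


def log_average_luminance(luminances, eps=1e-4):
    """Geometric mean of luminance; a few very bright pixels do not dominate it."""
    return math.exp(sum(math.log(eps + lum) for lum in luminances) / len(luminances))


def adapt(current, target, dt, speed_up=3.0, speed_down=1.0):
    """Move the adapted luminance toward the target by exponential smoothing.

    speed_up / speed_down (1/s) are hypothetical tuning values; the slower
    rate for darkening imitates the comparatively slow dark adaptation.
    """
    speed = speed_up if target > current else speed_down
    return current + (target - current) * (1.0 - math.exp(-speed * dt))


def exposure_scale(adapted_luminance, key=0.18):
    """Scale that maps the adapted luminance to an assumed mid-gray key."""
    return key / adapted_luminance


# Walking from a bright exterior into a dark room, simulated at 60 fps.
adapted = log_average_luminance([5000.0] * 4)
target = log_average_luminance([5.0, 8.0, 2.0, 20.0])
dt = 1.0 / 60.0
for frame in range(1, 301):
    adapted = adapt(adapted, target, dt)
    if frame % 60 == 0:
        print(f"t={frame * dt:.0f} s  adapted={adapted:8.2f}  "
              f"exposure x{exposure_scale(adapted):.4f}")
```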

4.5.3. Light-spread effect

When a light source is viewed in a relatively dark environment, or light is reflected by an object with high specular reflectivity, the edge of the bright area appears to spread out. This phenomenon is called light spread; similar effects are called glow or bloom in various graphics software. The cause is similar to that of the light spread produced by a camera aperture. In a relatively dark environment, the pupil, like a camera aperture, dilates to admit more light, and the light then appears to spread because of higher-order aberrations, which are optical errors of the cornea (Figure ). As with the other visual phenomena, light spread does not appear on a display screen unless it is expressed artificially (Figure ).
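Bloom is usually reproduced as a post-process: isolate the values brighter than a threshold, blur them, and add the result back. The sketch below works on a single row of HDR values to stay short; the threshold, radius, and intensity are assumed values, and the box blur stands in for the smoother, Gaussian-like kernels more typically used.

```python
def bright_pass(row, threshold):
    """Keep only the energy above the threshold."""
    return [max(0.0, v - threshold) for v in row]


def box_blur(row, radius):
    """Box blur with edge clamping (a stand-in for a Gaussian kernel)."""
    n = len(row)
    out = []
    for i in range(n):
        total = 0.0
        for k in range(-radius, radius + 1):
            j = min(max(i + k, 0), n - 1)  # clamp indices at the borders
            total += row[j]
        out.append(total / (2 * radius + 1))
    return out


def bloom(row, threshold=1.0, radius=2, intensity=0.5):
    """Bright-pass, blur, and add back: the usual structure of a bloom pass."""
    glow = box_blur(bright_pass(row, threshold), radius)
    return [v + intensity * g for v, g in zip(row, glow)]


# One scanline of HDR values with a single very bright pixel (e.g. a lamp).
scanline = [0.2, 0.2, 0.2, 8.0, 0.2, 0.2, 0.2]
print([round(v, 2) for v in bloom(scanline)])
```

Only the lamp exceeds the threshold, so only its excess energy spreads into the neighboring pixels; dimmer areas are left untouched.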

5. Discussion

The present study was motivated by the question of whether “hyper-realism” can be achieved in VR. Theories concerning improved presence were examined, and conditions for guidelines on creating real-time architectural visualization content were deduced. Faithfulness to sensory information and perceptual realism, which these theories have in common, were identified as factors that improve presence. On this basis, realistic expression principles appropriate for a real-time environment were proposed.

Similar studies were analyzed, and principles suitable for a real-time environment were selected. Further factors were deduced through analysis and technical understanding of recent computer-graphics technology. Hence, five principles for realistic expression in real-time architectural content, comprising primary and secondary principles, were deduced (Figure ). These principles can be divided into items essential for real-time architectural visualization content with improved presence and optional items.

If research findings are used to improve presence and to drive continual qualitative improvement of media for VR, “hyper-reality” will come one step closer. This study may also serve as a minimum standard against arguments that low-quality products are art, arguments that use the profundity and ambiguity of art as a shield. However, we do not wish VE creation to become formulaic or rigid as a result of the realistic expression principles proposed herein. Our goal is to draw out artists’ own thinking about VE expression.

The authors acknowledge that methods deduced from the proposed principles must be revised in accordance with technological development, and that the presence of content based on these principles must be measured to verify the quantitative effects of these principles.

Additional information

Funding

Notes on contributors

Dubeom Kim

Dubeom Kim received an MS degree in Art & Technology from Chung-Ang University in 2007. He is currently pursuing a PhD degree in Art & Technology at the Graduate School of Advanced Imaging Science, Multimedia and Film, Chung-Ang University, Seoul, Korea. His research interests include architectural visualization (Archviz) and virtual environment design.

Young Ho Chai

Young Ho Chai received an M.S. degree in mechanical engineering from SUNY at Buffalo and a Ph.D. in mechanical engineering from Iowa State University in 1997. From 2006 to 2007, he was with the Louisiana Immersive Technology Enterprise at the University of Louisiana at Lafayette, U.S.A. He is currently a professor with the Graduate School of Advanced Imaging Science, Multimedia, and Film, Chung-Ang University, Seoul, Korea, where he leads the Virtual Environments Laboratory. His research interests include spatial sketching.

References

- Amato, I. (2014). When bad things happen in slow motion. Nautilus. http://nautil.us/issue/19/illusions/when-bad-things-happen-in-slow-motion

- Badni, K. (2011). The collaboration of two different working practices enabling autonomous virtual reality artwork. Digital Creativity, 22(1), 49-21. https://doi.org/10.1080/14626268.2011.548524

- Bittner, L., Mostajeran, F., Steinicke, F., Gallinat, J., & Kühn, S. (2018). Evaluation of FlowVR: A virtual reality game for improvement of depressive mood. bioRxiv, 451245. https://doi.org/10.1101/451245

- Brenton, J. A. (2007). Photorealism within Interior Architectural Images [PhD diss.]. Texas Tech University.

- Burley, B. (2012, August). Physically-based shading at Disney. In ACM SIGGRAPH 2012 Courses (pp. 1–7). https://www.semanticscholar.org/paper/Physically-Based-Shading-at-Disney-Burley/3f4b29a0cc51f1ba8baaf99ac008f3acf18d04df

- Butt, A. L., Kardong-Edgren, S., & Ellertson, A. (2018). Using game-based virtual reality with haptics for skill acquisition. Clinical Simulation in Nursing, 16, 25–32. https://doi.org/10.1016/j.ecns.2017.09.010

- Debevec, P. (2006). Image-based lighting. In ACM SIGGRAPH 2006 Courses. ACM.

- Fleming, B. (1999). Advanced 3D Photorealism Techniques. John Wiley & Sons.

- Han, H. W. (2011). A Study of Fantasy and Versimilitude in Serious Games. Journal of the Korean Society for Computer Game, 24(3), 1–9. https://www.earticle.net/Article/A154020

- Hao, X., & Varshney, A. (2004). Real-time rendering of translucent meshes. ACM Transactions on Graphics (TOG), 23(2), 120–142. https://doi.org/10.1145/990002.990004

- Heeter, C. (1992). Being There: The Subjective Experience of Presence. Presence: Teleoperators & Virtual Environments, 1(2), 262–271. https://doi.org/10.1162/pres.1992.1.2.262

- Jimenez, J., Sundstedt, V., & Gutierrez, D. (2009). Screen-space perceptual rendering of human skin. ACM Transactions on Applied Perception, 6(4), 23. https://doi.org/10.1145/1609967.1609970

- Jung, K., & Kim, S. W. (2008). Application of non-photorealistic rendering techniques for computer games. Journal of the Korean Society for Computer Game, 14, 91–96. https://www.earticle.net/Article/A89552

- Kaplanyan, A., & Dachsbacher, C. (2010, February). Cascaded Light Propagation Volumes for Real-time Indirect Illumination. Proceedings of the 2010 ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, 99–107. https://doi.org/10.1145/1730804.1730821

- Karis, B. (2013). Real Shading in Unreal Engine 4. In Physically Based Shading in Theory and Practice, SIGGRAPH 2013 Course (pp. 621–635). https://www.semanticscholar.org/paper/Real-Shading-in-Unreal-Engine-4-by-Karis/91ee695f6a64d8508817fa3c0203d4389c462536

- Ki, H. W., & Oh, K. S. (2007). Real-time hierarchical techniques for rendering of translucent materials and screen-space interpolation. Journal of Korea Game Society, 7(1), 31–42. https://www.koreascience.or.kr/article/JAKO200714364025067.page

- Kim, D. (2017). Video Codecs and Video Formats - 2017, Revised Edition. Communication Books.

- Kim, J. K. (2018). Study on the Photorealism of Digital Architectural Rendering Images. Journal of the Korea Academia-Industrial Cooperation Society, 19(2), 238–246. https://doi.org/10.5762/KAIS.2018.19.2.238

- Lee, K. M. (2004). Why Presence Occurs: Evolutionary Psychology, Media Equation, and Presence. Presence: Teleoperators & Virtual Environments, 13(4), 494–505. https://doi.org/10.1162/1054746041944830

- Lee, K. M. (2004a). IT Impact on Society and Mind for Literary Approach in USA. Korea Information Strategy Development Institute.

- Lombard, M., & Ditton, T. (1997). At the Heart of it All: The Concept of Presence. Journal of Computer-Mediated Communication, 3(2). https://doi.org/10.1111/j.1083-6101.1997.tb00072.x

- Maslow, A. H. (1968). Toward a Psychology of Being. Reinhold Publishing.

- McLuhan, M. (1964). Understanding Media: The Extensions of Man. MIT Press.

- Mori, M. (1970). The Uncanny Valley. Energy, 7(4), 33–35. https://doi.org/10.1109/MRA.2012.2192811

- Mosaker, L. (2001). Visualising historical knowledge using virtual reality technology. Digital Creativity, 12(1), 15–25. https://doi.org/10.1076/digc.12.1.15.10865

- Nam, S., Yu, H. S., & Shin, D. H. (2017). User Experience in Virtual Reality Games: The Effect of Presence on Enjoyment. International Telecommunications Policy Review, 24(3), 85–125. https://www.kci.go.kr/kciportal/ci/sereArticleSearch/ciSereArtiView.kci?sereArticleSearchBean.artiId=ART002264806

- Prince, S. (1996). True Lies: Perceptual Realism, Digital Images, and Film Theory. Film Quarterly, 49(3), 27–37. https://doi.org/10.2307/1213468

- Russell, J. (2015). Basic theory of physically based rendering. Marmoset. https://marmoset.co/posts/basic-theory-of-physically-based-rendering/

- Sheridan, T. B. (1992). Musings on Telepresence and Virtual Presence. Presence: Teleoperators & Virtual Environments, 1(1), 120–126. https://doi.org/10.1162/pres.1992.1.1.120

- Slater, M., & Usoh, M. (1993). Representations Systems, Perceptual Position, and Presence in Immersive Virtual Environments. Presence: Teleoperators & Virtual Environments, 2(3), 221–233. https://doi.org/10.1162/pres.1993.2.3.221

- Song, N. (2013). Film Directing. Communication Books.

- Steuer, J. (1992). Defining Virtual Reality: Dimensions Determining Telepresence. Journal of Communication, 42(4), 73–93. https://doi.org/10.1111/j.1460-2466.1992.tb00812.x

- Walker, J. (2014). Physically based shading in UE4. Unreal Engine. https://www.unrealengine.com/en-US/blog/physically-based-shading-in-ue4?lang=en-US

- Wang, L., Wang, W., Dorsey, J., Yang, X., Guo, B., & Shum, H. Y. (2006). Real-time rendering of plant leaves. In ACM SIGGRAPH 2006 Courses (5-es). https://doi.org/10.1145/1185657.1185725

- Witmer, B. G., & Singer, M. J. (1998). Measuring Presence in Virtual Environments: A Presence Questionnaire. Presence: Teleoperators and Virtual Environments, 7(3), 225–240. https://doi.org/10.1162/105474698565686