Abstract

This paper examines the relationship between Federal Reserve policy and the Taylor Rule, a widely used benchmark for guiding monetary policy. The study analyzes the deviation of the actual federal funds rate from the Taylor Rule across distinct structural changes, using the real-time macroeconomic data available to the Fed at the time of each interest rate decision. The research focuses on whether the policies of former Fed chair Alan Greenspan from 2003 to 2006, which have been linked to the housing bubble, deviated from the Taylor Rule. We find insufficient statistical evidence to support this claim, and a machine learning text analysis of Federal Open Market Committee (FOMC) transcripts confirms the presence of only one regime during this period. These results contribute to the literature on monetary policy and its effects on the economy, and clarify the relationship between Federal Reserve policy and the Taylor Rule.

1. Introduction

Since the end of the inflationary episode of the 1970s, the U.S. has experienced a significant reduction in the volatility of GDP growth (with the exception of the 2008 recession) and only moderate yearly inflation (with the exception of 2021 to the present). This has prompted extensive empirical research into why economic conditions have changed so dramatically. One possible explanation is that the U.S. Federal Reserve changed the decision-making process that determines the federal funds rate. Consequently, there is growing interest in modeling how this decision is made and whether the process has changed over time.

Various efforts have been made to model the Federal Reserve’s decision-making process for the federal funds rate, with the “Taylor Rule” emerging as the clear winner. Named after its creator, John Taylor, the rule is a simple equation that specifies how the federal funds rate should be set based on three variables: the equilibrium real interest rate, the deviation of real GDP from a target, and the inflation gap. The original rule from Taylor (1993) stipulates that the federal funds rate, $i_t$, should be set in response to the equilibrium real interest rate, $r^*$, the deviation of real GDP from a target, $y_t$, and the inflation gap (the difference between the observed inflation, $\pi_t$, and the target inflation rate, $\pi^*$) according to

$$i_t = \pi_t + r^* + a_\pi(\pi_t - \pi^*) + a_y\,y_t. \qquad (1)$$

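Taylor suggests $y_t = 100\,(Y_t - Y_t^*)/Y_t^*$, i.e. the percent difference between real output, $Y_t$, and trend or potential output, $Y_t^*$. Including Taylor’s suggested parameter values and targets ($r^* = 2$, $\pi^* = 2$, $a_\pi = a_y = 0.5$), the rule becomes

$$i_t = \pi_t + 2 + 0.5(\pi_t - 2) + 0.5\,y_t. \qquad (2)$$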
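As a minimal sketch, Taylor’s (1993) parameterization (an equilibrium real rate and an inflation target of 2 percent, with weights of 0.5 on the inflation gap and the output gap) can be evaluated directly, together with the deviation of the actual federal funds rate from the prescription. The function names are illustrative rather than taken from the paper, and the inputs are made-up numbers.

```python
def taylor_rule(inflation, output_gap, r_star=2.0, pi_star=2.0, a_pi=0.5, a_y=0.5):
    """Federal funds rate (percent) prescribed by Taylor's (1993) rule.

    inflation  -- observed inflation pi_t, percent
    output_gap -- percent deviation of real GDP from potential, y_t
    """
    return inflation + r_star + a_pi * (inflation - pi_star) + a_y * output_gap


def deviation(actual_rate, inflation, output_gap):
    """Actual rate minus the rule: negative means looser than the rule, positive tighter."""
    return actual_rate - taylor_rule(inflation, output_gap)


# Illustrative numbers only: 3% inflation, output 1% below potential.
print(taylor_rule(3.0, -1.0))      # 3 + 2 + 0.5*1 + 0.5*(-1) = 5.0
print(deviation(1.0, 3.0, -1.0))   # 1.0 - 5.0 = -4.0
```

A deviation series built this way is what the later sections analyze: persistent negative values correspond to a “loose” stance and positive values to a “tight” one.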

Taylor initially presented the rule at the 1992 Carnegie-Rochester Conference as an empirical regularity, but its descriptive power gradually led others, and particularly Taylor himself, to treat the formula as a policy prescription. The rule has been used to evaluate the U.S. Federal Reserve’s monetary policy over various periods. For instance, Taylor (2007, 2009) used the rule to argue that monetary policy was “too loose” from 2003 to 2006 relative to the experience of the previous few decades, and that it played a role in the formation of the housing bubble by making housing finance cheap and attractive, thereby contributing to the boom-bust cycle in housing starts. Taylor’s arguments rest on evaluating Greenspan’s adherence to a Taylor rule that uses final (revised) values of the output gap and inflation rate.

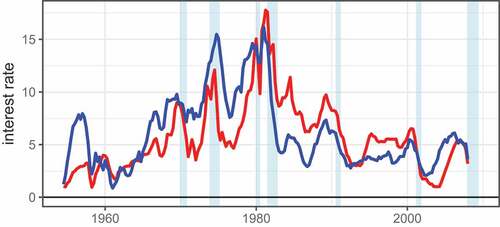

Figure 1 displays the actual federal funds rate and the rate suggested by the Taylor Rule with final values of the output gap and inflation rate. Plotting the difference between the actual federal funds rate and the Taylor Rule prescription (Figure 2) reveals the dip from 2003 to 2006 that constitutes the “loose” period of Greenspan’s tenure disputed by Taylor.

Figure 1. St. Louis Fed Taylor rule.

Figure 2. 1954–2008.

However, research in this area has been controversial because of differences in how inflation is measured. Alex et al. (2019) argue that the period from 2000 to 2007 was inconsistent with the Taylor Rule, but this result depends on their use of the real-time GDP deflator as the measure of inflation rather than the vintage core-PCE series the Federal Reserve was using at the time. The discrepancy highlights the importance of using the inflation measure policymakers actually relied on when evaluating adherence to the Taylor Rule.

Relatedly, Orphanides and Wieland (2008) conclude that policy actions from 1988 to 2007 (Greenspan’s tenure) were consistent with a stable Taylor rule. They show that many apparent deviations of the federal funds rate from the prescriptions of an outcome-based Taylor rule may instead reflect policymakers’ systematic responses to projections of the output gap and inflation, rather than to recent economic data. Orphanides (2001, 2002) emphasizes the necessity of evaluating monetary policy with rules based on real-time data: federal funds rate policy should be judged on the information available to policymakers at the time of the decision, not on ex-post revised data that become available only much later. In fact, Mehra and Sawhney (2010) find that applying a forward-looking Taylor Rule with real-time inflation data closes much of the gap between the federal funds rate and the Taylor Rule recommendation in 2003–2006.

Despite the existing research, questions remain about the consistency of the Taylor Rule and the Federal Reserve’s adherence to it. This paper adds to the literature on two fronts. First, we perform a data-based determination of regime changes in U.S. monetary policy using the methods of Alex et al. (2019), but adopting the insight of Mehra and Sawhney (2010) that real-time core PCE, rather than CPI or the GDP deflator, must be used as the measure of inflation in the Taylor Rule after 2004, reflecting the series the Federal Reserve actually used at the time. We employ a standard, non-forward-looking version of Taylor’s (1993, 1999) decision model of the federal funds rate, namely the version of the Taylor Rule suggested by the St. Louis Federal Reserve (Equation (2)), where the potential GDP series comes from the Congressional Budget Office (CBO) model of U.S. potential GDP. Second, we corroborate our more standard time-series regime-switching analysis with a machine learning text analysis that determines regime changes in monetary policy. In this methodology, the monetary policy regime of a particular FOMC meeting is determined by the cluster of related transcripts to which its transcript belongs. This provides an independent method of identifying policy regimes. However, it can identify monetary policy regimes only over a more limited period, since machine-readable FOMC meeting transcripts are not available before 1979. Because full meeting transcripts are available only for a limited span, Greenspan’s era can be compared only with Volcker’s chairmanship.

The rest of the paper is organized as follows. Section 2 discusses the literature on previous regime identification as well as on the application of machine learning techniques on FOMC transcripts. Section 3 reviews the Taylor Rule and the construction of deviation series with the data available to the Fed at the time of their decisions. Section 4 discusses the empirical regime detection methodologies, Section 5 presents the empirical results, and Section 6 concludes.

2. Previous literature

Regime changes in U.S. monetary policy have been studied extensively using a variety of methodologies, each with its own strengths and limitations. Table 1 summarizes the most common approaches in the literature. Early approaches, such as the binary indicator variable method proposed by Hamilton (1989), were limited to two regimes and did not account for changes in the economy over time. Sims (1992) proposes a more general approach to identifying and estimating changes in the parameters of a dynamic model. Whereas Hamilton’s (1989) Markov-switching model assumes a fixed number of regimes, Sims’s (1992) VAR approach does not require the number of regimes to be specified in advance; instead, it can be determined endogenously from the data, making the approach more data-driven. However, these models relied on assumptions about the structure of the economy and faced challenges in identifying the relevant shocks. To address these limitations, researchers such as Fair (2001) and Judd and Rudebusch (1998) modified the SVAR model to allow for more flexible identification schemes and better incorporation of economic theory.

Table 1. Summary of literature review on identifying and analyzing monetary policy regimes

A typical method is to choose regime dates based on known features and history of the data and then use tests of parameter constancy, e.g., Chow tests, to justify the chosen dates. However, as Hansen (2001) observes, if the breakpoints are not known in advance, the chi-squared critical values for the Chow test are inappropriate. Using known features of the data (e.g., the Volcker policy experiment of 1979–82) to select breakpoints makes the candidate break dates dependent on the data themselves, leading to incorrect inferences about their significance. Furthermore, not all of the parameters or targets necessarily change on the same date. Fitting the policy parameters on the output and inflation gaps, $a_y$ and $a_\pi$ in Equation (1), with an OLS model such as

$$i_t - \pi_t = \beta_0 + a_\pi(\pi_t - \pi^*) + a_y\,y_t + \varepsilon_t \qquad (3)$$

provides unreliable parameter estimates when a regime contains few observations, as is the case with the potential Volcker policy experiment.
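The OLS approach can be sketched with the standard library alone. This is a hypothetical illustration, not the authors’ code: `ols` solves the normal equations by Gauss–Jordan elimination, and `fit_taylor_regression` regresses $i_t - \pi_t$ on a constant, the inflation gap $\pi_t - \pi^*$ (assuming $\pi^* = 2$) and the output gap $y_t$. On synthetic data generated exactly from Taylor’s parameters it recovers an intercept of 2 and slopes of 0.5; with real data and only a handful of observations per regime, the same estimator becomes noisy, which is the problem described above.

```python
def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y.

    X is a list of rows (one list of regressors per observation), y a list of floats.
    Gauss-Jordan elimination with partial pivoting; adequate for a few regressors.
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    # The first k columns of A are now diagonal.
    return [A[i][k] / A[i][i] for i in range(k)]


def fit_taylor_regression(rate, inflation, output_gap, pi_star=2.0):
    """OLS of (i_t - pi_t) on [1, pi_t - pi_star, y_t]; returns [intercept, a_pi, a_y]."""
    X = [[1.0, p - pi_star, g] for p, g in zip(inflation, output_gap)]
    y = [i - p for i, p in zip(rate, inflation)]
    return ols(X, y)


# Synthetic check: rates generated exactly from Taylor's (1993) parameters.
infl = [1.0, 2.5, 4.0, 3.0, 6.0, 2.0]
gap = [-2.0, 0.5, 1.0, -1.0, -3.0, 2.0]
rate = [p + 2 + 0.5 * (p - 2) + 0.5 * g for p, g in zip(infl, gap)]
b0, a_pi, a_y = fit_taylor_regression(rate, infl, gap)
print(round(b0, 6), round(a_pi, 6), round(a_y, 6))
```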

Boivin (2006) attempts to address some of these issues with a time-varying parameter model in which the policy parameters follow driftless random walks. This is the Kalman filter model of Cooley and Prescott (1976), in which all parameters can be estimated jointly by maximum likelihood. However, when the variance of the innovations to the policy parameters is small, the parameters can change only slowly over time, and policy regime shifts may not be visible. Boivin (2006) deals with this problem in an ad hoc manner but still fails to identify discrete regimes that align with the terms of particular Federal Reserve Chairs. He finds only a gradual shift in the Taylor Rule policy parameters until around 1982, the start of the Great Moderation.
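Why a small innovation variance hides discrete shifts can be seen in a scalar Kalman filter. This sketch is a simplification, not Boivin’s specification: it has a single time-varying coefficient $\beta_t$ in $y_t = x_t\beta_t + e_t$ with $\beta_t = \beta_{t-1} + w_t$, treats the noise variances `q` and `r` as known rather than estimating them by maximum likelihood, and starts from a diffuse prior variance.

```python
def tvp_kalman_filter(y, x, q, r, beta0=0.0, p0=1e6):
    """Filtered estimates of beta_t in the time-varying parameter model

        y_t = x_t * beta_t + e_t,     e_t ~ N(0, r)
        beta_t = beta_{t-1} + w_t,    w_t ~ N(0, q)   (driftless random walk)

    q and r are taken as known; p0 is a large (diffuse) initial variance.
    """
    beta, p = beta0, p0
    path = []
    for yt, xt in zip(y, x):
        p = p + q                      # predict: random walk keeps the mean, adds variance q
        f = xt * xt * p + r            # variance of the one-step forecast error
        gain = p * xt / f              # Kalman gain
        beta = beta + gain * (yt - xt * beta)
        p = (1.0 - gain * xt) * p      # updated variance of beta_t
        path.append(beta)
    return path


# Noise-free data whose true coefficient jumps from 0.5 to 1.5 at t = 50.
x = [1.0 + (t % 3) for t in range(100)]
true_beta = [0.5 if t < 50 else 1.5 for t in range(100)]
y = [xt * bt for xt, bt in zip(x, true_beta)]

slow = tvp_kalman_filter(y, x, q=1e-4, r=0.01)   # small parameter-innovation variance
fast = tvp_kalman_filter(y, x, q=0.1, r=0.01)    # large parameter-innovation variance
print(round(slow[52], 3), round(fast[52], 3))
```

With the small `q`, the estimate moves toward the new value over many periods, so a one-time jump looks like a gradual drift; with the larger `q`, the filter tracks the jump almost immediately but would also react more strongly to noise in real data.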

Timothy and Sargent (2005) use a Bayesian Markov-switching VAR model to identify changes in the conduct of monetary policy and their effects on key macroeconomic variables. Their model allows for different regimes, each with its own parameters and error variances, to capture the possibility that the relationship between policy and macroeconomic outcomes changes over time. Sims and Zha (2006) improve upon earlier studies with an approach to modeling regime-switching dynamics that allows for continuous and endogenous shifts in the behavior of both policymakers and the economy. Murray et al. (2015) use Markov-switching models to identify regimes from 1965 onward and find that the Taylor parameters are mostly consistent, except in 1973–1974 and 1980–1985. Alba and Wang (2017) also identify monetary regimes between 1973 and 2014 using a k-state Markov regime-switching model; they find 2001Q2 to 2005Q4 to be mostly consistent with the Taylor Rule (a “low discretionary regime”) and 2006Q1 to 2007Q4 to be fully consistent with it, broadly in line with other findings despite their use of the GDP deflator instead of the CPI and, after July 2004, core PCE. These methods allow for more flexible modeling of uncertainty and can produce more accurate parameter estimates, but they require specifying prior distributions for the model parameters.

The work closest to ours, Alex et al. (2019), uses the Bai and Perron (2003a, 2003b) structural change model to identify regimes and fits OLS regressions similar to Equation (3) for each regime to check for significant deviations from the expected parameters on the inflation and output gaps. However, their conclusion that the 2000–2007 period had significantly different parameters from the standard Taylor rule depends on using the GDP deflator as the measure of inflation rather than real-time core PCE, as other studies have shown.

Machine learning methods, particularly text analysis of central bank communication, have recently become increasingly common in the study of monetary policy. Hansen and McMahon (2016) use topic modeling to analyze the effect of forward guidance on macroeconomic aggregates. Shapiro and Wilson (2022) use sentiment analysis of FOMC transcripts to estimate the central bank’s policy objectives. They find that the FOMC’s implicit inflation target was roughly 1.5 percent, significantly below the assumed value of 2 percent. Handlan (2021) uses neural networks for text analysis of FOMC statements to generate a “monetary policy shock” series and finds that the wording of the statements accounted for more of the variation in federal funds futures (FFF) prices than announced changes in the target federal funds rate. She also finds that the estimated impact of forward guidance on real interest rates is twice as large with these text-based shock series as with other measures, such as changes in FFF prices. To our knowledge, ours is the first paper to identify Federal Reserve decision-framework regimes using machine learning methods.

3. Data

The federal funds rate, inflation, unemployment, and output time series come from the Federal Reserve Bank of St. Louis’ FRED database. The potential output series in the initial Taylor Rule comes from the Congressional Budget Office and is imported from the St. Louis Federal Reserve. The St. Louis Federal Reserve Taylor Rule series is quarterly and runs for 54 years, from 1954:Q3 to 2008:Q4. For the real-time Taylor Rule we construct, the Federal Reserve Bank of Philadelphia’s Real-Time Data Set is available only from 1965 onward, limiting our real-time Taylor Rule series to 1965–2008. For the text clustering portion of the paper, we obtain FOMC transcripts from the website of the Board of Governors of the Federal Reserve System; transcripts are available only from 1979 onward (Board of Governors of the Federal Reserve System, 2021).

3.1. Real-time Taylor rule

The Taylor Rule assumes that policymakers know, and can agree on, the size of the output gap. However, measuring the output gap is very difficult and FOMC members typically have different judgments. In addition, since the FOMC meets eight times per year, assessing the Taylor Rule consistency of the FOMC using quarterly data could be misleading. It is fairer to assess the consistency of the federal funds rate with Taylor Rule using monthly data that was available to the committee at the time of their meeting. Instead of attempting to interpolate quarterly output and potential output data with a method similar to Sims (Citation1980), we choose to approximate the output gap using Okun’s law,

EquationEquation 4(4)

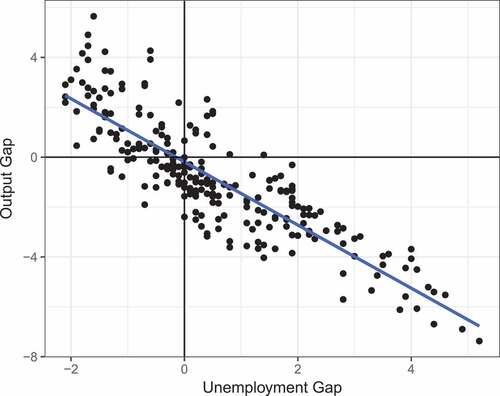

(4) is the gap version of Okun’s “rule of thumb” as presented in Abel et al. (Citation2005). For the period of 1954–2008, the slope of the line is −1.26 (Figure ). This suggests that the Taylor Rule on a monthly frequency is
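A minimal sketch of this substitution, assuming the reported slope comes from an OLS regression of the output gap on the unemployment gap with an intercept: `okun_slope` estimates that slope, and `monthly_taylor_rule` plugs the implied output gap into Taylor’s (1993) rule. Function names and defaults are illustrative; −1.26 is the slope reported in the text.

```python
def okun_slope(output_gap, unemployment_gap):
    """OLS slope of the output gap on the unemployment gap, with an intercept."""
    n = len(output_gap)
    mean_u = sum(unemployment_gap) / n
    mean_y = sum(output_gap) / n
    sxy = sum((u - mean_u) * (g - mean_y) for u, g in zip(unemployment_gap, output_gap))
    sxx = sum((u - mean_u) ** 2 for u in unemployment_gap)
    return sxy / sxx


def monthly_taylor_rule(inflation, unemployment, natural_rate, okun=-1.26,
                        r_star=2.0, pi_star=2.0, a_pi=0.5, a_y=0.5):
    """Taylor rule with the output gap proxied by okun * (u_t - u*_t)."""
    implied_gap = okun * (unemployment - natural_rate)
    return inflation + r_star + a_pi * (inflation - pi_star) + a_y * implied_gap


# Data lying exactly on the rule of thumb y = -2 (u - u*) give a slope of -2.
u_gap = [-1.0, -0.5, 0.0, 0.5, 1.0, 2.0]
y_gap = [-2.0 * u for u in u_gap]
print(okun_slope(y_gap, u_gap))
# 2% inflation, unemployment one point above its natural rate:
print(monthly_taylor_rule(2.0, 6.0, 5.0))   # 4 + 0.5 * (-1.26), i.e. about 3.37
```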

Figure 3. Gap version of Okun’s law 1954–2008.

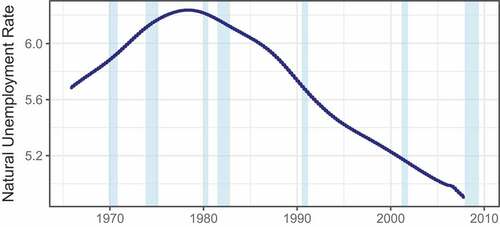

The natural rate of unemployment, $u_t^*$, from the U.S. Congressional Budget Office must still be interpolated from quarterly to monthly frequency, producing the series shown in Figure 4. However, this version of the Taylor rule has the advantage that it can use the values of inflation ($\pi_t$) and unemployment ($u_t$) that were the estimates available at the time of each FOMC meeting, rather than the revised series. These data are available from the Federal Reserve Bank of Philadelphia’s Real-Time Data Set from 1965 onward. Denoting these real-time estimates by $\pi_t^{RT}$ and $u_t^{RT}$, the final version of the “real-time” Taylor rule is

$$i_t = \pi_t^{RT} + 2 + 0.5(\pi_t^{RT} - 2) - 0.63\,(u_t^{RT} - u_t^*). \qquad (6)$$
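The quarterly-to-monthly step for the natural rate can be sketched as linear interpolation. The text does not state which interpolation method was used, so linear interpolation, and dating each quarterly value at the first month of its quarter, are assumptions of this sketch; the input values are hypothetical.

```python
def quarterly_to_monthly(values):
    """Linearly interpolate quarterly observations to monthly frequency.

    Each quarterly value is dated at the first month of its quarter (an assumption),
    so n quarterly points yield 3 * (n - 1) + 1 monthly points.
    """
    monthly = []
    for start, end in zip(values, values[1:]):
        for step in range(3):
            monthly.append(start + (end - start) * step / 3)
    monthly.append(values[-1])
    return monthly


natural_rate_q = [5.0, 5.3, 5.6]     # hypothetical quarterly natural rates (percent)
natural_rate_m = quarterly_to_monthly(natural_rate_q)
print([round(v, 2) for v in natural_rate_m])   # [5.0, 5.1, 5.2, 5.3, 5.4, 5.5, 5.6]
```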

Figure 4. Monthly natural rate of unemployment.

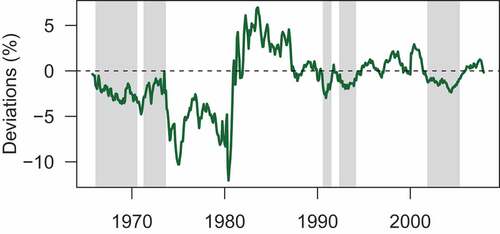

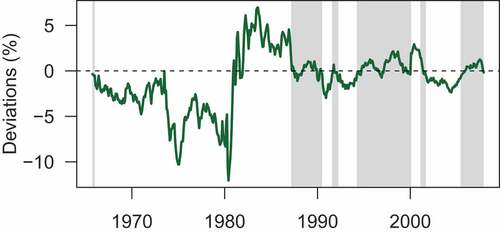

Figure 5 shows the difference between the monthly average federal funds rate and the rate implied by the Taylor rule in Equation (6). The series has 507 monthly observations from 1965 to 2008. The Taylor rule residual series is still biased (mean = −1.25 percentage points): on average, the federal funds rate was below the rate the Taylor rule suggests (i.e. a bias toward more permissive monetary policy). The “real-time” series is much closer to being stationary than the final-data series, but it is still not consistent with a single Taylor rule over the entire period.

Figure 5. Real-time deviation of the federal funds rate from the Taylor rule, 1965–2008.

In February 2000, the FOMC replaced the CPI with the personal consumption expenditures (PCE) deflator as its preferred measure of inflation. From July 2004 onward, the Fed targeted the core-PCE price index, which excludes food and energy prices. As Mehra and Sawhney (2010) point out, these adjustments remove much of the apparent Greenspan deviation from the Taylor Rule from 2003 to 2006.
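One way to encode these changes when building the real-time inflation input is to select the series by meeting date. This is a hypothetical helper: the series labels and the `vintages` layout are placeholders rather than actual data-set identifiers, while the cut-off dates are the ones given in the text.

```python
from datetime import date

PCE_SWITCH = date(2000, 2, 1)        # preferred measure moves from CPI to PCE
CORE_PCE_SWITCH = date(2004, 7, 1)   # core PCE (excluding food and energy) from July 2004


def inflation_measure(meeting_date):
    """Label of the inflation series treated as the FOMC's preferred measure on a date."""
    if meeting_date >= CORE_PCE_SWITCH:
        return "core_pce"
    if meeting_date >= PCE_SWITCH:
        return "pce"
    return "cpi"


def realtime_inflation(meeting_date, vintages):
    """vintages maps series label -> reading available at that meeting (hypothetical layout)."""
    return vintages[inflation_measure(meeting_date)]


print(inflation_measure(date(1999, 12, 21)))   # cpi
print(inflation_measure(date(2003, 6, 25)))    # pce
print(inflation_measure(date(2005, 6, 30)))    # core_pce
```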

4. Empirical methodology

The Tukey Honest Significant Difference (HSD) test is a single-step multiple comparison procedure that determines simultaneously whether sample means differ significantly from each other. The test assumes that observations are independent within and among groups and that the within-group variance is homogeneous across groups. Since we first wish to test whether Greenspan’s tenure is distinguishable from those of the other Fed Chairmen on an aggregate basis, this is a suitable procedure to perform before attempting to identify regimes with an agnostic statistical procedure.
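The statistic behind the test can be sketched directly. This computes the Tukey–Kramer form (which allows unequal group sizes, as with Chairs of different tenure lengths) for every pair of groups; it stops short of the p-value, which requires the studentized range distribution. An off-the-shelf implementation is `pairwise_tukeyhsd` in statsmodels. The group labels and numbers below are hypothetical.

```python
from itertools import combinations
from math import sqrt


def tukey_kramer_q(groups):
    """Pairwise Tukey-Kramer studentized-range statistics.

    groups maps a label (e.g. a Fed chair) to that group's deviations.
    Returns {(a, b): (mean_a - mean_b, q)}. A pair differs significantly at level
    alpha if q exceeds the studentized-range critical value for k groups and
    N - k degrees of freedom (not computed here).
    """
    means = {g: sum(v) / len(v) for g, v in groups.items()}
    n_total = sum(len(v) for v in groups.values())
    k = len(groups)
    ss_within = sum((x - means[g]) ** 2 for g, v in groups.items() for x in v)
    mse = ss_within / (n_total - k)          # pooled within-group variance
    result = {}
    for a, b in combinations(groups, 2):
        diff = means[a] - means[b]
        se = sqrt(mse / 2 * (1 / len(groups[a]) + 1 / len(groups[b])))
        result[(a, b)] = (diff, abs(diff) / se)
    return result


# Hypothetical deviations for three groups.
demo = {"A": [1.0, 2.0, 3.0], "B": [4.0, 5.0, 6.0], "C": [1.0, 2.0, 3.0]}
res = tukey_kramer_q(demo)
for pair, (diff, q) in res.items():
    print(pair, round(diff, 3), round(q, 3))
```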

It is easiest, however, to judge the breakpoints using the multiple mean model, even though there is autocorrelation in the federal funds rate–Taylor rule difference series. It is also useful to think of FOMC monetary policy as having a zero-mean error around a regime-specific deviation from the Taylor rule. Under this assumption, and following the methodology of Bai and Perron (1998, 2003a, 2003b), we fit multiple mean equations to the series and find the dates that minimize the residual sum of squares for a given number of breakpoints. Based on the Schwarz Information Criterion (SIC), the optimal number of breakpoints is three.
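The search over break dates can be sketched as a dynamic program over segment end points, in the spirit of Bai and Perron. This is a simplified illustration, not the authors’ code or a full Bai–Perron implementation (no sup-F tests or confidence intervals; R’s `strucchange::breakpoints` provides those). The minimum segment length `min_size` plays the role of the trimming parameter, and the SIC variant used here, $T\ln(\mathrm{SSR}/T) + p\ln T$ with $p$ counting regime means and break dates, is an assumption.

```python
from math import log


def optimal_breaks(y, m, min_size):
    """Break dates minimizing the total SSR of a mean-shift model with m breaks."""
    T = len(y)
    cum, cum2 = [0.0], [0.0]
    for v in y:
        cum.append(cum[-1] + v)
        cum2.append(cum2[-1] + v * v)

    def ssr(i, j):
        # SSR of y[i:j] around its own mean, from cumulative sums.
        s = cum[j] - cum[i]
        return (cum2[j] - cum2[i]) - s * s / (j - i)

    inf = float("inf")
    best = [[inf] * (T + 1) for _ in range(m + 1)]   # best[k][j]: y[:j] in k + 1 segments
    last = [[0] * (T + 1) for _ in range(m + 1)]     # start index of the final segment
    for j in range(min_size, T + 1):
        best[0][j] = ssr(0, j)
    for k in range(1, m + 1):
        for j in range((k + 1) * min_size, T + 1):
            for b in range(k * min_size, j - min_size + 1):
                cand = best[k - 1][b] + ssr(b, j)
                if cand < best[k][j]:
                    best[k][j], last[k][j] = cand, b
    breaks, j = [], T
    for k in range(m, 0, -1):          # backtrack from the end of the sample
        j = last[k][j]
        breaks.append(j)
    return sorted(breaks), best[m][T]


def select_breaks_sic(y, max_breaks, min_size):
    """Choose the number of breaks by the Schwarz information criterion."""
    T = len(y)
    candidates = []
    for m in range(max_breaks + 1):
        breaks, total_ssr = optimal_breaks(y, m, min_size)
        sic = T * log(total_ssr / T) + (2 * m + 1) * log(T)   # m + 1 means, m dates
        candidates.append((sic, m, breaks))
    _, m, breaks = min(candidates)
    return m, breaks


# Synthetic deviations: regimes with means 0, 3 and 1, plus a small alternating wiggle.
y = [mu + 0.1 * (-1) ** t for mu in (0.0, 3.0, 1.0) for t in range(20)]
print(select_breaks_sic(y, max_breaks=4, min_size=5))
```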

Another useful way to find hidden regimes in monetary policy is the Markov switching model of Hamilton (1989), one of the most popular nonlinear time series models in the literature. This model involves multiple structures (equations) that characterize the behavior of the time series in different regimes. By permitting switching between these structures, the model can capture more complex dynamic patterns. A distinctive feature of the Markov switching model is that the switching mechanism is controlled by an unobservable state variable that follows a first-order Markov chain. In particular, the Markov property implies that the current value of the state variable depends only on its immediate past value. As such, a structure may prevail for a random period and is replaced by another structure when a switch takes place. This is in sharp contrast with the random switching model of Quandt (1972), in which switching events are independent over time. The original Markov switching model focuses on the mean behavior of variables. This model and its variants have been widely applied to analyze economic and financial time series (see, e.g., Diebold et al. (1994); Engel (1994); Engel and Hamilton (1990); Hamilton (1988, 1989); Filardo (1994); Garcia and Perron (1996); Ghysels (1994); Goodwin (1993); C.J. Kim and Nelson (1998); M.J. Kim and Yoo (1995); Lam (1990); Sola and Driffill (1994); Schaller and Van Norden (1997); Athanasios and Williams (2003); Westelius (2007)).

In traditional Markov switching models, the transition probabilities are exogenous and are usually estimated by maximum likelihood. However, Yoosoon et al. (2017) extend the regime-switching methodology by allowing the Markov chain that determines regimes to be endogenous, so that the switching probabilities depend on the state of the underlying process. Similarly, Svensson (2017) uses a regime-switching model with an endogenous Markov chain to analyze the effectiveness of setting interest rates based on forecasts of future inflation and output rather than on a specific rule or model.

Let $s_t$ denote the unobservable state variable. The switching model for the Taylor Rule deviation ($d_t$) series we consider involves three regimes,

$$d_t = \begin{cases} \mu_0 + \sigma_0\,\varepsilon_t, & s_t = 0,\\ \mu_1 + \sigma_1\,\varepsilon_t, & s_t = 1,\\ \mu_2 + \sigma_2\,\varepsilon_t, & s_t = 2, \end{cases} \qquad \varepsilon_t \sim \text{i.i.d. } N(0,1). \qquad (7)$$

This model can be thought of as representing three hidden states of monetary policy relative to the Taylor Rule, which might be labeled “tight”, “loose”, and “other”. Because the formulation allows different conditional variances across regimes, it is a less restrictive version of the methodology of Bai and Perron.

When the $s_t$ are independent random variables (independent Bernoulli variables in the two-regime case), the model reduces to the random switching model of Quandt (1972). In the random switching model, the realization of $s_t$ is independent of previous and future states. This would imply that the deviation from the Taylor rule falls into one of several regimes at random, which is inconsistent with the hidden state reflecting, for example, the particular Fed chairman, who is unlikely to change policy stance randomly from month to month. Suppose instead that $s_t$ follows a first-order Markov chain with transition matrix

$$P = \begin{bmatrix} p_{00} & p_{01} & p_{02} \\ p_{10} & p_{11} & p_{12} \\ p_{20} & p_{21} & p_{22} \end{bmatrix},$$

where $p_{ij} = \Pr(s_t = j \mid s_{t-1} = i)$, $i, j = 0, 1, 2$, denotes the probability that $s_t = j$ given that $s_{t-1} = i$, so that the transition probabilities satisfy $\sum_{j=0}^{2} p_{ij} = 1$ for each $i$. The transition probabilities determine the persistence of each regime.
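Given the regime means, standard deviations, and transition matrix, the filtered regime probabilities and the log-likelihood of a three-regime model of this kind come from the Hamilton filter, sketched below. Maximizing the returned log-likelihood over the parameters (numerically or by EM) yields maximum likelihood estimates; statsmodels’ `MarkovRegression` with `k_regimes=3` and `switching_variance=True` is one off-the-shelf route. The uniform starting probabilities and all parameter values here are assumptions for illustration.

```python
from math import exp, log, pi, sqrt


def normal_pdf(x, mu, sigma):
    return exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * sqrt(2.0 * pi))


def hamilton_filter(d, mu, sigma, P, init=None):
    """Filtered probabilities Pr(s_t = j | d_1, ..., d_t) and the log-likelihood.

    mu, sigma: regime means and standard deviations; P[i][j] = Pr(s_t = j | s_{t-1} = i).
    init: probabilities for s_0 (uniform if omitted, an assumption of this sketch).
    """
    k = len(mu)
    prob = list(init) if init is not None else [1.0 / k] * k
    filtered, loglik = [], 0.0
    for x in d:
        # Predict: push last period's probabilities through the Markov chain.
        pred = [sum(prob[i] * P[i][j] for i in range(k)) for j in range(k)]
        # Update: weight each regime by the density of the new observation.
        joint = [pred[j] * normal_pdf(x, mu[j], sigma[j]) for j in range(k)]
        total = sum(joint)
        loglik += log(total)
        prob = [v / total for v in joint]
        filtered.append(prob)
    return filtered, loglik


# Hypothetical parameters: "loose" (0), "tight" (1) and a high-variance regime (2).
mu, sigma = [-1.0, 1.0, 0.0], [0.5, 0.5, 3.0]
P = [[0.95, 0.025, 0.025], [0.025, 0.95, 0.025], [0.025, 0.025, 0.95]]
d = [-1.0] * 10 + [1.0] * 10
probs, ll = hamilton_filter(d, mu, sigma, P)
print([max(range(3), key=lambda j: p[j]) for p in probs])
```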

4.1. Text analytics

We also apply a machine learning procedure, text clustering, to the FOMC transcripts and find evidence that Greenspan’s tenure after 2000 was largely consistent with his tenure before 2000. In particular, we apply k-means clustering to the FOMC transcripts to identify different monetary policy regimes. The idea of clustering is to use machine learning and natural language processing techniques to group a set of texts so that texts in the same cluster are more similar to each other than to texts in other clusters. The k-means algorithm takes a set of FOMC transcripts as input and returns the detected clusters, each of which we interpret as a distinct policy regime.

Before describing the clustering technique, we briefly discuss text pre-processing. To apply machine learning techniques, we need to convert the transcripts into numerical vectors. After removing numbers, punctuation, symbols, and extra white space, we split the texts into single words and two-word phrases. We also remove the names of the FOMC members present at each meeting so that the clustering is not driven solely by who attended. We then count the frequency of each single word and two-word phrase within a transcript and normalize the counts by the length of the document. As a final step, we down-weight terms that occur in the majority of documents, using the tf-idf scheme of Salton and McGill (1983) to obtain the weight of each term (see Footnote 1). Each FOMC transcript is thus represented by a vector of weighted, normalized frequencies of single words and two-word phrases, which serves as the input to the clustering exercise.
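The pre-processing steps can be sketched with the standard library. Salton and McGill discuss several weightings, and the exact variant used in the paper is not stated here; this sketch assumes relative term frequency times $\log(N/\mathrm{df})$, under which a term present in every document gets weight zero. The member-name list and the two toy documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text, member_names=()):
    """Lowercase, keep alphabetic tokens (drops numbers, punctuation, symbols), drop names."""
    names = {n.lower() for n in member_names}
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in names]


def unigrams_and_bigrams(tokens):
    # Bigrams are formed after name removal, so a phrase may span a removed name.
    return tokens + [f"{a} {b}" for a, b in zip(tokens, tokens[1:])]


def tfidf(docs, member_names=()):
    """One dict of term -> tf-idf weight per document.

    tf  = count / total number of terms in the document
    idf = log(N / number of documents containing the term)
    """
    counts = [Counter(unigrams_and_bigrams(tokenize(d, member_names))) for d in docs]
    n_docs = len(docs)
    doc_freq = Counter(term for c in counts for term in c)
    vectors = []
    for c in counts:
        length = sum(c.values())
        vectors.append({t: (f / length) * math.log(n_docs / doc_freq[t]) for t, f in c.items()})
    return vectors


docs = ["Inflation pressures remain. Greenspan said inflation.",
        "Unemployment rose; inflation steady in 2003."]
vecs = tfidf(docs, member_names=["Greenspan"])
print(vecs[0]["inflation"], round(vecs[1]["unemployment rose"], 4))
```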

The k-means clustering algorithm requires the user to specify the number of clusters before grouping the FOMC transcripts into them. We use the widely used “elbow method” to determine the number of clusters. Appendix A.2 discusses the k-means clustering algorithm in detail.

4.2. Optimal number of clusters

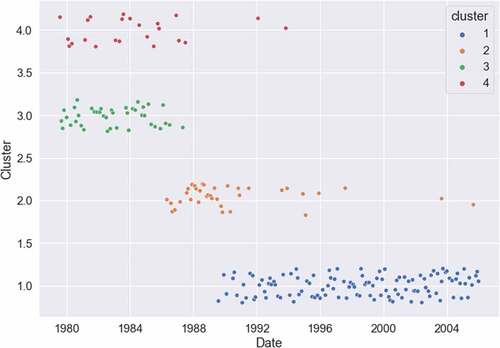

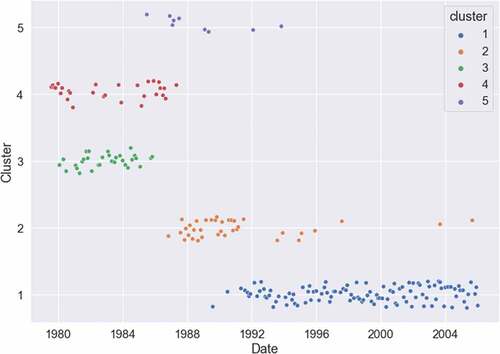

To apply the “elbow method” of determining the optimal number of clusters, the within-group sum of squares is plotted against the number of clusters. If the plot resembles an arm, the “elbow” of the arm, i.e. the inflection point of the curve, indicates the appropriate number of clusters. At this point the marginal benefit (in terms of a lower sum of squared errors) of adding clusters begins to diminish, so it balances model parsimony against fit to the data. A figure in Appendix A.3 plots the within-group sum of squares against the number of clusters. The elbow method suggests that the appropriate number of clusters is three or four; beyond four clusters, the within-group sum of squares does not fall much. We present results for FOMC transcripts split into 2, 3, 4, and 5 regimes, and the results turn out to be quite consistent across all four specifications.
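The elbow computation amounts to running k-means for several values of k and recording the within-group sum of squares. A minimal sketch using Lloyd’s algorithm with a deterministic farthest-point initialization (an assumption; common implementations use k-means++ with random restarts). It expects dense vectors, so tf-idf dictionaries would first be mapped onto a shared vocabulary; the toy 2-D points are hypothetical.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centers):
    """Index of the nearest center for every point."""
    return [min(range(len(centers)), key=lambda j: sq_dist(p, centers[j])) for p in points]


def kmeans(points, k, iters=100):
    """Lloyd's k-means with farthest-point initialization; returns (labels, within-SS)."""
    centers = [list(points[0])]
    while len(centers) < k:
        centers.append(list(max(points, key=lambda p: min(sq_dist(p, c) for c in centers))))
    for _ in range(iters):
        labels = assign(points, centers)
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Keep the old center if a cluster empties out.
            new_centers.append([sum(col) / len(members) for col in zip(*members)]
                               if members else centers[j])
        if new_centers == centers:
            break
        centers = new_centers
    labels = assign(points, centers)
    wss = sum(sq_dist(p, centers[lab]) for p, lab in zip(points, labels))
    return labels, wss


# Toy 2-D data with three well-separated groups; the elbow should appear at k = 3.
pts = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10], [20, 0], [20, 1], [21, 0]]
elbow = [kmeans(pts, k)[1] for k in range(1, 6)]
print([round(w, 2) for w in elbow])
```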

5. Results

Tables 2 and 3 show that, regardless of which form of the Taylor Rule is used, Alan Greenspan’s tenure as Federal Reserve Chair was significantly closer to the Taylor Rule in aggregate than that of any other Chairman except Bernanke. The absolute value of Greenspan’s mean deviation is the smallest, and the difference between Greenspan’s mean deviation from the Taylor Rule and that of every other Chairman except Bernanke is statistically significant.

Table 2. Tukey HSD: St. Louis rule

Table 3. Tukey HSD: Bernanke rule

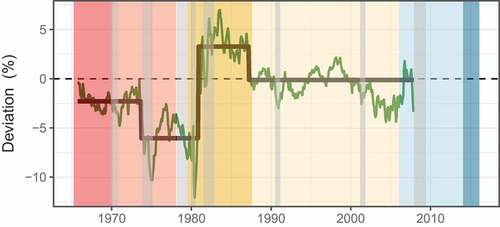

Figure 6 displays the federal funds rate–Taylor Rule difference series with separate means for each regime. The first regime identified by the Bai and Perron procedure runs from the start of the series until 1973 and covers the chairmanships of William Martin (1951–1970) and Arthur Burns (1970–1978). This was a period of very loose monetary policy, perhaps influenced by President Nixon’s threats to take away the Federal Reserve’s independence. Burns’ monetary policy under the Ford presidency after the 1973 breakpoint was even looser and less consistent with the Taylor rule.

Figure 6. Fitted regimes with Fed Chairman tenure periods, 1965–2008.

The second breakpoint, in 1980, is somewhat expected and agrees with the evidence of drifting output and inflation gap parameters in Boivin (2006). During the chairmanship of Paul Volcker (1979–1987), Taylor rule consistency breaks in November 1980, when policy shifts to a regime of tight monetary policy. This result is not surprising, given that the Federal Reserve targeted non-borrowed reserves rather than the federal funds rate during 1979–1982. The high interest rate period continued until the end of Volcker’s tenure in 1987, as the Fed continued to battle stagflation by first taming inflation (an emphasis on the inflation gap over the output gap in the standard Taylor rule).

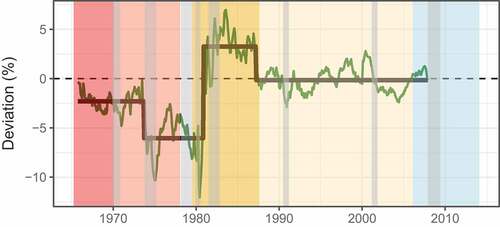

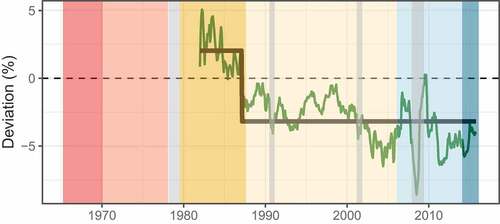

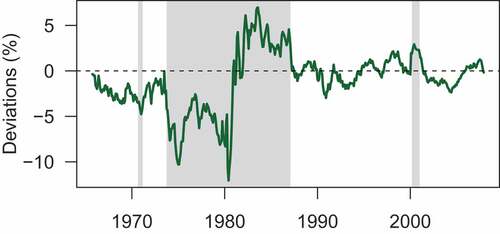

Alan Greenspan’s tenure from 1987 to 2006 was remarkably consistent with the Taylor Rule, regardless of whether the 2000 shift from CPI to PCE inflation is reflected in the Taylor Rule (Figure 7). In either case, the conditional mean deviation for Greenspan’s tenure is approximately zero, as Figures 6 and 7 indicate. However, as Figure 8 shows, when his inflation gap is calculated using the University of Michigan 1-year inflation expectations survey, his conditional mean policy stance is consistently “loose”, suggesting that Greenspan did not respond to inflation expectations in his decision-making. Greenspan’s tenure remains quite distinct from Volcker’s even when inflation expectations are used in the Taylor rule.

Figure 7. Fitted regimes using PCE rather than CPI inflation target from 2000 to 2008.

Figure 8. Fitted regimes using University of Michigan 1-year inflation expectations for the inflation gap rather than CPI or PCE, 1982–2015.

The less restrictive Markov-switching regime structure reveals periods of greater and lesser adherence to the Taylor Rule. In some periods, Greenspan is indeed classified in the “loose” Regime 0 (conditional mean deviation below zero), as shown in Figure 9, while the remainder of his tenure falls in the “tight” Regime 1 (conditional mean above zero), as shown in Figure 10. However, the conditional standard deviations of the “tight” and “loose” regimes are similar (0.722 vs. 0.764). This suggests that Greenspan’s deviations from the Taylor Rule were symmetric as well as cyclical.

Figure 9. Markov switching: regime 0 (“Loose”).

Figure 10. Markov switching: regime 1 (“Tight”).

The Markov-switching model classifies Volcker and Burns in the same regime, even though Volcker was much tighter than the Taylor Rule and Burns much looser (Figure 11). Regime 2 can thus be interpreted as a monetary policy regime that is “inconsistent” with the Taylor Rule, being either very tight or very loose. As Figure 11 shows, Greenspan was “inconsistent” with the Taylor Rule for a brief period following the economic fallout from the tech stock crash and the September 2001 terrorist attacks.

Figure 11. Markov switching: regime 2 (“Inconsistent”).

The estimated conditional means and variances for the model in Equation (7) are

and the estimated transition matrix is

The transition matrix shows that the regimes identified from deviations of the federal funds rate from the Taylor Rule are very persistent. The low probabilities of transitioning between regimes imply that a change in the monetary policy framework in any given month is, in general, very unlikely.
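Persistence can be summarized by the expected duration of a regime once entered, $1/(1-p_{ii})$, and by the probability of remaining in regime $i$ for $h$ consecutive periods, $p_{ii}^h$. The matrix in this sketch is hypothetical, chosen only to illustrate the calculation; it is not the estimated transition matrix.

```python
def expected_durations(P):
    """Expected consecutive periods spent in each regime once entered: 1 / (1 - p_ii)."""
    return [1.0 / (1.0 - P[i][i]) for i in range(len(P))]


def stay_probability(P, i, h):
    """Probability of remaining in regime i for h more consecutive periods."""
    return P[i][i] ** h


# Hypothetical monthly transition matrix (rows sum to one).
P = [[0.98, 0.01, 0.01],
     [0.02, 0.97, 0.01],
     [0.01, 0.01, 0.98]]
print([round(d, 1) for d in expected_durations(P)])   # [50.0, 33.3, 50.0]
print(round(stay_probability(P, 0, 12), 3))
```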

5.1. Text analytics

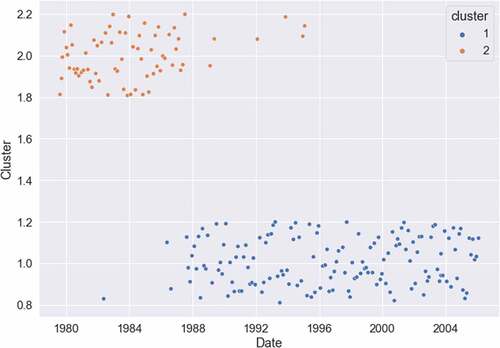

This section presents the findings of our clustering analysis of the FOMC meeting transcripts, in which each cluster is regarded as a distinct monetary policy regime. We begin with two clusters and then expand to three, four, and five clusters. In general, the Greenspan era appears highly consistent.

Figure 12 presents the results when the FOMC transcripts are grouped into only two clusters, representing two distinct policy regimes. There is minimal overlap between the two regimes, indicating that the chairmanships of Volcker and Greenspan are clearly distinguishable. In addition, the mean deviation from the Taylor rule for the cluster corresponding to the Greenspan period is close to zero (−0.0211, compared with 2.93 for the other cluster), as reported in Table 4. This consistency is particularly noteworthy because it persists even at finer clustering granularity.

Figure 12. Clustering results of FOMC transcripts: 2 clusters.

Table 4. Mean deviation from the Taylor rule: By clusters

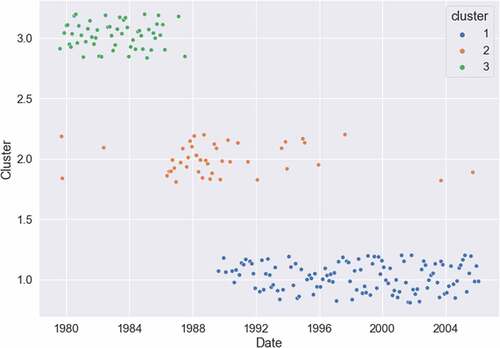

Figure 13 shows the results when the FOMC transcripts are divided into three clusters. Most of the transcripts from the Volcker era (August 1979 to August 1987) belong to the same cluster, while the Greenspan meeting transcripts are split between the remaining two clusters. The post-1990 Greenspan regime appears quite consistent: almost all of the post-1990 documents are classified into a single regime. This consistency holds even when we allow the k-means clustering algorithm to group the transcripts into four or five clusters, as shown in Figures 14 and 15, respectively. Most of the post-1990 transcripts belong to the same cluster and, most importantly, 2003–2006 is not distinguishable as a separate policy regime within Greenspan’s tenure.

Figure 13. Clustering results of FOMC transcripts: 3 clusters.

Figure 14. Clustering results of FOMC transcripts: 4 clusters.

Figure 15. Clustering results of FOMC transcripts: 5 clusters.

Because the 2003–2006 transcripts are not distinguished from the rest of Greenspan’s tenure, the text analysis suggests that his approach to monetary policy in his final years was consistent with the policies he implemented earlier.

In summary, our clustering analysis shows that Greenspan’s tenure as Federal Reserve Chair was characterized by a high degree of consistency in monetary policy. Even with finer clustering, most of the post-1990 transcripts remain classified in the same policy regime, and the 2003–2006 period is not discernibly different from the rest of his tenure.

5.2. Cluster characteristics

Figures 16, 17, and 18 present word clouds of the top terms in clusters 1, 2, and 3, respectively; larger and bolder terms carry more weight within the given cluster. Although, for clarity, we present the terms only for the three-cluster case, cluster memberships are generally stable across specifications: moving from, say, three to four clusters changes the membership of only a few transcripts.
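How the word-cloud weights are derived is not spelled out here. One common approach, sketched below as an assumption rather than as the authors' method, ranks each cluster's terms by their weight in the cluster's k-means centroid (the mean tf-idf vector of its transcripts). The vocabulary and centroid values are hypothetical.

```python
def top_terms(centroid, vocabulary, n=3):
    """Return the n terms with the largest centroid weight, highest first."""
    ranked = sorted(zip(centroid, vocabulary), key=lambda pair: pair[0], reverse=True)
    return [term for _, term in ranked[:n]]


vocabulary = ["money supply", "stock market", "dollar", "recovery", "federal funds"]
# Hypothetical centroid (mean tf-idf weights) for one cluster.
centroid = [0.02, 0.31, 0.05, 0.22, 0.09]
print(top_terms(centroid, vocabulary))  # ['stock market', 'recovery', 'federal funds']
```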

Figure 16. Word cloud of the top terms in cluster 1 (regime from roughly 1990 to 2007).

Figure 17. Word cloud of the top terms in cluster 2 (regime from roughly 1987 to 1990).

Figure 18. Word cloud of the top terms in cluster 3 (regime from roughly 1979 to 1986).

Cluster 1, which roughly corresponds to the majority of Greenspan’s tenure after 1990, features top terms such as “recovery”, “stock market”, and “recession”. Cluster 2, covering the initial years of Greenspan’s tenure, includes terms like “exports”, “international trade”, “dollar”, and “exchange”, indicating that the transcripts during this period focused on international trade and finance. After 1990, by contrast, the clustering results suggest that the discussion centered heavily on the stock market. Lastly, cluster 3, which aligns with the Volcker era, highlights terms such as “money supply”, “federal funds”, “targets”, and “interest rate”. During the Volcker regime, which began in 1979, the discussion centered on which targets the Fed should use, as the Fed switched to targeting the money supply from 1979 to 1981 and then reverted to interest rate targets after 1981.

6. Conclusion

Alan Greenspan’s early years as head of the Federal Reserve, from 1988 to the end of 2000, were marked by remarkable consistency with the real-time Taylor Rule: the federal funds rate oscillated around the Rule’s recommended value with low variance from month to month. Although the second part of Greenspan’s leadership was characterized by a policy that appeared looser than the Taylor Rule suggested, the conditional mean estimated by the Bai and Perron structural-break procedure remains the same across his tenure. The Markov-switching model identified 2003 as a “loose” period, but that period was not significantly different from other “loose” periods during his tenure, or even during Martin’s chairmanship in the late 1960s.

The contention by Taylor (2007, 2009) that Greenspan inflated the housing bubble is inconsistent with a historical inspection of Federal Reserve deviations from the Taylor Rule. Under the constant-variance structural-break specification of Bai and Perron (2003a), Greenspan had a conditional mean deviation of zero throughout his tenure. A less restrictive Markov-switching model finds that some periods of Greenspan’s tenure corresponded to “loose” monetary policy, but, as Equation (9) shows, the conditional variance was extremely close for the “loose” and “tight” Markov-switching regimes. To the extent that Greenspan deviated from the Taylor Rule, he deviated in a cyclical, symmetric manner: negative deviations were offset by positive deviations, which is inconsistent with Taylor’s argument that the 2003–2006 period differed from what economic agents had come to expect of monetary policy. On the whole, we find that Greenspan’s interest rate policies were broadly consistent with the Taylor Rule. In addition, our FOMC text-based analysis of policy regimes shows that the policy discussions from 2003 to 2006 were no different from the vast majority of policy discussions earlier in Greenspan’s tenure.

6.1. Policy Implications

The study has several policy implications. First, although the Taylor Rule is a useful guideline, it has limitations arising from data constraints, so strict adherence to it may not always be the best way to address speculative bubbles. Second, it would be unwise for Congress to require the FOMC to adhere mechanically to the Taylor Rule when setting interest rates. The study shows that the FOMC under Greenspan generally followed the Taylor Rule, given the economic data available at the time of each policy decision. Therefore, any future attempt to require the FOMC to follow the Taylor Rule should rely on the real-time data available when the rate is set, because these data can imply short-term interest rates that differ significantly from those implied by the final, revised data.
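To make the real-time point concrete, the sketch below evaluates the original Taylor (1993) rule, i = π + 0.5y + 0.5(π − 2) + 2, where π is inflation over the previous four quarters and y is the percentage output gap, once with a hypothetical real-time data vintage and once with a hypothetical revised vintage. The coefficients are Taylor's 1993 values and may differ from the specification estimated in this paper; all data values are invented for illustration.

```python
def taylor_rate(inflation, output_gap, r_star=2.0, pi_target=2.0):
    """Taylor (1993) rule: i = pi + 0.5*gap + 0.5*(pi - pi*) + r*, all in percent."""
    return inflation + 0.5 * output_gap + 0.5 * (inflation - pi_target) + r_star


def deviation(fed_funds, inflation, output_gap):
    """Actual fed funds rate minus the Taylor Rule prescription (percentage points)."""
    return fed_funds - taylor_rate(inflation, output_gap)


# Hypothetical vintages for the same quarter (percent).
real_time = {"inflation": 1.5, "output_gap": -2.0}
revised = {"inflation": 2.0, "output_gap": -0.5}
fed_funds = 1.0

print(taylor_rate(**real_time))  # 2.25
print(taylor_rate(**revised))    # 3.75
print(deviation(fed_funds, **real_time), deviation(fed_funds, **revised))
```

With these made-up numbers, the same 1 percent fed funds rate sits 1.25 points below the rule on real-time data but 2.75 points below it on revised data, so judging a past decision with revised data can overstate how "loose" it was.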

Acknowledgments

We gratefully acknowledge Dr. Gerald Dwyer, Dr. Robert Tamura, Dr. Michal Jerzmanowski, Dr. Aspen Gorry, Dr. Scott Baier, and all participants at the Macroeconomics Workshop at Clemson University. All remaining errors are ours.

Disclosure statement

This is an original manuscript, and no part of it has been published before or is under consideration at another journal. We have no relevant or material financial interests that relate to the research described in this paper.

Notes

1. Appendix A.1 discusses the procedure in detail.

References

- Abel, A. B., Bernanke, B. S., & Croushore, D. (2005). Macroeconomics. Pearson.

- Alba, J. D., & Wang, P. (2017). Taylor rule and discretionary regimes in the United States: Evidence from a k-state Markov regime-switching model. Macroeconomic Dynamics, 21(3), 817–824. https://doi.org/10.1017/S1365100515000693

- Nikolsko-Rzhevskyy, A., Papell, D. H., & Prodan, R. (2019). The Taylor principles. Journal of Macroeconomics, 62, 103159.

- Orphanides, A., & Williams, J. C. (2003). Monetary policy rules and the Great Inflation. The American Economic Review, 93(3), 545–569.

- Bai, J., & Perron, P. (1998). Estimating and testing linear models with multiple structural changes. Econometrica, 66(1), 47–78. https://doi.org/10.2307/2998540

- Bai, J., & Perron, P. (2003a). Computation and analysis of multiple structural change models. Journal of Applied Econometrics, 18(1), 1–22. https://doi.org/10.1002/jae.659

- Bai, J., & Perron, P. (2003b). Critical values for multiple structural change tests. The Econometrics Journal, 6(1), 72–78. https://doi.org/10.1111/1368-423X.00102

- Board of Governors of the Federal Reserve System. (2021). FOMC transcripts and other historical materials. Retrieved February 12, 2021, from https://www.federalreserve.gov/monetarypolicy/fomchistorical2011.htm

- Boivin, J. (2006). Has US monetary policy changed? Evidence from drifting coefficients and real-time data. Journal of Money, Credit, and Banking, 38(7), 1149–1173. https://doi.org/10.1353/mcb.2006.0065

- Clarida, R., Gali, J., & Gertler, M. (1999). The science of monetary policy: A new Keynesian perspective. Journal of Economic Literature, 37(4), 1661–1707. https://doi.org/10.1257/jel.37.4.1661

- Cooley, T. F., & Prescott, E. C. (1976). Estimation in the presence of stochastic parameter variation. Econometrica, 44(1), 167–184. https://doi.org/10.2307/1911389

- Diebold, F. X., Lee, J.H., & Weinbach, G. C. (1994). Regime switching with time-varying transition probabilities. Business Cycles: Durations, Dynamics, and Forecasting, 1, 144–165.

- Engel, C. (1994). Can the Markov switching model forecast exchange rates? Journal of International Economics, 36(1–2), 151–165. https://doi.org/10.1016/0022-1996(94)90062-0

- Engel, C., & Hamilton, J. D. (1990). Long swings in the dollar: Are they in the data and do markets know it? The American Economic Review, 80, 689–713.

- Fair, R. C. (2001). Actual federal reserve policy behavior and interest rate rules. Federal Reserve Bank of New York Economic Policy Review, 7(1), 61–72.

- Filardo, A. J. (1994). Business-cycle phases and their transitional dynamics. Journal of Business & Economic Statistics, 12(3), 299–308. https://doi.org/10.1080/07350015.1994.10524545

- Garcia, R., & Perron, P. (1996). An analysis of the real interest rate under regime shifts. The Review of Economics and Statistics, 78(1), 111–125. https://doi.org/10.2307/2109851

- Ghysels, E. (1994). On the periodic structure of the business cycle. Journal of Business & Economic Statistics, 12(3), 289–298. https://doi.org/10.1080/07350015.1994.10524544

- Goodwin, T. H. (1993). Business-cycle analysis with a Markov-switching model. Journal of Business & Economic Statistics, 11(3), 331–339. https://doi.org/10.1080/07350015.1993.10509961

- Hamilton, J. D. (1988). Rational-expectations econometric analysis of changes in regime: An investigation of the term structure of interest rates. Journal of Economic Dynamics and Control, 12(2–3), 385–423. https://doi.org/10.1016/0165-1889(88)90047-4

- Hamilton, J. D. (1989). A new approach to the economic analysis of nonstationary time series and the business cycle. Econometrica: Journal of the Econometric Society, 57(2), 357–384. https://doi.org/10.2307/1912559

- Handlan, A. (2021). Text shocks and monetary surprises (Doctoral dissertation, University of Minnesota).

- Hansen, B. E. (2001). The new econometrics of structural change: Dating breaks in U.S. labor productivity. Journal of Economic Perspectives, 15(4), 117–128.

- Hansen, S., & McMahon, M. (2016). Shocking language: Understanding the macroeconomic effects of central bank communication. Journal of International Economics, 99, S114–133. https://doi.org/10.1016/j.jinteco.2015.12.008

- Judd, J. P., & Rudebusch, G. D. (1998). Taylor’s rule and the fed: 1970-1997. Economic Review–Federal Reserve Bank of San Francisco, 3, 3–16.

- Kim, C.J., & Nelson, C. R. (1998). Business cycle turning points, a new coincident index, and tests of duration dependence based on a dynamic factor model with regime switching. The Review of Economics and Statistics, 80(2), 188–201. https://doi.org/10.1162/003465398557447

- Kim, M.J., & Yoo, J.S. (1995). New index of coincident indicators: A multivariate Markov switching factor model approach. Journal of Monetary Economics, 36(3), 607–630. https://doi.org/10.1016/0304-3932(95)01229-X

- Lam, P.S. (1990). The Hamilton model with a general autoregressive component: Estimation and comparison with other models of economic time series. Journal of Monetary Economics, 26(3), 409–432. https://doi.org/10.1016/0304-3932(90)90005-O

- Mehra, Y. P., & Sawhney, B. (2010). Inflation measure, Taylor rules, and the Greenspan-Bernanke years. FRB Richmond Economic Quarterly, 96(2), 123–151.

- Murray, C. J., Nikolsko-Rzhevskyy, A., & Papell, D. H. (2015). Markov switching and the Taylor principle. Macroeconomic Dynamics, 19(4), 913–930.

- Orphanides, A. (2001). Monetary policy rules based on real-time data. The American Economic Review, 91(4), 964–985.

- Orphanides, A. (2002). Monetary-policy rules and the great inflation. The American Economic Review, 92(2), 115–120.

- Orphanides, A., & Wieland, V. (2008). Economic projections and rules-of-thumb for monetary policy. Federal Reserve Bank of St Louis Review, 90(July/August), 307–324.

- Primiceri, G. E. (2005). Time varying structural vector autoregressions and monetary policy. The Review of Economic Studies, 72(3), 821–852.

- Quandt, R. E. (1972). A new approach to estimating switching regressions. Journal of the American Statistical Association, 67(338), 306–310.

- Salton, G., & McGill, M. J. (1983). Introduction to modern information retrieval. McGraw-Hill.

- Schaller, H., & Van Norden, S. (1997). Regime switching in stock market returns. Applied Financial Economics, 7(2), 177–191.

- Shapiro, A. H., & Wilson, D. J. (2022). Taking the fed at its word: A new approach to estimating central bank objectives using text analysis. The Review of Economic Studies, 89(5), 2768–2805.

- Sims, C. A. (1980). Macroeconomics and reality. Econometrica, 48(1), 1–48.

- Sims, C. A. (1992). Interpreting the macroeconomic time series facts: The effects of monetary policy. European Economic Review, 36(5), 975–1000.

- Sims, C. A., & Zha, T. (2006). Were there regime switches in US monetary policy? The American Economic Review, 96(1), 54–81.

- Sola, M., & Driffill, J. (1994). Testing the term structure of interest rates using a stationary vector autoregression with regime switching. Journal of Economic Dynamics and Control, 18(3–4), 601–628.

- Svensson, L. E. O. (2017). Monetary policy with judgment: Forecast targeting. International Journal of Central Banking, 1(1), 1–54.

- Taylor, J. B. (1993). Discretion versus policy rules in practice. Carnegie-Rochester Conference Series on Public Policy, 39, 195–214.

- Taylor, J. B. (1999). A historical analysis of monetary policy rules. In John B. Taylor (Ed.), Monetary Policy Rules (pp. 319–348). University of Chicago Press.

- Taylor, J. B. (2007). Housing and monetary policy (NBER Working Paper No. w13682). National Bureau of Economic Research.

- Taylor, J. B. (2009, January). The financial crisis and the policy responses: An empirical analysis of what went wrong (NBER Working Paper No. 14631). National Bureau of Economic Research.

- Cogley, T., & Sargent, T. J. (2005). Drifts and volatilities: Monetary policies and outcomes in the post WWII US. Review of Economic Dynamics, 8(2), 262–302. https://doi.org/10.1016/j.red.2004.10.009

- Westelius, N. J. (2007). The time-varying nature of the link between inflation and inflation uncertainty: Evidence from the US, UK and Germany. Journal of International Money & Finance, 26(7), 1146–1165.

- Chang, Y., Choi, Y., & Park, J. Y. (2017). A new approach to model regime switching. Journal of Econometrics, 196(1), 127–143. https://doi.org/10.1016/j.jeconom.2016.09.005

Appendix A.1

From Text Documents to Numerical Feature Vectors

For clustering analysis, the text documents must first be converted into vectors of real numbers. We follow a three-step procedure commonly used in the natural language processing literature to transform texts into numerical vectors. First, we assign an integer identifier to each single word and each two-word phrase, a process referred to as tokenization. Second, we count the number of occurrences of each token in each document in the collection of FOMC documents. Finally, we normalize each document so that all documents are represented by feature vectors of the same size, and we give diminishing weight to tokens that occur in the majority of documents. We use the tf-idf method of Salton and McGill (1983) to obtain the weight of each token.
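The first two steps (tokenization into single words and two-word phrases, then counting) can be sketched with the Python standard library. This is an illustration under simplifying assumptions (lowercasing, splitting on non-letter characters, no stop-word removal or stemming), not the authors' actual preprocessing pipeline.

```python
import re
from collections import Counter


def tokens(text):
    """Unigrams plus adjacent two-word phrases (bigrams) from lowercased text."""
    words = re.findall(r"[a-z]+", text.lower())
    bigrams = [f"{a} {b}" for a, b in zip(words, words[1:])]
    return words + bigrams


def build_vocabulary(documents):
    """Step 1: map every distinct token to an integer id (sorted for reproducibility)."""
    vocab = sorted({tok for doc in documents for tok in tokens(doc)})
    return {tok: idx for idx, tok in enumerate(vocab)}


def count_matrix(documents, vocab):
    """Step 2: one row per document, one column per token id, holding raw counts."""
    rows = []
    for doc in documents:
        counts = Counter(tokens(doc))
        rows.append([counts.get(tok, 0) for tok in vocab])  # vocab keys are in id order
    return rows


docs = ["The money supply grew.", "Money supply targets and the federal funds rate."]
vocab = build_vocabulary(docs)
X = count_matrix(docs, vocab)
print(vocab["money supply"], X[0][vocab["money supply"]])
```

Every row has one column per vocabulary entry, so all documents end up as count vectors of the same length regardless of how long they are.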

To calculate the tf-idf value of a token in each document, we multiply the frequency of that token by its idf component. The idf component is given by

$$\text{idf}(t) = \log\frac{n}{\text{df}(d,t)},$$

where $n$ is the total number of documents, and $\text{df}(d,t)$ is the number of documents that contain term $t$. The tf-idf vectors are then normalized by the Euclidean norm:

$$v_{\text{norm}} = \frac{v}{\lVert v \rVert_2} = \frac{v}{\sqrt{v_1^2 + v_2^2 + \cdots + v_p^2}}.$$

Upon completion of this three-step procedure, we obtain a feature matrix $X$, each row of which contains the tf-idf values of all tokens for one document in our collection. Each row has unit norm to account for the fact that documents vary in length; otherwise, a longer document would have higher term frequencies, and thus higher tf-idf values, than a shorter document.

Appendix

K-means Clustering Algorithm

This section closely follows James et al. (2013). Let $X = \{x_1, \dots, x_n\}$, $x_i \in \mathbb{R}^p$, be the set of FOMC transcripts to be clustered into a set of $K$ clusters, $C = \{C_1, \dots, C_K\}$, where $p$ is the number of features per transcript. We assume a priori that there exist $K$ clusters with cluster centers $\mu_1, \dots, \mu_K$. K-means clustering solves

$$\min_{C_1,\dots,C_K,\;\mu_1,\dots,\mu_K} \; \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert_2^2 .$$

The optimization algorithm proceeds in the following steps.

1. Choose the number of clusters, $K$, into which the texts should be split, based on domain knowledge.
2. Arbitrarily initialize the cluster centers $\mu_1, \dots, \mu_K$.
3. Given the fixed cluster centers, assign each data point (FOMC transcript) $x_i$ to the cluster with the closest center.
4. Update $\mu_1, \dots, \mu_K$ on the basis of the cluster assignments of $x_1, \dots, x_n$, so that each center is the mean of the transcripts assigned to it.
5. Repeat steps 3 and 4 until convergence, i.e., until the cluster centroids no longer move.

We repeat the steps outlined above for different values of $K$, namely 2, 3, 4, and 5.
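The steps above correspond to Lloyd's algorithm for k-means. The dependency-free sketch below follows them, with one deliberate substitution: instead of an arbitrary or random initialization, it uses a deterministic farthest-point initialization so that the example always gives the same result. That choice, the iteration cap, and the toy two-dimensional points (stand-ins for tf-idf vectors) are illustrative assumptions, not details of the authors' implementation.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point farthest
    from its nearest already-chosen center (a deterministic step 2)."""
    centers = [list(points[0])]
    while len(centers) < k:
        nxt = max(points, key=lambda p: min(squared_distance(p, c) for c in centers))
        centers.append(list(nxt))
    return centers


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm: alternate assignment (step 3) and update (step 4)."""
    centers = farthest_point_init(points, k)
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Step 3: assign each point to its closest center.
        labels = [min(range(k), key=lambda c: squared_distance(p, centers[c])) for p in points]
        # Step 4: move each center to the mean of its assigned points.
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(centers[c])  # keep an empty cluster's center in place
        # Step 5: stop once the centroids no longer move.
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


def within_cluster_ss(points, labels, centers):
    """The k-means objective: total squared distance from points to their centers."""
    return sum(squared_distance(p, centers[lab]) for p, lab in zip(points, labels))


# Toy 2-D stand-ins for tf-idf vectors: two well-separated groups.
points = [(0.0, 0.1), (0.1, 0.0), (0.05, 0.05), (1.0, 0.9), (0.9, 1.0), (0.95, 0.95)]
for k in (2, 3, 4, 5):
    labels, centers = kmeans(points, k)
    print(k, labels, round(within_cluster_ss(points, labels, centers), 4))
```

Looping over K = 2, ..., 5 mirrors the procedure described above; because k-means only finds a local minimum, practical implementations usually also run several initializations and keep the one with the lowest within-cluster sum of squares.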

Appendix