ABSTRACT

Quantum machine learning is a rapidly growing field at the intersection of quantum technology and artificial intelligence. This review provides a two-fold overview of several key approaches that can offer advancements in both the development of quantum technologies and the power of artificial intelligence. Among these approaches are quantum-enhanced algorithms, which apply quantum software engineering to classical information processing to improve keystone machine learning solutions. In this context, we explore the capability of hybrid quantum-classical neural networks to improve model generalization and increase accuracy while reducing computational resources. We also illustrate how machine learning can be used both to mitigate the effects of errors on presently available noisy intermediate-scale quantum devices, and to understand quantum advantage via an automatic study of quantum walk processes on graphs. In addition, we review how quantum hardware can be enhanced by applying machine learning to fundamental and applied physics problems as well as quantum tomography and photonics. We aim to demonstrate how concepts in physics can be translated into practical engineering of machine learning solutions using quantum software.

GRAPHICAL ABSTRACT

AI-generated image of a quantum robotic Schrödinger's cat that reads a quantum machine learning review paper.

1. Introduction

Nowadays, the exponential growth of information, together with faster computation and faster transmission and recognition of information, gives rise to many key global interdisciplinary problems for modern societies [Citation1]. In everyday life, we face the problem of big data everywhere. Classical information science and the related technological achievements in communication and computing are moving our society from the Internet of computers to the Internet of Things (IoT), in which humans interact with spatially distributed smart systems, including high-precision sensors and various recommendation systems based on on-line processing and recognition of huge amounts of information [Citation2]. Artificial intelligence (AI) and machine learning (ML) drive this transition. These tasks and facilities require on-line information recognition, which is practically possible only on the basis of parallel information processing.

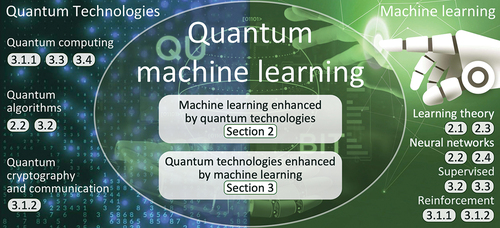

Today, a number of areas in information science, physics, mathematics, and engineering propose to solve these problems by various approaches to parallel information processing in spatially distributed systems. Figure 1 schematically shows our vision of the problem and reflects the content of this paper. Nowadays, AI predominantly focuses on the ML approach, which provides solutions for the big data problem, the data mining problem, explainable AI, and knowledge discovery. As a result, in our everyday life we encounter distributed intelligent systems (DIS), which are networks of natural intelligent agents (humans) interacting with artificial intelligence agents (chatbots, digital avatars, recommendation systems, etc.), see e.g. [Citation3] and references therein. Such systems require new approaches to data processing, which may be described by means of cognitive computing that possesses human-like cognitive capabilities, cf. [Citation4]. At the same time, such systems operate under many uncertainties, which may introduce new complexities. But how can the quantum approach and quantum technologies help us here?

Figure 1. Interdisciplinary paradigm of quantum machine learning, based on classical information science, quantum technologies, and artificial intelligence (the details are given in the text).

Certainly, the quantum approach and the related quantum technologies are among the drivers of current progress in information science and artificial intelligence, which share the goals of designing efficient computing, fast and secure communication, and a smart IoT. The mutual overlap of these three seminal disciplines is bearing meaningful fruit today. In the left half of the ellipse in Figure 1 we show some crucial topics of quantum technology studies, which are interdisciplinary right now. In particular, quantum computing opens new horizons for classical software engineering, see e.g. [Citation5]. It is especially necessary to mention quantum-inspired algorithms and approaches, which utilize quantum probability and quantum measurement theory for classical computing [Citation6].

Quantum computers as physical systems, as well as biological neurons and the human brain, are naturally capable of parallel information processing. However, a sufficient criterion for speeding up information processing is still unknown in many cases.

A qubit, the minimal unit of quantum information science, is a superposition of two well-distinguished quantum states defined in a Hilbert space, and it is an indispensable ingredient of parallel information processing [Citation7]. Quantum algorithms (software), proposed many years ago, utilize qubit superposition and entanglement to achieve so-called quantum supremacy, i.e. a speedup on certain problems that are believed to be intractable for classical algorithms [Citation8]. Quantum computers (hardware) as physical devices were first proposed by Richard Feynman; they use simple two-level systems as physical qubits that perform quantum computation [Citation9,Citation10]. Although a long time has passed since the successful demonstration of the first quantum gates and the simplest operations with them (see e.g. [Citation11]), there is still a large gap between quantum information theory, quantum algorithms, and the quantum computers designed to execute them. Existing quantum computers and simulators are still very far from demonstrating quantum supremacy on real problems related to our daily life. This can be partly explained by the current noisy intermediate-scale quantum (NISQ) era of quantum technology development [Citation12]. Currently, quantum computers are restricted by a small number of qubits and a relatively high level of various noises, including decoherence processes that completely (or partially) destroy interference and entanglement effects. In this regard, quantum supremacy for specific tasks is the subject of heated debate [Citation13–15].

Surface codes and the creation of logical qubits have been proposed to significantly reduce computational errors [Citation16,Citation17]. In particular, such a code presumes a mapping of some graph of physical qubits onto a logical qubit. Typically, special network-like circuits are designed for a quantum processor consisting of logical qubits. However, it remains unclear whether this mapping is unique, and whether such a network is optimal and universal across the various physical computing platforms.

As an example, a minor embedding procedure is used for quantum annealing computers based on superconducting quantum hardware [Citation18]. Obviously, the various physical platforms now examined for quantum computation may use different mapping procedures and rely on specific qubit network designs that account for platform-specific noise and decoherence. Thus, choosing an appropriate network architecture is a keystone problem for current quantum computing and is directly related to the demonstration of quantum supremacy.

Clearly, the solution of this problem is connected not only with the properties of quantum systems, but also with the ability of networks to process information in a parallel and robust way. An important example that we refer to here is the human brain, a complex network composed of biologically active networks that exhibit fast information processing. Notably, the architecture of such computation is non-von Neumann. In particular, the human brain is capable of retrieving patterns by association. A long time ago, Hopfield introduced a simple neural network model for associative memory [Citation19]. Over time, neural networks have become an indispensable tool for parallel classical computing. Artificial intelligence and machine learning, as well as cognitive and neuromorphic computing, exploit specific features of neural networks and represent vital approaches to harnessing the full power of parallel computation [Citation1,Citation20].

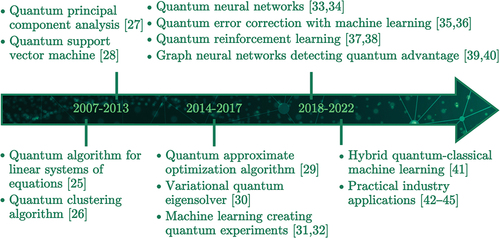

Quantum machine learning (QML) is a new paradigm of parallel computation in which quantum computing meets network theory to improve computational speed-up and current NISQ-era quantum technology facilities by means of quantum or classical computational systems and algorithms [Citation21–24]. In Figure 2 we present a timeline of the appearance and development of some important algorithms [Citation25–45] that are able to improve computational complexity, accuracy, and efficiency on the various types of hardware available now. In this work, we discuss most of them in detail.

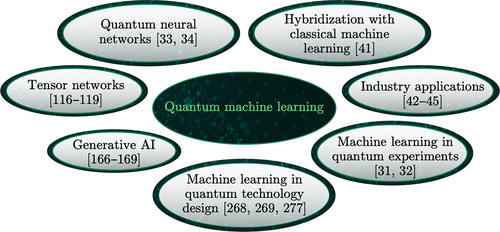

More generally, QML disciplines now lie at the border between current quantum technologies and artificial intelligence and include all their methods and approaches to information processing, see Figure 1. The rapidly growing number of publications and reviews in this discipline indicates increasing interest from the scientific community, see e.g. [Citation46–53]. In particular, seminal problems in algorithm theory in which the ML approach can enhance quantum computing are discussed in [Citation21,Citation46,Citation50,Citation53]. Applications of the ML approach to timely problems in materials science, photonics, and physical chemistry can be found in [Citation47–49, Citation51, Citation52], respectively.

It is important to note that the ML approach is closely connected with knowledge discovery in modern fundamental physics, which in turn is closely connected with the problem of big data and its recognition. Here we refer to automated scientific discovery, which can significantly expand our knowledge of Nature, cf. [Citation54,Citation55]. In particular, it is worth mentioning research at the Large Hadron Collider (LHC), where data mining can contribute to new discoveries in fundamental physics [Citation56]. Another important example is network research on the registration of gravitational waves and extremely weak signals in astronomy, see e.g. [Citation57]. Clearly, further discoveries in this area require improved sensitivity of the network detectors (which are interferometers) and improved mining of the obtained data, where the ML approach can contribute significantly, cf. [Citation58].

Although previous review papers [Citation21, Citation22, Citation46, Citation47, Citation49–53] theoretically substantiate and discuss the effectiveness of quantum approaches and quantum algorithms for ML problems, in practice many problems still prevent quantum supremacy from being observed experimentally. In the NISQ era, the capabilities of modern quantum computers and simulators are not yet sufficient to achieve quantum supremacy, cf. [Citation12]. In this regard, hybrid information processing algorithms, which share the workload between quantum and classical computers, have come to the fore. Quantum-classical variational algorithms and the quantum approximate optimization algorithm (QAOA) are very useful and effective in this case, see e.g. [Citation59–62]. In this review, we discuss various approaches that are used in the QML paradigm under current NISQ-era realities. Unlike the previous works [Citation21,Citation22,Citation46,Citation47,Citation49–53], below we focus on ML methods and approaches that can be effective, especially for hybrid (quantum-classical) algorithms, see .

In its most general form, the current work is divided into two large parts, Sections 2 and 3. In Sec. 2 we consider a variety of problems where the ML approach may be enhanced by means of quantum technologies, as presented in Figure 3. In general, we speak here about speed-up of data processing by quantum computers and/or quantum simulators that can be used for classical ML purposes, see Figure 3. An important part of these studies is devoted to optimal encoding, or embedding, of classical data sets into the quantum device [Citation63], and to recognition of the data set from the quantum state readout. We provide a comprehensive analysis of the quantum neural network (QNN), a novel QML model whose parameters are updated classically, and we discuss how such a model may be used in timely hybrid quantum-classical variational algorithms.

Figure 3. Quantum technologies help in improving machine learning. Sections that discuss a particular topic are labeled.

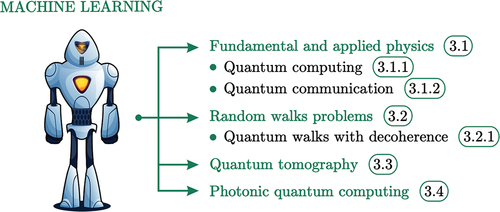

In contrast, in Sec. 3 we review rapidly developing QML directions in which the classical ML approach can help to solve NISQ-era quantum computing and quantum technology tasks, cf. . In particular, we mention automation of quantum experiments, quantum state tomography, quantum error correction, etc., where classical ML techniques may be applied. Specifically, we discuss ML algorithms that can be useful for recognizing quantum speedup in random (classical or quantum) walks on various graphs. Our proposed solution to this problem is relevant to the development of both current quantum computing hardware and software.

2. Machine learning enhanced by quantum technologies

In this section, we discuss the impact of quantum technologies on machine learning. The outline of the topics is given in Figure 3.

2.1. Machine learning models

At its roots, machine learning is a procedural algorithm that is augmented by the provision of external data to model a specific probability distribution. The data could consist of only environment variables (features), as in unsupervised learning; of features and their associated outcomes (labels), as in supervised learning; or of environment variables and a reward for specific actions, as in reinforcement learning.

2.1.1. Unsupervised learning

The point of unsupervised learning is to infer attributes of a set of data points, usually to determine how the points group into clusters. A popular method of unsupervised learning is K-means clustering [Citation64], in which data points are assigned to a chosen number of clusters and the positions of the cluster centres are learned. Unsupervised learning is applied to many real-world problems, from customer segmentation in different industries [Citation65] to criminal activity detection [Citation66].
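As a minimal sketch of the K-means procedure, the Python below implements Lloyd's algorithm, which alternates an assignment step with a centre-update step. The toy data, the choice of k, and the naive initialisation from the first k points are illustrative assumptions; practical implementations usually use smarter seeding such as k-means++.

```python
import math


def kmeans(points, k, n_iter=100):
    """Lloyd's algorithm: alternate nearest-centre assignment and centre updates."""
    # Naive initialisation (an assumption): the first k points become the centres.
    centres = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: index of the closest centre for every point.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centres[c])) for p in points
        ]
        # Update step: move each centre to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # keep the previous centre if a cluster becomes empty
                centres[c] = [sum(coord) / len(members) for coord in zip(*members)]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
    return centres, labels


points = [(0.0, 0.0), (5.0, 5.0), (0.2, 0.1), (5.1, 4.9), (0.1, 0.3), (4.8, 5.2)]
centres, labels = kmeans(points, 2)
print(labels, centres)
```

With six points forming two well-separated groups, the assignments stabilise after the second pass.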

2.1.2. Supervised learning

In contrast, supervised learning endeavours to infer the relationship between the provided features and their labels. The goal of such models is to generalise this inference to previously unseen data points. In a linear regression setting, this is often done by least-squares fitting [Citation67], but for problems where some degree of non-linearity is plausible a priori, supervised learning can train non-linear regression models that provide better alternatives. Supervised learning is also used for classification, e.g. logistic regression, where instead of a regression model, a categorical probability distribution is learned. Supervised learning has seen considerable success in many areas, from credit-rating models [Citation68] to scientific fields [Citation69].

2.1.3. Reinforcement learning

Finally, reinforcement learning is the optimisation of a set of actions (policy) in an environment. The environment allows actions and provides rewards if certain conditions are met. An agent is made to explore this environment by investigating the outcomes of certain actions given its current state and accordingly optimise its model variables. Reinforcement learning often attracts significant attention from the gaming industry [Citation70] but it has also contributed to real-life scenarios, such as portfolio management [Citation71].
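To make the agent-environment loop concrete, here is a toy reinforcement-learning sketch: a multi-armed bandit (a single-state environment) with an epsilon-greedy policy. The reward means, the Gaussian reward noise, and the exploration rate are illustrative assumptions, not taken from the cited works.

```python
import random


def epsilon_greedy_bandit(true_means, n_steps=2000, epsilon=0.1, seed=0):
    """Toy RL agent on a multi-armed bandit (hypothetical environment).

    Pulling arm a returns a reward drawn from a Gaussian centred on
    true_means[a]; the agent keeps a running mean reward per arm (its model
    variables) and follows an epsilon-greedy policy.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # estimated value of each action
    counts = [0] * n_arms  # how often each action was taken
    for _ in range(n_steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_arms)  # explore: random action
        else:
            action = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[action], 1.0)  # environment feedback
        counts[action] += 1
        # Incremental running-mean update of the value estimate.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.0, 1.0, 3.0])
print("value estimates:", [round(v, 2) for v in estimates])
print("actions taken:", counts)
```

The exploration rate trades off discovering better actions against exploiting the current best estimate.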

2.1.4. Exponential growth of practical machine learning models

A recurring theme in all three modes of ML is the high complexity of their models. This could be caused by a high-dimensional input, as in classifying a high-resolution image database [Citation72], or by a complex problem such as image segmentation [Citation73]. A commonly used, but known to be inaccurate [Citation74,Citation75], measure of complexity is the parameter count of an ML model, i.e. simply the number of its trainable parameters. In neural networks, most familiarly, the parameters are the weights and biases associated with each layer. For smaller problems, the parameter count could be as small as hundreds [Citation76,Citation77], but cutting-edge AI models such as DALL-E 2 [Citation78], Gopher [Citation79], and GPT-3 [Citation80] have billions to hundreds of billions of parameters, and this number keeps increasing [Citation81,Citation82]. Such high dimensionality comes at a great financial and environmental cost. Ref. [Citation83] assessed the carbon emissions and the financial cost of training and fine-tuning several large ML models in 2019. They found that in some cases these models emitted more CO2 than an average American car over its entire lifetime, and could cost over $3 million. In addition to this cost, there are concerns regarding scalability, namely that the exponential growth in computing power, known as Moore's law [Citation84] and revisited in [Citation85], is slower than the growth in the computational demands of ML research [Citation86,Citation87].
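The parameter count of a fully connected network follows directly from its layer widths: a layer with n_in inputs and n_out outputs contributes n_in × n_out weights plus n_out biases. A short sketch; the layer sizes in the example are hypothetical (an MNIST-sized input), chosen only to show the arithmetic.

```python
def mlp_parameter_count(layer_sizes):
    """Trainable parameters of a fully connected network.

    Each dense layer mapping n_in -> n_out has n_in * n_out weights
    plus n_out biases.
    """
    return sum(
        n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical network: 784 inputs, one hidden layer of 128 units, 10 outputs.
print(mlp_parameter_count([784, 128, 10]))  # 101770
```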

2.1.5. Quantum-enhanced machine learning

The idea behind the field of quantum machine learning is to use the capabilities of quantum computers to build scalable models that could deliver machine learning capabilities beyond what classical models can be expected to provide, at a lower cost.

Quantum computers offer an exponentially large computational space compared with classical computers by representing information in quantum binary digits, or qubits. Whereas a classical register of $n$ bits works in the Boolean space $\{0,1\}^n$, the state of $n$ qubits lives in the $2^n$-dimensional complex Hilbert space $\mathbb{C}^{2^n}$, on which quantum gates act as elements of the exponentially growing special unitary group $\mathrm{SU}(2^n)$. This means that while a classical register with $n$ bits holds a single $n$-digit binary string, a quantum register of the same size can hold a superposition of all $2^n$ such strings, described by an exponential number of amplitudes compared with its classical counterpart [Citation7].
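The exponential bookkeeping can be made concrete with a tiny sketch: a dense classical description of an n-qubit state needs 2**n amplitudes. The example assumes a uniform superposition (a Hadamard on every qubit) and 16 bytes per complex amplitude, as in double-precision simulators; it illustrates the size of the state space, not a computational speedup, since a measurement returns only one n-bit string.

```python
import math


def uniform_superposition(n_qubits):
    """Amplitudes of H^{(x) n}|0...0>: one equal amplitude per n-bit string."""
    dim = 2**n_qubits
    return [1 / math.sqrt(dim)] * dim


def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a dense state vector, assuming complex128 amplitudes."""
    return bytes_per_amplitude * 2**n_qubits


for n in (10, 20, 30, 40):
    print(n, "qubits:", statevector_bytes(n) / 2**30, "GiB")
```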

Beyond the scalability concerns, classical machine learning models operate within the realm of classical statistical theory, which in some cases diverges from the results of human behavioural surveys. Ref. [Citation88] introduced the sure-thing principle, showing how uncertainties that a classical statistical model would deem unrelated, and remove, can nonetheless affect human decisions. Refs. [Citation89,Citation90] showed that in some cases people give higher credence to two events occurring in conjunction than to one of them occurring alone, contrary to classical probability theory. Refs. [Citation6,Citation91] argue that these problems could be addressed by a quantum statistical formulation. Other similar issues, like the problem of negation [Citation92] and others listed in Ref. [Citation93], are also shown to have a resolution in quantum theory. The distributional compositional categorical (DisCoCat) model of language [Citation94] can be regarded as the first theoretically successful attempt at harnessing this advantage of quantum machine learning.

2.2. Quantum neural networks

For a given data provision method, e.g. supervised learning, a host of different machine learning architectures could be considered. A machine learning architecture has a set of trainable parameters that can be initialised from some probability distribution. Any specific realisation of the parameters of an architecture is a model. The quest of machine learning is to train these parameters so that the model's distribution comes closer to that of the problem in question. The fully trained version of each architecture yields a different model with different performance; generally, architectures that can spot and infer existing patterns in the data are said to perform better. It is also important to avoid models that find non-existent patterns: such models are said to overfit the provided data, and they fail to perform as well when evaluated on previously unseen data. A model that can spot existing patterns without overfitting the provided data is said to have a high generalisation ability. This metric establishes a platform for model selection (footnote 1) [Citation95].

For any given problem, there is a variety of architecture classes to choose from. Some of the most commonly used are multi-layered perceptrons (neural networks), convolutional networks for image processing, and graph neural networks for graph-structured data. QML contributes to this list by introducing quantum models such as QNNs [Citation96].

Quantum neural networks are models in QML whose parameters are updated classically. The training pipeline consists of providing data to the quantum model, calculating an objective (loss) value, and then adapting the QNN parameters so as to reduce this objective function. The specific approach to providing the data to the quantum model is known as the data encoding strategy, and it can have a drastic effect on the functional representation of the model. Sec 2.2.1 covers the various approaches to data encoding, and Sec 2.2.4 reviews the theoretical advances in exploring the analytical form of this representation. In QNNs, the objective function is (or includes) the expectation value of a parametrised quantum circuit (PQC) [Citation97]. PQCs are quantum circuits built from rotation gates whose angles are continuous, tunable parameters. Fine-tuning the architecture of the PQC can have a direct effect on the performance of the resulting QNN model. Sec 2.2.2 reviews the various PQC parametrisations suggested in the literature.

The consequences of the choice of the loss function are outlined in Sec 2.3.1. Once this choice is made, one can evaluate the PQC and pass the result to the loss function to obtain a loss value. To minimise the loss, the trainable parameters must be tuned in the direction that reduces it most rapidly. This is achieved, in both classical and quantum ML, by calculating the gradient of the loss function with respect to the model parameters (footnote 2). The gradient vector of a function points in the direction of its maximal increase, so to reduce the loss function as fast as possible one computes the gradient and steps in the opposite direction. Sec 2.2.3 reviews the literature concerning QNN gradient computation.
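The training pipeline and the gradient step can be sketched end to end on the smallest possible QNN: a single qubit prepared as RY(θ)|0⟩, whose output ⟨Z⟩ = cos θ is fitted to a target value with a squared-error loss. The model, target, learning rate, and step count are illustrative assumptions; here the derivative is known in closed form, whereas on hardware it would be estimated with the methods reviewed in Sec 2.2.3.

```python
import math


def expectation_z(theta):
    """<Z> for the one-qubit circuit RY(theta)|0>, computed from its amplitudes."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)  # RY(theta)|0>
    return amp0**2 - amp1**2  # P(0) - P(1), which equals cos(theta)


def loss(theta, target):
    """Squared-error objective on the circuit output."""
    return (expectation_z(theta) - target) ** 2


def grad_loss(theta, target):
    """Chain rule: dL/dtheta = 2 (f - y) * df/dtheta, with df/dtheta = -sin(theta)."""
    return 2 * (expectation_z(theta) - target) * (-math.sin(theta))


def train(theta, target, lr=0.5, n_steps=100):
    """Plain gradient descent: step against the gradient to reduce the loss."""
    for _ in range(n_steps):
        theta -= lr * grad_loss(theta, target)
    return theta


theta = train(0.5, target=-0.5)
print(theta, expectation_z(theta), loss(theta, -0.5))
```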

2.2.1. Data encoding strategies

There are three overarching data encoding strategies [Citation98]:

State embedding: the features are mapped to the computational basis states. This is often used for categorical data, and as the number of basis states grows, the number of data points needs to follow the same trend; otherwise, the encoding will be sparse [Citation96].

Amplitude embedding: the features of the dataset are mapped to the amplitudes of the qubit states. This embedding could be repeated to increase the complexity of the encoding. For $n$ qubits, this method allows us to encode up to $2^n$ features onto the quantum system.

Observable embedding: the features are encoded in a Hamiltonian with respect to which the quantum system is measured. This encoding is typically used in quantum-native problems (see Sec 2.5.2), namely variational quantum eigensolvers (VQE) [Citation30] and quantum differential equation solvers [Citation99,Citation100].

It is important to recognise that state embedding is the only discrete-variable encoding with a strong resemblance to classical ML, whereas the other two are continuous-variable methods and can be considered analogue machine learning (footnote 3).

Amplitude embedding can be divided into sub-categories: angle embedding, state amplitude embedding, squeezing embedding, and displacement embedding [Citation101,Citation102]. Ref. [Citation98] provides an expressivity comparison between these encoding methods. Effective encoding strategies were analysed in [Citation99,Citation103–105].
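The encodings can be contrasted in a few lines of plain Python. This is a sketch under simple assumptions: basis (state) embedding of a bit string, angle embedding with one RY rotation per feature on its own qubit, and amplitude embedding of a feature vector whose length is already a power of two. Qubit 0 is taken as the most significant bit.

```python
import math


def kron(a, b):
    """Kronecker product of two state vectors given as lists."""
    return [x * y for x in a for y in b]


def basis_embedding(bits):
    """State embedding: a bit string becomes a computational basis state."""
    index = int("".join(str(b) for b in bits), 2)
    state = [0.0] * (2 ** len(bits))
    state[index] = 1.0
    return state


def angle_embedding(features):
    """Angle embedding: feature x_i sets RY(x_i) on qubit i (n features -> n qubits)."""
    state = [1.0]
    for x in features:
        state = kron(state, [math.cos(x / 2), math.sin(x / 2)])
    return state


def amplitude_embedding(features):
    """Amplitude embedding: 2**n features become the normalised amplitudes of n qubits."""
    n = len(features)
    if n == 0 or n & (n - 1):
        raise ValueError("pad the feature vector with zeros to a power-of-two length")
    norm = math.sqrt(sum(f * f for f in features))
    return [f / norm for f in features]


print(basis_embedding([1, 0]))
print(angle_embedding([0.0, math.pi]))
print(amplitude_embedding([3.0, 4.0, 0.0, 0.0]))
```

Angle embedding spends one qubit per feature, while amplitude embedding packs 2**n features into n qubits at the price of a state-preparation circuit that is generally harder to build.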

2.2.2. Parametrised architecture

The specific parametrisation of the network can dramatically change the output of a circuit. In classical neural networks, adding parameters improves model expressivity, whereas in a quantum circuit, parameters can become redundant in over-parametrised circuits [Citation106]. Additionally, the architecture must be trainable, and it was shown that trainability cannot be assumed a priori [Citation107], see Sec 2.2.5. Many architectures have been suggested in the literature, and many templates are readily available in QML packages [Citation108–110].

Ref. [Citation111] introduced a family of hardware-efficient architectures and used them as variational eigensolvers, see Sec 2.5.2. These architectures repeat layers of variational gates and use CNOT gates to create highly entangled states. Building on the discrete model of Ref. [Citation112], made continuous in Ref. [Citation113], a model using RZZ quantum gates was devised that was shown to be computationally expensive to simulate classically [Citation101,Citation114,Citation115]; it is known as the instantaneous quantum polynomial-time (IQP) ansatz.
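A hardware-efficient layout can be simulated on a few qubits with a plain state vector. In this sketch each layer applies an RY rotation to every qubit and then a linear chain of CNOTs; the RY-only gate set and the chain connectivity are simplifying assumptions, and Ref. [Citation111] uses its own choice of rotations. With a single layer on two qubits, RY(π/2) on the first qubit followed by the CNOT already produces a maximally entangled Bell state.

```python
import math


def apply_ry(state, qubit, theta, n_qubits):
    """Apply RY(theta) to one qubit of a real state vector (qubit 0 = most significant bit)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    new = state[:]
    step = 2 ** (n_qubits - 1 - qubit)
    for i in range(len(state)):
        if not i & step:  # target bit is 0 at i and 1 at its partner j
            j = i | step
            new[i] = c * state[i] - s * state[j]
            new[j] = s * state[i] + c * state[j]
    return new


def apply_cnot(state, control, target, n_qubits):
    """Apply CNOT by swapping amplitudes wherever the control bit is 1."""
    new = state[:]
    cbit = 2 ** (n_qubits - 1 - control)
    tbit = 2 ** (n_qubits - 1 - target)
    for i in range(len(state)):
        if i & cbit:
            new[i] = state[i ^ tbit]
    return new


def hardware_efficient_circuit(params, n_qubits):
    """Layers of single-qubit RY rotations, each followed by a CNOT chain.

    params is a list of layers, each holding one angle per qubit.
    """
    state = [0.0] * (2**n_qubits)
    state[0] = 1.0  # start in |0...0>
    for layer in params:
        for q, theta in enumerate(layer):
            state = apply_ry(state, q, theta, n_qubits)
        for q in range(n_qubits - 1):
            state = apply_cnot(state, q, q + 1, n_qubits)
    return state


def expectation_z(state, qubit, n_qubits):
    """<Z> on one qubit: +|amp|^2 where its bit is 0, -|amp|^2 where it is 1."""
    bit = 2 ** (n_qubits - 1 - qubit)
    return sum((-1 if i & bit else 1) * a * a for i, a in enumerate(state))


bell = hardware_efficient_circuit([[math.pi / 2, 0.0]], n_qubits=2)
print(bell)  # amplitude 1/sqrt(2) on |00> and |11>
```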

Another approach to creating quantum circuits is to take inspiration from tensor networks [Citation116]. Famous architectures in this class are the tree tensor network (TTN), the matrix product state (MPS), and the quantum convolutional neural network (QCNN) [Citation34,Citation117–119].

2.2.3. Gradient calculation

Despite its excessive memory usage [Citation120], the most prominent gradient calculation method in classical ML is the back-propagation algorithm [Citation121]. This method stores the output of every function that the trainable parameters pass through, together with that function's local derivative, and employs the chain rule to create an automatically differentiable ML routine. The back-propagation method can be (and has been [Citation122]) implemented for QML, but as it requires access to the quantum state-vector, it can only be used on a simulator and not on a real quantum processing unit (QPU). As quantum advantage can only occur in the latter setting, it is important to seek alternatives that can operate on QPUs.

The first proposed algorithm is the finite-difference differentiation method [Citation123]. As its name suggests, it calculates the gradient from the first-principles definition of a derivative, i.e. by adding a small finite difference to the trainable parameters one at a time and observing the resulting change in the output. This method is error-prone in the NISQ era: the step must be small for the approximation to be accurate, but then the change in the output is easily swamped by shot noise and hardware noise.
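A sketch of why finite differences are fragile on NISQ hardware: with exact expectation values a central difference is very accurate, but once ⟨Z⟩ is estimated from a finite number of shots, the statistical error is divided by the small step. The one-qubit model ⟨Z⟩ = cos θ, the shot count, and the step sizes are illustrative assumptions, and only shot noise is modelled, not hardware noise.

```python
import math
import random


def sampled_expectation(theta, shots, rng):
    """Estimate <Z> = cos(theta) for RY(theta)|0> from a finite number of shots."""
    p0 = math.cos(theta / 2) ** 2  # probability of measuring outcome 0
    zeros = sum(rng.random() < p0 for _ in range(shots))
    return (2 * zeros - shots) / shots  # (+1) * P(0) + (-1) * P(1)


def finite_difference(f, theta, h):
    """Central finite-difference approximation of df/dtheta."""
    return (f(theta + h) - f(theta - h)) / (2 * h)


exact = -math.sin(0.3)
rng = random.Random(1)
print("exact:", exact)
print("noise-free FD:", finite_difference(math.cos, 0.3, 1e-4))
print("1000-shot FD:", finite_difference(lambda t: sampled_expectation(t, 1000, rng), 0.3, 1e-2))
```

With 1000 shots and a step of 0.01, every sampled estimate of the derivative is a multiple of 0.1, so it cannot resolve the true value -sin(0.3) ≈ -0.2955, and the statistical error is typically much larger than that resolution limit.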

As an alternative, Ref. [Citation124] discovered the parameter-shift rule, which showed that an exact, analytic derivative can be calculated by evaluating the circuit twice for each trainable parameter. For a circuit output $f(\theta)$, the derivative with respect to a trainable parameter $\theta$ is half the difference between the circuit evaluated with $\theta$ shifted by $+\pi/2$ and with $\theta$ shifted by $-\pi/2$:

$$\frac{\partial f}{\partial \theta} = \frac{1}{2}\left[f\left(\theta + \frac{\pi}{2}\right) - f\left(\theta - \frac{\pi}{2}\right)\right].$$

This rule initially applied only to trainable parameters of Pauli rotations, but later works [Citation125–132] extended it to much more general parametrisations. The parameter-shift rule is the state-of-the-art gradient computation method and is compatible with QPUs, but one of its major problems is scalability. Since the number of circuit evaluations grows linearly with the number of trainable parameters, it limits how complex quantum models can get. A notable effort to mitigate this effect is to parallelise the gradient computation, which is now natively provided when using PennyLane on AWS Braket [Citation133].
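For gates of the form exp(-iθP/2), with P a Pauli operator, the two-term rule states ∂f/∂θ = [f(θ + π/2) - f(θ - π/2)]/2. The sketch below checks it on a one-qubit circuit RX(b)RY(a)|0⟩, chosen as an assumption because its output ⟨Z⟩ = cos a · cos b has a known gradient to compare against; note the two circuit evaluations per parameter.

```python
import math


def circuit_expectation(params):
    """<Z> after RX(b) RY(a) |0> on one qubit, simulated with complex amplitudes."""
    a, b = params
    amp0, amp1 = math.cos(a / 2), math.sin(a / 2)  # RY(a)|0>
    c, s = math.cos(b / 2), math.sin(b / 2)
    amp0, amp1 = c * amp0 - 1j * s * amp1, -1j * s * amp0 + c * amp1  # RX(b)
    return abs(amp0) ** 2 - abs(amp1) ** 2


def parameter_shift_grad(f, params, shift=math.pi / 2):
    """Gradient via the two-term parameter-shift rule for Pauli-rotation gates.

    Each parameter costs two circuit evaluations, so the total cost grows
    linearly with the number of parameters.
    """
    grad = []
    for k in range(len(params)):
        plus = list(params)
        minus = list(params)
        plus[k] += shift
        minus[k] -= shift
        grad.append((f(plus) - f(minus)) / 2)
    return grad


print(parameter_shift_grad(circuit_expectation, [0.4, 1.1]))
```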

As a transitional gradient computation method for QNNs, Ref. [Citation134] introduced the adjoint algorithm. Like back-propagation, the adjoint method can only be run on a simulator, and it obtains the entire gradient vector in a single backward sweep through the circuit rather than two circuit evaluations per parameter; however, it needs considerably less memory than back-propagation. It works by holding two state vectors in memory, the output state and a copy to which the measured observable has been applied, and then applying the adjoints of the gates in reverse order to both, calculating each gradient component along the way. This means that two overall passes through the circuit are made: the first to evaluate the output, and the second to compute the gradient.
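A one-qubit sketch of adjoint differentiation for ⟨Z⟩, assuming a circuit made only of RX and RY gates. After a forward pass, it keeps two vectors, the state |ψ⟩ and |λ⟩ = Z|ψ⟩, and walks backwards through the gates, undoing each one on both vectors and reading off one gradient component per gate as 2 Re⟨λ|∂U_k|ψ⟩. The gate set and single-qubit restriction are simplifications for illustration, not the implementation of Ref. [Citation134].

```python
import math


def ry(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -s], [s, c]]


def d_ry(t):
    """Derivative of the RY matrix with respect to its angle."""
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[-s / 2, -c / 2], [c / 2, -s / 2]]


def rx(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -1j * s], [-1j * s, c]]


def d_rx(t):
    """Derivative of the RX matrix with respect to its angle."""
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[-s / 2, -1j * c / 2], [-1j * c / 2, -s / 2]]


GATES = {"RY": (ry, d_ry), "RX": (rx, d_rx)}


def apply(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def dagger(m):
    return [[m[0][0].conjugate(), m[1][0].conjugate()],
            [m[0][1].conjugate(), m[1][1].conjugate()]]


def adjoint_gradient(ops, thetas):
    """Gradient of <Z> for |psi> = U_N ... U_1 |0> by adjoint differentiation."""
    psi = [1.0 + 0j, 0j]
    for name, t in zip(ops, thetas):  # forward pass
        psi = apply(GATES[name][0](t), psi)
    lam = [psi[0], -psi[1]]  # |lambda> = Z |psi>
    grads = [0.0] * len(thetas)
    for k in reversed(range(len(ops))):  # backward sweep
        gate, dgate = GATES[ops[k]]
        u_dag = dagger(gate(thetas[k]))
        psi = apply(u_dag, psi)  # state just before gate k
        mu = apply(dgate(thetas[k]), psi)  # dU_k/dtheta_k applied to that state
        grads[k] = 2 * (lam[0].conjugate() * mu[0] + lam[1].conjugate() * mu[1]).real
        lam = apply(u_dag, lam)  # move lambda back past gate k
    return grads


print(adjoint_gradient(["RY", "RX"], [0.4, 1.1]))
```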

Alternative suggestions have also been made to optimise QML models by following the geometry of their group space. Ref. [Citation135] suggested a Riemannian gradient flow over the Hilbert space, which showed favourable optimisation performance in hardware implementations.

2.2.4. Quantum neural networks as universal Fourier estimators

Ref. [Citation105] explored the effects of data encoding on the expressivity of the model. It proved that the data re-uploading technique suggested by Ref. [Citation136] creates a truncated Fourier series whose frequencies are limited by the number of repetitions of the encoding gates. Ref. [Citation137] also showed that QNNs can be universal Fourier estimators, an analogue of the universal approximation theorem for classical multi-layered perceptrons [Citation138]. Refs. [Citation105,Citation136] further proved that repeating the encoding strategy (in amplitude embedding, and more specifically in angle embedding) adds more Fourier bases to the final functional representation of the circuit, whether the repetitions are added in parallel, on additional qubits, or in series. This raised the question of the accessibility of these Fourier bases, i.e. whether their coefficients can be altered independently, which remains open at the time of this publication.
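The truncated-Fourier-series statement can be checked numerically on one qubit. In this sketch, assumed for illustration, fixed RY(w) gates alternate with RX(x) encoding gates; sampling the output on a uniform grid and taking a discrete Fourier transform shows non-zero coefficients only up to frequency L, where L is the number of encoding repetitions.

```python
import cmath
import math


def reuploading_model(x, weights):
    """<Z> of a one-qubit circuit alternating fixed RY(w) gates with RX(x) encodings.

    With len(weights) - 1 encoding gates, the output is a Fourier series in x
    with integer frequencies up to len(weights) - 1.
    """
    amp = [1 + 0j, 0j]
    for i, w in enumerate(weights):
        c, s = math.cos(w / 2), math.sin(w / 2)
        amp = [c * amp[0] - s * amp[1], s * amp[0] + c * amp[1]]  # RY(w)
        if i < len(weights) - 1:
            c, s = math.cos(x / 2), math.sin(x / 2)
            amp = [c * amp[0] - 1j * s * amp[1], -1j * s * amp[0] + c * amp[1]]  # RX(x)
    return abs(amp[0]) ** 2 - abs(amp[1]) ** 2


def fourier_magnitudes(f, n_samples=16):
    """|c_k| for k = 0..n_samples//2 from a DFT of f on a uniform grid over [0, 2*pi)."""
    xs = [2 * math.pi * j / n_samples for j in range(n_samples)]
    ys = [f(x) for x in xs]
    return [
        abs(sum(y * cmath.exp(-1j * k * x) for x, y in zip(xs, ys))) / n_samples
        for k in range(n_samples // 2 + 1)
    ]


# Two encoding repetitions (three hypothetical fixed weights): frequencies 0, 1, 2 only.
mags = fourier_magnitudes(lambda x: reuploading_model(x, [0.3, 1.2, -0.7]))
print([round(m, 6) for m in mags])
```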

2.2.5. Barren plateaus and trainability issues

QNNs could suffer from the problem of vanishing gradients. This is when during training, the gradient of the model tends to zero in all directions. This could severely affect the efficiency of the training or even bring it to a halt. This is known as the barren plateau (BP) problem.

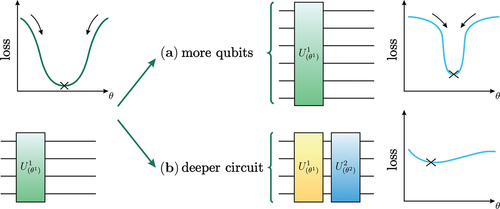

BPs are not usually at the centre of attention in classical ML, but their dominance in quantum architectures makes them one of the most important factors in choosing a circuit. Ref. [Citation107] showed that the expectation value of the derivative of a well-parametrised (footnote 4) quantum circuit is equal to zero, and that its variance decays exponentially with the number of qubits. Ref. [Citation139] confirmed that barren plateaus also affect gradient-free optimisation methods. In addition, Ref. [Citation140] showed that in the NISQ era, deep circuits flatten the overall loss landscape, resulting in noise-induced BPs. These are a mathematically different kind of barren plateau, which flattens the landscape as a whole. Figure 4 illustrates these phenomena.

Figure 4. Visualisation of the barren plateau phenomenon in (a) noise-free and (b) noise-induced settings.

These two findings painted a sobering picture for the future of QNNs, namely that they need to be shallow and low on qubit-count to be trainable, which contradicts the vision of high-dimensional, million-qubit QML models.

Many remedies have been proposed. Ref. [Citation141] suggested that instead of training all parameters at once, the training could be done layer-wise, and Ref. [Citation142] showed that if the depth of the variational layers of the QNN is of order $O(\log n)$, with $n$ the number of qubits, and only local measurements are made, the QNN remains trainable. This was tested on circuits with up to 100 qubits, and no BPs were detected. Other remedies include introducing correlations between the trainable parameters [Citation143,Citation144] and specific initialisations of the circuit parameters [Citation145] obtained by applying the adjoint operators of certain variational gates.

More analysis has been done on specific architectures: Ref. [Citation146] showed that, under well-defined weak conditions, the TTN architecture is free from the BP problem, and Ref. [Citation147] showed that the quantum convolutional neural network architecture introduced in Ref. [Citation34] is also free from BPs. Ref. [Citation148] developed a platform based on ZX calculus (Footnote 5) to analyse whether a given QNN suffers from BPs. In addition to confirming the results of the two earlier contributions, it also proved that matrix product state [Citation149] and hardware-efficient, strongly-entangling QNNs suffer from BPs. Furthermore, Ref. [Citation150] related the barren plateau phenomenon to the degree of entanglement present in the system.

2.3. Quantum learning theory

2.3.1. Supervised QML methods are kernel methods

In Refs. [Citation98,Citation151] the similarities between QNNs and kernel models were brought into focus. First introduced in Ref. [Citation152], kernel methods are well-established ML techniques with many applications. In conjunction with support vector machines (SVMs), they work by mapping the features of a dataset into a high-dimensional space through a function $\phi$ and then using a kernel function, $k(x, x') = \langle \phi(x), \phi(x') \rangle$, as a similarity measure between any two data points in this space. This is exactly the behaviour observed in QNNs: the features are first embedded into a high-dimensional quantum state vector, and the overlap of one encoded state with another gives the level of similarity between two points in this space. In this high-dimensional space, one hopes to gain better insight into the data, usually expressed as a decision boundary in the form of a hyperplane in classification tasks. Ref. [Citation153] used this link to develop a framework for searching for possible quantum advantages over classical models. It also showed that large models could scatter the data so far apart that the distance measure becomes too large to be useful for optimisation, and proposed adding an intermediate step that maps the high-dimensional space into a lower-dimensional one to improve training performance.

2.3.2. Bayesian inference

Bayesian inference is an alternative approach to statistical learning in which Bayes’ theorem [Citation154] is used to update an initial assumption about the problem (the prior distribution) based on newly observed data (the evidence) to obtain a posterior distribution. Bayesian learning applies this logic to ML: every parameter in the network is assigned a distribution, and these distributions are updated during training. Calculating the posterior distribution is generally computationally expensive, but it can be approximated using a technique known as variational inference [Citation155,Citation156]; this approach has been used to demonstrate an approximate back-propagation algorithm for Bayesian neural networks (BNNs), referred to as Bayes-by-backprop.

The first implementations of Bayesian QNNs were in Refs. [Citation157–159], which attempted to turn quantum circuits into exact Bayesian inference machines. Ref. [Citation160] introduced two efficient but approximate methods, one from kernel theory and another using a classical adversary, for using QNNs to perform variational inference. The approach relies on a quantum circuit that can be parametrised to produce the probability distribution of a phenomenon by exploiting the probabilistic nature of quantum mechanics, known as a Born machine [Citation161,Citation162] or quantum generative model [Citation149,Citation163–168]. This could later be used to quantify the prediction error for a single data point, as has been done classically in Ref. [Citation169].
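A Born machine draws samples from the distribution $p(b) = |\langle b|\psi(\theta)\rangle|^2$ over measurement bitstrings $b$. The two-qubit sketch below, with an $R_Y$, CNOT, $R_Y$ circuit chosen by us purely for illustration, computes this distribution and simulates computational-basis measurements; training the parameters to match data is omitted.

```python
import numpy as np

def ry(theta):
    """Real single-qubit rotation RY(theta)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

# CNOT with qubit 0 (the most significant bit) as control
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

def born_distribution(params):
    """Probabilities |<b|psi(params)>|^2 for bitstrings b = 00, 01, 10, 11."""
    psi = np.zeros(4)
    psi[0] = 1.0
    psi = np.kron(ry(params[0]), ry(params[1])) @ psi
    psi = CNOT @ psi
    psi = np.kron(ry(params[2]), ry(params[3])) @ psi
    return np.abs(psi) ** 2

def sample(params, shots, rng):
    """Draw bitstrings as a computational-basis measurement would."""
    outcomes = rng.choice(4, size=shots, p=born_distribution(params))
    return [format(int(o), "02b") for o in outcomes]

rng = np.random.default_rng(0)
print(born_distribution([np.pi / 2, 0.0, 0.0, 0.0]))
print(sample([np.pi / 2, 0.0, 0.0, 0.0], 10, rng))
```

With `params = [np.pi / 2, 0, 0, 0]` the circuit prepares the Bell state $(|00\rangle + |11\rangle)/\sqrt{2}$, so `born_distribution` returns approximately `[0.5, 0, 0, 0.5]` and only the bitstrings `00` and `11` are sampled.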

2.3.3. Model complexity and generalisation error bounds

Intuitively, complex phenomena require complex modelling, but quantifying the complexity of a given model is non-trivial. There are multiple ways of defining model complexity: Vapnik-Chervonenkis (VC) dimension [Citation170], Rademacher complexity [Citation171], and effective dimension [Citation172] (Footnote 6). These complexity measures are also connected to the generalisation error: when the model becomes too complex for the problem, generalisation is expected to worsen.

Much work has been done to quantify the complexity and generalisation error of quantum neural networks: Ref. [Citation173] derived a generalisation error bound through the Rademacher complexity that explicitly accounts for the encoding strategy, and Ref. [Citation174] used the effective dimension, a measure dependent on the sample size, to bound the generalisation error of QNNs and to prove their higher expressivity for the same number of trainable parameters. Other attempts to quantify the complexity (also referred to as the expressivity) of QNNs were made in Refs. [Citation175–179] (Footnote 7). Notably, Ref. [Citation180] proved theoretically that the generalisation error of a QNN scales at worst as $\sqrt{T/N}$, where $T$ is the number of parametrised quantum gates in the QNN and $N$ is the number of data samples in the dataset. This result implies that QML models with few trainable gates can generalise well from relatively few data points.

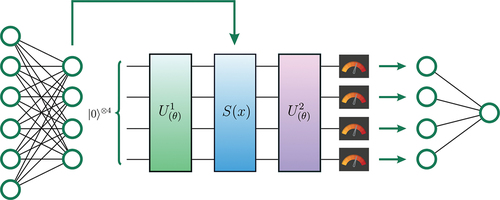

2.4. Hybrid quantum neural networks

Just as there are classical and quantum models, one could also combine the two to create hybrid models (see Figure 5). It is conceivable that in the NISQ era, one could use the understanding of QML described in Sec. 2.3 to find a regime where quantum models cover some bases that classical models do not. Ref. [Citation41] developed a platform for hybrid quantum high-performance computing (HQC) cloud, which was deployed on QMware hardware [Citation181,Citation182]. It showed that, for high-dimensional data, combining classical and quantum networks could offer two advantages: computational speed and solution quality. The data points were first fed to a shallow (Footnote 8) quantum circuit composed of four qubits, two of which were measured, and the corresponding values were passed on to a neural network. Two classical datasets were chosen to explore the effectiveness of the hybrid solution and to compare it with a purely classical network obtained by removing the quantum part: the scikit-learn circles dataset [Citation183] and the Boston housing dataset [Citation184]. The former is a synthetic geometrical dataset consisting of two concentric circles in a two-dimensional square, and the latter relates property values to the population status of the area and the number of rooms. In both cases, the hybrid network was shown to generalise better than the classical one, and this difference was most visible for very small training sets. However, the difference became smaller as the number of samples grew.
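The forward pass of such a hybrid model can be sketched in NumPy as follows. Only the overall shape (four qubits, two of them measured, expectation values fed to a classical network) follows the description of Ref. [Citation41]; the feature encoding (each of two input features driving two qubits), the CNOT ring, the single trainable $R_Y$ layer, and the small tanh network are hypothetical choices, and training is left to an autodiff framework.

```python
import numpy as np

N_QUBITS = 4

def ry(theta):
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def apply_1q(psi, gate, q):
    """Apply a 2x2 gate to qubit q (qubit 0 = most significant bit)."""
    t = np.tensordot(gate, psi.reshape([2] * N_QUBITS), axes=([1], [q]))
    return np.moveaxis(t, 0, q).reshape(-1)

def apply_cnot(psi, control, target):
    """Swap amplitudes so that basis states with control bit 1 get their target bit flipped."""
    out = psi.copy()
    for index in range(len(psi)):
        if (index >> (N_QUBITS - 1 - control)) & 1:
            out[index ^ (1 << (N_QUBITS - 1 - target))] = psi[index]
    return out

def quantum_layer(x, weights):
    """Encode a 2-feature input on 4 qubits, entangle, return <Z> on qubits 0 and 1."""
    psi = np.zeros(2 ** N_QUBITS)
    psi[0] = 1.0
    angles = np.tile(x, 2)                           # hypothetical: each feature drives two qubits
    for q in range(N_QUBITS):
        psi = apply_1q(psi, ry(angles[q]), q)
    for q in range(N_QUBITS):
        psi = apply_cnot(psi, q, (q + 1) % N_QUBITS)  # ring of CNOTs
    for q in range(N_QUBITS):
        psi = apply_1q(psi, ry(weights[q]), q)        # trainable layer
    probs = (np.abs(psi) ** 2).reshape([2] * N_QUBITS)
    z0 = probs[0].sum() - probs[1].sum()
    z1 = probs[:, 0].sum() - probs[:, 1].sum()
    return np.array([z0, z1])

def hybrid_forward(x, params):
    """Quantum layer -> small classical network -> probability of the positive class."""
    q_out = quantum_layer(x, params["theta"])
    hidden = np.tanh(params["W1"] @ q_out + params["b1"])
    logit = params["W2"] @ hidden + params["b2"]
    return 1.0 / (1.0 + np.exp(-logit))

rng = np.random.default_rng(0)
params = {
    "theta": rng.uniform(0, 2 * np.pi, N_QUBITS),
    "W1": rng.normal(size=(8, 2)), "b1": np.zeros(8),
    "W2": rng.normal(size=8), "b2": 0.0,
}
print(hybrid_forward(np.array([0.3, -0.7]), params))
```

Swapping the order (classical layers first, quantum layer in the middle) only changes how `hybrid_forward` composes the pieces, which is the design dimension explored in the architecture search discussed next.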

Figure 5. An example of a hybrid quantum-classical ML model. In this case, the inputs are passed into a fully connected classical multi-layered perceptron, and its outputs are fed into the embedding of a quantum circuit. Depending on the setting, some measurements of this quantum circuit are taken and then passed into another fully connected layer, the output of which can be compared with the label.

Building on this, Ref. [Citation44] proposed a hyperparameter optimisation scheme aimed at architecture selection for hybrid networks. This work also trained a hybrid network, but in two new ways: 1) using a real-world image recognition dataset [Citation185], and 2) inserting the quantum part of the hybrid network in the middle of the classical network. Additionally, the architecture of the quantum part was subject to hyperparameter optimisation, namely the number of qubits used and the number of repeated layers. Training showed that the hybrid network achieved better-quality solutions, albeit by a small margin. Notably, this architecture optimisation yielded a highly improved quantum circuit, whose performance was analysed theoretically using methods such as ZX reducibility [Citation186], Fourier analysis [Citation105], Fisher information [Citation106,Citation187,Citation188], and the effective dimension [Citation172,Citation174].

2.5. Applications and realisations

QML automatically inherits all classical ML problems and implementations, as it is simply a different model to apply to data science challenges. In addition to this inheritance, QML research has also provided novel, quantum-native solutions. In both cases, QML has so far been unable to provide a definite, practical advantage over classical alternatives, and all the suggested advantages are purely theoretical.

2.5.1. Solving classical problems

QML is employed in many classical applications. Some notable contributions are in the sciences [Citation189–195], in finance [Citation42,Citation196–198], and in the pharmaceutical [Citation43,Citation45] and automotive industries [Citation44]. In many cases, these models replaced a previously known classical setting [Citation199–202]. Quantum generative adversarial networks were suggested in Ref. [Citation203] and followed by Refs. [Citation204–211]. Similarly, quantum recurrent neural networks were investigated in Refs. [Citation212,Citation213], and two approaches to image recognition were proposed in Refs. [Citation34,Citation214]. Ref. [Citation215] looked at a classical-style approach to quantum natural language processing. The applications of QML in reinforcement learning were also explored in Refs. [Citation38,Citation216–218] (Footnote 9). Finally, a celebrated application is the quantum auto-encoder, in which data are compressed and then reconstructed from less information; a notable proposal was made in Ref. [Citation219].

2.5.2. Quantum-native problems

Native problems are novel, quantum-inspired ML problems that are specifically designed to be solved by a QML algorithm.

Perhaps the best-known QML algorithm is the variational quantum eigensolver (VQE). In this problem, the input data is a Hamiltonian, and the task is to find its ground state and ground-state energy. The VQE solution consists of preparing a PQC with trainable parameters and measuring the expectation value of the Hamiltonian. This yields the energy of the prepared state, which by the variational principle is bounded from below by the ground-state energy; minimising this expectation value therefore approaches the ground-state energy, at which point the prepared state represents the ground state of the problem. VQE was first implemented in Ref. [Citation30] and was then substantially extended in Ref. [Citation59]. It remains one of the most promising areas of QML.
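A deliberately minimal VQE, assuming a one-qubit toy Hamiltonian $H = 0.5Z + 0.8X$ and the ansatz $R_Y(\theta)|0\rangle$ (both our own choices), shows the loop: evaluate the energy expectation, estimate its gradient with the parameter-shift rule, and descend. The result is checked against exact diagonalisation.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
H = 0.5 * Z + 0.8 * X            # toy Hamiltonian playing the role of the input data

def ansatz(theta):
    """RY(theta)|0> = [cos(theta/2), sin(theta/2)]."""
    return np.array([np.cos(theta / 2), np.sin(theta / 2)])

def energy(theta):
    """Energy expectation <psi(theta)|H|psi(theta)>."""
    psi = ansatz(theta)
    return float(psi @ H @ psi)

def vqe(theta=0.1, lr=0.2, steps=300):
    """Gradient descent with parameter-shift gradients."""
    for _ in range(steps):
        grad = 0.5 * (energy(theta + np.pi / 2) - energy(theta - np.pi / 2))
        theta -= lr * grad
    return theta, energy(theta)

theta_opt, e_opt = vqe()
exact = np.linalg.eigvalsh(H)[0]
print("VQE energy:", e_opt, " exact ground-state energy:", exact)
```

Here $\langle H\rangle(\theta) = 0.5\cos\theta + 0.8\sin\theta$, whose minimum $-\sqrt{0.89} \approx -0.943$ is the exact ground-state energy. On hardware, each energy evaluation would instead be estimated from repeated measurements, which adds shot noise to the gradient.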

Ref. [Citation25] showed that a quantum computer can be used to solve a linear system of equations (LSE), proposing what is commonly known as the Harrow, Hassidim, and Lloyd (HHL) algorithm. Refs. [Citation220–227] improved this algorithm, and Ref. [Citation228] extended it to also include non-linear differential equations. Ref. [Citation100] showed that it is possible to use a quantum feature map to solve non-linear differential equations with QNNs. This is also an exciting and promising area of QML.

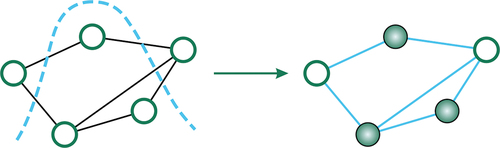

An important QML formulation is quadratic unconstrained binary optimisation (QUBO) [Citation229,Citation230]. This is generally a classical problem, but by mapping it onto the Ising model (see [Citation231]) it can be solved on a quantum computer [Citation232,Citation233]. A common demonstration of the latter is the max cut problem [Citation234] (see Figure 6). There are solutions for the QUBO problem on both gate-based quantum computers and quantum annealers [Citation235–237], and this general concept has seen use in many sectors [Citation238].
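The QUBO and Ising views of max cut can be compared directly on small graphs. The sketch below, an illustrative construction rather than code from the cited works, writes max cut as minimising $x^\top Q x$ over $x \in \{0,1\}^n$, converts the optimum to spins $s_i = 1 - 2x_i$, and checks that the Ising energy $E(s) = \sum_{(i,j)} s_i s_j$ gives the same cut. Brute force is used only because the graphs are tiny; it is exactly the exponential search that annealers and gate-based heuristics aim to avoid.

```python
import itertools
import numpy as np

def maxcut_qubo(n, edges):
    """QUBO matrix Q such that x^T Q x = -(number of cut edges) for x in {0,1}^n."""
    Q = np.zeros((n, n))
    for i, j in edges:
        # edge (i, j) is cut iff x_i + x_j - 2 x_i x_j == 1 (and x_i^2 = x_i for binaries)
        Q[i, i] -= 1
        Q[j, j] -= 1
        Q[i, j] += 1
        Q[j, i] += 1
    return Q

def ising_energy(spins, edges):
    """Ising form E(s) = sum over edges of s_i s_j; cut(s) = (|edges| - E(s)) / 2."""
    return sum(spins[i] * spins[j] for i, j in edges)

def brute_force_maxcut(n, edges):
    """Minimise the QUBO exhaustively and report the cut in both formulations."""
    Q = maxcut_qubo(n, edges)
    best = min(itertools.product([0, 1], repeat=n),
               key=lambda x: np.array(x) @ Q @ np.array(x))
    x = np.array(best)
    cut_qubo = -(x @ Q @ x)
    spins = 1 - 2 * x
    cut_ising = (len(edges) - ising_energy(spins, edges)) / 2
    return best, cut_qubo, cut_ising

square = [(0, 1), (1, 2), (2, 3), (3, 0)]
print(brute_force_maxcut(4, square))   # partition (0, 1, 0, 1) cuts all 4 edges
```

The minimising assignment separates vertices {0, 2} from {1, 3}, and both formulations report a cut of 4 for the square graph.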

Figure 6. The max cut problem. The abstract manifestation of this problem is a general graph, and we are interested in finding a partition of its vertices into two complementary sets such that the number of edges connecting the two sets is maximal.

Lastly, another quantum-native formulation arises in natural language processing. Ref. [Citation94] developed a platform for turning grammatical sentences into quantum categories using J. Lambek’s work [Citation239]. Refs. [Citation240–242] tested this algorithm on quantum hardware, and later a full QNLP package was developed [Citation243]. The initial value proposition of QNLP in this form is that the algorithm is natively grammar-aware; but given that large classical language models have been shown to infer grammar [Citation80], the real advantage of this approach could lie elsewhere, such as in a potential Grover-style [Citation244] speedup in text classification.

2.6. Open questions

2.6.1. Quantum advantage

Despite the theoretical findings in Sec. 2.3.3, there is limited demonstrable success in using QML for real-life problems, and this is not purely due to hardware shortcomings. Ref. [Citation245] showed that there exists a class of datasets that could showcase quantum advantage, and Ref. [Citation153] found a mathematical formulation of where such an advantage can be expected. In Refs. [Citation246–248], attempts were also made to devise a set of rules for potential quantum advantage. However, Ref. [Citation249] argued that shifting the perspective from quantum advantage to alternative research questions could unlock a better understanding of QML. The suggested research questions were: finding an efficient building block for QML, finding bridges between statistical learning theory and quantum computing, and making QML software ready for scalable ML (Footnote 10).

2.6.2. Optimal parametrisation

In Sec 2.2.2, we encountered various QNN parametrisations with specific properties. An open question is how to optimally parametrise a circuit to avoid barren plateaus, be as expressive as possible, and be free of redundancy. A potential characteristic of such a parametrisation is a high level of Fourier accessibility as mentioned in Sec 2.2.4, potentially requiring a quantifiable measure of this accessibility.

2.6.3. Theory for hybrid models

Despite the successes outlined in Sec. 2.4, the theoretical grounding for such models is limited. We saw that hybrid networks performed well when the quantum section was introduced at the beginning of the model architecture [Citation41] or in the middle [Citation44]. This needs to be investigated in more detail from an information-theoretic perspective to shed light on the effect of hybridisation. Such an investigation could identify whether there are regimes where a quantum part complements a classical network, either by introducing an information bottleneck to prevent over-fitting or by creating high-dimensional models.

2.6.4. An efficient optimisation method

Current gradient calculation methods are either only available on simulators or require a number of circuit evaluations that grows linearly with the number of parameters (see Sec. 2.2.3). Neither can accommodate a billion-parameter, million-qubit setting. This poses a barrier to the future of QML, and an efficient optimisation method is therefore needed in the long term.

3. Quantum technologies enhanced by machine learning

In this section, we discuss the impact of machine learning on quantum technologies. The outline of the topics is given in Figure 7. Today, machine learning is used to realize algorithms and protocols in quantum devices by autonomously learning how to control [Citation35,Citation250–253], error-correct [Citation36,Citation254–256], and measure quantum devices [Citation257]. Given experimental data, ML can reconstruct quantum states of physical systems [Citation258–261], learn compact representations of these states [Citation262,Citation263], and validate the experiment [Citation264]. We discuss the impact of machine learning on fundamental and applied physics, and give specific examples from quantum computing and quantum communication.

Figure 7. Machine learning helps in solving problems in fundamental and applied quantum physics. Sections that discuss a particular problem are labeled.

3.1. Machine learning in fundamental and applied quantum physics

A century after its full development in the mid-1920s, quantum mechanics is still considered the most powerful physical theory, modeling a wide range of phenomena from subatomic to cosmological scales with the highest precision. Although the measurement problem and quantum gravity have led many physicists to conclude that quantum mechanics cannot be a complete theory, the ‘spooky action’ of entanglement in the Einstein-Podolsky-Rosen pair [Citation265] has provided the resources for quantum information processing tasks. With machine learning, one may be able to model different physical systems (e.g. quantum, statistical, and gravitational) using artificial neural networks, which might lead to the development of a new framework for fundamental physics.

Even without a precise description of a physical apparatus, and based solely on measurement data, one can prove the quantumness of some observed correlations through the device-independent test of Bell nonlocality [Citation266]. In particular, a machine learning approach that uses generative algorithms to automatically combine many multilayer perceptrons (MLPs) may allow the detection and quantification of nonlocality as well as of its quantum (or postquantum) nature [Citation267–269].

3.1.1. Machine learning in quantum computing

Machine learning has also become an essential element of applied quantum information science and quantum technologies. Inspired by the success of automated design [Citation31], ML was demonstrated to be capable of designing new quantum experiments [Citation32].

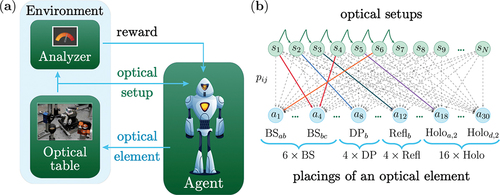

Quantum experiments represent an essential step towards creating a quantum computer. For example, three-particle photonic quantum states are a building block for a photonic quantum computing architecture. In Ref. [Citation32], the ML algorithm is a reinforcement learning algorithm based on the projective simulation model [Citation270–275]. An agent, the reinforcement learning algorithm, places optical elements on a (simulated) optical table. Each action adds an optical element to the existing setup. If the resulting setup achieves the goal, e.g. creates a desired multiphoton entangled state, the agent receives a reward. The described learning scheme is depicted in Figure 8(a).

Figure 8. A reinforcement learning algorithm that designs a quantum experiment. An experiment on an optical table is shown as an example. (a) The learning scheme depicts how an agent, the reinforcement learning algorithm, learns to design quantum experiments. (b) Representation of the reinforcement learning algorithm, projective simulation, as a two-layered network of clips.

The initial photonic setup is the output of a double spontaneous parametric down-conversion (SPDC) process in two nonlinear crystals. Neglecting higher-order terms in the down-conversion, the initial state can be written as a tensor product of two orbital-angular-momentum (OAM) entangled photon pairs,

$$|\psi(0)\rangle = \frac{1}{3}\sum_{l,m=-1}^{1} |l\rangle_a\,|{-l}\rangle_b\,|m\rangle_c\,|{-m}\rangle_d, \tag{1}$$

where the indices $a$, $b$, $c$, and $d$ specify the four arms of the optical setup. The actions available to the agent consist of beam splitters (BS), mirrors (Refl), shift-parametrized holograms (Holo), and Dove prisms (DP). The final photonic state $|\psi_{\rm f}\rangle$ is obtained by measuring arm $a$ and post-selecting the state in the other arms based on the measurement outcome in $a$.

The reinforcement learning algorithm that achieves the experimental designs is shown in Figure 8(b). The projective simulation agent is represented by a two-layered network of clips. The first layer corresponds to the current state of the optical setup, whereas the second layer is the layer of actions. The connections between the layers define the memory of the agent, which changes during the learning process. The connectivities correspond to the probabilities of reaching a certain action in a given state of the quantum optical setup. During the learning process, the agent automatically adjusts the connectivities and thereby prioritizes some actions over others. As shown in Ref. [Citation32], this leads to the realization of a variety of entangled states with improved efficiency.
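The two-layer clip network can be sketched as a table of edge strengths $h$ between percept clips and action clips, with hopping probabilities $p = h/\sum h$. The update below uses the basic projective simulation rule: all $h$-values are damped towards 1 with rate $\gamma_{\rm d}$, and the reward $r$ is added to the edge that was traversed; the glow mechanism is omitted. The toy environment, in which an action is rewarded when it matches the percept, is a hypothetical stand-in for "the optical setup reached the goal", and all names are our own.

```python
import numpy as np

class TwoLayerPS:
    """Minimal two-layer projective simulation agent: percept clips -> action clips."""

    def __init__(self, n_percepts, n_actions, damping=0.001, seed=0):
        self.h = np.ones((n_percepts, n_actions))   # edge strengths (h-values)
        self.damping = damping
        self.rng = np.random.default_rng(seed)

    def probabilities(self, percept):
        """Hopping probabilities from a percept clip: p = h / sum(h)."""
        row = self.h[percept]
        return row / row.sum()

    def act(self, percept):
        return int(self.rng.choice(self.h.shape[1], p=self.probabilities(percept)))

    def learn(self, percept, action, reward):
        # damping pulls every h-value back towards 1; the traversed edge gains the reward
        self.h -= self.damping * (self.h - 1.0)
        self.h[percept, action] += reward

def toy_environment(percept, action):
    """Hypothetical task: the action matching the percept is rewarded."""
    return 1.0 if action == percept else 0.0

agent = TwoLayerPS(n_percepts=2, n_actions=4)
env_rng = np.random.default_rng(1)
for _ in range(2000):
    percept = int(env_rng.integers(2))
    action = agent.act(percept)
    agent.learn(percept, action, toy_environment(percept, action))
print(agent.probabilities(0), agent.probabilities(1))
```

In Ref. [Citation32] the percepts are optical setups and the actions are optical elements, so the clip network grows as new setups are encountered; the fixed table here only illustrates how rewarded connections come to dominate the agent's choices.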

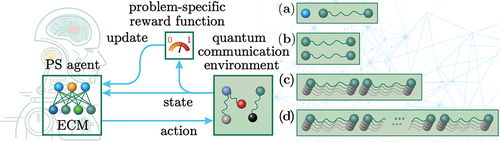

3.1.2. Machine learning in quantum communication

In addition to designing new experiments, ML helps in designing new quantum algorithms [Citation276] and protocols [Citation277]. Designing new algorithms and protocols resembles experiment design: in particular, every protocol can be broken down into individual actions. In the case of a quantum communication protocol, these actions are, e.g., applying a gate to the second qubit, applying another gate to the first qubit, sending the third qubit to a given location, and measuring the first qubit in a given basis. Because of the combinatorial nature of the design problem, the number of possible protocols grows exponentially with the number of actions that make up a protocol. For that reason, a brute-force search for a solution is infeasible given the estimated number of possible states of a quantum communication environment [Citation277].

A reinforcement learning approach to quantum protocol design, first proposed in Ref. [Citation277], was shown to be applicable to a variety of quantum communication tasks: quantum teleportation, entanglement purification, and quantum repeaters. The learning setting is shown schematically in Figure 9. The agent perceives the state of the quantum environment and chooses an action based on the projective simulation deliberation process. The projective simulation network used in this work is similar to the one in Figure 8(b), with the addition of hierarchical skill acquisition. This skill is particularly important in the long-distance quantum communication setting, which has to include multiple repeater schemes.

Figure 9. A reinforcement learning algorithm that designs a long-distance quantum communication protocol. The algorithm is based on projective simulation with episodic and compositional memory (ECM). Given the state of the quantum communication environment, the algorithm chooses how to modify this state by acting on the environment. The goals of the PS agent are: (a) teleportation protocol (b) entanglement purification protocol (c) quantum repeater protocol (d) quantum repeater protocol for long-distance quantum communication.

With the help of projective simulation, it was demonstrated that reinforcement learning can play a helpful assisting role in designing quantum communication protocols. The use of ML in protocol design is not limited to rediscovering existing protocols: the agent finds new protocols that outperform existing ones in situations that lack certain symmetries assumed by the known basic protocols (Footnote 11).

3.2. Machine learning in random walk problems

The random walk paradigm plays an important role in many scientific fields related to the transfer of charge, energy, or information [Citation278–282]. Random (classical) walks on graphs are an indispensable tool for many subroutines in computational algorithms [Citation283–285]. Quantum walks (QWs) generalize classical walks to the quantum domain, using quantum particles instead of classical ones [Citation286,Citation287]. The resulting quantum interference pattern, which governs QW physics, differs fundamentally from the classical one [Citation288]. For quantum information science, it is crucially important that a quantum particle exhibits quantum parallelism, which arises from interference between different paths and from entanglement. It has been shown that a quantum particle propagates quadratically faster than a classical one on certain graphs, namely line [Citation289], cycle [Citation290,Citation291], hypercube [Citation292,Citation293], and glued trees graphs [Citation294]. Algorithms based on QWs are therefore expected to demonstrate a quadratic speedup. Such parallelism may be useful for quantum information processing and quantum algorithms [Citation294–296]. It is especially important to note that QWs are used in quantum search algorithms, which are important tools for speeding up QML algorithms [Citation21,Citation297–299].
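The quadratic speedup on the line can be seen numerically. The sketch below evolves a continuous-time quantum walk, $|\psi(t)\rangle = e^{-iAt}|\psi(0)\rangle$, and a classical continuous-time random walk, $\mathbf{p}(t) = e^{-L_{\rm G}t}\,\mathbf{p}(0)$ with graph Laplacian $L_{\rm G} = D - A$, from the centre of a long line and reports the spread of each. Unit hopping rates and the line length are our own assumptions.

```python
import numpy as np

def line_adjacency(n_sites):
    """Adjacency matrix of a path (line) graph with unit hopping."""
    A = np.zeros((n_sites, n_sites))
    i = np.arange(n_sites - 1)
    A[i, i + 1] = 1.0
    A[i + 1, i] = 1.0
    return A

def spreads(t, half_width=100):
    """Standard deviation of the position at time t for quantum and classical walks."""
    n = 2 * half_width + 1
    A = line_adjacency(n)
    x = np.arange(n) - half_width            # positions relative to the starting vertex
    start = np.zeros(n)
    start[half_width] = 1.0

    # quantum walk: psi(t) = exp(-i A t) psi(0), via the eigendecomposition of A
    evals, evecs = np.linalg.eigh(A)
    psi = evecs @ (np.exp(-1j * evals * t) * (evecs.T @ start))
    p_quantum = np.abs(psi) ** 2

    # classical continuous-time walk: p(t) = exp(-L_G t) p(0), with L_G = D - A
    lap = np.diag(A.sum(axis=1)) - A
    lvals, lvecs = np.linalg.eigh(lap)
    p_classical = lvecs @ (np.exp(-lvals * t) * (lvecs.T @ start))

    # both distributions are symmetric about the start, so the mean position is 0
    sigma_q = float(np.sqrt(np.sum(p_quantum * x ** 2)))
    sigma_c = float(np.sqrt(np.sum(p_classical * x ** 2)))
    return sigma_q, sigma_c

for t in (5.0, 10.0, 20.0):
    sq, sc = spreads(t)
    print(f"t = {t:4.1f}: quantum sigma = {sq:7.3f}, classical sigma = {sc:6.3f}")
```

The quantum spread grows ballistically, $\sigma_{\rm q} = \sqrt{2}\,t$, while the classical one grows diffusively, $\sigma_{\rm c} = \sqrt{2t}$; reaching a distance $d$ therefore takes a time of order $d$ rather than $d^2$.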

Notably, demonstrating QW speedup on arbitrary graphs remains an open problem [Citation300]. The standard approach would be to simulate quantum and classical dynamics on a given graph, which shows in which case a particle arrives at a target vertex faster. However, this approach has two drawbacks. First, it may be difficult (and costly) for graphs with a large number of vertices, since the propagation time scales polynomially with the size of the graph. Second, we are usually interested in sets of graphs, and simulation results for individual graphs cannot reveal general features of quantum advantage.

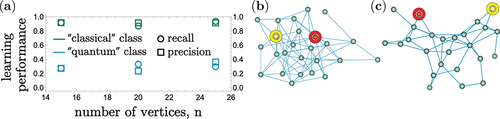

In a number of works, we tackled this problem using an ML approach [Citation39,Citation40,Citation301]. We explore a supervised learning approach that predicts a quantum speedup just by looking at a graph. In particular, we designed a classical-quantum convolutional neural network (CQCNN) that learns from graph samples to recognize the quantum speedup of random walks.

The basic concept of the CQCNN approach used in Refs. [Citation39,Citation40,Citation301] is shown in Figures 10 and 11. In particular, in Refs. [Citation39,Citation40,Citation301–303] we examined continuous-time random walks and supposed that the classical random walk represents a stochastic (Markovian) process defined on a connected graph. It starts at time $t = 0$ from the initial vertex $v_{\rm in}$ and hits the target vertex $v_{\rm out}$. Unlike a classical particle, a quantum particle is ‘smeared’ across all vertices of the graph due to interference. Thus, in the quantum case, we introduce an additional (sink) vertex $v_{\rm s}$ that is connected to the target vertex and localizes the quantum particle through energy relaxation from $v_{\rm out}$ to $v_{\rm s}$, which happens at the rate $\gamma$. In other words, the quantum particle may be permanently monitored at the sink vertex. Mathematically, a graph is characterized by its weighted adjacency matrix $A$, which defines the Hamiltonian $H$ of the walk. Note that for chiral QWs, time asymmetry may be obtained by using complex-valued adjacency matrix elements [Citation301]. We characterize quantum transport by means of the Gorini–Kossakowski–Sudarshan–Lindblad equation (cf. [Citation304]):

$$\frac{d\rho(t)}{dt} = -(1-p)\,i\,[H, \rho(t)] + p\sum_{k,l}\Big(L_{kl}\,\rho(t)\,L_{kl}^{\dagger} - \tfrac{1}{2}\big\{L_{kl}^{\dagger}L_{kl}, \rho(t)\big\}\Big) + \gamma\Big(L_{\rm s}\,\rho(t)\,L_{\rm s}^{\dagger} - \tfrac{1}{2}\big\{L_{\rm s}^{\dagger}L_{\rm s}, \rho(t)\big\}\Big), \tag{2}$$

where $\rho(t)$ is the time-dependent density operator, and the operators $L_{kl}$ and $L_{\rm s}$ characterize transitions from vertex $l$ to vertex $k$ and from $v_{\rm out}$ (target) to $v_{\rm s}$ (sink), respectively; $\gamma$ is the coupling parameter between the target and sink vertices. The parameter $p$ satisfies $0 \le p \le 1$ and determines the decoherence: $p = 0$ corresponds to purely quantum transport, while $p = 1$ corresponds to a completely classical random walk.

Figure 10. A schematic representation of the random walks considered in Refs. [Citation39,Citation40] on (a) a connected random graph and (b) a cycle graph. The labels $v_{\rm in}$ and $v_{\rm out}$ specify the initial and target vertices, respectively; $v_{\rm s}$ is a sink vertex, which is required to localize and detect the quantum particle; $\gamma$ is the coupling parameter between the target and sink vertices.

![Figure 10. A schematic representation of the random walks considered in Refs. [Citation39,Citation40] on (a) a connected random graph and (b) a cycle graph, with initial, target, and sink vertices and the target-sink coupling marked.](/cms/asset/b2cd6ae8-4354-489c-bba7-c515d9dc00f4/tapx_a_2165452_f0010_oc.jpg)

Figure 11. Schematic representation of the CQCNN approach used for predicting the quantum speedup on the graphs represented in Figure 10. (a) Scheme of the CQCNN architecture. The neural network takes a labeled graph in the form of an adjacency matrix as input. The graph is then processed by convolutional layers with graph-specific ‘edge-to-edge’ and ‘edge-to-vertex’ filters. These filters act as functions of a weighted total number of neighboring vertices of each vertex. The convolutional layers are connected to fully connected layers, which classify the input graph. Data and error propagation are shown with arrows. (b) and (c) demonstrate the processes of CQCNN training and testing, respectively.

The solution of Eq. (2) specifies the quantum probability $p_{\rm q}(t) = \langle v_{\rm s}|\rho(t)|v_{\rm s}\rangle$ of detecting the particle at the sink, which is relevant to the QW on a chosen graph with $N$ vertices ($N$ is the total number of vertices of the graph). The classical random walk is described by the probability distribution

$$\frac{d\mathbf{p}(t)}{dt} = -(I - T)\,\mathbf{p}(t), \tag{3}$$

where $\mathbf{p}(t)$ is a vector of probabilities $p_k(t)$ of detecting a classical particle at vertices $k = 1, \dots, N$ of the graph, and $I$ is the $N \times N$ identity matrix. The transition matrix $T$ is a matrix of probabilities $T_{kl}$ for a particle to jump from vertex $l$ to vertex $k$. In this case, the sink vertex is not needed, and we can assume $\gamma = 0$. We are interested in the probability of finding a particle at the target (or sink) vertex, which is described by the solutions of Eqs. (2) and (3); for the classical walk this is $p_{\rm c}(t) = p_{v_{\rm out}}(t)$.

Then, one can compare $p_{\rm q}(t)$ and $p_{\rm c}(t)$ against $p_{\rm th}$, a threshold probability for a given graph. If this probability is larger than $p_{\rm th}$, we conclude that the particle has arrived at the target. The time at which the inequality $p_{\rm q}(t) > p_{\rm th}$ or $p_{\rm c}(t) > p_{\rm th}$ is first fulfilled is called the hitting time for the quantum or classical particle, respectively. Hence, by comparing the solutions of Eqs. (2) and (3), we can define the particle transfer efficiency: the graph is assigned to the ‘quantum’ class if the quantum particle reaches the target first, and to the ‘classical’ class otherwise.
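The labelling procedure can be sketched end to end for a small graph. The NumPy code below integrates the quantum master equation with a sink and the classical rate equation $d\mathbf{p}/dt = -(I - T)\mathbf{p}$ with a fourth-order Runge-Kutta scheme, records the first times at which the sink and target probabilities exceed a threshold $p_{\rm th}$, and assigns the label. Several ingredients are our assumptions rather than details from Refs. [Citation39,Citation40]: $H = A$; a column-stochastic $T_{kl} = A_{kl}/\deg(l)$; jump operators $L_{kl} = \sqrt{T_{kl}}\,|k\rangle\langle l|$ (which make the $p = 1$ limit reproduce the classical rates); a sink operator $|v_{\rm s}\rangle\langle v_{\rm out}|$; and the values of $p_{\rm th}$, $t_{\max}$, and the step size.

```python
import numpy as np

def dissipator(L, rho):
    """Lindblad dissipator: L rho L^dagger - {L^dagger L, rho} / 2."""
    LdL = L.conj().T @ L
    return L @ rho @ L.conj().T - 0.5 * (LdL @ rho + rho @ LdL)

def rk4_step(f, y, dt):
    """One fourth-order Runge-Kutta step for dy/dt = f(y)."""
    k1 = f(y)
    k2 = f(y + 0.5 * dt * k1)
    k3 = f(y + 0.5 * dt * k2)
    k4 = f(y + dt * k3)
    return y + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def classify_graph(A, v_in, v_out, p=0.0, gamma=1.0, p_th=0.1, t_max=50.0, dt=0.01):
    """Compare the quantum (sink) and classical (target) hitting times for one graph."""
    N = len(A)
    T = A / A.sum(axis=0)                        # T[k, l]: probability of jumping l -> k
    H = np.zeros((N + 1, N + 1))
    H[:N, :N] = A                                # index N is the sink; H does not couple it
    jumps = []
    for k, l in zip(*np.nonzero(T)):
        L = np.zeros((N + 1, N + 1))
        L[k, l] = np.sqrt(T[k, l])               # assumed form of L_kl
        jumps.append(L)
    L_sink = np.zeros((N + 1, N + 1))
    L_sink[N, v_out] = 1.0                       # target -> sink relaxation

    def quantum_rhs(rho):
        drho = -(1 - p) * 1j * (H @ rho - rho @ H)
        if p > 0:
            for L in jumps:
                drho = drho + p * dissipator(L, rho)
        return drho + gamma * dissipator(L_sink, rho)

    def classical_rhs(prob):
        return -(prob - T @ prob)                # dp/dt = -(I - T) p

    rho = np.zeros((N + 1, N + 1), dtype=complex)
    rho[v_in, v_in] = 1.0
    prob = np.zeros(N)
    prob[v_in] = 1.0
    t_q = t_c = None
    sink = []
    for step in range(1, int(round(t_max / dt)) + 1):
        rho = rk4_step(quantum_rhs, rho, dt)
        prob = rk4_step(classical_rhs, prob, dt)
        sink.append(rho[N, N].real)
        if t_q is None and rho[N, N].real > p_th:
            t_q = step * dt
        if t_c is None and prob[v_out] > p_th:
            t_c = step * dt
        if t_q is not None and t_c is not None:
            break
    if t_q is None and t_c is None:
        label = "undetermined"
    elif t_c is None or (t_q is not None and t_q < t_c):
        label = "quantum"
    else:
        label = "classical"
    return label, t_q, t_c, rho, np.array(sink)

A_path = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]], dtype=float)
print(classify_graph(A_path, v_in=0, v_out=3)[:3])
```

One caveat of this toy setup: with this $T$, the classical target probability saturates at its stationary value $\deg(v_{\rm out})/\sum_k \deg(k)$, so $p_{\rm th}$ must lie below it for a classical hit to be possible at all.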

In Figure 11 we schematically summarize the proposed CNN approach for detecting QW speedup. The architecture of CQCNN is shown in Figure 11(a). It consists of a two-dimensional input layer that takes one graph represented by an adjacency matrix $A$. This layer is connected to several convolutional layers, the number of which depends on the number of vertices $N$ of the input graph. The number of layers is the same for all graph sizes. CQCNN has a layout with convolutional and fully connected layers, and two output neurons that specify the two possible output classes. Convolutional layers are used to extract features from graphs and to decrease the dimensionality of the input.

Empirically, we found that the relevant features lie in the rows and columns of adjacency matrices. The first convolutional layer comprises six filters (or feature detectors), which define three different ways of processing the input graph. These three ways are marked by green, red, and blue colors in Figure 11(a). The constructed filters form ‘crosses’, shown in Figure 11(a), which capture a weighted sum of column and row elements. These filters act as functions of a weighted total number of neighboring vertices of each vertex. Thus, the cross-shaped ‘edge-to-edge’ and ‘edge-to-vertex’ filters are crucially important in the designed CQCNN: they enable it to predict the quantum advantage of QWs.
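The cross-shaped filters can be written compactly. In the sketch below, an ‘edge-to-edge’ filter combines a weighted sum over row $i$ and column $j$ of the adjacency matrix at every entry $(i, j)$, and an ‘edge-to-vertex’ filter reduces the cross to one value per vertex. This is our reading of the description above, with hypothetical weight vectors `row_w` and `col_w`; bias terms, nonlinearities, and the exact layer arrangement of CQCNN are omitted.

```python
import numpy as np

def edge_to_edge(A, row_w, col_w):
    """Cross filter: out[i, j] = sum_k row_w[k] * A[i, k] + sum_k col_w[k] * A[k, j]."""
    return (A @ row_w)[:, None] + (col_w @ A)[None, :]

def edge_to_vertex(A, row_w, col_w):
    """Cross filter reduced per vertex: out[i] = sum_k row_w[k] * A[i, k] + col_w[k] * A[k, i]."""
    return A @ row_w + col_w @ A

rng = np.random.default_rng(0)
A = np.triu((rng.random((5, 5)) < 0.5).astype(float), 1)
A = A + A.T                                      # random undirected graph without self-loops
filters = [(rng.normal(size=5), rng.normal(size=5)) for _ in range(6)]   # six hypothetical filters
feature_maps = np.stack([edge_to_edge(A, r, c) for r, c in filters])     # shape (6, 5, 5)
vertex_features = np.stack([edge_to_vertex(A, r, c) for r, c in filters])  # shape (6, 5)
print(feature_maps.shape, vertex_features.shape)
```

With all weights equal to one, `edge_to_vertex` returns twice the vertex degrees of an undirected graph, matching the description of these filters as functions of a weighted total number of neighboring vertices.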

Figure 11(b) schematically shows the training procedure, which uses graph samples represented by adjacency matrices as input. At the output, CQCNN predicts the classical or quantum class depending on the values of the two output neurons: the predicted class is given by the index of the neuron with the largest output value, $\arg\max_j y_j$. Given the correct label, the loss value is computed.

The filters that we constructed in CQCNN play an essential role in the success of learning. CQCNN is trained by stochastic gradient descent with a cross-entropy loss function. The loss on an example is defined relative to the correct class $c$ (classical or quantum) of this example:

$$
\mathcal{L} = -w_c \log\left(\frac{e^{y_c}}{e^{y_{\mathrm{cl}}} + e^{y_{\mathrm{q}}}}\right),
$$

where we defined the values of the output neurons as $y_{\mathrm{cl}}$ and $y_{\mathrm{q}}$, and $w_c$ is the total fraction of examples from this class in the dataset. As we have shown in Ref. [Citation39], CQCNN learns a function that generalizes from seen graphs to unseen graphs: the classification accuracy (the fraction of correct predictions) on unseen graphs increases during training.
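The prediction rule and the class-weighted cross-entropy loss can be sketched in NumPy as follows. As assumptions, index 0 denotes the classical class and index 1 the quantum class, the output values are hypothetical, and the helper names are illustrative. The gradient with respect to the output neurons is what stochastic gradient descent would backpropagate into the network weights.

```python
import numpy as np


def predict_class(outputs):
    """Predicted class = index of the output neuron with the largest value."""
    return int(np.argmax(outputs))


def weighted_cross_entropy(outputs, correct_class, class_weight):
    """-w_c * log(softmax(outputs)[c]), computed in a numerically stable way."""
    shifted = outputs - np.max(outputs)
    log_softmax = shifted - np.log(np.sum(np.exp(shifted)))
    return -class_weight * log_softmax[correct_class]


def loss_gradient(outputs, correct_class, class_weight):
    """Gradient of the loss with respect to the outputs: w_c * (softmax - one_hot)."""
    probs = np.exp(outputs - np.max(outputs))
    probs /= probs.sum()
    one_hot = np.eye(len(outputs))[correct_class]
    return class_weight * (probs - one_hot)


y = np.array([2.0, 0.5])  # hypothetical output values (classical, quantum)
print(predict_class(y), weighted_cross_entropy(y, 0, 0.5))
```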

The CQCNN testing procedure does not differ in principle from the training procedure shown in Figure 11. In this case, however, CQCNN does not receive any feedback on its prediction, and the network is not modified.

We apply the described ML approach to different sets of graphs. In particular, to understand systematically how our approach works, we first analyze CQCNN on line graphs of increasing size. CQCNN was trained over a number of epochs, with a single batch of examples per epoch.

Then, we simulated CQCNN’s learning process for random graphs, each sampled uniformly from the set of all possible graphs with $n$ vertices and $m$ edges. The learning performance in the absence of decoherence is shown in Figure 12(a) for several graph sizes $n$; for each graph, the number of edges $m$ is chosen uniformly from a fixed range. Our simulations show that the loss after training practically vanishes for all these random graphs. Figure 12(a) also reports the recall and precision separately for the ‘classical’ and ‘quantum’ parts of the set.Footnote12 Thus, CQCNN classifies random graphs correctly much better than a random guess, without performing any QW dynamics simulations. Samples of correctly classified graphs are shown in Figure 12(b),(c).
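The two ingredients of this experiment can be sketched in NumPy: sampling a graph uniformly among all labeled graphs with $n$ vertices and $m$ edges, and computing per-class precision and recall. As assumptions, the helper names are hypothetical, the labels use 0 for ‘classical’ and 1 for ‘quantum’, and the sampled graphs are not filtered for connectivity (whether the original study did so is not stated here).

```python
import numpy as np
from itertools import combinations


def random_graph_nm(n, m, rng):
    """Adjacency matrix drawn uniformly from all labeled graphs with n vertices and m edges."""
    all_edges = list(combinations(range(n), 2))
    chosen = rng.choice(len(all_edges), size=m, replace=False)  # m distinct vertex pairs
    adj = np.zeros((n, n))
    for idx in chosen:
        i, j = all_edges[idx]
        adj[i, j] = adj[j, i] = 1.0
    return adj  # may be disconnected


def precision_recall(y_true, y_pred, positive):
    """Precision and recall of one class (here 0 = classical, 1 = quantum)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == positive) & (y_true == positive))
    precision = tp / max(np.sum(y_pred == positive), 1)
    recall = tp / max(np.sum(y_true == positive), 1)
    return precision, recall


rng = np.random.default_rng(0)
adj = random_graph_nm(6, 7, rng)
print(adj.sum() / 2)  # 7.0 edges
print(precision_recall([0, 0, 1, 1, 1], [0, 1, 1, 1, 0], positive=1))
```

Drawing $m$ distinct vertex pairs without replacement gives every labeled graph with exactly $m$ edges the same probability, which is the uniform sampling described above.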

Figure 12. (a) CQCNN learning performance. The dataset consists of random graphs with different numbers of vertices, a fixed number of examples for each graph size, and the corresponding classical and quantum labels. CQCNN was trained for a number of epochs, each consisting of several mini-batches. The neural network was tested on a separate set of random graphs for each graph size. (b), (c) Random graph examples from the test set that were correctly classified by CQCNN (initial and target vertices are marked in yellow and red, respectively). The classical particle is faster on graph (b), whereas the quantum particle is faster on graph (c).

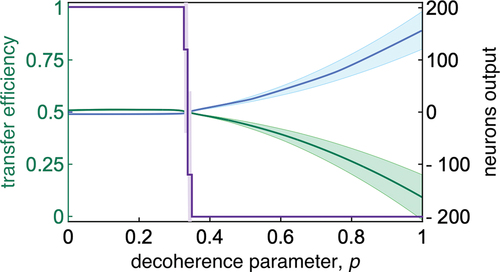

3.2.1. Quantum walks with decoherence

In the presence of decoherence, the physical picture becomes richer. In Figure 13, we show the results of QW dynamics simulations on a cycle graph; the transport efficiency is measured between opposite vertices of the graph, as shown in Figure 13. Simulations are performed for many randomly sampled values of the decoherence parameter and are used to train CQCNN. After training, we ask CQCNN to predict whether the QW leads to an advantage for a new value of this parameter. In Figure 13, the predicted transfer efficiency is shown as a violet line. It is clearly seen that, at a certain value of the decoherence parameter, an abrupt crossover from quantum (efficiency 1) to classical (efficiency 0) transport occurs. Thus, a QW advantage in transport can be expected only for decoherence below this crossover value. Physically, such a crossover may be related to quantum tunneling in the presence of dissipation, cf. [Citation305,Citation306]. Notice that the decoherence parameter is, in general, temperature-dependent, cf. [Citation307]. In this case, we can interpret the observed crossover as a (second-order) phase transition from the quantum to the classical (thermal activation) regime, which occurs for a graph at some finite temperature.
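The master equation behind these decoherent simulations is not given in this section. As an assumption, the NumPy sketch below uses the quantum stochastic walk. A single parameter, called `gamma` here, interpolates between a closed continuous-time quantum walk (`gamma = 0`) and a classical random walk (`gamma = 1`). The 6-vertex cycle, the evolution time, and the helper names are hypothetical.

```python
import numpy as np


def qsw_rhs(adj, gamma):
    """Assumed quantum stochastic walk: drho/dt = -i(1-gamma)[A, rho] + gamma * sum_ij D[L_ij] rho,
    with jump operators L_ij = sqrt(T_ij)|i><j| and column-stochastic T = A D^-1."""
    trans = adj / adj.sum(axis=0)  # T[i, j] = A[i, j] / deg(j)
    jumps = [(i, j, trans[i, j]) for i, j in zip(*np.nonzero(trans))]

    def rhs(rho):
        out = -1j * (1.0 - gamma) * (adj @ rho - rho @ adj)
        for i, j, rate in jumps:
            out[i, i] += gamma * rate * rho[j, j]        # L rho L^dagger
            out[j, :] -= 0.5 * gamma * rate * rho[j, :]  # -1/2 L^dagger L rho
            out[:, j] -= 0.5 * gamma * rate * rho[:, j]  # -1/2 rho L^dagger L
        return out

    return rhs


def evolve(rhs, rho0, t, steps=600):
    """Fourth-order Runge-Kutta integration of drho/dt = rhs(rho) up to time t."""
    dt = t / steps
    rho = rho0.astype(complex)
    for _ in range(steps):
        k1 = rhs(rho)
        k2 = rhs(rho + 0.5 * dt * k1)
        k3 = rhs(rho + 0.5 * dt * k2)
        k4 = rhs(rho + dt * k3)
        rho = rho + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return rho


def cycle(n):
    """Adjacency matrix of an n-vertex cycle graph."""
    adj = np.zeros((n, n))
    for k in range(n):
        adj[k, (k + 1) % n] = adj[(k + 1) % n, k] = 1.0
    return adj


n = 6  # hypothetical cycle size
rho0 = np.zeros((n, n))
rho0[0, 0] = 1.0
results = {}
for gamma in (0.0, 0.3, 1.0):
    results[gamma] = evolve(qsw_rhs(cycle(n), gamma), rho0, t=3.0)
    print(gamma, results[gamma][n // 2, n // 2].real)  # population of the opposite vertex
```

Feeding such populations into a hitting-time comparison for many values of `gamma` would produce labeled training data of the kind described above. This pipeline is a sketch and not the authors' code.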

Figure 13. Prediction of transfer efficiency (violet curve) for a cycle graph versus the decoherence parameter. The activation values of the output neurons are shown in blue and green. The results are obtained by averaging over multiple CQCNN networks; standard deviations are marked by shaded regions.

Figure 13 also shows the predictions of CQCNN based on the learned values of the output neurons, which are plotted as the classical (blue) and quantum (green) classes, respectively. CQCNN decides on the class by selecting the output neuron with the maximum activation. It is clearly seen that the ‘vote’ for the quantum class grows up to its maximum value, which corresponds to the highest confidence in the quantum class. At the same time, the confidence in the classical class grows with increasing decoherence parameter. The separation between the classes becomes more evident after the crossover point.

These results can support the design of software and hardware systems that are based on the graph approach. The CQCNN proposed here allows us to identify which graphs, and under which decoherence conditions, can provide a quantum advantage. This is especially relevant to the development of quantum devices in the NISQ era.

3.3. Machine learning in quantum tomography

Because it can fit arbitrarily complicated data patterns with a limited number of parameters, machine learning provides a powerful approach to quantum tomography. Here, quantum tomography, or quantum state tomography (QST), refers to reconstructing the complete information about a quantum state from measurements on an ensemble of identically prepared states [Citation308–314]. However, the Hilbert-space dimension of $n$-qubit states grows exponentially with $n$, so exact tomography techniques require exponentially many measurements and/or calculations. To leverage the full power of quantum states and related quantum processes, a proper characterization and validation of large quantum systems is required, and it remains an important challenge [Citation315].
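The exponential cost can be quantified with a few lines of arithmetic. A general $n$-qubit density matrix has $4^n - 1$ independent real parameters. A common (but not the only) tomography scheme measures every qubit in one of the X, Y, or Z bases, which gives $3^n$ measurement settings. The function name below is illustrative.

```python
def tomography_cost(n_qubits):
    """Hilbert-space dimension, free real parameters, and Pauli product settings for n qubits."""
    dim = 2 ** n_qubits
    real_params = dim ** 2 - 1       # Hermitian, unit-trace density matrix
    pauli_settings = 3 ** n_qubits   # measure X, Y or Z on every qubit
    return dim, real_params, pauli_settings


for n in (1, 2, 5, 10, 20):
    print(n, *tomography_cost(n))
```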

Traditionally, quantum tomography uses the maximum likelihood estimation (MLE) method, which finds the quantum state whose predicted probability distribution is closest to the data [Citation316,Citation317]. However, the MLE method requires an amount of data and a processing time that both grow exponentially with the number of qubits. Even for Gaussian quantum states, unavoidable coupling to the noisy environment makes a precise characterization of the quantum features in a large Hilbert space almost intractable. Moreover, MLE also suffers from overfitting when the number of bases grows. To make QST more accessible, several alternative algorithms have been proposed that assume certain physical restrictions on the state in question, such as adaptive quantum tomography [Citation318], permutationally invariant tomography [Citation319], quantum compressed sensing [Citation320–323], tensor networks [Citation324,Citation325], generative models [Citation326], feed-forward neural networks [Citation327], and variational autoencoders [Citation328].
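This section does not specify which MLE variant is meant. As an illustration, here is a minimal sketch of the widely used iterative "RρR" algorithm (associated with Hradil and Lvovsky) for a single qubit measured in the X, Y, and Z bases. The true state and the use of exact, noise-free frequencies are assumptions made for brevity. For $n$ qubits, the same loop acts on $2^n \times 2^n$ matrices, so its cost grows exponentially.

```python
import numpy as np

# Single-qubit Pauli matrices and the six projectors onto their +/- eigenstates.
I2 = np.eye(2, dtype=complex)
PAULIS = [np.array([[0, 1], [1, 0]], dtype=complex),
          np.array([[0, -1j], [1j, 0]], dtype=complex),
          np.array([[1, 0], [0, -1]], dtype=complex)]
PROJECTORS = [(I2 + s * p) / 2 for p in PAULIS for s in (1, -1)]


def mle_rhor(freqs, iters=500):
    """Iterative R-rho-R maximum-likelihood reconstruction from measured frequencies."""
    rho = I2 / 2  # start from the maximally mixed state
    for _ in range(iters):
        probs = [np.real(np.trace(rho @ proj)) for proj in PROJECTORS]
        r_op = sum(f / p * proj for f, p, proj in zip(freqs, probs, PROJECTORS))
        rho = r_op @ rho @ r_op
        rho /= np.trace(rho)  # keep unit trace
    return rho


# Hypothetical true state with Bloch vector (0.3, 0.2, 0.5) and exact frequencies.
bloch = np.array([0.3, 0.2, 0.5])
rho_true = (I2 + sum(b * p for b, p in zip(bloch, PAULIS))) / 2
freqs = [np.real(np.trace(rho_true @ proj)) for proj in PROJECTORS]
rho_est = mle_rhor(freqs)
print(np.round(rho_est, 4))
```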

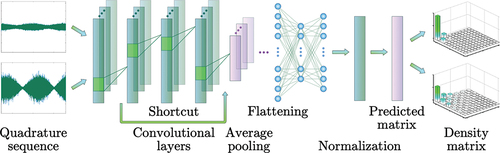

To reduce the overfitting problem of MLE, the restricted Boltzmann machine (RBM) [Citation329–332] has provided a powerful solution for QST. With two layers of stochastic binary units, a visible layer and a hidden layer, the RBM acts as a universal function approximator. For qubits on an IBM Q quantum computer, quantum state reconstruction via ML was demonstrated with four qubits [Citation333]. For continuous variables, convolutional neural networks (CNNs) have been experimentally implemented together with quantum homodyne tomography [Citation260,Citation334,Citation335].
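The following NumPy sketch shows how an RBM with two layers of binary units encodes a probability distribution over visible configurations (for example, measurement outcomes). The hidden layer can be summed out analytically. As assumptions, units take values in {0, 1}, the layer sizes and random weights are hypothetical, and training to fit measurement data (and the extra parameters needed for phases) is omitted.

```python
import numpy as np
from itertools import product


def rbm_unnormalized_prob(v, a, b, W):
    """Marginal over hidden units: exp(a.v) * prod_j (1 + exp(b_j + W_j.v))."""
    return np.exp(a @ v) * np.prod(1.0 + np.exp(b + W @ v))


def rbm_bruteforce(v, a, b, W):
    """Same quantity by explicitly summing exp(-E(v, h)) over all hidden configurations."""
    total = 0.0
    for h in product((0, 1), repeat=len(b)):
        h = np.array(h)
        total += np.exp(a @ v + b @ h + h @ W @ v)  # -E(v, h) = a.v + b.h + h.W.v
    return total


rng = np.random.default_rng(1)
n_visible, n_hidden = 3, 2  # hypothetical sizes
a, b = rng.normal(size=n_visible), rng.normal(size=n_hidden)
W = rng.normal(size=(n_hidden, n_visible))

configs = [np.array(v) for v in product((0, 1), repeat=n_visible)]
weights = np.array([rbm_unnormalized_prob(v, a, b, W) for v in configs])
probs = weights / weights.sum()  # normalized distribution over the 2^n visible configurations
print(np.round(probs, 4))
```

The analytic marginal is what makes RBMs attractive here. Its cost is linear in the number of hidden units, while the brute-force sum grows exponentially with them.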

As illustrated in Figure 14, the time-sequence data obtained in optical homodyne measurements resemble voice (sound) patterns in speech recognition [Citation336,Citation337]. Here, the noisy quadrature sequence data are fed into a CNN composed of a stack of convolutional layers. By applying a CNN, we take advantage of its good generalizability to extract the resulting density matrix from the time-series data [Citation336]. Our deep CNN uses four convolution blocks, each containing several convolution layers (filters) of different sizes. Five shortcuts are also introduced among the convolution blocks to tackle the vanishing-gradient problem. Instead of max pooling, average pooling is applied to produce higher-fidelity results, as all the tomography data should be equally weighted. Finally, after flattening, two fully connected layers, and normalization, the predicted matrices are inverted to reconstruct the truncated density matrices.
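Two ingredients named in this paragraph, the shortcut (residual) connection and average pooling, can be illustrated on a single channel with a schematic NumPy sketch. It is not the authors' tensorflow.keras network. The kernel sizes, the random input standing in for a homodyne time series, and the helper names are all hypothetical.

```python
import numpy as np


def conv1d_same(x, kernel):
    """1D convolution with zero padding; output length equals input length."""
    return np.convolve(x, kernel, mode="same")


def residual_block(x, kernels):
    """Stack of convolution + ReLU layers with an identity shortcut added at the end."""
    h = x
    for k in kernels:
        h = np.maximum(conv1d_same(h, k), 0.0)
    return h + x  # shortcut: the identity path lets gradients bypass the stacked layers


def average_pool(x, width):
    """Non-overlapping average pooling: every sample in a window has equal weight."""
    usable = len(x) - len(x) % width
    return x[:usable].reshape(-1, width).mean(axis=1)


rng = np.random.default_rng(2)
quadratures = rng.normal(size=16)  # hypothetical stand-in for a homodyne time series
features = residual_block(quadratures, [rng.normal(size=3), rng.normal(size=5)])
print(average_pool(features, 4).shape)  # (4,)
```

Average pooling keeps a contribution from every sample in a window, whereas max pooling would retain only the largest one. This matches the argument that all tomography data should be weighted equally.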

Figure 14. Schematic of machine-learning-enhanced quantum state tomography with a convolutional neural network (CNN). Here, the noisy quadrature sequence data obtained by quantum homodyne tomography in a single scan are fed to the convolutional layers, with shortcuts and average pooling in the architecture. Then, after flattening and normalization, the predicted matrices are inverted to reconstruct the truncated density matrices.

Here, the loss function we minimize is the mean squared error (MSE), and the optimizer used for training is Adam. We use a fixed batch size in the training process; with this setting, the network is trained for a number of epochs until the loss (MSE) becomes sufficiently small. In practice, instead of an infinite sum over the photon-number basis, we truncate the photon number so that almost all of the probability is retained. The resulting density matrix is thus represented in a truncated photon-number basis, whose cutoff is chosen by considering the maximum anti-squeezing level (in dB).
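This text does not give the actual cutoffs, so the following NumPy sketch only shows how a photon-number truncation could be chosen for an ideal squeezed vacuum (no thermal noise). It converts an anti-squeezing level in dB into the squeezing factor $r$ through $10\log_{10}(e^{2r})$ and finds the smallest cutoff that keeps a target probability mass. The 99.9% target, the dB levels, and the helper names are hypothetical.

```python
import numpy as np
from math import comb, log


def squeezed_vacuum_photon_probs(r, n_max):
    """P(n) of a squeezed vacuum; only even n are populated:
    P(2k) = C(2k, k) / 4^k * tanh(r)^(2k) / cosh(r)."""
    probs = np.zeros(n_max + 1)
    t2 = np.tanh(r) ** 2
    for k in range(n_max // 2 + 1):
        probs[2 * k] = comb(2 * k, k) / 4 ** k * t2 ** k / np.cosh(r)
    return probs


def db_to_r(level_db):
    """Squeezing factor r from an (anti-)squeezing level, using level = 10*log10(exp(2r))."""
    return level_db * log(10) / 20


def truncation_for(level_db, kept=0.999, n_max=400):
    """Smallest photon-number cutoff N such that sum_{n<=N} P(n) >= kept."""
    cumulative = np.cumsum(squeezed_vacuum_photon_probs(db_to_r(level_db), n_max))
    if cumulative[-1] < kept:
        raise ValueError("n_max too small for the requested probability mass")
    return int(np.argmax(cumulative >= kept))


for level in (3, 6, 10, 15):  # hypothetical anti-squeezing levels in dB
    print(level, truncation_for(level))
```

The cutoff grows quickly with the anti-squeezing level, which is why the truncation has to be tied to the largest level considered.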

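To avoid non-physical states, we impose a positive semi-definite constraint on the predicted density matrix. Here, an auxiliary (lower-triangular) matrix is introduced before generating the predicted density matrix through the Cholesky decomposition. During the training process, normalization also ensures that the trace of the output density matrix is kept equal to $1$. More than a million data sets are fed into our CNN with a variety of squeezed ($\rho_{\mathrm{sq}}$), squeezed thermal ($\rho_{\mathrm{sqth}}$), and thermal ($\rho_{\mathrm{th}}$) states at different squeezing levels, quadrature angles, and reservoir temperatures, i.e.

$$
\rho_{\mathrm{sq}} = \hat{S}(\xi)\,|0\rangle\langle 0|\,\hat{S}^{\dagger}(\xi), \qquad
\rho_{\mathrm{sqth}} = \hat{S}(\xi)\,\rho_{\mathrm{th}}\,\hat{S}^{\dagger}(\xi), \qquad
\rho_{\mathrm{th}} = \sum_{n=0}^{\infty} P(n)\,|n\rangle\langle n|.
$$

Here, $|0\rangle$ is the vacuum state; the thermal state $\rho_{\mathrm{th}}$ has the probability distribution function $P(n) = \bar{n}^{n}/(1+\bar{n})^{n+1}$, defined by the mean photon number $\bar{n}$ at a fitting temperature $T$; and $\hat{S}(\xi) = \exp\left[\tfrac{1}{2}\left(\xi^{*}\hat{a}^{2} - \xi\,\hat{a}^{\dagger 2}\right)\right]$ denotes the squeezing operator, with the squeezing parameter $\xi = r e^{i\theta}$ characterized by the squeezing factor $r$ and the squeezing angle $\theta$. All the training is carried out with the Python package tensorflow.keras on a GPU (Nvidia Titan RTX).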
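The Cholesky-based constraint can be sketched as a map from unconstrained network outputs to a valid density matrix, $\rho = LL^\dagger/\mathrm{Tr}(LL^\dagger)$, with $L$ lower-triangular. This is one common way to realize the constraint described above. The layout of the parameter vector, the dimension, and the function name are assumptions, not the authors' exact layer.

```python
import numpy as np


def density_from_cholesky(params, dim):
    """Map dim*dim real numbers to a physical density matrix rho = L L^dagger / Tr(L L^dagger).

    The first `dim` numbers fill the real diagonal of L; the remaining ones give the real
    and imaginary parts of the strictly lower-triangular elements.
    """
    lower = np.zeros((dim, dim), dtype=complex)
    rows, cols = np.tril_indices(dim, k=-1)
    n_off = len(rows)
    lower[np.diag_indices(dim)] = params[:dim]
    lower[rows, cols] = params[dim:dim + n_off] + 1j * params[dim + n_off:]
    rho = lower @ lower.conj().T  # Hermitian and positive semi-definite by construction
    return rho / np.trace(rho).real  # unit trace


dim = 4  # hypothetical truncated photon-number dimension
params = np.random.default_rng(3).normal(size=dim * dim)
rho = density_from_cholesky(params, dim)
print(np.round(np.linalg.eigvalsh(rho), 4), np.trace(rho).real)
```

Because every parameter vector maps to a Hermitian, positive semi-definite, unit-trace matrix, a network whose last layer feeds this map cannot output a non-physical state, and gradients still flow through it during training.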

ML-enhanced QST is validated on a simulated data set by comparing the average fidelities obtained by MLE and by the CNN, and by calculating the purity of the quantum state, i.e. $\mathrm{Tr}(\rho^2)$. Compared with the time-consuming MLE method, ML-enhanced QST maintains a high fidelity even when large anti-squeezing levels are taken into account. With prior knowledge of squeezed states, such a supervised CNN can be trained in a very short time (typically less than 1 hour), which enables us to build a dedicated machine learning model for a given class of problems. Once well trained, it needs on average only a time on the millisecond scale per reconstruction on a standard GPU server (averaged over many runs). One unique advantage of ML-enhanced QST is that we can precisely identify the pure squeezed part and the noisy parts when extracting the degradation information. By directly applying the singular value decomposition to the predicted density matrix, $\rho = U\Sigma V^{\dagger}$, all the weighting ratios of the ideal (pure) squeezed state, the squeezed thermal state, and the thermal state can be obtained. With this identification, one should be able to suppress and/or control the degradation at higher squeezing levels, which could be directly applied to gravitational-wave detectors and quantum photonic computing.
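The figures of merit used in this validation can be computed in a few lines of NumPy. The fidelity definition is not stated in the text; as an assumption, the sketch uses the Uhlmann fidelity $F(\rho,\sigma) = \left(\mathrm{Tr}\sqrt{\sqrt{\rho}\,\sigma\sqrt{\rho}}\right)^2$. The test state is a hypothetical mixture in a truncated Fock basis. For a density matrix, the singular value decomposition coincides with the eigendecomposition, so the sketch only shows the weights of orthogonal components. Assigning those weights to squeezed, squeezed thermal, and thermal parts, as described above, requires further modeling that is not shown.

```python
import numpy as np


def psd_sqrt(mat):
    """Square root of a Hermitian positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.conj().T


def fidelity(rho, sigma):
    """Uhlmann fidelity F = (Tr sqrt(sqrt(rho) sigma sqrt(rho)))^2."""
    root = psd_sqrt(rho)
    return float(np.real(np.trace(psd_sqrt(root @ sigma @ root))) ** 2)


def purity(rho):
    """Purity Tr(rho^2): 1 for pure states, 1/d for the maximally mixed state."""
    return float(np.real(np.trace(rho @ rho)))


# Hypothetical mixture in a truncated Fock basis: 80% |0><0| + 20% |2><2|.
rho = np.diag([0.8, 0.0, 0.2])
weights, vectors = np.linalg.eigh(rho)  # for a density matrix, SVD and eigendecomposition coincide
print(weights[::-1], purity(rho), fidelity(rho, np.diag([1.0, 0.0, 0.0])))
```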

Towards real-time QST that gives a physical description of every feature observed in the quantum noise, a characteristic model that directly predicts physical parameters within such a CNN configuration has also been demonstrated [Citation338]. Without dealing with a density matrix in a higher-dimensional Hilbert space, the physical parameters predicted by the characteristic model are as good as those generated by a reconstruction model. One of the most promising advantages of ML in QST is that fewer measurement settings are needed [Citation339]. Even with incomplete projective measurements, ML-based quantum state estimation techniques were shown to be resilient when working from partial measurement results [Citation340]. Furthermore, such a high-performance, lightweight, supervised ML-QST based on the characteristic model can easily be installed on edge devices such as FPGAs as an in-line diagnostic toolbox for applications with squeezed states.

In addition to the squeezed states illustrated here, similar machine learning concepts can be readily applied to other families of continuous-variable states, such as non-Gaussian states. Of course, different learning (adaptation) processes are needed when dealing with single-photon states, Schrödinger’s cat states [Citation341,Citation342], and Gottesman-Kitaev-Preskill states for quantum error correction [Citation343]. Alternatively, less training data can be used with better-suited learning methods, such as reinforcement learning, generative adversarial networks, and the deep reinforcement learning used in optimization problems [Citation259,Citation344–350]. Even without any prior information, an informational completeness certification net (ICCNet), together with a fidelity prediction net (FidNet), has been used to uniquely reconstruct any given quantum state [Citation351].

Applications of these data-driven learning and/or adaptation ML methods are not limited to quantum state tomography. ML-based identification and estimation are also being developed for quantum process tomography, Hamiltonian tomography, quantum channel tomography, and quantum phase estimation [Citation352–355]. Moreover, ML in quantum tomography can be used for quantum state preparation within the general single-preparation quantum information processing (SIPQIP) framework [Citation356].

3.4. Photonic quantum computing