ABSTRACT

Topological data analysis refers to approaches for systematically and reliably computing abstract ‘shapes’ of complex data sets. There are various applications of topological data analysis in life and data sciences, with growing interest among physicists. We present a concise review of applications of topological data analysis to physics and machine learning problems in physics including the unsupervised detection of phase transitions. We finish with a preview of anticipated directions for future research.

I. Introduction

Topological quantities are invariant under continuous deformations; an often-cited example is that a doughnut can be continuously transformed into a coffee mug – both are topologically equivalent to a torus. The robustness of topological quantities to perturbations is inspiring physicists in many fields, including condensed matter, photonics, acoustics, and mechanical systems [Citation1–4]. In all these areas, topology has enabled the prediction and explanation of surprisingly robust physical effects.

Most famously, the extremely precise quantisation of the Hall conductivity observed in two-dimensional electronic systems since the 1980s was explained as a novel topological phase of matter, the quantum Hall phase [Citation5]. In this and many other examples from physics, we deal with smooth deformations in some parameter space, such as the energy bands of solid state electronic systems.

Physics is, however, an outlier among fields of science in that idealised continuous models and functions can explain a wide variety of observed phenomena. Other fields do not have the luxury of continuity and have to make do with sparse data and limited observations in high-dimensional parameter spaces. Despite this very different setting, topological approaches remain powerful.

A suite of computational topological techniques known as topological data analysis (TDA) has been developed over the past 20 years to systematically define and study the ‘shape’ of complex discrete data in high-dimensional spaces. TDA is attracting growing interest among physicists, particularly those working on topological materials or the application of machine learning techniques to physics [Citation6–10].

At this time, we are aware of two existing reviews of TDA aimed at the physics audience. The first by Carlsson, one of the founders of the field, gave a broad survey of different techniques of TDA and their applications in various areas of science [Citation11]. The second review, by Murugan and Robertson, provided a detailed pedagogical and physicist-friendly introduction to two important techniques, persistent homology and the Mapper algorithm, applying them to the example of an astronomical dataset [Citation12].

Since publication of these two reviews there has been growing interest in applying TDA methods to physics, including the incorporation of TDA into physics-targeted machine learning, with applications including the unsupervised detection of phase transitions. Moreover, the field of TDA has continued to evolve with new generalisations and techniques being actively studied.

The aim of this article is to review cutting-edge applications of TDA to physics. We will provide a gentle introduction to the basic techniques, survey how TDA shows promise for the detection of novel phases of matter, and speculate on what we believe to be important directions for future research, including opportunities offered by newer TDA methods such as zigzag persistence.

The structure of this article is as follows: Section II provides a brief introduction to TDA guided by the simple example of two-dimensional point clouds. Section III discusses how TDA has been applied to identify order parameters and phase transitions in various physical systems. Section IV covers recent studies that employ TDA to compute features of physical systems that are then incorporated into a larger machine learning pipeline. Section V speculates on anticipated future directions and applications of TDA to physics, and vice versa. We conclude with Section VI.

II. Topological data analysis

Admittedly, TDA has a rather steep learning curve, since its foundation differs from the topology of continuous spaces to which physicists are more accustomed. However, after battling through the unfamiliar jargon and notation one can develop a powerful intuition for the subject. Our aim here is to give an equation-free sketch of the general approaches and terminology, while referring the motivated reader to more comprehensive and mathematically rigorous reviews [Citation11–15].

A. From point clouds to persistence diagrams

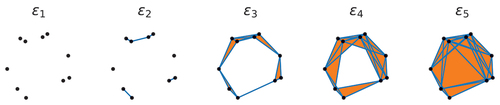

As an instructive example, let us consider the two-dimensional point clouds shown in Figure 1. Each point may correspond to a distinct measurement of some object, e.g. the locations of photons arriving at a camera, or the positions of particles in a system. With our eyes, we can clearly see that each cloud has a different shape: the points in the ‘Circle’ and ‘Figure 8’ clouds are distributed around one and two loops, respectively. On the other hand, the ‘Swiss Roll’ corresponds to a noisy one-dimensional point cloud embedded into a higher (two-) dimensional space.

Figure 1. Examples of noisy point clouds. Point clouds sampled from objects with differing shapes and even differing dimensionality may be difficult to distinguish using standard summary statistics such as the centre of mass and variance. In “Circle” and “Figure 8” the noise randomly perturbs the points in the ambient two-dimensional space. In “Swiss Roll” points are sampled from a one-dimensional interval before being embedded into the two-dimensional space.

We would like to formalise these qualitative observations in a more systematic way, such that we are not reliant on directly plotting the data, which is an approach limited to two- or three-dimensional datasets. How can we quantify the obviously different shapes of these point clouds? Standard summary statistics such as the centre of mass or variance are clearly inadequate, since they are not invariant under shape-preserving translations or rescaling of the data.

Fortunately, graph theory provides rigorous ways of quantifying intuitive shapes of discrete datasets including point clouds. The idea is to construct a graph by connecting pairs of points (vertices) that are sufficiently close together by edges and then to quantify its shape by computing topological invariants of the graph, its Betti numbers $\beta_n$. The $n$th Betti number is the number of $n$-dimensional holes, e.g. the number of independent connected components (clusters, $\beta_0$) or non-contractible loops (cycles, $\beta_1$). In practice, evaluating graph invariants amounts to computing the ranks and null spaces of linear operators (matrices) acting on the graph’s vertices and edges. In a nutshell, the computation of the shape of point cloud data can be reduced to simple linear algebra.
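The reduction to linear algebra can be illustrated with a minimal sketch, which is not taken from any of the cited works. It assumes a graph with no higher-dimensional simplices and works over the field of integers modulo 2, so that each edge is a column of a boundary matrix with ones at its two end points. The function names `rank_gf2` and `graph_betti_numbers` are hypothetical.

```python
def rank_gf2(rows):
    """Rank over GF(2) of a matrix whose rows are stored as integer bitmasks."""
    rows = list(rows)
    rank = 0
    while rows:
        pivot = rows.pop()
        if pivot == 0:
            continue  # a zero row does not contribute to the rank
        rank += 1
        low = pivot & -pivot  # lowest set bit of the pivot row
        # Eliminate that bit from every remaining row (addition mod 2 is XOR).
        rows = [r ^ pivot if r & low else r for r in rows]
    return rank


def graph_betti_numbers(num_vertices, edges):
    """Return (beta_0, beta_1) of a graph given as a list of vertex pairs.

    Assumption: the complex contains vertices and edges only (no faces),
    so beta_0 = V - rank(boundary) and beta_1 = E - rank(boundary).
    """
    boundary = [(1 << i) | (1 << j) for i, j in edges]
    r = rank_gf2(boundary)
    return num_vertices - r, len(edges) - r


# A square loop: one cluster, one cycle.
circle = graph_betti_numbers(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
# Two triangles sharing vertex 0 (a "figure 8"): one cluster, two cycles.
figure8 = graph_betti_numbers(5, [(0, 1), (1, 2), (2, 0), (0, 3), (3, 4), (4, 0)])
print(circle, figure8)
```

Once faces are added, $\beta_1$ must also subtract the rank of the boundary matrix mapping faces to edges, which is what dedicated persistent homology libraries handle.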

Higher-dimensional topological features can be similarly obtained by constructing generalisations of graphs known as simplicial complexes, which capture higher dimensional objects (faces, volumes, etc.) by triangulation. A $k$-simplex is a combination of $k+1$ vertices; edges are 1-simplices, triangular faces are 2-simplices, tetrahedral volumes are 3-simplices, and so on. A $k$-simplicial complex is a collection of simplices with dimension of at most $k$.

Increasing $k$ complicates matters. First, since $k$-simplices are combinatorial objects, the number of possible simplices grows rapidly with $k$, limiting practical calculations to low dimensional topological features. Second, there is no unique way to construct a simplicial complex given only pairwise distances between points and a cutoff scale; different methods may differ in their computational costs, stability properties, and ability to faithfully reproduce the shapes of the underlying space from which the points are sampled [Citation16].

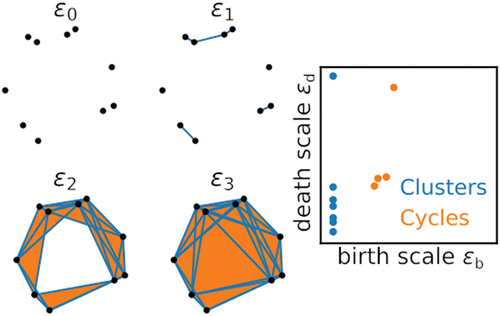

There is one big elephant in the room that we must address: what do we mean by ‘sufficiently close’ when connecting vertices to form a graph or simplicial complex? How do we determine which pairs of vertices to link by an edge and which pairs to leave disconnected? The number of cycles and clusters will be sensitive to the choice of cutoff distance and even possibly the addition or removal of a single edge, as illustrated in Figure 2. This seems like a major problem, leaving the approach without robustness to noise and other perturbations.

Figure 2. Simplicial complexes constructed from a point cloud using different cutoff distances, where blue lines and orange shaded areas denote edges and faces, respectively. For small cutoff distances all points are disconnected, forming a trivial simplicial complex with no edges. As the cutoff is increased nearby vertices start to become connected by edges. Increasing the cutoff further, triplets of points become connected, forming faces. For two of the cutoffs shown, the simplicial complex has a single connected component hosting a non-trivial cycle. For sufficiently large cutoff distances the cycle is destroyed by the addition of faces covering the entire interior of the point cloud.

A neat solution to the scale-dependence of graph invariants obtained from point clouds is to compute the shape of the graph over an entire range of scales known as a filtration, i.e. study its topology as a function of the cutoff length scale [Citation17]. Topological features (e.g. clusters and cycles) persisting over a wide range of scales are more robust and should provide a meaningful characterisation of the overall shape of the data. On the other hand, features sensitive to small changes in scale or the addition or removal of a few edges can be attributed to noise and discarded if necessary. By studying the persistence of topological features we will be able to distinguish robust features from noise.
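For the simplest topological features, the clusters, a filtration can be tracked with a few lines of code. The following is a minimal sketch rather than a production implementation: it assumes the convention that two points are joined once the cutoff reaches their Euclidean distance (some libraries use half that distance), and the names `h0_persistence` and `betti0_at` are hypothetical. Every point is born as its own cluster at scale zero, and a cluster dies whenever an edge merges it into another.

```python
import math
from itertools import combinations


def h0_persistence(points):
    """Birth/death pairs of clusters as the cutoff distance grows."""
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Edges enter the filtration in order of increasing length.
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )
    deaths = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:  # this edge merges two clusters: one of them dies
            parent[root_i] = root_j
            deaths.append(length)
    # The last surviving cluster never dies.
    return [(0.0, d) for d in deaths] + [(0.0, math.inf)]


def betti0_at(diagram, cutoff):
    """Number of clusters alive at a given cutoff."""
    return sum(1 for birth, death in diagram if birth <= cutoff < death)


diagram = h0_persistence([(0, 0), (1, 0), (5, 0)])
print(diagram)                # [(0.0, 1.0), (0.0, 4.0), (0.0, inf)]
print(betti0_at(diagram, 2))  # 2
```

In this toy example the cluster dying at scale 4 persists four times longer than the one dying at scale 1, reflecting the well-separated third point. Cycles and higher-dimensional features require the full simplicial complex and are best left to dedicated libraries.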

Persistence diagrams are one stable way to represent scale-dependent topological features of a dataset [Citation18]. Figure 3 shows persistence diagrams computed for each of the point clouds in Figure 1. The most persistent topological features not only allow us to infer the overall shape of the data but also give information as to the geometry of the point cloud. For example, the birth scales of the long-lived cycles in the ‘Circle’ and ‘Figure 8’ clouds are related to a maximum separation between neighbouring points comprising the cycle, while the death scale will be related to the cycle’s diameter.

Figure 3. Persistence diagrams of the two-dimensional point clouds shown in Figure 1 computed using the Vietoris-Rips complex [Citation16]. Each point represents a distinct topological feature. Horizontal and vertical axes denote the length scales at which each feature is created ($b$; birth) and destroyed ($d$; death) respectively. Points that are further from the diagonal dashed line therefore persist over a larger range of scales and are said to have a longer “lifetime” $d - b$. Since features must be created before they are destroyed, no points lie below the diagonal. At sufficiently large spatial scales all points become connected to form a single connected graph, corresponding to a single cluster with an infinite lifetime. Typically the infinite lifetime cluster is either discarded or plotted at a finite $d$ and distinguished using a horizontal dashed line.

![Figure 3](/cms/asset/eb4ecee0-f47f-4afe-9a6f-f7527b7d853c/tapx_a_2202331_f0003_oc.jpg)

The attentive reader will notice that the persistence diagrams for the ‘Circle’ and ‘Swiss Roll’ clouds share the same long-lived features, despite their obviously differing shapes. Closer inspection will, however, reveal noticeable differences in their short-lived features. For example, the cycles appearing in the ‘Swiss Roll’ dataset all have similar birth scales, corresponding to the distance between the inner and outer parts of the spiral and hinting at a one-dimensional embedding. This suggests that the differing shapes of these two point clouds may indeed be captured by inspecting their short-lived features; thus, persistent homology can also capture the local features (geometry) of the data.

B. Comparing and computing persistence diagrams

While persistence diagrams provide a compact visual summary of the scale-dependent topological features of a single dataset, it is not immediately clear how we should go about comparing persistence diagrams computed for different datasets; they will generally differ in their number of features and level of noise, making it difficult to establish a common threshold between genuine features and noise-induced features.

These issues motivated the development of stable distance and similarity measures for persistence diagrams. Here, stability means that a small change to one dataset results in, at most, a similarly small change in similarity to other fixed persistence diagrams.

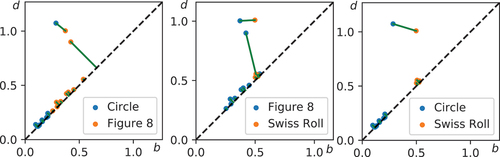

One example of a stable distance measure is the Wasserstein distance, which is the smallest distance the points in a pair of persistence diagrams must be moved in order to transform one diagram into the other. Unpaired features (i.e. if one diagram has more features) are moved to the diagonal. For example, Figure 4 shows the matching between the one-dimensional cycles of the Circle, Figure 8, and Swiss Roll point clouds. Since all features contribute to the Wasserstein distance, even the noise-induced ones close to the diagonal, it can be less sensitive to changes in the most persistent features. Another popular choice of distance measure is the bottleneck distance, which is the largest deformation of a pair of features required to convert one diagram to another (i.e. the Wasserstein distance under the $L_\infty$ norm). The bottleneck distance is thus independent of the short-lived features near the diagonal.

Figure 4. Matching (green lines) of the one-dimensional cycles of the Circle, Figure 8, and Swiss Roll point clouds used to compute the Wasserstein distance, which corresponds to the total length of the green lines.
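The two distance measures can be compared on toy diagrams with a brute-force sketch. This is illustrative only: it assumes the max-norm as the ground distance between points, lets unmatched features move to the nearest point on the diagonal, and searches over all matchings, which is feasible only for a handful of features. Practical libraries use optimal assignment algorithms instead. The function names `matching_costs` and `diagram_distances` are hypothetical.

```python
import math
from itertools import permutations


def matching_costs(dgm_a, dgm_b):
    """Cost matrix between two diagrams, each padded with diagonal slots."""
    n, m = len(dgm_a), len(dgm_b)
    size = n + m
    cost = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            if i < n and j < m:      # feature of A matched to feature of B
                (b1, d1), (b2, d2) = dgm_a[i], dgm_b[j]
                cost[i][j] = max(abs(b1 - b2), abs(d1 - d2))
            elif i < n:              # feature of A moved to the diagonal
                cost[i][j] = (dgm_a[i][1] - dgm_a[i][0]) / 2
            elif j < m:              # feature of B moved to the diagonal
                cost[i][j] = (dgm_b[j][1] - dgm_b[j][0]) / 2
            # diagonal-to-diagonal matches cost nothing
    return cost


def diagram_distances(dgm_a, dgm_b):
    """Return (Wasserstein, bottleneck) distances by exhaustive search."""
    cost = matching_costs(dgm_a, dgm_b)
    size = len(cost)
    best_sum, best_max = math.inf, math.inf
    for perm in permutations(range(size)):
        costs = [cost[i][perm[i]] for i in range(size)]
        best_sum = min(best_sum, sum(costs))
        best_max = min(best_max, max(costs, default=0.0))
    return best_sum, best_max


clean = [(0.0, 4.0)]
noisy = [(0.0, 3.0), (1.0, 1.2)]  # one long-lived and one short-lived feature
print(diagram_distances(clean, noisy))
print(diagram_distances(clean, noisy[:1]))
```

Removing the short-lived feature from `noisy` changes the Wasserstein distance but leaves the bottleneck distance at 1.0, in line with the remark that the latter ignores features near the diagonal.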

Alternative approaches for characterising and comparing the information contained in the persistence diagrams employ vectorisation: the variable length information encoded in the (birth, death) pairs of the persistence diagrams is mapped to a vector or vectors in a fixed-dimensional space; different persistence diagrams can then be studied using familiar tools such as vector inner products. For example, one might compute a set of summary statistics such as the entropy or moments of the feature lifetimes [Citation19–21], assuming they are relevant to the task at hand. Often the relevant features are unknown a priori and it is preferable to compute a high-dimensional vectorisation to minimise the loss of relevant information. One example is the persistence landscape, a stable and invertible (i.e. information-preserving) persistence diagram vectorisation [Citation22,Citation23].
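As a minimal sketch of the summary-statistic approach, the snippet below maps a diagram with any number of features to a fixed-length vector. The particular choice of statistics (Shannon entropy of the normalised lifetimes, total lifetime, and maximum lifetime) and the names `lifetimes`, `persistent_entropy`, and `summary_vector` are illustrative assumptions; features with infinite lifetime are discarded.

```python
import math


def lifetimes(diagram):
    """Finite, positive lifetimes (death minus birth) of a diagram."""
    return [d - b for b, d in diagram if math.isfinite(d) and d > b]


def persistent_entropy(diagram):
    """Shannon entropy of the lifetimes, normalised to sum to one."""
    spans = lifetimes(diagram)
    total = sum(spans)
    if total == 0:
        return 0.0
    return -sum((s / total) * math.log(s / total) for s in spans)


def summary_vector(diagram):
    """Fixed-length vectorisation: (entropy, total lifetime, max lifetime)."""
    spans = lifetimes(diagram)
    return (persistent_entropy(diagram), sum(spans), max(spans, default=0.0))


# Diagrams with different numbers of features map to vectors of equal length.
print(summary_vector([(0.0, 1.0), (0.0, 1.0)]))
print(summary_vector([(0.0, 3.0), (0.2, 0.3), (0.1, 0.4), (0.0, math.inf)]))
```

The entropy is largest when all features have equal lifetimes and approaches zero when a single feature dominates, so it gives a rough measure of how noise-like a diagram is.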

Using a distance measure or vectorisation allows one to combine persistent homology with powerful machine learning techniques such as artificial neural networks or clustering algorithms to compare topological features of different datasets and perform tasks including shape-based identification and classification of different point clouds, which will be explained further in Sec. IV. However, one important consideration in applying vectorisation or distance measures is that they can introduce additional hyper-parameters that may affect the sensitivity to different topological features of the data.

There are a variety of software libraries for computing persistence diagrams, their vectorisation, and distance measures [Citation24–27], surveyed in Ref. [Citation28]. Crucial for applications, persistence diagrams can be efficiently computed given a filtration by building up a simplicial complex one element at a time and detecting any changes to the topological features at each step. This yields not only the feature birth and death scales but also their representations, e.g. edges comprising a cycle. Nevertheless, due to the combinatorial nature of simplicial complexes, the computational requirements grow rapidly with the feature dimension $n$, with most practical applications limited to small $n$.

To compute a persistence diagram, the end-user must provide at a minimum either the data points or a distance matrix encoding pairwise distances between points. One can also consider custom filtrations. For example, when dealing with image data one can use the greyscale pixel values as a filtration parameter, constructing a simplicial complex out of pixels less than (or exceeding) a given threshold [Citation29,Citation30]. The resulting sublevel (superlevel) set filtration summarises the critical points of an image, i.e. its local minima, maxima, and saddle points, as well as their higher-dimensional generalisations.
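The sublevel set construction can be sketched for the zeroth Betti number of a small greyscale image. This is an illustrative toy, not the algorithm of the cited references: it assumes that pixels are connected to their four nearest neighbours, and the function name `sublevel_betti0` is hypothetical.

```python
def sublevel_betti0(image, threshold):
    """Count connected regions of pixels with value <= threshold.

    Assumption: 4-connectivity (up, down, left, right neighbours).
    """
    rows, cols = len(image), len(image[0])
    seen = set()
    components = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] > threshold or (r, c) in seen:
                continue
            components += 1  # found a pixel belonging to a new region
            seen.add((r, c))
            stack = [(r, c)]
            while stack:     # flood fill the rest of the region
                y, x = stack.pop()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen
                            and image[ny][nx] <= threshold):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
    return components


# Four dark corners (local minima) separated by a bright cross.
image = [
    [0, 5, 1],
    [5, 5, 5],
    [2, 5, 3],
]
print([sublevel_betti0(image, t) for t in (0, 1, 2, 3, 5)])  # [1, 2, 3, 4, 1]
```

Each local minimum gives birth to a region at its own pixel value, and all regions merge once the threshold reaches the bright cross. Recording these birth and merging thresholds is what produces the persistence diagram of the image.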

C. Other approaches and recent developments

The above discussion of persistent homology has been limited to the simplest case of simplicial complexes constructed from two-dimensional point clouds. There are a variety of related techniques for studying complex datasets by reducing them to families of graphs or simplicial complexes, which we only mention briefly here due to space constraints.

The Mapper algorithm reduces point clouds to simpler low-dimensional graphs by performing clustering on overlapping subsets of the data [Citation31,Citation32]. Local anomalies such as intersections and cusps can be similarly detected by comparing the persistent homology of different subsets of the data [Citation33].

Standard persistent homology constructs filtrations as a sequence of nested simplicial complexes; as the filtration parameter (e.g. cutoff distance) is increased, edges and higher-dimensional simplices are added to the complex and never removed. In certain situations, e.g. when studying temporal network dynamics, simplices can be both added and removed as the control parameter is varied. Zigzag persistence is a technique that enables the identification of significant topological features in this case [Citation34].

Another important problem is to compute persistent topological features as multiple control parameters are varied, which is termed multidimensional persistence [Citation35]. This problem is considerably more complicated than the single parameter case, due to the absence of simple persistence diagram representations.

We considered examples where point clouds are used to construct undirected graphs and simplicial complexes, encoded by matrices with binary elements $\{0, 1\}$ denoting whether a simplex is present or absent. Persistent homology can also be calculated with respect to other fields, such as integers modulo 3, describing e.g. directed graphs or simplices, which can be useful for analysing data with twists, including points sampled from the surface of Möbius strips [Citation25].

III. Applications of topological data analysis to physics

A. Early examples

Early applications of TDA appearing in physics journals in the 2000s considered examples where the underlying data already has a well-defined shape or graph structure, making the construction of graphs more straightforward. Examples include dynamical systems [Citation36], random clouds of spheres [Citation37], random networks [Citation38], and binary image data [Citation39,Citation40].

In the case of over-sampled time-series measurements of dynamical systems, the sampled points will form a single continuous curve in the absence of noise. This fact can be used for topological filtering of certain types of noise, e.g. when a small fraction of the measured points are perturbed, as shown in Figure 5(a). By computing the scale-dependent distribution of zeroth Betti numbers one can separate points belonging to the dynamical trajectory (forming a single large cluster) from noise-perturbed points (each forming a separate cluster), filtering out the latter in Figure 5(b) and improving the accuracy of estimated Lyapunov exponents [Citation36].

Figure 5. (a) Noisy sampling of the chaotic trajectory of the Lorenz attractor and (b) topology-based filtered data, adapted from Ref. [Citation36]. (c) Snapshots of a chaotic two-dimensional fluid and (d) estimates of finite-size effects using image eigendecompositions (SVD) and a TDA-based disorder estimator (TDA) showing the two methods give similar results, adapted from Ref. [Citation40].

![Figure 5](/cms/asset/913b4452-ff89-46d4-944c-71b41e1290d4/tapx_a_2202331_f0005_b.gif)
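The idea behind the topological noise filter can be sketched in a simplified form. The version below is an assumption-laden toy and not the procedure of Ref. [Citation36]: it fixes a single cutoff scale instead of scanning the scale-dependent Betti numbers, groups points into clusters by linking all pairs closer than the cutoff, and keeps only the largest cluster. The function name `largest_cluster` is hypothetical.

```python
import math


def largest_cluster(points, cutoff):
    """Keep the points in the largest cluster formed at a given cutoff."""
    if not points:
        return []
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Link every pair of points closer than the cutoff.
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(points[i], points[j]) <= cutoff:
                parent[find(i)] = find(j)

    clusters = {}
    for i in range(n):
        clusters.setdefault(find(i), []).append(points[i])
    return max(clusters.values(), key=len)


# A densely sampled line (the "trajectory") plus two perturbed points.
trajectory = [(float(x), 0.0) for x in range(10)]
outliers = [(3.0, 5.0), (7.0, -6.0)]
filtered = largest_cluster(trajectory + outliers, cutoff=1.5)
print(len(filtered))  # 10
```

The cutoff must exceed the sampling spacing along the trajectory while remaining smaller than the typical displacement of the perturbed points; choosing it from the persistence of the clusters, rather than by hand, is where the scale-dependent analysis enters.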

A second early application was the analysis of convection in two-dimensional fluids under heating [Citation39,Citation40]. There, the fluid separates into distinct hot and cold regions, as illustrated in Figure 5(c). In this case, the zeroth and first Betti numbers were used to characterise the shape of the hot and cold regions. The scaling of the number of distinct microstates (shapes) with the area of the fluid yields an effective dimension of the dynamics that could be computed more efficiently than the conventional approach based on the singular value decomposition of the images’ two-point correlation functions. One application of the effective dimension is the detection of boundary effects, as shown in Figure 5(d). The strong contrast between hot and cold regions of the images in this case meant that persistent homology was not required; analysis of the graph formed at a single cutoff scale was sufficient.

B. Persistent homology of point clouds and images

In many applications, the construction of a well-defined shape from the data is less straightforward, or one may be interested in identifying structures present at different spatial scales. For example, in the case of point cloud data, it may be difficult to assign a size or radius to the individual points. In other cases, one may want to apply intuition obtained from simple analytically solvable limits to more realistic systems [Citation41,Citation42]. In situations such as these, persistent homology becomes a powerful tool for extracting meaningful shape information from the raw data.

For example, suppose we wish to study the microscopic structure of materials. The raw data naturally takes into account the positions of the constituent atoms and their sizes. Persistent homology enables studying the multi-scale structure of materials using just the positions of atoms in three-dimensional space (obtained from imaging or simulations) together with the standard Euclidean distance. The authors of Refs. [Citation43,Citation44] used persistence diagrams computed from molecular dynamics simulations of various materials exhibiting glassy phases to characterise their structure.

Figure 6 shows examples of persistence diagrams obtained for liquid, glass, and crystalline phases of silica. In the crystalline phase, the clustering of feature births and deaths reveals scales corresponding to the bond lengths of the material, i.e. separations between the constituent atoms. Moreover, inspection of the cycles corresponding to persistent features also reveals the nature of the short-range order appearing in the glass phase.

Figure 6. Persistence diagrams obtained from molecular dynamics simulations of liquid (a), amorphous (b), and crystalline (c) phases of silica. Point colours indicate the multiplicity (on a logarithmic scale) of one-dimensional features. Insets in (b) illustrate representative cycles corresponding to short- and medium-range order in the amorphous phase. Adapted from Ref. [Citation44].

![Figure 6](/cms/asset/a2593b51-da4a-46f6-9228-e42602c7be75/tapx_a_2202331_f0006_oc.jpg)

Subsequent works applied similar techniques to amorphous ices [Citation45], granular media [Citation46,Citation47], spin configurations in lattice spin models and gauge theories [Citation48,Citation49], and two-dimensional materials, where the measures obtained using persistent homology can be directly compared with more standard metrics [Citation50].

Another application of the point cloud formalism concerns the analysis of time-series signals, including the detection of chaotic dynamics. Already in the 1990s, there was interest in applying computational topology to study the shape of the dynamics in phase space, including quantifying the shape of chaotic attractors [Citation51]. Here, the key ingredient is Takens’ embedding theorem, which states that a sequence of observations taken at regular time intervals $x_1, x_2, \ldots, x_N$ can be used to reconstruct the shape of the dynamics by constructing a point cloud of $d$-dimensional vectors $\mathbf{v}_i = (x_i, x_{i+1}, \ldots, x_{i+d-1})$, provided the embedding dimension $d$ is sufficiently large.
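The delay embedding itself is straightforward to construct. The sketch below is a minimal illustration in which the function name `delay_embedding` and the optional `delay` parameter (the number of samples skipped between successive vector components) are assumptions introduced here.

```python
import math


def delay_embedding(series, dimension, delay=1):
    """Point cloud of delay vectors (x_i, x_{i+delay}, ..., x_{i+(dimension-1)*delay})."""
    n_vectors = len(series) - (dimension - 1) * delay
    if n_vectors <= 0:
        raise ValueError("series too short for this dimension and delay")
    return [
        tuple(series[i + k * delay] for k in range(dimension))
        for i in range(n_vectors)
    ]


print(delay_embedding([0, 1, 2, 3, 4], dimension=3))
# [(0, 1, 2), (1, 2, 3), (2, 3, 4)]

# A periodic signal sampled 40 times per period, embedded in two dimensions
# with a quarter-period delay; the delay vectors are then (sin, cos) pairs.
signal = [math.sin(2 * math.pi * t / 40) for t in range(200)]
cloud = delay_embedding(signal, dimension=2, delay=10)
```

For the periodic signal in this example the embedded points lie on a closed loop, so the persistent homology of `cloud` would be expected to show a single long-lived cycle, whereas more complicated dynamics fill out more complicated shapes.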

Persistent homology enables the systematic study of dynamics via the shape of the point clouds in the high-dimensional embedding space [Citation52–54]. For example, period-doubling transitions can be detected via the emergence of new persistent clusters. Successive period-doubling transitions as a system approaches the chaotic regime result in the creation of many clusters, which merge into a single line or volume.

Applying persistent homology to image data enables the study of the shapes of images in which there may not be a clear distinction between ‘bright’ and ‘dark’ regions, or in images where structural information at multiple intensity scales is important. Large point cloud datasets for which a direct persistent homology calculation may be quite time-consuming can alternatively be studied using image filtrations by converting the cloud to a density image [Citation55].

Early works on persistent homology of images used Betti numbers to characterise solar magnetic field distributions [Citation56] and force networks in different kinds of compressed granular media, studying the number and connectivity of regions at different scales [Citation57,Citation58]. More recently, persistence diagrams obtained from images have been used to study non-Gaussian temperature fluctuations in the cosmic microwave background [Citation59], the shape of iso-frequency contours in photonic crystals [Citation60], many-body dynamics and solitons in Bose-Einstein condensates [Citation61,Citation62], phase transitions in spin models [Citation63,Citation64], and order–disorder transitions in nematic liquid crystals [Citation65] and optical waveguide lattices [Citation66]. Recent work aims to better understand how to relate the shape information captured by TDA to physical properties, including the permeability of fractured materials [Citation67].

C. Finding meaning using abstract distance measures

So far we have considered examples where we have some intuitive notion of the shape of the underlying data, and the role of TDA has been to study these shapes more systematically. One exciting emerging application of TDA is in studying and discovering structure in complex systems for which simple visualisations (such as images or phase space trajectories) do not exist, including families of high-energy physics models [Citation68–70]. This typically requires the identification of a suitable distance measure for the data.

For example, in the case of quantum many-body systems, measures of entanglement between pairs of subsystems, such as the concurrence or entanglement entropy, can be used to study the abstract shapes of quantum states and group them into different classes [Citation71–73]. Understanding this entanglement structure may be helpful for judging when approximation techniques, such as tensor networks, may be used to efficiently simulate the system of interest.

Another important application of abstract distance measures is in the study of condensed matter systems at finite temperatures, where one would like to quantify the ‘shape’ of an ensemble of system configurations sampled at a given temperature to detect phase transitions and critical points [Citation48,Citation63,Citation64,Citation74]. There are various notions of distance that can be applied in this context, including the geodesic distance between different spin configurations [Citation75] and the quantum distance based on the overlap between eigenfunctions [Citation60,Citation76]. Tests of the Anderson, Hubbard, and Potts models suggest that TDA may be useful for precisely detecting critical points without requiring a computationally expensive finite size scaling analysis [Citation77,Citation78].

IV. Machine learning for physics using topological data analysis

A. Applications of machine learning to physics

Machine learning offers a powerful data-driven approach for modelling, characterising, and designing complex physical systems [Citation6–8,Citation10], including topological materials [Citation79–85]. Two classes of machine learning approaches attracting interest among physicists are supervised and unsupervised learning algorithms. Supervised learning aims to correctly classify new observations after being trained on a set of labelled examples. Unsupervised learning aims to detect novel features in unlabelled datasets, e.g. by grouping similar observations into clusters or identifying outliers.

Dealing with the deluge of data generated by high energy physics experiments was an early application of large-scale machine learning techniques to physics [Citation6,Citation86]. Anomaly-detection techniques are used to identify the small fraction of interesting events to be recorded and processed further. Supervised learning techniques based on human-labelled or computer-generated examples can be used to convert the high-dimensional raw detector data (e.g. particle trajectories and deposited energies) into a signal of interest (e.g. the type of particles generated). Similar techniques are now being adopted in other fields involving high repetition rate experiments, including reconstructing ultrashort optical pulses [Citation87], identifying solitons in Bose-Einstein condensates [Citation88], and optimizing the fidelity of quantum gates [Citation89]. In all these examples, machine learning can be used to perform tasks faster and at a larger scale than conventional approaches.

The performance of machine learning algorithms is closely tied to the quality and quantity of the input data; the machine learning model needs a sufficiently large set of relevant observations to make accurate predictions. On the one hand, the computational costs of machine learning algorithms can be enormous when they are applied to real-world problems involving large-scale datasets. On the other hand, in many physics problems the amount of available data may be highly constrained (e.g. due to high costs of fabrication, characterization, or computational resources), making approaches compatible with sparse datasets essential.

B. Combining TDA with machine learning

TDA methods are promising as a means of enhancing the performance of machine learning methods [Citation15]. Instead of feeding all observables of the system of interest (e.g. entire images or full many-body quantum wavefunctions) into the machine learning algorithm, TDA can identify a smaller set of relevant topological features, which can be used as input into a simpler and faster machine learning model. In particular, TDA methods seem naturally suited to studying phenomena such as topological phase transitions, which may be difficult to capture using conventional techniques.

As noted in Section II B, a key challenge in combining TDA with machine learning is how best to convert the information encoded in persistence diagrams into a format usable by machine learning algorithms. The two main approaches are based on distance measures and on vectorisation.

The authors in Refs. [Citation48,Citation76–78,Citation90] have used distance measures of persistence diagrams to compute the distance matrices used as inputs for kernel-based machine learning algorithms for supervised and unsupervised detection of phase transitions in several lattice models including the Ising, XY, and Heisenberg spin models [Citation64,Citation77] and classification of biological time series [Citation54,Citation91,Citation92]. There are many possible metrics to use. In practice, the Wasserstein and bottleneck distances [Citation76,Citation77] can be time-consuming to compute. There are faster alternatives, including the sliced Wasserstein distance [Citation48] and Fisher kernel [Citation90]; however, they introduce additional hyper-parameters, which need to be optimised.
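As a minimal illustration of the distance-based route, the sketch below computes the Wasserstein distance between two small persistence diagrams by brute-force search over all matchings, and assembles the kind of distance matrix that could be passed to a kernel-based method. The function names `diagram_distance` and `distance_matrix` are our own, the L-infinity ground metric is an assumed convention, and the factorial cost of the search restricts it to diagrams with a handful of points; practical calculations would rely on an optimised library.

```python
from itertools import permutations


def diagram_distance(diag_a, diag_b, order=1):
    """Brute-force Wasserstein distance between two small persistence diagrams.

    Each diagram is a list of (birth, death) pairs. Every point is matched
    either to a point of the other diagram or to the diagonal.
    """
    n, m = len(diag_a), len(diag_b)
    size = n + m  # rows: points of A plus m diagonal slots; columns: points of B plus n diagonal slots

    def cost(i, j):
        if i < n and j < m:  # point of A matched to point of B
            (b1, d1), (b2, d2) = diag_a[i], diag_b[j]
            return max(abs(b1 - b2), abs(d1 - d2)) ** order
        if i < n:  # point of A matched to the diagonal
            b, d = diag_a[i]
            return ((d - b) / 2) ** order
        if j < m:  # point of B matched to the diagonal
            b, d = diag_b[j]
            return ((d - b) / 2) ** order
        return 0.0  # diagonal matched to diagonal

    best = min(
        sum(cost(i, perm[i]) for i in range(size))
        for perm in permutations(range(size))
    )
    return best ** (1 / order)


def distance_matrix(diagrams, order=1):
    """Pairwise distances between a list of persistence diagrams."""
    return [[diagram_distance(a, b, order) for b in diagrams] for a in diagrams]


# Toy diagrams (hypothetical values): the second has one extra short-lived feature.
diagrams = [[(0.0, 1.0)], [(0.0, 1.0), (0.4, 0.6)]]
print(distance_matrix(diagrams))
```

In this toy example the optimal matching pairs the two long-lived features and sends the short-lived feature to the diagonal, so the distance is set by the lifetime of the extra feature alone. Taking the maximum over matched pairs instead of the sum would give the bottleneck distance.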

Vectorisation-based approaches can reduce the persistence diagrams into a simpler format that is more easily interpretable, at the expense of losing some of the contained information. For example, the authors in Refs. [Citation62,Citation66,Citation74] employed simple summary statistics of the feature lifetimes, including their Shannon entropy and norms, to verify that persistent homology does indeed detect relevant features that can be used to train machine learning models. Alternatively, the authors in Refs. [Citation63,Citation64] employed persistence images [Citation93], which form a discretised representation of persistence diagrams. One word of caution in the use of persistence images is that their construction involves hyper-parameters that should be optimised to obtain good performance [Citation15].
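The simplest vectorisations can be written in a few lines. The following sketch, with function names of our own choosing, computes the Shannon entropy of the normalised lifetimes (the persistent entropy) and the p-norms of the lifetimes, and collects them into a fixed-length feature vector. Discarding features with infinite death scale is an assumption made here for simplicity.

```python
import math


def lifetimes(diagram):
    """Lifetimes (death - birth) of the finite features in a persistence diagram."""
    return [d - b for b, d in diagram if math.isfinite(d) and d > b]


def persistent_entropy(diagram):
    """Shannon entropy of the lifetimes, normalised to sum to one."""
    life = lifetimes(diagram)
    total = sum(life)
    if total == 0:
        return 0.0
    return -sum((l / total) * math.log(l / total) for l in life)


def lifetime_norm(diagram, p=2):
    """p-norm of the vector of lifetimes."""
    return sum(l ** p for l in lifetimes(diagram)) ** (1 / p)


def summary_vector(diagram):
    """Fixed-length feature vector usable as input to a machine learning model."""
    return [
        persistent_entropy(diagram),
        lifetime_norm(diagram, 1),
        lifetime_norm(diagram, 2),
    ]


# Toy diagram (hypothetical values): one dominant feature and two short-lived ones.
print(summary_vector([(0.0, 1.0), (0.2, 0.3), (0.5, 0.6)]))
```

The entropy is maximal, equal to the logarithm of the number of features, when all lifetimes are equal, and decreases as a few long-lived features come to dominate the diagram.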

Once the persistence diagrams have been converted into a format usable by machine learning algorithms, the final step is to choose a specific machine learning model. Several studies have considered supervised classification using logistic regression and support vector machines, which find an optimal separating hyperplane between different data classes [Citation62–64]. More recent studies comparing various machine learning models suggest that classification and clustering based on topological features can still be a highly nonlinear problem, making nonlinear machine learning methods, such as multidimensional scaling, neural networks, or k-nearest neighbours, a better choice [Citation48,Citation64,Citation74].

C. Learning phase transitions using TDA

The prospect of discovering novel phases of matter motivates studies of machine learning-based approaches for detecting phase transitions. Supervised learning methods can make use of labelled data drawn from known phases or exactly solvable limits to draw inferences about the location of phase boundaries [Citation94,Citation95]. On the other hand, unsupervised methods such as manifold learning use an appropriately chosen similarity measure to compare different samples and group them into different classes, without requiring precise knowledge of the number of distinct phases [Citation79–85].

The reliable detection of phase transitions using machine learning requires the use of an appropriate cost function or similarity measure that is sensitive to the transition of interest. For example, topological phase transitions require the bulk band gap of the system to close and re-open, motivating the use of non-local similarity measures invariant under gap-preserving deformations [Citation81] or sensitive to points at which the gap closes [Citation82,Citation85]. These measures typically involve hyper-parameters, such as the kernel resolution, which must be chosen carefully to ensure good accuracy.

By directly capturing shape information of the system of interest, persistent homology-based methods are able to capture phase transitions using simpler models with fewer hyper-parameters. Figure 7 shows an example of a persistent homology-based machine learning pipeline for studying phase transitions in the two-dimensional XY model [Citation64]. Persistence diagrams are computed for a given spin configuration based on the relative angle between neighbouring spins. The persistence diagram is then vectorised into a persistence landscape encoding the probability of obtaining features with a given birth scale and lifetime, which can be averaged over spin configurations sampled at a given temperature. The averaged persistence landscapes obtained for various temperatures are then used to train a machine learning model, such as logistic regression, which estimates the location of the phase transition using the training data.

Figure 7. TDA-based machine learning of phase transitions in the two-dimensional XY model. (a) Persistence diagram computed using a filtration based on correlations between neighbouring spins. (b) The persistence diagram is vectorised into a persistence landscape. (c,d) Examples of average persistence images obtained from spin configurations sampled from ordered (c) and disordered (d) phases. (e) Coefficients of a trained logistic model; blue and red regions denote parts of the persistence images assigned as indicators to the ordered and disordered phases, respectively. (f) Finite size scaling of the logistic regression prediction used to estimate the phase transition point. Blue and red regions denote ranges used for the model training data. Vertical blue line denotes the expected transition point. Adapted from Ref. [Citation64].

![Figure 7](/cms/asset/37205364-f693-4646-af1c-d9d6007887d5/tapx_a_2202331_f0007_oc.jpg)
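The final stage of such a pipeline, training a logistic model on feature vectors from deep within each phase and reading off the temperature at which its prediction crosses one half, can be sketched as follows. This is a toy illustration under stated assumptions rather than the implementation of Ref. [Citation64]: the two-component feature vectors are synthetic stand-ins for flattened, temperature-averaged persistence images, the crossover temperature of 1.0 is put in by hand, and the names `train_logistic` and `estimate_transition` are our own.

```python
import math


def sigmoid(z):
    """Numerically safe logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict(w, b, x):
    """Predicted probability that feature vector x belongs to the disordered phase."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_logistic(X, y, lr=0.5, steps=2000):
    """Fit weights and bias by batch gradient descent on the logistic loss."""
    dim = len(X[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        gw, gb = [0.0] * dim, 0.0
        for x, t in zip(X, y):
            err = predict(w, b, x) - t
            for k in range(dim):
                gw[k] += err * x[k]
            gb += err
        w = [wi - lr * g / len(X) for wi, g in zip(w, gw)]
        b -= lr * gb / len(X)
    return w, b


def estimate_transition(temps, features, low_max, high_min):
    """Train on the two extremes, then find where the prediction crosses 1/2."""
    train = [(x, 0.0 if T <= low_max else 1.0)
             for T, x in zip(temps, features) if T <= low_max or T >= high_min]
    w, b = train_logistic([x for x, _ in train], [t for _, t in train])
    probs = [predict(w, b, x) for x in features]
    for k in range(len(temps) - 1):
        p0, p1 = probs[k], probs[k + 1]
        if p0 < 0.5 <= p1:
            # linear interpolation between neighbouring temperatures
            return temps[k] + (0.5 - p0) * (temps[k + 1] - temps[k]) / (p1 - p0)
    return None


# Synthetic stand-in for averaged persistence images: a two-pixel "image"
# whose weight shifts smoothly from pixel 0 to pixel 1 around T = 1.0.
temps = [k / 10 for k in range(1, 20)]
features = [[1 - s, s]
            for s in (0.5 * (1 + math.tanh((T - 1.0) / 0.2)) for T in temps)]
t_est = estimate_transition(temps, features, low_max=0.5, high_min=1.5)
print(f"estimated transition temperature: {t_est:.3f}")
```

Only the temperatures below `low_max` and above `high_min` are labelled; the intermediate temperatures are never seen during training, so the estimated crossing point is a prediction of the model rather than an input.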

V. Future directions

A. New techniques for topological data analysis

An area of active research among physicists is the application of TDA tools to analyse the structure of more complex systems including flow networks involving directed links [Citation96] and time-evolving networks [Citation92]. One approach used in recent studies that is compatible with standard persistent homology tools is to convert the directed network into a regular point cloud using a diffusion map, which constructs edges between a pair of vertices by computing the probability of diffusion between them. It will be interesting to explore alternative approaches that can work directly with unidirectional or time-evolving systems without requiring diffusion maps, such as zigzag persistence [Citation97].

The metrics used for quantifying differences between persistence diagrams have applications beyond persistent homology. For example, Skinner et al. [Citation98] used the Wasserstein distance to compare different local neighbourhood structures of disordered media, based on the intuition that it encodes the energy cost required to transform one configuration into another. The advantage of such a topological metric compared to more conventional measures including the Kullback–Leibler divergence is that the former is better at distinguishing weakly-overlapping distributions. Are there other examples where such metrics can be linked to physical observables?
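The difference between the two kinds of measure can be seen in a one-dimensional toy example, which is our own illustration and not the construction used in Ref. [Citation98]. For two histograms with no overlap, the Kullback–Leibler divergence is infinite regardless of how far apart they are, whereas the Wasserstein distance grows with their separation. The helper names below are hypothetical, and the histograms are assumed to be normalised on a common grid.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence between two histograms on the same bins."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf  # p has weight where q has none
            total += pi * math.log(pi / qi)
    return total


def wasserstein_1d(p, q, bin_width=1.0):
    """1-Wasserstein distance in one dimension: integrated absolute CDF difference."""
    cdf_gap, dist = 0.0, 0.0
    for pi, qi in zip(p, q):
        cdf_gap += pi - qi
        dist += abs(cdf_gap) * bin_width
    return dist


def bump(centre, n_bins=10):
    """Histogram with all of its weight in a single bin."""
    return [1.0 if k == centre else 0.0 for k in range(n_bins)]


reference = bump(0)
for shift in (3, 7):
    shifted = bump(shift)
    print(shift, kl_divergence(reference, shifted), wasserstein_1d(reference, shifted))
```

The Kullback–Leibler divergence cannot tell the two shifted histograms apart, while the Wasserstein distance equals the shift in each case.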

B. Quantum topological data analysis

All of the examples considered in the physics literature relate to the study of low-dimensional topological features using TDA, largely because higher dimensional features are both harder to interpret and become extremely time-consuming to compute for large datasets, due to exponential scaling. The advent of more efficient quantum algorithms for TDA, including the computation of Betti numbers and persistence diagrams, is anticipated to enable the study of higher-dimensional topological features in complex datasets.

The first quantum algorithm for TDA was proposed by Lloyd et al. in 2016 [Citation99]. Their algorithm exhibited an exponential speedup for calculating Betti numbers by using quantum phase estimation to efficiently construct combinatorial Laplacians of simplicial complexes and identify cycles by computing their zero modes. This proposal was followed in 2018 by a small-scale, few-qubit proof-of-concept quantum optics experiment [Citation100].
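For orientation, the quantity targeted by the quantum algorithm can be computed classically for small complexes. The number of zero modes of the k-th combinatorial Laplacian equals the k-th Betti number, which in turn equals the number of k-simplices minus the ranks of the two adjacent boundary operators. The sketch below uses exact Gaussian elimination rather than quantum phase estimation, so it illustrates only what is being computed, not how the quantum algorithm computes it; the function names and the representation of simplices as sorted tuples of vertex labels are our own choices.

```python
from fractions import Fraction


def boundary_matrix(k_simplices, faces):
    """Boundary operator from k-simplices (columns) to (k-1)-simplices (rows)."""
    index = {f: r for r, f in enumerate(faces)}
    mat = [[0] * len(k_simplices) for _ in faces]
    for col, simplex in enumerate(k_simplices):
        for i in range(len(simplex)):
            face = simplex[:i] + simplex[i + 1:]  # drop the i-th vertex
            mat[index[face]][col] = (-1) ** i     # alternating orientation sign
    return mat


def rank(mat):
    """Matrix rank by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in mat]
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def betti_numbers(simplices_by_dim):
    """Betti numbers b_k = n_k - rank(d_k) - rank(d_{k+1}) of a simplicial complex."""
    ranks = [0]  # the boundary of a vertex is zero
    for k in range(1, len(simplices_by_dim)):
        ranks.append(rank(boundary_matrix(simplices_by_dim[k],
                                          simplices_by_dim[k - 1])))
    ranks.append(0)  # there are no simplices above the top dimension
    return [len(simplices_by_dim[k]) - ranks[k] - ranks[k + 1]
            for k in range(len(simplices_by_dim))]


vertices = [(0,), (1,), (2,)]
edges = [(0, 1), (0, 2), (1, 2)]
hollow = betti_numbers([vertices, edges])               # triangle boundary only
filled = betti_numbers([vertices, edges, [(0, 1, 2)]])  # triangle with its interior
print(hollow, filled)
```

The hollow triangle has one connected component and one cycle; filling in the triangle removes the cycle. The cost of this classical approach grows with the number of simplices, which itself can grow exponentially with the number of data points in high dimensions.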

Subsequent studies have started to address the limitations of the first quantum TDA algorithm [Citation101] by proposing more efficient variants [Citation102–104] as well as quantum algorithms for computing persistent Betti numbers [Citation105,Citation106] and the Wasserstein distance [Citation107]. While most of these algorithms are designed for future fault-tolerant quantum computers, there is also the potential for near-term quantum speedups using shallow quantum circuits with depth linear in the number of input data points, exploiting the efficient implementation of the boundary operator using entangled quantum states [Citation108–111]. While the prospect of an exponential speed-up compared to the best classical TDA algorithms is enticing, whether and when such a large speed-up will be achieved for practical problems is under debate [Citation102,Citation103,Citation111–113], especially with new and improved classical algorithms still being developed [Citation114].

C. Learning physics versus machine learning physics

One challenge encountered by existing literature applying TDA to physics problems is that the techniques are unfamiliar to the physics audience. Many of the original TDA articles in which techniques were first introduced are highly theoretical and mathematically rigorous, so articles in physics journals require long introductions explaining the approaches used to this non-specialist audience. This can lead to a focus on the technical calculation details while perhaps obscuring the bigger picture.

For instance, many articles include examples of persistence diagrams computed from the system of interest in order to illustrate qualitative differences between different phases and states. However, the persistence diagram is itself not easily interpretable, requiring knowledge of the form of the input data and filtration used. For this reason, many studies then apply machine learning techniques to extract quantitative predictions from the information contained in the persistence diagrams.

On the other hand, as physicists we would prefer to make sense of the system ourselves, rather than delegate understanding to a machine learning algorithm. What interests us is which topological features are meaningful and what they look like. The approach used in Ref. [Citation44], where representative cycles of the persistent features are included as insets in the persistence diagrams [reproduced here in ], is one way their meaning can be made more explicit. Still, selecting appropriate features can be challenging when there is no clear boundary between the signal and the noise. It will be interesting to explore other TDA-based techniques for dimensionality reduction and for compactly conveying the significant features of high-dimensional physical systems to non-specialists in TDA.

VI. Conclusion

In summary, we have attempted to give an overview of emerging physics applications of topological data analysis methods, focusing on persistent homology. The take-home message is that TDA can compress complex datasets into their essential (topological) features, enabling the training of simpler machine learning models compared to widely used and computationally expensive general-purpose artificial neural networks. Nevertheless, as topological data analysis is relatively new it is still largely employed on an ad-hoc basis and further work is needed to establish a standard set of methods that non-specialists can trust [Citation15].

Topological data analysis has already been fruitfully applied to other areas of research including image analysis and medical science, enabling the extraction of useful insights from complicated hard-to-visualise datasets. We hope that the techniques discussed here and in other recent reviews aimed at the physics audience [Citation11,Citation12] will not merely provide a transient fashionable alternative to more standard methods of data analysis used by physicists but will form a new set of long-lasting tools enabling a better understanding of complex physical systems from classical to quantum.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Hasan MZ, Kane CL. Colloquium: topological insulators. Rev Mod Phys. 2010;82:3045. DOI:10.1103/RevModPhys.82.3045

- Ozawa T, Price HM, Amo A, et al. Topological photonics. Rev Mod Phys. 2019;91:015006. DOI:10.1103/RevModPhys.91.015006

- Ma G, Xiao M, Chan TC. Topological phases in acoustic and mechanical systems. Nat Rev Phys. 2019;1:281. DOI:10.1038/s42254-019-0030-x

- Kim M, Jacob Z, Rho J. Recent advances in 2D, 3D and higher-order topological photonics. Light: Sci & Appl. 2020;9:130. DOI:10.1038/s41377-020-0331-y

- von Klitzing K, Chakraborty T, Kim P, et al. 40 years of the quantum Hall effect. Nat Rev Phys. 2020;2:397. DOI:10.1038/s42254-020-0209-1

- Carleo G, Cirac I, Cranmer K, et al. Machine learning and the physical sciences. Rev Mod Phys. 2019;91:045002. DOI:10.1103/RevModPhys.91.045002

- Mehta P, Bukov M, Wang C-H, et al. A high-bias, low-variance introduction to machine learning for physicists. Phys Rep. 2019;810:1. DOI:10.1016/j.physrep.2019.03.001

- Carrasquilla J. Machine learning for quantum matter. Adv Phys: X. 2020;5:1797528. DOI:10.1080/23746149.2020.1797528

- Yun J, Kim S, So S, et al. Deep learning for topological photonics. Adv Phys: X. 2022;7:2046156. DOI:10.1080/23746149.2022.2046156

- Dawid A, Arnold J, Requena B, et al. Modern applications of machine learning in quantum sciences. arXiv:2204.04198. 2022. DOI:10.48550/ARXIV.2204.04198

- Carlsson G. Topological methods for data modelling. Nat Rev Phys. 2020;2:697. DOI:10.1038/s42254-020-00249-3

- Murugan J, Robertson D. An introduction to topological data analysis for physicists: from LGM to FRBs. arXiv:1904.11044. 2019. DOI:10.48550/ARXIV.1904.11044

- Carlsson G. Topology and data. Bull Amer Math Soc. 2009;46:255. DOI:10.1090/S0273-0979-09-01249-X

- Wasserman L. Topological data analysis. Annu Rev Stat Appl. 2018;5:501. DOI:10.1146/annurev-statistics-031017-100045

- Hensel F, Moor M, Rieck B. A survey of topological machine learning methods. Front Artif Intell. 2021;4:681108. DOI:10.3389/frai.2021.681108

- Hausmann J-C. On the Vietoris-Rips complexes and a cohomology theory for metric spaces. Ann Math Stud. 1995;138:175.

- Robins V. Towards computing homology from finite approximations. Topology Proc. 1999;24:503.

- Cohen-Steiner D, Edelsbrunner H, Harer J. Stability of persistence diagrams. Discrete Comput Geom. 2007;37:103. DOI:10.1007/s00454-006-1276-5

- Chintakunta H, Gentimis T, Gonzalez-Diaz R, et al. An entropy-based persistence barcode. Pattern Recognition. 2015;48:391. DOI:10.1016/j.patcog.2014.06.023

- Rucco M, Castiglione F, Merelli E, et al. Characterisation of the idiotypic immune network through persistent entropy. In: Battiston S, Pellegrini FD, Caldarelli G, Merelli E, editors. Proceedings of ECCS 2014. Cham: Springer International Publishing; 2016. pp. 117–24.

- Atienza N, Gonzalez-Díaz R, Soriano-Trigueros M. On the stability of persistent entropy and new summary functions for topological data analysis. Pattern Recognition. 2020;107:107509. DOI:10.1016/j.patcog.2020.107509

- Bubenik P. Statistical topological data analysis using persistence landscapes. J Mach Learn Res. 2015;16:77.

- Bubenik P, Dłotko P. A persistence landscapes toolbox for topological statistics. J Symb Comput. 2017;78:91. DOI:10.1016/j.jsc.2016.03.009

- Maria C, Boissonnat J-D, Glisse M, et al. The gudhi library: simplicial complexes and persistent homology. In: Hong H, Yap C, editors. Mathematical Software – ICMS 2014. Berlin, Heidelberg: Springer; 2014. pp. 167–174.

- Tralie C, Saul N, Bar-On R. Ripser.Py: a lean persistent homology library for python. J Open Source Software. 2018;3:925. DOI:10.21105/joss.00925

- Tauzin G, Lupo U, Tunstall L, et al. Giotto-tda: a topological data analysis toolkit for machine learning and data exploration. J Mach Learn Res. 2021;22:1.

- Bauer U. Ripser: efficient computation of Vietoris–Rips persistence barcodes. J Appl Comput Topol. 2021;5:391. DOI:10.1007/s41468-021-00071-5

- Otter N, Porter MA, Tillmann U, et al. A roadmap for the computation of persistent homology. EPJ Data Sci. 2017;6:17. DOI:10.1140/epjds/s13688-017-0109-5

- Bendich P, Edelsbrunner H, Kerber M. Computing robustness and persistence for images. IEEE Trans Vis Comput Graph. 2010;16:1251. DOI:10.1109/TVCG.2010.139

- Robins V, Wood PJ, Sheppard AP. Theory and algorithms for constructing discrete Morse complexes from grayscale digital images. IEEE Trans Pattern Anal Mach Intell. 2011;33:1646. DOI:10.1109/TPAMI.2011.95

- Singh G, Memoli F, Carlsson G. Topological methods for the analysis of high dimensional data sets and 3D object recognition. In: Botsch M, Pajarola R, Chen B, Zwicker M, editors. Eurographics symposium on point-based graphics. The Eurographics Association; 2007:91. DOI:10.2312/SPBG/SPBG07/091-100

- Yao Y, Sun J, Huang X, et al. Topological methods for exploring low-density states in biomolecular folding pathways. J Chem Phys. 2009;130:144115. DOI:10.1063/1.3103496

- Stolz BJ, Tanner J, Harrington HA, et al. Geometric anomaly detection in data. Proc Nat Acad Sci. 2020;117:19664. DOI:10.1073/pnas.2001741117

- Carlsson G, de Silva V. Zigzag persistence. Found Comput Math. 2010;10:367. DOI:10.1007/s10208-010-9066-0

- Carlsson G, Zomorodian A. The theory of multidimensional persistence. Discrete Comput Geom. 2009;42. DOI:10.1007/s00454-009-9176-0

- Robins V, Rooney N, Bradley E. Topology-based signal separation. Chaos Inter J Nonlinear Sci. 2004;14:305. DOI:10.1063/1.1705852

- Robins V. Betti number signatures of homogeneous Poisson point processes. Phys Rev E. 2006;74:061107. DOI:10.1103/PhysRevE.74.061107

- Horak D, Maletić S, Rajković M. Persistent homology of complex networks. J Stat Mech Theory Exp. 2009:P03034. DOI:10.1088/1742-5468/2009/03/p03034

- Krishan K, Kurtuldu H, Schatz MF, et al. Homology and symmetry breaking in Rayleigh-Bénard convection: experiments and simulations. Phys Fluids. 2007;19:117105. DOI:10.1063/1.2800365

- Kurtuldu H, Mischaikow K, Schatz MF. Extensive scaling from computational homology and Karhunen-Loève decomposition analysis of Rayleigh-Bénard convection experiments. Phys Rev Lett. 2011;107:034503. DOI:10.1103/PhysRevLett.107.034503

- Rocks JW, Liu AJ, Katifori E. Hidden topological structure of flow network functionality. Phys Rev Lett. 2021;126:028102. DOI:10.1103/PhysRevLett.126.028102

- Rocks JW, Liu AJ, Katifori E. Revealing structure-function relationships in functional flow networks via persistent homology. Phys Rev Res. 2020;2:033234. DOI:10.1103/PhysRevResearch.2.033234

- Nakamura T, Hiraoka Y, Hirata A, et al. Persistent homology and many-body atomic structure for medium-range order in the glass. Nanotechnology. 2015;26:304001. DOI:10.1088/0957-4484/26/30/304001

- Hiraoka Y, Nakamura T, Hirata A, et al. Hierarchical structures of amorphous solids characterized by persistent homology. Proc Nat Acad Sci. 2016;113:7035. DOI:10.1073/pnas.1520877113

- Hong S, Kim D. Medium-range order in amorphous ices revealed by persistent homology. J Phys Cond Mat. 2019;31:455403. DOI:10.1088/1361-648x/ab3820

- Ardanza-Trevijano S, Zuriguel I, Arévalo R, et al. Topological analysis of tapped granular media using persistent homology. Phys Rev E. 2014;89:052212. DOI:10.1103/PhysRevE.89.052212

- Saadatfar M, Takeuchi H, Robins V, et al. Pore configuration landscape of granular crystallization. Nat Commun. 2017;8:15082. DOI:10.1038/ncomms15082

- Olsthoorn B, Hellsvik J, Balatsky AV. Finding hidden order in spin models with persistent homology. Phys Rev Res. 2020;2:043308. DOI:10.1103/PhysRevResearch.2.043308

- Sehayek D, Melko RG. Persistent homology of Z2 gauge theories. Phys Rev B. 2022;106:085111. DOI:10.1103/PhysRevB.106.085111

- Ormrod Morley D, Salmon PS, Wilson M. Persistent homology in two-dimensional atomic networks. J Chem Phys. 2021;154:124109. DOI:10.1063/5.0040393

- Muldoon M, MacKay R, Huke J, et al. Topology from time series. Phys D. 1993;65:1–16. DOI:10.1016/0167-2789(92)00026-U

- Maletić S, Zhao Y, Rajković M. Persistent topological features of dynamical systems. Chaos Inter J Nonlinear Sci. 2016;26:053105. DOI:10.1063/1.4949472

- Mittal K, Gupta S. Topological characterization and early detection of bifurcations and chaos in complex systems using persistent homology. Chaos Inter J Nonlinear Sci. 2017;27:051102. DOI:10.1063/1.4983840

- Tran QH, Hasegawa Y. Topological time-series analysis with delay-variant embedding. Phys Rev E. 2019;99:032209. DOI:10.1103/PhysRevE.99.032209

- Tempelman JR, Khasawneh FA. A look into chaos detection through topological data analysis. Phys D. 2020;406:132446. DOI:10.1016/j.physd.2020.132446

- Makarenko N, Karimova L, Novak M. Investigation of global solar magnetic field by computational topology methods. Phys A. 2007;380:98. DOI:10.1016/j.physa.2007.02.052

- Kondic L, Goullet A, O’Hern CS, et al. Topology of force networks in compressed granular media. EPL (Europhysics Letters). 2012;97:54001. DOI:10.1209/0295-5075/97/54001

- Pugnaloni LA, Carlevaro CM, Kramár M, et al. Structure of force networks in tapped particulate systems of disks and pentagons. I. clusters and loops. Phys Rev E. 2016;93:062902. DOI:10.1103/PhysRevE.93.062902

- Cole A, Shiu G. Persistent homology and non-Gaussianity. J Cosmol Astropart Phys. 2018;2018(03):025. DOI:10.1088/1475-7516/2018/03/025

- Leykam D, Angelakis DG. Photonic band structure design using persistent homology. APL Photonics. 2021;6:030802. DOI:10.1063/5.0041084

- Spitz D, Berges J, Oberthaler M, et al. Finding self-similar behavior in quantum many-body dynamics via persistent homology. SciPost Phys. 2021;11:60. DOI:10.21468/SciPostPhys.11.3.060

- Leykam D, Rondón I, Angelakis DG. Dark soliton detection using persistent homology. Chaos Inter J Nonlinear Sci. 2022;32:073133. DOI:10.1063/5.0097053

- Cole A, Loges GJ, Shiu G. Quantitative and interpretable order parameters for phase transitions from persistent homology. Phys Rev B. 2021;104:104426. DOI:10.1103/PhysRevB.104.104426

- Sale N, Giansiracusa J, Lucini B. Quantitative analysis of phase transitions in two-dimensional XY models using persistent homology. Phys Rev E. 2022;105:024121. DOI:10.1103/PhysRevE.105.024121

- Membrillo Solis I, Orlova T, Bednarska K, et al. Tracking the time evolution of soft matter systems via topological structural heterogeneity. Commun Mater. 2022;3:1. DOI:10.1038/s43246-021-00223-1

- He Y, Xia S, Angelakis DG, et al. Persistent homology analysis of a generalized Aubry-André-Harper model. Phys Rev B. 2022;106:054210. DOI:10.1103/PhysRevB.106.054210

- Suzuki A, Miyazawa M, Minto JM, et al. Flow estimation solely from image data through persistent homology analysis. Sci Rep. 2021;11:17948. DOI:10.1038/s41598-021-97222-6

- Cirafici M. Persistent homology and string vacua. J High Energy Phys. 2016;2016:45. DOI:10.1007/JHEP03(2016)045

- Cole A, Shiu G. Topological data analysis for the string landscape. J High Energy Phys. 2019;2019:54. DOI:10.1007/JHEP03(2019)054

- Hirakida T, Kashiwa K, Sugano J, et al. Persistent homology analysis of deconfinement transition in effective Polyakov-line model. Int J Mod Physics A. 2020;35:2050049. DOI:10.1142/S0217751X20500499

- di Pierro A, Mancini S, Memarzadeh L, et al. Homological analysis of multi-qubit entanglement. EPL (Europhysics Letters). 2018;123:30006. DOI:10.1209/0295-5075/123/30006

- Mengoni R, Di Pierro A, Memarzadeh L, et al. Persistent homology analysis of multiqubit entanglement. Quantum Inf Computation. 2020;20:375. DOI:10.26421/QIC20.5-6-2

- Olsthoorn B. Persistent homology of quantum entanglement. Phys Rev B. 2023;107:115174. DOI:10.1103/PhysRevB.107.115174

- Tran QH, Chen M, Hasegawa Y. Topological persistence machine of phase transitions. Phys Rev E. 2021;103:052127. DOI:10.1103/PhysRevE.103.052127

- Donato I, Gori M, Pettini M, et al. Persistent homology analysis of phase transitions. Phys Rev E. 2016;93:052138. DOI:10.1103/PhysRevE.93.052138

- Park S, Hwang Y, Yang B-J. Unsupervised learning of topological phase diagram using topological data analysis. Phys Rev B. 2022;105:195115. DOI:10.1103/PhysRevB.105.195115

- Tirelli A, Costa NC. Learning quantum phase transitions through topological data analysis. Phys Rev B. 2021;104:235146. DOI:10.1103/PhysRevB.104.235146

- Tirelli A, Carvalho DO, Oliveira LA, et al. Unsupervised machine learning approaches to the q-state Potts model. Eur Phys J B. 2022;95:189. DOI:10.1140/epjb/s10051-022-00453-3

- Rodriguez-Nieva JF, Scheurer MS. Identifying topological order through unsupervised machine learning. Nat Phys. 2019;15:790. DOI:10.1038/s41567-019-0512-x

- Long Y, Ren J, Chen H. Unsupervised manifold clustering of topological phononics. Phys Rev Lett. 2020;124:185501. DOI:10.1103/PhysRevLett.124.185501

- Scheurer MS, Slager R-J. Unsupervised machine learning and band topology. Phys Rev Lett. 2020;124:226401. DOI:10.1103/PhysRevLett.124.226401

- Che Y, Gneiting C, Liu T, et al. Topological quantum phase transitions retrieved through unsupervised machine learning. Phys Rev B. 2020;102:134213. DOI:10.1103/PhysRevB.102.134213

- Lustig E, Yair O, Talmon R, et al. Identifying topological phase transitions in experiments using manifold learning. Phys Rev Lett. 2020;125:127401. DOI:10.1103/PhysRevLett.125.127401

- Lidiak A, Gong Z. Unsupervised machine learning of quantum phase transitions using diffusion maps. Phys Rev Lett. 2020;125:225701. DOI:10.1103/PhysRevLett.125.225701

- Long Y, Zhang B. Unsupervised data-driven classification of topological gapped systems with symmetries. Phys Rev Lett. 2023;130:036601. DOI:10.1103/PhysRevLett.130.036601

- Albertsson K, Altoe P, Anderson D, et al. Machine learning in high energy physics community white paper. J Phys Conf Series. 2018;1085:022008. DOI:10.1088/1742-6596/1085/2/022008

- Zahavy T, Dikopoltsev A, Moss D, et al. Deep learning reconstruction of ultrashort pulses. Optica. 2018;5:666. DOI:10.1364/OPTICA.5.000666

- Guo S, Fritsch AR, Greenberg C, et al. Machine-learning enhanced dark soliton detection in Bose–Einstein condensates. Mach Learn: Sci Technol. 2021;2:035020. DOI:10.1088/2632-2153/abed1e

- Baum Y, Amico M, Howell S, et al. Experimental deep reinforcement learning for error-robust gate-set design on a superconducting quantum computer. PRX Quantum. 2021;2:040324. DOI:10.1103/PRXQuantum.2.040324

- Itabashi K, Tran QH, Hasegawa Y. Evaluating the phase dynamics of coupled oscillators via time-variant topological features. Phys Rev E. 2021;103:032207. DOI:10.1103/PhysRevE.103.032207

- Bhaskar D, Manhart A, Milzman J, et al. Analyzing collective motion with machine learning and topology. Chaos Inter J Nonlinear Sci. 2019;29:123125. DOI:10.1063/1.5125493

- Tran QH, Vo VT, Hasegawa Y. Scale-variant topological information for characterizing the structure of complex networks. Phys Rev E. 2019;100:032308. DOI:10.1103/PhysRevE.100.032308

- Adams H, Emerson T, Kirby M, et al. Persistence images: a stable vector representation of persistent homology. J Mach Learn Res. 2017;18:218–252.

- Rem BS, Käming N, Tarnowski M, et al. Identifying quantum phase transitions using artificial neural networks on experimental data. Nat Phys. 2019;15:917. DOI:10.1038/s41567-019-0554-0

- Tibaldi S, Magnifico G, Vodola D, et al. Unsupervised and supervised learning of interacting topological phases from single-particle correlation functions. SciPost Phys. 2022;14. DOI:10.21468/SciPostPhys.14.1.005

- Le MQ, Taylor D. Persistent homology of convection cycles in network flows. Phys Rev E. 2022;105:044311. DOI:10.1103/PhysRevE.105.044311

- Myers A, Khasawneh F, Munch E. Temporal network analysis using zigzag persistence. EPJ Data Sci. 2022;12. DOI:10.1140/epjds/s13688-023-00379-5

- Skinner DJ, Song B, Jeckel H, et al. Topological metric detects hidden order in disordered media. Phys Rev Lett. 2021;126:048101. DOI:10.1103/PhysRevLett.126.048101

- Lloyd S, Garnerone S, Zanardi P. Quantum algorithms for topological and geometric analysis of data. Nat Commun. 2016;7:10138. DOI:10.1038/ncomms10138

- Huang H-L, Wang X-L, Rohde PP, et al. Demonstration of topological data analysis on a quantum processor. Optica. 2018;5:193. DOI:10.1364/OPTICA.5.000193

- Neumann N, den Breeijen S. Limitations of clustering using quantum persistent homology. arXiv:1911.10781. 2019. DOI:10.48550/ARXIV.1911.10781

- McArdle S, Gilyén A, Berta M. A streamlined quantum algorithm for topological data analysis with exponentially fewer qubits. arXiv:2209.12887. 2022. DOI:10.48550/ARXIV.2209.12887

- Berry DW, Su Y, Gyurik C, et al. Quantifying quantum advantage in topological data analysis. arXiv:2209.13581. 2022. DOI:10.48550/ARXIV.2209.13581

- Vlasic A, Pham A. Understanding the mapping of encode data through an implementation of quantum topological analysis. arXiv:2209.10596. 2022. DOI:10.48550/ARXIV.2209.10596

- Ameneyro B, Maroulas V, Siopsis G. Quantum persistent homology. arXiv:2202.12965. 2022. DOI:10.48550/ARXIV.2202.12965

- Hayakawa R. Quantum algorithm for persistent Betti numbers and topological data analysis. Quantum. 2022;6:873. DOI:10.22331/q-2022-12-07-873

- Berwald JJ, Gottlieb JM, Munch E. Computing Wasserstein distance for persistence diagrams on a quantum computer. arXiv:1809.06433. 2018. DOI:10.48550/ARXIV.1809.06433

- Ubaru S, Akhalwaya IY, Squillante MS, et al. Quantum topological data analysis with linear depth and exponential speedup. arXiv:2108.02811. 2021. DOI:10.48550/ARXIV.2108.02811

- Akhalwaya IY, He Y-H, Horesh L, et al. Representation of the fermionic boundary operator. Phys Rev A. 2022;106:022407. DOI:10.1103/PhysRevA.106.022407

- Kerenidis I, Prakash A. Quantum machine learning with subspace states. arXiv:2202.00054. 2022. DOI:10.48550/ARXIV.2202.00054

- Akhalwaya IY, Ubaru S, Clarkson KL, et al. Towards quantum advantage on noisy quantum computers. arXiv:2209.09371. 2022. DOI:10.48550/ARXIV.2209.09371

- Gyurik C, Cade C, Dunjko V. Towards quantum advantage via topological data analysis. Quantum. 2022;6:855. DOI:10.22331/q-2022-11-10-855

- Schmidhuber A, Lloyd S. Complexity-theoretic limitations on quantum algorithms for topological data analysis. arXiv:2209.14286. 2022. DOI:10.48550/ARXIV.2209.14286

- Apers S, Sen S, Szabó D. A (simple) classical algorithm for estimating Betti numbers. arXiv:2211.09618. 2022. DOI:10.48550/ARXIV.2211.09618