Abstract

Background: Minimally invasive surgeries rely on laparoscopic camera views to guide the procedure. Traditionally, an expert surgical assistant operates the laparoscopic camera. This process is distracting, and the camera is not always placed in an ideal location. To mitigate these problems, we have developed a laparoscopic automatic navigation method.

Methods: In this article, a method for determining the surgery status from the distance between the surgical instruments is presented. Combined with the surgery status, the region of interest (ROI) in the laparoscopic image is defined, and the related parameters are given in detail. Building on these elements, a laparoscopic automatic navigation method based on the ROI is proposed.

Results: The method is introduced into a typical minimally invasive laparoscopic surgery robot system (MLSRS) and simulated using several typical surgical tasks.

Conclusion: The results show that this method is feasible.

1. Introduction

Robot-assisted surgery is a new type of surgery developed over the past 30 years, with the advantages of less trauma and faster recovery. However, surgical robots cannot replace the surgeon. Their main role is to assist the surgeon during the operation, and they cannot be called intelligent robots because they have almost no autonomy, especially the robot arm that holds the laparoscope.[Citation1,Citation2] Because there are fewer master manipulators than slave manipulators, the surgeon must switch the control object from time to time to adjust the position and pose of the laparoscope, which prolongs the surgery and reduces operation efficiency. Realizing automatic navigation of the laparoscope is the first step toward intelligent surgical robots.[Citation3,Citation4]

The key problem of laparoscopic automatic navigation is how to determine the operation field. One main approach is to infer the user's intent. Eye gaze tracking is one way to do this. Several such methods have been proposed,[Citation5,Citation6] but they still suffer from a series of problems, such as high cost, low accuracy and poor stability.[Citation7] The user's intent can also be expressed through the surgical instruments. King et al. [Citation8] established a vision-based laparoscopic automatic navigation system that automatically centers the camera's view on the two instruments and alters the zoom level to ensure both tools are in view at all times. Detecting surgical motions/tasks is another way to infer the user's intent,[Citation9,Citation10] but this approach requires additional tracking hardware or access to the robot's hardware, so it cannot easily be applied to laparoscopic surgery.

In addition, various laparoscopic automatic navigation systems have been developed,[Citation11–13] but most of them are not universal and require considerable preparation.

The contribution of this article is a new laparoscopic automatic navigation method based on the region of interest (ROI) in the laparoscopic image, which achieves accurate window tracking under intermittent movement. In this article, the user's intent is expressed by the operation status, so detecting the operation status is the basis of the method. The ROI is then defined in combination with the operation status, and the navigation method is built on both. Finally, the method is introduced into a typical minimally invasive laparoscopic surgery robot system (MLSRS) and shown to be feasible through simulation and experiment.

2. Operation status detection method

During surgery, the operation field that surgeons need can be summed up in two cases, a global field of vision and a partial field of vision, which correspond to two different operation statuses:

Not dealing with the pathological tissue. When the surgeon is searching for the target, or at the beginning of the operation, a wide operation field is usually needed. This corresponds to the global field of vision.

Dealing with the pathological tissue. When the surgeon is dealing with the pathological tissue, the surgical instruments and the pathological tissue usually need to be shown clearly. This corresponds to the partial field of vision.

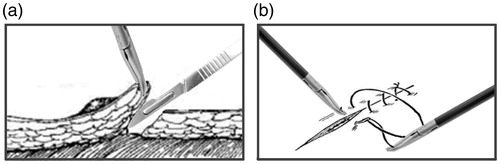

Commonly used surgical tasks include cutting and peeling tissue, intracorporeal knot tying, extracorporeal knot tying, hemostasis, etc. Taking two of them as examples, as shown in the figure, most surgical tasks are performed around the pathological tissue, so the two operation statuses can be judged simply by setting a threshold on the spatial distance between the surgical instruments.

The operation status is judged by the following formula:

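$$\text{status} = \begin{cases} \text{dealing with the pathological tissue (partial field of vision)}, & L_{AB} \le T \\ \text{not dealing with the pathological tissue (global field of vision)}, & L_{AB} > T \end{cases} \qquad (1)$$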

where LAB is the spatial distance between the two surgical instruments and T is the threshold, which is set according to the size of the pathological tissue.
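The threshold rule of formula (1) can be sketched in a few lines. This is a minimal illustration, assuming that each instrument is represented by a single 3-D tip point (A and B) in a common frame and that a distance exactly equal to T counts as dealing with the tissue; the names `OperationStatus`, `instrument_distance` and `detect_status` are hypothetical.

```python
import math
from enum import Enum


class OperationStatus(Enum):
    """The two operation statuses described in Section 2."""
    GLOBAL_VIEW = "not dealing with the pathological tissue"
    PARTIAL_VIEW = "dealing with the pathological tissue"


def instrument_distance(tip_a, tip_b):
    """Euclidean distance L_AB between the two instrument tip points."""
    return math.dist(tip_a, tip_b)


def detect_status(tip_a, tip_b, threshold):
    """Apply the distance threshold T (assumed: L_AB <= T means partial view)."""
    if instrument_distance(tip_a, tip_b) <= threshold:
        return OperationStatus.PARTIAL_VIEW
    return OperationStatus.GLOBAL_VIEW


# Example: tips 50 mm apart with T = 20 mm -> global field of vision
print(detect_status((0.0, 0.0, 0.0), (30.0, 40.0, 0.0), 20.0))
```

Because T is set from the size of the pathological tissue, it is passed in as a parameter rather than fixed in the code.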

3. Definition of the region of interest

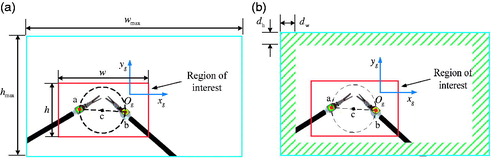

At present, most laparoscopes used with MLSRSs can output HD images (1080p), which stay sharp even when zoomed in. Since the surgeon is interested only in part of the laparoscopic image, usually called the ROI, we choose the ROI as the output image, as shown in the figure.

Based on the surgeon's demands on the surgical field, the requirements of the ROI under the different operation statuses are as follows:

When the surgeon is not dealing with the pathological tissue, the surgical instruments are far apart and the ROI contains a wide surgical field, so no special requirements are imposed.

When the surgeon is dealing with the pathological tissue, the average size of the two surgical instruments is required to lie between SD and SU.

Suppose the coordinates of point c are (xc, yc) and the length of line segment ab is Lab. The center of the ROI is (xc, yc), and the size of the ROI is given by formula (2).

(2)

where ε = K·Lab/wmax and K is the width factor of the ROI, which needs to be set according to the operation environment; wmax and hmax are the width and height of the original image, respectively.

The proportion of the ROI in the original image is given below.

(3)

To guarantee the proportion of the core operation area in the ROI and to reduce unrelated information in the ROI, the width factor K is usually set to 1.5–2.
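The relation between K, Lab and the ROI size can be illustrated with a short sketch. It assumes that the ROI keeps the aspect ratio of the original image, so that its width is ε·wmax = K·Lab and its height is ε·hmax; under that assumption the proportion in formula (3) would be ε². The cap of ε at 1 and the names `Roi`, `roi_from_instruments` and `roi_proportion` are hypothetical additions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Roi:
    cx: float      # ROI centre x in pixels (point c)
    cy: float      # ROI centre y in pixels (point c)
    width: float   # ROI width in pixels
    height: float  # ROI height in pixels


def roi_from_instruments(cx, cy, l_ab, k, w_max, h_max):
    """Size the ROI with eps = K * L_ab / w_max.

    Assumption: the ROI is the original frame scaled by eps (same aspect
    ratio), and eps is capped at 1 so the ROI never exceeds the frame.
    """
    eps = min(k * l_ab / w_max, 1.0)
    return Roi(cx, cy, eps * w_max, eps * h_max), eps


def roi_proportion(eps):
    """Area of the ROI relative to the original image (same-aspect assumption)."""
    return eps ** 2


# 1080p frame, instruments 480 px apart, K = 2 -> eps = 0.5, ROI 960 x 540
roi, eps = roi_from_instruments(960, 540, 480, 2.0, 1920, 1080)
print(roi, eps, roi_proportion(eps))
```

With K in the recommended range of 1.5–2, the ROI is 1.5–2 times as wide as the segment ab, which leaves a margin around both instruments.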

To keep the instrument marks within the ROI, the value of K needs to be adjusted according to the slant angle φ of line ab.

(4)

As shown in formula (4), when all the marks of the surgical instruments are within the ROI, K equals its initial value KC; otherwise, K varies with the slant angle φ.

As shown in the figure, to keep the ROI always within the original laparoscopic image, we define a judgment area. When the ROI overlaps the judgment area, the laparoscope needs to be adjusted; the criterion is given by formula (5).

(5)

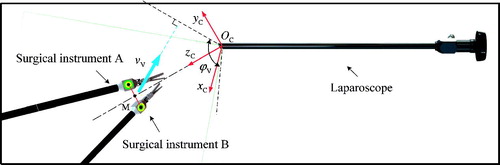

dw and dh denote the widths of the judgment area in the horizontal and vertical directions, respectively. Based on the definition of the ROI, its motion state during navigation, and the camera imaging model (see the corresponding figure), the values of dw and dh in the camera coordinate system OC-xCyCzC are given by formula (6).

(6)

where fx and fy are the focal lengths of the laparoscope, ϕH and ϕV are the visual range angles of the laparoscope, and vH and vV are the speeds of the surgical instruments in the horizontal and vertical directions, respectively. Point M is the midpoint of points A and B.
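The overlap test behind formula (5) can be sketched as a rectangle check. This is a minimal illustration, assuming that the judgment area is a band of width dw along the left and right image borders and of width dh along the top and bottom borders, that dw and dh have already been converted from camera coordinates to pixels, and that pixel coordinates have their origin at the top-left corner. The function name `needs_adjustment` is hypothetical.

```python
def needs_adjustment(cx, cy, width, height, w_max, h_max, d_w, d_h):
    """Return True if the ROI enters the judgment band at the image border.

    Assumption: the judgment area is a border band, d_w wide on the left and
    right edges and d_h wide on the top and bottom edges (all in pixels).
    """
    left = cx - width / 2.0
    right = cx + width / 2.0
    top = cy - height / 2.0
    bottom = cy + height / 2.0
    return (left < d_w or right > w_max - d_w
            or top < d_h or bottom > h_max - d_h)


# Centred 960 x 540 ROI in a 1080p frame: no adjustment needed
print(needs_adjustment(960, 540, 960, 540, 1920, 1080, 100, 60))
# Same ROI shifted towards the left border: the laparoscope should move
print(needs_adjustment(500, 540, 960, 540, 1920, 1080, 100, 60))
```

Triggering on entry into a band, rather than on the ROI actually leaving the image, is what leaves time for the laparoscope to move; this is why dw and dh depend on the instrument speeds vH and vV.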

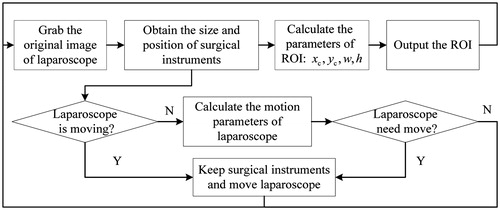

4. The process of laparoscopic automatic navigation

The flow chart of laparoscopic automatic navigation is shown in the figure. First, the system collects the original laparoscopic image and acquires the size and position of the surgical instruments in it. The ROI parameters are then calculated from the ROI definition, and the ROI is magnified and output to the screen. Next, the laparoscopic motion parameters are calculated. If they are nonzero, the surgical instruments are held still while the laparoscope is moved; if they are zero, the laparoscope does not need to move. Image acquisition and output continue throughout the automatic navigation.[Citation14]
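One pass through this flow chart can be written as a single function. The sketch below only fixes the order of the steps; each step is supplied as a callable, and all parameter names (`grab_frame`, `locate_instruments`, `compute_roi`, `show_roi`, `compute_motion`, `move_laparoscope`) are hypothetical placeholders rather than parts of the MLSRS software.

```python
from typing import Callable, Sequence


def navigation_step(
    grab_frame: Callable[[], object],
    locate_instruments: Callable[[object], object],
    compute_roi: Callable[[object], object],
    show_roi: Callable[[object, object], None],
    compute_motion: Callable[[object], Sequence[float]],
    move_laparoscope: Callable[[Sequence[float]], None],
) -> bool:
    """Run one iteration of the navigation loop; return True if the scope moved."""
    frame = grab_frame()                     # acquire the original laparoscopic image
    instruments = locate_instruments(frame)  # size and position of the instruments
    roi = compute_roi(instruments)           # ROI centre and size
    show_roi(frame, roi)                     # output the magnified ROI to the screen
    motion = compute_motion(roi)             # laparoscope motion parameters
    if any(m != 0 for m in motion):
        # Per the flow chart, the instruments are held still during this move;
        # that coordination is outside the scope of this sketch.
        move_laparoscope(motion)
        return True
    return False                             # zero motion: the laparoscope stays put
```

Calling `navigation_step` repeatedly keeps image acquisition and ROI output running on every iteration, while the laparoscope moves only when nonzero motion parameters are produced. This matches the point-to-point behavior evaluated in Section 5.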

5. Experiment and discussion

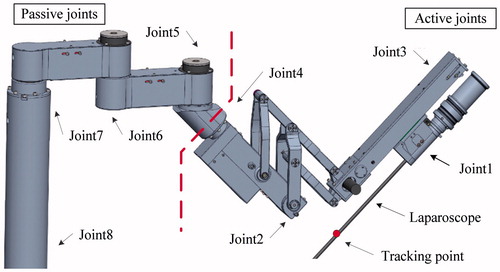

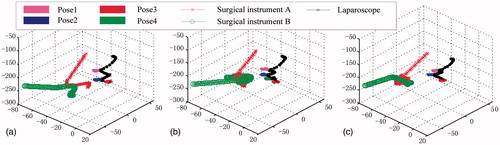

Based on the manipulator of a typical MLSRS (as shown in the figure), three kinds of trajectories belonging to the surgical task of intracorporeal knot tying are adopted to verify the feasibility of this method.

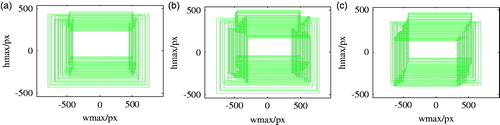

Taking trajectory detection errors and joint movement errors into account, the method is simulated and analyzed in MATLAB. As shown in the figures, the simulation results show that, for each kind of trajectory, keeping the ROI within the original laparoscopic image requires adjusting the laparoscope's pose and position only 3–4 times. Through point-to-point movement, the method completes automatic navigation and effectively avoids the effects of continuous laparoscope movement.

Figure 6. The visual process of simulation: (a) trajectory 1, (b) trajectory 2, and (c) trajectory 3.

We also evaluate the automatic navigation performance under different laparoscopic visual angles. For each kind of trajectory, the method is simulated 10 times. The average number of laparoscope pose adjustments is shown in Table 1.

Table 1. Automatic navigation performance under different laparoscopic visual angles (unit: number of adjustments).

The results show that, across laparoscopes with different visual angles, the number of laparoscope pose adjustments differs by less than one. This means the number of adjustments is determined mainly by the instrument trajectories and has little relation to the laparoscopic visual angle, so the method is suitable for most kinds of HD laparoscopes.

6. Conclusions

To address the problem of switching the control object to adjust the operation field during robot-assisted laparoscopic surgery, a laparoscopic automatic navigation method based on the ROI is proposed. The method is applied to a typical MLSRS and simulated and analyzed in MATLAB, and the visual process of automatic navigation is presented. Simulations with laparoscopes of different visual angles show that the method is suitable for most kinds of HD laparoscopes. Because the laparoscope moves only point-to-point when needed, the method avoids the image dithering and blurring caused by frequent laparoscope movement.

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of this article.

Funding

This work is partially supported by the National Natural Science Foundation of China [grant No. 61203358/F0306], the Fundamental Research Funds for the Central Universities [grant No. HEUCF160700], the Postdoctoral Funding for Starting Scientific Research of Heilongjiang Province [grant No. LBH-Q12131], Natural Science Foundation of Heilongjiang Province [grant No. F2015034] and the Fundamental Research Funds for the Central Universities.

References

- Cau R, Schoenmakers FBF, Steinbuch M, et al. Design and preliminary test results of a novel microsurgical telemanipulator system. In: the 2014 5th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics. Proceedings; August 12–15; Sao Paulo, Brazil; 2014.

- Yan ZY, Du ZJ, Wu DM. Preoperative guidance optimization of surgical robot based on simulation. Robot. 2014;36:300–308.

- Pandya A, Reisner LA, King BW, et al. A review of camera viewpoint automation in robotic and laparoscopic surgery. Robotics. 2014;3:310–329.

- Kranzfelder M, Staub C, Fiolka A, et al. Toward increased autonomy in the surgical OR: needs, requests, and expectations. Surg Endosc. 2012;27:1843–1848.

- Zhu D, Gedeon T, Taylor K. Moving to the centre: a gaze-driven remote camera control for teleoperation. Int Comput. 2010;23:85–95.

- Fujii K, Salerno A, Sriskandarajah K, et al. Gaze contingent Cartesian control of a robotic arm for laparoscopic surgery. Paper presented at: IEEE/RSJ International Conference on Intelligent Robots & Systems. 22th Annual Meeting; 2013 November 3–7; Tokyo, Japan.

- Hansen DW, Ji Q. In the eye of the beholder: a survey of models for eyes and gaze. IEEE Trans Pattern Anal Mach Intell. 2010;32:478–500.

- King BW, Reisner LA, Pandya AK. Towards an autonomous robot for camera control during laparoscopic surgery. J Laparoscopic Adv Surg Tech. 2013;23:1027–1030.

- Golenberg LP. Task analysis, modeling, and automatic identification of elemental tasks in robot assisted laparoscopic surgery. Detroit: Wayne State University; 2010.

- Lin HC, Shafran I, Yuh D, et al. Towards automatic skill evaluation: detection and segmentation of robot assisted surgical motions. Comput Aided Surg. 2006;11:220–230.

- Omote K, Feussner H, Ungeheuer A, et al. Self-guided robotic camera control for laparoscopic surgery compared with human camera control. Am J Surg. 1999;177:321–324.

- Weede O, Mönnich H, Müller B, et al. An intelligent and autonomous endoscopic guidance system for minimally invasive surgery. In: the 2011 IEEE International Conference on Robotics and Automation. Proceedings; 2011 Sep 11; Shanghai, China; 2011.

- Weede O, Bihlmaier A, Hutzl J, et al. Towards cognitive medical robotics in minimal invasive surgery. In: the Conference on Advances in Robotics. Proceedings; 2013 November 25–29; Pune, India; 2013.

- Jiang JG, Zhang YD. Motion planning and synchronized control of the dental arch generator of the tooth-arrangement robot. Int J Med Robot Comput Assist Surg. 2013;9:94–102.