ABSTRACT

Controlled experiments are widely used in many applications to investigate the causal relationship between input factors and experimental outcomes. A completely randomised design is usually used to randomly assign treatment levels to experimental units. When covariates of the experimental units are available, the experimental design should achieve covariate balancing among the treatment groups, such that the statistical inference of the treatment effects is not confounded with any possible effects of covariates. However, covariate imbalance often exists, because the experiment is carried out based on a single realisation of the complete randomisation. It is more likely to occur and worsen when the size of the experimental units is small or moderate. In this paper, we introduce a new covariate balancing criterion, which measures the differences between kernel density estimates of the covariates of treatment groups. To achieve covariate balance before the treatments are randomly assigned, we partition the experimental units by minimising the criterion, then randomly assign the treatment levels to the partitioned groups. Through numerical examples, we show that the proposed partition approach can improve the accuracy of the difference-in-mean estimator and outperforms the complete randomisation and rerandomisation approaches.

1. Introduction

The controlled experiment is a useful tool for investigating the causal relationship between experimental factors and responses. It has broad applications in many fields, such as science, medicine, social science, and business. The construction of the experimental design for a controlled experiment has two steps. First, a factorial experimental design, that is, the treatment settings of the experimental factors, is specified. By treatment settings or treatment levels, we mean the combinations of the different factorial settings of all factors. There is a rich body of literature on classic and new methods for factorial design (Wu & Hamada, 2011), and it is beyond the scope of this paper. The second step is to assign treatment settings to the available experimental units. A factorial design can involve only one factor with L treatment levels, or multiple factors with L combinations of treatment settings, as decided by the design. In either case, there are L−1 treatment effects, such as main effects or interactions between multiple factors, and they are commonly estimated using the difference-in-mean estimator. Although practitioners usually use a completely randomised design for the second step, it has some limitations, which we discuss next. In this paper, we focus on the second step: how to assign experimental units to treatment levels so as to improve the accuracy of the difference-in-mean estimator.

In many applications, the experimental units differ in their covariate information. The response measurement of an experimental unit can be influenced by both the treatment setting and the covariate information of the experimental unit. For instance, in a clinical trial of a new hypoglycaemic agent, the experimental factor is the treatment that a patient receives and it has two levels, the new agent (with a fixed dosage) and placebo. The experimental units are the patients who participate in the clinical trial. The patients are partitioned into two groups. The group that receives the placebo is typically called the control group, and the group that receives the new agent is called the treatment group. The treatment effect of the new agent is then estimated by the difference between the average blood glucose level of the treatment group and that of the control group. This estimator is called the mean-difference or difference-in-mean estimator (Rubin, 2005). The covariates of the experimental units include the patients' age, gender, weight, and other physical and medical information. Naturally, the blood glucose level of a patient (the response) is related to the treatment, as well as to the physical and medical background (covariate information) of the patient. If the distributions of the covariates of the treatment and control groups are significantly different, the treatment effect estimated via the difference-in-mean estimator can be confounded with some covariate effects. If the number of experimental units is large relative to L, the confounding problem can be alleviated by a completely randomised design. Asymptotically, the completely randomised design results in the same distribution of covariates for all partitioned groups. In other words, it achieves covariate balance.
However, in practice, the experimenter only conducts the experiment once or a few times, each time using a single realisation of the complete randomisation, and usually for a finite number of experimental units. As pointed out by many existing works in the causal inference literature, relying on complete randomisation can be dangerous (Bertsimas et al., 2015; Morgan & Rubin, 2012). In particular, when the number of experimental units is small or moderate, covariate imbalance among the partitioned groups under complete randomisation can be surprisingly large, leading to inaccurate estimates and incorrect statistical inference of treatment effects (Bertsimas et al., 2015).
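The small-sample imbalance described above can be illustrated with a short simulation. The sketch below is only illustrative: it assumes a single standard-normal covariate and equal group sizes, and the function name `mean_abs_imbalance` is hypothetical. It reports the average absolute gap between the two group means of the covariate under complete randomisation.

```python
import random
import statistics

def mean_abs_imbalance(n_units, n_reps=2000, seed=0):
    """Average |mean(z | treated) - mean(z | control)| over random equal splits."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_reps):
        z = [rng.gauss(0.0, 1.0) for _ in range(n_units)]  # one covariate per unit
        idx = list(range(n_units))
        rng.shuffle(idx)                                    # complete randomisation
        half = n_units // 2
        treated = [z[i] for i in idx[:half]]
        control = [z[i] for i in idx[half:]]
        gaps.append(abs(statistics.fmean(treated) - statistics.fmean(control)))
    return statistics.fmean(gaps)

for n in (10, 40, 160):
    print(n, round(mean_abs_imbalance(n), 3))
```

The gap shrinks roughly like one over the square root of N, so it is far from negligible for the small and moderate sample sizes considered in this paper.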

The problem of inaccurate estimates induced by covariate imbalance can be addressed by two types of methods. One type of method is designed for observational data. The premise is that a completely randomised design is used to assign treatment levels to experimental units before data are collected. Then some adjusting methods are applied at the data analysis stage. These adjusting methods include post-stratification (McHugh & Matts, 1983; Xie & Aurisset, 2016), propensity score matching (Pearl, 2000; Rosenbaum & Rubin, 1983), the doubly robust estimator (Funk et al., 2011), coarsened exact matching (CEM) (Blackwell et al., 2009), etc. The common theme of these methods is to apply sophisticated weighting schemes to improve the original difference-in-mean estimator and reduce the mean squared error (consisting of the bias and variance) of the estimator caused by the imbalance of covariates. The alternative estimators also include the least-squares estimator (Wu & Hamada, 2011) of the treatment effects, which is based on a parametric model assumption. The literature on observational studies in causal inference is vast, and we refer readers to Imbens and Rubin (2015) and Rosenbaum (2017) for a more comprehensive review.

Another kind of method aims at achieving covariate balance at the design stage, before the random assignment of the treatment levels and before data collection (Kallus, 2018). First, the experimental units are partitioned into L groups according to a certain covariate balancing criterion. Then, the L treatment levels are randomly assigned to the L groups. Such methods include randomised block designs (Bernstein, 1927), rerandomisation (Morgan & Rubin, 2012, 2015), and the optimal partitions proposed by Bertsimas et al. (2015) and Kallus (2018). With a randomised block design, the experimental units are divided into subgroups called blocks such that the experimental units in each block have similar covariate information. Then, the treatment levels are randomly assigned to the experimental units within each block. Similar to the post-stratification method, blocking cannot be directly applied when the covariates are continuous or mixed. Users need to choose a discrete block factor based on the continuous or mixed covariates. Morgan and Rubin (2012, 2015) proposed a rerandomisation method in which the experimenter keeps randomising the experimental units into L groups until a sufficiently small Mahalanobis distance of the covariates between the groups is achieved. In Bertsimas et al. (2015), the imbalance is measured as the sum of discrepancies in group means and variances, and the desired partition minimises this imbalance criterion. Kallus (2018) proposed a new kernel allocation to divide the experimental units into balanced groups.
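To make the rerandomisation idea concrete, the following minimal sketch redraws equal-size splits until the Mahalanobis distance falls below a threshold. It is a simplified illustration, not the procedure of Morgan and Rubin (2012) in full: it assumes a single covariate, so the distance reduces to a scaled squared difference of group means, and the threshold value and the function names are hypothetical choices.

```python
import random
import statistics

def mahalanobis_1d(z, treated_idx):
    """Distance for one covariate: (n_t * n_c / N) * (mean gap)^2 / var(z)."""
    treated = set(treated_idx)
    zt = [v for i, v in enumerate(z) if i in treated]
    zc = [v for i, v in enumerate(z) if i not in treated]
    gap = statistics.fmean(zt) - statistics.fmean(zc)
    return len(zt) * len(zc) / len(z) * gap ** 2 / statistics.variance(z)

def rerandomise(z, threshold, rng, max_draws=100_000):
    """Redraw equal-size splits until the distance is at most the threshold."""
    n = len(z)
    for _ in range(max_draws):
        treated_idx = rng.sample(range(n), n // 2)
        dist = mahalanobis_1d(z, treated_idx)
        if dist <= threshold:
            return treated_idx, dist
    raise RuntimeError("no acceptable split found; raise the threshold")

rng = random.Random(1)
z = [rng.gauss(0.0, 1.0) for _ in range(30)]
split, dist = rerandomise(z, threshold=0.05, rng=rng)
print(sorted(split), dist)
```

Because the distance only involves group means, this kind of acceptance rule balances first moments; it says nothing directly about higher-order features of the covariate distributions.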

In this paper, we only consider controlled experiments with continuous covariates. We propose a new criterion to measure the covariate imbalance, and the corresponding optimal partition minimises this new criterion and achieves the best covariate balance between the L treatment groups. The problem set-up and assumptions are introduced in Section 2. In Section 3, we propose the new covariate balancing criterion, which measures the differences between the kernel density estimates of the covariates of the L groups of experimental units. In Section 4, we formulate the partition problem as a quadratic integer program and discuss the choice of the parameters in the kernel density estimates and the optimisation. The proposed approach is compared with complete randomisation and rerandomisation through simulation and real examples in Section 5. We conclude this paper with some discussions and potential research directions in Section 6.

2. Problem set-up

In this work, we assume that the controlled experiment has N experimental units, which are predetermined and fixed throughout the data collection process. We first illustrate the problem set-up using the case of L = 2, based on which we derive the covariate balancing criterion in Section 3. In the second part of Section 3, we extend the design criterion from the L = 2 case to the general case.

We assume that the response variable follows a general model

$$y_i = \alpha x_i + h(\mathbf{z}_i) + \epsilon_i, \qquad i = 1, \ldots, N. \tag{1}$$

Here $x_i \in \{0, 1\}$ is the indicator of which of the two treatment levels the experimental unit $i$ receives. Conventionally, we use $x_i = 0$ to represent a baseline level, or control, and $x_i = 1$ to represent the other level, or treatment. We loosely use the terms control and treatment just to make the distinction between the two different levels. Assume the covariates of an experimental unit are $\mathbf{z} \in \mathbb{R}^d$, and $\mathbf{z}_i$ is the observed covariates of the $i$th experimental unit. The function $h$ is a square-integrable function. When $x_i = 0$, $h(\mathbf{z}_i)$ is the mean of the response $y_i$. The parameter $\alpha$ is the treatment effect and $\epsilon_i$ is the random noise with zero mean and constant variance $\sigma^2$. Furthermore, $\epsilon_i$ is independent of the covariates of all the experimental units, the treatment assignment, and the random noise of other experimental units.

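Based on (1), the sample means of the response in the two groups are calculated as

$$\bar{y}_T = \frac{1}{n_T}\sum_{i:\,x_i = 1} y_i = \alpha + \frac{1}{n_T}\sum_{i:\,x_i = 1} h(\mathbf{z}_i) + \bar{\epsilon}_T, \qquad \bar{y}_C = \frac{1}{n_C}\sum_{i:\,x_i = 0} y_i = \frac{1}{n_C}\sum_{i:\,x_i = 0} h(\mathbf{z}_i) + \bar{\epsilon}_C,$$

where $n_T$ and $n_C$ are the numbers of experimental units in the treatment group and the control group, respectively. The most commonly used estimator for the treatment effect $\alpha$ is the difference-in-mean estimator

$$\hat{\alpha} = \bar{y}_T - \bar{y}_C = \alpha + \int h \,\mathrm{d}F_T - \int h \,\mathrm{d}F_C + (\bar{\epsilon}_T - \bar{\epsilon}_C), \tag{2}$$

where $F_T$ and $F_C$ are the empirical distributions of the covariates of the treatment group and control group, respectively, and $\bar{\epsilon}_T$ and $\bar{\epsilon}_C$ are the mean errors in the corresponding groups.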
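As a minimal sketch of the difference-in-mean estimator and its decomposition into the true effect, a covariate gap and a noise gap, the snippet below simulates one experiment. The mean function `h`, the value `alpha = 2.0`, the sample size and the function name are illustrative assumptions rather than choices made in this paper.

```python
import random

def difference_in_mean(values, x):
    """Mean of `values` over treated units (x = 1) minus the mean over controls (x = 0)."""
    treated = [v for v, xi in zip(values, x) if xi == 1]
    control = [v for v, xi in zip(values, x) if xi == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)

def h(z):
    """Hypothetical mean function of a single covariate."""
    return z * z

rng = random.Random(0)
alpha = 2.0                                     # assumed true treatment effect
z = [rng.gauss(0.0, 1.0) for _ in range(20)]    # covariate of each unit
eps = [rng.gauss(0.0, 1.0) for _ in range(20)]  # random noise of each unit
x = [1] * 10 + [0] * 10
rng.shuffle(x)                                  # complete randomisation
y = [alpha * xi + h(zi) + ei for xi, zi, ei in zip(x, z, eps)]

alpha_hat = difference_in_mean(y, x)
h_gap = difference_in_mean([h(zi) for zi in z], x)    # covariate imbalance term
eps_gap = difference_in_mean(eps, x)                  # noise term
print(alpha_hat, alpha + h_gap + eps_gap)             # the two numbers agree
```

The covariate gap `h_gap` is the only term the experimenter can influence through the partition, which is what motivates the balancing criterion developed later.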

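Before conducting the experiment, the randomness of the difference-in-mean estimator $\hat{\alpha}$ comes from three sources: random noise, the partition of experimental units into the two groups (if it is done through randomisation), and the random assignment of the two levels to the two partitioned groups. More importantly, the three sources of randomness are independent of each other in our framework. Accordingly, the mean of the estimator $\hat{\alpha}$ is

$$E(\hat{\alpha}) = \alpha + E\left[\int h \,\mathrm{d}F_T - \int h \,\mathrm{d}F_C\right] + E(\bar{\epsilon}_T - \bar{\epsilon}_C),$$

in which the treatment and control distributions $(F_T, F_C)$ equal either $(F_1, F_2)$ or $(F_2, F_1)$, where $F_1$ and $F_2$ are the empirical distributions of the covariates in partitioned Group 1 and Group 2, respectively. The partition can be random or deterministic, depending on the partition method used. Define LA as the Bernoulli random variable representing the Level Assignment. Let LA = 0 if Group 1 is assigned as the treatment group and LA = 1 if Group 1 is assigned as the control group, and $\Pr(\mathrm{LA} = 0) = \Pr(\mathrm{LA} = 1) = 1/2$. Obviously, if we only consider the random level assignments given the partition and random noise,

$$E_{\mathrm{LA}}(\hat{\alpha}) = \alpha + \frac{1}{2}\left[\int h \,\mathrm{d}F_1 - \int h \,\mathrm{d}F_2 + \bar{\epsilon}_1 - \bar{\epsilon}_2\right] + \frac{1}{2}\left[\int h \,\mathrm{d}F_2 - \int h \,\mathrm{d}F_1 + \bar{\epsilon}_2 - \bar{\epsilon}_1\right] = \alpha,$$

where $\bar{\epsilon}_1$ and $\bar{\epsilon}_2$ are the mean errors of Group 1 and Group 2. Since the random noise is independent of the partition, regardless of the distribution of the partition,

$$E(\hat{\alpha}) = \alpha.$$

Therefore, whatever partition method we use, random or deterministic, the difference-in-mean estimator is always unbiased (Kallus, 2018), as long as the treatment levels are fairly and randomly assigned to the two partitioned groups.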
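The unbiasedness argument can be checked numerically for any fixed partition: the two possible level assignments give estimates that lie symmetrically around the true effect. The sketch below is illustrative only; the value of `alpha`, the unit-level quantities in `base` and the function name `estimate` are assumptions made for the example.

```python
import random

def estimate(alpha, base, group1, group1_is_treated):
    """Difference-in-mean estimate for a fixed partition and level assignment.

    base[i] = h(z_i) + eps_i is the response unit i would show under control.
    """
    x = [int((i in group1) == group1_is_treated) for i in range(len(base))]
    y = [alpha * xi + bi for xi, bi in zip(x, base)]
    treated = [yi for yi, xi in zip(y, x) if xi == 1]
    control = [yi for yi, xi in zip(y, x) if xi == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)

rng = random.Random(3)
alpha = 1.5                                        # assumed true effect
base = [rng.gauss(0.0, 2.0) for _ in range(12)]    # fixed h(z_i) + eps_i
group1 = {0, 1, 2, 3, 4, 5}                        # any fixed partition

est_la0 = estimate(alpha, base, group1, True)      # LA = 0: Group 1 is treated
est_la1 = estimate(alpha, base, group1, False)     # LA = 1: Group 1 is control
print(est_la0, est_la1, (est_la0 + est_la1) / 2)   # the average equals alpha
```

Each single estimate can still be far from the true effect; the spread between the two is governed by the imbalance between the groups, which is the subject of the variance calculation that follows.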

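Similarly, considering the three sources of randomness, the variance of $\hat{\alpha}$ is

$$\operatorname{var}(\hat{\alpha}) = \operatorname{var}\left(\int h \,\mathrm{d}F_T - \int h \,\mathrm{d}F_C\right) + \operatorname{var}(\bar{\epsilon}_T - \bar{\epsilon}_C) = E\left[\left(\int h \,\mathrm{d}F_T - \int h \,\mathrm{d}F_C\right)^2\right] + \sigma^2\left(\frac{1}{n_T} + \frac{1}{n_C}\right), \tag{3}$$

where $F_T$ and $F_C$ are the empirical distributions of the covariates of the treatment and control groups, and $n_T$ and $n_C$ are the corresponding group sizes. In (3), the expectation in the last equation is with respect to the randomness in the partition of the experimental units and the randomness of the level assignment. We can use a more detailed but cumbersome notation and derive

$$E\left[\left(\int h \,\mathrm{d}F_T - \int h \,\mathrm{d}F_C\right)^2\right] = E_{\mathbf{g}} E_{\mathrm{LA}}\left[(1 - 2\,\mathrm{LA})^2\left(\int h \,\mathrm{d}F_1 - \int h \,\mathrm{d}F_2\right)^2\right] = E_{\mathbf{g}}\left[\left(\int h \,\mathrm{d}F_1 - \int h \,\mathrm{d}F_2\right)^2\right],$$

where $E_{\mathbf{g}}$ denotes the expectation with respect to the partition, $F_1$ and $F_2$ are the empirical distributions of the covariates in Group 1 and Group 2, and the last step uses $(1 - 2\,\mathrm{LA})^2 = 1$. As a result, once the partition of the experimental units is established, the variance of the difference-in-mean estimator $\hat{\alpha}$ in (3) is invariant to the treatment assignment of the two groups, that is,

$$\operatorname{var}(\hat{\alpha}) = E_{\mathbf{g}}\left[\left(\int h \,\mathrm{d}F_1 - \int h \,\mathrm{d}F_2\right)^2\right] + \sigma^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right), \tag{4}$$

where $n_1$ and $n_2$ are the sizes of Group 1 and Group 2. Thus, the experimenter should focus on the partition of the experimental units to reduce $\operatorname{var}(\hat{\alpha})$. In the following section, we propose an alternative partition method that aims at regulating the variance of the difference-in-mean estimator $\hat{\alpha}$.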

3. Kernel density estimation based covariate balancing criterion

In this section, we first show the derivation of the KDE-based covariate balancing criterion for the L = 2 following the set-up in Section 2. Then we extend the balancing criterion to the general case.

3.1. The case of L = 2

Define a partition of the experimental units as $\mathbf{g} = (g_1, \ldots, g_N)$, where $g_i = 1$ if the $i$th experimental unit is partitioned into Group 1 and $g_i = 2$ if the $i$th experimental unit is partitioned into Group 2. Note that $g_i$ is different from the indicator variable $x_i$ in (1). The order of partition and treatment level assignment does not matter. One can partition the experimental units into two groups first and then randomly assign treatment levels. Or, one can randomly assign treatment levels to the two groups (which are still empty) and fill the groups with experimental units afterward. Therefore, Group 1 is not necessarily the treatment or the control group, that is, $g_i = 1$ does not imply $x_i = 1$ or $x_i = 0$.

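To construct a smooth approximation of the empirical distributions of the covariates in the two groups, we estimate the corresponding distributions using kernel density estimation. Kernel density estimation (KDE) is a popular technique to estimate the density function of a multivariate distribution, which is a generalisation of histogram density estimation but with improved statistical properties (Simonoff, 2012). We use this approximation for two reasons: (1) the covariates are continuous in nature, and (2) it allows us to bound $\operatorname{var}(\hat{\alpha})$. With a sample $\{\mathbf{z}_1, \ldots, \mathbf{z}_n\}$ of a $d$-dimensional random vector drawn from a distribution with density function $f$, the kernel density estimate is defined to be

$$\hat{f}(\mathbf{z}) = \frac{1}{n}\sum_{i=1}^{n} K_{\mathbf{H}}(\mathbf{z} - \mathbf{z}_i), \tag{5}$$

where $K_{\mathbf{H}}(\cdot)$ is the kernel function, which is a symmetric multivariate density function, and $\mathbf{H}$ is the positive definite bandwidth matrix. With the smooth approximation of the empirical distributions, by (4), the variance of the difference-in-mean estimator is approximately upper bounded by

$$\operatorname{var}(\hat{\alpha}) \approx E_{\mathbf{g}}\left[\left(\int h(\mathbf{z})\left(\hat{f}_1(\mathbf{z}) - \hat{f}_2(\mathbf{z})\right)\mathrm{d}\mathbf{z}\right)^2\right] + \sigma^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right) \le \|h\|_2^2\, E_{\mathbf{g}}\left[\|\hat{f}_1 - \hat{f}_2\|_2^2\right] + \sigma^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right), \tag{6}$$

where the inequality follows from the Cauchy-Schwarz inequality, $\|\cdot\|_2$ denotes the $L_2$-norm of a function, the approximation replaces the empirical distributions $F_1$ and $F_2$ of the covariates in Group 1 and Group 2 by smooth densities, $n_1$ and $n_2$ are the group sizes, and $\hat{f}_1$ and $\hat{f}_2$ are the KDEs of the covariates of the two groups, respectively. Here the expectation is only with respect to the partition $\mathbf{g}$, as explained for (4) in Section 2.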

Since $\operatorname{var}(\hat{\alpha})$ depends on the function $h$, we cannot directly minimise it with respect to the partition without making any assumption on $h$. To make our approach robust to any assumption on $h$, we propose using

$$D(\mathbf{g}) = \|\hat{f}_1 - \hat{f}_2\|_2^2 = \int \left(\hat{f}_1(\mathbf{z}) - \hat{f}_2(\mathbf{z})\right)^2 \mathrm{d}\mathbf{z} \tag{7}$$

as the covariate balancing criterion to partition the experimental units, where $\hat{f}_1$ and $\hat{f}_2$ are the KDEs of the covariates in Group 1 and Group 2. It is the part of the upper bound that is not a constant and only depends on the partition $\mathbf{g}$. This criterion also appeared in Anderson et al. (1994), who proposed it as a two-sample test statistic to test whether two samples are drawn from the same distribution. Their work further supports the idea of using $D(\mathbf{g})$ as the partition criterion from the covariate balancing perspective. A small $D(\mathbf{g})$ value suggests that the covariate samples in the two groups tend to be drawn from the same distribution, so that the covariate information in the two groups is balanced.
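The integrated squared difference between two kernel density estimates can be evaluated without numerical integration when the kernel is Gaussian, because the integral of a product of two Gaussian kernels has a closed form. The sketch below assumes a single covariate and a scalar bandwidth `h`, which is a simplification of the multivariate setting of this paper; the function names are hypothetical.

```python
import math

def gauss_overlap(a, b, h):
    """Integral over the real line of the product of two N(., h^2) kernels centred at a and b."""
    return math.exp(-((a - b) ** 2) / (4 * h * h)) / (2 * h * math.sqrt(math.pi))

def kde_criterion(z1, z2, h):
    """Integrated squared difference between the Gaussian KDEs of samples z1 and z2."""
    def cross(u, v):
        return sum(gauss_overlap(a, b, h) for a in u for b in v) / (len(u) * len(v))
    return cross(z1, z1) + cross(z2, z2) - 2 * cross(z1, z2)

balanced = kde_criterion([0.1, 0.9, 2.1], [0.0, 1.0, 2.0], h=0.5)
shifted = kde_criterion([0.0, 0.5, 1.0], [2.0, 2.5, 3.0], h=0.5)
print(balanced, shifted)   # the well-matched pair gives the smaller value
```

Two groups with nearly identical covariate values give a value close to zero, while groups located in different regions of the covariate space give a much larger value.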

To achieve a partition with a small value of the criterion in (7), one can either find the optimal solution that minimises the criterion or rerandomise the experimental units until a sufficiently small value is obtained. The latter is feasible when the asymptotic distribution of the criterion is available. The asymptotic distribution of the criterion can be constructed using the bootstrap method (Anderson et al., 1994). However, unlike the simple asymptotic distribution of the Mahalanobis distance derived in Morgan and Rubin (2012), calculating the threshold of the criterion is computationally expensive because of the cost of the bootstrap method. As a result, we choose to construct a partition by minimising the criterion, and we call the minimising partition $\mathbf{g}^*$ the KDE-based partition. Since we use the optimisation approach, our partition scheme is actually deterministic. In other words, the partition scheme we use is to let $\mathbf{g} = \mathbf{g}^*$ with probability equal to 1. This discussion also applies to the general $L \ge 2$ case. In Section 4, we discuss in detail how to construct the KDE-based partition that minimises the criterion.

3.2. General case of $L \ge 2$

For the general case of $L \ge 2$, the covariate balancing criterion is generalised as follows

(8)

(8) Extending

to the case of L>2, the partition is defined as

, with

. To show that the generalised criterion in (8) is reasonable, we explain it under two scenarios: (1) the experiment involves only one factor with L treatment settings; (2) the experiment involves multiple factors and the experimental design contains L different combinations of the treatment settings of these factors. In the first scenario, the treatment effects of interest are the pairwise contrasts between any two treatment levels. The linear model in (1) is extended to

Here

's are the dummy variables corresponding to the assigned treatment level for the ith unit, and

if the ith unit is assigned to treatment level l, and

otherwise, for

. Using this notation, the treatment level L is set to be the baseline, and the rest of the treatment levels are compared to it. The treatment effects

for

are estimated by the difference-in-mean estimator

where

is the sample mean of the observations with treatment level k for

. Often, an experimenter is interested in the pairwise contrasts of the treatment effects

,

. Therefore, one would aim at minimising the largest variance of the corresponding estimator

, that is,

. Following the same derivation as in the L = 2 case,

is unbiased with variance

Using an argument similar to that of (6), the largest variance is approximately upper bounded by

Assuming all the L treatment groups have very similar or the same size of experimental units, one should aim at minimising

. Thus, a natural covariates balancing criterion is

When L = 2, the criterion reduces to (7).
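The worst-case pairwise comparison discussed above can be sketched as follows. This is only an illustration under simplifying assumptions: a single covariate, a Gaussian kernel with scalar bandwidth `h`, and the squared L2 distance between KDEs as the pairwise measure. The function names and the toy groups are hypothetical.

```python
import math
from itertools import combinations

def kde_gap(u, v, h):
    """Integrated squared difference between 1-D Gaussian KDEs of samples u and v."""
    def cross(a_s, b_s):
        total = sum(math.exp(-((a - b) ** 2) / (4 * h * h)) for a in a_s for b in b_s)
        return total / (2 * h * math.sqrt(math.pi) * len(a_s) * len(b_s))
    return cross(u, u) + cross(v, v) - 2 * cross(u, v)

def max_pairwise_criterion(groups, h):
    """Largest pairwise KDE gap among the L groups."""
    return max(kde_gap(u, v, h) for u, v in combinations(groups, 2))

groups = [[0.0, 1.0, 2.0], [0.1, 1.1, 1.9], [3.0, 3.5, 4.0]]
print(max_pairwise_criterion(groups, h=0.5))        # driven by the outlying third group
print(max_pairwise_criterion(groups[:2], h=0.5))    # with two groups, a single gap
```

A single badly matched group dominates the maximum, so minimising it forces every pair of groups to be balanced rather than only the average pair.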

In the second scenario, we explain it via a simple full factorial design with two two-level factors, denoted by A and B. The full factorial design contains L = 4 treatment settings. Using the generic notation in the design of experiments literature, the four treatment settings are

,

,

, and

. The sample mean of the observations in each treatment group is

and the sample size is

for

. There are L−1 = 3 effects to be estimated, which are main effects of A and B (denoted as

and

) and their interaction effect (denoted by

). For simplicity, we assume

, and the total number of experimental units is N = 4n. The commonly used difference-in-mean estimators for

, and

are

Following the same derivation as for (4), we obtain the variance of

Using the argument of (6),

is approximately upper bounded by

In this upper bound, only

depends on the partition. By triangle inequality,

Similarly, the corresponding norms in the upper bounds of

and

are upper bounded by

Therefore, to regulate the worst-case variance of these three estimators, it makes sense to minimise the largest pairwise difference

,

. Thus, we reach the same criterion as above

For a general full or fractional factorial design, if there are L treatment settings, there would be L sample means of the response, one from each treatment group. If all the L−1 effects (main effects, two-factor interactions, etc.) are parameters of interest, then all the pairwise distances of

should be as small as possible so that the covariate balancing is achieved at best across all treatment groups. If only some of the L−1 effects are of interest, it is still ideal to reach covariate balancing across all treatment groups because the difference-in-mean estimators are essentially linear combinations of the group sample means.

4. Construction of KDE-based partition

Constructing a KDE-based partition of the experimental units is essentially an optimisation problem.

(9a)

(9a)

(9b)

(9b)

(9c)

(9c)

(9d)

(9d) Here

is the indicator function that

if A is true, and

otherwise, and

is the size of the experimental units in the

th group. This is an integer programming problem, which can be difficult and computationally expensive to solve. In the next part, we reformulate (9) as a quadratic integer programming problem for L = 2, which can be solved efficiently using modern optimisation tools.

4.1. Optimisation

To facilitate the formulation and computation of the optimisation problem, we derive a more concrete formula for the criterion defined in (8) for general

. For simplicity, we assume the number of experimental units is equal for all groups, so N is divisible by L. Denote the number of experimental units in the lth partitioned group as n, and N = nL. For any

, we have

where the matrix operator

is defined as the summation of all the entries of a matrix. Then,

can be computed as

(10)

(10) where the matrix

is a symmetric matrix of size

, with elements defined as,

(11)

(11)

(12)

It is well known that the choice of the kernel function K is not essential to the KDE (Silverman, 1986). To illustrate the partition method, we choose the commonly used multivariate Gaussian kernel

. The entries of

using the Gaussian kernel can be calculated analytically,

As a special case,

. Note that the above calculation applies when the domain of

, denoted by Ω, is unbounded, i.e.

. If Ω is a subset of

, we can derive the integration in the range of Ω, and the resulting formula would involve the CDF of normal. But here we still integrate with the range of

, and the approximation error is small since the value of the estimated density function should be small outside of Ω.

Given a specific partition , we partition the

matrix into

sub-matrices accordingly, such that each sub-matrix

corresponds to the experimental units in group r and s. That is, the entries of sub-matrix

are

such that

and

for

. Notice that such a definition of the block matrices depends on the partition

. Thus, the entries of the sub-matrices would change as the partition is varied. But the entries of the

for each pair of

remain the same for all

. So the entries of the matrix

only need to be computed once for computing

values for different partitions.
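The point that the kernel matrix is computed once and reused across partitions can be sketched as follows. The example assumes a single covariate and a Gaussian kernel with scalar bandwidth `h`, for which each entry has a closed form; the names `overlap_matrix` and `criterion_from_w` and the toy data are hypothetical.

```python
import math

def overlap_matrix(z, h):
    """W[i][j]: integral of the product of the Gaussian kernels centred at z[i] and z[j]."""
    c = 1.0 / (2 * h * math.sqrt(math.pi))
    return [[c * math.exp(-((a - b) ** 2) / (4 * h * h)) for b in z] for a in z]

def criterion_from_w(w, labels, r, s):
    """KDE gap between groups r and s using block sums of the precomputed W."""
    idx_r = [i for i, g in enumerate(labels) if g == r]
    idx_s = [i for i, g in enumerate(labels) if g == s]
    def block(rows, cols):
        return sum(w[i][j] for i in rows for j in cols) / (len(rows) * len(cols))
    return block(idx_r, idx_r) + block(idx_s, idx_s) - 2 * block(idx_r, idx_s)

z = [0.2, 1.4, -0.7, 0.9, 2.3, -1.1]
w = overlap_matrix(z, h=0.6)                       # computed once
for labels in ([1, 1, 1, 2, 2, 2], [1, 2, 1, 2, 1, 2]):
    print(labels, criterion_from_w(w, labels, 1, 2))
```

Changing the partition only changes which entries of the matrix are summed together, so evaluating a new candidate partition never requires re-evaluating the kernel.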

For L = 2, the objective function . Recall that by the definition of partition vector

,

if the ith experimental unit is in Group 1, and

if the ith experimental unit is in Group 2. Then, the objective function

could be rewritten as

(13)

(13) where

. As a result, for L = 2, the optimisation problem (9) is reformulated as

(14a)

(14a)

(14b)

(14b)

(14c)

(14c)

This is a quadratic integer program that can be solved efficiently by the Gurobi Optimizer (Gurobi Optimization, LLC, 2020) for small- or moderately-sized experiments. For large-sized experiments or the more general case of L > 2, stochastic optimisation tools such as the genetic algorithm (Miller & Goldberg, 1995) and simulated annealing (Van Laarhoven & Aarts, 1987) can be adopted to solve the optimisation. Regardless of the optimisation method, the matrix

is computed only once in the optimisation procedure, which significantly cuts down the computation.
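For very small experiments the two-group problem can be solved by exhaustive search, which is a convenient way to see the quadratic structure without a solver. The sketch below is not the Gurobi formulation used in this paper: it swaps in brute-force enumeration, assumes a single covariate, a Gaussian kernel with scalar bandwidth `h` and equal group sizes, and codes the groups as signs +1 and -1 so that the criterion is proportional to a quadratic form in the sign vector. All function names are hypothetical.

```python
import math
import random
from itertools import combinations

def overlap_matrix(z, h):
    """W[i][j]: integral of the product of the Gaussian kernels centred at z[i] and z[j]."""
    c = 1.0 / (2 * h * math.sqrt(math.pi))
    return [[c * math.exp(-((a - b) ** 2) / (4 * h * h)) for b in z] for a in z]

def quad_form(w, signs):
    """s' W s for a sign vector s with entries +1 (Group 1) or -1 (Group 2)."""
    n = len(signs)
    return sum(signs[i] * w[i][j] * signs[j] for i in range(n) for j in range(n))

def best_equal_split(z, h):
    """Exhaustive search over equal-size splits; unit 0 is pinned to Group 1."""
    n = len(z)
    w = overlap_matrix(z, h)                      # computed once
    best_signs, best_val = None, float("inf")
    for rest in combinations(range(1, n), n // 2 - 1):
        group1 = {0, *rest}
        signs = [1 if i in group1 else -1 for i in range(n)]
        val = quad_form(w, signs)
        if val < best_val:
            best_signs, best_val = signs, val
    return best_signs, best_val / (n // 2) ** 2   # rescale to the KDE criterion

rng = random.Random(7)
z = [rng.gauss(0.0, 1.0) for _ in range(10)]
signs, value = best_equal_split(z, h=0.5)
print(signs, value)
```

The number of equal-size splits grows combinatorially with N, so this enumeration is only practical for a handful of units; it is meant as a reference point for checking a solver or a stochastic search on small instances.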

4.2. Choice of bandwidth matrix

The accuracy of the KDE is sensitive to the choice of the bandwidth matrix (Simonoff, 2012; Wand & Jones, 1993). Many methods have been developed to construct the bandwidth matrix $\mathbf{H}$ under various criteria (de Lima & Atuncar, 2011; Duong & Hazelton, 2005; Jones et al., 1996; Sain et al., 1994; Sheather & Jones, 1991; Wand & Jones, 1994; Zhang et al., 2006). Besides these methods, $\mathbf{H}$ can be chosen by some rules of thumb, including Silverman's rule of thumb (Silverman, 1986) and Scott's rule (Scott, 2015). But they may lead to a suboptimal KDE (Duong & Hazelton, 2003; Wand & Jones, 1993) due to the diagonality constraint of $\mathbf{H}$. Another rule of thumb uses a full bandwidth matrix as

$$\mathbf{H} = n^{-1/(d+4)}\,\hat{\boldsymbol{\Sigma}}^{1/2}, \tag{15}$$

where $\hat{\boldsymbol{\Sigma}}$ is the estimated covariance matrix with sample size $n$, and $d$ is the covariate dimension. It can be considered as a generalisation of Scott's rule (Härdle et al., 2012). To compromise between the computational cost and the accuracy of the KDE, we propose using the rule of thumb in (15). It is easy to compute and leads to a more accurate KDE compared to the simple diagonal matrix. We did a series of numerical comparisons to test the impact of $\mathbf{H}$ on the KDE-based partition (not reported here due to the space limit). The results show that (15) leads to similar partitions compared with the more computationally demanding methods such as cross-validation (Duong & Hazelton, 2005; Sain et al., 1994) and Bayesian methods (de Lima & Atuncar, 2011; Zhang et al., 2006). Similar observations were found in the work by Anderson et al. (1994). As pointed out in Anderson et al. (1994), the covariate balancing criterion aims at measuring the discrepancy between the distributions from which the samples are drawn, but not at precisely estimating those distributions. Although the bandwidth matrix $\mathbf{H}$ plays an important role in the estimation of a distribution, it is not surprising that the KDE-based partition is robust to the choice of $\mathbf{H}$.
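As a small illustration of a rule-of-thumb bandwidth, the sketch below computes the diagonal version of Scott's rule, in which each coordinate gets the bandwidth given by its sample standard deviation times n to the power -1/(d + 4). This is the simpler diagonal rule mentioned above, not the full-matrix rule recommended in this section, and the function name and toy data are hypothetical.

```python
import statistics

def scott_bandwidths(rows):
    """Per-coordinate Scott bandwidths: sd_j * n ** (-1 / (d + 4))."""
    n, d = len(rows), len(rows[0])
    factor = n ** (-1.0 / (d + 4))
    columns = list(zip(*rows))                    # one tuple per covariate
    return [statistics.stdev(col) * factor for col in columns]

rows = [(float(i), float(2 * i)) for i in range(32)]   # toy data with d = 2
print(scott_bandwidths(rows))
```

The full-matrix variant replaces the per-coordinate standard deviations by a square root of the sample covariance matrix, which additionally accounts for correlation between covariates.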

5. Examples

Example 1. Simulation Example. We compare the performance of the proposed KDE-based partition with complete randomisation and rerandomisation (Morgan & Rubin, 2012) through a simulation example with d = 2 covariates. Three different types of mean function h are considered.

Model 1. Linear basis:

,

Model 2. Quadratic basis:

,

Model 3. Sinusoidal model:

,

The notation x is the indicator of the treatment level assignment. To generate data from these models, we need to specify the values of the parameters, including the treatment effect α, and others

's,

's, ϕ and θ. Let

for all models. In Model 1 and Model 2, the regression coefficients

's,

's and θ are sampled from a uniform distribution

. In Model 3,

,

,

and

are randomly generated from

, and ϕ is randomly generated from

. The observed covariates are generated from multivariate standard normal distribution

. All these values are fixed through the simulations. Since

is only affected by the partition methods and is invariant to the variance of the noise

, it does not matter to the comparison of different methods. Therefore in this and the next example, we set

.

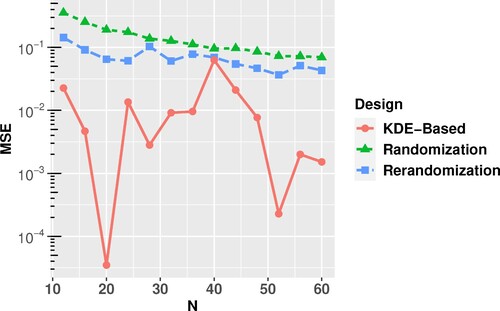

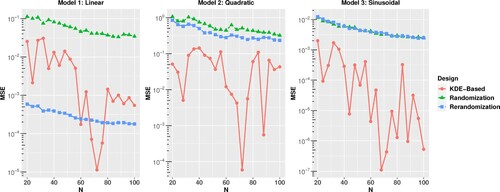

To compare the proposed approach with the random methods, m = 1000 random partitions are generated via each of the complete randomisation and rerandomisation approaches. For each partition, the treatment levels are randomly assigned to the two groups, so that m = 1000 designs are generated for each of the two random approaches. Since the proposed KDE-based partition is an optimisation-based method, the partition is deterministic. With all possible treatment-level assignments, there are only two designs. For each design obtained from the three methods, we generate the response data from the three models with the fixed parameters and covariates. Then, the estimated mean squared error of the difference-in-mean estimator is calculated for increasing sample sizes from N = 20 to N = 100, and the results are plotted in Figure 1. When the true relationship between the response y and the covariates is linear, rerandomisation outperforms the other two methods. Morgan and Rubin (2012) showed that, compared to complete randomisation, rerandomisation with the Mahalanobis distance criterion can reduce the variance of the difference-in-mean estimator significantly when the mean function h contains only the linear terms of the covariates. When a more complicated relationship such as Model 2 or 3 is considered, the KDE-based partition outperforms complete randomisation and rerandomisation by a large margin. In practice, the true mean function h is rarely as simple as a linear function and usually contains higher-order terms of the covariates. Thus, from a practical perspective, we suggest that the KDE-based partition is a better choice.

Figure 1. Comparison of the estimated mean squared error of difference-in-mean estimator using three partition methods for Example 1.

We further explore the performance of the proposed KDE-based partition in matching the empirical distributions of the covariates in the two groups. We compare the difference of the empirical distributions under complete randomisation, rerandomisation, and the KDE-based partition. The discrepancies of the first and second raw moments of the two empirical distributions over the m = 1000 partitions are calculated and reported in Table 1. In general, rerandomisation performs the best in matching the means of the empirical distributions, which to some extent explains why it performs the best under Model 1 (the model with main effects only). The KDE-based partition consistently outperforms complete randomisation and is superior to the other two methods for the second moments, since it matches the approximated density functions rather than just the first and second moments.

Table 1. Discrepancy of moments under different partition methods.

Example 2. Real Data Example. We compare the KDE-based partition with complete randomisation and rerandomisation using a real data set. The data set is from a diabetes study (Efron et al., 2004). It contains 422 observations of d = 10 covariates and a univariate response. The covariates are age, sex, body mass index, average blood pressure, and six blood serum measurements of the patients, and the response is a quantitative measure of disease progression. The data are only observational data and do not contain any experimental factors.

To use these data, we do not assume any functional form for the mean function h. Instead, we assume the observed quantitative measure of disease progression is the sum

. Let the true value of the treatment effect

. Given treatment assignment x, the response data including the treatment effect is,

.

For different values of N, ranging from N = 12 to N = 60, m = 1000 partitions are generated using each of complete randomisation and rerandomisation. As explained before, for each N value, the optimal KDE-based partition is deterministic. For each of the partitions obtained from the three methods, we randomly assign treatment settings to the two partitioned groups to obtain the design, and then compute the response y accordingly. The estimated mean squared error of the difference-in-mean estimator is calculated for each N, and the results are shown in Figure 2. The KDE-based partition outperforms the other two partition methods for all sample sizes.

6. Discussion

In this paper, we introduce a KDE-based partition method for controlled experiments. By adopting a smooth approximation of the covariate empirical distributions, we propose a new covariate balancing criterion, which measures the difference between the distributions of the covariates in the partitioned groups. We use quadratic integer programming to construct the partition that minimises the covariate balancing criterion for two-level experiments. If the number of treatment settings is more than two, other stochastic optimisation methods can be applied. The design generated via the KDE-based partition can regulate the variance of the difference-in-mean estimator. Compared with the complete randomisation and rerandomisation methods, the simulation and real data examples show that the proposed method leads to a more accurate difference-in-mean estimate of the treatment effect when the underlying model involves more complicated functions of the covariates. The simulation example also confirms that the covariate distributions of the groups are better matched using the proposed method.
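To illustrate why such a criterion leads to a quadratic integer programme, consider one simple instance, which is an assumption made here for illustration and not necessarily the exact criterion defined in the earlier sections: the integrated squared difference between two Gaussian kernel density estimates with a common scalar bandwidth h and equal group sizes. With group labels s_i in {-1, +1}, this quantity has the closed form s'Ks / (N/2)^2, where K_ij is the N(0, 2h^2 I) density evaluated at z_i - z_j. The sketch below minimises it by brute-force enumeration, which is feasible only for very small N; the function names are hypothetical and no integer programming solver is used.

```python
import itertools
import numpy as np

def gaussian_cross_kernel(z, h):
    """K[i, j] = N(z_i - z_j; 0, 2 h^2 I), the integral of the product of two
    Gaussian kernels with bandwidth h centred at z_i and z_j."""
    z = np.asarray(z, dtype=float)
    d = z.shape[1]
    sq = ((z[:, None, :] - z[None, :, :]) ** 2).sum(axis=2)
    return (4.0 * np.pi * h ** 2) ** (-d / 2.0) * np.exp(-sq / (4.0 * h ** 2))

def kde_imbalance(K, s):
    """Integrated squared difference of the two group KDEs for labels s = +/-1
    with equal group sizes."""
    n_half = len(s) / 2.0
    return float(s @ K @ s) / n_half ** 2

def best_balanced_partition(z, h):
    """Enumerate all balanced partitions (unit 0 fixed in the +1 group to
    remove the label symmetry) and return the minimiser."""
    n = len(z)
    K = gaussian_cross_kernel(z, h)
    best_s, best_val = None, np.inf
    for rest in itertools.combinations(range(1, n), n // 2 - 1):
        s = -np.ones(n)
        s[[0, *rest]] = 1.0
        val = kde_imbalance(K, s)
        if val < best_val:
            best_s, best_val = s, val
    return best_s, best_val

rng = np.random.default_rng(2)
z = rng.normal(size=(10, 2))          # hypothetical covariates, N = 10
s_opt, val_opt = best_balanced_partition(z, h=0.5)   # h = 0.5 is arbitrary
```

Because the objective is a quadratic form in the binary labels, the enumeration can be replaced by a quadratic integer programming solver for larger N.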

It is worth pointing out that, when the KDE-based partition is used, the classical hypothesis testing procedure for the difference-in-mean estimator is not applicable, since the partition is a deterministic solution and the random treatment assignment yields only two different designs when L = 2. Fortunately, a testing procedure based on the bootstrap has been established and shown to be powerful (Bertsimas et al., 2015) for the sharp null hypothesis (Rubin, 1980) that the treatment has no effect on any experimental unit. The detailed bootstrap algorithm is given in Algorithm 1.

One limitation of the proposed KDE-based partition is the ‘curse of dimensionality’. It is well known that KDE may perform poorly when the dimension of the covariates is large relative to the sample size. To overcome this problem, the experimenter can add a dimension reduction step prior to the partition of the experimental units. Based on the properties of the covariate data, one can choose an appropriate dimension reduction method, such as principal component analysis (PCA) or one of its nonlinear variants. For instance, using PCA, the experimenter can select a small but sufficient number of principal components and apply the KDE-based partition to the linearly transformed covariates of a much lower dimension. We also want to alert readers to another limitation of the KDE-based partition. Similar to other optimal covariate balancing approaches, such as Bertsimas et al. (2015) and Kallus (2018), the KDE-based partition assumes that all covariates that influence the response are known to the experimenter and that their data are included in the observed covariate data. Otherwise, if there are latent but important covariates, the optimal partition methods, including the proposed KDE-based partition, might lead to an estimator with large variance, because they deterministically balance the experimental units based on incomplete covariate information. In this case, we recommend the randomisation or rerandomisation methods.
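The dimension reduction step mentioned above can be sketched with a plain PCA computed from the singular value decomposition of the centred covariate matrix. The function name `pca_reduce` and the 90% explained-variance rule for choosing the number of components are assumptions made for illustration; the experimenter may prefer another selection rule, or may standardise the covariates first when they are measured on different scales.

```python
import numpy as np

def pca_reduce(z, var_explained=0.9):
    """Project covariates onto the leading principal components.

    z             : (N, d) covariate matrix
    var_explained : smallest cumulative variance ratio to retain
    Returns the (N, k) component scores and the number k of components kept.
    """
    z = np.asarray(z, dtype=float)
    centred = z - z.mean(axis=0)
    # Rows of vt are the principal directions; sing**2 is proportional to
    # the variance explained by each direction.
    _, sing, vt = np.linalg.svd(centred, full_matrices=False)
    ratio = np.cumsum(sing ** 2) / np.sum(sing ** 2)
    k = int(np.searchsorted(ratio, var_explained)) + 1
    k = min(k, len(sing))   # guard against rounding when var_explained is 1
    return centred @ vt[:k].T, k

rng = np.random.default_rng(3)
# Hypothetical covariates: 10 observed dimensions driven by 2 latent factors.
latent = rng.normal(size=(30, 2))
z = latent @ rng.normal(size=(2, 10)) + 0.01 * rng.normal(size=(30, 10))
scores, k = pca_reduce(z)   # scores would then be passed to the partition step
```

The partition is then constructed on `scores` instead of the original covariates, so the kernel density estimates are formed in k rather than d dimensions.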

The proposed KDE-based partition method can be used in other scenarios beyond controlled experiments. Essentially, we have proposed a density-based partition method that minimises the differences of data between groups. It can be incorporated into any statistical tool that needs to partition data into similar groups, such as cross-validation, divide-and-conquer, etc. We hope to explore these directions in the future. In this work, we do not assume any interaction terms between the covariates and the treatment effect. However, interaction effects are likely to occur in practice. Another interesting direction is the partition of the experimental units considering the interaction terms in the model.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Yiou Li

Dr Yiou Li is an Assistant Professor in the Department of Mathematical Sciences at DePaul University. She obtained her MS in Mathematical Finance and PhD in Applied Mathematics from the College of Computing at Illinois Institute of Technology. Dr Li's research focuses on various areas in Statistics, including statistical design and analysis of physical and computer experiments, and statistical modeling and data analysis in economics and finance. She has publications and submitted papers in statistical journals including International Journal for Uncertainty Quantification, Journal of Statistical Planning and Inference, Canadian Journal of Statistics, and Statistica Sinica.

Lulu Kang

Dr Lulu Kang is an Associate Professor in the Department of Applied Math at Illinois Institute of Technology (IIT). She obtained her MS in Operations Research and PhD in Industrial Engineering from the Stewart School of Industrial and Systems Engineering at Georgia Institute of Technology. Dr Kang has worked on various areas in Statistics, including uncertainty quantification, statistical design and analysis of experiments, and Bayesian computational statistics. She has publications and submitted papers in top statistical journals including Technometrics, SIAM/ASA Journal on Uncertainty Quantification, and Statistica Sinica. Dr Kang is currently an associate editor for SIAM/ASA Journal on Uncertainty Quantification and Technometrics.

Xiao Huang

Dr Xiao Huang obtained his PhD in Applied Mathematics and a Master's degree in Mathematical Finance from Illinois Institute of Technology. His PhD advisor was Dr Lulu Kang. He collaborated with Dr Lulu Kang and Dr Yiou Li on this work during his PhD study at Illinois Tech. Since graduating from Illinois Tech in 2019, Dr Huang has worked as a Data Scientist at United Airlines.

References

- Anderson, N. H., Hall, P., & Titterington, D. M. (1994). Two-sample test statistics for measuring discrepancies between two multivariate probability density functions using kernel-based density estimates. Journal of Multivariate Analysis, 50(1), 41–54. https://doi.org/10.1006/jmva.1994.1033

- Bernstein, S. (1927). Sur l'extension du théorème limite du calcul des probabilités aux sommes de quantités dépendantes. Mathematische Annalen, 97(1), 1–59. https://doi.org/10.1007/BF01447859

- Bertsimas, D., Johnson, M., & Kallus, N. (2015). The power of optimization over randomization in designing experiments involving small samples. Operations Research, 63, 868–876. https://doi.org/10.1287/opre.2015.1361

- Blackwell, M., Iacus, S., King, G., & Porro, G. (2009). cem: Coarsened exact matching in Stata. The Stata Journal, 9(4), 524–546. https://doi.org/10.1177/1536867X0900900402

- de Lima, M. S., & Atuncar, G. S. (2011). A Bayesian method to estimate the optimal bandwidth for multivariate kernel estimator. Journal of Nonparametric Statistics, 23(1), 137–148. https://doi.org/10.1080/10485252.2010.485200

- Duong, T., & Hazelton, M. (2003). Plug-in bandwidth matrices for bivariate kernel density estimation. Journal of Nonparametric Statistics, 15(1), 17–30. https://doi.org/10.1080/10485250306039

- Duong, T., & Hazelton, M. L. (2005). Cross-validation bandwidth matrices for multivariate kernel density estimation. Scandinavian Journal of Statistics, 32(3), 485–506. https://doi.org/10.1111/sjos.2005.32.issue-3

- Efron, B., Hastie, T., Johnstone, I., & Tibshirani, R. (2004). Least angle regression. The Annals of Statistics, 32(2), 407–499. https://doi.org/10.1214/009053604000000067

- Funk, M. J., Westreich, D., Wiesen, C., Stürmer, T., Brookhart, M. A., & Davidian, M. (2011). Doubly robust estimation of causal effects. American Journal of Epidemiology, 173(7), 761–767. https://doi.org/10.1093/aje/kwq439

- Gurobi Optimization, LLC (2020). Gurobi optimizer reference manual.

- Härdle, W. K., Müller, M., Sperlich, S., & Werwatz, A. (2012). Nonparametric and semiparametric models. Springer Science & Business Media.

- Imbens, G. W., & Rubin, D. B. (2015). Causal inference for statistics, social, and biomedical sciences: An introduction. Cambridge University Press.

- Jones, M. C., Marron, J. S., & Sheather, S. J. (1996). Progress in data-based bandwidth selection for kernel density estimation. Computational Statistics, 11, 337–381.

- Kallus, N. (2018). Optimal a priori balance in the design of controlled experiments. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 80(1), 85–112. https://doi.org/10.1111/rssb.12240

- McHugh, R., & Matts, J. (1983). Post-stratification in the randomized clinical trial. Biometrics, 39(1), 217–225. https://doi.org/10.2307/2530821

- Miller, B. L., & Goldberg, D. E. (1995). Genetic algorithms, tournament selection, and the effects of noise. Complex Systems, 9, 193–212.

- Morgan, K. L., & Rubin, D. B. (2012). Rerandomization to improve covariate balance in experiments. The Annals of Statistics, 40(2), 1263–1282. https://doi.org/10.1214/12-AOS1008

- Morgan, K. L., & Rubin, D. B. (2015). Rerandomization to balance tiers of covariates. Journal of the American Statistical Association, 110(512), 1412–1421. https://doi.org/10.1080/01621459.2015.1079528

- Pearl, J. (2000). Causality: Models, reasoning, and inference. Cambridge University Press.

- Rosenbaum, P. (2017). Observation and experiment: An introduction to causal inference. Harvard University Press.

- Rosenbaum, P. R., & Rubin, D. B. (1983). The central role of the propensity score in observational studies for causal effects. Biometrika, 70, 41–55. https://doi.org/10.1093/biomet/70.1.41

- Rubin, D. B. (1980). Randomization analysis of experimental data: The Fisher randomization test comment. Journal of the American Statistical Association, 75, 591–593.

- Rubin, D. B. (2005). Causal inference using potential outcomes: Design, modeling, decisions. Journal of the American Statistical Association, 100(469), 322–331. https://doi.org/10.1198/016214504000001880

- Sain, S. R., Baggerly, K. A., & Scott, D. W. (1994). Cross-validation of multivariate densities. Journal of the American Statistical Association, 89(427), 807–817. https://doi.org/10.1080/01621459.1994.10476814

- Scott, D. W. (2015). Multivariate density estimation: Theory, practice, and visualization. Wiley.

- Sheather, S. J., & Jones, M. C. (1991). A reliable data-based bandwidth selection method for kernel density estimation. Journal of the Royal Statistical Society. Series B (Methodological), 53(3), 683–690. https://doi.org/10.1111/rssb.1991.53.issue-3

- Silverman, B. W. (1986). Density estimation for statistics and data analysis. CRC Press.

- Simonoff, J. S. (2012). Smoothing methods in statistics. Springer Science & Business Media.

- Van Laarhoven, P. J., & Aarts, E. H. (1987). Simulated annealing. In Simulated annealing: Theory and applications (pp. 7–15). Springer.

- Wand, M. P., & Jones, M. C. (1993). Comparison of smoothing parameterizations in bivariate kernel density estimation. Journal of the American Statistical Association, 88(422), 520–528. https://doi.org/10.1080/01621459.1993.10476303

- Wand, M. P., & Jones, M. C. (1994). Multivariate plug-in bandwidth selection. Computational Statistics, 9, 97–116.

- Wu, C. J., & Hamada, M. S. (2011). Experiments: Planning, analysis, and optimization. Wiley.

- Xie, H., & Aurisset, J. (2016). Improving the sensitivity of online controlled experiments: Case studies at Netflix. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 645–654). ACM.

- Zhang, X., King, M. L., & Hyndman, R. J. (2006). A Bayesian approach to bandwidth selection for multivariate kernel density estimation. Computational Statistics & Data Analysis, 50(11), 3009–3031. https://doi.org/10.1016/j.csda.2005.06.019