Abstract

Numerous governments worldwide have issued national artificial intelligence (AI) strategies in the last five years to deal with the opportunities and challenges posed by this technology. However, a systematic understanding of the roles and functions that governments are taking on is lacking in the academic literature. Therefore, this research uses qualitative content analysis and Latent Dirichlet Allocation (LDA) topic modeling to investigate the texts of 31 strategies from across the globe. The findings of the qualitative content analysis highlight thirteen functions of the state: human capital, ethics, R&D, regulation, data, private sector support, public sector applications, diffusion and awareness, digital infrastructure, national security, national challenges, international cooperation, and financial support. We combine these functions into three general themes representing the state’s role: development, control, and promotion. The LDA topic modeling results also reflect these themes. Each general theme is present in every national strategy’s text, but the proportion of the text it occupies differs. The combined typology based on the two methods reveals that countries from the post-Soviet bloc and East Asia prioritize the theme “development,” highlighting the high level of the state’s involvement in AI innovation. The countries from the EU focus on “control,” which reflects the union’s hard stance on AI regulation, whereas countries like the UK, the US, and Ireland emphasize a more hands-off governance arrangement with the leading role of the private sector by prioritizing “promotion.”

1. Introduction

Academic investigation of algorithm-driven governance (Yeung 2018) usually focuses on two distinct domains (Gritsenko and Wood 2022). The first line of research explores how algorithms are used as a tool of governance, that is, how algorithms can alter human behavior (Eyert, Irgmaier, and Ulbricht 2022). Researchers interested in these issues investigate the intricate relations between the practice of algorithmic surveillance and human rights (Kosta 2022), the role of screen-level bureaucrats’ agency in algorithmic decision-making loops (Binns 2022), and the interplay between policy decisions and algorithmic recommender systems (Coglianese and Lehr 2018; Katzenbach and Ulbricht 2019). Thus, they tend to perceive algorithms as unique tools through which the population is regulated (Bellanova and Goede 2022).

The second line of research is interested in the emerging practice of regulation and governance of algorithms (Gritsenko and Wood 2022). The current discussion about the need to regulate the tech industry, and AI technology in particular, tends to focus on the choice of regulatory instruments, from command-and-control regulation to industry self-regulation (Yeung and Bygrave 2022). The lack of reliable data complicates regulatory interventions for emerging technologies, forcing governments to adopt flexible governing arrangements (Taylor 2020), often realized in the form of soft law (Gutierrez and Marchant 2021). Soft law approaches to regulating emerging technologies can be understood as a “shorthand term to cover a variety of non-binding norms and techniques for implementing them” (Abbott, Marchant, and Corley 2012). In its weakest form, soft law comprises numerous standards, principles, guidelines, and best practices, which set certain expectations but are not directly enforceable by the government (Marchant 2019). This regulatory approach usually relies on decentralizing power among private, public, and other types of actors and institutions (Abbott 2013). Recent research projects focused on studying soft AI law identified over 600 soft law documents produced by different stakeholders under different jurisdictions (Gutierrez and Marchant 2021).

Though the academic study of soft AI law is in its infancy, there have already been attempts to study the documents systematically. Several researchers found a high degree of similarity between AI ethics principles in documents released by different stakeholders (Fjeld et al. 2020; Hagendorff 2020; Jobin, Ienca, and Vayena 2019). However, other researchers highlight that certain ethical principles will come into conflict with each other when implemented in practice (Tzachor et al. 2020; Whittlestone et al. 2019), that there are ambiguities about the meaning of ethical principles in different documents (Zeng, Lu, and Huangfu 2018) and their interpretation in different cultural contexts (ÓhÉigeartaigh et al. 2020), and that there are problems associated with translating high-level principles into practice (Morley, Elhalal, et al. 2021; Morley et al. 2020; Morley, Kinsey, et al. 2021).

Nevertheless, while scholarly attention is paid to investigating ethical principles for AI, another vital type of policy document, the national AI strategy, remains under-investigated. There have been attempts to systematically analyze policy initiatives from the European national AI strategies, which were grouped into five policy areas: human capital, from the lab to the market, networking, regulation, and infrastructure (Van Roy et al. 2021). However, this study does not include any countries that are not part of the European Union and are not developing their strategies according to European standards. This limited scope prevents it from offering a genuinely global analysis of these documents, a limitation shared by Radu’s paper (Radu 2021), which includes only twelve strategies. Other projects analyze the sociotechnical imaginaries presented in the discourse of four national AI strategies (Bareis and Katzenbach 2022), group strategies together with AI ethics guidelines produced by the private sector (Schiff et al. 2020), focus on the comparison between two countries (China and India) (Kumar 2021), or concentrate on an in-depth analysis of the national AI strategy of a single country (Chatterjee 2020; Roberts et al. 2021). van Berkel et al. (2020) analyze 25 documents and include national AI strategies of countries outside North America and Europe, but their analysis is limited to a quantitative approach, which “does not allow for a nuanced understanding of the differences in perspective when discussing these topics.”

Another strand of research investigates the state’s role as framed in the discussion of AI governance, based on the analysis of 49 documents. The results show that the state takes three primary roles in this process: acting as a “promoter” or “facilitator” to utilize the benefits of AI for the public good; as a “guarantor” to mitigate the risks stemming from this technology; and as a “moderator” and “enabler of societal change” to enable the discussion about AI risks and benefits among different stakeholders (Ulnicane et al. 2021). These categories are adopted from the work of Borras and Edler, who first introduced four governance modes of socio-technical systems based on the differences between the role of state and non-state actors in this process and the nature of their coordination (hierarchical and non-hierarchical). These modes are self-regulation (non-state actors, non-hierarchical); oligopoly (non-state actors, hierarchical); state as primus inter pares (state actors, non-hierarchical); and command and control (state actors, hierarchical) (Borras and Edler 2014). This typology was further elaborated by adding 13 roles of the state within these four governance modes: observer, warner, mitigator, opportunist, facilitator, lead-user, enabler of societal engagement, gatekeeper, promoter, moderator, initiator, guarantor, and watchdog (Borrás and Edler 2020).

In this study, we develop a typology of the priorities in the state’s roles in governing AI based on the analysis of 31 national AI strategies. By delving into the broad categories of “promoter” and “facilitator,” “guarantor,” “moderator,” and “enabler of societal change,” we investigate which country emphasizes the development of AI, the control over AI, and the promotion of AI.

We adopt and combine two research methodologies, qualitative content analysis and Latent Dirichlet Allocation (LDA) topic modeling, for this task. These inductive methodologies were combined in a mixed-method design to offset the potential subjectivity of qualitative content analysis and the lack of nuanced textual interpretation in LDA topic modeling; such designs have proven effective for policy research involving LDA topic modeling (Isoaho, Gritsenko, and Mäkelä 2021).

We first analyze each strategy’s text qualitatively to determine the typology of the state’s functions. The 13 categories of functions are then grouped into three broad themes: development, control, and promotion. The proportion of each theme in one document becomes the first pillar of the combined typology. LDA topic modeling is then used to quantitatively model three topics within the corpus of the data. Each document is a combination of three topics, reflective of development, control, and promotion themes. The proportion of each topic in each document becomes the second pillar of the combined typology. The countries are finally grouped into three categories of development, control, and promotion based on the combined prioritization of one of these themes in its text.

2. Qualitative content analysis

2.1. Methodology and data

To compile a representative database of national AI strategies, three databases were consulted:

AI Governance Database by Nesta (2022)

The OECD AI Policy Observatory (2022)

AI Ethics Guidelines Global Inventory by Algorithm Watch (2022)

Based on the consultation of the databases, 41 national AI strategies have been gathered. However, it was impossible to find the text of seven strategies in English (Argentina, Brazil, Cyprus, Italy, Latvia, Poland, and Slovenia), so they were excluded from the analysis. Additionally, the strategies of Spain, France, and Slovakia were excluded from the analysis because their scope goes beyond just AI.

The content analysis technique was applied to each document through manual coding. During the coding process, all text relevant to the functions of the government and its actions was inductively coded (Wicks 2017). After coding the documents, the codes were grouped to construct broader categories.

2.2. General trends in the themes of national AI strategies

The search identified 31 national AI strategies (Table 1). The data reveal that after slow growth in 2017 (one document) and 2018 (four documents), the number of national AI strategies published increased significantly in 2019 (17 documents) and then decreased rapidly in 2020 (six documents) and 2021 (three documents).

Table 1. National AI strategies of 31 countries.

Regarding geographic distribution, the data show that most strategies are published by more economically developed countries in Europe, North America, and Asia. Many countries in the European Union have published national AI strategies (n = 16), which can be explained by a union-level initiative to encourage the development of national AI strategies. The big emerging economies of China, India, and Russia have also published their strategies, while Uruguay is the only country from South America included in the analysis.

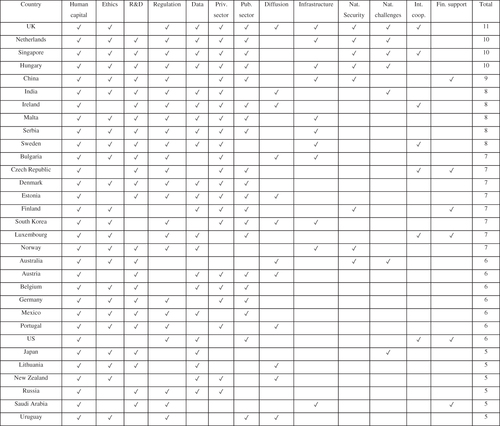

Thirteen overarching themes have emerged from the content analysis of the state’s functions stated in the national AI strategies. These themes are (by the frequency of the number of strategies in which they are featured): Human capital, Research and development (R&D), Regulation, Support for the private sector, Ethics, Applications in the public sector, Data, Digital infrastructure, National challenges, Diffusion and awareness, Financial support, National security, and International cooperation ().

The only theme that was touched upon in every national strategy analyzed in this project is Human Capital. Six other themes appeared in more than half of all the documents. These include Ethics (24/34), R&D (24/34), Regulation (24/34), Data (22/34), Private sector support (22/34), and Public sector applications (20/34).

No country has mentioned all thirteen themes in its national AI strategy. The UK’s AI strategy has 11 themes in its text, which is more than any other country. The UK’s strategy is also the latest addition to this analysis, as it was published on September 22, 2021. The strategies of the Netherlands and Singapore include ten themes, while China’s and Hungary’s include nine. The number of themes mentioned in a strategy does not reflect its quality (some strategies have fewer themes but are much more detailed and comprehensive in their approach) but rather shows the scope of the strategy’s ambition, that is, how many domains it tries to cover.

A detailed evaluation of the thematic content for each of the thirteen categories is presented in the next section.

2.3. Discussion of themes

Human capital (Table 2) is the most prevalent theme in national AI strategies. All 31 strategies touched upon this theme in their text. In the context of this research, human capital can be understood as different measures aimed at attracting and growing AI talent within the country. All strategies propose developing new educational programs for different levels of the educational system and creating training and re-training programs for adults, including initiatives for life-long learning. Several strategies also propose using AI tools to personalize educational services and utilizing AI technologies for university management. The establishment of research centers for the attraction of talent is also emphasized, alongside the creation of AI faculty positions, including shared positions between academia and industry. Different types of talent attraction programs are also introduced, including programs aimed specifically at foreign talent or at bringing local talent back to the home country. Many countries propose developing online educational platforms and AI courses for talent development and providing teachers with training courses. Discussion of ethical issues related to AI in education, informal training institutions and financial incentives for re-skilling, increasing women researchers’ participation in AI, improving the ICT environment at schools, AI education for the military, and a new visa regime seem to be more country-specific initiatives.

Table 2. Human capital.

Ethics (Table 3) is the second most common theme. This theme refers to different non-enforceable and non-binding value-based principles through which the technology of AI will be governed and regulated. Therefore, most strategies emphasize the importance of developing a list of ethical principles, creating a code of ethics, and establishing an ethics committee. Many strategies highlight the importance of international cooperation on ethical principles and emphasize the need for greater transparency and responsibility regarding the development and application of AI and ethical principles. Some countries articulate the importance of developing AI technologies in a more sustainable and energy-efficient manner, guaranteeing inclusivity, diversity, and human rights protection through corporate governance mechanisms.

Table 3. Ethics.

R&D (Table 4) is the third most common theme discussed in the strategies. It refers to different policy instruments aimed at developing a solid research basis for AI in the country. Most countries are determined to cultivate AI research by creating general-purpose research centers dedicated to this technology, with different funding schemes and fellowships for researchers at different career stages. Other countries articulate their intentions in a broader sense by wanting to establish the foundation for basic and applied science and, through that, encourage the development of the academic discipline of AI, increase academia-industry collaboration, and accelerate the internationalization of this academic discipline. Certain countries also see academic publishing and the provision of more computing resources as ways to advance AI research.

Table 4. R&D.

Regulation (Table 5) is the fourth most common theme in the strategies. It stands for different rules-based approaches to regulating the technology of AI. Legal frameworks, guidelines, and standards are often discussed in the text of national AI strategies as the necessary first step in developing a regulatory regime for AI. This step can be supported by the creation of a risk-assessment framework and a mechanism to monitor AI impacts on society, rules monitoring, clear transparency and accountability requirements, and the alignment of local standards with international standards, including data standards. Other suggestions propose revising national legislation broadly to make it suitable for AI and updating intellectual property law, personal data law, and tax and competition policy. Several countries also include the creation of a regulatory sandbox for AI in their national AI strategy.

Table 5. Regulation.

Data (Table 6) is the fifth most common theme. It refers to different policy measures to develop data infrastructure and policy mechanisms to utilize it. Creating large sets of open and usable data for AI is one of the main goals of several strategies, including giving access to data from the public sector. Creating data-sharing platforms between sectors or a data marketplace is another popular tool to facilitate the utilization of different data, while the establishment of regulatory frameworks and oversight institutions is discussed in the context of data regulation. The creation of small data AI solutions and reuse of data, utilization of public data for climate change, and the creation of data and network infrastructure are also discussed.

Table 6. Data.

Support for the private sector (Table 7) is the sixth most common theme in the strategies. It includes different instruments to support the private sector in applying and developing AI solutions. Providing various sources of funding for private companies through grants, accelerators, and technology support programs is the most direct way for the government to support private industry in utilizing AI technologies. Other efforts concentrate on creating a channel for dialogue between the government and the industry about the importance of this technology, setting up an innovation hub and pilot project testing zones, championing local AI organizations abroad, removing legal obstacles to innovation, setting up liaison offices abroad, and creating an AI marketplace.

Table 7. Support for the private sector.

Public sector applications (Table 8) is the seventh most common theme discussed in the documents. It represents how the government can utilize AI technology to provide public services. The strategies commonly discuss a general direction of integrating AI technologies into different public administration domains through pilot projects. They also include creating a special AI task force within the government to lead this process and developing a procurement mechanism for AI. Raising awareness amongst government employees about the technology of AI through different channels is another domain of action discussed in the strategies, with some countries emphasizing the importance of using AI technologies for public safety and security.

Table 8. Public sector applications.

Diffusion and awareness (Table 9) is the eighth most common theme; however, unlike the previous seven themes, it and the remaining five themes each appear in less than half of all the strategies included in the analysis. The theme of diffusion and awareness incorporates different instruments aimed at spreading information about AI to the broader public for the potential utilization of this technology by other actors. The key awareness-spreading activities include promotional discussions, competitions, hackathons, massive open online courses, nominating an AI ambassador, innovation missions, and a repository of AI challenges.

Table 9. Diffusion and awareness.

Digital infrastructure (Table 10) is the ninth most discussed theme in the strategies. It refers to different governmental instruments aimed at developing the infrastructural solutions needed for AI. This infrastructure includes high-performance computing and supercomputers, data centers, platforms and marketplaces, infrastructure for network connectivity, and 5G. Smaller countries also tend to emphasize the importance of local language data resources.

Table 10. Digital infrastructure.

The applications of AI technologies in the context of national security (Table 11) are discussed in eight documents included in this analysis. This theme covers proposals such as investing in defense AI and developing law enforcement applications, military-civilian integration strategy, cybersecurity and defense AI strategies, border clearance, and cyber resilience.

Table 11. National security.

Only seven AI strategies discuss national challenges (Table 12) and international cooperation (Table 13). National challenges include specific measures to support AI development for tackling the most pressing societal issues in the country, which include healthcare, agriculture, education, smart cities, transport and logistics, energy, manufacturing, infrastructure and disaster prevention, and security. The international cooperation theme refers to governmental actions in the international arena, such as participating in international policy groups and discussions, conducting multilateral advocacy, sharing expertise and creating open-source projects, conducting multinational AI R&D projects, developing international AI and data standards, and promoting local AI research internationally.

Table 12. National challenges.

Table 13. International cooperation.

While most countries discuss different financial support mechanisms as part of other themes addressed in this paper, six countries set providing financial support for AI technologies as one of the key goals of their AI strategies (Table 14). This support comes mostly from the government directly but also from public-private partnerships.

Table 14. Financial support.

3. LDA topic modeling

3.1. Methodology and data

Topic modeling is an approach to identifying topics that best describe the content of textual documents (Debnath and Bardhan 2020). The topics (lists of words) are derived from latent relations between words and their appearance throughout the documents. Each document is understood as a combination of different topics (Tong and Zhang 2016). The machine learning models cannot understand the meanings and concepts of the sentences but rather treat texts as combinations of words drawn from different baskets of words, where each basket corresponds to one topic (Jelodar et al. 2019).

Topic modeling based on LDA is an unsupervised machine learning technique that determines clusters of words (topics) from a set of documents. It then analyzes the proportion of these clusters of words in each document (Debnath and Bardhan 2020). Words have a higher probability of belonging to a specific topic if they frequently co-occur in the corpus of texts, which allows for determining what group of words comprises what topic (Altaweel, Bone, and Abrams 2019).
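To make the mechanics concrete, the following is a minimal sketch of LDA fitted with collapsed Gibbs sampling on a toy corpus. It is not the pipeline used in this study, which relied on R packages; the function name `lda_gibbs`, the hyperparameter values, and the three toy documents are assumptions chosen purely for illustration.

```python
import random
from collections import defaultdict


def lda_gibbs(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Toy collapsed Gibbs sampler for LDA (illustrative, not optimized).

    docs is a list of token lists. Returns (doc_topic, topic_word), where
    doc_topic[d][k] is the smoothed share of topic k in document d and
    topic_word[k][w] is the smoothed probability of word w under topic k.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    vocab_size = len(vocab)
    n_dk = [[0] * num_topics for _ in docs]                # topic counts per document
    n_kw = [defaultdict(int) for _ in range(num_topics)]   # word counts per topic
    n_k = [0] * num_topics                                 # token totals per topic
    assignments = []                                       # topic of every token

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        doc_assignments = []
        for w in doc:
            k = rng.randrange(num_topics)
            doc_assignments.append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1
        assignments.append(doc_assignments)

    # Resample each token's topic conditional on all other assignments.
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assignments[d][i]
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][w] + beta) / (n_k[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1

    doc_topic = [
        [(n_dk[d][k] + alpha) / (len(doc) + num_topics * alpha) for k in range(num_topics)]
        for d, doc in enumerate(docs)
    ]
    topic_word = [
        {w: (n_kw[k][w] + beta) / (n_k[k] + vocab_size * beta) for w in vocab}
        for k in range(num_topics)
    ]
    return doc_topic, topic_word


# Hypothetical toy corpus; real input would be the pre-processed strategy texts.
toy_docs = [
    ["research", "funding", "research", "infrastructure"],
    ["regulation", "ethics", "regulation", "data"],
    ["talent", "startup", "talent", "funding"],
]
doc_topic, topic_word = lda_gibbs(toy_docs, num_topics=3)
```

Each row of `doc_topic` sums to one, which is what allows a document to be read as a mixture of topics, and each entry of `topic_word` is a probability distribution over the vocabulary.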

The textual data used for topic modeling is the text of 31 national AI strategies. There were three key steps in the analysis. The first step was the pre-processing of the data for the study. All the words in the documents were changed to lowercase letters and lemmatized, that is, converted from their grammatical form into their base form (Debnath and Bardhan 2020). Unnecessary symbols were removed, alongside numbers, punctuation marks, and white spaces. English stop words, such as “a,” “an,” “and,” and “to,” were also removed. The authors created an additional list of stop words to delete all words associated with country names, languages, and nationalities. Other words, such as standard abbreviations and disproportionately common terms (such as “AI” or “strategy”), were also removed. The analysis was conducted using R 4.1.3 (R Core Team 2022) and several R packages: tm (Feinerer and Hornik 2020), topicmodels (Grün and Hornik 2011), ggplot2 (Wickham 2016), lda (Chang 2015), and ldatuning (Murzintcev 2020).
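The pre-processing steps can be sketched as follows. This is an illustrative Python stand-in rather than the R code used in the study: the stop-word sets are tiny hypothetical subsets, and the `LEMMAS` lookup table is a toy replacement for a real lemmatizer.

```python
import re

# Tiny illustrative subset of English stop words (assumption).
STOP_WORDS = {"a", "an", "and", "to", "the", "of", "in", "for"}
# Hypothetical examples of the custom list: country names, nationalities,
# and disproportionately common terms.
CUSTOM_STOP_WORDS = {"ai", "strategy", "germany", "german"}
# Toy lemma lookup standing in for a real lemmatizer (assumption).
LEMMAS = {
    "strategies": "strategy",
    "researchers": "researcher",
    "technologies": "technology",
    "developing": "develop",
}


def preprocess(text):
    """Lowercase, tokenize, lemmatize, and drop stop words."""
    text = text.lower()
    # Keeping only runs of ASCII letters drops digits, punctuation, and
    # whitespace; it would also split terms such as "r&d".
    tokens = re.findall(r"[a-z]+", text)
    tokens = [LEMMAS.get(token, token) for token in tokens]
    return [t for t in tokens if t not in STOP_WORDS and t not in CUSTOM_STOP_WORDS]


sample = "The AI Strategy of Germany funds 25 researchers and developing technologies in 2021!"
cleaned = preprocess(sample)
# cleaned == ["funds", "researcher", "develop", "technology"]
```

Lemmatizing before stop-word removal means that inflected forms such as "strategies" are reduced to "strategy" first and are then caught by the custom list.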

The second step was converting the documents into a document-term matrix and determining the most frequent words in the data set. Similarly to the approaches used by other researchers in the field (Debnath and Bardhan 2020; Li et al. 2019; Walker et al. 2019), four metrics (Arun et al. 2010; Cao et al. 2009; Deveaud, Sanjuan, and Bellot 2014; Griffiths and Steyvers 2004) were used as benchmarks to determine the optimal number of topics. Three topics were chosen for the analysis based on human validation, because the topic modeling results became harder to interpret as the number of topics increased.
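A document-term matrix simply counts how often each vocabulary term occurs in each document. The sketch below shows the idea in plain Python on two hypothetical tokenized documents; the function names are illustrative and do not correspond to the R functions used in the study.

```python
from collections import Counter


def document_term_matrix(tokenized_docs):
    """Return (vocab, matrix) where matrix[i][j] counts vocab[j] in document i."""
    vocab = sorted({term for doc in tokenized_docs for term in doc})
    index = {term: j for j, term in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in tokenized_docs]
    for i, doc in enumerate(tokenized_docs):
        for term, count in Counter(doc).items():
            matrix[i][index[term]] = count
    return vocab, matrix


def most_frequent_terms(vocab, matrix, n):
    """Rank terms by total count across documents (ties broken alphabetically)."""
    totals = [sum(row[j] for row in matrix) for j in range(len(vocab))]
    ranked = sorted(zip(vocab, totals), key=lambda pair: (-pair[1], pair[0]))
    return ranked[:n]


# Hypothetical tokenized documents.
docs = [["research", "funding", "research"], ["regulation", "ethics", "research"]]
vocab, dtm = document_term_matrix(docs)
top_terms = most_frequent_terms(vocab, dtm, 2)
# vocab == ["ethics", "funding", "regulation", "research"]
# dtm == [[0, 1, 0, 2], [1, 0, 1, 1]]
# top_terms == [("research", 3), ("ethics", 1)]
```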

Finally, the analysis of the results of LDA topic modeling and its visualization was conducted in the third step. We determined what proportion each topic constitutes in each document, what words form each topic, and the probability of each topic in the whole data set. The topics were also given names based on analyzing the most prominent words in each topic.

3.2. Topics identified in national AI strategies

As discussed above, the number of topics in the data was approximated using four benchmarking metrics (Arun et al. 2010; Cao et al. 2009; Deveaud, Sanjuan, and Bellot 2014; Griffiths and Steyvers 2004) together with human judgment, applied through trial and error to the candidate numbers of topics that the benchmarking highlighted as most likely optimal. This approach indicated that three topics are the most suitable number for this research; they represent the three general themes: development, control, and promotion.

Topic modeling revealed the high-frequency words within each of the three topics. Table 15 shows the 20 most frequent words in each topic, with the probability of each word’s appearance in that topic. However, the results of the initial topic modeling are not always straightforward to interpret. Thus, to increase interpretability, the words within each topic were additionally re-ranked to surface words that appear within the topic less frequently (Alokaili, Aletras, and Stevenson 2019), which highlights less general and more specific words in each topic. Table 16 shows the ten words describing each topic after re-ranking. Based on the analysis of the terms that constitute each topic in both iterations, each topic was assigned a name representing the broad general theme it contains. Thus, the topics were named “Development,” “Control,” and “Promotion.”
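The text does not state which re-ranking scheme was applied. As an illustration only, the sketch below uses one simple scheme, assumed here: each word's probability in a topic is divided by its total probability across all topics, so that words shared by many topics are pushed down and topic-specific words rise. The word probabilities are hypothetical.

```python
def rerank_by_normalised_probability(topic_word, top_n):
    """Re-rank each topic's words by p(word | topic) / sum over topics of p(word | topic)."""
    vocab = set().union(*topic_word)
    totals = {w: sum(topic.get(w, 0.0) for topic in topic_word) for w in vocab}
    reranked = []
    for topic in topic_word:
        scores = {w: p / totals[w] for w, p in topic.items() if totals[w] > 0}
        ordered = sorted(scores, key=lambda w: (-scores[w], w))
        reranked.append(ordered[:top_n])
    return reranked


# Hypothetical word probabilities for two topics.
toy_topics = [
    {"development": 0.30, "research": 0.25, "public": 0.25, "data": 0.20},
    {"development": 0.30, "regulation": 0.30, "data": 0.30, "public": 0.10},
]
top_words = rerank_by_normalised_probability(toy_topics, top_n=2)
# top_words == [["research", "public"], ["regulation", "data"]]
```

In this toy example "development" is the most probable word in both topics, so it says little about either; after re-ranking, "research" and "regulation" lead their respective topics.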

Table 15. Most frequent words in each topic.

Table 16. Re-ranked words in each topic.

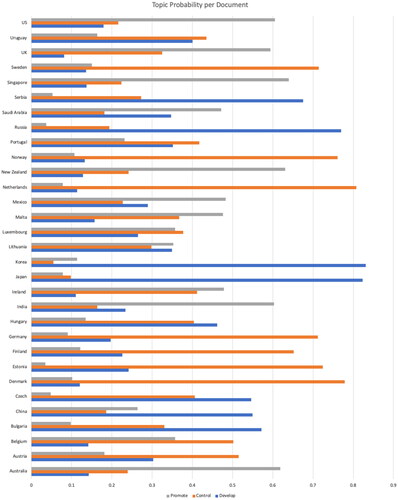

LDA topic modeling allows for determining the proportion of each topic in the whole collection of documents. The results reveal (Table 17) that the most probable topic to appear in the collection of documents is topic 2, “Control,” which constitutes 39% of the whole corpus. This is followed by topic 1, “Development,” and topic 3, “Promotion,” which constitute 32% and 28%, respectively.

Table 17. Topic proportion in all documents.

Beyond finding the proportion of the topics in all documents, LDA topic modeling also allows seeing how each topic is distributed within each document. This shows that even though each document has a predominant topic, other topics are also present within it to a lesser degree. shows how each topic is distributed within each document.

As seen in , most of the documents have one of the topics emphasized more than others. In some extreme cases, one topic can constitute over 70% of the document, which is the case for Denmark, Estonia, Germany, Netherlands, New Zealand, and Sweden with the topic “Control.” In contrast, the topic “Development” constitutes more than 70% of Japan’s, South Korea’s, and Russia’s national AI strategies.

However, there are also several documents where the distinction between the primary topic and the other topics is less evident. For example, 43% of the national AI strategy of Uruguay is related to the topic “Control,” while 40% of the document is related to the topic “Development.” A similar situation is seen in Hungary’s and Portugal’s strategies, while Ireland and Malta emphasize “Control” and “Promotion” nearly equally.
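One way to flag documents whose leading topic is not clearly separated from the runner-up is to compare the two largest topic shares. The sketch below uses the Uruguay figures quoted above (the third share is not stated in the text and is therefore omitted); the five-point threshold `min_margin` is a hypothetical choice, not a criterion used in the study.

```python
def dominant_topic(shares, min_margin=5):
    """Return (leading topic, margin over runner-up in points, is the lead clear?)."""
    ranked = sorted(shares.items(), key=lambda item: -item[1])
    (top_name, top_share), (_, second_share) = ranked[0], ranked[1]
    margin = top_share - second_share
    return top_name, margin, margin >= min_margin


# Percentages as quoted in the text for Uruguay.
uruguay = {"Control": 43, "Development": 40}
result = dominant_topic(uruguay)
# result == ("Control", 3, False)
```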

4. Unified typology

The results of both methodological approaches allow for the creation of a unified typology based on the prioritization of different themes within national AI strategy. To compensate for the shortcomings of both methodologies, the final typology is based equally on the results of both methods.

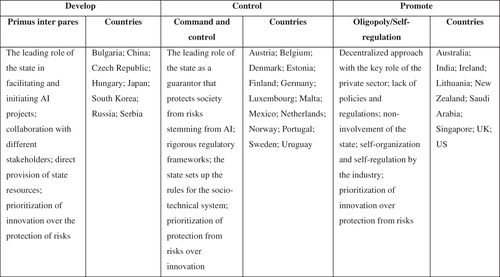

In the first step, we group the 13 functions of the state found in national AI strategies through qualitative content analysis into three broad themes based on the government’s actions: Development, Control, and Promotion (Table 18). The theme “Development” includes R&D, Public sector applications, Infrastructure, and National Challenges. The theme “Control” includes Ethics, Regulation, Data, and National Security. The theme “Promotion” includes Human capital, Private sector support, Diffusion, International cooperation, and Financial support.

Table 18. State’s roles.

In the second step, we determine which proportion each theme occupies in each national AI strategy and develop a typology based on qualitative content analysis. We first look at how many functions (from Table 18) constituting one theme are present in the text of a strategy and at the proportion of this theme in comparison with the two other themes (Table 19). For example, out of eight functions in total, Ireland has two functions (R&D and Public sector applications) in the theme “Development” (25%), two functions (Regulation and Data) in the theme “Control” (25%), and four functions (Human capital, Private sector support, Diffusion, and International Cooperation) in the theme “Promotion” (50%). Thus, the qualitative analysis results show that Ireland prioritizes promoting AI technologies over their development and control. However, most countries equally emphasize two or three themes, such as Australia (33.3% for each theme). This complicates the derivation of a typology from the qualitative content analysis results alone.
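The Ireland calculation can be reproduced with a short sketch. The theme-to-function mapping follows the grouping described in the first step, and the set of functions present in Ireland's strategy follows the example above; the function and variable names are illustrative.

```python
# Grouping of the 13 state functions into three themes, as described in the text.
THEMES = {
    "Development": ["R&D", "Public sector applications",
                    "Digital infrastructure", "National challenges"],
    "Control": ["Ethics", "Regulation", "Data", "National security"],
    "Promotion": ["Human capital", "Private sector support", "Diffusion and awareness",
                  "International cooperation", "Financial support"],
}


def theme_proportions(functions_present):
    """Share (in %) of each theme among the functions present in one strategy."""
    counts = {
        theme: sum(function in functions_present for function in functions)
        for theme, functions in THEMES.items()
    }
    total = sum(counts.values())
    return {theme: 100 * count / total for theme, count in counts.items()}


# Functions identified in Ireland's strategy, per the example in the text.
ireland = {
    "R&D", "Public sector applications",
    "Regulation", "Data",
    "Human capital", "Private sector support",
    "Diffusion and awareness", "International cooperation",
}
ireland_shares = theme_proportions(ireland)
# ireland_shares == {"Development": 25.0, "Control": 25.0, "Promotion": 50.0}
```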

Table 19. Theme prioritization based on qualitative content analysis.

In the third step, we look at the results of LDA topic modeling to see what proportion each topic occupies in each document (). Our final typology is based on averaging the proportions from qualitative content analysis and the proportions from LDA topic modeling to see which theme is emphasized more when the two are combined. For example, for Lithuania, qualitative content analysis reveals that 20% of the state’s functions articulated in the national AI strategy are related to Development, while Control and Promotion account for 40% each (Table 19). LDA topic modeling results show that 34% of the Lithuanian strategy is devoted to the topic “Development,” while 29% and 35% are devoted to “Control” and “Promotion,” respectively (). Averaging the two sets of proportions reveals that 27% of Lithuania’s national AI strategy is related to Development, 34.5% to Control, and 37.5% to Promotion. Thus, Lithuania prioritizes “Promotion” overall. The countries are then grouped accordingly. shows the final grouping of the countries under three categories of “Development,” “Control,” and “Promotion,” as well as the summary of the key characteristics of these categories.
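The Lithuania figures are consistent with a simple average of the two sets of proportions, which the following sketch reproduces. The input percentages are those quoted in the text; the function name is illustrative.

```python
def combine_proportions(qualitative, lda):
    """Average the theme shares (in %) from the two methods, theme by theme."""
    return {theme: (qualitative[theme] + lda[theme]) / 2 for theme in qualitative}


# Percentages for Lithuania as quoted in the text.
lithuania_qualitative = {"Development": 20, "Control": 40, "Promotion": 40}
lithuania_lda = {"Development": 34, "Control": 29, "Promotion": 35}

combined = combine_proportions(lithuania_qualitative, lithuania_lda)
priority = max(combined, key=combined.get)
# combined == {"Development": 27.0, "Control": 34.5, "Promotion": 37.5}
# priority == "Promotion"
```

Giving both methods equal weight means that neither the manually coded functions nor the word-level topic shares can dominate the final grouping on their own.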

5. Discussion

The analysis results reveal a typology of national AI strategies grouped around three themes representing the state’s actions in governing AI. These categories (Development, Control, and Promotion) are derived from the work of other researchers investigating the role of the state in governing socio-technical systems (Borras and Edler 2014; Borrás and Edler 2020) and the socio-technical system of AI in particular (Ulnicane et al. 2021).

The typology shows that eight countries prioritize Development. These include several European countries from the former Soviet bloc, Russia, and countries in East Asia (China, South Korea, and Japan). According to our typology, they emphasize R&D, the application of AI technologies in the public sector, the development of infrastructure for AI technologies, and national challenges. The state takes the leading role in facilitating and initiating AI projects in this group. This does not mean that the state acts as the sole developer of AI; rather, it uses its resources to promote a direction for technological development, which the private sector is then expected to adopt.

There are peculiarities in how the state engages in AI activities in these countries, such as actively monitoring and engaging with the work of technology companies to achieve national priorities in China, or removing regulatory barriers to the development of AI by the private sector in Russia. Nevertheless, on a macro level, this type of governance is most closely associated with a primus inter pares mode: the state takes the leading role in facilitating collaboration with other stakeholders, but the solutions are not directly developed by the state (Borrás and Edler 2020).

The unique feature of the countries in this group is that the state takes the leading role in the governance of the emerging socio-technical system of AI, but does so to facilitate and encourage advances in this domain through the provision of state resources and collaboration with the public sector. As such, the countries in this group prioritize innovation over protection from risks, but not by designing a hands-off governance architecture. Instead, it is a mode of AI governance in which the state chooses the trajectory for the technology’s development and then guides other stakeholders onto this path.

This mode of governance is best suited for utilizing AI technologies on a macro scale to achieve the government’s goals, because it has a very high mobilizing potential for engaging various stakeholders without compromising on a rigid strategy. The consequences of this approach for governing AI in the near future can be seen in the establishment of country-scale projects, such as smart cities or social scoring systems in China in collaboration with the private sector, or the use of AI to achieve the ambitious concept of Society 5.0 in Japan.

The group “Control” primarily consists of the countries from the EU, together with Norway, Mexico, and Uruguay. The countries in this group prioritize AI ethics, regulation of AI, protection and utilization of data, and application of AI for national security purposes. In these countries, the state takes the role of guarantor and actively tries to protect society from risks stemming from applications of AI. This does not mean that all developments and applications of AI are under direct state control. Instead, the state tries to develop a more rigorous regulatory framework for this technology and steer its development toward mitigating risks.

The prevalence of countries from the EU in this group signals the stance that the union takes regarding AI. By releasing the draft of arguably the most developed hard law for AI (the AI Act), the EU has signaled that it sees the need to develop a framework for protecting the fundamental rights of citizens. As the AI Act is a union-level regulation, the governance initiatives of the member states should be aligned with it. Norway, though not a member of the EU, is a part of the free trade zone, which may be the reason why its national AI strategy also prioritizes Control. For Mexico and Uruguay, the theme of Control is emphasized in their documents more than the other themes, but the difference is small. This may signal that the state will prioritize and take on other roles in the future.

This mode of governance is most closely associated with command and control, where the state takes up the leading role in bringing change to the socio-technical system for AI. This does not mean that most of the technologies will be developed and deployed by the state directly, but that the state will be more active in setting up the rules for the functioning of the whole socio-technical system. The countries in this group prioritize protection from the risks stemming from AI over innovation. As such, in the long run, policies and regulations developed by the state will play a major role in how AI technologies are developed and utilized in these countries.

The group “Promotion” has a more diverse list of countries, which includes the UK, the US, Singapore, Saudi Arabia, New Zealand, Lithuania, Ireland, India, and Australia. The countries in this group emphasize the development of human capital, provision of support to the private sector, promotion of information about AI, international cooperation, and direct financial support for AI development from the government. In these countries, the state takes the role of a promoter and facilitator of AI projects. Its role in the governance of this socio-technical system is more indirect, as it tries to create the conditions within the country for other stakeholders to take on the development of AI.

The difference between the first group and the last group lies in how the state engages in the governance of the socio-technical system of AI. While the first group collaborates with other stakeholders to steer the development of AI toward national goals, the countries in this group promote a more decentralized approach with more responsibility placed on the private sector. Like the first group, this group prioritizes innovation over protection from risks, but it places more trust in the self-organizing power of the industry.

The countries in this group will place less importance on the state’s involvement in the socio-technical system of AI and have fewer protective policies and regulations. This is already the case in the UK, where the regulatory strategy for AI is based on a pro-innovation approach. Similar tendencies can be observed in the US, where there are no federal-level regulations for this technology. Ireland, meanwhile, has a long-running reputation as a tax haven for technology companies.

This mode of governance resembles both oligopoly and self-regulation. On the one hand, the lack of the state’s involvement in the governance of AI creates opportunities for a few big firms to take over the whole market. On the other hand, the state’s non-involvement also creates a non-hierarchical decentralized system, the development of which is primarily (if not exclusively) driven by private actors. In the long run, this can lead either to a highly oligopolistic market or to a vibrant decentralized one, in which the state is primarily responsible for facilitating and supporting the development of AI, with little emphasis placed on protection from AI risks.

6. Conclusion

This research project analyzed the national AI strategies of 31 countries published between 2017 and 2021. The analysis of these documents consisted of two parts: qualitative content analysis and LDA topic modeling. The qualitative content analysis revealed thirteen thematic functions of the state: Human Capital, Ethics, R&D, Regulation, Data, Support for the Private Sector, Public Sector Applications, Diffusion and Awareness, Digital Infrastructure, National Security, National Challenges, International Cooperation, and Financial Support. These functions were grouped into three broad themes, “Development,” “Control,” and “Promotion,” which are derived from the typology of the state’s role in governing socio-technical systems (Borras and Edler 2014; Borrás and Edler 2020).

LDA topic modeling results reveal that all documents can be grouped around the same three general themes, based on the words that make up the three topics. We developed a unified typology based on the proportions that each general theme occupies in the text of each national AI strategy, as found through qualitative content analysis and LDA topic modeling. The countries are grouped under the umbrella themes “Development,” “Control,” and “Promotion” according to which theme is prioritized in their texts when the two sets of results are combined.

The typology shows that European countries from the former Soviet bloc, Russia, and countries in East Asia tend to emphasize the theme of “Development.” The state takes the coordinating role in the governance of the socio-technical system of AI in this group, as it actively collaborates with different stakeholders and facilitates the development of AI both directly and indirectly. The countries from the EU tend to prioritize the theme of “Control,” which can be explained by the union-level attempt to actively regulate this technology and protect the fundamental rights of citizens from AI risks. Countries in the group “Promotion,” such as the UK, the US, or Ireland, choose a more indirect approach to governing AI. Instead of being directly involved in the development of AI, the state acts as a promoter, trying to facilitate innovation through the work of other stakeholders.

These findings have broader policy implications. First, this typology helps explain the differences between how countries see the state’s role in governing the socio-technical system of AI. Second, this understanding of early differences in the design of the governance regime can be a helpful lens for looking at the evolution of approaches to governing this technology in the long run. Third, this typology can be used by policymakers in countries that do not yet have any policy interventions for governing AI, to decide what priority to take. Alternatively, policymakers from the countries included in the typology may reconsider some of their priorities in comparison to other countries.

As we focus on only one type of policy document, the national AI strategy, future research projects should look at the landscape of AI governance more holistically and include other policy documents to develop a more comprehensive typology. The results of our analysis show that qualitative content analysis and LDA topic modeling can be complementary methodologies for this task. Additionally, empirically rich case studies of the governance regimes for AI in different countries can be used to determine the unique roles that the state takes in each context, based on the 13 roles proposed by Borrás and Edler (2020).

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abbott, K. 2013. “Introduction: The Challenges of Oversight for Emerging Technologies.” In Innovative Governance Models for Emerging Technologies, edited by G. Marchant, K. Abbott, and B. Allenby, 1–16. Edward Elgar Publishing. doi:10.4337/9781782545644.00006.

- Abbott, K. W., G. E. Marchant, and E. A. Corley. 2012. “Soft Law Oversight Mechanisms for Nanotechnology.” Jurimetrics 52 (3): 279–312.

- Algorithm Watch. 2022. AI Ethics Guidelines Global Inventory. https://inventory.algorithmwatch.org/

- Alokaili, A., N. Aletras, and M. Stevenson. 2019. “Re-Ranking Words to Improve Interpretability of Automatically Generated Topics.” ArXiv:1903.12542 [Cs]. http://arxiv.org/abs/1903.12542

- Altaweel, M., C. Bone, and J. Abrams. 2019. “Documents as Data: A Content Analysis and Topic Modeling Approach for Analyzing Responses to Ecological Disturbances.” Ecological Informatics 51: 82–95. doi:10.1016/j.ecoinf.2019.02.014.

- Arun, R., V. Suresh, C. E. Veni Madhavan, and M. N. Narasimha Murthy. 2010. “On Finding the Natural Number of Topics with Latent Dirichlet Allocation: Some Observations.” In Advances in Knowledge Discovery and Data Mining, edited by M. J. Zaki, J. X. Yu, B. Ravindran, and V. Pudi, 391–402. Springer. doi:10.1007/978-3-642-13657-3_43.

- Bareis, J., and C. Katzenbach. 2022. “Talking AI into Being: The Narratives and Imaginaries of National AI Strategies and Their Performative Politics.” Science, Technology, & Human Values 47 (5): 855–881. doi:10.1177/01622439211030007.

- Bellanova, R., and M. de Goede. 2022. “The Algorithmic Regulation of Security: An Infrastructural Perspective.” Regulation & Governance 16 (1): 102–118. doi:10.1111/rego.12338.

- Binns, R. 2022. “Human Judgment in Algorithmic Loops: Individual Justice and Automated Decision-Making.” Regulation & Governance 16 (1): 197–211. doi:10.1111/rego.12358.

- Borras, S., and J. Edler. 2014. The Governance of Socio-Technical Systems: Explaining Change. Cheltenham: Edward Elgar.

- Borrás, S., and J. Edler. 2020. “The Roles of the State in the Governance of Socio-Technical Systems’ Transformation.” Research Policy 49 (5): 103971. doi:10.1016/j.respol.2020.103971.

- Cao, J., T. Xia, J. Li, Y. Zhang, and S. Tang. 2009. “A Density-Based Method for Adaptive LDA Model Selection.” Neurocomputing 72 (7–9): 1775–1781. doi:10.1016/j.neucom.2008.06.011.

- Chang, J. 2015. LDA: Collapsed Gibbs Sampling. Methods for Topic Models. R package version 1.4.2. https://CRAN.R-project.org/package=lda

- Chatterjee, S. 2020. “AI Strategy of India: Policy Framework, Adoption Challenges and Actions for Government.” Transforming Government: People, Process and Policy 14 (5): 757–775. doi:10.1108/TG-05-2019-0031.

- Coglianese, C., and D. Lehr. 2018. Transparency and Algorithmic Governance (SSRN Scholarly Paper ID 3293008). Social Science Research Network. https://papers.ssrn.com/abstract=3293008

- Debnath, R., and R. Bardhan. 2020. “India Nudges to Contain COVID-19 Pandemic: A Reactive Public Policy Analysis Using Machine-Learning Based Topic Modelling.” PLOS One 15 (9): e0238972. doi:10.1371/journal.pone.0238972.

- Deveaud, R., E. Sanjuan, and P. Bellot. 2014. “Accurate and Effective Latent Concept Modeling for Ad Hoc Information Retrieval.” Document Numérique 17 (1): 61–84. doi:10.3166/dn.17.1.61-84.

- Eyert, F., F. Irgmaier, and L. Ulbricht. 2022. “Extending the Framework of Algorithmic Regulation. The Uber Case.” Regulation & Governance 16 (1): 23–44. doi:10.1111/rego.12371.

- Feinerer, I., and K. Hornik. 2020. tm: Text mining package. R package version 0.7-8. https://CRAN.R-project.org/package=tm

- Fjeld, J., N. Achten, H. Hilligoss, A. Nagy, and M. Srikumar. 2020. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-based Approaches to Principles for AI. https://dash.harvard.edu/handle/1/42160420

- Griffiths, T. L., and M. Steyvers. 2004. “Finding Scientific Topics.” Proceedings of the National Academy of Sciences of the United States of America 101 (suppl_1): 5228–5235. doi:10.1073/pnas.0307752101.

- Gritsenko, D., and M. Wood. 2022. “Algorithmic Governance: A Modes of Governance Approach.” Regulation & Governance 16 (1): 45–62. doi:10.1111/rego.12367.

- Grün, B., and K. Hornik. 2011. “Topicmodels: An R Package for Fitting Topic Models.” Journal of Statistical Software 40 (13): 1–30. doi:10.18637/jss.v040.i13.

- Gutierrez, C. I., and G. E. Marchant. 2021. A Global Perspective of Soft Law Programs for the Governance of Artificial Intelligence (SSRN Scholarly Paper ID 3855171). Social Science Research Network. doi:10.2139/ssrn.3855171.

- Gutierrez, C. I., and G. E. Marchant. 2021. “How Soft Law Is Used in AI Governance.” Brookings, May 27. https://www.brookings.edu/techstream/how-soft-law-is-used-in-ai-governance/

- Hagendorff, T. 2020. “The Ethics of AI Ethics: An Evaluation of Guidelines.” Minds and Machines 30 (1): 99–120. doi:10.1007/s11023-020-09517-8.

- Isoaho, K., D. Gritsenko, and E. Mäkelä. 2021. “Topic Modeling and Text Analysis for Qualitative Policy Research.” Policy Studies Journal 49 (1): 300–324. doi:10.1111/psj.12343.

- Jelodar, H., Y. Wang, C. Yuan, X. Feng, X. Jiang, Y. Li, and L. Zhao. 2019. “Latent Dirichlet Allocation (LDA) and Topic Modeling: Models, Applications, A Survey.” Multimedia Tools and Applications 78 (11): 15169–15211. doi:10.1007/s11042-018-6894-4.

- Jobin, A., M. Ienca, and E. Vayena. 2019. “The Global Landscape of AI Ethics Guidelines.” Nature Machine Intelligence 1 (9): 389–399. doi:10.1038/s42256-019-0088-2.

- Katzenbach, C., and L. Ulbricht. 2019. “Algorithmic Governance.” Internet Policy Review 8 (4): 1–18. doi:10.14763/2019.4.1424.

- Kosta, E. 2022. “Algorithmic State Surveillance: Challenging the Notion of Agency in Human Rights.” Regulation & Governance 16 (1): 212–224. doi:10.1111/rego.12331.

- Kumar, A. 2021. National AI Policy/Strategy of India and China: A Comparative Analysis. https://ris.org.in/sites/default/files/Publication%20File/DP%20265%20Amit%20Kumar.pdf

- Li, Y., B. Rapkin, T. M. Atkinson, E. Schofield, and B. H. Bochner. 2019. “Leveraging Latent Dirichlet Allocation in Processing Free-Text Personal Goals among Patients Undergoing Bladder Cancer Surgery.” Quality of Life Research 28 (6): 1441–1455. doi:10.1007/s11136-019-02132-w.

- Marchant, G. 2019. “Soft Law” Governance of Artificial Intelligence. AI Pulse. https://aipulse.org/soft-law-governance-of-artificial-intelligence/

- Morley, J., A. Elhalal, F. Garcia, L. Kinsey, J. Mökander, and L. Floridi. 2021. “Ethics as a Service: A Pragmatic Operationalisation of AI Ethics.” Minds and Machines 31 (2): 239–256. doi:10.1007/s11023-021-09563-w.

- Morley, J., L. Floridi, L. Kinsey, and A. Elhalal. 2020. “From What to How: An Initial Review of Publicly Available AI Ethics Tools, Methods and Research to Translate Principles into Practices.” Science and Engineering Ethics 26 (4): 2141–2168. doi:10.1007/s11948-019-00165-5.

- Morley, J., L. Kinsey, A. Elhalal, F. Garcia, M. Ziosi, and L. Floridi. 2021. “Operationalising AI Ethics: Barriers, Enablers and Next Steps.” AI & Society. doi:10.1007/s00146-021-01308-8.

- Murzintcev, N. 2020. ldatuning: Tuning of the Latent Dirichlet Allocation Models Parameters. R package version 1.0.2. https://CRAN.R-project.org/package=ldatuning

- Nesta. 2022. AI Governance Database. https://www.nesta.org.uk/data-visualisation-and-interactive/ai-governance-database/

- OECD. 2022. OECD AI Policy Observatory. https://www.oecd.ai/

- ÓhÉigeartaigh, S. S., J. Whittlestone, Y. Liu, Y. Zeng, and Z. Liu. 2020. “Overcoming Barriers to Cross-Cultural Cooperation in AI Ethics and Governance.” Philosophy & Technology 33 (4): 571–593. doi:10.1007/s13347-020-00402-x.

- R Core Team. 2022. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. https://www.R-project.org/.

- Radu, R. 2021. “Steering the Governance of Artificial Intelligence: National Strategies in Perspective.” Policy and Society 40 (2): 178–193. doi:10.1080/14494035.2021.1929728.

- Roberts, H., J. Cowls, J. Morley, M. Taddeo, V. Wang, and L. Floridi. 2021. “The Chinese Approach to Artificial Intelligence: An Analysis of Policy, Ethics, and Regulation.” AI & Society 36 (1): 59–77. doi:10.1007/s00146-020-00992-2.

- Schiff, D., J. Biddle, J. Borenstein, and K. Laas. 2020. “What’s Next for AI Ethics, Policy, and Governance? A Global Overview.” Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 153–158. doi:10.1145/3375627.3375804.

- Taylor, R. 2020. “Quantum Artificial Intelligence: A “Precautionary” U.S. Approach?” Telecommunications Policy 44 (6): 101909. doi:10.1016/j.telpol.2020.101909.

- Tong, Z., and H. Zhang. 2016. “A Text Mining Research Based on LDA Topic Modelling.” Computer Science & Information Technology (CS & IT) Conference Proceedings 6 (6): 201–210. doi:10.5121/csit.2016.60616.

- Tzachor, A., J. Whittlestone, L. Sundaram, and S. Ó. hÉigeartaigh. 2020. “Artificial Intelligence in a Crisis Needs Ethics with Urgency.” Nature Machine Intelligence 2 (7): 365–366. doi:10.1038/s42256-020-0195-0.

- Ulnicane, I., W. Knight, T. Leach, B. C. Stahl, and W.-G. Wanjiku. 2021. “Framing Governance for a Contested Emerging Technology: Insights from AI Policy.” Policy and Society 40 (2): 158–177. doi:10.1080/14494035.2020.1855800.

- van Berkel, N., E. Papachristos, A. Giachanou, S. Hosio, and M. B. Skov. 2020. “A Systematic Assessment of National Artificial Intelligence Policies: Perspectives from the Nordics and Beyond.” Proceedings of the 11th Nordic Conference on Human-Computer Interaction: Shaping Experiences, Shaping Society, 1–12. doi:10.1145/3419249.3420106.

- Van Roy, V., F. Rossetti, K. Perset, and L. Galindo-Romero. 2021. AI Watch - National Strategies on Artificial Intelligence: A European Perspective, 2021 Edition. EUR 30745 EN. Luxembourg: Publications Office of the European Union. ISBN 978-92-76-39081-7. doi:10.2760/069178. JRC122684.

- Walker, R. M., Y. Chandra, J. Zhang, and A. Witteloostuijn. 2019. “Topic Modeling the Research-Practice Gap in Public Administration.” Public Administration Review 79 (6): 931–937. doi:10.1111/puar.13095.

- Whittlestone, J., R. Nyrup, A. Alexandrova, and S. Cave. 2019. “The Role and Limits of Principles in AI Ethics: Towards a Focus on Tensions.” Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, 195–200. doi:10.1145/3306618.3314289.

- Wickham, H. 2016. ggplot2: Elegant Graphics for Data Analysis. New York, NY: Springer-Verlag.

- Wicks, D. 2017. “The Coding Manual for Qualitative Researchers (3rd Edition).” Qualitative Research in Organizations and Management: An International Journal 12 (2): 169–170. doi:10.1108/QROM-08-2016-1408.

- Yeung, K. 2018. “Algorithmic Regulation: A Critical Interrogation.” Regulation & Governance 12 (4): 505–523. doi:10.1111/rego.12158.

- Yeung, K., and L. A. Bygrave. 2022. “Demystifying the Modernized European Data Protection Regime: Cross-Disciplinary Insights from Legal and Regulatory Governance Scholarship.” Regulation & Governance 16 (1): 137–155. doi:10.1111/rego.12401.

- Zeng, Y., E. Lu, and C. Huangfu. 2018. “Linking Artificial Intelligence Principles.” ArXiv:1812.04814. http://arxiv.org/abs/1812.04814

Appendix 1.

Qualitative content analysis

The qualitative content analysis consisted of three steps. In the first step, we inductively coded all the text relevant to the government’s functions and actions in the texts of 31 national AI strategies. The coding was conducted in NVivo for Mac version 12. In the coding process, we focused on the relevant parts of the policy documents rather than coding each document line by line, and we excluded supplementary materials, such as budget figures.

In the second step, the codes were mapped together through a code-mapping process. Having an overview of the whole corpus of codes allowed us to group them into organized categories.

In the third step, we collected similarly coded passages in the data to derive broader categories that describe a major function the government is trying to exercise via its actions by applying a pattern coding technique. This process resulted in 13 “meta codes,” which represent high-level functions that the governments intend to perform in governing the socio-technical system of AI. These categories include human capital, ethics, R&D, regulation, data, private sector support, public sector applications, diffusion and awareness, digital infrastructure, national security, national challenges, international cooperation, and financial support.

Appendix 2.

LDA topic modeling

To prepare the data for LDA topic modeling, we first created a file in .txt format containing the text of each of the 31 documents. These files are attached as the data alongside the manuscript. We then loaded the data into RStudio and created a corpus. The process of LDA topic modeling consisted of three major steps: cleaning the data, running the LDA model, and analyzing and visualizing the results.

In the data cleaning process, we first transformed the whole corpus into lowercase letters. We then deleted symbols, such as “–,” “/,” and “’.” We then deleted the English stop words, which were downloaded from: https://ladal.edu.au/resources/stopwords_en.txt. Next, we deleted numbers, punctuation, and extra whitespace from the corpus. We also deleted words associated with country names and nationalities, as well as overused words, such as AI and strategy. After that, we lemmatized the words.
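The order of the cleaning operations can be sketched in plain Python. This is only an illustration of the sequence described above, not the R code used for the analysis: `STOP_WORDS` is a tiny stand-in for the downloaded stop-word list, `CUSTOM_WORDS` is a made-up example of country-related and overused words, and lemmatization is left out because the standard library offers no lemmatizer.

```python
import re

# Tiny stand-in for the downloaded English stop-word list (assumption).
STOP_WORDS = {"the", "of", "and", "to", "in", "a", "for", "is", "will"}
# Illustrative country-related and overused words (assumption).
CUSTOM_WORDS = {"ai", "strategy", "ireland", "irish"}


def clean(text):
    """Apply the cleaning steps in the order given in the appendix."""
    text = text.lower()                                  # 1. lowercase
    text = re.sub(r"[–/’]", " ", text)                   # 2. selected symbols
    tokens = [t for t in text.split() if t not in STOP_WORDS]  # 3. stop words
    text = " ".join(tokens)
    text = re.sub(r"\d+", " ", text)                     # 4a. numbers
    text = re.sub(r"[^\w\s]", " ", text)                 # 4b. punctuation
    tokens = text.split()                                # 4c. collapses whitespace
    return [t for t in tokens if t not in CUSTOM_WORDS]  # 5. custom words


sample = "The Irish AI Strategy 2021 will support research, skills/training – and data."
print(clean(sample))
```

A lemmatization step would follow the last filter and map inflected forms to their dictionary form before the document-term matrix is built.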

In the second step of the LDA topic modeling, we created a document-term matrix based on the cleaned data from the previous step. We then used the four techniques discussed in the manuscript to find the optimal number of topics. Finally, we created and ran an LDA model with three topics.
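For readers unfamiliar with the data structure, a document-term matrix simply records how often each vocabulary term occurs in each document. The Python sketch below builds one from already-cleaned token lists; the function name `document_term_matrix` and the two toy documents are hypothetical, and the sketch does not cover the topic-number selection or the LDA fit itself.

```python
from collections import Counter


def document_term_matrix(tokenized_docs):
    """Return (vocabulary, rows), where rows[i][j] counts term j in document i."""
    vocabulary = sorted({token for doc in tokenized_docs for token in doc})
    rows = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        rows.append([counts[term] for term in vocabulary])
    return vocabulary, rows


# Two toy documents standing in for cleaned strategy texts.
docs = [["data", "ethics", "data"], ["research", "data"]]
vocabulary, matrix = document_term_matrix(docs)
print(vocabulary)
print(matrix)
```

Each row of the matrix corresponds to one strategy document and is the input on which a topic model is then fitted.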

In the last step, we proceeded with analyzing and visualizing the results of LDA topic modeling. We first found the most common topic per document, followed by finding the 20 most common words per topic. We also found the probability of words for each topic. To increase the interpretability of topics, we re-ranked the words within them to find more unique words for each topic. Based on the results of both iterations of looking at common words, we named the topics: Development, Control, and Promotion.

We found the probabilities that each topic occupies in each document, which were used in the combined typology discussed in the manuscript. Finally, we found the proportion that the topics occupy in the whole corpus of data. These results were visualized with Microsoft Office tools.
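Given a matrix of per-document topic probabilities, the dominant topic of each document and the corpus-wide topic shares follow from simple arithmetic. The Python sketch below uses made-up probabilities for two documents and assumes that the corpus-wide share is the unweighted mean across documents; the source does not state whether documents were weighted by length. The function names are hypothetical.

```python
TOPICS = ("Development", "Control", "Promotion")


def dominant_topic(row):
    """Return the name of the topic with the highest probability in one document."""
    best = max(range(len(row)), key=row.__getitem__)
    return TOPICS[best]


def corpus_shares(doc_topic):
    """Unweighted mean probability of each topic across documents (assumption)."""
    n_docs = len(doc_topic)
    return [sum(row[k] for row in doc_topic) / n_docs for k in range(len(TOPICS))]


# Made-up document-topic probabilities for two documents; each row sums to 1.
doc_topic = [
    [0.50, 0.25, 0.25],
    [0.25, 0.50, 0.25],
]
print([dominant_topic(row) for row in doc_topic])
print(corpus_shares(doc_topic))
```

The per-document rows are the LDA proportions that enter the combined typology, while the column means describe the corpus as a whole.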