Abstract

Background

General practitioners (GPs) are confronted with a wide variety of clinical questions, many of which remain unanswered.

Methods

To help GPs find quick, evidence-based answers, we developed a learning program (LP) with a short interactive workshop based on a simple three-step heuristic to improve their search and appraisal competence (SAC). We evaluated the effectiveness of the LP in a randomized controlled trial (RCT). Participants (intervention group [IG] n=20; control group [CG] n=31) rated acceptance and satisfaction and answered 39 knowledge questions to assess their SAC. We controlled for previous knowledge in the content areas covered by the test.

Results

Main outcome – SAC: in both groups, the pre–post comparison showed significant improvements (P<0.01) in the correctness of evidence-based answers (IG 15% vs CG 11%) and in GPs’ confidence in those answers (32% vs 26%). However, the between-group difference in SAC was not significant (P=0.29).

Other measures

Most workshop participants rated “learning atmosphere” (90%), “skills acquired” (90%), and “relevancy to my practice” (86%) as good or very good. The LP recommendations were implemented by 67% of the IG, whereas 15% of the CG already followed them spontaneously (odds ratio 9.6, P<0.001). After the literature search, the IG was somewhat more satisfied than the CG (differences not significant) with “time spent” (IG 80% vs CG 65%), “quality of information” (65% vs 54%), and “amount of information” (53% vs 47%).

Conclusion

Long-established GPs appear to have good SAC. Despite high acceptance, strong learning effects, a positive search experience, and a significant increase in SAC in the pre–post test, the RCT of our LP showed no significant difference in SAC between the IG and CG. Nevertheless, we suggest that our simple decision heuristic merits further investigation.

Introduction

Unanswered questions

In primary care, patients present a wide spectrum of clinical problems to their general practitioners (GPs). The questions that arise in these encounters touch upon virtually every clinical topic.Citation1–Citation3 According to a recent systematic review, clinicians raised approximately six questions per ten patient visits but pursued answers to only about half of them.Citation4 The main reasons GPs gave for not pursuing their open questions were lack of time and doubt that relevant information was available at all.

Available information

There is a discrepancy between this situation and the large amount of medical information available. Structured databases and other sources are accessible via the Internet, and online evidence has the potential to provide quick answers to physicians’ questions.Citation5

Clinicians’ use of electronic resources is reportedly increasing.Citation6 Studies of the resources used in primary care show that the Internet is the third most frequently used resource, after textbooks and discussions with other physicians.Citation4,Citation6–Citation10

Answering questions

Evidence-based medicine (EbM) has provided a framework for accessing, appraising, and using the medical literature relevant to individual patients. One of its pioneers, David Sackett, coined the paradigm of “Five Steps”: question formulation, literature search, critical appraisal, implementation of results, and evaluation.Citation11 However, time constraints and lack of skills prevent most physicians from following this model.Citation1,Citation10,Citation12–Citation16

As an alternative to the classical appraising mode, the McMaster group has proposed a searching mode, which relies primarily on databases of processed information to answer questions arising in everyday care quickly. Even physicians familiar with EbM appraisal methods tend to resort to less sophisticated strategies for their everyday clinical questions. Slawson and Shaughnessy have called for simple heuristics not only to appraise but also to manage medical information.Citation17,Citation18

Methods

Learning program (LP)

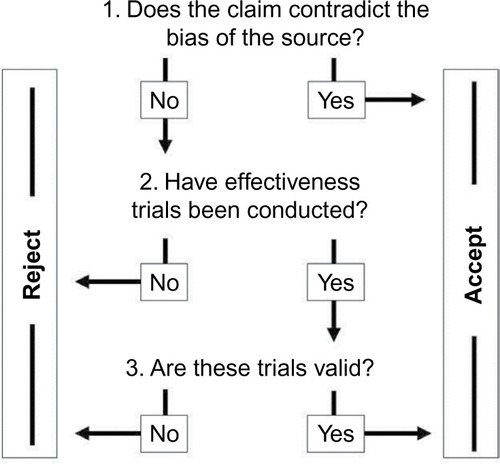

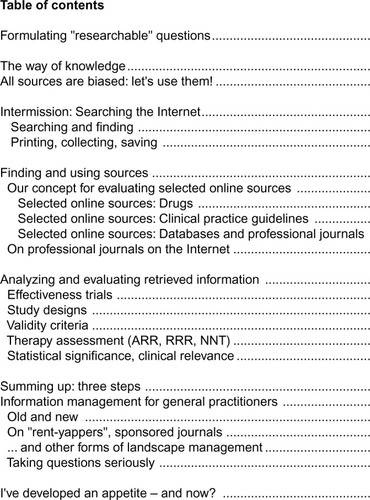

We developed an LP based on a simple three-step heuristic (Figure 1). The aim of the program is to help GPs use Internet sources to answer their clinical questions. The program can be taught in interactive seminars, and all information is also available as a booklet for individual study. A CD-ROM with relevant URLs and background material helps GPs put into practice what they have learned. To increase the effectiveness of the program, we used multiple media (workshop [WS], booklet, CD, Internet) and multiple exposures (lectures, exercises, homework).Citation19

Simple heuristic

The content of the LP (Figure 2) was structured around the three-step heuristicCitation20 shown in Figure 1. Applying it helps GPs search and appraise sources methodically and also helps them decide when to end a search. We regard this as especially important because GPs often feel overwhelmed by the amount of available information.Citation21–Citation23 The set of three questions is not meant to be applied rigidly in every literature search; it is also intended to encourage GPs to reflect on their own search and appraisal habits.

Figure 2 Workshop table of contents.

Example 1: in the product information for a lipid-lowering drug, the manufacturer states that no long-term studies with patient-relevant outcomes have been conducted so far. Because this information runs counter to the interest of its source (the manufacturer), it can be accepted without further search. By contrast, a manufacturer’s claim that a herbal preparation alleviates tumor pain should not be accepted immediately but should prompt further research.

Example 2: if no formal evaluation, such as a controlled study, can be found for a herbal preparation, its use should be avoided. A more detailed appraisal of study design features is required only if the claim is in line with the interest of the source and trials evaluating the treatment exist. Here, the most valid and relevant criteria should be checked first, such as:

is this a randomized controlled trial (RCT)?

is the main outcome clinically relevant?

can the choice of the control treatment be justified?

which patients have been recruited into the study (external generalizability)?
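Read together, the two examples describe a short decision procedure with early stopping points. The Python sketch below encodes one possible reading of it purely as an illustration: the field names, the `Verdict` labels, and the rule that all four design criteria must hold in step 3 are our assumptions, not the published heuristic.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ACCEPT = "accept without further search"
    AVOID = "avoid: no formal evaluation found"
    APPRAISE_OK = "design criteria met: consider using"
    APPRAISE_DOUBTFUL = "design criteria not met: treat claim with caution"


@dataclass
class Claim:
    # Hypothetical inputs that a GP would judge by hand while searching.
    against_source_interest: bool  # e.g. a manufacturer admits missing long-term data
    formally_evaluated: bool       # e.g. at least one controlled study was found
    randomized: bool = False
    clinically_relevant_outcome: bool = False
    justified_control: bool = False
    generalizable_patients: bool = False


def three_step(claim: Claim) -> Verdict:
    # Step 1: information that runs against its source's interest is credible; stop.
    if claim.against_source_interest:
        return Verdict.ACCEPT
    # Step 2: a claim in the source's interest without any formal evaluation; stop.
    if not claim.formally_evaluated:
        return Verdict.AVOID
    # Step 3: check the most valid and relevant design criteria first
    # (assumption: all four must hold for a favourable verdict).
    checks = (claim.randomized, claim.clinically_relevant_outcome,
              claim.justified_control, claim.generalizable_patients)
    return Verdict.APPRAISE_OK if all(checks) else Verdict.APPRAISE_DOUBTFUL
```

For Example 1, `three_step(Claim(against_source_interest=True, formally_evaluated=False))` returns `Verdict.ACCEPT`; for the herbal preparation without a controlled study, `three_step(Claim(False, False))` returns `Verdict.AVOID`. The early returns mirror the heuristic’s stopping rule: the search ends as soon as one step settles the question.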

Participants

GPs practicing in five cities in Germany were invited to take part in the main study. Participants had to be willing to attend two WS sessions and to contribute to the evaluation.

Stratification

GPs were asked whether they had performed an Internet search for a clinical question during the previous year. The answer (yes/no) was used for stratification.

Randomization

The computer-assisted randomization was undertaken by the central clinical trials unit at the University of Marburg. Within each of the two strata, permuted blocks of variable length (one to four participants) were generated. GPs were assigned to either the intervention group (IG) or the control group (CG) in order of registration. To maintain allocation concealment, participants were not given details about the study design or the allocation process. The control arm was a wait-list group of GPs who were offered the WS after the study had been completed and the main outcome evaluated.
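A minimal sketch of stratified, permuted-block allocation may make the procedure concrete. It is not the Marburg trials unit’s software: the seed, the list length, and block sizes of 2 and 4 are assumptions (a block allocating 1:1 needs an even length, so the sketch accepts only even sizes).

```python
import random
from typing import Dict, List, Sequence, Tuple


def permuted_blocks(length: int, block_sizes: Sequence[int], rng: random.Random) -> List[str]:
    """Allocation list built from shuffled blocks, each with equal IG and CG slots."""
    if any(size % 2 for size in block_sizes):
        raise ValueError("block sizes must be even for 1:1 allocation")
    sequence: List[str] = []
    while len(sequence) < length:
        size = rng.choice(block_sizes)                  # variable block length
        block = ["IG"] * (size // 2) + ["CG"] * (size // 2)
        rng.shuffle(block)                              # random order within the block
        sequence.extend(block)
    return sequence[:length]


def allocate(registrations: List[Tuple[str, bool]], seed: int,
             block_sizes: Sequence[int] = (2, 4), list_length: int = 200) -> Dict[str, str]:
    """Assign GPs in order of registration, stratified by prior Internet search (yes/no)."""
    rng = random.Random(seed)
    # One list per stratum, generated before anyone is enrolled (concealed from recruiters).
    lists = {stratum: permuted_blocks(list_length, block_sizes, rng) for stratum in (True, False)}
    next_slot = {True: 0, False: 0}
    allocation: Dict[str, str] = {}
    for gp_id, searched_last_year in registrations:
        allocation[gp_id] = lists[searched_last_year][next_slot[searched_last_year]]
        next_slot[searched_last_year] += 1
    return allocation
```

Because each stratum’s list exists before enrollment and is consumed strictly in registration order, the person enrolling a GP cannot predict the next assignment, and within a stratum the arms never differ by more than half of the largest block.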

Intervention

GPs attended two interactive small-group WSs in a computer classroom. Figure 2 shows the contents of the WS. The sessions introduced databases that are useful for GPs and discussed their scope, advantages, and disadvantages. The emphasis was on processed evidence-based information, such as drug bulletins, Clinical Evidence (British Medical Journal), or national and international guidelines, rather than on original papers. Practical search strategies, bias of sources, and simple criteria for checking the validity of information were also covered. There was ample opportunity for hands-on exercises and for discussing participants’ experience with the sources provided. Each WS lasted at least 2 hours, with a 4-week interval between the two sessions. More specific background information can be found in the “Supplementary materials” section (English) and on the Internet (German).Citation24

Study outcomes

The primary outcome of the study was GPs’ search and appraisal competence (SAC). Secondary outcomes were acceptance of and satisfaction with the WS.

WS acceptance

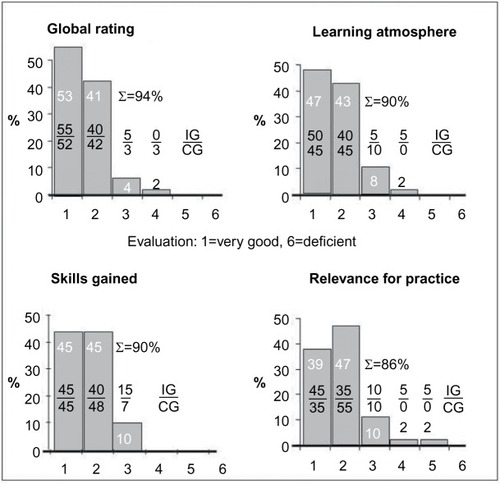

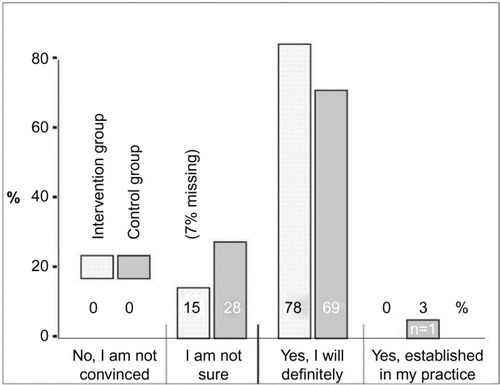

We asked GPs to evaluate each WS with a semi-standardized questionnaire immediately after the session. Fifteen items rated on a 6-point Likert scale (1 = very good, 6 = deficient) covered organization, educational process, and relevance of content. Finally, participants rated, in a standardized way, their own commitment to change with respect to the content covered by the WS.Citation25
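Acceptance is reported as the share of respondents rating an item “good” or “very good”. Assuming these labels correspond to points 1 and 2 of the 1–6 scale (our assumption), the dichotomization can be sketched as follows; the function name is hypothetical.

```python
from typing import Iterable, Optional


def share_good_or_very_good(ratings: Iterable[Optional[int]]) -> float:
    """Percentage of valid ratings of 1 ("very good") or 2 ("good") on a 1-6 scale."""
    # Missing answers (None) and out-of-range codes are ignored, not counted as negative.
    valid = [r for r in ratings if r is not None and 1 <= r <= 6]
    if not valid:
        return float("nan")
    return 100.0 * sum(r <= 2 for r in valid) / len(valid)
```

For example, the ratings `[1, 2, 2, 3, 6]` give 60.0, because three of five valid answers fall into the top two categories.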

Search satisfaction

GPs were asked to rate the relevance of the content and their satisfaction with the strategies they had learned. Acceptance and satisfaction were evaluated not only by IG participants but also by control participants after they had attended the WS.

SAC

We measured GPs’ SAC as the net number of additional correct answers to clinical questions after a thorough search compared with baseline (pre–post test).

Clinical questions

Thirty-nine clinical questions from seven clinical domains were developed by the study team. Questions concerned prevention, therapy, diagnosis, and prognosis. Most questions were in multiple-choice format, but GPs were also asked to elaborate on some open questions. The appropriateness of answers was checked by experts in EbM and clinical areas covered (see the “Acknowledgments” section).

Pre–post test

All participants had to answer each question twice: first spontaneously, to establish their baseline knowledge, and then again after a thorough search. This allowed us to control for previous knowledge.

If a participant improved, ie, gave an incorrect answer at baseline but a correct answer after a thorough search, the answer was scored “+1”. A correct answer at baseline followed by a wrong answer after the search was scored “−1”; no change was scored “0”. The overall SAC score of each participating GP was the sum of the single question scores and can theoretically range from −39 (−100%) to +39 (+100%) additional correct answers. In addition to objective correctness, we recorded participants’ subjective confidence in their answers (0%–100%).
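The scoring rule fits in a few lines of Python. This illustrative sketch assumes that every answer, including the open questions, has already been judged simply correct or incorrect; the function names are hypothetical.

```python
from typing import Sequence


def sac_score(baseline_correct: Sequence[bool], after_search_correct: Sequence[bool]) -> int:
    """Sum of per-question scores: +1 wrong->right, -1 right->wrong, 0 otherwise."""
    if len(baseline_correct) != len(after_search_correct):
        raise ValueError("both answer sets must cover the same questions")
    # int(post) - int(pre) is +1, -1, or 0, exactly matching the scoring rule.
    return sum(int(post) - int(pre) for pre, post in zip(baseline_correct, after_search_correct))


def sac_percent(score: int, n_questions: int = 39) -> float:
    """Express the score relative to the number of questions (-100% to +100%)."""
    return 100.0 * score / n_questions
```

A GP who corrects two wrong baseline answers and keeps the third correct one scores `sac_score([False, False, True], [True, True, True]) == 2`, ie, about 5% of the 39 questions.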

Pilot study

Before starting the study, we checked the comprehensibility and difficulty of the 39 clinical questions with a group of 12 GPs. We then held the WS with another 14 GPs to refine methods and content, including the learning material. These GPs did not take part in the main study.

Statistics

The data analyses were performed with SPSS software (SPSS Inc., Chicago, IL, USA). For baseline comparisons, we report medians, interquartile ranges, and Mann-Whitney U test P-values for continuous variables, and percentages and asymptotic χ2 test P-values for categorical variables. Within-group changes in SAC (before vs after search) were evaluated with the Wilcoxon signed-rank test, and differences in SAC improvement between study arms with the Mann-Whitney U test. We used the Hodges-Lehmann estimator to obtain the between-group difference in location (commonly reported as the difference between medians) with its 95% confidence interval.Citation26
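For readers unfamiliar with the Hodges-Lehmann estimator, the sketch below shows the idea: the point estimate is the median of all pairwise differences between the two groups, and an approximate confidence interval is read off the sorted differences. It is a standard-library illustration using the large-sample normal approximation without tie correction, not the SPSS procedure used in the study; on small or heavily tied samples, exact methods can give different limits.

```python
import math
from statistics import NormalDist, median
from typing import Sequence, Tuple


def hodges_lehmann(x: Sequence[float], y: Sequence[float],
                   level: float = 0.95) -> Tuple[float, float, float]:
    """Hodges-Lehmann shift estimate (x minus y) with an approximate confidence interval."""
    diffs = sorted(xi - yj for xi in x for yj in y)   # all m*n pairwise differences
    m, n = len(x), len(y)
    mn = m * n
    z = NormalDist().inv_cdf(0.5 + level / 2)
    # Rank of the lower limit from the normal approximation to the Mann-Whitney U distribution.
    k = math.floor(mn / 2 - z * math.sqrt(mn * (m + n + 1) / 12))
    k = max(k, 1)
    lower, upper = diffs[k - 1], diffs[mn - k]       # k-th smallest and k-th largest
    return median(diffs), lower, upper
```

With `x = [6, 7, 8, 9]` and `y = [1, 2, 3, 4]`, the estimated shift is 5, the median of the 16 pairwise differences.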

Results

Study participants

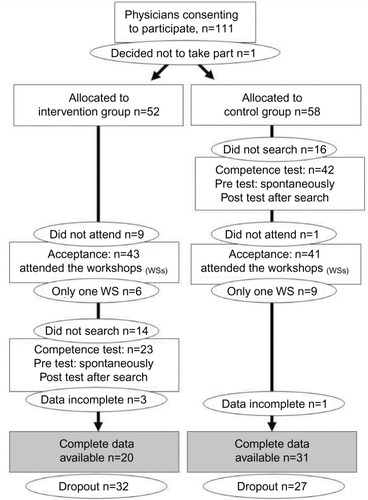

Recruitment, randomization, and flow of participants are shown in the flow diagram. Twelve percent (n=110 of 905) of the GPs originally approached gave written consent to participate and were then randomized. Seventy-six percent (n=84 of 110) of randomized GPs attended at least one WS. Complete data were ultimately available for 46% (n=51 of 110) of the original group. The dropout rate was therefore 54% (n=59), owing to delayed information search (n=30 “did not search”), absence (n=10 “did not attend”), incomplete WS attendance (n=15 “only one WS”), or incomplete data (n=4).
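The percentages in the participant flow follow directly from the reported counts:

$$
\frac{110}{905} \approx 12\%, \qquad \frac{84}{110} \approx 76\%, \qquad \frac{51}{110} \approx 46\%, \qquad \frac{30 + 10 + 15 + 4}{110} = \frac{59}{110} \approx 54\% \ \text{(dropout)}
$$

Relative to all 905 GPs approached, complete data were available for $51/905 \approx 6\%$.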

Characterization of study participants

Participants’ characteristics are shown in Table 1. In most respects, they resembled the population of GPs in Hessen. The IG and CG did not differ significantly, but the IG included slightly more GPs with Internet search experience and with a DocCheck™ password (giving access to content provided by the pharmaceutical industry).

Table 1 Preconditions of study participants

Acceptance

GPs rated the continuing medical education WS very positively: over 80% of respondents rated all items as good or very good. Ratings of WS organization and educational process were similar (data not shown). At the end of the WS, most participants (78% in the IG and 69% in the CG) committed to changing their information management according to what they had learned.

Literature search experience

To find answers to the standardized questions, the IG used more sources per topic and spent more time searching than the CG (Table 2). IG members were slightly more satisfied with the quality and amount of information and with the time spent. Ratings of the sources’ trustworthiness and relevance were almost identical in both study arms.

Table 2 Literature search experience

Literature search sources

The IG and CG preferred different sources for their literature search (Table 3). The IG used Internet sources (+22%) and targeted websites (+37%) more often. Overall, the IG implemented the WS recommendations on literature review well (67%; odds ratio 9.6, 95% confidence interval 2.6–36.4; P=0.0008).
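An odds ratio and its confidence interval can be computed from a 2×2 table of implementers and non-implementers in each arm. The interval method used in the study is not stated; the sketch below uses the common Wald interval on the log scale, and the counts in the usage example are hypothetical, not the study’s data.

```python
import math
from statistics import NormalDist
from typing import Tuple


def odds_ratio_wald(a: int, b: int, c: int, d: int,
                    level: float = 0.95) -> Tuple[float, float, float]:
    """Odds ratio with a Wald confidence interval on the log scale.

    a: IG implemented, b: IG did not, c: CG implemented, d: CG did not.
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be positive (no continuity correction here)")
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)   # standard error of log(OR)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper
```

With hypothetical counts of 12 vs 8 in one arm and 6 vs 24 in the other, `odds_ratio_wald(12, 8, 6, 24)` gives an odds ratio of 6.0 with an interval of roughly 1.7 to 21.3. The width of such intervals in small samples illustrates why the study’s interval (2.6–36.4) is wide.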

Table 3 Sources of information used for literature review

SAC

The improvement in answers to clinical questions was the main outcome measure. In both study arms, GPs performed better after a thorough search than at baseline (Table 4). Median improvement was 11% in the CG (Wilcoxon test: P<0.0003) and 15% in the IG (Wilcoxon test: P=0.007). Although IG GPs improved slightly more than controls, the between-group difference was not statistically significant (P=0.29).
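Because each GP’s SAC score is already a before–after difference, the within-group Wilcoxon test amounts to a signed-rank test of whether these scores are centred on zero. A standard-library sketch with the normal approximation follows; zero scores are dropped, tied absolute values get average ranks, and the tie correction of the variance is omitted, so exact P-values (as statistics packages may report for small samples) can differ.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def wilcoxon_signed_rank(diffs: Sequence[float]) -> Tuple[float, float]:
    """Return (W+, two-sided P) for paired differences, normal approximation."""
    nonzero = [d for d in diffs if d != 0]            # zero differences carry no sign
    n = len(nonzero)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(nonzero[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                                      # assign average ranks to ties
        j = i
        while j + 1 < n and abs(nonzero[order[j + 1]]) == abs(nonzero[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1                         # mean of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, nonzero) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    return w_plus, 2 * (1 - NormalDist().cdf(abs(z)))
```

For five GPs who all improved (scores 1 to 5), W+ equals the maximum of 15 and the approximate two-sided P is about 0.04.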

Table 4 Effectiveness measures of search and appraisal competence

Discussion

Our study shows good WS acceptance, strong learning effects, a positive search experience, and a significant increase in SAC in the pre–post comparison. SAC also improved more in the IG than in the CG, although the between-group difference was not statistically significant.

The potential of online evidence to improve GPs’ SAC has been demonstrated in controlled laboratory settings. Previous pre–post studies have shown improvements of 10% to 18% in the proportion of correct answers to typical clinical scenarios after the use of online evidence.Citation5,Citation25,Citation27 Our results are consistent with these findings: the proportion of correct answers increased by 15% in the IG (Table 4).

The learning effect in the IG is reflected in the implementation of the WS recommendations (Table 3; odds ratio 9.6), namely the use of targeted Internet sources (odds ratio 4.8), pharma-critical drug bulletins (odds ratio 2.4), or guidelines (odds ratio 2.1). We encouraged participants to use targeted sources they know well, despite their limited scope and possible bias, because accessing the Internet via nonspecific search engines such as Google or Yahoo is prone to error.Citation28,Citation29

In many areas requiring decisions under time pressure and with limited information, fast and frugal heuristics have been shown to be valid and helpful.Citation30 Our three-step heuristicCitation20 (Figure 1) provides rules not only for searching and appraising validity but also for when to stop a search to save time. This seems important because lack of time is one of the main reasons why questions remain unanswered.Citation4 In our study (Table 2), the IG invested 17% more time but consulted 36% more sources in that time. Moreover, GPs in the IG were more satisfied with the time invested (+16%) and with the quality (+11%) and quantity (+6%) of the information obtained.

Our recruitment rates of 12% (“consenting to participate”) and 6% (“complete data available”) seem rather low. However, experience from other studies with GPs in Germany shows that “it is generally possible to enlist 3%–4% of the initially contacted practices”.Citation31 Our recruitment rate of 12% therefore suggests that GPs have a relatively large interest in the topic of our study, which is in line with the positive comments made by participants.

GPs in the CG had more previous methodological knowledge than we had expected. They obtained their information more often from the Internet (59%) than from printed media (31%) or from colleagues (10%). Previous studies have ranked the importance of these sources in the reverse order.Citation4,Citation10,Citation32 This may indicate an unusually strong CG.

Strengths and limitations of the study

The main strengths of the study are its RCT design and the inclusion of experienced GPs in private practice, who are often underrepresented in studies of appraisal skills training.Citation33–Citation35

Only 46% of the randomized GPs completed the study. Although this limits the external validity of our findings, it reflects the reality of continuing medical education, where physicians are usually free to decide which programs they take part in. Nevertheless, the characteristics of study participants were similar to those of the population of GPs in Hessen and Germany.

More serious is the high dropout rate (54%), which differed between study arms (IG: 62% vs CG: 47%). As a result, the power of the study was limited, which may explain why the between-group difference did not reach statistical significance.

Apart from a lack of power due to the small sample size, a ceiling effect remains a possible explanation for the negative result. Theoretically, even control physicians may operate at such a high level of SAC that a WS of the kind evaluated here would not improve the outcome to a relevant degree. We regard this as unlikely. However, we may have recruited GPs with above-average SAC skills, which would be an alternative explanation for the negative findings of our study.

In any case, it is known that training effects become harder to detect with longer professional experience, more previous knowledge, and higher qualifications.Citation5 Furthermore, a longer and more intense learning experience might be required to achieve a measurable increase in SAC.Citation36

Conclusion

Conclusions regarding our learning project: the present RCT could not demonstrate the superiority of our intervention based on the three-step heuristic. Lack of power and above-average SAC skills in our sample are the most likely explanations. Moreover, longer and more intensive teaching may be required to improve experienced GPs’ skills and change their behavior. On the other hand, the WS was well accepted and participants were highly satisfied. We therefore suggest that educators and researchers evaluate the three-step heuristic in their own settings.

The high acceptance and the pre–post improvements have encouraged us to continue offering WSs for GPs and to further develop our LP. However, a group of GPs can usually attend only two or three consecutive WSs, which limits what our LP can achieve. We are currently optimizing our material for a blended learning LP. The initiative required of each participant in a blended learning scenario could help us manage the natural process of self-selection constructively.

General conclusions regarding educational projects: an RCT is an important tool to test effectiveness. Applying only a pre–post test without a CG can easily lead to an overestimation of the effectiveness of an intervention.

Acknowledgments

We wish to thank the 137 GPs from the pilot and intervention phases who gave their time to participate in this study. We thank GPs in Bielefeld, Giessen, Kassel, Marburg, and Siegen.

We thank Nicole Burchardi, PhD, Clinical Trials Unit at the University of Marburg, for statistical advice. We also want to thank experts in EbM for their support in the development of the study questionnaire: Lise Bjerre, MD (Department of Family Medicine, Georg August University of Göttingen, Germany); Judith Günther, MSc (pharmafacts, Freiburg, Germany); Ralf Karger, MD, MSc (Department of Haematology, Philipps-University of Marburg, Germany); Monika Lelgemann, MD, MSc (HTA-Centre, Bremen, Germany); Dagmar Luhmann, PhD (Department of Social Medicine, University of Schleswig-Holstein, Campus Lübeck, Germany); and Antje Timmer, MD (German Cochrane Centre, University of Freiburg, Germany).

The project was funded by the Federal Ministry of Education and Research in Germany (BMBF).

Disclosure

The authors report no conflicts of interest in this work.

References

- Covell DG, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med. 1985;103(4):596–599. PMID: 4037559.

- Ely JW, Burch RJ, Vinson DC. The information needs of family physicians: case-specific clinical questions. J Fam Pract. 1992;35(3):265–269. PMID: 1517722.

- Ely JW, Levy BT, Hartz A. What clinical information resources are available in family physicians’ offices? J Fam Pract. 1999;48(2):135–139. PMID: 10037545.

- Del Fiol G, Workman TE, Gorman PN. Clinical questions raised by clinicians at the point of care: a systematic review. JAMA Intern Med. 2014;174(5):710–718. PMID: 24663331.

- Westbrook JI, Coiera EW, Gosling AS. Do online information retrieval systems help experienced clinicians answer clinical questions? J Am Med Inform Assoc. 2005;12(3):315–321. PMID: 15684126.

- Clarke MA, Belden JL, Koopman RJ. Information needs and information-seeking behavior analysis of primary care physicians and nurses: a literature review. Health Info Libr J. 2013;30(3):178–190. PMID: 23981019.

- Kosteniuk JG, Morgan DG, D’Arcy CK. Use and perceptions of information among family physicians: sources considered accessible, relevant, and reliable. J Med Libr Assoc. 2013;101(1):32–37. PMID: 23405045.

- Dwairy M, Dowell AC, Stahl J. The application of foraging theory to the information searching behavior of general practitioners. BMC Fam Pract. 2011;12:90. PMID: 21861880.

- Coumou HC, Meijman FJ. How do primary care physicians seek answers to clinical questions? A literature review. J Med Libr Assoc. 2006;94(1):55–60. PMID: 16404470.

- Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME. Answering physicians’ clinical questions: obstacles and potential solutions. J Am Med Inform Assoc. 2005;12(2):217–224. PMID: 15561792.

- Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312(7023):71–72. PMID: 8555924.

- Ely JW, Osheroff JA, Ebell MH. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319(7206):358–361. PMID: 10435959.

- Curley SP, Connelly DP, Rich EC. Physicians’ use of medical knowledge resources: preliminary theoretical framework and findings. Med Decis Making. 1990;10(4):231–241. PMID: 2122168.

- Young JM, Ward JE. Evidence-based medicine in general practice: beliefs and barriers among Australian GPs. J Eval Clin Pract. 2001;7(2):201–210. PMID: 11489044.

- Cogdill KW, Friedman CP, Jenkins CG, Mays BE, Sharp MC. Information needs and information seeking in community medical education. Acad Med. 2000;75(5):484–486. PMID: 10824774.

- McCord G, Smucker WD, Selius BA. Answering questions at the point of care: do residents practice EBM or manage information sources? Acad Med. 2007;82(3):298–303. PMID: 17327723.

- Slawson DC, Shaughnessy AF. Obtaining useful information from expert based sources. BMJ. 1997;314(7085):947–949. PMID: 9099121.

- Haynes RB, Holland J, Cotoi C. McMaster PLUS: a cluster randomized clinical trial of an intervention to accelerate clinical use of evidence-based information from digital libraries. J Am Med Inform Assoc. 2006;13(6):593–600. PMID: 16929034.

- Marinopoulos SS, Dorman T, Ratanawongsa N. Effectiveness of continuing medical education. Evid Rep Technol Assess (Full Rep). 2007;(149):1–69.

- Donner-Banzhoff N, Schmidt A, Baum E. Der evidenzbasierte Praktiker: Ein Beitrag zum hausärztlichen Informationsmanagement [The evidence based practitioner: a contribution to family medicine information management]. Z Allg Med. 2003;79(10):501–506. German.

- Smith R. Strategies for coping with information overload. BMJ. 2010;341:c7126. PMID: 21159764.

- Hall A, Walton G. Information overload within the health care system: a literature review. Health Info Libr J. 2004;21(2):102–108. PMID: 15191601.

- Green ML, Ruff TR. Why do residents fail to answer their clinical questions? A qualitative study of barriers to practicing evidence-based medicine. Acad Med. 2005;80(2):176–182. PMID: 15671325.

- Donner-Banzhoff N. PERLEN: Patientenorientierte Evidenzbasierte Recherche Lernen Entwickeln Nutzen [The PEARL-Project, PEARL: Patient-orientated Evidence-based Appraisal Research Learning]. Allgemeinmedizin-Projekte. Available from: http://www.allgemein-medizin-projekte.de/index.php?id=22. Accessed March 3, 2016.

- Lowe M, Rappolt S, Jaglal S, Macdonald G. The role of reflection in implementing learning from continuing education into practice. J Contin Educ Health Prof. 2007;27(3):143–148. PMID: 17876839.

- Brunner E, Munzel U. Nichtparametrische Datenanalyse: Unverbundene Stichproben – Statistik und ihre Anwendungen [Nonparametric data analysis: independent samples – statistics and its applications]. Berlin: Springer; 2002. German.

- McKibbon KA, Lokker C, Keepanasseril A, Wilczynski NL, Haynes RB. Net improvement of correct answers to therapy questions after PubMed searches: pre/post comparison. J Med Internet Res. 2013;15(11):e243. PMID: 24217329.

- Badgett RG, Dylla DP, Megison SD, Harmon EG. An experimental search strategy retrieves more precise results than PubMed and Google for questions about medical interventions. PeerJ. 2015;3:e913. PMID: 25922798.

- McKibbon KA, Fridsma DB. Effectiveness of clinician-selected electronic information resources for answering primary care physicians’ information needs. J Am Med Inform Assoc. 2006;13(6):653–659. PMID: 16929042.

- Gigerenzer G, Todd PM. Simple heuristics that make us smart: evolution and cognition. New York: Oxford University Press; 1999.

- Güthlin C, Beyer M, Erler A. Rekrutierung von Hausarztpraxen für Forschungsprojekte – Erfahrungen aus fünf allgemeinmedizinischen Studien [Recruitment of family practitioners for research – experiences from five studies]. ZFA. 2012;88(4):173–180. German.

- Gorman PN, Yao P, Seshadri V. Finding the answers in primary care: information seeking by rural and nonrural clinicians. Stud Health Technol Inform. 2004;107(Pt 2):1133–1137. PMID: 15360989.

- Weberschock TB, Ginn TC, Reinhold J. Change in knowledge and skills of Year 3 undergraduates in evidence-based medicine seminars. Med Educ. 2005;39(7):665–671. PMID: 15960786.

- Hersh WR, Crabtree MK, Hickam DH. Factors associated with success in searching MEDLINE and applying evidence to answer clinical questions. J Am Med Inform Assoc. 2002;9(3):283–293. PMID: 11971889.

- Cabell CH, Schardt C, Sanders L, Corey GR, Keitz SA. Resident utilization of information technology. J Gen Intern Med. 2001;16(12):838–844. PMID: 11903763.

- Weberschock T, Dörr J, Valipour A. Evidenzbasierte Medizin in Aus-, Weiter- und Fortbildung im deutschsprachigen Raum: Ein Survey [Evidence-based medicine teaching activities in the German-speaking area: a survey]. Z Evid Fortbild Qual Gesundhwes. 2013;107(1):5–12. German. PMID: 23415337.

- German Medical Association. Published statistics of the Federal Chamber of Physicians in Germany. Available from: www.bundesaerztekammer.de/page.asp?his=0.3.1667.6371. Accessed May 6, 2016.

- Landesärztekammer Hessen. Details of the Regional Chamber of Physicians in Hessen. Available from: www.laekh.de/aerzte/mitgliedschaft/mitgliedschaft-mitgliederstatistiken. Accessed May 6, 2016.

- Deutscher Ärzteverlag GmbH. Representative sample: Koch K. Primary care in Germany – an international comparison. Available from: www.aerzteblatt.de/pdf/DI/104/38/a2584e.pdf. Accessed May 6, 2016.