Abstract

Ocular ultrasound is in increasing demand in routine ophthalmic clinical practice, not only because it is noninvasive but also because ever-advancing technology provides higher-resolution imaging. It is, however, a difficult branch of ophthalmic investigation to master: it demands a high level of skill both to operate the equipment and to interpret the images accurately for ophthalmic diagnosis and management. Teaching ocular ultrasound to fellow clinicians is even more labor intensive. One of the fundamental skills that has proved difficult to learn and to teach is the examiner’s need to “mentally convert” 2-dimensional B-scan images into 3-dimensional (3D) interpretations, and to do so in real time. We have developed a novel approach to teaching ocular ultrasound using a 3D ocular model. A 3D virtual model is built with widely available open-source software. The model is then used to generate movie clips simulating different movements and orientations of the scanner head. QuickTime motion clips are choreographed in Blender and collated into interactive quizzes and other pertinent pedagogical media. The process involves scripting the motion vectors, rotation, and tracking of both the virtual stereo camera and the model. The resulting sequence is then rendered as twinned right- and left-eye views. Finally, the twinned views are synchronized and combined in a format compatible with the stereo projection apparatus. This new model will help students develop spatial awareness and carry it into clinical practice. It will also help them grasp the nomenclature used in ocular ultrasound, localize lesions, and obtain the best possible images for echographic diagnosis, accurate measurement, and reporting.

Introduction

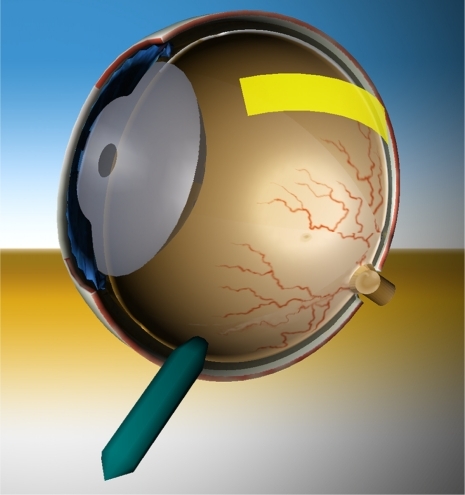

Current ophthalmic simulators demonstrate the equipment setup and 3D image perception in a 2D environment. However, they do not simulate the 3D nature of the eye, so trainees cannot appreciate the intraocular structures in true 3D, and depth perception and spatial awareness suffer as a result. Depth perception and spatial awareness are very important because the examiner manipulates instruments within the eye over microscopic distances.

It is critical that ophthalmologists learn procedures in three dimensions. In ocular ultrasound, the examiner must know where the ultrasound beam falls for a given probe position, and hence which structures are being examined. The most challenging aspect of ocular B-scan ultrasound is the operator’s conversion of a 2D image slice into a 3D interpretation. This conversion must also happen in real time for an echographic diagnosis to be formulated. In retinal photocoagulation and YAG laser capsulotomy, it is important to know exactly where and at what depth to aim the laser so as not to damage adjacent structures; in real patients, such damage can have blinding consequences.
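The 2D-to-3D conversion described above can be made concrete with a little geometry. The following minimal sketch maps a single B-scan sample back to a point in the eye, assuming an idealized sector scan in which every beam starts at the probe face and lies in one scan plane. The function `bscan_to_eye` and its parameters are hypothetical illustrations, not part of the authors’ software.

```python
import math

Vec3 = tuple[float, float, float]

def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)

def bscan_to_eye(probe_pos: Vec3, beam_axis: Vec3, lateral_axis: Vec3,
                 depth_mm: float, angle_deg: float) -> Vec3:
    """Map one B-scan sample to a 3D point in eye coordinates (mm).

    Idealized sector scan (an assumption): every beam starts at probe_pos and
    lies in the plane spanned by beam_axis (centre of the fan) and
    lateral_axis. angle_deg is the beam's angle from beam_axis within that
    plane; depth_mm is the distance travelled along the beam.
    """
    b = _normalize(beam_axis)
    l = _normalize(lateral_axis)
    # The two axes must be perpendicular to define the scan plane.
    if abs(b[0] * l[0] + b[1] * l[1] + b[2] * l[2]) > 1e-6:
        raise ValueError("beam_axis and lateral_axis must be perpendicular")
    a = math.radians(angle_deg)
    # Beam direction = cos(a) * centre axis + sin(a) * lateral axis.
    d = tuple(math.cos(a) * bi + math.sin(a) * li for bi, li in zip(b, l))
    return tuple(p + depth_mm * di for p, di in zip(probe_pos, d))
```

For example, with a globe of assumed radius 12 mm centred at the origin and the probe resting at (0, 0, 12) with the fan aimed at the centre, `bscan_to_eye((0, 0, 12), (0, 0, -1), (1, 0, 0), 12.0, 0.0)` gives the centre of the globe. This forward mapping, from image position to anatomical position, is essentially the conversion the examiner must perform mentally and in real time.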

3D models will provide a safer environment in which clinicians can learn. Patients will then not need to undergo procedures performed by less experienced clinicians until those clinicians have been deemed competent through training on these models.

No current technology can simulate these procedures in true 3D and so give the ophthalmologist greater insight into the complexity of the procedure being simulated.

We have extensively studied 3D computer technology and its use in teaching medical procedures [1–6].

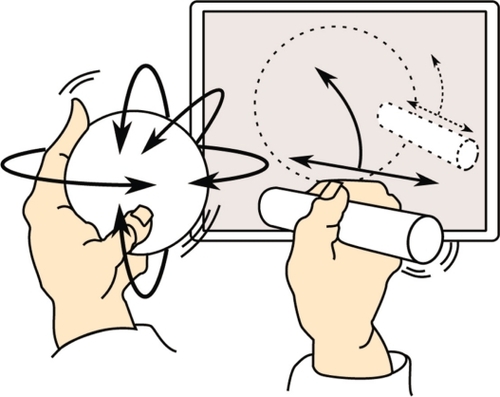

The proposed solution is true 3D: the team has developed an eye model that can be manipulated in three dimensions to give a user-defined perspective of the eye, making it an ideal teaching tool (see ).

This technology can be integrated into simulators of medical equipment, such as ophthalmoscopes, ultrasound probes, and lasers, to give real-time 3D feedback on how ophthalmologists aim and operate the equipment, and to simulate the consequences of their actions, such as distance measurements in ultrasound.

The system can evaluate trainees’ technique, compare their performance against a gold standard set by a competent operator, give them feedback, and demonstrate the gold-standard performance for educational purposes.
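The source does not specify how performance is scored. As one hypothetical illustration, the sketch below compares a trainee’s recorded probe directions with a gold-standard recording by mean angular deviation. It assumes both recordings are time-aligned and sampled at the same instants; the function names are invented for this example.

```python
import math

Vec3 = tuple[float, float, float]

def angle_between_deg(u: Vec3, v: Vec3) -> float:
    """Angle in degrees between two nonzero 3D direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0 or nv == 0:
        raise ValueError("zero-length direction vector")
    # Clamp to [-1, 1] so rounding cannot push the value outside acos's domain.
    c = max(-1.0, min(1.0, dot / (nu * nv)))
    return math.degrees(math.acos(c))

def mean_angular_error(trainee: list[Vec3], gold: list[Vec3]) -> float:
    """Mean angle (degrees) between time-aligned trainee and gold-standard
    probe directions (hypothetical metric; assumes equal sampling)."""
    if not trainee or len(trainee) != len(gold):
        raise ValueError("recordings must be non-empty and of equal length")
    total = sum(angle_between_deg(t, g) for t, g in zip(trainee, gold))
    return total / len(gold)
```

A trainee who matches the expert on one sample and is 90° off on the next scores 45°. A fuller assessment would presumably also consider probe position and the structures captured.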

Methods

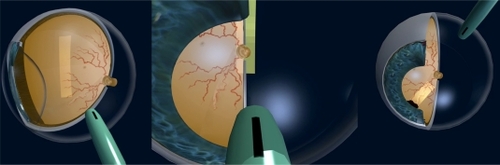

We have developed a novel approach to teaching ocular ultrasound using a 3D ocular model. A 3D virtual model is built with widely available open-source software (see ). The model is then used to generate movie clips simulating different orientations of the scanner head. QuickTime motion clips are choreographed in Blender and collated into interactive quizzes and other pertinent pedagogical media. The process involves scripting the motion vectors, rotation, and tracking of both the virtual stereo camera and the model.
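The camera choreography can be sketched in plain Python. The example below computes, for each frame of a full orbit around a model at the origin, the positions of a left and a right camera separated by an interaxial distance, with both aimed at the model: roughly the kind of motion, rotation, and tracking data such scripting involves. It is a minimal sketch under stated assumptions (world up is +Z, scene units are arbitrary, and `orbit_keyframes` and its parameters are hypothetical). In Blender itself these values would be keyframed through its Python API or handled by a tracking constraint; this sketch deliberately does not call Blender.

```python
import math

Vec3 = tuple[float, float, float]

def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0:
        raise ValueError("zero-length vector (view direction vertical?)")
    return (v[0] / n, v[1] / n, v[2] / n)

def orbit_position(radius: float, azimuth_deg: float, elevation_deg: float) -> Vec3:
    """Camera position on a sphere centred on the eye model at the origin."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (radius * math.cos(el) * math.cos(az),
            radius * math.cos(el) * math.sin(az),
            radius * math.sin(el))

def stereo_pair(center: Vec3, target: Vec3, interaxial: float) -> tuple[Vec3, Vec3]:
    """Left/right camera positions offset by half the interaxial distance
    along the camera's right vector; both keep tracking `target`.
    Assumes world up is +Z, so the view direction must not be vertical."""
    fwd = _normalize((target[0] - center[0], target[1] - center[1],
                      target[2] - center[2]))
    # right = fwd x up, with up = (0, 0, 1)
    right = _normalize((fwd[1], -fwd[0], 0.0))
    h = interaxial / 2.0
    left_cam = tuple(c - h * r for c, r in zip(center, right))
    right_cam = tuple(c + h * r for c, r in zip(center, right))
    return left_cam, right_cam

def orbit_keyframes(radius: float, n_frames: int, interaxial: float,
                    elevation_deg: float = 0.0) -> list[tuple[int, Vec3, Vec3]]:
    """One entry per frame of a full orbit: (frame, left_pos, right_pos)."""
    frames = []
    for f in range(n_frames):
        c = orbit_position(radius, 360.0 * f / n_frames, elevation_deg)
        left_cam, right_cam = stereo_pair(c, (0.0, 0.0, 0.0), interaxial)
        frames.append((f, left_cam, right_cam))
    return frames
```

Keeping both cameras aimed at the same target (a "toed-in" rig) is the simplest choice to show; parallel cameras with shifted frustums are a common alternative when convergence distortion matters.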

The resulting sequence is then rendered for twinned right- and left-eye views. Finally, the twinned views are synchronized and combined in a format compatible with the stereo projection apparatus.
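The synchronize-and-combine step can likewise be sketched. The source does not name the stereo format, so the sketch below assumes side-by-side packing (left view in the left half of each output frame, right view in the right half), one common stereo layout. Frames are represented as nested lists of pixel values so the example runs without an imaging library; the function name is hypothetical.

```python
Frame = list[list[int]]  # rows of grey-level pixel values

def combine_side_by_side(left_frames: list[Frame],
                         right_frames: list[Frame]) -> list[Frame]:
    """Pair the two rendered sequences frame by frame and pack each pair
    into one side-by-side stereo frame (left view on the left)."""
    if len(left_frames) != len(right_frames):
        raise ValueError("left and right sequences differ in length; "
                         "they are not synchronized")
    combined: list[Frame] = []
    for i, (left, right) in enumerate(zip(left_frames, right_frames)):
        if len(left) != len(right):
            raise ValueError(f"frame {i}: left and right views differ in height")
        # Concatenate each left row with the matching right row.
        combined.append([lrow + rrow for lrow, rrow in zip(left, right)])
    return combined
```

Checking frame counts and heights before packing is what keeps the two eyes synchronized: a single dropped frame in one sequence would otherwise shift every later pair.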

The model-building process and the various simulations are described in more detail on our website (http://www.abdn.ac.uk/~com168/ophth/development_process.shtml), which further illustrates the potential of our models.

Results and discussion

The eye models are currently used to teach ocular ultrasound and have been configured to simulate ultrasound probe positions and lesion localization (see ).

These models will help students develop spatial awareness and carry it into clinical practice. They will also help students grasp the nomenclature used in ocular ultrasound, localize lesions, and obtain the best possible images for echographic diagnosis, accurate measurement, and reporting.

Building on our experience of eye modeling in Blender, we have developed a further model. This model is interactive and can be manipulated in any direction and plane using a graphics interface pad.

This model has considerable teaching potential because both trainer and trainee can interact with it. It will be used to teach not only ultrasound but also ophthalmic anatomy and surgical and laser procedures.
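Interactive manipulation of this kind usually comes down to composing rotations. The sketch below assumes, hypothetically, that the interface pad reports incremental yaw, pitch, and roll in degrees; each increment is converted to a Z-Y-X rotation matrix and pre-multiplied onto the model’s current orientation. The class and method names are illustrative only and do not describe the authors’ implementation.

```python
import math

Mat3 = list[list[float]]
Vec3 = tuple[float, float, float]

def rotation_zyx(yaw_deg: float, pitch_deg: float, roll_deg: float) -> Mat3:
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in degrees."""
    y, p, r = (math.radians(a) for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotate(m: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))

class InteractiveModel:
    """Accumulates incremental pad input into the model's orientation."""

    def __init__(self) -> None:
        self.orientation: Mat3 = rotation_zyx(0.0, 0.0, 0.0)  # identity

    def on_pad_input(self, d_yaw: float, d_pitch: float, d_roll: float) -> None:
        # Pre-multiply so each increment is applied about the fixed world axes.
        delta = rotation_zyx(d_yaw, d_pitch, d_roll)
        self.orientation = matmul(delta, self.orientation)

    def transform(self, vertex: Vec3) -> Vec3:
        """Rotate one model vertex by the accumulated orientation."""
        return rotate(self.orientation, vertex)
```

Pre-multiplying applies each increment about fixed world axes; post-multiplying instead would rotate about the model’s own, already rotated, axes, which may feel more natural for some handheld controls.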

Future development

To date, a virtual eye model has been developed (see ) and used with students of ophthalmology to test its potential for enhancing learning, as detailed .

Table 1 Pedagogical applications

Possible hardware integration

We are currently specifying and commissioning a prototype of the hardware and drivers for peripheral devices that control a range of open-source software, such as OpenSceneGraph (OSG) and Blender, thereby allowing real-time manipulation of the virtual eye models in pedagogical scenarios.

Items include:

- Develop the prototype peripheral hardware and electronics:
  - a globe peripheral and drivers broadcasting the real-time orientation (pitch, yaw, and tilt) of the globe;
  - a probe peripheral and drivers broadcasting the proximity and orientation of the probe relative to the globe, in real time;
  - WiFi communication with the host computer and the OSG tracking module.
- Interactively develop and field test the integration of both drivers with OSG. This involves writing an OSG manipulator module that compiles on Windows, Macintosh, and Linux.
- Develop and field test software modules that use the generated OSG manipulator data on the existing eye model, thereby manipulating and displaying:
  - the real-time orientation of the globe, applied to the virtual eye model;
  - the real-time orientation of the probe relative to the globe, applied to the virtual probe and, ultimately, generating synthesized scanner output (see the visual synthesizer module below).
- Develop and field test associated ophthalmology teaching and learning modules that use these tools, including refinement of the existing eye model’s appearance.
- Develop a visual synthesizer module for OSG that generates an inset panel within the 3D environment, displaying simulated, real-time ultrasonic scanner output that depends on the probe’s position relative to the globe.
- Extend the application’s display capabilities to 3D spectacles and thus to fully immersive 3D augmented reality experiences, for example:
  - within a simulated microscope apparatus;
  - a multiuser server for small-group anatomy tutorials using 3D spectacles, which needs to track each user’s head position relative to the assumed location of the virtual model.
- Evaluate a monoscreen 3D display for out-of-hours and library access.
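As an illustration of the peripheral data flow listed above, the sketch below encodes and decodes orientation messages from the globe and probe peripherals and computes the probe’s orientation relative to the globe, the quantity on which the virtual probe and the synthesized scanner output would depend. The packet layout, device ids, the treatment of tilt as roll, and all function names are assumptions made for this example; the source specifies none of them. Transport is left out: each payload could, for instance, be sent as a UDP datagram with Python’s `socket.sendto`.

```python
import math
import struct

Mat3 = list[list[float]]

# Hypothetical wire format (an assumption, not from the source):
# little-endian uint8 device id, then float32 pitch, yaw, tilt (degrees)
# and proximity (mm; meaningful for the probe only).
PACKET = struct.Struct("<B4f")
GLOBE, PROBE = 1, 2

def encode(device: int, pitch: float, yaw: float, tilt: float,
           proximity: float = 0.0) -> bytes:
    """Build one message payload for a peripheral reading."""
    return PACKET.pack(device, pitch, yaw, tilt, proximity)

def decode(data: bytes) -> dict:
    """Unpack a payload produced by encode()."""
    device, pitch, yaw, tilt, proximity = PACKET.unpack(data)
    return {"device": device, "pitch": pitch, "yaw": yaw,
            "tilt": tilt, "proximity": proximity}

def rotation(yaw_deg: float, pitch_deg: float, tilt_deg: float) -> Mat3:
    """R = Rz(yaw) @ Ry(pitch) @ Rx(tilt); tilt is treated as roll."""
    y, p, r = (math.radians(a) for a in (yaw_deg, pitch_deg, tilt_deg))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def transpose(m: Mat3) -> Mat3:
    return [list(row) for row in zip(*m)]

def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def probe_relative_to_globe(globe: dict, probe: dict) -> Mat3:
    """Probe orientation in the globe's frame: R_globe^T @ R_probe.

    A rotation matrix's transpose is its inverse, so this removes the
    globe's own rotation from the probe's world orientation."""
    rg = rotation(globe["yaw"], globe["pitch"], globe["tilt"])
    rp = rotation(probe["yaw"], probe["pitch"], probe["tilt"])
    return matmul(transpose(rg), rp)
```

Because the relative orientation is computed as R_globe^T R_probe, turning the globe and the probe together by the same amount leaves the simulated scan unchanged, as it should.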

Conclusion

There is considerable potential for teaching and learning with these interactive eye models. Further refinement of the models and appropriate hardware integration will allow us to develop this tool for teaching ocular ultrasound, as well as surgical and laser procedures, without putting patients at risk.

It is important for us to develop this technology further for the benefit of the ophthalmological profession and fraternity.

Disclosure

The authors disclose no conflicts of interest.

References

- Atta HR. Ophthalmic Ultrasound: A Practical Guide. London, UK: Butterworth-Heinemann; 1996.

- Lee D. Virtual reality simulation of the ophthalmoscopic examination [MSc thesis]. University of California, Los Angeles; 1997.

- Pimentel K, Teixeira K. Virtual Reality: Through the Looking Glass. New York: McGraw-Hill; 1993.

- Rhodes ML. Computer graphics and medicine: a complex partnership. IEEE Comput Graph Appl. 1997;17(1):22–28.

- Hunter IW, Jones LA, Sagar MA, Lafontaine SR, Hunter PJ. Ophthalmic microsurgical robot and associated virtual environment. Comput Biol Med. 1995;25(2):173–182. PMID: 7554835.

- Mostafawy S, Kermani O, Lubatschowski H. Virtual eye: retinal image visualisation of the human eye. IEEE Comput Graph Appl. 1997;17(1):8–12.