Abstract

Background

Previous studies of musical emotion relied largely on the lexical approach, which suffers from overlap between emotion categories.

Methods

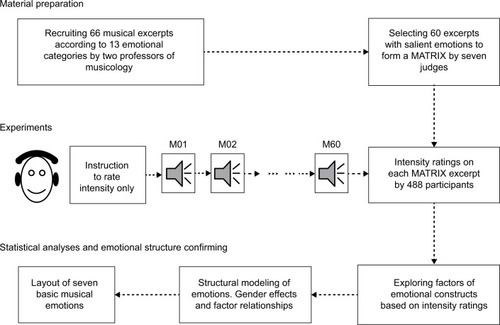

In the present study, we explored emotional domains through a dimensional approach based on intensity ratings of the emotion perceived in music. Altogether, 488 university students were invited to listen to 60 musical excerpts (most of them classical) and to rate the intensity of the emotion they perceived without naming it. We then conducted exploratory factor analysis on the intensity ratings to look for the latent structure of musical emotion, and applied confirmatory factor analysis to verify the validity of the proposed model of emotional structure.

Results

After first- and second-order factor analyses, seven emotional factors (domains, with 38 musical excerpts) were identified: Happiness, Tenderness, Sadness, Passion, Anger, Anxiousness, and Depression, which formed a satisfactory model. No gender difference was found regarding the perceived intensity of musical emotion.

Conclusion

Our study offers evidence that basic musical emotions can be delineated into seven domains.

Introduction

Music plays an important role in everyday life, for example in commercial settings to stimulate consumer behaviorCitation1 and in leisure activities to regulate individual emotion and mood.Citation2 It is also used as a form of psychotherapy for painCitation3 and other emotional problems.Citation4 However, when a putative emotion selected from a musical source is processed or recognized, we cannot be sure that the emotion is specifically elicited by its putative vector, nor that it is specifically recognized from that vector. The effectiveness of music is therefore less than ideal: the desired emotional music is applied imprecisely because emotions are only roughly classified. The question of how many emotions people can perceive from music is still under debate, despite plentiful research on music and emotion. Many studies of musical emotion were not theoretically driven or not comprehensive;Citation5,Citation6 for example, researchers tended to select only a few emotions of interest (mostly happiness, sadness, and anger) for their designs. Given that music influences various fields including scientific research, education, and therapy, it is of theoretical and practical significance to acquire a comprehensive picture of which kinds of emotion are effectively conveyed by music.

Several models have been proposed to depict musical emotions: the circumplex model,Citation7 the positive activation–negative activation model,Citation8,Citation9 and the vector model.Citation10 These models are mostly based on planar quadrant distributions of the arousal and valence of the emotion expressed in music, but each has its own characteristics. For instance, the circumplex model proposes that emotions are distributed in a circular pattern centered on medium arousal and neutral valence. The vector model posits an underlying dimension of arousal and a binary choice of valence that determines direction, which results in two vectors. Both vectors start at zero arousal and neutral valence and proceed as straight lines, one in the positive and the other in the negative valence direction. Both the vector model and the positive activation–negative activation model include neutral valence and high arousal ratings, which result from averaging individual positive and negative valence ratings; they therefore provide better overall fits of emotional classification than the circumplex model does.Citation9,Citation11 However, the two dimensions are not ideally independent, since higher arousal states are more likely to be defined by valence and lower arousal states are more likely to be neutral.Citation11 Moreover, in a human neurophysiological study, the magnitudes of event-related skin conductance responses varied across musical emotions but did not parallel subjects’ ratings of their arousal levels.Citation12 A multidimensional method based on factor analysis might therefore be a better way to classify musical emotions.

In parallel, regarding the types of emotion conveyed by music, most previous approaches to delineating musical emotions were taxonomical (lexical), that is, they characterized these emotions with terms. For instance, Hevner developed a celebrated, music-specific emotion model comprising eight emotional clusters (ie, Sober, Gloomy, Longing, Lyrical, Sprightly, Joyous, Restless, and Robust), each described with six to eleven emotion-colored adjectives.Citation13 The eight clusters were arranged in a circle, so that adjacent clusters had certain features in common, while clusters in opposite locations differed most from each other. This model was later refined and rearranged into 9–13 clusters and then sorted into six subgroups.Citation14,Citation15 Furthermore, a study updating the Hevner adjectives elaborated nine emotional clusters.Citation16 This outcome was partly supported by Juslin and Laukka, who studied everyday listening and found nine clusters of emotion, labeled joy, sadness, love, calm, anger, tenderness, longing, solemnity, and anxiety.Citation17 More recently, Zentner et al compiled a comprehensive list of emotion-colored adjectives and asked participants to rate how frequently they perceived the given emotions in their preferred music and in their everyday life.Citation18 They performed exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) on the ratings and developed the Geneva Emotional Music Scale, which consists of nine factors: wonder, transcendence, tenderness, nostalgia, peacefulness, power, joyful activation, tension, and sadness.

Through the lexical approach, some musical emotions, such as happiness, anger, sadness, tenderness, and anxiety, have been consistently recognized in children, in individuals of different cultures, and in brain-damaged or neuropsychiatric patients.Citation19 However, these studies suffered from several shortcomings. Firstly, they relied on the semantic sorting of emotion-colored adjectives, which are suited to describing rather than classifying musical emotion. Such classification is also influenced by cultural information embedded in language; for languages without terms describing emotion,Citation20 the method would fail to generate a structure of musical emotion. Secondly, the classification clusters were subjective, so the derived structure of musical emotion would lack structural validity. For instance, nouns with similar meanings, such as sorrow, sadness, and grief, were cautiously classified into the same emotional family;Citation21 such groupings might fail to capture nuanced emotional categories. Zentner et alCitation18 did apply EFA to the emotional terms, but they focused more on music-induced emotions and reached unsatisfactory validities for the perceived emotions. Thirdly, in most studies the musical stimuli were not presented to participants, whose judgments therefore depended greatly on their memory and past musical experience.

The purpose of the present study was to delineate the emotions conveyed in music, using two approaches. First, we prepared a more comprehensive pool of musical excerpts (MATRIX, most of them classical) that conveyed 13 categories of dominant musical emotion, as proposed in a previous lexical study.Citation15 Second, we invited participants to rate only the intensity of the emotion they perceived (instead of naming that emotion) when listening to each of the MATRIX excerpts. The intensity ratings were measured with a Likert scale, which is frequently used in psychological assessment and has produced multidimensional structures of personality and other psychological measures.Citation22,Citation23 We then constructed and validated the basic structure of musical emotion by conducting both EFA and CFA on the intensity ratings. Investigators have used this approach to delineate the structures of facial expressions of emotionCitation24 and picture vectors of emotion.Citation25 In the current study, we hypothesized that musical emotion includes at least happiness, tenderness, sadness, anger, and anxiousness, and that these emotions have discriminant and convergent validity, since previous reports have indicated that they are the basic emotions related to music.Citation17,Citation26 Moreover, we tested for a gender difference, since females are generally reported to be more sensitive to negative emotion than males.Citation27

Materials and methods

Participants

Altogether, 488 university students who were not majoring in musicology or related specialties and had no previous musical training participated in the study: 214 males (mean age, 20.13 years with SD 1.51; age range, 16–28 years) and 274 females (mean age, 19.86 years with SD 1.41; age range, 17–28 years). All participants had normal hearing, had no neurological or psychiatric disorders, and had been free from drugs and alcohol for 72 hours prior to the test. The study protocol was approved by the Ethics Committee of Zhejiang University College of Medicine (No. ZGL201606-1-2), and all participants gave written informed consent (for the young adolescents, consent was signed by their guardians).

Likert scale

A Likert scale was used to collect each participant’s intensity ratings of the perceived musical emotion. In the current study, we defined the scale with nine grades (from 0 to 8); the larger the number, the stronger the emotion a participant perceived. Zero indicates that the intensity of the perceived emotion is very low or approaches a peaceful state, 1 indicates weak intensity, 4 indicates moderate intensity, and 8 indicates the strongest intensity.

Musical excerpts and procedure

To construct a musical database, we set up a list of emotional labels containing as many names of emotion as possible, which included 13 musical emotions (ie, sad, fanciful, frustrated, agitated, longing, dreamy, passionate, mysterious, scared, dramatic, delicate, bluesy, and happy) as proposed in a lexical study.Citation15 Bearing these emotions in mind, two experienced professors of musicology (also our coauthors: one PhD holder, TD; and one master’s holder, YY) chose 66 excerpts of nonvocal music, most of which were classical.

Then, the preliminary 66 musical excerpts were presented to seven judges: the two above-mentioned musicology professors and five other coauthors (one PhD holder in musicology, LS; one PhD holder in psychiatry, WW; one MD candidate, CS; one master’s candidate in psychiatry, CW; and one master’s candidate in psychology, MW). The judges were asked to listen to the musical excerpts and to decide whether they conveyed significantly salient emotions. Each excerpt was voted on by the seven judges independently. If an excerpt received more than four “no” votes, it was dismissed. If an excerpt received three “yes” and three “no” votes, the seventh judge (WW) made the final decision. Finally, 60 musical excerpts were retained to form the MATRIX. Table 1 presents the information about the MATRIX musical excerpts, and the related musical scores (texts) are available from the authors upon request. The mean duration of these excerpts was 30.72 seconds (SD 9.51; range, 16 to 62 seconds); durations differed because excerpts needed different lengths to convey a salient emotion.

Table 1 Information of the 60 musical excerpts: their original sources, start–stop bars, durations (seconds), playing instruments, composers, putative emotions, and acoustic features

Figure 1 illustrates the general procedure of the experiment. Participants were led to a quiet room to rest for 5 minutes. It was then explained to them that the present study was designed to delineate musical emotions. They were asked to listen to the 60 musical excerpts one by one, with an interval of 6 seconds in between, as designed in previous studies.Citation6 Without warning of start or end, each excerpt was presented only once through headphones, played by the MUSIC Jukebox computer software. The presentation order of the excerpts was randomized, with no more than three successive excerpts belonging to the same putative emotion. During the experiment, participants sat comfortably on a chair, and the volume of the music was adjusted to a comfortable level. They were allowed to pause the presentation when needed. After listening to each excerpt, they were asked to choose a number corresponding to the emotional intensity they perceived, using the Likert scale described above. Participants might silently name the emotion they perceived when listening to an excerpt, but they were instructed to rate only the intensity of the emotion they perceived from that excerpt. The whole experiment lasted about 40 minutes.
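The ordering constraint in this procedure (a random order in which no more than three successive excerpts share a putative emotion) can be sketched with simple rejection sampling. This is an illustration only, not the procedure implemented in the presentation software: the function names, the fixed seed, and the placeholder emotion labels are assumptions, and the real excerpt-to-emotion assignment is the one listed in Table 1.

```python
import random
from typing import List, Optional, Sequence


def longest_run(labels: Sequence[str]) -> int:
    """Return the length of the longest run of identical consecutive labels."""
    longest = 0
    current = 0
    previous = None
    for position, label in enumerate(labels):
        if position > 0 and label == previous:
            current += 1
        else:
            current = 1
        previous = label
        longest = max(longest, current)
    return longest


def constrained_order(
    emotions: Sequence[str],
    max_run: int = 3,
    seed: Optional[int] = None,
    max_tries: int = 10_000,
) -> List[int]:
    """Shuffle excerpt indices until no emotion occurs more than max_run times in a row."""
    rng = random.Random(seed)
    order = list(range(len(emotions)))
    for _ in range(max_tries):
        rng.shuffle(order)
        if longest_run([emotions[i] for i in order]) <= max_run:
            return order
    raise RuntimeError("no valid order found; relax max_run or raise max_tries")


# Hypothetical labels: 60 excerpts spread over 13 placeholder emotions.
putative = [f"emotion_{k % 13}" for k in range(60)]
order = constrained_order(putative, max_run=3, seed=2024)
print(order[:10])
```

Rejection sampling is adequate here because, with 13 emotion categories, most random shuffles already satisfy the constraint; a constructive algorithm would only be needed if a few categories dominated the pool.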

Statistical and data analyses

First, the emotion intensity ratings of the 60 musical excerpts were subjected to a principal axis factor analysis using the Predictive Analytics Software Statistics, Release Version 20.0 (2013; SPSS Inc., Chicago, IL, USA). Factor loadings were rotated orthogonally using the varimax normalized method, and the factors (emotion clusters) and their items (musical excerpts) were determined. The number of factors to extract was determined from the scree plot and from parallel analysis.Citation28 The fit of the factor model proposed by EFA was then given a preliminary test by CFA, run as structural equation modeling in AMOSCitation29 on the same participant sample. We conducted both EFA and CFA on the same sample as a cross-validation procedure, as done previously.Citation30–Citation32
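For readers unfamiliar with parallel analysis, the idea can be sketched as follows: eigenvalues of the observed correlation matrix are compared with eigenvalues obtained from random data of the same size, and factors are retained as long as the observed eigenvalue exceeds the random benchmark. The sketch below is a generic NumPy version under stated assumptions, not the program cited in the study: it uses eigenvalues of the full correlation matrix, a 95th-percentile benchmark, and synthetic ratings with three built-in factors; all names and parameter values are hypothetical.

```python
import numpy as np


def parallel_analysis(data, n_iter=200, percentile=95.0, seed=0):
    """Horn-style parallel analysis on the eigenvalues of the correlation matrix.

    Returns the number of leading factors whose observed eigenvalue exceeds the
    chosen percentile of eigenvalues from random normal data of the same shape.
    """
    rng = np.random.default_rng(seed)
    n_obs, n_items = data.shape
    observed = np.sort(np.linalg.eigvalsh(np.corrcoef(data, rowvar=False)))[::-1]
    random_eigs = np.empty((n_iter, n_items))
    for i in range(n_iter):
        noise = rng.standard_normal((n_obs, n_items))
        eigs = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))
        random_eigs[i] = np.sort(eigs)[::-1]
    threshold = np.percentile(random_eigs, percentile, axis=0)
    exceeds = observed > threshold
    # Count leading factors only: stop at the first eigenvalue below its benchmark.
    n_factors = n_items if exceeds.all() else int(np.argmin(exceeds))
    return n_factors, observed, threshold


# Synthetic demonstration (not the study data): 300 raters, 12 items, 3 factors.
demo_rng = np.random.default_rng(1)
latent = demo_rng.standard_normal((300, 3))
loadings = np.zeros((12, 3))
for item in range(12):
    loadings[item, item // 4] = 0.8
ratings = latent @ loadings.T + 0.6 * demo_rng.standard_normal((300, 12))

n_factors, observed, threshold = parallel_analysis(ratings, n_iter=100)
print(n_factors)
```

Variants of the method differ in whether they use the full or the reduced correlation matrix and in the percentile chosen, so the number of factors suggested can vary slightly between implementations.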

The factors whose items conveyed significantly different emotions were subjected to a second round of principal axis factor analysis to obtain sub-factors (facets). Again, the facet loadings were rotated orthogonally via the varimax normalized method. The number of extracted facets was determined by the scree plot and eigenvalues. Items that loaded weakly (below 0.4) on the target facet or cross-loaded heavily (above 0.4) on another facet were removed. As for the first-order factors, the facet model was evaluated via CFA, and the goodness of fit of the final model was tested. Then, the internal reliability (Cronbach’s α) of each facet was analyzed. Finally, we applied a two-way ANOVA (Gender × Emotion) to test for differences in perceived emotional intensity between genders and between emotions. Where a significant effect was detected, a post hoc least significant difference test was conducted to locate the effect of gender or emotion. A P-value <0.05 was considered significant.
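The item-retention rule and the reliability coefficient described here can be illustrated with a short sketch. It assumes that an item's target facet is the facet on which it has its largest absolute loading (the text does not spell this out), and it uses the standard formula for Cronbach's α, k/(k − 1) × (1 − Σ item variances / variance of the total score). The function names and the toy numbers are hypothetical.

```python
import numpy as np


def filter_items(loadings, cutoff=0.40):
    """Flag items to keep under the loading criteria.

    An item is kept when its loading on its target facet is at least `cutoff`
    and none of its loadings on other facets exceeds `cutoff`.
    Assumption: the target facet is the one with the largest absolute loading.
    """
    abs_load = np.abs(np.asarray(loadings, dtype=float))
    rows = np.arange(abs_load.shape[0])
    target = abs_load.argmax(axis=1)
    target_load = abs_load[rows, target]
    others = abs_load.copy()
    others[rows, target] = 0.0          # ignore the target facet itself
    cross_load = others.max(axis=1)
    keep = (target_load >= cutoff) & (cross_load <= cutoff)
    return keep, target


def cronbach_alpha(scores):
    """Cronbach's alpha for a (participants x items) score matrix."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1).sum()
    total_variance = scores.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1.0 - item_variances / total_variance)


# Toy rotated loadings for four items on two facets (illustrative values only).
toy_loadings = [
    [0.72, 0.10],   # clean loading on facet 0: kept
    [0.35, 0.20],   # weak on its target facet: removed
    [0.55, 0.45],   # cross-loads above 0.40: removed
    [0.15, 0.66],   # clean loading on facet 1: kept
]
keep, target = filter_items(toy_loadings)
print(keep.tolist(), target.tolist())

# Three perfectly consistent items give alpha = 1.
toy_scores = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [5, 5, 5]]
print(round(cronbach_alpha(toy_scores), 3))
```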

Results

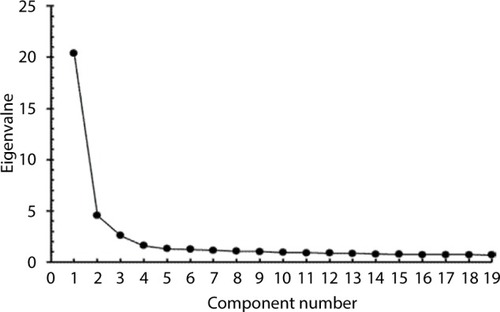

The principal axis factor analysis revealed nine eigenvalues higher than 1.0: 20.42, 4.55, 2.58, 1.62, 1.32, 1.26, 1.16, 1.06, and 1.03. The scree plot (Figure 2) indicated approximately four factors, as did the parallel analysis. The first three, four, five, and six factors accounted for 45.92%, 48.61%, 50.81%, and 52.91% of the total variance, respectively. Hence, we fitted three-, four-, five-, and six-factor models and evaluated their model fit parameters; the results indicated that the three-factor model was the most suitable (Table 2). The three factors contained 26, 17, and 17 musical excerpts, with Cronbach’s α values of 0.95, 0.92, and 0.91, respectively.
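The cumulative percentages follow directly from the eigenvalues if one assumes, as is standard for a correlation matrix, that the total variance equals the number of items (60). The short check below uses only the eigenvalues reported above; the function name is hypothetical.

```python
# Eigenvalues above 1.0 as reported in the text.
eigenvalues = [20.42, 4.55, 2.58, 1.62, 1.32, 1.26, 1.16, 1.06, 1.03]
n_items = 60  # total variance of a 60-item correlation matrix


def cumulative_variance_percent(eigs, total, n_factors):
    """Percentage of total variance explained by the first n_factors eigenvalues."""
    return 100.0 * sum(eigs[:n_factors]) / total


kaiser_count = sum(1 for e in eigenvalues if e > 1.0)
print("eigenvalues above 1.0:", kaiser_count)
for k in (3, 4, 5, 6):
    print(k, round(cumulative_variance_percent(eigenvalues, n_items, k), 2))
```

This gives 45.92%, 48.62%, 50.82%, and 52.92%; the differences of 0.01 from the reported values for four to six factors are presumably due to rounding of the printed eigenvalues.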

Figure 2 Scree plot from the exploratory factor analysis of the intensity ratings on 60 musical excerpts in 488 participants.

Table 2 Model fit parameters based on the first-order analyses in 488 participants

For factor 1, the intensity ratings of the 26 excerpts were analyzed again by principal axis factor analysis with varimax normalized rotation, for the second-order layout. Three facets emerged, with eigenvalues of 11.02, 1.37, and 1.09, respectively, accounting for 51.88% of the total variance. Under the loading criteria, three excerpts (M13, M22, and M34) were removed from the first facet and three (M18, M38, and M56) from the third. Of the remaining excerpts, two (M15 and M25) within the first facet and two (M06 and M36) within the second facet were deleted because they displayed emotions distinct from the others. Eventually, the first facet consisted of seven excerpts, mostly in minor mode and played by instruments such as piano, oboe, flute, and violin, with slow tempo, little variability of sound level, soft changes between long and short notes, legato melody with long phrases, and soft timbre; it was named Tenderness. The second facet, with five excerpts featuring moderate tempo, simple and consonant harmony, high pitch range, firm rhythm, and fast tone attack, was named Happiness. As in the first facet, the melodic instruments used in these excerpts were violin and piano. The third facet, with four excerpts featuring slow tempo, low note density, minor mode, little pitch variability, consonant harmony, descending melodic lines, and soft timbre, played by flute, violin, and cello, was named Sadness. The internal consistencies of these three facets were 0.85, 0.80, and 0.72, respectively (Table 3).

Table 3 The internal consistencies of seven music domains (second-order level) in all participants (n=488) and the intensity ratings (mean ± SD) of each domain in female (n=274) and male (n=214) participants

For factor 2, the second-order principal axis factor analysis revealed two facets with eigenvalues of 7.57 and 1.23, which accounted for 51.75% of the total variance. Under the loading criteria, two excerpts (M12 and M47) and one excerpt (M26) were removed from the fourth and fifth facets, respectively. Moreover, one excerpt (M07) was excluded from the fifth facet because it expressed an emotion distinct from the others. Finally, the fourth facet, including nine musical excerpts characterized by more tonal modulations, faster tempo, higher note density, more dissonant and complex harmony, faster tone attacks, and stronger intensity fluctuations, was named Anger. The fifth facet, with four excerpts featuring faster tempo, more staccato articulations, higher note density, and stronger intensity fluctuations, was named Passion. In most excerpts conveying these two emotions, percussion instruments were used frequently to establish an intense atmosphere. The internal consistency was 0.87 for Anger and 0.80 for Passion (Table 3).

For factor 3, two facets with eigenvalues of 7.05 and 1.05 emerged from the second-order principal axis factor analysis, accounting for 47.65% of the total variance. Two items (M09 and M49) of the sixth facet and three items (M05, M55, and M51) of the seventh facet were removed because of cross-loadings >0.40. One item (M53) and two others (M19 and M40) were removed from the sixth and seventh facets, respectively, because their emotions were less congruent with the others. The sixth facet, with six items, was named Depression; its excerpts had slow tempo, low note density, small intensity fluctuations, falling melodic lines, and minor mode. Most were played on instruments with soft timbre, such as violin, cello, piano, and French horn, which were grouped together in the orchestration. The seventh facet, with three items exhibiting more tonal modulations, faster tempo, higher note density, stronger intensity fluctuations, and wider pitch range, was named Anxiousness. All of its excerpts were played on piano. The internal consistencies were 0.81 and 0.69 for Depression and Anxiousness, respectively (Table 3).
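The excerpt counts reported for the three factors can be cross-checked with a few lines of bookkeeping. All numbers and excerpt codes below are taken from the text; only the variable names are introduced for illustration.

```python
# Excerpts per first-order factor, as reported in the Results.
factor_sizes = {1: 26, 2: 17, 3: 17}

# Excerpts removed within each factor (loading criteria or incongruent emotion).
removed = {
    1: ["M13", "M22", "M34", "M18", "M38", "M56", "M15", "M25", "M06", "M36"],
    2: ["M12", "M47", "M26", "M07"],
    3: ["M09", "M49", "M05", "M55", "M51", "M53", "M19", "M40"],
}

# Final facet sizes and the factor each facet came from.
facets = {
    "Tenderness": (1, 7), "Happiness": (1, 5), "Sadness": (1, 4),
    "Anger": (2, 9), "Passion": (2, 4),
    "Depression": (3, 6), "Anxiousness": (3, 3),
}

retained_by_factor = {f: factor_sizes[f] - len(removed[f]) for f in factor_sizes}
facet_totals = {f: 0 for f in factor_sizes}
for factor, size in facets.values():
    facet_totals[factor] += size

print(retained_by_factor)                 # excerpts left after removals
print(facet_totals)                       # excerpts summed over facets
print(sum(retained_by_factor.values()))   # total retained excerpts
```

Both tallies agree (16, 13, and 9 excerpts for factors 1 to 3), giving the 38 excerpts retained in the final seven-facet model.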

The remaining 38 musical excerpts under the seven facets formed a model with satisfactory construct validity, as indicated by a χ2/df of 2.48, goodness of fit index of 0.84, adjusted goodness of fit index of 0.82, Tucker–Lewis index of 0.87, comparative fit index of 0.88, root mean square error of approximation of 0.055, and standardized root mean square residual of 0.062. There was no gender effect on intensity ratings (Gender effect: F (1, 487)=1.16, P=0.28, mean square effect [MSE]=7.54; Emotion × Gender interaction: F (6, 2,922)=0.26, P=0.96, MSE =0.17), although there was an effect of emotion on intensity ratings (Emotion effect: F (6, 2,922)=293.32, P<0.001, MSE =195.99), as also shown in Table 3. Pairwise comparisons showed that the intensities of any two emotions differed significantly, except for Sadness and Happiness, and for Tenderness and Depression. The intercorrelations between any two emotions (ranging from 0.44 to 0.75) were significant (Table 4).
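The reported root mean square error of approximation is consistent with the reported χ2/df ratio and sample size. A common definition is RMSEA = sqrt(max(χ2/df − 1, 0) / (N − 1)); some software divides by N instead of N − 1, so the choice of denominator here is an assumption, as is the function name.

```python
import math


def rmsea_from_ratio(chi2_over_df: float, n: int) -> float:
    """RMSEA computed from the chi-square/df ratio and the sample size.

    Uses the N - 1 convention; the numerator is floored at zero so that
    models with chi-square below df give an RMSEA of 0.
    """
    return math.sqrt(max(chi2_over_df - 1.0, 0.0) / (n - 1))


rmsea = rmsea_from_ratio(2.48, 488)   # values reported in the text
print(round(rmsea, 3))
```

With the reported χ2/df of 2.48 and 488 participants, this yields about 0.055, in agreement with the value given above.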

Table 4 Inter-relationships between seven musical emotions in 488 participants

Discussion

Applying both EFA and CFA to the intensity ratings of musical excerpts, we found seven emotions, namely Tenderness, Happiness, Sadness, Anger, Passion, Depression, and Anxiousness, which partly supported our hypothesis. The model fit parameters were satisfactory, indicating that the seven-emotion model had good construct validity; the internal α of each emotional domain was also satisfactory, indicating good internal consistency. Contrary to our expectation, we did not find any gender difference in the intensity ratings of the musical emotions perceived by participants.

Five of the emotions found in our study, namely Happiness, Sadness, Tenderness, Anger, and Anxiousness, are well acknowledged as basic ones in the music field.Citation19,Citation33,Citation34 They were always among the top ten answers when individuals were asked which emotions might be expressed by music,Citation17,Citation35 and they could be communicated with a high accuracy comparable to that of facial and vocal emotions.Citation21,Citation34 Our results are in line with findings that these emotions were easily recognized by various populations with high accuracy.Citation19 Happiness and Sadness were the most identifiable emotions in music,Citation34 as they were relatively easy to express owing to their fairly consistent but contrasting features of mode and tempo.Citation36 Tenderness was not simply a general positive emotion,Citation37 but was evident in both music performance and vocal expression, and it was highly recognized in previous studies.Citation21 Both Anger and Anxiousness had negative valence and high arousal, but they differed in various aspects.Citation21,Citation34,Citation38 For instance, Anxiousness was often categorized into the “fear family” and was oriented to the future, whereas Anger was oriented to the present.Citation21 Both fear and anger are associated with acoustic features such as fast tempo, increased sound level variability, and high pitch. However, anger is conveyed through higher sound level, higher-frequency energy, and more pitch variability than fear.Citation34 Furthermore, these two musical emotions function differently: exposure to angry stimuli could induce the experience of anger and thus modulate individuals’ own emotion, whereas anxious listeners hardly immersed themselves in anxiety.Citation38,Citation39

In our study, the Passion facet first emerged together with Anger, possibly because most excerpts conveying these two emotions were played not only with rhythmic instruments but also with colorful instruments such as percussion. This is similar to Hevner’s adjective circle, in which agitated and passionate were in the same cluster.Citation13 That affective cluster was characterized by fast tempo, low pitch, firm rhythm, complex harmony, and descending melody.Citation40 However, we found that our passionate excerpts featured uniform modality and consonant harmony, while the Anger excerpts showed more modal interchanges and dissonant harmony. Therefore, Passion was presented as an independent emotion; it was characterized as one of five emotions in a previous report,Citation41 and passion and anger have been reported to be among the best labels for describing musical emotion.Citation42 In addition, in music information retrieval and musical emotion recognition research, passion has emerged from sound and music features such as timbral texture, rhythmic content, and pitch content through various algorithms.Citation15,Citation42,Citation43

In the current study, we found that Depression and Sadness were independent emotions rather than both belonging to one “sad family”. In Hevner’s adjective circle, sadness and depression, together with other sad-colored adjectives, were classified into one domain reflecting sadness-loaded emotion,Citation13 which was confirmed in other similar lexical studies.Citation14,Citation16,Citation18,Citation44 However, while participants recognize a broad emotion family with high agreement, they might also perceive nuances within that family, though with low agreement.Citation26 For instance, Brown found that agreement in sorting music into six broad emotions was high, while agreement in sorting nuances or variants of these emotions was low.Citation45 Indeed, sadness-like emotion has many variants, such as passiveness and grief,Citation33 and people can perceive various sadness-like emotions when listening to sad music.Citation44,Citation46 In our study, based only on the perceived intensity and irrespective of semantic considerations of emotion, we separated the two classes of sad-like music. Our results are supported by the clinical consideration that depression is a severe form, or a medicalized label, of sadness.Citation47 Moreover, it has been consistently found that sad music induces negative, positive, and mixed emotions,Citation44,Citation48,Citation49 and that music-evoked sadness is composed of grief, melancholia, and sweet sorrow.Citation50 Although many factors such as personality, memorable experience, preference, and musical expectancy might account for such intriguing results,Citation51 one possible interpretation is that researchers did not distinguish the subtypes of sadness-like music, some of which also convey positive emotions such as romance and blitheness beyond sadness.Citation44

Intercorrelations between emotions were at medium to high levels in our study. On one hand, this might be due to the intensity rating method, since previous results showed that individuals’ background had no effect on emotion ratings.Citation52 On the other hand, musical emotions are often experienced in a blended manner rather than fully separated.Citation18 These intercorrelations thus reflect the complexity of emotion perception in a musical context; for example, a piece of music could express both joy and surprise.Citation52 Moreover, mixed emotions expressed in music might produce nuanced feelings, leading to low agreement on them among individuals.Citation53

The present study has several design limitations. Firstly, most musical excerpts were chosen from the classical genre, and the number of excerpts for each emotion was small. Different genres might convey different musical emotions, so further studies might be extended to various genres of music. Moreover, our coauthors acted as judges to filter the musical excerpts, which might be relatively subjective. A more neutral group of specialists, rather than coauthors, might provide stronger quality control of the MATRIX. Secondly, our participants were young adults without musical training, which might have contributed to the nonsignificant gender difference, as suggested by previous results.Citation54 Whether our results can be extended to the general or a music-specialized population needs to be confirmed. Thirdly, we focused on intensity only, and whether other methods such as emotion labeling (through a categorical or nominal approach) would yield different results remains to be determined. Meanwhile, in order to provide as many musical excerpts with salient emotion as possible, we did not strictly control the instruments used, the duration, or the volume of each musical excerpt, which might have affected the intensity ratings of our participants. Future research might use artificial musical pieces or standardize the musical structure and duration to control the perception of musical emotion. Fourthly, many cross-cultural studies have shown that Westerners prefer emotions of higher arousal than Easterners do.Citation55 Our participants were all Chinese; therefore, whether there was a cultural influence on their intensity ratings remains to be seen.

Conclusion

Our study was free from the influence of lexicons and musicological background, and might thus provide a new way to delineate basic musical emotions. Through EFA and CFA on intensity ratings alone, we delineated seven musical emotions: Tenderness, Happiness, Sadness, Anger, Passion, Depression, and Anxiousness.

Ethics statement

The research conformed to the Helsinki Declaration concerning human rights and informed consent, and followed correct procedures concerning treatment of humans in research.

Author contributions

WW conceived the study and participated in the design and coordination of the study. CS, MW, LS, and CW conducted the tests. All authors contributed to data analysis, drafting and revising the article, gave final approval of the version to be published, and agree to be accountable for all aspects of the work.

Acknowledgments

The authors would like to thank Shanhui Shen, Yaoxi Chen, Hongying Fan, Jiawei Wang, Guorong Ma, Qisha Zhu, Bingren Zhang, and Xu Shao for their assistance during the experiment.

Disclosure

The study was supported by a grant from the Natural Science Foundation of China (No. 81771475) to the corresponding author Dr Wei Wang. Dr Javier Cabanyes-Truffino is currently working in the Faculty of Psychology, University of Navarre, Pamplona, Spain. The authors report no other conflicts of interest in this work.

References

- Jain R, Bagdare S. Music and consumption experience: a review. Int J Retail Distrib Manag. 2011;39(4):289–302.

- Saarikallio S, Nieminen S, Brattico E. Affective reactions to musical stimuli reflect emotional use of music in everyday life. Music Sci. 2013;17(1):27–39.

- Finlay KA, Anil K. Passing the time when in pain: investigating the role of musical valence. Psychomusicology. 2016;26(1):56–66.

- Erkkilä J, Gold C, Fachner J, Ala-Ruona E, Punkanen M, Vanhala M. The effect of improvisational music therapy on the treatment of depression: protocol for a randomised controlled trial. BMC Psychiatry. 2008;8(1):50. PMID: 18588701.

- Peretz I. Happy, sad, scary and peaceful musical excerpts for research on emotions. Cogn Emot. 2008;22(4):720–752.

- Song Y, Dixon S, Pearce MT, Halpern AR. Perceived and induced emotion responses to popular music: categorical and dimensional models. Music Percept. 2016;33(4):472–492.

- Russell JA. A circumplex model of affect. J Pers Soc Psychol. 1980;39(6):1161–1178.

- Watson D, Tellegen A. Toward a consensual structure of mood. Psychol Bull. 1985;98(2):219–235. PMID: 3901060.

- Watson D, Wiese D, Vaidya J, Tellegen A. The two general activation systems of affect: structural findings, evolutionary considerations, and psychobiological evidence. J Pers Soc Psychol. 1999;76(5):820–838.

- Bradley MM, Greenwald MK, Petry MC, Lang PJ. Remembering pictures: pleasure and arousal in memory. J Exp Psychol Learn Mem Cogn. 1992;18(2):379–390. PMID: 1532823.

- Rubin DC, Talarico JM. A comparison of dimensional models of emotion: evidence from emotions, prototypical events, autobiographical memories, and words. Memory. 2009;17(8):802–808. PMID: 19691001.

- Khalfa S, Isabelle P, Jean-Pierre B, Manon R. Event-related skin conductance responses to musical emotions in humans. Neurosci Lett. 2002;328(2):145–149. PMID: 12133576.

- Hevner K. Experimental studies of the elements of expression in music. Am J Psychol. 1936;48(2):246–268.

- Farnsworth PR. A study of the Hevner adjective list. J Aesthet Art Critic. 1954;13(1):97–103.

- Li T, Ogihara M. Detecting emotion in music. Paper presented at: International Symposium/Conference on Music Information Retrieval; October 27–30, 2003; Baltimore, MD, USA.

- Schubert E. Update of the Hevner adjective checklist. Percept Mot Skills. 2003;96(3 Pt 2):1117–1122. PMID: 12929763.

- Juslin PN, Laukka P. Expression, perception, and induction of musical emotions: a review and a questionnaire study of everyday listening. J New Music Res. 2004;33(3):217–238.

- Zentner M, Grandjean D, Scherer KR. Emotions evoked by the sound of music: characterization, classification, and measurement. Emotion. 2008;8(4):494–521. PMID: 18729581.

- Eerola T, Vuoskoski JK. A review of music and emotion studies: approaches, emotion models, and stimuli. Music Percept. 2013;30(3):307–340.

- Hupka RB, Lenton AP, Hutchison KA. Universal development of emotion categories in natural language. J Pers Soc Psychol. 1999;77(2):247–278.

- Juslin PN, Laukka P. Communication of emotions in vocal expression and music performance: different channels, same code? Psychol Bull. 2003;129(5):770–814. PMID: 12956543.

- Rosellini AJ, Brown TA. The NEO Five-Factor Inventory: latent structure and relationships with dimensions of anxiety and depressive disorders in a large clinical sample. Assessment. 2011;18(1):27–38. PMID: 20881102.

- Clarke DM, Kissane DW, Trauer T, Smith GC. Demoralization, anhedonia and grief in patients with severe physical illness. World Psychiatry. 2005;4(2):96–105. PMID: 16633525.

- Huang J, Fan J, He W. Could intensity ratings of Matsumoto and Ekman’s JACFEE pictures delineate basic emotions? A principal component analysis in Chinese university students. Pers Individ Dif. 2009;46(3):331–335.

- Xu Z, Zhu R, Shen C. Selecting pure-emotion materials from the International Affective Picture System (IAPS) by Chinese university students: a study based on intensity-ratings only. Heliyon. 2017;3(8):e00389. PMID: 28920091.

- JuslinPNWhat does music express? Basic emotions and beyondFront Psychol20134259624046758

- BieleCGrabowskaASex differences in perception of emotion intensity in dynamic and static facial expressionsExp Brain Res200617111616628369

- O’ConnorBPSPSS and SAS programs for determining the number of components using parallel analysis and Velicer’s MAP testBehav Res Methods Instrum Comput200032339640211029811

- ArbuckleJAAMOS User’s Guide (V.3.6)Chicago, ILSmall Waters Corp1997

- LaiJSNowinskiCVictorsonDQuality-of-life measures in children with neurological conditions: pediatric Neuro-QOLNeurorehabil Neural Repair2012261364721788436

- ToyamaMYamadaYThe relationships among tourist novelty, familiarity, satisfaction, and destination loyalty: beyond the novelty-familiarity continuumInt J Mark Stud2012461018

- BarryTJHermansDLenaertBDebeerEGriffithJWThe eacs: attentional control in the presence of emotionPers Individ Dif2013557777782

- PlutchikRThe Psychology and Biology of Emotions2NewYork, NYHarper-Collins1994

- LindströmEJuslinPNBresinRWilliamonA“Expressivity comes from within your soul”: a questionnaire study of music students’ perspectives on expressivityRes Stud Music Educ20032012347

- MohnCArgstatterHWilkerF-WPerception of six basic emotions in musicPsychol Music2011394503517

- HunterPGSchellenbergEGMusic and emotionJonesMRFayRRPopperANMusic PerceptionNew YorkSpringer2010129164

- KalawskiJPIs tenderness a basic emotion?Motiv Emot2010342158167

- SharmanLDingleGAExtreme metal music and anger processingFront Hum Neurosci2015927226052277

- SchwartzGEWeinbergerDASingerJACardiovascular differentiation of happiness, sadness, anger, and fear following imagery and exercisePsychosom Med19814343433647280162

- HevnerKThe affective value of pitch and tempo in musicAm J Psychol1937494621630

- HuXDownieJSExploring mood metadata: relationships with genre, artist and usage metadataPaper presented at: International Symposium/Conference on Music Information RetrievalSeptember 23–27 2007Vienna, Austria

- SkowronekJMcKinneyMFde ParSVA demonstrator for automatic music mood estimationPaper presented at: International Symposium/Conference on Music Information RetrievalSeptember 23–27 2007Vienna, Austria

- HuXDownieJSLaurierCBayMEhmannAFThe 2007 MIREX audio mood classification task: lessons learnedPaper presented at: International Symposium/Conference on Music Information RetrievalSeptember 14–18 2008Philadelphia, PA, USA

- KawakamiAFurukawaKKatahiraKOkanoyaKSad music induces pleasant emotionFront Psychol2013431123785342

- BrownRMusic and languageTaylorRDocumentary Report of the Ann Arbor Symposium: Applications of Psychology to the Teaching and Learning of MusicReston, VAMusic Educators National Conference1981233265

- KawakamiAFurukawaKOkanoyaKMusic evokes vicarious emotions in listenersFront Psychol2014543124910621

- Durà-VilàGLittlewoodRLeaveyGDepression and the medicalization of sadness: conceptualization and recommended help-seekingInt J Soc Psychiatry201359216517522187003

- EerolaTPeltolaHRMemorable experiences with sad music-reasons, reactions and mechanisms of three types of experiencesPLoS One2016116e015744427300268

- TaruffiLKoelschSThe paradox of music-evoked sadness: an online surveyPLoS One2014910e11049025330315

- PeltolaH-REerolaTFifty shades of blue: classification of music-evoked sadnessMusic Sci201620184102

- JuslinPNSlobodaJADeutschDDeutschDMusic and emotionDeutschDDeutschDThe Psychology of MusicSan Diego, CA, USAElsevier Academic Press2013583645

- KallinenKEmotional ratings of music excerpts in the western art music repertoire and their self-organization in the Kohonen neural networkPsychol Music2005334373393

- JuslinPNCan results from studies of perceived expression in musical performances be generalized across response formats?Psychomusicology1997161-277101

- RobazzaCMacalusoCD’UrsoVEmotional reactions to music by gender, age, and expertisePercept Mot Skills19947929399447870518

- LimNCultural differences in emotion: differences in emotional arousal level between the East and the WestIntegr Med Res20165210510928462104