Abstract

For the past two decades, there have been calls for medical education to become more evidence-based. Whilst previous works have described how to use different evidence synthesis methods, there are no works discussing when or why to select different methods from either a conceptual or pragmatic perspective. This question is not to suggest the superiority of any one method, but that having a clear rationale to underpin such choices is key and should be communicated to the reader of such works. Our goal within this manuscript is to consider the philosophical alignment of these different review and synthesis modalities and how this impacts on their suitability to answer different systematic review questions within health education. The key characteristic of a systematic review that should impact the synthesis choice, the question being addressed, is discussed in detail. By clearly defining this and the related outcome expected from the review and for educators who will receive this outcome, the alignment will become apparent. This will then allow deployment of an appropriate methodology that is fit for purpose and will indeed justify the significant work needed to complete a systematic review. Key items discussed are the positivist synthesis methods of meta-analysis and content analysis, which address questions in the form of ‘whether and what’ education is effective. These can be juxtaposed with the constructivist-aligned thematic analysis and meta-ethnography, which address questions in the form of ‘why’. The concept of the realist review is also considered. It is proposed that authors of such work should describe their research alignment and the link between question, alignment and evidence synthesis method selected. The process of exploring the range of modalities and their alignment highlights gaps in the researcher’s arsenal. Future works are needed to explore the impact of such changes in writing from authors of medical education systematic review.

Background

For the past two decades, there have been calls for medical education to become more evidence-based (Bligh & Anderson 2000; Carline 2004; Chen et al. 2005; Gordon et al. 2014b). This is to ensure that health educators can move from a position of eminence- and experience-based opinion to a position that considers and integrates the whole state of the research field to ensure best practice. There are researchers in the field who believe that the primary evidence base is poor due to the confounding variables that impact and potentially limit conclusions, whilst others have interpreted this as a lack of understanding of social science methodology and outcomes (Dornan et al. 2008). Underpinning both these views is a recognition that poor execution and poor writing of medical education research have been common (Gordon et al. 2013a) and present a significant challenge to those looking to interpret evidence in the field using secondary research methods (Gordon et al. 2013b).

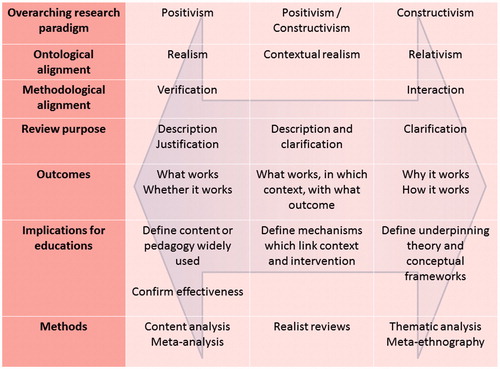

The Best Evidence Medical Education (BEME) Collaboration (BEME 2015) is an international group of individuals, universities and professional organizations established in 1999 (Harden et al. 1999) to address these needs, mirroring the revolution seen in healthcare. Over the last 16 years, much effort has allowed the development of techniques to achieve this and has fostered the wider recognition and use of evidence synthesis within health education research (Gordon 2014a). Crucially, underpinning all such works has been alignment with a systematic methodology. This can best be summarized as a methodology that is designed before work begins and is therefore transparent and presented in a manner that is clearly reproducible. BEME believe this is the key to moving from eminence-based reviews to evidence-based reviews. One of the key challenges for those completing systematic reviews in the field is the kaleidoscopic nature of research in any given area, with a range of research questions and modalities investigating any given issue. Cook et al. (2008a) illuminated this issue elegantly by considering studies as focusing on justification (whether), description (what) and clarification (how and why). Previously, it has been proposed that considering this framework is key to producing more relevant systematic reviews (Gordon et al. 2014b). However, this increased range of review questions and objectives has resulted in an exponential increase in synthesis modalities within the field. Examples include the use of content analysis, case survey, thematic analysis, meta-narrative, meta-ethnography and realist review, as well as more traditional quantitative health synthesis techniques such as meta-analysis (Sharma et al. 2015). These are briefly explained in Appendix 1. Whilst previous works have described how to use such methods (Bearman & Dawson 2013; Wong et al. 2013), there are no works discussing when or why to select different methods from either a conceptual or pragmatic perspective. This question is not to suggest the superiority of any one method, but that having a clear rationale to underpin such choices is key and should be communicated to the reader of such works (Gordon & Gibbs 2014c), ensuring appropriate and therefore valid deployment.

Appendix 1. Examples of synthesis methods within health education systematic review.

The goal of this manuscript is to consider the philosophical alignment of these different review and synthesis modalities and how this impacts on their suitability to answer different systematic review questions within health education.

Systematic review frameworks

Before considering different modalities for synthesizing evidence, it is key to highlight the context for this discussion and the assumptions being made. Whilst many methods can be applied to a dataset to synthesize evidence, the overarching context within which such works are being considered is systematic review. These are defined by the author as reviews that “identify, select, appraise, extract and synthesize all high-quality research evidence relevant to a given question”, and their key characteristics are described above. This author fully supports the position of BEME that only a systematic methodology can best align with an evidence-based health education model. However, this means that readers will also recognize that this alignment itself draws from a positivist research paradigm. In such a paradigm, knowledge in the form of an authoritative truth is derived from empirical evidence. It is this author’s belief that positivism of method can be combined with a contrasting synthesis research paradigm.

There are a number of different frameworks for such reviews, but when they are considered together they are stark in their similarities. This was emphasized during the production of the recently published publication standards for health education systematic review, the STORIES statement (Gordon & Gibbs 2014c). During this process, a number of novel elements for inclusion within health education reviews were identified, but starker was the vast number of elements that were shared between all previously identified health systematic review frameworks (70% of items). This homogeneity was focused on the data identification, extraction and quality assessment elements, the implicitly ‘systematic’ positivist elements in the review process. This is key to consider, as there was a corresponding lack of consensus regarding synthesis techniques, and indeed these must be treated as two independent variables within a single research project. This highlights that the use of a given method to synthesize evidence does not implicitly allow a reader to infer that it was deployed in a systematic manner. For example, a realist review is based on a realist philosophy of science, considers the interaction between context, mechanism and outcome, and is supported by a recently produced set of publication guidelines in the medical education context (Wong et al. 2013). However, such a synthesis method can be deployed in both a systematic and a non-systematic fashion. The key difference does not reside in the synthesis method, but in the transparency and reproducibility of the process.

Research paradigms of synthesis methodologies

Once the method of systematic review has been defined, the method of evidence synthesis must be selected. There is a discordance that must be embraced when considering the eclectic group of evidence synthesis techniques that can be selected, which are discussed below. This is best summarized by proposing that the methodological paradigms such techniques occupy are diametrically opposed.

Methods such as content analysis and meta-analysis (Bearman & Dawson 2013) operate within a verification or falsification paradigm, rooted in their Cochrane quantitative and positivist alignment. These methods in the context of medical education view teaching through the ontological lens of realism (theories refer to real features and phenomena within the world around them), achieving descriptive and justification outcomes for the review, the so-called ‘what’ and ‘whether’ questions (Cook et al. 2008a). As such, educational truth is seen as something that is observable, measurable and therefore hopefully can be reproduced by others where there is a need, achieving impact for the education. This can be examined through the following example. If a team completes a review in the context of simulation education in health, a finding may be that ‘a certain form of simulation’ is used by many. Such a descriptive outcome will clearly define these content and pedagogy parameters. This suggests an objectivist and positivist standpoint that some may feel is inappropriate. However, as this finding does not in any way address the effectiveness of such a form of education, rather focusing on defining ‘what’ the current evidence base suggests the wider body of educators employ, there is clearly a role for such works.

There are some reviews that will align with a justification focus, and this may lead to a further step: a claim that a form of simulation education will achieve the required competence in resuscitation skills. This statement is intended to simultaneously highlight both the problems that exist with this paradigm and the weakness of much work that is aligned to such a paradigm. The issue with systematic reviews in medical education that occupy such a research paradigm is that a strictly realist viewpoint innately limits the wider use of such work for readers working in other contexts. It is possible within this context that such a review could occupy an extremely well defined and specific area of scope within a content and pedagogical field. In this situation, it could be argued that there is an objective and definable set of criteria that can then be linked to an outcome. But this is not the focus for many such reviews, with huge topics and widely scoped reviews common (Cook et al. 2008b). It is proposed that within such reviews it is extremely contentious to argue that any meaningful findings can be made about the make-up or pedagogy that is best evidenced to achieve a certain outcome. This links to the weaknesses of many such justification works in medical education systematic review. Heterogeneity, both educational and methodological (Sharma et al. 2015), is a significant barrier. As such, this further cements the view that meta-analysis as exemplified within Cochrane methodology should be used sparingly and be well justified when used.

Techniques such as meta-ethnography and thematic analysis are aligned with an interaction methodology, taking an inductive approach to knowledge generation (Bearman & Dawson 2013), often within the context of clarification systematic reviews (Cook et al. 2008a). These reviews seek to explore the nature of the evidence base and view the educational truth that is being explored through this evidence base, accepting that the understanding of the researchers allows a contextual interpretation of this truth. In the context of a systematic review, these findings have been derived from a systematic process with a clearly described and transparent methodology, ensuring an element of methodological objectivity, but are obviously grounded in and possibly limited by the evidence base that is discovered. This exemplifies the opposing research traditions previously highlighted: the positivist tendencies of systematic review are juxtaposed with the overarching constructivist (the world is independent of human minds, but knowledge of the world is always a human and social construct) nature of these particular synthesis methods.

The content and context of different elements of the evidence base may themselves be diverse and indeed divergent. There exists a possibility to apply such methodologies in a manner that focuses on different explanatory frameworks, embracing this divergence and aligning with a relativist ontological perspective (Green & Britten 1998). This is in some ways comparable to the role of sensitivity and subgroup analysis in a positivist Cochrane format systematic review, but born out of an opposing constructivist research paradigm. As an example, we can return to our form of simulation that enhances resuscitation skills. It may be found that studies fall into two different groups. The first involves homogeneous groups of learners and the second heterogeneous groups of different health professionals. The question of whether this technique is effective, or ‘how’ it is effective, may be answered completely differently within these two contexts, allowing diverse ‘truths’ to exist. This divergence is illuminating to both researchers and clinical teachers.

When considering the goal of clarification reviews to explore the ‘how’ and ‘why’ questions within medical education, these methods have the potential to yield deeply meaningful results for those looking to design and amend education, as well as those wishing to more fully understand ‘impact’ from an epistemological perspective. Such a view of impact can consider multiple diverging outcomes, each supported by best evidence, that can all co-exist. However, such works will not necessarily define “what” to use and “whether” it works, leaving authors to decide on the appropriate context to deploy these methods.

At this point, it is worth mentioning realist reviews. Whilst realism has been discussed in detail within this digest, realist reviews are not aligned directly with the ontological stance of realism, which is essentially a positivist paradigm. Realist reviews assume there is a [social] reality that cannot be measured directly (because it is processed through our brains, language, culture and so on), but can be known indirectly. As such, it is argued that realist reviews exist between positivism (“there is a real world which we can apprehend directly through observation”) and constructivism (“given that all we can know has been interpreted through human senses and the human brain, we cannot know for sure what the nature of reality is”) (Wong et al. 2013). It is therefore quite understandable that this evidence synthesis method has attracted much interest from those working in the field in recent years, as it clearly bridges different research paradigms. However, the use of a priori theory and the focus on context and mechanism could be limiting in some situations, particularly when considering the potential for divergent theoretical solutions as already discussed. This once again leaves potential authors with the question of not whether but when to correctly and most usefully deploy such a modality. The range of modalities discussed, as well as the key characteristics discussed within this paper are illustrated within .

Discussion

The growth of systematic review within medical education is exponential, yet has been matched and perhaps surpassed by growth in the number of synthesis modalities that have been deployed by researchers in the field. The goal of this piece has not been to advocate the use of a specific method. On the contrary, the issue is not one of correct or incorrect methodologies, but of their appropriate or inappropriate deployment.

The key characteristic of a systematic review that should impact this choice is the question being addressed. By clearly defining this and the related outcome expected from the review and for educators who will receive this outcome, the alignment will become apparent. This will then allow deployment of an appropriate methodology that is fit for purpose and will indeed justify the significant work needed to complete a systematic review and the significant effort needed to read such a piece. Additionally, it is proposed that authors of such work should describe their research alignment and the link between question, alignment and evidence synthesis method selected. This supports understanding among readers and also differentiates the work from pieces in the same area that may have mutually exclusive questions and findings. Future works are needed to explore the impact of such changes in writing from authors of medical education systematic review.

The process of exploring the range of modalities and their alignment highlights gaps in the researcher’s arsenal. This seems particularly relevant in the research paradigm ‘middle ground’, where only realist review seems to currently reside. This appears to be a particularly interesting area, where secondary research can accept an element of a positivist view of absolute truth, whilst also accepting a constructivist perspective that multiple truths may exist. This may be particularly relevant as more primary research works in medical education begin to define relevant theory and frameworks that may themselves need to be reviewed in a manner that will not clearly occupy a specific ontological stance. This philosophical center ground may be where the future of systematic review in medical education must lie, and so scholarly input and debate in this area is invited.

Conclusion

The systematic review process in medical education is established and well defined, but synthesis methodologies are wide ranging and currently often employed without any justification, thereby limiting the usefulness of the resulting works. Considering the core questions of medical education systematic review and the research paradigm with which these align allows authors to select an appropriate synthesis methodology. This can ensure the most relevant outcomes for educators in the field and the true utility of medical education systematic review.

Notes on contributor

Morris Gordon, MBChB, MRCPCH, MMEd, is a Paediatrician in Blackpool Victoria Hospital, UK and the head of Professionalism and Careers at the University of Central Lancashire, Preston, UK. He is the head of the UCLAN/Blackpool BEME International Collaborating Centre and a member of the BEME editorial board.

Acknowledgments

The author thanks Rochelle Tractenberg for her considerable support, scholarly discourse regarding and final review of this piece.

Disclosure statement

The author reports no conflicts of interest. The author alone is responsible for the content and writing of the article.

References

- Bearman M, Dawson P. 2013. Qualitative synthesis and systematic review in health professions education. Med Educ. 47:252–260.

- Best Evidence Medical Education. 2015. BEME homepage. [cited 2015 Dec 11] Available from: http://www.bemecollaboration.org.

- Bligh J, Anderson MB. 2000. Medical teachers and evidence. Med Educ. 34:162–163.

- Carline JD. 2004. Funding medical education research: opportunities and issues. Acad Med. 79:918–924.

- Chen FM, Burstin H, Huntington J. 2005. The importance of clinical outcomes in medical education research. Med Educ. 39:350–351.

- Cook DA, Bordage G, Schmidt H. 2008a. Description, justification, and clarification: a framework for classifying the purposes of research in medical education. Med Educ. 42:128–133.

- Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. 2008b. Internet-based learning in the health professions: a meta-analysis. JAMA. 300:1181–1196.

- Dornan T, Peile JS, Spencer J. 2008. On ‘evidence’. Med Educ. 42:232–233.

- Gordon M, Darbyshire D, Saifuddin A, Vimalesvaran K. 2013a. Limitations of poster presentations reporting educational innovations at a major international medical education conference. Med Educ Online. 18:20498.

- Gordon M, Darbyshire D, Baker P. 2013b. Separating the wheat from the chaff: systematic review in medical education. Med Educ. 47:632.

- Gordon M. 2014a. Developing healthcare non-technical skills training through educational innovation and synthesis of educational research. [cited 2015 Jul 20] Available from: http://usir.salford.ac.uk/30826/1/M_GORDON_THESIS_FINAL_AMMENDED_FULL_SUBMISSION.pdf.

- Gordon M, Vaz Carneiro A, Patricio M, Gibbs T. 2014b. Missed opportunities in health care education evidence synthesis. Med Educ. 48:644–645.

- Gordon M, Gibbs T. 2014c. STORIES statement: publication standards for healthcare education evidence synthesis. BMC Med. 12:143.

- Green J, Britten N. 1998. Qualitative research and evidence based medicine. BMJ. 316:1230–1232.

- Harden R, Grant J, Buckley G, Hart IR. 1999. BEME guide no. 1: Best evidence medical education. Med Teach. 21:553–562.

- Sharma R, Gordon M, Dharamsi S, Gibbs TS. 2015. Systematic reviews in medical education: a practical approach: AMEE guide 94. Med Teach. 37:108–124.

- Wong G, Greenhalgh T, Westhorp G, Buckingham J, Pawson R. 2013. RAMESES publication standards: realist syntheses. BMC Med. 11:21.