Abstract

Background

For more than 20 years, the medical literature has increasingly documented the need for students to learn, practice, and demonstrate competence in basic clinical knowledge and skills. In 2001, the Louisiana State University Health Sciences Center (LSUHSC) School of Medicine – New Orleans replaced its traditional Introduction to Clinical Medicine (ICM) course with the Science and Practice of Medicine (SPM) course. The main component of the SPM course is the Clinical Skills Lab (CSL), which teaches more than 30 skills to all pre-clinical medical students (Years 1 and 2).

Methods

Since 2002, an annual longitudinal evaluation questionnaire targeting the skills taught in the CSL has been distributed to all medical students. Students were asked to rate their self-confidence (on Dreyfus-based and, later, Likert-type scales) and to estimate the number of times they had performed each clinical skill in clinical and non-clinical settings. Of the more than 30 skills taught, eight were selected for further evaluation.

Results

An analysis of the eight selected skills was performed to determine the effectiveness of the CSL. Students who participated in the CSL reported significantly greater self-confidence and more frequent performance of the skills in clinical and non-clinical settings than students who had not experienced the CSL. For example, without CSL training, the percentage of students who rated their self-perceived expertise at the end of the second year as “novice” ranged from 21.4% (CPR) to 84.7% (GU catheterization). Among students who completed the two-year CSL, only 7.8% rated their self-perceived expertise at the end of the second year as “novice” for CPR, and 18.8% for GU catheterization.

Conclusion

The CSL is not designed to replace real clinical experience with patients. Rather, it provides early exposure to clinical skills, medical knowledge, and professionalism, along with opportunities to practice skills in a patient-free environment.

Teaching and assuring medical students’ competence in fundamental clinical skills have been persistent challenges in curriculum development for decades. Curriculum models have traditionally concentrated clinical skills training in third-year clerkships, relying on apprentice-type learning from patient encounters (e.g., ‘see one, do one, teach one’). The changing landscapes of healthcare, program accreditation, and medical education have become increasingly complex and less conducive to such heavy reliance on apprentice-type teaching and learning. Today's patients and providers are sometimes less tolerant of students’ inexperience and novice abilities. With increased outpatient care, shorter hospital stays, and less time for teaching in clinical settings, students’ access to sufficient and appropriate patient situations has decreased, and their opportunities for learning and practice can vary considerably.

For more than 20 years, the medical literature has increasingly documented the need for standardized curriculum components in which students can learn, practice, and demonstrate competence in basic clinical knowledge and skills. For example, Kowlowitz et al. Citation1 reported that only a minority of medical students gained meaningful experience performing basic procedures and that many did not receive feedback based on standardized evaluation of their performance. They argued for explicit curricular time and specific criteria for teaching, supervising, and assessing clinical procedures among medical students. Similarly, Nelson and Traub Citation2 found that 47 of 60 responding US medical schools (78%) reported no formal training component for clinical procedures in their student curriculum. Governing bodies have disseminated curricular frameworks and specific recommendations for educational reform and outcomes Citation3 Citation4 Citation5. These and other initiatives have contributed to the rapid expansion of longitudinal pre-clinical doctoring courses, clinical skills centers, and the use of standardized patients Citation6 Citation7 Citation8. Some studies Citation9 Citation10 have provided evidence that certain clinical skills training initiatives improved students’ clinical proficiency as well as their overall attitudes, knowledge, and enjoyment. However, Goodfellow and Claydon Citation11 documented that students had little or no exposure to basic skills before graduation, and others Citation12 Citation13 Citation14 Citation15 Citation16 have reported limited progress and argued for more explicit approaches (e.g., standardized curricula and clinical skills training laboratories). Despite the passage of time and various reform initiatives, there is still a fundamental need for educational models reflecting standardized, competency-based curricula that include structured teaching, observation, assessment, feedback, and deliberate practice towards mastery Citation17 Citation18.

In August 2001 the faculty at Louisiana State University School of Medicine – New Orleans replaced its traditional two-year Introduction to Clinical Medicine course with a new simulation and population-based longitudinal pre-clinical course, Science and Practice of Medicine (SPM; Years 1 and 2), that targeted patient communication, professionalism, patient assessment, and initial acquisition of fundamental clinical skills and procedures. Each year of the SPM course consisted of three major components emphasizing active learning strategies (e.g., small groups, collaborative efforts with basic science courses, peer-assisted learning, and human patient simulation): clinical forums, diagnostic reasoning, and a clinical skills laboratory (CSL). A complete description of the SPM course can be viewed at www.medschool.lsuhsc.edu/medical_education/undergraduate/ and details of the CSL, including pre-session learning materials, can be viewed at www.medschool.lsuhsc.edu/medical_education/undergraduate/skills_lab.asp.

Our review of the literature and subsequent feedback from colleagues in the field suggested that the CSL was the first required simulation-based, pre-clinical clinical skills laboratory in the United States. Since first presenting the CSL component of the SPM course at a national conference Citation19, many colleagues from other medical and allied health schools in the United States and abroad have visited and inquired about how to replicate or adapt our model at their institutions. The purpose of this article is thus to describe the structure and methodology of the pre-clinical CSL component and offer evidence of its effectiveness.

Background and Rationale for Creating the Clinical Skills Laboratory

The CSL is one of three major components of the SPM course. The clinical forums component consists of small-group sessions with faculty, senior students, and peers addressing doctor-patient interactions and communication, case-based presentations and discussions, and application of medical ethics and professionalism principles. In the diagnostic reasoning component, students complete DxR computer-based cases (www.dxrgroup.com/) to learn and practice clinical reasoning and patient management (on average, one case per week). Weekly large-group discussions, co-facilitated by clinical and basic science faculty members, debrief each assigned DxR case, provide feedback on students’ responses, and help students integrate important basic science concepts into clinical practice. For the CSL, students complete a series of hands-on, situation-based learning and assessment sessions targeting 36 core clinical skills and procedures, starting in the first week of medical school and continuing until the end of Year 2. CSL sessions reflect features of deliberate practice Citation17 Citation18 while enhancing self-confidence, problem-solving skills, professional development, and attention to patient care and safety. The CSL makes extensive use of anatomical trainers and low- and high-fidelity human patient simulation in scenario-based activities. Our school does not use a standardized patient program in its curriculum.

As a whole, the CSL was designed as a standardized, competency-based, and learner-centered curriculum. Faculty leaders designed it to emphasize active learning, learner-centered participation, and situation-based simulation, supported by prior evidence that such pre-clinical experiences can positively affect students’ perceptions of medical school and their development as physicians Citation2 Citation9 Citation20 Citation21. Further, the inclusion of deliberate coaching and practice methods in the CSL curriculum was influenced by previous work reporting that participation in pre-clinical mentoring relationships was associated with significant improvement in clinical skills Citation2 Citation10 Citation21.

Curricular Structure of the Clinical Skills Lab

As shown in Table 1, the CSL curriculum consists of seven sessions in Year 1 and eight in Year 2, targeting a total of 36 clinical skills (cognitive and/or technical) and procedures. Each class (enrollment ranging from 167 to 194 students) is divided into 16 groups of no more than 12 students each, so each CSL session is taught 16 times per year. All CSL sessions are taught by a single instructor to maintain consistency of content, methods, and performance assessment criteria across sessions. Sessions last between one and five hours, most of them 60 to 90 minutes. First-year students complete a total of 12 hours of face-to-face sessions, and second-year students complete 10 hours.

Table 1. Overview of Clinical Skills Laboratory curriculum: Sessions, competency-based learning objectives, and performance applications/formats

The majority of CSL sessions are taught in a designated space of slightly more than 500 square feet within the Isidore Cohn, Jr. MD Student Learning Center, a centralized educational resource situated organizationally within the Offices of the Dean, School of Medicine. The CSL facility is configured like an old-style hospital patient ward (i.e., multiple patient beds in one large room) and includes five patient examination tables, each outfitted with a low-fidelity adult simulator. One low-fidelity pediatric simulator is also available and is used when needed for specific sessions. Depending on the particular CSL session, all five simulators can be used to simulate the same patient features (e.g., the same heart and/or lung sounds) or as five different patients to meet the learning, practice, and assessment goals of the session. When the CSL uses cadaveric specimens, sessions are held in a wet lab located within the Learning Center.

Sessions are scheduled in a specific order to facilitate students building on their prior learning experiences and to coordinate with other curricular elements (e.g., history taking and physical examination of real patients, other components of the SPM course, and basic science lectures and laboratory experiences). For example, in Year 1 students conduct ECGs and apply content of electrophysiology to human patient simulation scenarios in the CSL session targeting cardiac rhythms at the same time as they are learning cardiac rhythms in their physiology course (Year 1 – Human Physiology).

Instructional Design of Each CSL Session

Prior to each CSL session, students must complete two self-directed learning and assessment activities to ensure readiness and effective use of time in the focused, structured, fast-paced, problem-solving, interactive activities.

- Review the assigned sections of the LSUHSC Emergency Medicine Medical Student Procedure Manual and other assigned web-based materials (e.g., learning objectives/clinical competencies, notes, links, videos, and PowerPoint presentations).

- Complete a pre-test consisting of 10 multiple-choice, single-best-answer items on the targeted learning objectives. The completed pre-test serves as the ‘admission ticket’; students who do not complete it must reschedule for another session.

A standardized structure is used for each 60–90-minute session. Upon entering a CSL session, each student is assigned immediately to a small group of two or three members. The lab session begins with a 15–20-minute segment during which the instructor provides a brief overview of the objectives and an interactive lecture/discussion that includes a review of the pre-test content, key content from pre-session preparation materials, and clinical demonstration of the targeted clinical skills and/or procedure. For the remainder of the session, students rotate with their assigned small groups to each of five or six stations every five to 15 minutes and practice the targeted skills and behaviors.
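The rotation described above (small groups of two or three students moving together through five or six stations, never two groups at the same station at once) can be sketched as a simple round-robin schedule. This is a minimal illustration under stated assumptions, not the CSL's actual scheduling procedure: the roster, the helper names (`make_groups`, `rotation_schedule`, `minutes_per_station`), and the rule that group g starts at station g are all hypothetical.

```python
from typing import Dict, List


def make_groups(students: List[str], group_size: int = 3) -> List[List[str]]:
    """Split a session roster into consecutive small groups of at most group_size."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    return [students[i:i + group_size] for i in range(0, len(students), group_size)]


def rotation_schedule(n_groups: int, n_stations: int) -> List[Dict[int, int]]:
    """Round-robin rotation: one dict per time slot mapping group -> station.

    Group g starts at station g and moves forward one station per slot, so
    every group visits every station exactly once and no two groups share a
    station in the same slot (this requires n_groups <= n_stations).
    """
    if n_groups > n_stations:
        raise ValueError("need at least as many stations as groups")
    return [
        {g: (g + slot) % n_stations for g in range(n_groups)}
        for slot in range(n_stations)
    ]


def minutes_per_station(session_min: float, intro_min: float, n_stations: int) -> float:
    """Time left per station after the opening overview/demonstration.

    Ignores the closing debrief, so real station time would be somewhat shorter.
    """
    return (session_min - intro_min) / n_stations


# Hypothetical session: 12 students, groups of 3, six stations,
# 90-minute session with a 20-minute opening segment.
roster = [f"student_{k}" for k in range(1, 13)]
groups = make_groups(roster, group_size=3)
schedule = rotation_schedule(len(groups), n_stations=6)
for slot, assignment in enumerate(schedule, start=1):
    print(f"slot {slot}: " + ", ".join(
        f"group {g + 1} -> station {s + 1}" for g, s in assignment.items()))
print(f"{minutes_per_station(90, 20, 6):.1f} minutes per station")
```

With six stations and a 20-minute opening segment, each station gets roughly 11 to 12 minutes in this sketch, which falls inside the 5-to-15-minute range given for station rotations.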

Stations might focus on learning technical aspects of clinical skills and procedures, diagnosis, problem-solving, and/or non-technical skills (e.g., communication). For example, at a lumbar puncture station, students use an anatomical model, an actual patient kit, and a standardized performance checklist for self-instruction and peer evaluation. Depending on the specific clinical skill or procedure, the associated checklist includes items addressing relevant facts, indications and contraindications, complications, materials, and techniques (e.g., ordered steps and standard precautions). When one student completes the assigned exercise, group members switch roles and repeat the process until each has completed the learning cycle for the station. Thus each student learns, performs, observes, assesses, and provides feedback, using the standardized performance checklist as a guide.

Several lab sessions target clinical reasoning and require students to assess a patient simulator (e.g., vital signs, heart/lung sounds, and rhythm recognition), develop a differential diagnosis, and/or determine the next best treatment option. Each student in a group conducts his or her own assessment independently and answers three to four multiple-choice (single-best-answer) or fill-in-the-blank questions on the treatment, diagnosis, and/or assessment of the patient (5–6 minutes). The small group then uses the remainder of its allotted station time (∼1–2 minutes) to discuss its findings and options for the case, encouraging team problem-solving, peer interaction, and peer teaching. After completing a station, the students rotate together to the next station to perform new tasks, continuing in this fashion until all stations have been completed. As students rotate through the stations, the instructor circulates among the groups, observes directly, monitors performance and time, and provides feedback as appropriate to small groups and to the class as a whole.

Once stations are completed, the instructor leads a large-group debriefing discussion to reinforce core content and skills, facilitate learning through questioning, elicit student input, and encourage reflective critique. Debriefing discussions emphasize integration of relevant basic science and clinical skills and provide additional references for individual learning. The learning objectives are reviewed, questions are answered, and the case/station assessments, checklists, and group practical quizzes are collected at the end of the session. At all CSL stations, students are expected to demonstrate professionalism, good hand hygiene, and universal precautions, and to maintain a sterile field when indicated. All CSL pre-tests are graded, and students must achieve a minimum passing score. Some CSL sessions have both a pre-test and a group practical quiz; in these, the two scores are added together for an overall grade. To date, the SPM course has been graded on a pass/fail basis.

Evidence of CSL Effectiveness

Evaluation of the effectiveness of clinical skills training in the CSL has been guided by Kirkpatrick's four-level taxonomy (1 = reaction/satisfaction, 2 = learning, 3 = behavior, 4 = outcomes/impact) Citation22. Direct observations and informal feedback from students enrolled in the CSL have consistently revealed positive reactions, and results of the annual student course evaluations have been consistently very positive. Direct observations of students during CSL sessions and unsolicited comments from clinical faculty members who work with students have provided some evidence, albeit anecdotal, that students are learning the targeted knowledge and skills. Initial efforts to evaluate systematically the influence of the CSL on students’ learning and behaviors have included the design of the Clinical Skills Laboratory Questionnaire (CSLQ), a longitudinal survey of students’ self-reported self-efficacy (i.e., confidence) and practice for each targeted clinical skill/procedure, administered annually to all LSUHSC-New Orleans medical students (Years 1–4). From the outset, a key premise of the CSL curriculum was that active learning and deliberate practice in these sessions would increase students’ confidence in performing core clinical skills and procedures, which in turn would increase their participation and practice in subsequent opportunities (actual and simulated) as they progressed through medical school.

Following review and approval by the LSUHSC-New Orleans Institutional Review Board, a paper-based form of the CSLQ was administered to all LSUHSC-NO medical students (Years 1–4) in May 2002, immediately after the first year-long implementation of the CSL sessions. At the time of this administration, only first-year students had participated in the CSL, so responses from students in Years 2–4 provided baseline data for students who had not experienced the new CSL curriculum. The questionnaire has since been administered annually as part of a longitudinal program evaluation plan; since May 2003, it has been distributed to all LSUHSC-NO medical students (Years 1–4) as an authenticated, confidential web-based form using WebQ (http://lionis.net).

The initial questionnaire (2002) targeted 32 clinical skills/procedures taught in the CSL, and this number has increased as new skills and procedures have been incorporated into the curriculum; the CSLQ currently includes 36. Students complete the CSLQ by rating their self-confidence in performing each clinical skill/procedure taught in the CSL and estimating the number of times they performed/practiced each one in real or simulated situations during the current academic year. From 2002 to 2004, self-confidence was measured as self-perceived expertise using response categories based on the Dreyfus conceptual framework (1 = novice, 2 = advanced beginner, 3 = competent, 4 = proficient, 5 = expert) Citation23, and students estimated the number of times they had performed each skill/procedure during the academic year using categorical response choices (0, 1–2, 3–10, 11–20, >20). Since 2005, students’ self-efficacy has been measured using a five-point Likert-type confidence scale (1 = not confident at all to 5 = completely confident), and students have reported an absolute number of times they performed/practiced each targeted skill/procedure.
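Because the practice measure changed from categorical bins (2002–2004) to absolute counts (2005 onward), any comparison across the two periods requires mapping the later counts onto the earlier categories. The sketch below shows one such mapping together with the two response scales as described in the text. The names `DREYFUS_LEVELS`, `LIKERT_ANCHORS`, `PRACTICE_BINS`, and `practice_category` are hypothetical, and nothing here implies the authors recoded their data this way.

```python
from typing import Dict

# Self-perceived expertise scale used 2002-2004 (Dreyfus framework).
DREYFUS_LEVELS: Dict[int, str] = {
    1: "novice",
    2: "advanced beginner",
    3: "competent",
    4: "proficient",
    5: "expert",
}

# Five-point confidence scale used since 2005; only its end points are labeled.
LIKERT_ANCHORS: Dict[int, str] = {1: "not confident at all", 5: "completely confident"}

# Upper bound of each 2002-2004 practice category: 0, 1-2, 3-10, 11-20 (then >20).
PRACTICE_BINS = [(0, "0"), (2, "1-2"), (10, "3-10"), (20, "11-20")]


def practice_category(count: int) -> str:
    """Map an absolute practice count (2005 onward) to the earlier categorical choice."""
    if count < 0:
        raise ValueError("count cannot be negative")
    for upper, label in PRACTICE_BINS:
        if count <= upper:
            return label
    return ">20"


print([practice_category(n) for n in (0, 1, 2, 3, 10, 11, 20, 21, 45)])
```

The mapping only works in one direction: an absolute count can be assigned to a bin, but a categorical answer such as "3-10" cannot be converted back into an exact count.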

Data Collection and Analysis

A longitudinal study of students’ evolving self-efficacy and engagement in performing clinical skills and procedures before and after the CSL was originally planned. However, Hurricane Katrina (2005) substantially altered the study context, particularly the population and patient census in New Orleans and, correspondingly, the overall clinical environment and students’ opportunities to engage in direct patient care (patient access and volume were substantially reduced). Consequently, students’ opportunities to practice the targeted clinical skills and procedures differed substantially before and after the hurricane. In addition, the CSL and students’ clinical learning were temporarily displaced to other locations: the CSL facilities were completely destroyed, and interim arrangements were necessary to continue the curriculum until full implementation could be restored. Beyond the disaster and its aftermath, the assessment scale was changed in 2005, as described above, further limiting our ability to complete the longitudinal analysis originally planned. We therefore evaluated the effectiveness of the CSL using two sets of data collected with the CSLQ.

First, data collected before 2005 were examined to assess differences in students’ self-perceived expertise (i.e., novice, advanced beginner, competent, proficient, expert) and self-reported practice according to whether they had experienced the CSL curriculum. Second, data collected from 2005 through 2009, representing one cohort of medical students across their four years of medical school, were analyzed to examine changes in students’ self-efficacy for the targeted clinical skills and procedures before and after CSL training. For the purposes of this analysis, eight of the most important clinical skills/procedures taught in the CSL were selected based on evidence from the literature Citation24 Citation25. Finally, the correlation between self-confidence and practice was examined. Data screening and examination of missing values were completed before data analysis.

The first dataset (pre-Hurricane Katrina) included responses of second-year students collected in May 2002, representing the last class that did not experience the CSL curriculum (pre-CSL), and responses of second-year students collected via the CSLQ in May 2003 and 2004, representing the first two classes to complete both years of the CSL curriculum. The chi-square test was used to compare self-efficacy ratings and the number of procedures performed (practice) between students without (2002 data) and with the CSL. Spearman's correlation coefficient was computed to measure the correlation between students’ self-efficacy and the number of procedures performed.
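For readers who want to reproduce this type of analysis, the sketch below computes the Pearson chi-square statistic for a contingency table (for example, rows = without/with CSL, columns = the five Dreyfus levels) and Spearman's rank correlation, using average ranks for ties. It uses only the Python standard library, and all counts and responses in it are hypothetical placeholders, not study data. In practice, `scipy.stats.chi2_contingency` and `scipy.stats.spearmanr` return the same statistics together with p-values; note that `chi2_contingency` applies Yates' continuity correction by default when the table has one degree of freedom, which the plain statistic below does not.

```python
import math
from typing import List, Sequence, Tuple


def chi_square_independence(table: List[List[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho computed as the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical counts of students at each Dreyfus level (novice ... expert).
without_csl = [40, 30, 15, 5, 2]
with_csl = [8, 25, 40, 20, 7]
stat, dof = chi_square_independence([without_csl, with_csl])
print(f"chi-square = {stat:.2f}, df = {dof}")

# Hypothetical paired responses: expertise level vs. practice category code (1-5).
confidence = [1, 2, 2, 3, 3, 4, 5]
practice = [1, 1, 2, 3, 4, 4, 5]
print(f"Spearman rho = {spearman_rho(confidence, practice):.3f}")
```

Spearman's rho fits ordinal responses like these because it depends only on rank order, not on the spacing between categories.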

For the second dataset (post-Hurricane Katrina), entering first-year medical students completed the CSLQ in August 2005, just before starting classes. These students completed the CSLQ again at three later points: after CSL training (i.e., at the end of the second year), at the end of the third year (i.e., after core clerkships), and at the end of the fourth year (i.e., just before graduation). For each CSLQ administration to this cohort, we calculated the mean of students’ self-efficacy, determined by confidence ratings on the five-point Likert-type scale (1 = not confident at all to 5 = completely confident).
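The per-administration means described above amount to a simple aggregation. The sketch below assumes that each administration's responses for one skill are available as a list of 1–5 ratings, with unanswered items recorded as `None` and excluded. That handling is consistent with, but not necessarily identical to, the missing-value screening mentioned earlier. The function name, data layout, and ratings are hypothetical; the wave labels L1–L4 follow Figure 1.

```python
from statistics import mean
from typing import Dict, List, Optional

# Wave labels follow Figure 1: L1 = entry, L2 = end of Year 2 (after CSL),
# L3 = end of Year 3 (core clerkships), L4 = just before graduation.
Ratings = Dict[str, List[Optional[int]]]


def mean_confidence_by_wave(responses: Ratings) -> Dict[str, float]:
    """Mean 1-5 confidence rating per administration, skipping missing answers (None)."""
    means: Dict[str, float] = {}
    for wave, ratings in responses.items():
        valid = [r for r in ratings if r is not None]
        if any(not 1 <= r <= 5 for r in valid):
            raise ValueError(f"rating outside 1-5 in wave {wave}")
        means[wave] = float(mean(valid)) if valid else float("nan")
    return means


# Hypothetical ratings for a single skill; None marks an item left blank.
example = {
    "L1": [1, 1, 2, None, 1],
    "L2": [4, 3, 4, 4, None],
    "L3": [3, 4, 3, 4, 4],
    "L4": [4, 4, 5, 4, None],
}
print(mean_confidence_by_wave(example))
```

Because response rates differed across administrations, each mean in a cohort analysis like this one is computed from a different, and in this study shrinking, subset of the class.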

Results

Response rates for the first dataset (2002–2004) were 54.44% (2002, without CSL), 36.53% (2003, with CSL), and 53.14% (2004, with CSL); the combined response rate for students who completed the CSL was 45.03% (2003–2004). As shown in Table 2, analysis of the first dataset revealed that second-year students’ self-perceived expertise in performing the eight selected clinical skills was significantly higher for those who had experienced the CSL than for those who had not. For example, without CSL training, the percentage of second-year students who rated their self-perceived expertise as ‘novice’ ranged from 21.4% (cardiopulmonary resuscitation, CPR) to 84.7% (GU catheterization, GUC). Among students who completed the two-year CSL, only 7.8% rated their self-perceived expertise at the end of the second year as ‘novice’ for CPR, and 18.8% for GUC. More notably, Table 3 shows that the number of procedures performed by the end of the second year was significantly greater for students who completed CSL training than for those who did not have the CSL in the curriculum. Again using CPR and GUC as examples, without the CSL 38.9% and 88.8% of students, respectively, reported performing no CPR or GUC in the second year, compared with 3.9% and 9.1% of students with the CSL. Finally, as shown in Table 4, the correlation between students’ self-efficacy level and the number of procedures performed in the second year was positive and statistically significant.

Table 2. Comparison of second-year students’ self-perceived expertise for performing eight clinical procedures before and after clinical skills lab training.

Table 3. Comparison of the number of procedures performed by students in the second year before and after clinical skills lab training.

Table 4. Correlation between self-efficacy level in performing procedures and the number of procedures performed in the second year.

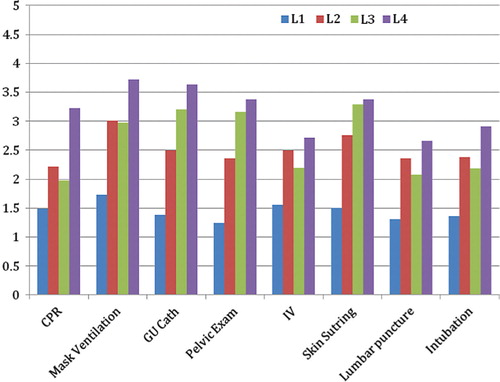

For the second dataset (2005–2009), which included the complete class cohort (n = 194), response rates for the four data collection points were 50.00%, 40.21%, 26.29%, and 16.49% (Years 1 to 4). As Figure 1 shows, the cohort's self-efficacy scores increased after completion of CSL training. For some procedures (CPR, IV, lumbar puncture, and intubation), the self-confidence gained through the CSL simulation experiences decreased slightly by the end of the third-year clerkships. For others, however, confidence was sustained (mask ventilation) or increased (GUC, pelvic exam, and skin suturing) by the end of the third-year clerkships. In general, the CSL experiences accounted for more than 50% of the observed increase in self-efficacy/confidence.

Figure 1. Mean scores for medical students’ self-efficacy/confidence ratings (5-point Likert scale) for performing eight clinical skills at four points during medical school: 1) Beginning, immediately prior to starting curriculum (L1), 2) End of second year, immediately after CSL training (L2), 3) End of third year, completion of core clerkships (L3), and 4) At the end of medical school, just prior to graduation (L4).
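The four cohort response rates can be checked against the cohort size: each corresponds to a whole number of respondents out of 194 (97, 78, 51, and 32), which the sketch below back-calculates. The second function shows one possible way to express the statement that the CSL accounted for more than half of the observed increase: the gain from L1 to L2 as a fraction of the gain from L1 to L4. That definition is an assumption made here for illustration, since the calculation behind the 50% figure is not spelled out. The function names and the example means are hypothetical.

```python
from typing import Dict

COHORT_SIZE = 194
REPORTED_RATES_PCT: Dict[str, float] = {
    "Year 1": 50.00, "Year 2": 40.21, "Year 3": 26.29, "Year 4": 16.49,
}


def implied_respondents(rate_pct: float, cohort: int) -> int:
    """Whole number of respondents implied by a percentage response rate."""
    return round(rate_pct / 100 * cohort)


def response_rate_pct(respondents: int, cohort: int) -> float:
    """Response rate as a percentage, rounded to two decimals."""
    return round(100 * respondents / cohort, 2)


def share_of_gain(l1: float, l2: float, l4: float) -> float:
    """Fraction of the overall L1->L4 change in mean confidence already present at L2.

    Hypothetical operationalization of the CSL's 'contribution'; undefined when
    there is no overall change.
    """
    if l4 == l1:
        raise ValueError("no overall change to apportion")
    return (l2 - l1) / (l4 - l1)


for year, rate in REPORTED_RATES_PCT.items():
    n = implied_respondents(rate, COHORT_SIZE)
    # Recomputing the rate from the whole-number count should reproduce the report.
    print(year, n, response_rate_pct(n, COHORT_SIZE))

# Hypothetical means on the 1-5 scale: entry 1.8, after CSL 3.4, graduation 4.1.
print(f"{share_of_gain(1.8, 3.4, 4.1):.0%} of the gain present after the CSL")
```

Under this definition, a value above 0.5 would mean that more than half of the overall change was already present at the end of the second year.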

Discussion and Conclusions

Like so many medical schools, the LSU-New Orleans faculty responded to public calls for curriculum reform and revised its undergraduate medical education, particularly with the introduction of SPM in Years 1 and 2 and the construction of a state-of-the-art school-wide learning center in 2001. One particularly novel component of the SPM course was the CSL, the focus of this study. Drawing on Kowlowitz et al. Citation1, Bradley and Bligh Citation26, and others, the CSL curriculum was designed as a standardized, competency-based, learner-centered training program in which students demonstrated responsibility for their own and each other's learning in a safe, low-stress learning environment using elements of mastery learning (e.g., criterion-based performance, focused practice, coaching with focused feedback). The incorporation of various media and forms of simulation (except standardized patients) provided opportunities to learn and practice in a supervised, standardized, patient-safe setting.

The CSL was not designed to replace real clinical experience and interactions with patients or to achieve complete mastery of clinical skills and procedures. Overall, its goal was to develop students’ self-efficacy (or confidence) in performing fundamental clinical skills as the first rung in the learning ladder toward full-time clinical learning (e.g., clerkships). An equally important goal was to engage students from the beginning of medical school in the practices of mastery learning and a focus on developing competency in knowledge, technical and non-technical skills, and professionalism. The premise was that standardized early exposure would strengthen students’ pre-clinical preparation and self-confidence, which in turn would facilitate their future efforts to pursue and succeed in performing such core clinical skills and procedures correctly and safely. Others have since shared similar perspectives on this type of pre-clinical learning Citation27 Citation28 Citation29.

Despite the hurdles and interruptions of implementing an innovative curriculum while dealing with an unprecedented disaster (recovering from Hurricane Katrina and rebuilding the program and facilities), the evaluation results reported here provide evidence that the primary CSL curriculum goals were achieved. Although response rates declined from Year 1 to Year 4 for the class of 2009 cohort, the results reveal a positive influence on students’ self-efficacy and engagement in the targeted skills and procedures. The decrease in confidence after full-time learning in clinical clerkships shown in Figure 1 was not surprising. It may reflect inflated self-confidence that was recalibrated through real-life experience, the realization that real-life opportunities to perform skills and procedures were limited (particularly in the altered post-Katrina environment), or both.

Encouraging were the results for those clinical skills where increased self-confidence immediately following completion of the CSL was further increased by the end of the third-year clerkships, offering evidence of the desired scaffolding effect for learning in medical school. While a higher and more consistent response rate across all four years would have facilitated a more conclusive interpretation, one possible explanation is that students’ reported self-efficacy and preparedness achieved through the CSL did facilitate their pursuit of and active engagement in opportunities to perform the identified clinical skills and procedures, thus further increasing their self-efficacy and abilities to perform.

With the passage of the most recent and revolutionary healthcare legislation, the future professional practice and delivery of services in the United States, while not yet fully understood, will certainly change dramatically. At the same time, accreditation agencies have increasingly required additional evidence of achieving comparability, standardization, competency-based outcomes, and mastery learning in curricula and professional training experiences. Other scholarly investigations Citation30 Citation31 offer further recommendations for substantial changes to the content emphasis, structures, and foci of medical education. Required topics and skills continue to increase for degree programs, while time and resources for teaching, learning, and assessment seem not to keep pace. Such sweeping changes and increasing accreditation requirements will certainly continue to influence the way medical students, residents, and other healthcare providers are educated initially and throughout their careers. The model of teaching and learning represented in the CSL is one example of how pre-clinical learning can be substantially enhanced and offer an early educational scaffold on which subsequent and advanced learning can be built systematically for all learners in a degree program. In addition, the small group sessions emphasize cognitive and psychomotor skills and scenario-based opportunities to apply concepts and principles of medical ethics, teamwork, and professionalism that are so critical to providing high-quality, compassionate, and safe patient care. Such early exposure to clinical skills and professional practice can positively influence students’ perceptions of their medical school experience and facilitate their development as physicians Citation2 Citation9 Citation20 Citation21 Citation26.

Despite limitations of this study (e.g., declining response rates, single school site), the combination of various sources of evidence of effectiveness and our direct observations and experiences support the CSL as an effective standardized, competency-based model for enhancing development of fundamental clinical skills and procedures. Also, the CSL has been in place for nearly 10 years, and even with the necessary rebuilding following Hurricane Katrina, little modification of core features has been required, while we have expanded and enhanced considerably over the years. Although we report effectiveness evidence for implementation at only one school, the overwhelming interest and feedback from colleagues who have examined and used our model and experiences to pursue developments at their own schools provide additional support for the feasibility and potential contribution to enhance students’ clinical competence. Finally, at LSU the CSL has been a scaffold and stimulus for developing standardized, competency-based approaches to learning in other areas of the MD degree program (e.g., core simulation-based curriculum in the third year), in graduate medical education (e.g., ‘skills fairs’ for residents and fellows), and in continuing medical education and collaboration with industry (e.g., targeting new procedures and uses of technology). As we continue to refine and expand the CSL, we are pursuing other measures of effectiveness, particularly in terms of Kirkpatrick's Level 3 evaluation, behavior (e.g., performance in clerkships, actual patient care), and Level 4 (e.g., lasting impact, learning, program and organizational outcomes, return on investment). We are also exploring new applications of the model reflected in our CSL for creating standardized, competency-based, and cost-effective teaching, learning, and assessment. 
Our current work examining the cost-effectiveness of the CSL suggests that it is a cost-effective model, and we look forward to disseminating the results in the near future.

References

- Kowlowitz V, Curtis P, Sloane PD. The procedural skills of medical students: expectations and experiences. Academic Medicine. 1990; 65: 656–658.

- Nelson MS, Traub S. Clinical skills training in US medical students. Academic Medicine. 1993; 68(12): 926–928.

- GMC. Tomorrow's doctors. General Medical Council. 2003, www.gmc-uk.org/education/undergraduate/undergraduate_policy/tomorrows_doctors.asp.

- Association of American Medical Colleges. Medical informatics and population health. 1998, www.aamc.org/meded/msop/.

- Dent J. Current trends and future implications in the developing role of clinical skills centres. Medical Teacher. 2001; 23: 483–489.

- Bligh J. The clinical skills unit. Postgraduate Medical Journal. 1995; 71: 730–732.

- Dent JA, Angell-Preece HM, Ball HM, Ker JS. Using the ambulatory care teaching centre to develop opportunities for integrated learning. Medical Teacher. 2001; 23: 171–175.

- Ledingham I McA, Harden RM. Twelve tips for setting up a clinical skills training facility. Medical Teacher. 1998; 20: 503–507.

- Marcus E, White R, Rubin RH. Early clinical skills training. Academic Medicine. 1994; 69: 415.

- Curry RH, Makoul G. An active-learning approach to basic clinical skills. Academic Medicine. 1996; 71: 41–44.

- Goodfellow PB, Claydon P. Students sitting medical finals–ready to be house officers? Journal of the Royal Society of Medicine. 2001; 94: 516–520.

- McManus I, Richards P, Winder B, Sproston K, Vincent C. The changing clinical experience of British medical students. Lancet. 1993; 341: 941–944.

- McManus I, Richards P, Winder B. Clinical experience of UK medical students. Lancet. 1998; 351: 802–803.

- Remmen R, Scherpbier A, Derese A, Denekens J, Hermann I, Van der Vleuten C, et al. Unsatisfactory basic skills performance by students in traditional medical curricula. Medical Teacher. 1998; 20: 579–582.

- Remmen R, Derese A, Scherpbier A, Denekens J, Hermann I, Van der Vleuten C, et al. Can medical schools rely on clerkships to train students in basic clinical skills? Medical Education. 1999; 33: 600–605.

- Sanders CW, Edwards JC, Burdenski TK. A survey of basic technical skills of medical students. Academic Medicine. 2004; 79: 873–875.

- Ericsson KA, Krampe RT, Tesch-Romer C. The role of deliberate practice in the acquisition of expert performance. Psychological Review. 1993; 100: 363–406.

- Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Academic Medicine. 2004; 79: S70–S81.

- Lofaso D, DeBlieux P, Chauvin S, Yang T, DiCarlo R, Hilton C. Examining the effectiveness of teaching pre-clinical medical students to perform core clinical skills and procedures. Southern Group on Educational Affairs, Association of American Medical Colleges. Savannah GA, 2004.

- Lieberman SA, Stroup-Benham CA, Peel JL, Camp MG. Medical student perception of the academic environment: a prospective comparison of traditional and problem-based curriculum. Academic Medicine. 1997; 72: S13–S15.

- Lam TP, Irwin M, Chow LWC, Chan P. Early introduction of clinical skills teaching in a medical curriculum–factors affecting students’ learning. Medical Education. 2002; 36: 233–240.

- Kirkpatrick D. Evaluating training programs: the four levels. Berrett-Koehler. San Francisco CA, 1994.

- Dreyfus S, Dreyfus H. A five-stage model of the mental activities involved in directed skill acquisition. University of California at Berkeley, Operations Research Center; 1980.

- Turner S, Hanson J, deGara C. Procedural skills: what's taught in medical school, what ought to be? Education for Health. 2007; 20: 1–5.

- Ringsted C, Schroeder V, Henriksen J, Ramsing B, Lyngdorf P, Jonsson V, et al. Medical students’ experience in practical skills is far from stakeholders’ expectations. Medical Teacher. 2001; 23: 412–416.

- Bradley P, Bligh J. One year's experience with a clinical skills resource centre. Medical Education. 1999; 33: 114–120.

- Bradley P. Introducing clinical skills training in the undergraduate medical curriculum. Medical Teacher. 2002; 24: 209–212.

- Bradley P, Bligh J. Clinical skills centers: where are we going? Medical Education. 2005; 39: 649–650.

- Peeraer G, Scherpbier A, Remmen R. Clinical skills training in a skills lab compared with skills training in internships: comparison of skills development curricula. Education for Health (online). 2007: 125.

- Cooke M, Irby DM, O'Brien BC. Educating physicians: a call for reform of medical school and residency. Carnegie Foundation for the Advancement of Teaching, Preparation for the Professions. Jossey-Bass. San Francisco CA, 2010.

- Finnerty EP, Chauvin SW, Bonaminio G, Andrews M, Carroll RG, Pangaro L. Flexner revisited: the role and value of the basic sciences in medical education. Academic Medicine. 2010; 85: 349–355.