Abstract

Background

Simulation has been identified as a means of assessing resident physicians’ mastery of technical skills, but there is a lack of evidence for its utility in longitudinal assessments of residents’ non-technical clinical abilities. We evaluated the growth of crisis resource management (CRM) skills in the simulation setting using a validated tool, the Ottawa Crisis Resource Management Global Rating Scale (Ottawa GRS). We hypothesized that the Ottawa GRS would reflect progressive growth of CRM ability throughout residency.

Methods

Forty-five emergency medicine residents were tracked with annual simulation assessments between 2006 and 2011. We used mixed-methods repeated-measures regression analyses to evaluate elements of the Ottawa GRS by level of training to predict performance growth throughout a 3-year residency.

Results

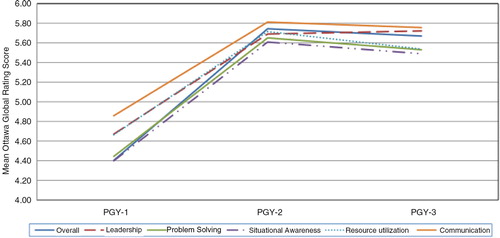

Ottawa GRS scores increased over time, and the domains of leadership, problem solving, and resource utilization, in particular, were predictive of overall performance. There was a significant gain in all Ottawa GRS components between postgraduate years 1 and 2, but no significant difference in GRS performance between years 2 and 3.

Conclusions

In summary, CRM skills are progressive abilities, and simulation is a useful modality for tracking their development. Modification of this tool may be needed to assess advanced learners’ gains in performance.

The current zeitgeist in graduate medical education centers around a competency-based model that emphasizes individualized instruction and objective evidence of learning outcomes (Citation1). In the United States, the Accreditation Council for Graduate Medical Education (ACGME) announced the current phase of the Outcomes Project, the Next Accreditation System (NAS), in February of 2012 (Citation2, Citation3). The NAS directs residency programs to demonstrate trainees’ progressive mastery of knowledge, skills, and behaviors pertaining to the six core clinical competencies. In the field of emergency medicine (EM), simulation has been proposed as one of the key modalities for assessing residents’ clinical abilities within these newly framed ‘Milestones’ (Citation4). The 2008 Society for Academic Emergency Medicine Consensus Conference on ‘The Science of Simulation in Healthcare’ held that ‘strong leadership skills are crucial to the practice of EM and thus should be a focus of team training’ (Citation5). As the NAS is enacted, there is a growing need for evidence-based tools to evaluate trainees’ teamwork and interpersonal communication skills (Citation6). Crisis resource management (CRM) refers to the constellation of cognitive and interpersonal communication skills that comprise effective team performance (Citation7, Citation8).

Simulation has been well established as a means of assessing competency in a variety of individual clinical applications. However, limited evidence exists for the use of simulation to demonstrate growth of clinical competencies within individual learners over time. While the Ottawa GRS has been used to evaluate residents and other health professionals in a given (static) stage of their clinical development, there is no current literature reporting the use of the Ottawa GRS to evaluate learners’ longitudinal growth Citation9–(Citation14) . We hypothesized that EM residents’ non-technical skills develop in a positive and relatively linear fashion over the course of residency, and that the Ottawa GRS instrument would reflect this growth.

Methods

This was an observational cohort study conducted at a single academic emergency medicine residency program based in Northern California. The Institutional Review Board at the UC Davis School of Medicine approved the study. Individual resident data were de-identified prior to analysis.

The Department of Emergency Medicine at UC Davis has held annual resident simulation assessments since 2006 to augment training and to satisfy ACGME requirements in resident evaluation. Residents are tested in a single session in which each resident performs one case. Residents are scored by multiple faculty raters using the Ottawa Crisis Resource Management Global Rating Scale (Ottawa GRS), a tool developed to evaluate team leadership and communication in the simulation environment (Citation14). Simulated patient scenarios were developed via an iterative process by EM faculty with expertise in simulation education and scenario development. The scenarios were targeted in complexity to postgraduate year of training and were vetted by a consensus group of EM faculty with extensive simulation experience.

One hundred and three residents trained at the UC Davis EM program during the study period, 2006–2011. The EM residency at UC Davis is a 3-year, ACGME approved program established in 1989. UC Davis residents spend 70% of their clinical education at UC Davis Medical Center (UCDMC), an urban academic Level I trauma center with an annual emergency department census of 70,000 visits per year. Residents also train at a nearby private Level II trauma center with an annual Emergency Department census of 95,000 visits per year.

We included in our analysis only residents for whom we had complete longitudinal data during the study period (i.e., all 3 years of training). Fifty-eight residents were excluded from our study: 51 residents due to training cycle (i.e., entered the study as a PGY-2 or PGY-3 or completed the study as a PGY-1 or PGY-2), four residents entered the residency program as PGY-2 or PGY-3 and did not complete the PGY-1 assessment, two residents did not participate in the simulation assessment in their PGY-3 year, and one resident left the training program after the intern year. The remaining 45 residents qualified for the analysis.

Study protocol

The PGY-1-level scenario involves a patient who presents with an ST-segment elevation myocardial infarction. The patient decompensates into ventricular fibrillation and requires advanced cardiac life support. The PGY-2-level scenario involves a patient in respiratory failure and septic shock who ultimately requires intubation, central venous access, and vasopressor support. The PGY-3-level scenario involves a patient with multi-system trauma who requires intubation, chest tube placement, blood transfusion, and negotiation with a difficult consultant (Appendices A, B, and C).

All simulations were conducted in November and December of each academic year during the study period. Residents managed their cases individually after a brief standardized introduction to the simulator and case stem. A METI high-fidelity Human Patient Simulator (CAE Healthcare, Quebec, Canada) was used for all scenarios. Medical and nursing students as well as emergency department nurses served as confederates in the scenarios. Confederates were instructed to follow the direction of the participating resident but were not permitted to offer any suggestions in management or clinical decision making.

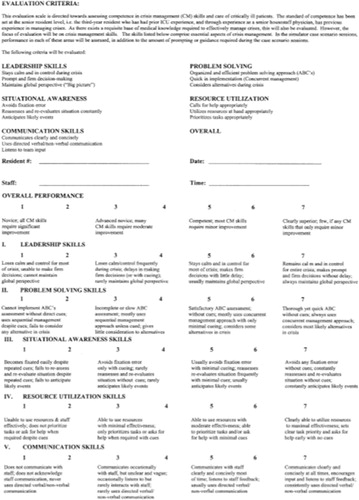

Residents’ performance was rated using the Ottawa GRS Scale (Appendix D) (Citation14). The Ottawa GRS consists of five CRM-related domains (leadership, problem solving, situational awareness, resource utilization, and communication) and an overall performance rating. Each domain is ranked on a 7-point Likert-style scale (seven being the highest), with descriptive anchors to aid in scoring. The Ottawa GRS has been previously validated and has shown strong interrater reliability and discriminative ability between PGY-1 and PGY-3 trainees (Citation13). Scores were used to provide formative feedback to the resident as well as to identify residents in need of remediation. Twelve residents performed below expectations during at least one of the three assessments and were allowed a retest using an alternate scenario. Data from repeat testing was not included in our analysis.

Three to five EM faculty members were present for each session and directly observed the simulation exams. Each of the faculty raters evaluated the residents during the simulation and independently recorded their scores using the Ottawa GRS instrument. All assessments were performed in real time without reliance on video playback. While the number of raters during a given assessment varied, the group of faculty raters (eight in total) remained consistent throughout the study period. Each of the raters received training on the use of the Ottawa GRS instrument and each was asked to score resident performance based on the descriptive anchors embedded in the Ottawa GRS instrument.

Outcome measures

The primary outcome of interest was to measure the interval change in resident performance by PGY level for the individual components of the Ottawa GRS. The secondary outcome of interest was to determine which elements of the Ottawa GRS were most predictive of overall performance.

Data analysis

We evaluated mean GRS scores with standard deviations and interquartile ranges by individual over time and by PGY status.

We constructed two repeated measures models to evaluate: 1) the interval significance of individual performance within a PGY class and between classes within the institution and 2) the components of the Ottawa GRS that were predictive of overall performance. The first was a mixed-effects repeated measures model that included each component of the Ottawa GRS as a candidate predictor for interval significance of an individual's performance throughout residency. The second was a repeated-measures generalized linear mixed model, which included components of the Ottawa GRS (leadership, problem solving, situational awareness, resource utilization, communication, and overall) and interaction terms as candidate predictors of overall performance. The second model included a pooled analysis of all participants, controlling for PGY status. In each model, the clustered fixed effects were the individual (subject) effect and his or her PGY status. Specifications for the Ottawa GRS component model and the Ottawa GRS time-interval model are included in the corresponding tables. All analyses were performed using SAS software, version 9.3 (SAS Institute Inc., Cary, NC, USA; 2011).

Results

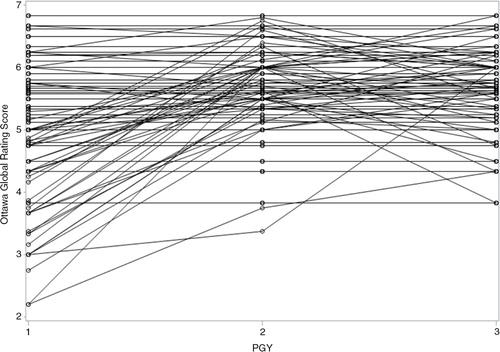

The demographics of the 45 residents are shown in . Mean GRS scores increased throughout each training year, with greater within-group variance for the PGY-1 level residents than in subsequent training years (). The greatest increase in mean GRS scores occurred between the PGY-1 and PGY-2 years (), despite individual variability in performance trajectory ().

Appendix D. Ottawa Crisis Resource Management Global Rating Scale (Ottawa GRS)

Table 1 Resident participant characteristics, N=45

Table 2 Mean scores for components of the Ottawa Global Rating Scale, N=45

The overall model demonstrated varying performance of individual components of the GRS (). Leadership, problem solving, and resource utilization showed statistical significance in the overall model.

Table 3 Model performance using components of the Ottawa Global Rating Scale

In the longitudinal growth model, the individual components of the Ottawa GRS – as predictors of overall performance – varied in statistical significance over time (). All components were statistically significant in the interval from PGY-1 to PGY-2. In contrast, no significant difference in performance in any GRS domain in the interval from PGY-2 to PGY-3 was detected.

Table 4 Interval significance of components of the Ottawa Global Rating Scale

Discussion

In this first study to evaluate critical resource management skills over time, we found that the Ottawa GRS readily identified early growth of residents’ skills. However, the Ottawa GRS was unable to discriminate scores between higher levels of training. Early learners showed considerable variance in individual scores. As training progressed, learners’ scores clustered closer together (i.e., there was a decrease in within-group variance and increase in between-group variance). The finer differences in GRS domains were not detectable in advanced learners.

Our results should be contextualized in the step-wise evolution of the current graduate medical education evaluation paradigm. In 1999, the ACGME instituted the Outcomes Project, a multi-year residency accreditation initiative based on educational outcomes and resident performance. This project was based on six identified core competencies: patient care, medical knowledge, practice-based learning and improvement, interpersonal skills and communication, professionalism, and systems-based practice (Citation15). The ACGME convened again in 2007–2008 to identify assessment methods for graduate medical programs. The group concluded that each specialty should adopt or develop specialty-appropriate assessment tools, termed ‘Milestones’, to encourage longitudinal assessment of residents, laying the groundwork for the NAS (Citation16).

The EM milestones were introduced in 2012 and consist of 23 areas of core knowledge (e.g., diagnosis, pharmacotherapy), skill (e.g., vascular access, airway management), and behavior (e.g., task switching, team management) common to the practice of EM (Citation3, Citation17). While documenting successful completion of procedural skills can be relatively straightforward, non-technical skills are more difficult to evaluate objectively (Citation18).

In the field of healthcare simulation, a number of different tools have been developed to assess CRM ability, a concept which originated in the aviation industry as ‘Crew Resource Management’ and which describes the non-technical skills (e.g., leadership, situational awareness, and communication) needed to successfully coordinate the behaviors of multiple individuals engaged in complex activities (Citation19). Gaba and Deanda adopted these principles to train anesthesiologists in critical operating room scenarios (Citation8, Citation20). These concepts were quickly embraced by other specialties such as EM and critical care (Citation21). Examples of CRM assessment instruments include the ANTS scale (Anesthetists’ Non-Technical Skills), NOTECHS scale (Non-technical Skills), the OTAS (Observational Teamwork Assessment of Surgery), and the Ottawa GRS (Citation14, Citation22–Citation24) . We selected the Ottawa GRS for our study because it had been rigorously validated and is straightforward and intuitive to use.

The goal of our study was to adopt a CRM assessment tool intended for discrete simulation scenarios and to use it as a ‘growth chart’ of CRM ability for individual trainees in the real-world setting of a residency program. In this novel approach, we found a statistically significant difference in performance across all components of the Ottawa GRS between PGY-1 and PGY-2 levels. However, no significant differences were found in performance on any component of the Ottawa GRS between PGY-2 and PGY-3 levels.

Our findings may be explained through the trainee's own perspective. First-year residents come to an institution with varying backgrounds, strengths, and weaknesses. The within-group variance seen in earlier stages later diminishes, likely due to the natural course of training in the same environment. As learners ‘homogenize’ through their training, the Ottawa CRM loses discriminative ability to detect progression through domains. This is consistent with the study by Kim et al. (Citation14) which reported that the GRS instrument had good discriminative ability when comparing PGY-1 residents to PGY-3 residents in two standardized emergency scenarios.

Our study has a number of important limitations. It was conducted at a single residency program and included a limited number of participants in a single site, affecting the generalizability of our observed results. Raters were faculty within our department and therefore not blinded to resident training level and prior clinical performance. The data were obtained over the course of multiple years by a small but variable group of faculty raters, as all raters were not present for every scenario. Individual rater data were not tracked in the database. Although interrater reliability for a particular encounter could not be evaluated, the Ottawa GRS has previously been shown to demonstrate good interrater reliability (Citation14). While the raters did not receive extensive training in the use of the Ottawa GRS instrument, they were well versed in its rankings and appropriate use; in addition, the investigators felt the tool was sufficiently intuitive given its use of descriptive anchors to provide a reasonably reproducible score on an individual encounter.

Other institution-specific factors likely influenced our findings. The scenarios were honed over multiple years preceding the study and were targeted to each PGY level; however, they were not originally designed specifically to evoke responses for discrete GRS domains. The relatively high mean scores of residents at all levels of training suggests a possible ceiling effect that obscures true differences in ability in the advanced stages of residency (i.e., the interval between PGY-2 and PGY-3). This leniency bias may be secondary to generational differences in the student–teacher relationship, inadequate rater training, or inherent properties of global rating scales in resident assessment (Citation25, Citation26) – all features with which many programs struggle.

Finally, our study design was vulnerable to a number of confounders commonly encountered in education studies. Given the frequency with which our residents are exposed to simulation, it is possible that the gains observed in residents’ CRM ability are attributable in part to increased confidence and facility with simulation itself, rather than with the material tested. Likewise, although participants were instructed not to share details of the scenarios with each other, some degree of “contamination” between groups was unavoidable.

Conclusions

Our study supports the use of the Ottawa GRS to demonstrate progression of CRM ability in individual learners over the course of residency training in EM. Specifically, the Ottawa GRS instrument shows promise as a tool for charting growth early in training but may need modification for use in advanced learners. These findings may benefit the design of a future prospective, multicenter, multi-domain observational study of residents’ attainment of developmental milestones in EM.

Conflict of interest and funding

The authors report no declarations of interest.

Acknowledgements

The authors thank Gupreet Bola, the nursing staff of the emergency department at UC Davis Medical Center, and the staff of the Center for Virtual Care at UC Davis Medical Center for their kind assistance and participation in the multidisciplinary clinical scenarios.

References

- Weinberger SE , Pereira AG , Iobst WF , Mechaber AJ , Bronze MS . Competency-based education and training in internal medicine. Ann Intern Med. 2010; 153: 751–6. [PubMed Abstract].

- Beeson MS , Vozenilek JA . Specialty milestones and the next accreditation system: an opportunity for the simulation community. Simul Healthc 2014. 2: 65–9.

- Ling LJ , Beeson MS . Milestones in emergency medicine. J Acute Med. 2012; 2: 65–9.

- Emergency Medicine Milestones. Available from: http://www.cordem.org [cited 4 November 2014].

- Fernandez R , Vozenilek JA , Hegarty CB , Motola I , Reznek M , Phrampus PE , etal. Developing expert medical teams: toward an evidence-based approach. Acad Emerg Med. 2008; 15: 1025–36. [PubMed Abstract].

- Nasca TJ , Philibert I , Brigham T , Flynn TC . The next GME accreditation system – rationale and benefits. N Engl J Med. 2012; 366: 1051–6. [PubMed Abstract].

- Cheng A , Donoghue A , Gilfoyle E , Eppich W . Simulation-based crisis resource management training for pediatric critical care medicine: a review for instructors. Pediatr Crit Care Med. 2012; 13: 197–203. [PubMed Abstract].

- Howard SK , Gaba DM , Fish KJ , Yang G , Sarnquist FH . Anesthesia crisis resource management training: teaching anesthesiologists to handle critical incidents. Aviat Space Environ Med. 1992; 63: 763–70. [PubMed Abstract].

- Clarke S , Horeczko T , Cotton D , Bair A . Heart rate, anxiety and performance of residents during a simulated critical clinical encounter: a pilot study. BMC Med Educ. 2014; 14: 153. [PubMed Abstract] [PubMed CentralFull Text].

- Nunnink L , Foot C , Venkatesh B , Corke C , Saxena M , Lucey M , etal. High-stakes assessment of the non-technical skills of critical care trainees using simulation: feasibility, acceptability and reliability. Crit Care Resusc. 2014; 16: 6–12. [PubMed Abstract].

- Hicks CM , Kiss A , Bandiera GW , Denny CJ . Crisis Resources for Emergency Workers (CREW II): results of a pilot study and simulation-based crisis resource management course for emergency medicine residents. CJEM. 2012; 14: 354–62. [PubMed Abstract].

- Hayter MA , Bould MD , Afsari M , Riem N , Chiu M , Boet S . Does warm-up using mental practice improve crisis resource management performance? A simulation study. Br J Anaesth. 2013; 110: 299–304. [PubMed Abstract].

- Kim J , Neilipovitz D , Cardinal P , Chiu M . A comparison of global rating scale and checklist scores in the validation of an evaluation tool to assess performance in the resuscitation of critically ill patients during simulated emergencies (abbreviated as ‘CRM simulator study IB’). Simul Healthc. 2009; 4: 6–16. [PubMed Abstract].

- Kim J , Neilipovitz D , Cardinal P , Chiu M , Clinch J . A pilot study using high-fidelity simulation to formally evaluate performance in the resuscitation of critically ill patients: The University of Ottawa Critical Care Medicine, High-Fidelity Simulation, and Crisis Resource Management I Study. Crit Care Med. 2006; 34: 2167–74. [PubMed Abstract].

- Swing SR . The ACGME outcome project: retrospective and prospective. Med Teach. 2007; 29: 648–54. [PubMed Abstract].

- Swing SR , Clyman SG , Holmboe ES , Williams RG . Advancing resident assessment in graduate medical education. J Grad Med Educ. 2009; 1: 278–86. [PubMed Abstract] [PubMed CentralFull Text].

- Kessler CS , Leone KA . The current state of core competency assessment in emergency medicine and a future research agenda: recommendations of the working group on assessment of observable learner performance. Acad Emerg Med. 2012; 19: 1354–9. [PubMed Abstract].

- Chen EH , O'Sullivan PS , Pfennig CL , Leone K , Kessler CS . Assessing systems-based practice. Acad Emerg Med. 2012; 19: 1366–71. [PubMed Abstract].

- Helmreich R . Kanki B , Helmreich R , Anca J . Why CRM? Empirical and theorectical bases of human factors teaching. Crew resource management. 2010; 2nd ed, San Diego, CA: Elsevier. 4–5.

- Holzman RS , Cooper JB , Gaba DM , Philip JH , Small SD , Feinstein D . Anesthesia crisis resource management: real-life simulation training in operating room crises. J Clin Anesth. 1995; 7: 675–87. [PubMed Abstract].

- Reznek M , Smith-Coggins R , Howard S , Kiran K , Harter P , Sowb Y , etal. Emergency medicine crisis resource management (EMCRM): pilot study of a simulation-based crisis management course for emergency medicine. Acad Emerg Med. 2003; 10: 386–9. [PubMed Abstract].

- Fletcher G , Flin R , McGeorge P , Glavin R , Maran N , Patey R . Anaesthetists’ Non-Technical Skills (ANTS): evaluation of a behavioural marker system. Br J Anaesth. 2003; 90: 580–8. [PubMed Abstract].

- Steinemann S , Berg B , DiTullio A , Skinner A , Terada K , Anzelon K , etal. Assessing teamwork in the trauma bay: introduction of a modified ‘NOTECHS’ scale for trauma. Am J Surg. 2012; 203: 69–75. [PubMed Abstract].

- Russ S , Hull L , Rout S , Vincent C , Darzi A , Sevdalis N . Observational teamwork assessment for surgery: feasibility of clinical and nonclinical assessor calibration with short-term training. Ann Surg. 2012; 255: 804–9. [PubMed Abstract].

- Ringsted C , Ostergaard D , Ravn L , Pedersen JA , Berlac PA , van der Vleuten CP . A feasibility study comparing checklists and global rating forms to assess resident performance in clinical skills. Med Teach. 2003; 25: 654–8. [PubMed Abstract].

- Twenge JM . Generational changes and their impact in the classroom: teaching generation me. Med Educ. 2009; 43: 398–405. [PubMed Abstract].