Figures & data

Figure 1. a) Ratio-based edge detector using σhh (L-band). Credits: (Schou et al. 2003). b) RBED edge detector. Credits: (Wei and Feng 2015). c) Multiscale edge detector. Credits: (Xiang et al. 2017a).

Figure 2. Examples of synthetic images of simulated simple scenarios. a) Wei and Feng (2015). b) Zhan et al. (2013). c) Xiang et al. (2017b).

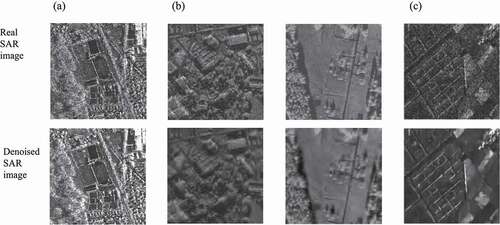

Figure 3. a) Denoised with SAR-BM3D. Credits: (Parrilli et al. 2011). b) Denoised with soft thresholding. Credits: (Achim et al. 2003). c) Denoised with SAR-CNN. Credits: (Molini et al. 2020).

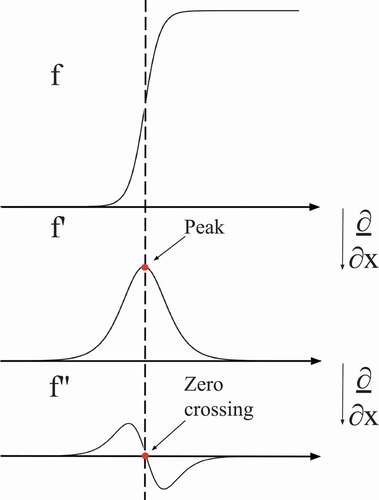

Figure 4. Difference between first- and second-derivative-based edge detection. f denotes the image intensity.

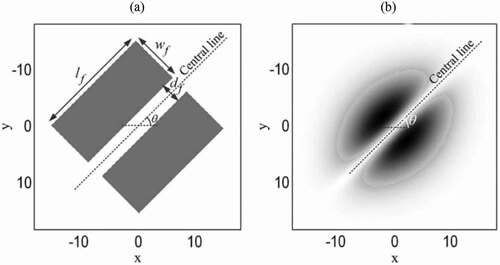

Figure 6. Examples of directional filters. α is measured counterclockwise from the horizontal axis. Credits: Schowengerdt (2007).

Figure 7. Examples of the real parts of Gabor filters with different orientations and frequencies, used to capture various patterns. These filters are also used to create one of our filter banks.

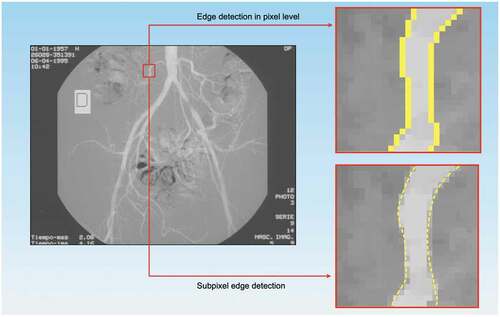

Figure 8. Subpixel edge detection, in which edges are located precisely within pixels. Credits: (Trujillo-Pino et al. 2013).

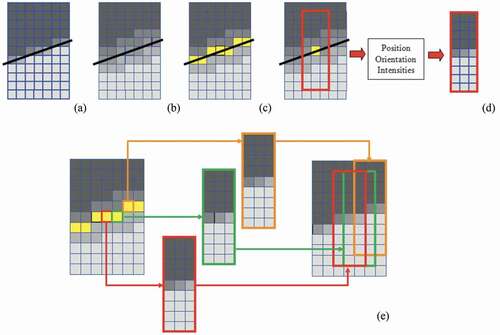

Figure 9. Subpixel edge detection algorithm based on the partial area effect. a) Input image; b) Smoothed image; c) Detected edge pixels; d) A synthetic 9×3 subimage is created from the features of each edge pixel; e) The subimages are combined to obtain a complete restored image. Credits: (Trujillo-Pino et al. 2013).

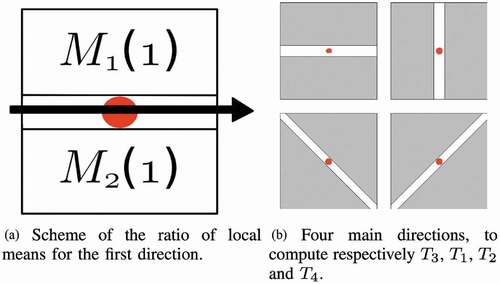

Figure 10. Scheme of the ROA method. Credits: Dellinger et al. (2015).

Figure 11. Difference between (a) rectangular bi-windows and (b) GGS bi-windows at orientation π. Credits: Shui and Cheng (2012).

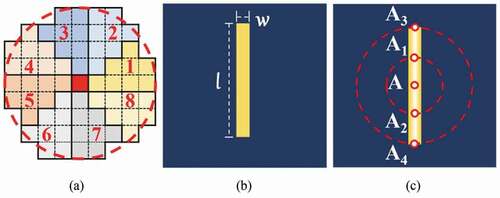

Figure 12. (a) Circular window with a 4-pixel radius, (b) prototype pattern (bar) for RUSTICO, (c) positions of the local Difference-of-Gaussians maxima along concentric circles. Credits: Li et al. (2022).

Table 1. Evaluation of various denoising methods over the entire BSDS500-speckled dataset.

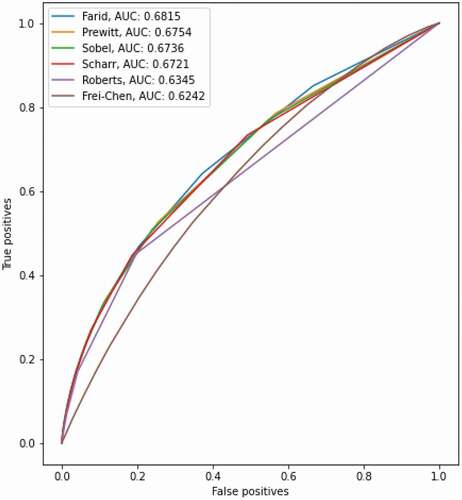

Table 2. Evaluation of the first-order-derivative-based edge detectors.

Table 3. Evaluation of different parameters for the Laplacian of Gaussian (LoG).

Table 4. Evaluation of different parameters for the Difference of Gaussians (DoG). Ratio indicates the size ratio of the kernels.

Table 5. Evaluation of Canny edge detection for different σ values and threshold ratios.

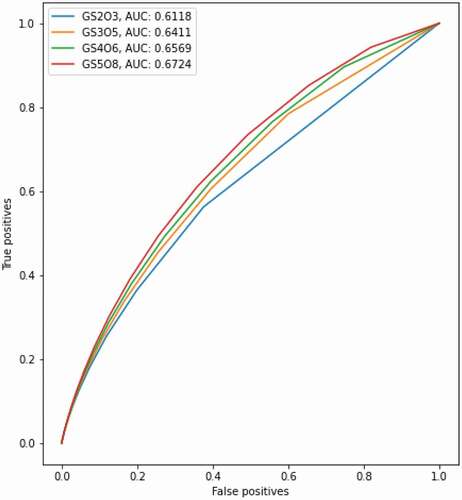

Table 6. Evaluation of Gabor edge detection for different scales (S) and orientations (O).

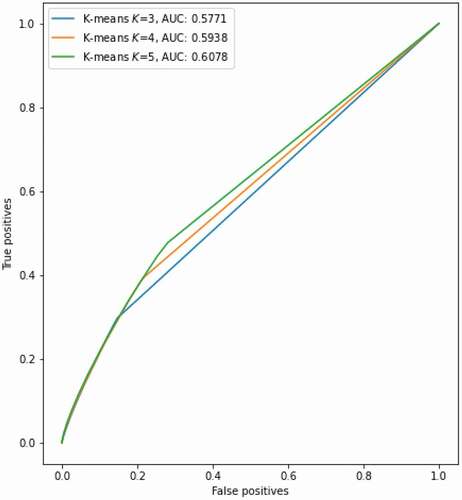

Table 7. Evaluation of different numbers of clusters for K-means-based edge detection.

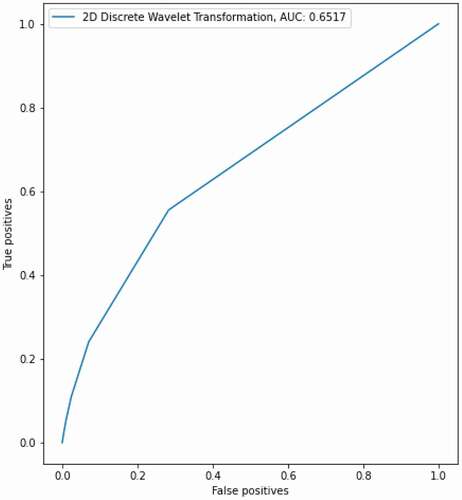

Table 8. Evaluation of 2D discrete wavelet transform-based edge detection.

Table 9. Evaluation of different numbers of iterations for the subpixel-based edge detection method.

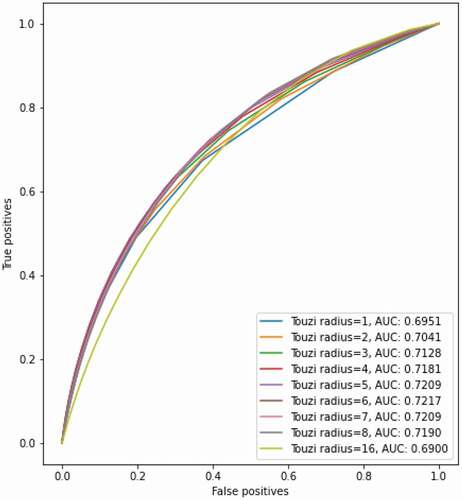

Table 10. Evaluation of different parameters for Touzi.

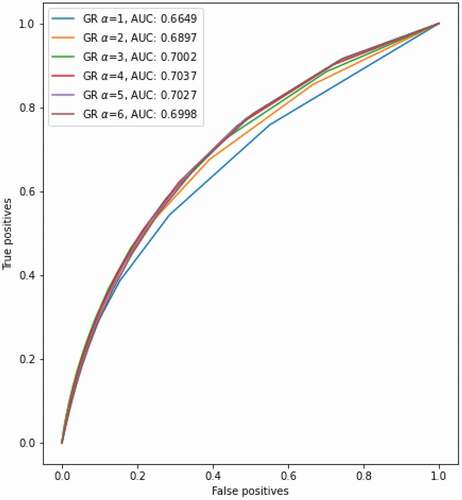

Table 11. Evaluation of different parameters for GR.

Table 12. Evaluation of different parameters for the Gaussian-Gamma-shaped bi-windows.

Table 13. Evaluation of different parameters for ROLSS RUSTICO.

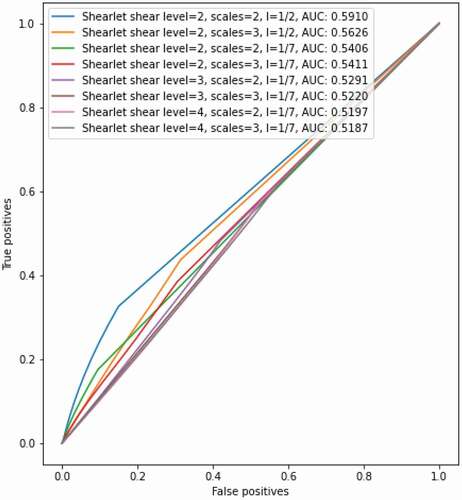

Table 14. Evaluation of different parameters for SAR-Shearlet, where denotes the effective support length of the Mexican hat wavelet.

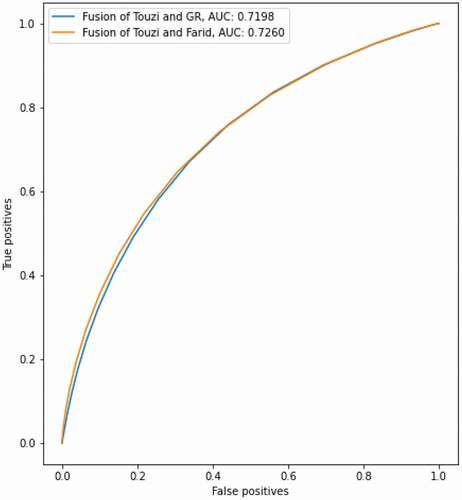

Table 15. Evaluation of the fusion schemes for combining the SAR-specific Touzi and optical Farid methods.

Table 16. Evaluation of the fusion schemes for combining the ROA-based Touzi and ROEWA-based GR.

Table 17. Evaluation of the gradient magnitude fusion for combining the SAR-specific Touzi and non-SAR Farid methods.

Table 18. Evaluation of the gradient magnitude fusion for combining the ROA-based Touzi and ROEWA-based GR.

Table 19. Overview of the best-performing methods over the training set, ranked by F1-score.

Table 20. BSDS500-speckled Benchmark. The rows are sorted by ODS F1. The best results are highlighted in bold, while the second best results are underlined.

Table 21. BSDS500-speckled Noisy Benchmark. The rows are sorted by ODS F1. The best results are highlighted in bold, while the second best results are underlined.

Figure 24. a) Original clean Sentinel-1 Lelystad image. Image credits: Dalsasso et al. (2021). b) Pseudo ground truth. Image credits: Liu et al. (2020). c) 1-look speckled image. d) Denoised 1-look image.

Table 22. Performance of different methods on the Sentinel-1 Lelystad image, ordered by F1-score.

Table 23. Run times (in seconds) of the algorithms for the SAR image evaluations (Footnote 11).

Figure 26. A single-look TerraSAR-X image of Texas, USA, around the Winkler County Sinkhole #2 near Odessa. Credits: © DLR e.V. 2022 and © Airbus Defence and Space GmbH 2022. a) Original noisy image. b) Denoised with SAR-BLF.

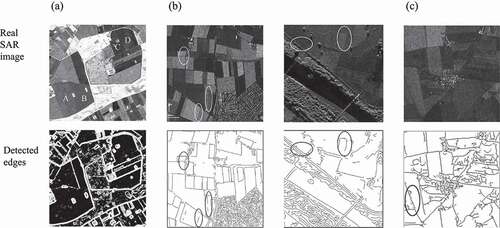

Figure 27. Edge detection performances on a TerraSAR-X image of Texas, USA, around the Winkler County Sinkhole #2 near Odessa. Credits: © DLR e.V. 2022 and © Airbus Defence and Space GmbH 2022.

Figure 28. Sentinel-1 image of Texas, USA, captured around the Red Bluff Reservoir on the Pecos River near Reeves County, with different polarizations. a) Sigma0 VH. b) Sigma0 VV. Both images are denoised with SAR-BLF. Credits: Copernicus Sentinel data 2022.

Table 24. Matching consistency of different algorithms across different polarizations (VV and VH) of the same 1900 × 7200-pixel area (13.68 million pixels), ordered by MEP.

Data availability statement

The data that support the findings of this study are available at https://github.com/ChenguangTelecom/GRHED, and the benchmark suite is available at https://github.com/readmees/SAR_edge_benchmark.