Figures & data

FIGURE 2. (Left) Forward reach exercise. (Middle) Device attached to the person’s back for sonifying trunk movement during the forward reach exercise. (Right) Examples of sonified exercise spaces (SESs) for a forward reach exercise: The Flat sound is a repetition of the same tone played between the starting standing position and the maximum stretching position. The Wave sound combines two tone scales (phrases): an ascending one ending at the easier stretching target and a descending one leading to the final, more challenging target. Reaching the easier target is marked by the highest tone (Singh et al., 2014).

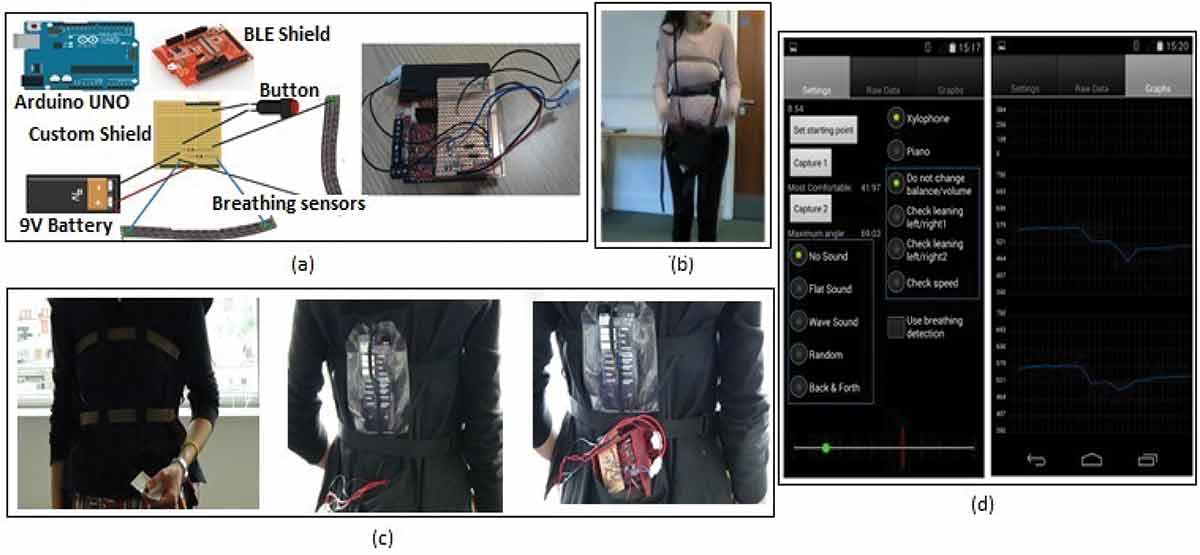
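As a rough illustration of the two SESs in Figure 2, the sketch below maps a trunk bend angle to a tone for the Flat and Wave sounds. It is a hypothetical sketch, not the authors' implementation. The names `flat_tone` and `wave_tone`, the five-tone scale, the use of degrees measured from the starting standing position, and the choice to let the descending phrase return to the lowest tone at the final target are all assumptions. Tones are returned as scale-degree indices (0 = lowest) rather than frequencies.

```python
# Hypothetical sketch of the Flat and Wave SES mappings (Figure 2).
# Angle units, scale size and the end point of the descending phrase
# are illustrative assumptions, not values from the study.

def flat_tone(angle, max_angle, tone=0):
    """Flat SES: the same tone for any bend between start (0) and max_angle."""
    if 0.0 <= angle <= max_angle:
        return tone
    return None  # outside the exercise space: no tone


def wave_tone(angle, easy_target, final_target, n_tones=5):
    """Wave SES: an ascending phrase up to easy_target, then a descending
    phrase to final_target. Returns a scale-degree index (0 = lowest);
    the highest degree (n_tones - 1) marks the easier target."""
    if angle < 0.0 or angle > final_target:
        return None  # outside the exercise space: no tone
    top = n_tones - 1
    if angle <= easy_target:
        # ascending phrase: degree rises from 0 to top
        return round(angle / easy_target * top)
    # descending phrase (assumed): degree falls from top to 0 at final_target
    frac = (angle - easy_target) / (final_target - easy_target)
    return round((1.0 - frac) * top)
```

For example, with `easy_target=30` and `final_target=60`, calling `wave_tone` at increasing angles yields a rising then falling sequence of degrees, peaking at 30, whereas `flat_tone` returns the same degree throughout the range.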

FIGURE 3. Final design of the wearable device: (a) Architecture of the breathing module built using Arduino UNO. (b) Breathing sensors. (c) Front and back views of the tabard with integrated breathing sensors, button held in the person’s hand for calibration, the smartphone in its pocket on the back of the trunk and the breathing sensing module shown outside its corresponding pocket. (d) Smartphone interface for selecting sonified exercise spaces and visualization of the tracked signals.

FIGURE 4. Description of the Implemented Sonified Exercise Spaces (SESs) for the Forward Reach Exercise.


FIGURE 6. Description of Independent and Dependent Variables for each Part of the Study.

FIGURE 7. Mean (± SE) perceived and actual bend angle for all four sound conditions in the study with the wearable device.

FIGURE 8. Mean (± SE) ratings (0 = worst to 6 = best) on awareness, performance, motivation, and relaxation for all four sound conditions in the study with the Kinect (left) and the wearable device (right).

FIGURE 9. Distribution of results for all the sounds.

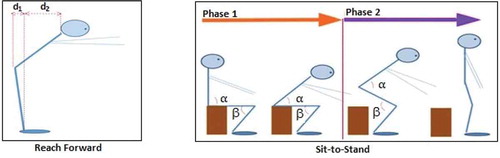

FIGURE A1. (Left) To detect forward reach movement and related guarding strategies, the system monitors the distances between shoulders, hips, and feet. (Right) The progress of the sit-to-stand movement is detected by monitoring the angles between trunk, thigh, and crus.
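The joint-based monitoring in Figure A1 can be approximated from 3D skeleton coordinates. The following is a minimal sketch under stated assumptions, not the system's actual code. It assumes Kinect-style camera space with `y` as the vertical axis, and the function names and joint tuples are hypothetical. Angles are measured at the hip (trunk and thigh) and at the knee (thigh and crus). A horizontal shoulder-to-foot distance stands in for the distance monitoring used during forward reach.

```python
import math

# Hypothetical helpers; joints are (x, y, z) tuples in metres, with y
# assumed to be the vertical axis (Kinect-style camera space).

def angle_deg(a, b, c):
    """Angle at joint b, in degrees, between segments b->a and b->c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.sqrt(sum(x * x for x in v1)) * math.sqrt(sum(x * x for x in v2))
    # clamp to [-1, 1] so rounding error cannot push acos out of its domain
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def sit_to_stand_angles(shoulder, hip, knee, ankle):
    """Trunk-thigh angle at the hip and thigh-crus angle at the knee.
    Both approach 180 degrees as the person reaches full standing."""
    return angle_deg(shoulder, hip, knee), angle_deg(hip, knee, ankle)


def horizontal_distance(p, q):
    """Distance between two joints in the horizontal (x-z) plane, e.g. how far
    the shoulders have moved ahead of the feet during a forward reach."""
    return math.hypot(p[0] - q[0], p[2] - q[2])
```

When seated, both angles are near 90 degrees. As the person rises, both grow towards 180 degrees, so the pair can serve as a simple progress signal for the sit-to-stand movement.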

FIGURE B1. Results from Statistical Comparisons between the Sound Conditions (Different Amounts of Information) on Perceived and Actual Bend Angle During Forward Reach Exercising Using the Wearable Device. Bonferroni Correction Was Applied to Multiple Comparisons (Adjusted Threshold p = .008, Corresponding to a Significance Level of α = 0.05).
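The adjusted threshold in Figure B1 is consistent with a Bonferroni correction over all pairwise comparisons among the four sound conditions. It is assumed here that all pairs were compared, giving six comparisons. The sketch below, with a hypothetical function name, reproduces that arithmetic: the per-comparison threshold is α divided by the number of comparisons, 0.05 / 6 ≈ .008.

```python
from math import comb

def bonferroni_threshold(alpha, n_conditions):
    """Per-comparison significance threshold, assuming every pair of
    conditions is compared (Bonferroni correction)."""
    m = comb(n_conditions, 2)  # number of pairwise comparisons
    return alpha / m, m

threshold, n_comparisons = bonferroni_threshold(0.05, 4)
```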

FIGURE B2. Statistical Comparison of Sonification Effects During Physical Activity using the Kinect-based Device on Awareness, Performance, Motivation and Relaxation.

FIGURE B3. Statistical Comparison of Sonification Effects During Forward Reach with the Wearable Device on Awareness, Performance, Motivation and Relaxation.

FIGURE B4. Results from the Comparisons of the Effects Between Sound Conditions with and Without Target on Awareness, Performance, Motivation, and Relaxation.

FIGURE B5. Results from Statistical Comparisons Between the Sound Conditions (Different Amounts of Information) Using the Wearable Device and Performing Movement Aimed Towards a Target.