Figures & data

Figure 2. Dataset for the image classification task with ten classes: Calculator, Cigarette Pack, Fork, Glasses, Hook, Mug, Rubber Duck, Scissors, Stapler, and Toothbrush.

Table 1. The eight learning algorithms with their learning rates, mini-batch sizes, numbers of layers, and numbers of epochs.

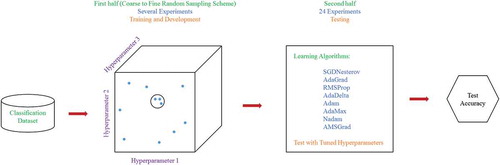
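Table 1 lists the eight learning algorithms compared in this study. As a reference point for how two of them differ, the following is a minimal NumPy sketch of the Adam update and its AMSGrad variant, written in the style of common deep-learning library implementations. The default values of `beta1`, `beta2`, and `eps` are the usual Adam defaults and are assumptions here, not the settings reported in Table 1; `adam_update` and `init_state` are hypothetical helper names.

```python
import numpy as np


def init_state(theta):
    """Optimizer state: first/second moment estimates, running max of v, step count."""
    return {"t": 0, "m": np.zeros_like(theta), "v": np.zeros_like(theta),
            "v_max": np.zeros_like(theta)}


def adam_update(theta, grad, state, lr=1e-3, beta1=0.9, beta2=0.999,
                eps=1e-8, amsgrad=False):
    """Return updated parameters after one Adam (or AMSGrad) step; mutates `state`."""
    state["t"] += 1
    t = state["t"]
    # Exponential moving averages of the gradient and the squared gradient.
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2
    m_hat = state["m"] / (1 - beta1 ** t)  # bias correction for the first moment
    if amsgrad:
        # AMSGrad keeps the running maximum of v, so the per-parameter
        # denominator never shrinks between steps.
        state["v_max"] = np.maximum(state["v_max"], state["v"])
        v_hat = state["v_max"] / (1 - beta2 ** t)
    else:
        v_hat = state["v"] / (1 - beta2 ** t)
    return theta - lr * m_hat / (np.sqrt(v_hat) + eps)


if __name__ == "__main__":
    # Toy usage: minimize f(x) = x^2, whose gradient is 2x.
    for use_amsgrad in (False, True):
        x = np.array([1.0])
        st = init_state(x)
        for _ in range(300):
            x = adam_update(x, 2 * x, st, lr=0.05, amsgrad=use_amsgrad)
        print("AMSGrad" if use_amsgrad else "Adam", x)
```

On the first step both variants move each parameter by roughly `lr` in the direction opposite the gradient sign, because the bias-corrected ratio `m_hat / sqrt(v_hat)` starts near ±1.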

Figure 3. Layout of the first and second phases of the proposed algorithm using a coarse-to-fine random sampling scheme.

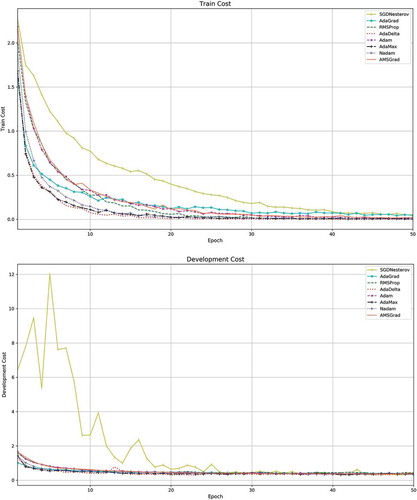

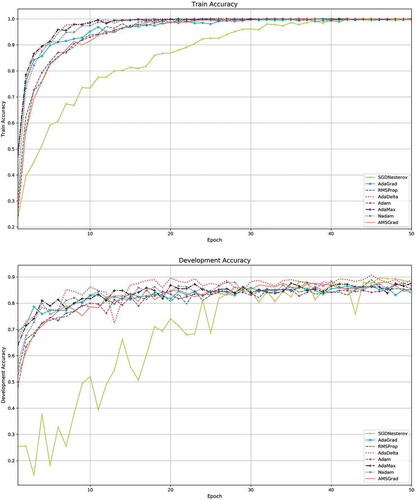
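Figure 3 shows the two phases of the coarse-to-fine random sampling scheme. The following is a minimal sketch of the general technique, not the authors' exact procedure: phase 1 samples hyperparameters broadly (learning rate log-uniformly, mini-batch size as a power of two), and phase 2 resamples inside a narrower range centered on the best phase-1 configuration. The search ranges, the half-decade shrink factor, and the `toy_dev_error` objective (standing in for training a network and measuring development-set error) are all hypothetical.

```python
import math
import random


def sample_config(rng, lr_range, batch_exp_range):
    """Draw one configuration: log-uniform learning rate, power-of-two batch size."""
    lo, hi = math.log10(lr_range[0]), math.log10(lr_range[1])
    lr = 10 ** rng.uniform(lo, hi)
    batch = 2 ** rng.randint(*batch_exp_range)  # randint bounds are inclusive
    return {"lr": lr, "batch": batch}


def coarse_to_fine(evaluate, n_coarse=20, n_fine=20, seed=0):
    """Two-phase random search; returns the configuration with the lowest score."""
    rng = random.Random(seed)
    # Phase 1 (coarse): sample over the whole (hypothetical) search space.
    coarse = [sample_config(rng, (1e-5, 1e-1), (4, 9)) for _ in range(n_coarse)]
    scored = [(evaluate(c), c) for c in coarse]
    _, best = min(scored, key=lambda sc: sc[0])
    # Phase 2 (fine): half a decade of learning rate and one power of two of
    # batch size on either side of the best coarse point.
    lr_range = (best["lr"] / math.sqrt(10), best["lr"] * math.sqrt(10))
    b_exp = best["batch"].bit_length() - 1  # exact log2 for a power of two
    exp_range = (max(b_exp - 1, 4), min(b_exp + 1, 9))
    fine = [sample_config(rng, lr_range, exp_range) for _ in range(n_fine)]
    scored += [(evaluate(c), c) for c in fine]
    return min(scored, key=lambda sc: sc[0])[1]


def toy_dev_error(cfg):
    """Hypothetical stand-in for dev-set error; minimized at lr=1e-3, batch=64."""
    return (math.log10(cfg["lr"]) + 3) ** 2 + 0.01 * abs(math.log2(cfg["batch"]) - 6)


if __name__ == "__main__":
    print(coarse_to_fine(toy_dev_error))
```

Sampling the learning rate on a log scale spends the coarse budget evenly across orders of magnitude, which is the usual motivation for random rather than grid sampling in the first phase.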

Figure 4. Accuracy and cost curves of the eight learning algorithms on the training and development sets: (a) training accuracy, (b) development accuracy, (c) training cost, (d) development cost.

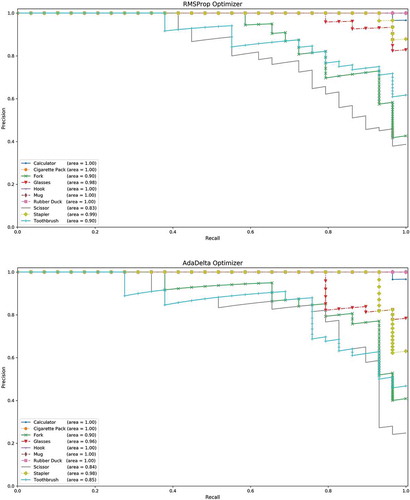

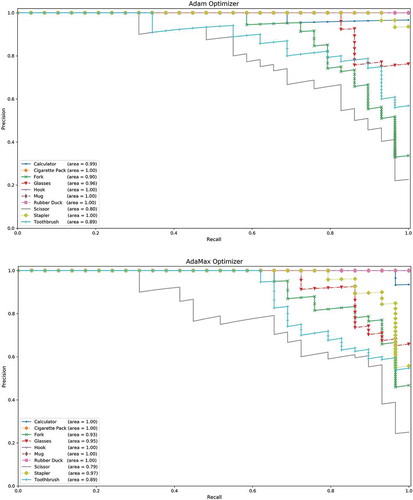

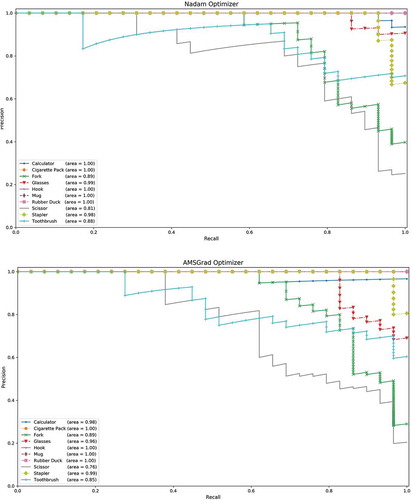

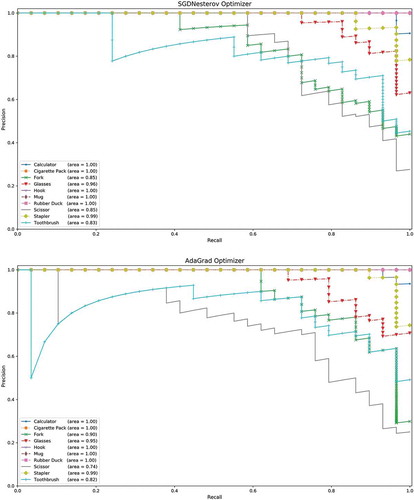
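Each point on the curves in Figure 4 is a cost and an accuracy measured on one data split. As a minimal sketch, assuming the cost is the mean categorical cross-entropy over softmax outputs (the cost function is not stated in this section), both quantities can be computed from a batch of logits as follows; `cost_and_accuracy` is a hypothetical helper name.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cost_and_accuracy(logits, labels):
    """Mean cross-entropy cost and accuracy for integer class labels."""
    probs = softmax(logits)
    n = labels.shape[0]
    # Probability assigned to the true class of each sample; the small epsilon
    # avoids log(0).
    cost = -np.mean(np.log(probs[np.arange(n), labels] + 1e-12))
    acc = np.mean(probs.argmax(axis=1) == labels)
    return float(cost), float(acc)
```

Evaluating this once per epoch on the training set and once on the development set yields the four series plotted in panels (a) to (d).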

Figure 5. Precision-recall curves of the eight learning algorithms on the test set of ten object classes: (a) SGDNesterov optimizer, (b) AdaGrad optimizer, (c) RMSProp optimizer, (d) AdaDelta optimizer, (e) Adam optimizer, (f) AdaMax optimizer, (g) Nadam optimizer, (h) AMSGrad optimizer.

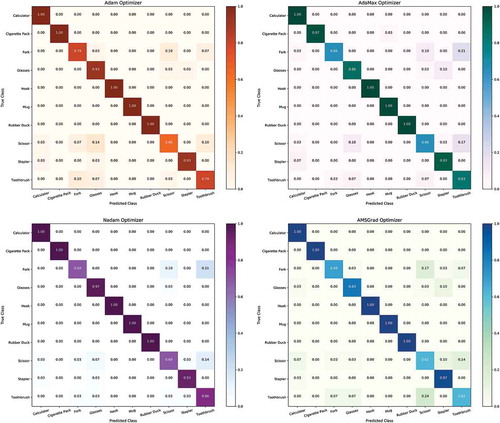

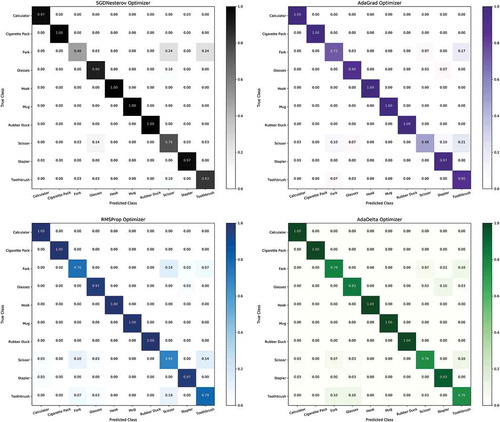
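With ten classes, a precision-recall curve is typically drawn per class by treating that class as positive and the other nine as negative (one-vs-rest). The following minimal NumPy sketch assumes this one-vs-rest construction and per-class scores such as softmax probabilities; it treats every sample rank as a threshold and does not merge tied scores, unlike some library implementations. `precision_recall_ovr` is a hypothetical name.

```python
import numpy as np


def precision_recall_ovr(scores, labels, cls):
    """Precision and recall for class `cls` vs. all others at every score threshold.

    scores: (n_samples, n_classes) array of per-class scores.
    labels: (n_samples,) array of integer true labels.
    """
    y = (labels == cls).astype(int)
    order = np.argsort(-scores[:, cls], kind="stable")  # descending score
    y = y[order]
    tp = np.cumsum(y)      # true positives when predicting the top-k as positive
    fp = np.cumsum(1 - y)  # false positives among the same top-k
    precision = tp / (tp + fp)
    recall = tp / max(y.sum(), 1)  # guard against a class absent from the set
    return precision, recall
```

Plotting `recall` on the x-axis against `precision` for each of the ten classes gives one panel of the kind shown in Figure 5.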

Figure 6. Confusion matrices of the eight learning algorithms on the test set of ten object classes: (a) SGDNesterov optimizer, (b) AdaGrad optimizer, (c) RMSProp optimizer, (d) AdaDelta optimizer, (e) Adam optimizer, (f) AdaMax optimizer, (g) Nadam optimizer, (h) AMSGrad optimizer.
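A confusion matrix counts, for every pair of true and predicted classes, how many test images fall into that cell. The following minimal NumPy sketch assumes the common convention of rows for true classes and columns for predicted classes; the orientation used in Figure 6 is not stated here. `confusion_matrix` is a hypothetical helper, not a specific library call.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Count matrix with rows = true class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at performs an unbuffered add, so repeated (true, pred) pairs
    # are each counted rather than overwritten.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def per_class_recall(cm):
    """Diagonal over row sums: the fraction of each true class predicted correctly."""
    return np.diag(cm) / np.maximum(cm.sum(axis=1), 1)
```

The diagonal holds correct predictions, so overall test accuracy is `np.trace(cm) / cm.sum()`.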

Table 2. Training time, memory utilization, and test-set accuracy of the eight learning algorithms. Bold font indicates the best results.