Figures & data

Figure 2. The architecture of CBOW and Skip-gram as described by Mikolov et al. (2013b).
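The two architectures in Figure 2 differ in the direction of prediction. CBOW uses the surrounding context words together to predict the center word. Skip-gram uses the center word to predict each context word separately. The sketch below shows only how the training examples are built from a tokenized sentence. It assumes a fixed symmetric window; word2vec itself samples a smaller effective window at random and adds subsampling and negative sampling, none of which are shown here. The function names are hypothetical and do not come from the article.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int) -> List[Tuple[str, str]]:
    """Skip-gram: each center word is paired with every context word in the window."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # the center word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens: List[str], window: int) -> List[Tuple[List[str], str]]:
    """CBOW: all context words in the window jointly predict the center word."""
    examples = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, center))
    return examples


if __name__ == "__main__":
    sentence = ["the", "cat", "sat", "down"]
    print(skipgram_pairs(sentence, window=1))
    print(cbow_examples(sentence, window=1))
```

For the same window, Skip-gram yields one example per (center, context) pair. CBOW yields one example per position, with the context words averaged in the model's hidden layer.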

Table 1. Word embedding mapping methods

Table 2. An approximate count of articles and tokens in Wikipedia dumps for each language (K = 1000)

Table 3. The number of words in the seed dictionaries and the sizes of the training, validation, and test sets (K = 1000)

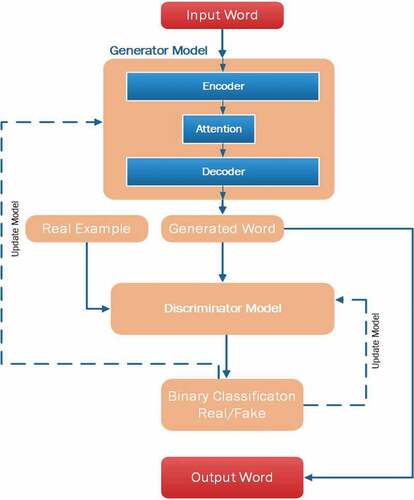

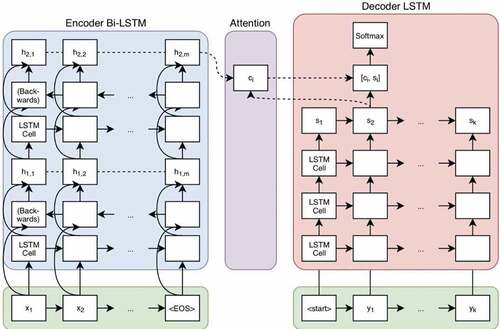

Figure 5. Encoder-decoder architecture with an attention mechanism (Bahdanau, Cho, and Bengio 2016).
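The attention mechanism in Figure 5 scores each encoder annotation $h_j$ against the previous decoder state $s_{i-1}$ with the additive form $e_{ij} = v^\top \tanh(W s_{i-1} + U h_j)$. It normalizes the scores with a softmax to obtain weights $\alpha_{ij}$, and then forms the context vector $c_i = \sum_j \alpha_{ij} h_j$. The pure-Python sketch below computes a single decoder step of this additive attention. The weights `W`, `U`, and `v` are hypothetical toy values, not trained parameters. This is an illustration of the general technique and not the article's implementation.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Matrix-vector product for row-major lists."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax (subtracts the max before exponentiating)."""
    mx = max(xs)
    exps = [math.exp(x - mx) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(
    s_prev: Vector, hs: List[Vector], W: Matrix, U: Matrix, v: Vector
) -> Tuple[Vector, Vector]:
    """One decoder step of additive attention; returns (weights, context vector)."""
    ws = matvec(W, s_prev)  # W s_{i-1} is shared by all encoder positions
    scores = []
    for h in hs:
        uh = matvec(U, h)  # U h_j
        hidden = [math.tanh(a + b) for a, b in zip(ws, uh)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))  # e_ij
    alpha = softmax(scores)  # attention weights, sum to 1
    dim = len(hs[0])
    # c_i is the attention-weighted sum of the encoder annotations
    context = [sum(a * h[k] for a, h in zip(alpha, hs)) for k in range(dim)]
    return alpha, context


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    weights, ctx = additive_attention(
        [0.0, 0.0], [[1.0, 0.0], [0.0, 0.0]], identity, identity, [1.0, 1.0]
    )
    print(weights, ctx)
```

In this toy call, the first annotation receives the larger score, so it receives the larger weight. The context vector therefore leans toward it. The decoder recomputes these weights at every output step.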

Table 4. Performance of the implemented model with different networks

Table 5. Accuracy of the proposed method compared with previous works